Software To Help You Turn Your Data Into AI

Forget fragmented workflows, annotation tools, and Notebooks for building AI applications. Encord Data Engine accelerates every step of taking your model into production.


Perhaps the most common point of failure faced by machine learning practitioners is overfitting. This happens when a model memorizes its training examples but fails to generalize to unseen images. Overfitting is especially pertinent in computer vision, where we deal with high-dimensional image inputs and large, over-parameterized deep networks. There are many modern modeling techniques to deal with this problem, including dropout-based methods, label smoothing, and architectures that reduce the number of parameters needed while still maintaining the power to fit complex data. But one of the most effective places to combat overfitting is the data itself.

Deep learning models can be incredibly data-hungry, and one of the most effective ways to improve your model’s performance is to give it more data - the fuel of deep learning. This can be done in two ways:

However, data collection can often be very expensive and time-consuming. For example, in healthcare applications, collecting more data usually requires access to patients with specific conditions, considerable time and effort from skilled medical professionals to collect and annotate the data, and often the use of expensive imaging and diagnostic equipment. In many situations, the “just get more data” solution will be very impractical. Furthermore, public datasets aren’t usually used for custom CV problems, aside from transfer learning. Wouldn’t it be great if there were some way to increase the size of our dataset without returning to the data collection phase? This is data augmentation.

Data augmentation is the process of generating new training examples from existing ones through various transformations. It is a very effective regularization tool and is used by experts in virtually all CV problems and models. Because the transformations can be randomized and combined, data augmentation can increase the effective size of just about any image training set by 10x, 100x, or even without limit, in a very easy and efficient way. Mathematically speaking:

More data = better model. Data augmentation = more data. Therefore, data augmentation = better machine learning models.

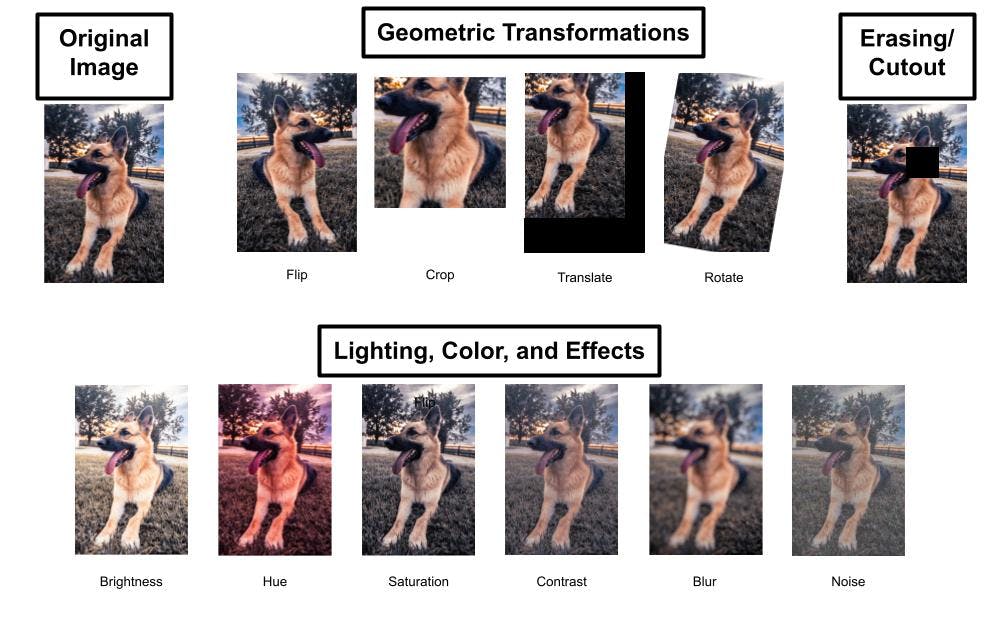

Common image transformations for data augmentation.

The list of methods demonstrated by the figure above is by no means exhaustive. There are countless other ways to manipulate images and create augmented data. You are only limited by your own creativity!

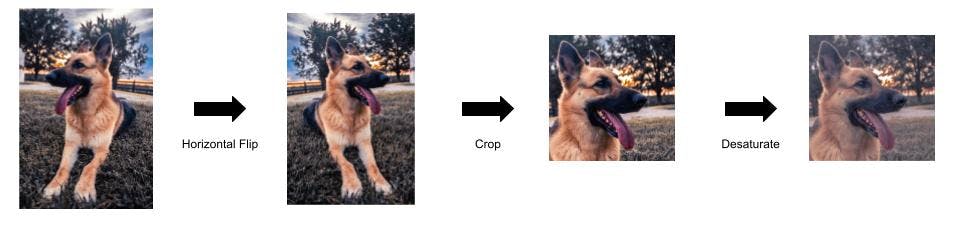

Don’t feel limited to only using each technique in isolation either. You can (and should) chain them together like so:

Multiple transformations

More examples of augmentation combinations from a single source image. (source)
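Chaining transformations can be sketched in a few lines of NumPy. This is a minimal illustration, not a production pipeline: the helper names (`horizontal_flip`, `random_crop`, `add_noise`, `augment`), the crop size, and the noise level are assumptions chosen for the example, and a random array stands in for a real photo.

```python
import numpy as np

def horizontal_flip(img):
    # Mirror the image left-to-right (axis 1 is width for H x W x C arrays).
    return img[:, ::-1]

def random_crop(img, rng, size):
    # Cut a size x size window at a random location.
    h, w = img.shape[:2]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return img[top:top + size, left:left + size]

def add_noise(img, rng, sigma=10.0):
    # Add Gaussian pixel noise and clip back to the valid 0-255 range.
    noisy = img.astype(np.float32) + rng.normal(0.0, sigma, img.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)

def augment(img, rng, crop_size=24):
    # Chain the transformations; every call produces a different variant.
    if rng.random() < 0.5:
        img = horizontal_flip(img)
    img = random_crop(img, rng, crop_size)
    return add_noise(img, rng)

rng = np.random.default_rng(0)
# Hypothetical 32 x 32 RGB image used as a stand-in for a real photo.
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
variants = [augment(image, rng) for _ in range(4)]
```

Each entry in `variants` is a different 24 x 24 view of the same source image, which is the idea behind generating many examples from a single original.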

That being said, transformed images don’t need to be perfect to be useful. The quantity of data will often beat the quality of data. The more examples you have, the less detrimental effect one outlier/mistake image will have on your model, and the more diverse your dataset will be.

While it will almost always have a positive effect on your model’s performance, data augmentation isn’t a silver bullet for problems related to dataset size. You can’t expect to take a tiny dataset of 50 images, blow it up to 50,000 with the above techniques, and get all the benefits of a genuine dataset of 50,000 images. Data augmentation can help make models more robust to things like rotations, translations, lighting, and camera artifacts, but not to other changes such as different backgrounds, perspectives, variations in the appearance of objects, or relative positioning in scenes.

You might be wondering “When should I use data augmentation? When is it beneficial?” The answer is: always! Data augmentation is usually going to help regularize and improve your model, and there are unlikely to be any downsides if you apply it in a reasonable way. The only instance where you might skip it is if your dataset is so incredibly large and diverse that augmentation does not add any meaningful diversity to it. But most of us will not have the luxury of working with such fairytale datasets 🙂.

Augmentation can also be used to deal with class imbalance problems. Instead of using sampling or weighting-based approaches, you can simply augment the smaller classes more to make all classes the same size.
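As a rough sketch of this balancing idea, the snippet below counts how many augmented images each class would need to match the largest class. The function name `augmentation_plan` and the class counts are hypothetical and only illustrate the arithmetic.

```python
from collections import Counter

def augmentation_plan(labels):
    # Number of extra (augmented) images each class needs so that
    # every class ends up as large as the biggest one.
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}

# Hypothetical imbalanced dataset: 100 cats, 40 dogs, 10 birds.
labels = ["cat"] * 100 + ["dog"] * 40 + ["bird"] * 10
plan = augmentation_plan(labels)
print(plan)  # {'cat': 0, 'dog': 60, 'bird': 90}
```

Keep in mind that a class with very few originals will end up with many near-duplicates of the same images, so the limits on tiny datasets described above still apply.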

There is no one exact answer to which transformations you should use, but you should start by thinking about your problem. Does the transformation only generate images that you would never expect to see in the real world? Even if an inverted image of a tree in a park isn’t something you’d see in real life, you might see a fallen tree in a similar orientation. However, some transformations might need to be re-considered such as:

Your transformations don’t have to be exclusively realistic, but you should definitely be using any transformations that are likely to occur in practice.

In addition to knowledge of your task and domain, knowledge of your dataset is also important to consider. Better knowledge of the distribution of images in your dataset will allow you to better choose which augmentations will give you sensible results or possibly even which augmentations can help you fill in gaps in your dataset. A great tool to help you explore your dataset, visualize distributions of image attributes, and examine the quality of your image data is Encord Active.

However, we are engineers and data scientists. We don’t just make decisions based on conjectures, we try things out and run experiments. We have the tried-and-true technique of model validation and hyperparameter tuning. We can simply experiment with different techniques and choose the combination that maximizes performance on our validation set.

If you need a good starting point: horizontal reflection (horizontal flip), cropping, blur, noise, and an image-erasing method (like cutout or random erasing) are a good base to begin with. Then you can experiment with combining them and adding brightness and color changes.

Augmentation techniques for video data are very similar to image data, with a few differences. Generally, the chosen transformation will be applied identically to each frame in the video (with the exception of noise). Trimming videos to create shorter segments is also a popular technique (temporal cropping).
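A minimal sketch of these video rules, assuming a clip stored as a `(frames, height, width, channels)` NumPy array: the flip and crop parameters are sampled once and applied identically to every frame, noise is drawn independently per frame, and a temporal crop shortens the clip. The function name `augment_clip` and all sizes are illustrative assumptions.

```python
import numpy as np

def augment_clip(clip, rng, crop_size, clip_len, noise_sigma=5.0):
    t, h, w, _ = clip.shape
    # Temporal cropping: keep a random contiguous run of clip_len frames.
    start = rng.integers(0, t - clip_len + 1)
    clip = clip[start:start + clip_len]
    # Sample the spatial parameters once so every frame gets the same transform.
    flip = rng.random() < 0.5
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    clip = clip[:, top:top + crop_size, left:left + crop_size]
    if flip:
        clip = clip[:, :, ::-1]  # mirror the width axis of all frames
    # Noise is the exception: it is sampled independently for each frame.
    noisy = clip.astype(np.float32) + rng.normal(0.0, noise_sigma, clip.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)

rng = np.random.default_rng(0)
# Hypothetical 16-frame, 32 x 32 RGB video.
video = rng.integers(0, 256, size=(16, 32, 32, 3), dtype=np.uint8)
short_clip = augment_clip(video, rng, crop_size=24, clip_len=8)
```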

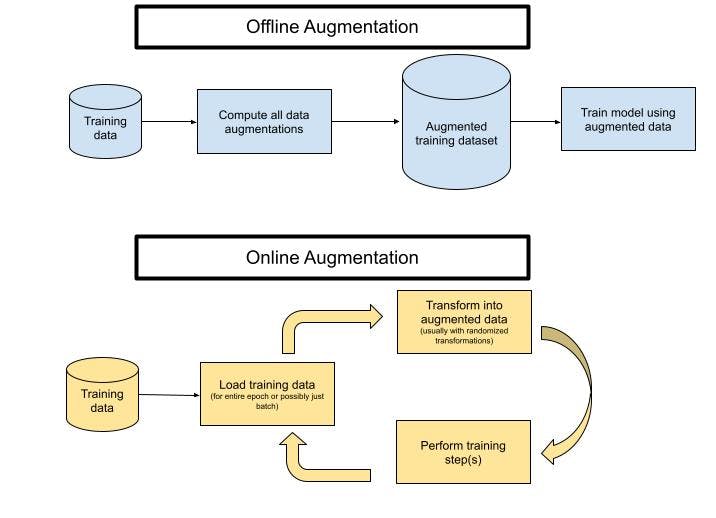

The exact specifics of your implementation will depend on your hardware, chosen deep learning library, chosen transformations, etc. But there are generally two strategies to implement data augmentation: offline and online.

Offline augmentation: Performing data augmentation offline means you will compute a new dataset that includes all of your original and transformed images, and save it to disk. Then you’ll train your model, as usual, using the augmented dataset instead of the original one. This can drastically increase the disk storage required, so we don’t recommend it unless you have a specific reason to do so (such as verifying the quality of the augmented images or controlling for the exact images that are shown during training).

Online augmentation: This is the most common method of implementing data augmentation. In online augmentation, you will transform the images at each epoch or batch when loading them. In this scenario, the model sees a different transformation of the image at each epoch, and the transformations are never saved to disk. Typically, transformations are randomly applied to an image each epoch. For example, you will randomly decide whether or not to flip an image at each epoch, perform a random crop, sample a blur/sharpening amount, etc.

Online and offline data augmentation processes.
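The difference between the two strategies can be sketched as follows. This is a simplified illustration with a single random flip as the only transformation; `offline_augment` and `online_batches` are hypothetical helper names, and a real pipeline would typically rely on the data-loading utilities of your deep learning library.

```python
import numpy as np

def random_flip(img, rng):
    # Flip left-to-right with probability 0.5.
    return img[:, ::-1] if rng.random() < 0.5 else img

def offline_augment(images, rng, copies=2):
    # Offline: build the enlarged dataset once (in practice it would
    # then be written to disk and reused for every epoch).
    augmented = list(images)
    for img in images:
        augmented.extend(random_flip(img, rng) for _ in range(copies))
    return augmented

def online_batches(images, rng, batch_size):
    # Online: transformations are re-sampled every time the data is
    # iterated, so each epoch sees different variants and nothing is stored.
    order = rng.permutation(len(images))
    for i in range(0, len(order), batch_size):
        idx = order[i:i + batch_size]
        yield np.stack([random_flip(images[j], rng) for j in idx])

rng = np.random.default_rng(0)
images = [rng.integers(0, 256, size=(8, 8, 3), dtype=np.uint8) for _ in range(6)]

offline_set = offline_augment(images, rng)  # 6 originals + 12 transformed copies
for epoch in range(2):
    for batch in online_batches(images, rng, batch_size=4):
        pass  # a training step on `batch` would go here
```

The offline set triples the storage needed here, while the online generator keeps only the six originals in memory and produces new variants on each pass.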

TensorFlow and PyTorch both contain a variety of modules and functions to help you with augmentation. For even more options, check out the imgaug Python library.

You may still be wondering, “How do people who train state-of-the-art models use image augmentation?” Let’s take a look:

| Paper | Data Augmentation Techniques |
|---|---|
| | Translate, Scale, Squeeze, Shear |
| | Translate, Flip, Intensity Changing |
| | Crop, Flip |
| | Flip, Crop, Translate |
| | Crop, Elastic distortion |
| | Cutout, Crop, Flip |
| | AutoAugment, Mixup, Crop |
| | AutoAugment, RandAugment, Random erasing, Mixup, CutMix |
| | RandAugment, Mixup, CutMix, Random erasing |
| | Translate, Rotate, Gray value variation, Elastic deformation |
| | Flip |
| | Scale, Translate, Color space |
| | Crop, Resize, Flip, Color Space, Distortion |
| | Mosaic, Distortion, Scale, Color space, Crop, Flip, Rotate, Random erase, Cutout, Hide and Seek, GridMask, Mixup, CutMix, StyleGAN |

Erasing/Cutout: Wait, what is all this cut-mix-rand-aug stuff? Some of these, like Cutout, Random Erasing, and GridMask, are image-erasing methods. When performing erasing, you can cut out a square, rectangles of different shapes, or even multiple separate cuts/masks within the image. There are also various ways to randomize this process. Erasing is a popular strategy. In image classification, for example, erasing the most distinctive part of an object forces the model to learn to identify the object from its other parts rather than from that one part alone (for example, learning to recognize dogs by paws and tails, not just faces). Erasing can be thought of as a sort of “dropout in the input space”.
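A minimal sketch of the simplest erasing variant, a single square patch set to a constant value, is shown below. The function name `cutout`, the patch size, and the fill value are assumptions for illustration; published methods differ in how they choose the shape, number, and fill of the erased regions.

```python
import numpy as np

def cutout(img, rng, size, fill=0):
    # Erase one size x size square at a random position.
    h, w = img.shape[:2]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    out = img.copy()  # leave the original image untouched
    out[top:top + size, left:left + size] = fill
    return out

rng = np.random.default_rng(0)
# Hypothetical all-white 32 x 32 RGB image, so the erased patch is easy to see.
image = np.full((32, 32, 3), 255, dtype=np.uint8)
erased = cutout(image, rng, size=8)
```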

Mixing: Another popular technique in data augmentation is mixing. Mixing involves combining separate examples (usually of different classes) to create a new training image. Mixing is less intuitive than the other methods we have seen because the resulting images do not look realistic. Let’s look at a couple of popular techniques for doing this:

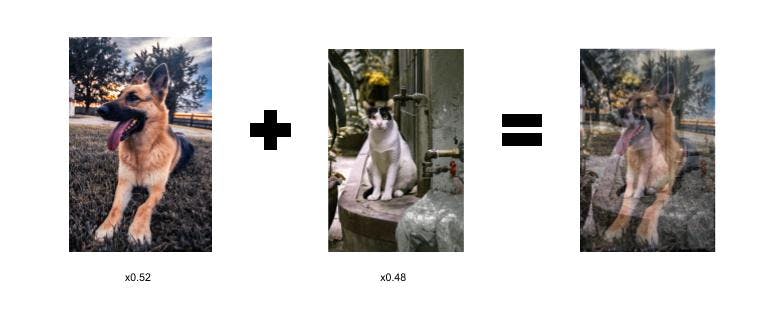

Mixup: Mixup combines two images by linear interpolation (weighted average) of the two images. The same interpolation is then applied to the class label.

An example of a mixup image. The corresponding image label in a binary image classification problem with labels (dog, cat) would then be (0.52, 0.48).

What? This looks like hazy nonsense! And what are those label values? Why does this work?

Essentially, the goal here is to encourage our model to learn smoother, linear transitions between different classes, rather than oscillate or behave erratically. This helps stabilize model behavior on unseen examples at inference time.
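The interpolation can be sketched directly. In the example below the mixing weight `lam` is fixed at 0.52 to reproduce the (0.52, 0.48) label above; in practice the weight is sampled at random for each pair (commonly from a Beta distribution, which is an assumption not stated in this article). The function name `mixup` and the constant-valued stand-in images are illustrative.

```python
import numpy as np

def mixup(img_a, label_a, img_b, label_b, lam):
    # Weighted average of the two images...
    mixed_img = lam * img_a.astype(np.float32) + (1.0 - lam) * img_b.astype(np.float32)
    # ...and the same weighted average of their one-hot labels.
    mixed_label = lam * label_a + (1.0 - lam) * label_b
    return mixed_img, mixed_label

# Hypothetical stand-ins for a dog photo and a cat photo.
dog = np.full((4, 4, 3), 200, dtype=np.uint8)
cat = np.full((4, 4, 3), 100, dtype=np.uint8)
dog_label = np.array([1.0, 0.0])  # label order: (dog, cat)
cat_label = np.array([0.0, 1.0])

mixed_img, mixed_label = mixup(dog, dog_label, cat, cat_label, lam=0.52)
```

Here `mixed_label` is approximately (0.52, 0.48), and every pixel of `mixed_img` is the same blend of the two source pixels.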

CutMix: CutMix is a combination of the Cutout and Mixup approaches. As mentioned before, Mixup images look very unnatural and can be confusing to the model when performing localization. Rather than interpolating between two images, CutMix simply takes a crop of one image and pastes it onto a second image. This has an advantage over Cutout: the cut-out region is not thrown away and replaced with garbage, but filled with actual information. The label weighting is similar to Mixup. For a classification problem, each class weight is the fraction of pixels in the augmented image that come from the image of that class. For localization, we keep the bounding boxes or segmentation from the original images in their respective parts of the composite image.

An example of a CutMix image.
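A classification-only sketch of this procedure follows. For clarity the patch location is passed in explicitly and assumed to lie fully inside the image; in practice the box is sampled at random. The function name `cutmix` and the constant-valued stand-in images are assumptions for the example.

```python
import numpy as np

def cutmix(img_a, label_a, img_b, label_b, top, left, height, width):
    # Paste a rectangular patch of img_b onto a copy of img_a.
    out = img_a.copy()
    out[top:top + height, left:left + width] = img_b[top:top + height, left:left + width]
    # Each label is weighted by the fraction of pixels its image contributes.
    h, w = img_a.shape[:2]
    frac_b = (height * width) / (h * w)
    mixed_label = (1.0 - frac_b) * label_a + frac_b * label_b
    return out, mixed_label

# Hypothetical stand-ins for a dog photo and a cat photo.
dog = np.full((8, 8, 3), 200, dtype=np.uint8)
cat = np.full((8, 8, 3), 100, dtype=np.uint8)
dog_label = np.array([1.0, 0.0])  # label order: (dog, cat)
cat_label = np.array([0.0, 1.0])

# A 4 x 4 patch covers 16 of 64 pixels, so the cat weight is 0.25.
mixed_img, mixed_label = cutmix(dog, dog_label, cat, cat_label,
                                top=0, left=0, height=4, width=4)
```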

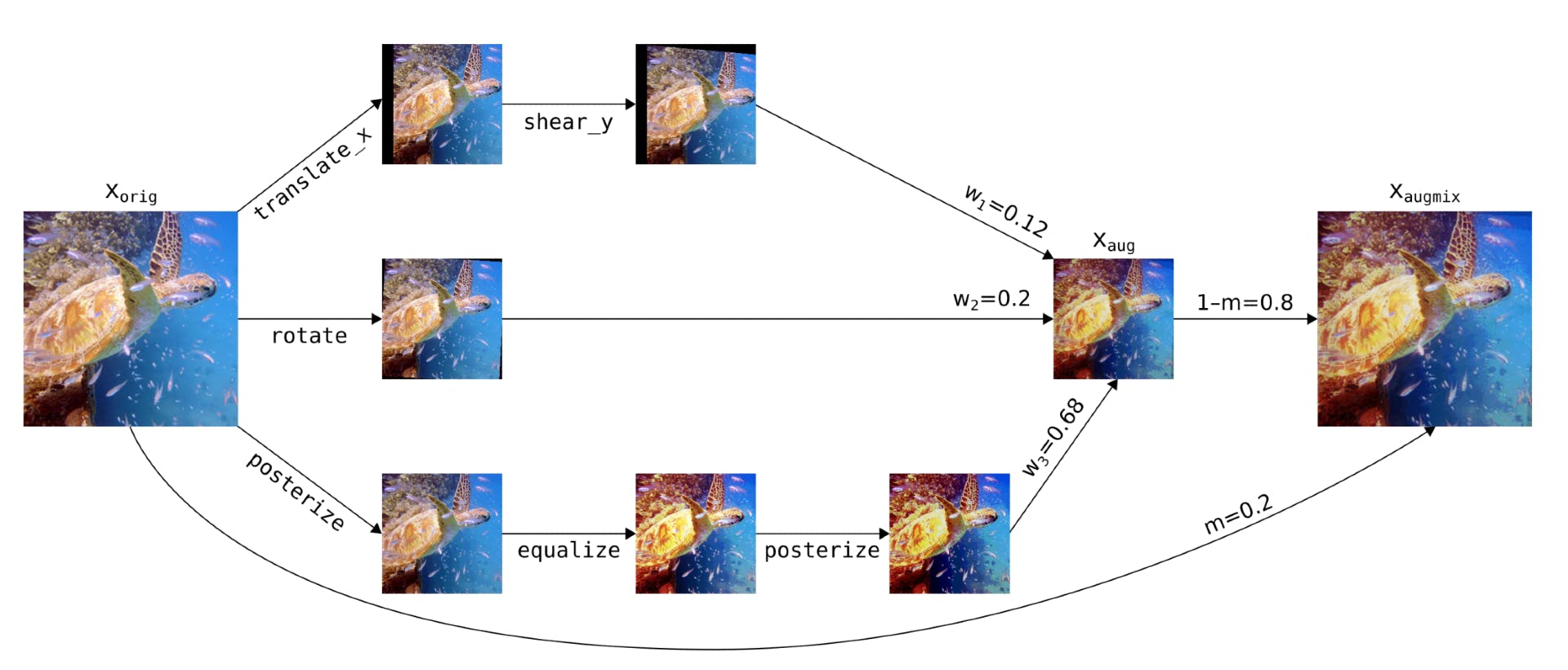

AugMix: AugMix is a little different from the above examples, but is also worth mentioning here. AugMix doesn’t mix different training images together. Instead, it mixes different transformations of the same image. This retains some of the benefits of mixing by exploring the input space between images, and it reduces the degradation that comes from applying many transformations to the same image. The mixes are computed as follows:

AugMix augmentation process. The entire method involves other parts as well such as a specific loss function. (source)
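A simplified sketch of the mixing step is given below, leaving out the loss function. It assumes the commonly described recipe: several short chains of randomly chosen operations are applied to the same image, the chain outputs are combined with weights drawn from a Dirichlet distribution, and the result is blended with the original using a weight drawn from a Beta distribution. The three toy operations (`flip`, `shift`, `darken`) and all parameter values are placeholders rather than the operations used in the paper.

```python
import numpy as np

def flip(img):
    return img[:, ::-1]              # mirror left-to-right

def shift(img):
    return np.roll(img, 2, axis=1)   # shift 2 pixels sideways, wrapping around

def darken(img):
    return img * 0.7                 # reduce brightness

OPS = [flip, shift, darken]

def augmix(img, rng, width=3, max_depth=3, alpha=1.0):
    img = img.astype(np.float32)
    # One mixing weight per augmentation chain; the weights sum to 1.
    chain_weights = rng.dirichlet([alpha] * width)
    mixed = np.zeros_like(img)
    for weight in chain_weights:
        chain = img
        # Each chain applies between 1 and max_depth randomly chosen operations.
        for _ in range(rng.integers(1, max_depth + 1)):
            chain = OPS[rng.integers(len(OPS))](chain)
        mixed += weight * chain
    # Blend the mixture of chains with the original image.
    m = rng.beta(alpha, alpha)
    return m * img + (1.0 - m) * mixed

rng = np.random.default_rng(0)
# Hypothetical 16 x 16 RGB image used as a stand-in for a real photo.
image = rng.integers(0, 256, size=(16, 16, 3), dtype=np.uint8)
augmented = augmix(image, rng)
```

Because every step is a weighted average of versions of the same image, the output keeps the input's shape and stays within the original pixel range.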

Image augmentation is still an active research area, and there are a few more advanced methods to be aware of. The following techniques are more complex (particularly the last two) and will not always be the most practical or efficient-to-implement strategies. We list these for the sake of completeness.

Now you know what data augmentation is and how it helps address overfitting by filling out your dataset. You know that you should be using data augmentation for all of your computer vision tasks. You have a good overview of the most essential data augmentation transformations and techniques, you know what to be mindful of, and you’re ready to add data augmentation to your own preprocessing and training pipelines. Good luck!

