Contents

What Is Data Annotation for Physical AI?

Key Criteria for Evaluating Physical AI Annotation Tools

Top Data Annotation Tools for Physical AI (2025 Edition)

Why Physical AI Teams Are Choosing Encord

Real-World Application: Encord for Physical AI Data Annotation

Encord Blog

Best Data Annotation Tools for Physical AI in 2026 [Comparative Guide]


Imagine a self-driving car approaching a busy intersection as the light turns yellow and then red. In that instant, the model must understand its environment, including the color of the lights and the cars around it, in order to manoeuvre the vehicle safely. This is a clear example of why a well-performing physical AI model matters.

Physical AI, meaning AI models that interact directly with the physical world, is powering the next generation of technologies across domains such as robotics, autonomous vehicles, drones, and advanced medical devices. These systems rely on high-fidelity machine learning models trained to interpret and act within dynamic, real-world environments.

A foundational component in building these models is data annotation — the process of labeling raw data so it can be used to train supervised learning algorithms. For Physical AI, the data involved is often complex, multimodal, and continuous, encompassing video feeds, LiDAR scans, 3D point clouds, radar data, and more.

Given the real-world stakes of safety, compliance, and real-time responsiveness, selecting the right annotation tools is not just a technical decision but a strategic one. Performance, scalability, accuracy, and support for safety-critical environments must all be factored into the equation.

What Is Data Annotation for Physical AI?

Data annotation for Physical AI goes beyond traditional image labeling. These systems operate in environments where both space and time are critical, requiring annotations that reflect motion, depth, and change over time. For example, labeling a pedestrian in a video stream involves tracking that object through multiple frames while adjusting for occlusions and changes in perspective.
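To make the tracking idea concrete, here is a minimal sketch of keyframe interpolation, one common way annotation tools reduce frame-by-frame work: an annotator labels an object on a few keyframes and the frames in between are filled in linearly. The `Box` structure and `interpolate_track` function are hypothetical names used for illustration, not the API of any tool discussed in this guide, and real trackers also handle occlusion and non-linear motion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixel coordinates (hypothetical structure)."""
    x: float
    y: float
    w: float
    h: float


def interpolate_track(keyframes: dict[int, Box]) -> dict[int, Box]:
    """Linearly interpolate a box for every frame between labeled keyframes."""
    frames = sorted(keyframes)
    track: dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        span = end - start
        for frame in range(start, end):
            t = (frame - start) / span  # 0.0 at `start`, approaching 1.0 at `end`
            track[frame] = Box(
                a.x + t * (b.x - a.x),
                a.y + t * (b.y - a.y),
                a.w + t * (b.w - a.w),
                a.h + t * (b.h - a.h),
            )
    if frames:
        # The final keyframe is copied as-is.
        track[frames[-1]] = keyframes[frames[-1]]
    return track


# A pedestrian labeled by hand on frames 0 and 4; frames 1-3 are filled in.
pedestrian = interpolate_track({0: Box(100, 50, 40, 80), 4: Box(120, 50, 40, 80)})
print(pedestrian[2])
```

In practice a reviewer would still correct the interpolated frames where the object is occluded or changes direction, adding a new keyframe at each correction.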

Another key element is multimodality. Physical AI systems typically aggregate inputs from several sources, such as combining different video angles of a single object. Effective annotation tools must allow users to overlay and synchronize these different data streams, creating a coherent representation of the environment that mirrors what the AI system will ultimately "see."

The types of labels used are also more sophisticated. Rather than simple image tags or bounding boxes, Physical AI often requires:

- 3D volume rendering: allows physical AI to "see" not just surfaces, but internal structures, occluded objects, and the full spatial context.

- Segmentation masks: provide pixel-level detail about object boundaries, useful in tasks like robotic grasping or surgical navigation.

These requirements introduce several unique challenges. Maintaining annotation accuracy and consistency over time and across modalities is difficult, especially in edge cases like poor lighting, cluttered scenes, or fast-moving objects. Additionally, domain expertise is often necessary. A radiologist may need to label surgical tool interactions, or a robotics engineer may need to review mechanical grasp annotations. This further complicates the workflow.

Key Criteria for Evaluating Physical AI Annotation Tools

Choosing a data annotation tool for Physical AI means looking for more than just label-drawing features. The platform must address the full spectrum of operational needs, from data ingestion to model integration, while supporting the nuanced requirements of spatial-temporal AI development.

Multimodal Data Support

The most critical capability is support for multimodal datasets. Annotation tools must be able to handle a range of formats including video streams, multi-camera setups, and stereo images, to name a few. Synchronization across these modalities must be seamless, enabling annotators to accurately label objects as they appear in different views and data streams. Tools should allow annotators to visualize in 2D, 3D, or both, depending on the task.
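As a rough illustration of what synchronization involves, the sketch below pairs frames from two streams recorded at different rates by nearest timestamp, dropping pairs that are too far apart in time. The function name, the sample timestamps, and the tolerance value are assumptions made for this example; production systems typically also account for clock drift and hardware trigger offsets.

```python
from bisect import bisect_left


def match_nearest(
    reference_ts: list[float], other_ts: list[float], tolerance: float
) -> list[tuple[int, int]]:
    """Pair each reference frame with the closest frame in the other stream.

    Both timestamp lists (in seconds) must be sorted ascending. Pairs further
    apart than `tolerance` are dropped. Returns (reference_index, other_index).
    """
    pairs = []
    for i, t in enumerate(reference_ts):
        pos = bisect_left(other_ts, t)
        # Candidates: the frame just before and the frame at or after `t`.
        candidates = [j for j in (pos - 1, pos) if 0 <= j < len(other_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(other_ts[j] - t))
        if abs(other_ts[best] - t) <= tolerance:
            pairs.append((i, best))
    return pairs


front_camera = [0.00, 0.033, 0.066, 0.100]  # roughly 30 frames per second
side_camera = [0.00, 0.10]                  # 10 frames per second
pairs = match_nearest(front_camera, side_camera, tolerance=0.02)
print(pairs)
```

Only the matched pairs would be shown side by side to an annotator; unmatched frames can still be labeled in a single view.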

Automation and ML-Assisted Labeling

Given the scale and complexity of physical-world data, AI-assisted labeling is a necessity. Tools that offer pre-labeling using machine learning models can significantly accelerate the annotation process. Even more effective are platforms that support active learning, surfacing ambiguous or novel samples for human review. Some systems allow custom model integration, letting teams bring their own detection or segmentation algorithms into the annotation workflow for bootstrapped labeling.
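One simple form of active learning is uncertainty sampling: rank unlabeled samples by how unsure the model is and send the most uncertain ones to human annotators first. The sketch below uses prediction entropy as the uncertainty score. The function names, sample IDs, and probabilities are invented for illustration and do not describe how any specific platform selects samples.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_review(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return the `budget` sample IDs with the most uncertain predictions."""
    ranked = sorted(
        predictions, key=lambda sample_id: entropy(predictions[sample_id]), reverse=True
    )
    return ranked[:budget]


# Hypothetical per-class probabilities from a pre-labeling model.
predictions = {
    "frame_001": [0.98, 0.01, 0.01],  # confident
    "frame_002": [0.40, 0.35, 0.25],  # ambiguous
    "frame_003": [0.70, 0.20, 0.10],
}
queue = select_for_review(predictions, budget=2)
print(queue)
```

Confident predictions such as `frame_001` can be accepted as pre-labels or spot-checked, while the ambiguous ones go to the front of the review queue.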

Collaboration and Workflow Management

In enterprise model development, annotation is often a team-based process. Tools should offer robust collaboration features, such as task assignment, label versioning, and detailed progress tracking. Role-based access control is essential to manage permissions across large annotation teams, particularly when domain experts and quality reviewers are involved. Comprehensive audit trails ensure transparency and traceability for every annotation made.

Quality Assurance and Review Pipelines

Maintaining label quality is paramount in safety-critical systems. The best annotation tools support built-in QA workflows, such as multi-pass review. These checks can help catch common errors, while human reviewers can resolve more subtle issues. Review stages should be clearly defined and easy to manage, with options to flag, comment on, and resolve discrepancies.
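A multi-pass review can be partly automated by comparing two annotators' labels for the same frame and flagging objects where they disagree. The sketch below does this with intersection over union (IoU) on bounding boxes. The box format, the 0.8 threshold, and the function names are assumptions for this example rather than a description of any vendor's QA pipeline.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(
    first_pass: dict[str, Box], second_pass: dict[str, Box], threshold: float = 0.8
) -> list[str]:
    """Return object IDs that one pass missed or where the boxes overlap too little."""
    flagged = []
    for obj_id in sorted(first_pass.keys() | second_pass.keys()):
        a, b = first_pass.get(obj_id), second_pass.get(obj_id)
        if a is None or b is None or iou(a, b) < threshold:
            flagged.append(obj_id)
    return flagged


first = {"car_1": (0, 0, 10, 10), "ped_1": (20, 20, 30, 40)}
second = {"car_1": (0, 0, 10, 10), "ped_1": (25, 20, 35, 40), "bike_1": (50, 50, 60, 60)}
flagged = flag_disagreements(first, second)
print(flagged)
```

Flagged objects would then go to a human reviewer, who resolves the discrepancy and leaves a comment for the annotators.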

Security and Compliance

For applications in healthcare, defense, and transportation, security and regulatory compliance are non-negotiable. Annotation tools should offer end-to-end encryption, granular access controls, secure data storage, and audit logging. Compliance with frameworks like HIPAA, GDPR, and ISO 27001 is essential, especially when working with sensitive patient data or proprietary robotics systems. On-premise or VPC deployment options are often necessary for organizations with strict data handling policies.

Top Data Annotation Tools for Physical AI (2025 Edition)

1. Encord

Encord provides a purpose-built solution for labeling and managing high-volume visual datasets in robotics, autonomous vehicles, medical devices, and industrial automation. Its platform is designed to handle complex video workflows and multimodal data — accelerating model development while ensuring high-quality, safety-critical outputs.

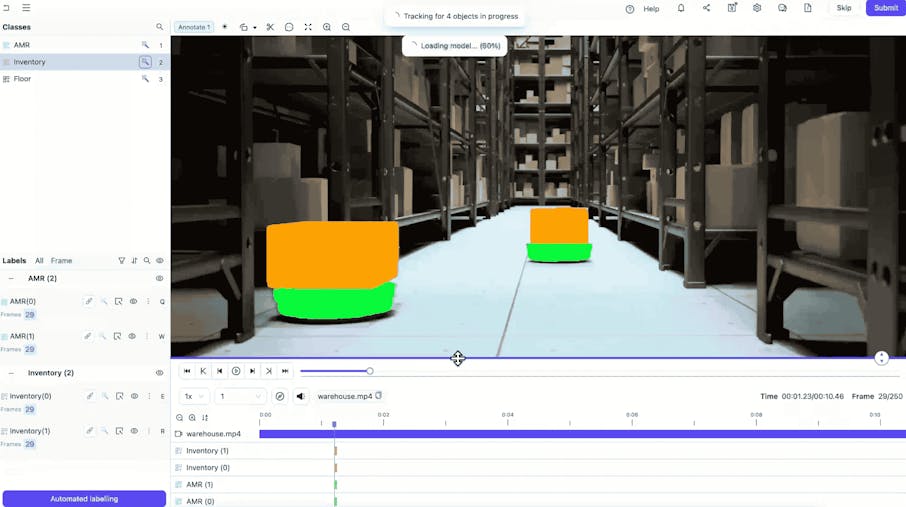

Encord offers a powerful, collaborative annotation environment tailored for Physical AI teams that need to streamline data labeling at scale. With built-in automation, real-time collaboration tools, and active learning integration, Encord enables faster iteration on perception models and more efficient dataset refinement.

At the core of Encord’s platform is its automated video annotation engine, purpose-built to support time-sensitive, spatially complex tasks. Physical AI teams can label sequences up to six times faster than traditional manual workflows, thanks to AI-assisted tracking and labeling automation that adapts over time.

Benefits & Features

- AI-Powered Labeling Engine: Encord leverages micro-models and automated object tracking to drastically reduce manual labeling time. This is critical for teams working with long, continuous sequences from robots, drones, or AVs.

- Multimodal Support: In addition to standard visual formats like MP4 and WebM, Encord natively supports modalities relevant to Physical AI.

- Annotation Types Built for Real-World Perception: The platform supports a wide array of labels such as bounding boxes, segmentation masks, keypoints, polylines, and classification — enabling granular understanding of objects and motion across frames.

- Dataset Quality Evaluation: Encord includes tools to assess dataset integrity using metrics like frame object density, occlusion rates, lighting variance, and duplicate labels — helping Physical AI teams identify blind spots in model training data.

- Collaborative Workflow Management: Built for large-scale operations, Encord includes dashboards for managing annotators, tracking performance, assigning QA reviews, and ensuring compliance across projects.
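Two of the dataset quality metrics mentioned above, object density per frame and duplicate labels, are simple to compute on any label export. The sketch below is a generic illustration using an invented label format; it is not Encord's implementation, and it only catches exact duplicates rather than near-identical boxes.

```python
from collections import Counter


def objects_per_frame(labels: list[dict]) -> Counter:
    """Count labeled objects in each frame (frames with no labels are absent)."""
    return Counter(label["frame"] for label in labels)


def exact_duplicates(labels: list[dict]) -> list[tuple]:
    """Return (frame, class, box) keys that appear more than once."""
    counts = Counter(
        (label["frame"], label["cls"], tuple(label["box"])) for label in labels
    )
    return [key for key, n in counts.items() if n > 1]


# Hypothetical label export: one dict per annotated object.
labels = [
    {"frame": 0, "cls": "box", "box": [10, 10, 50, 50]},
    {"frame": 0, "cls": "box", "box": [10, 10, 50, 50]},  # accidental duplicate
    {"frame": 0, "cls": "tote", "box": [60, 10, 90, 40]},
    {"frame": 1, "cls": "box", "box": [12, 10, 52, 50]},
]
density = objects_per_frame(labels)
duplicates = exact_duplicates(labels)
print(density, duplicates)
```

Frames with unusually high or low density, or with duplicates, are reasonable candidates for a targeted review pass.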

Ideal For:

- ML and robotics teams building spatial-temporal models that rely on video

- Companies experiencing nonlinear or rapid growth, especially in highly competitive markets where speed and execution are critical, and where AI is core to their product or strategic edge.

- Autonomy and perception teams looking to scale annotation pipelines with quality assurance baked in

- Data operations leads who need a platform to manage internal and outsourced annotation efforts seamlessly

Modalities Supported:

- Video & Images

- DICOM (Medical Imaging)

- SAR (Radar Imagery)

- Documents

- Audio

2. Supervisely

Supervisely positions itself as a “unified operating system” for computer vision, with video annotation tools, support for 3D data, and a customizable plugin architecture. Its intuitive interface and support for visual data make it especially useful in domains where multi-sensor inputs and spatial-temporal precision are key to performance and safety.


Benefits & key features:

- End-to-end video annotation support: Supervisely handles full-length video files natively, so teams can annotate continuous footage without breaking it into frame sets. Its multi-track timelines and object tracking tools make it easy to manage annotations across time.

- Advanced annotation types: From bounding boxes and semantic segmentation to 3D point clouds, Supervisely is equipped to handle the modalities critical to physical-world AI, including healthcare imaging and autonomous navigation.

- Custom scripting and extensibility: Teams with specialized needs can build their own plugins and scripts, tailoring the platform to match niche requirements or integrate with proprietary systems.

Best for:

- Teams working heavily with DICOM and other medical imaging modalities

- Organizations prioritizing specialized healthcare and life sciences datasets over general-purpose use

Modalities Covered:

- Image

- Video

- Point-Cloud

- DICOM

3. CVAT

CVAT (Computer Vision Annotation Tool) has become a trusted open-source platform for image and video annotation. Available under the MIT license, CVAT has evolved into an independent, community-driven project supported by thousands of contributors and used by over a million practitioners worldwide.

For Physical AI applications, where large volumes of video data and frame-by-frame spatial reasoning are common, CVAT provides a solid foundation. Its feature set supports the annotation of dynamic scenes, making it especially useful for tasks such as labeling human motion for humanoid robotics, tracking vehicles across intersections, or defining action sequences in industrial robots.


Benefits & key features:

- Open-Source and Free to Use: Its source code can be self-hosted and extended to fit custom workflows or integration needs.

- Video Annotation Capabilities: Tailored features like frame-by-frame navigation, object tracking, and interpolation make it effective for annotating time-based data in robotics and autonomous vehicle use cases.

- Wide Community Support: Being under the OpenCV umbrella gives CVAT users access to a vast ecosystem of machine learning engineers, documentation, and plugins — helpful for troubleshooting and extending functionality.

- Semi-Automated Labeling: CVAT supports integration with custom models to assist in labeling, reducing manual effort and accelerating the annotation process.

- Basic Quality Control Features: While not enterprise-grade, CVAT includes fundamental review tools and validation workflows to help teams maintain annotation accuracy.

Best for:

- AI teams optimizing for budget

- Teams that can afford to move slowly and have in-house engineering resources to manage and extend open-source tooling

Modalities Covered:

- Image

- Video

4. Dataloop

Dataloop is especially good for teams working with high-volume video datasets in robotics, surveillance, industrial automation, and autonomous systems. Dataloop combines automated annotation, collaborative workflows, and model feedback tools to help Physical AI teams build and scale real-world computer vision models more efficiently.

Through a combination of AI-assisted labeling and automated QA workflows, Dataloop allows for faster iteration without compromising on label accuracy.


Benefits & key features:

- Multi-format video support: Supports various video file types, making it easier to work with raw footage from drones, AVs, or industrial cameras without time-consuming conversions.

- Integrated quality control: Built-in consensus checks, annotation review tools, and validation metrics help teams ensure label integrity — essential for Physical AI systems where edge cases and environmental noise are common.

- Interoperability with ML Tools: Integrates with ML platforms and frameworks, making it easy to move labeled data directly into training pipelines.

Best for:

- AI teams focused primarily on image annotation workflows

- Enterprises managing outsourced labeling pipelines who don’t need support for complex or multimodal data

Modalities Covered:

- Image

- Video

5. Scale AI

Scale is positioned as the AI data labeling and project/workflow management platform for “generative AI companies, US government agencies, enterprise organizations, and startups.”

While often associated with natural language and generative applications, Scale’s platform also brings powerful capabilities to the physical world, supporting AI systems in robotics, autonomous vehicles, aerial imaging, and sensor-rich environments.


Benefits & key features:

- Synthetic data generation tools: With built-in generative capabilities, teams can create synthetic edge cases and rare scenarios — useful for physical AI models that must learn to handle uncommon events or extreme environmental conditions.

- Quality assurance and delivery speed: Scale is known for its fast turnaround on complex labeling tasks, even at enterprise scale, thanks to its managed workforce and internal quality control systems.

- Data aggregation: The platform helps organizations extract value from previously siloed or unlabeled datasets, accelerating development timelines for real-world AI applications.

Best for:

- Government agencies and defense contractors working with sensitive or national security-related sensor data

Modalities Covered:

- Image

- Video

- Text

- Documents

- Audio

Feature Comparison Summary

| Feature / Tool | Encord | CVAT | Scale AI | Dataloop | Supervisely |
| --- | --- | --- | --- | --- | --- |
| Video Annotation | ✅ | ✅ | ✅ | ✅ | ✅ |
| AI-Assisted Labeling | ✅ | ⚠️* | ✅ | ✅ | ✅ |
| Multimodal Support | ✅ | ⚠️ | ✅ | ⚠️ | ✅ |
| Open Source | ❌ | ✅ | ❌ | ❌ | ❌ |
| Active Learning | ✅ | ⚠️ | ⚠️ | ⚠️ | ❌ |
| QA & Review Tools | ✅ | ⚠️ | ✅ | ✅ | ⚠️ |
| Collaborative Dashboards | ✅ | ⚠️ | ✅ | ✅ | ✅ |
| Custom Scripting | ⚠️ | ✅ | ⚠️ | ⚠️ | ✅ |

Legend:

✅ = Fully supported

⚠️ = Partially or indirectly supported

❌ = Not supported

Why Physical AI Teams Are Choosing Encord

As Physical AI grows more complex, many teams are moving away from general-purpose annotation tools. Encord stands out as a purpose-built platform designed specifically for real-world, multimodal AI — making it a top choice for teams in robotics, healthcare, and industrial automation.

Built for Real-World AI

Encord was designed from the ground up for computer vision data with native video rendering. It supports complex formats, allowing annotators to seamlessly switch between views within a single workspace.

Scales from R&D to Production

Encord adapts to your project’s lifecycle. It supports fast, flexible annotation during experimentation and scales to enterprise-grade workflows as teams grow. You can integrate your own models, close the loop between training and labeling, and continuously refine datasets using real-world feedback.

Trusted in High-Stakes Domains

Encord is proven in safety-critical fields like surgical robotics and industrial automation. Built-in tools for QA, review tracking, and compliance help meet strict regulatory standards — ensuring high-quality, traceable data at every step.

Quality and Feedback at the Core

Encord includes integrated quality control features and consensus checks to enforce annotation standards. You can surface low-confidence predictions or model errors to guide re-annotation — speeding up model improvement while minimizing labeling waste.

Real-World Application: Encord for Physical AI Data Annotation

Pickle Robot, a Cambridge-based robotics company, is redefining warehouse automation with Physical AI. Their green, mobile manipulation robots can unload up to 1,500 packages per hour, handling everything from apparel to tools with speed and precision. But to achieve this, they needed flawless training data.

The Challenge: Incomplete Labels & Inefficient Workflows

Before Encord, Pickle Robot relied on outsourced annotation providers with inconsistent results:

- Low-quality labels (e.g., incomplete polygons)

- Time-consuming audit cycles (20+ mins per round)

- Limited support for complex semantic segmentation

- Unreliable workflows that slowed model development

For robotics, where millimeter-level accuracy matters, these issues directly impacted grasping performance and throughput.

The Solution: A Robust, Integrated Annotation Stack with Encord

Partnering with Encord gave Pickle Robot:

- Consolidated data curation & labeling

- Nested ontologies & pixel-level annotations

- AI-assisted labeling with human-in-the-loop (HITL)

- Seamless integration with their Google Cloud infrastructure

The Results: Faster Models, Smarter Robots

Since switching to Encord, Pickle Robot has achieved:

| Metric | Result |
| --- | --- |
| Annotation Accuracy | ⬆️ 30% |
| Model Iteration Speed | ⬆️ 60% |
| Robotic Grasping Precision | ⬆️ 15% |

