Label images 10x faster with Encord’s AI annotation tool

Combine human precision with AI-assisted labeling to accurately detect, segment, and classify objects 10x faster. Supercharge annotation with built-in SAM 2 and GPT-4o integration.

Powering the world's leading AI teams

Suite of labeling tools for any image annotation project

What our customers say

Charlotte Bax

Founder @ Captur

We needed a platform that enabled various different types of labeling because we have different types of machine learning challenges. Our goal is to diagnose the condition of an object, which requires several steps that include individual issue detection (e.g., damage to a scooter frame) and the condition of where something is placed, like where a scooter is parked.

Increase image annotation throughput and accuracy

Use AI-assisted image segmentation and mask prediction to accelerate annotation. Orchestrate review steps throughout your labeling pipelines with customizable workflows to increase label quality. Label the most granular and complex scenarios with nested ontologies, and monitor annotator performance with real-time analytics.

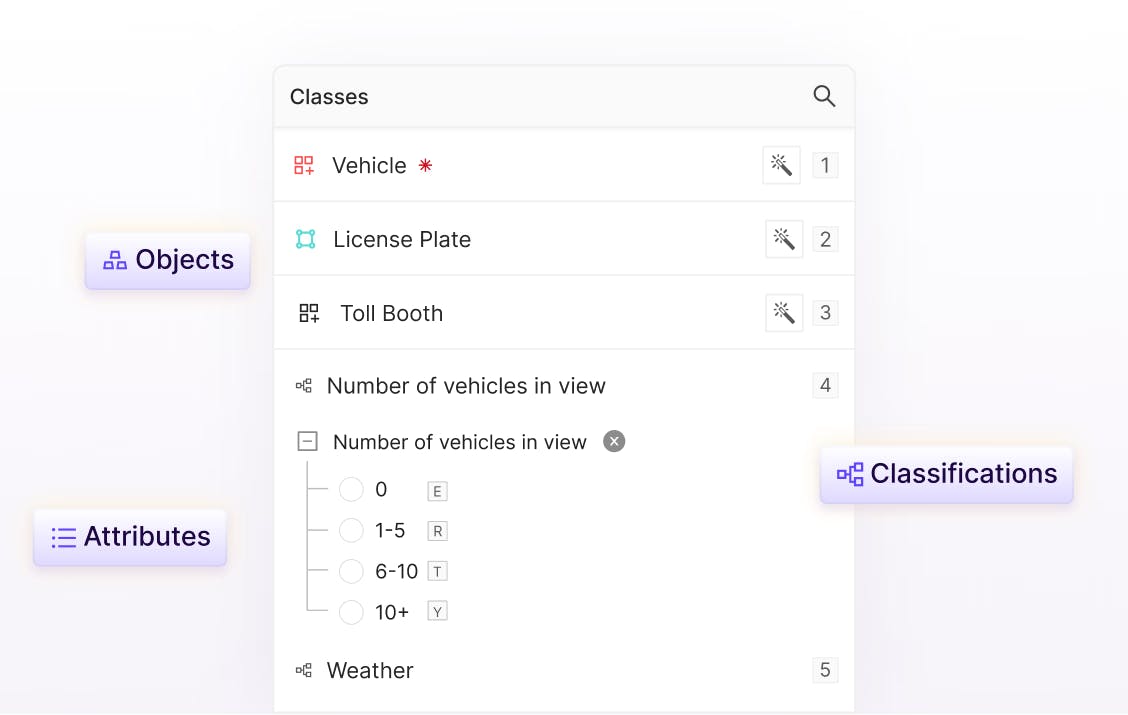

Customizable ontologies for every use case

Create multiple nested classifications with advanced labeling ontologies to establish accurate ground truth datasets.

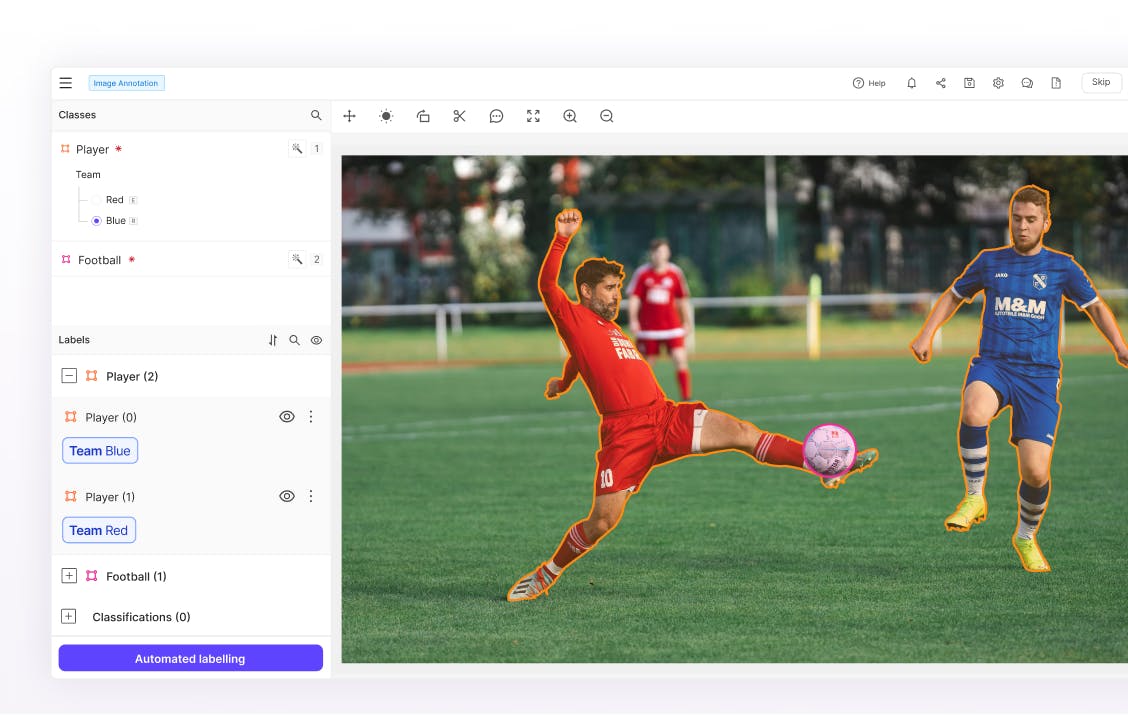

Native AI-assisted labeling with SAM 2

Access SAM 2 natively within Encord for AI-assisted labeling to achieve faster and more accurate mask prediction and object tracking.

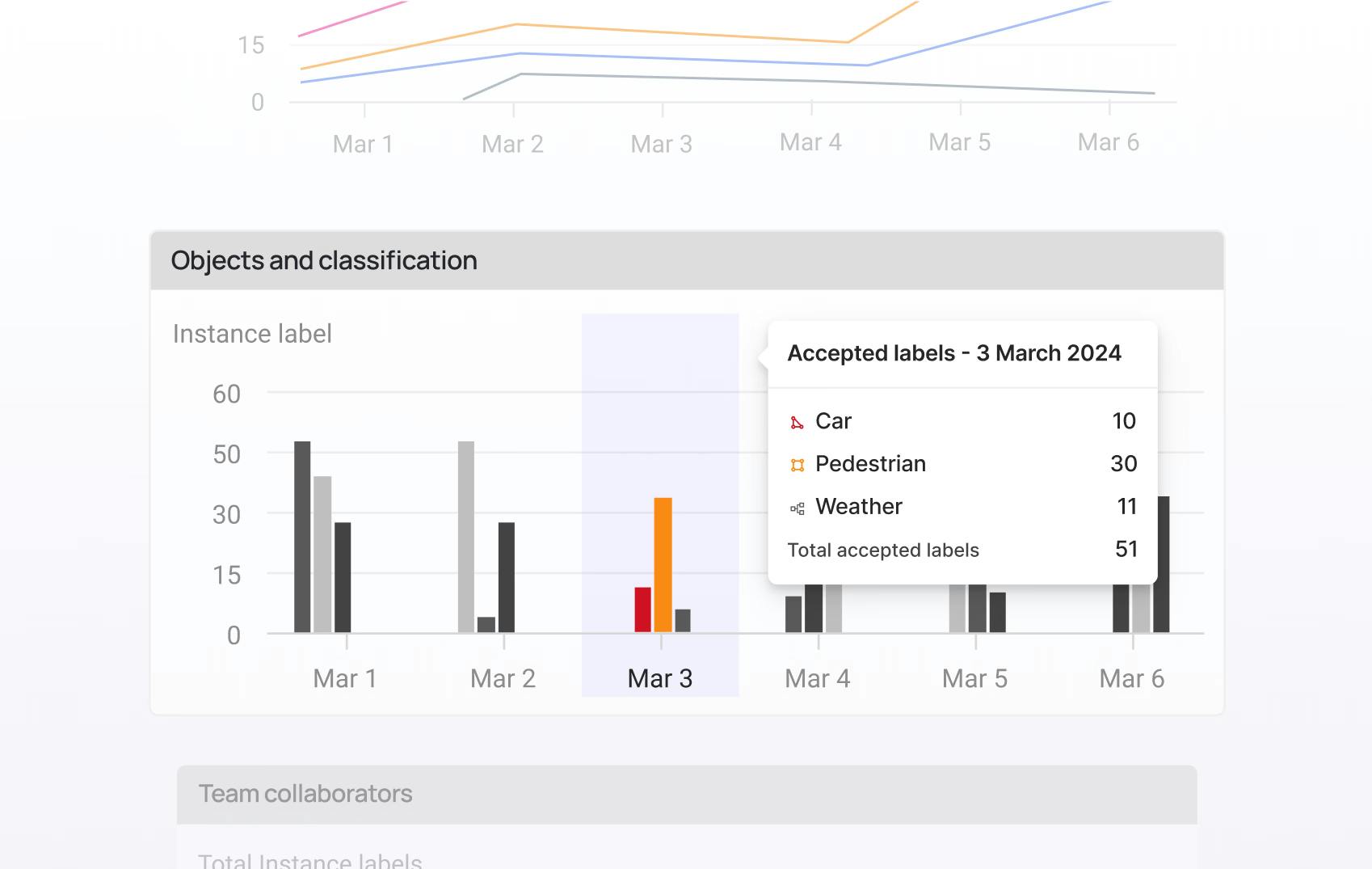

In-depth performance analytics

Uncover rich insights into label quality and annotator performance to optimize throughput, quality, and workforce efficiency.

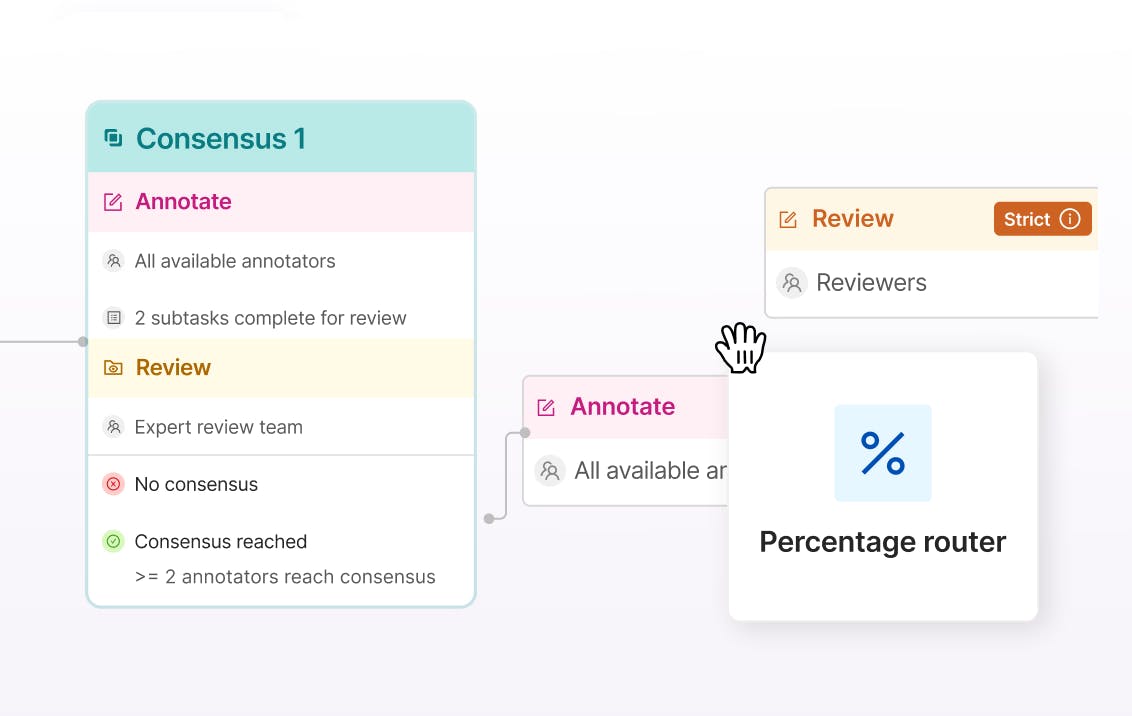

Configurable workflows for quality control

Guarantee quality control throughout labeling pipelines with customizable data workflows and multi-stage reviews. Assign roles and tasks, and manage project completion.

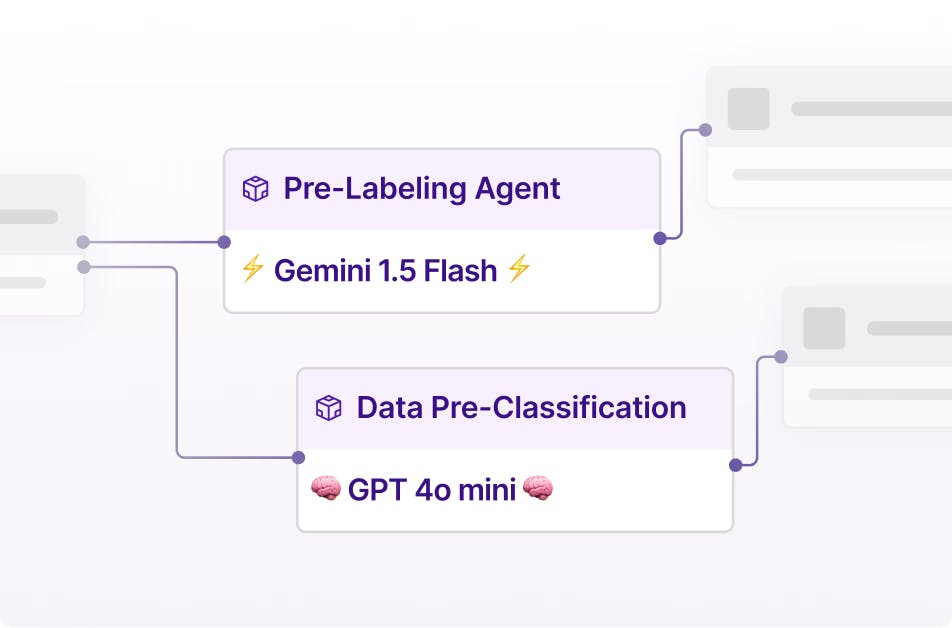

AI-assisted labeling with SOTA foundation models

Integrate state-of-the-art (SOTA) models or your own models directly into your data workflows to automate data review, pre-labeling, data classification, filtering, and more.

Curate and manage billions of images

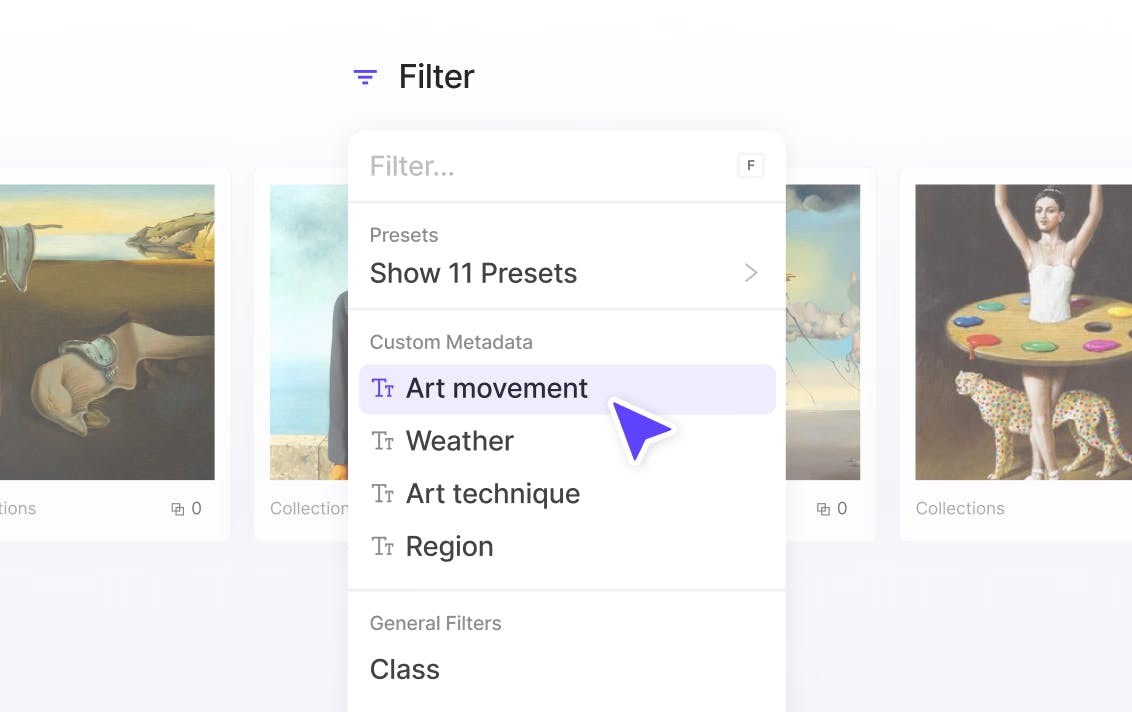

Curate using data metrics and custom metadata

Filter, sort, and organize large image datasets using 40+ data metrics and custom metadata, so you can efficiently select high-quality data for AI model development.

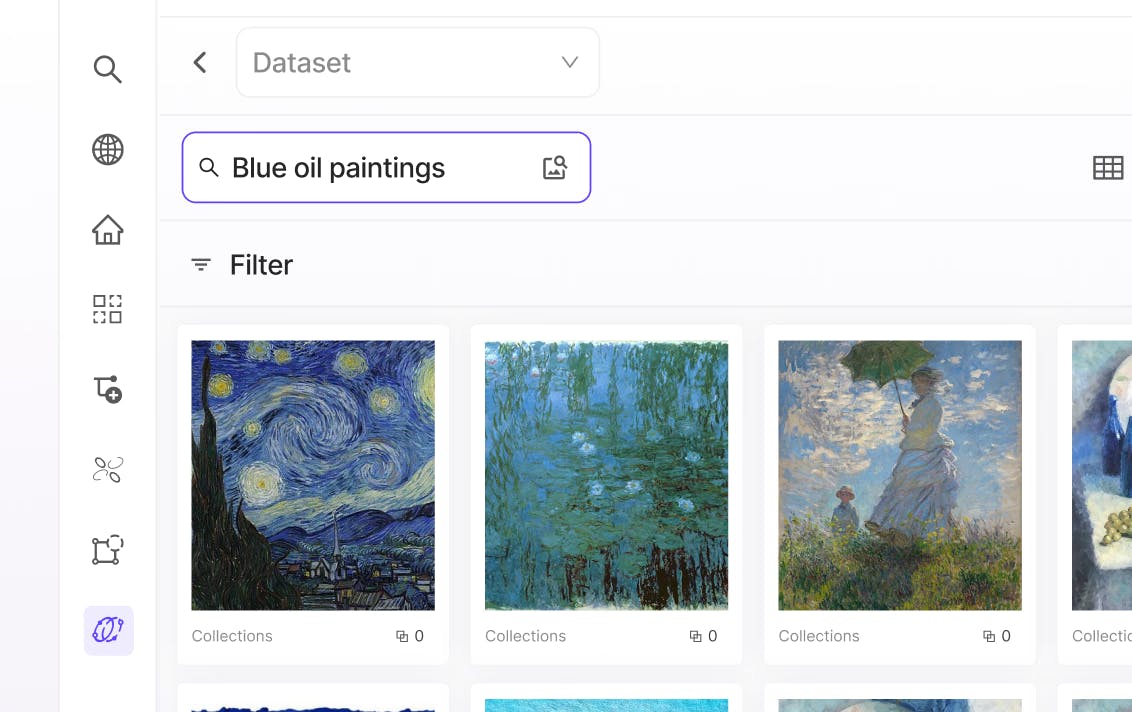

Use English to search large image datasets

Embeddings-based natural language search and similarity search level up data curation, making it easy to discover the most relevant data for AI model training and fine-tuning.

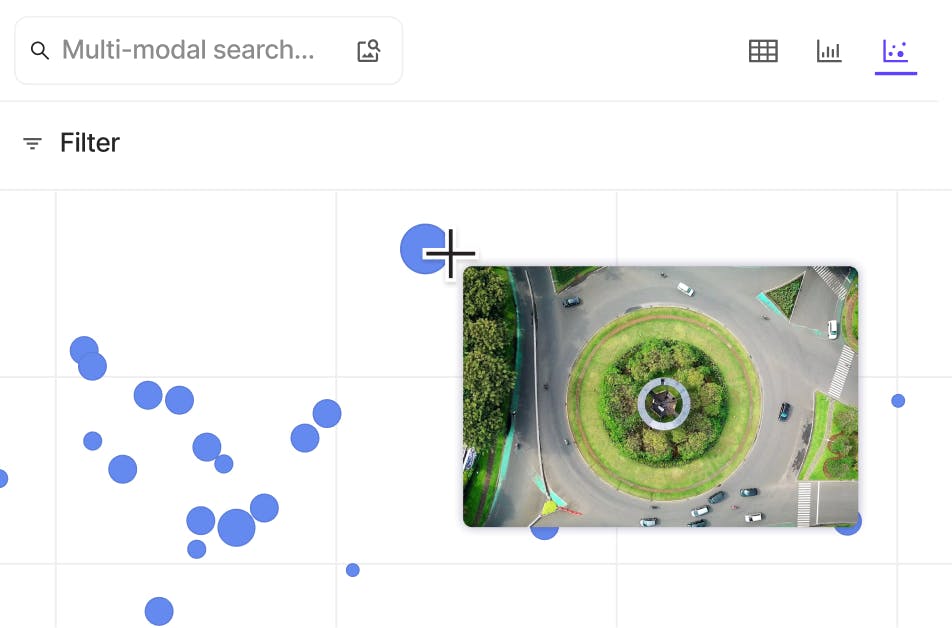

Visually explore with embeddings plots

Identify underrepresented areas, anomalies, and edge cases to build balanced, diverse training datasets that improve model robustness and performance.

Testimonial

Trusted by leading AI teams

Integrations

Integrate with your toolstack

Connect your secure cloud storage, MLOps tools, and much more with dedicated integrations that slot seamlessly into your workflows.

Security

Built with security in mind

Encord is SOC 2, HIPAA, and GDPR compliant, with robust security and encryption standards.

API/SDK

Programmatic access for developers

Use our API and SDK to programmatically access projects, datasets, and labels within the platform.

Forget fragmented workflows, annotation tools, and notebooks for building AI applications. Encord's Data Development platform accelerates every step of taking your model into production.