Encord Blog

Immerse yourself in vision

Trends, Tech, and beyond

Announcing Encord’s $30 million Series B funding

Today, we are excited to announce that Encord has raised $30 million in Series B funding to invest fully in the future of multimodal AI data development.

It’s been a little over three years since we launched our product during Y Combinator’s winter 2021 batch, where, as a two-person company, we were sitting in a California dining room, sending cold emails during the day and struggling with AI model library dependencies at night. While the company has grown significantly and evolved to meet the seismic movements in the AI space, we have not lost the two core convictions we’ve had since the early days of YC: data is the most important factor in AI, and the path to building a great company is to delight customers.

Currently the youngest AI company from Y Combinator to raise a Series B, we have grown to become the leading data development platform for multimodal AI. Our goal is to be the final AI data platform a company ever needs. We have already assisted over 200 of the world’s top AI teams, including those at Philips, Synthesia, Zeitview, Northwell Health, and Standard AI, in strengthening their data infrastructure for AI development. Our focus on creating high-quality AI data for training, fine-tuning, and validation has helped our customers produce better models, faster.

We’re thrilled to have Next47 lead the round, with participation from our existing investors, including CRV, Crane Venture Partners, and Y Combinator. The continued support from our existing investors is a testament to the importance of our mission and recognition that the AI department of the future is the IT department of the past.

It’s all about the data

The technological platform shift driven by the proliferation of AI will solve problems previously thought unsolvable by technology and, much like the rise of the internet in the previous generation, will touch every person on the planet.
This shift has been driven by rapid innovation in the compute and model layers, two of the three main pillars for building AI applications. However, innovation in data, arguably the most important, most proprietary, and most defensible ingredient, has been stagnant. The data layer has fallen victim to a concoction of hastily built in-house tools and ad-hoc orchestration of distributed workforces for annotation and validation, hurting data quality and ultimately model performance.

Powering the models of many of the world’s top AI teams at world-leading research labs and enterprises, we’ve witnessed firsthand the importance of having clean, traceable, governed, and secure data. Knowing what data to put into your model and what data to take out is a prerequisite to true production-level applications of generative and predictive AI.

At Encord, we think and talk a lot about how we can continue to support our users in their AI adoption and acceleration journey as they cross the chasm from prototype to production. That’s why we have broken down the data problem into first principles and continue to build best-in-class solutions for each of the core components of an AI data development platform: data management & curation, annotation, and model evaluation. We seek to tie solutions to these core components together in a single, seamlessly integrated solution that works with the petabyte-scale datasets that our clients leverage in their journey to monetize their data and turn it into AI.

Some call it a data engine. Some call it a data foundry. We call it a data development platform.

The future is data is the future of us

We’re especially excited and proud of our product momentum. In the last three months alone we have added an agentic data workflow system, a consensus quality control protocol, support for audio, world-leading segmentation tracking, and many other features.
We’ve also continued to make high-quality data annotation smoother and faster with the latest automation and foundation models, integrating Meta’s new Segment Anything Model into our platform less than a day after it was released and the vision-language models LLaVA and GPT-4o the same week they each became publicly available. We plan to leverage additional capital to accelerate our product roadmap so that we can support our users, existing and new, in even more ways than we have before.

With this commitment to continued innovation of the data layer, we’re proud to publicly launch Encord Index to bring ease to multimodal data management and curation. Index is an end-to-end data management platform allowing our users to visualize, search, sort, and manage their internal data at scale. Index gives AI teams full control and governance over their data, helping them understand and operationalize large private datasets in a collaborative and secure way. It integrates seamlessly with data storage such as AWS S3, GCP Cloud Storage, Azure Blob, and others to automate curation of the best data and remove uninformative or biased data. As a result, our customers have achieved a 35% reduction in dataset size by curating the best data, seeing upwards of 20% improvement in model performance, and saving hundreds of thousands of dollars in compute and human annotation costs.

“Successful state-of-the-art models, like our recently released Expressive Avatar foundation model EXPRESS-1, require highly sophisticated infrastructure. Encord Index is a high-performance system for our AI data, enabling us to sort and search at any level of complexity. This supports our continuous efforts to push the boundaries of AI avatar technology and meet customer needs,” said Victor Riparbelli, Co-Founder and CEO of Synthesia, the billion-dollar generative AI company.

We’re in the early innings of building a generational company that will play a key role in the AI revolution.
Thank you to our users, investors, Encordians, and partners, who make all of this possible every day. We are very excited for what’s to come.

Aug 13 2024

6 m

Understanding Meta’s Movie Gen Bench: New Generative AI model for Video and Audio

Generative AI has made incredible strides in producing high-quality images and text, with models like GPT-4, DALL-E, and Stable Diffusion dominating their respective domains. However, generating high-quality videos with synchronized audio remains one of the most challenging frontiers in artificial intelligence. Meta AI’s Movie Gen offers a comprehensive solution with its foundation models. Alongside this groundbreaking model comes the Movie Gen Bench, a detailed benchmark designed to assess the capabilities of models in AI video and audio generation.

In this blog, we will explore the ins and outs of Meta AI’s Movie Gen Bench, including the set of foundation models, Movie Gen, the benchmarks involved, and how these AI tools enable the fair comparison of generative models in video and audio tasks.

What is Movie Gen?

Meta’s Movie Gen is an advanced set of generative AI models that can produce high-definition videos and synchronized audio, representing a significant leap in generative media. These models excel in various tasks, including text-to-video synthesis, video personalization based on individual facial features, and precise video editing. By leveraging a transformer-based architecture and training on extensive datasets, Movie Gen achieves high-quality outputs that outperform prior state-of-the-art systems, including OpenAI’s Sora, LumaLabs, and Runway Gen3, while offering capabilities not found in current commercial large language models (LLMs). These capabilities can be found in two foundation models:

Movie Gen Video

The Movie Gen Video model is a 30 billion parameter model trained on a vast collection of images and videos. It generates HD videos of up to 16 seconds at 16 frames per second, handling complex prompts like human activities, dynamic environments, and even unusual or fantastical subjects. Movie Gen Video uses a simplified architecture, incorporating Flow Matching to generate videos with realistic motion, camera angles, and physical interactions.
This allows it to handle a range of video content, from fluid dynamics to human emotions and facial expressions.

One of the standout features of Movie Gen Video is its capability to personalize videos using a reference image of a specific individual, generating new content that maintains visual consistency with the provided image. This enables applications in personalized media creation, such as marketing or interactive experiences. It also includes instruction-guided video editing, which allows precise changes to both real and generated videos, offering a user-friendly approach to video editing through text prompts.

Movie Gen Audio

The Movie Gen Audio model focuses on generating cinematic sound effects and background music synchronized with video content. With 13 billion parameters, the model can create high-quality sound at 48 kHz and handle long audio sequences by extending audio in synchronization with the corresponding visual content.

Movie Gen Audio not only generates diegetic sounds, like footsteps or environmental noises, but also non-diegetic music that supports the mood of the video. It can handle both sound effect and music generation, blending them seamlessly to create a professional-level audiovisual experience. This model can also generate video-to-audio content, meaning it can produce soundtracks based on the actions and scenes within a video. This capability expands its use in film production, game design, and interactive media.

Together, Movie Gen Video and Audio provide a comprehensive set of tools for media generation, pushing the boundaries of what generative AI can achieve in both video and audio production.

Movie Gen Bench

While Movie Gen's technical capabilities are impressive, equally important is the Movie Gen Bench, which Meta introduced as an evaluation suite for future generative models.
Movie Gen Bench consists of two main components: Movie Gen Video Bench and Movie Gen Audio Bench, both designed to test different aspects of video and audio generation. These new benchmarks allow researchers and developers to evaluate the performance of generative models across a broad spectrum of scenarios. By providing a structured, standardized framework, Movie Gen Bench enables consistent comparisons across models, ensuring fair and objective assessment of performance.

Movie Gen Video Bench

Movie Gen Video Bench consists of 1003 prompts covering a wide variety of testing categories:

Human Activity: Captures complex motions such as limb movements and facial expressions.
Animals: Includes realistic depictions of animals in various environments.
Nature and Scenery: Tests the model's ability to generate natural landscapes and dynamic environmental scenes.
Physics: Focuses on fluid dynamics, gravity, and other elements of physical interaction within the environment, such as explosions or collisions.
Unusual Subjects and Activities: Pushes the boundaries of generative AI by prompting the model with fantastical or rare scenarios.

What makes this evaluation benchmark especially valuable is its thorough coverage of motion levels, including high, medium, and low-motion categories. This ensures the video generation model is tested for both fast-paced sequences and slower, more deliberate actions, which are often challenging to handle in text-to-video generation.

Source: Movie Gen: A Cast of Media Foundation Models

Movie Gen Audio Bench

Movie Gen Audio Bench is designed to test audio generation capabilities across 527 prompts. These prompts are paired with generated videos to assess how well the model can synchronize sound effects and music with visual content. The benchmark covers various soundscapes, including indoor, urban, nature, and transportation environments, as well as different types of sound effects, ranging from human and animal sounds to object-related noises.
Audio generation is particularly challenging, especially when it involves synchronizing sound effects with complex motion or creating background music that matches the mood of the scene. The Audio Bench enables evaluation in two critical areas:

Sound Effect Generation: Testing the model’s ability to generate realistic sound effects that match the visual action.
Joint Sound Effect and Background Music Generation: Assessing the generation of both sound effects and background music in harmony with one another.

How Movie Gen Bench Enables Fair Comparisons

A standout feature of Meta's Movie Gen Bench is its ability to support fair and transparent comparisons of generative models across different tasks. Many models showcase their best results, but Movie Gen Bench commits to a more rigorous approach by releasing non-cherry-picked data, ensuring all generated outputs are available for evaluation, not just select high-quality samples. This transparency prevents misleading or biased evaluations.

Moreover, Meta provides access to the generated videos and corresponding prompts for both the Video Bench and Audio Bench. This allows developers and researchers to test their models against the same dataset, ensuring that any improvements or shortcomings are accurately reflected and not obscured by variations in data quality or prompt difficulty.

Movie Gen Bench Evaluation and Metrics

Evaluating generative video and audio models is complex, particularly because it involves the added temporal dimension and synchronization between multiple modes. Movie Gen Bench uses a combination of human evaluations and automated metrics to rigorously assess the quality of its models.

Human Evaluation

For generative video and audio models, human evaluations are considered essential due to the subjective nature of concepts like realism, visual quality, and aesthetic appeal.
Automated metrics alone often fail to capture the nuances of visual or auditory content, especially when dealing with motion consistency, fluidity, and sound synchronization. Human evaluators are tasked with assessing generated content on three core axes:

Text Alignment: Evaluates how well the generated video or audio matches the provided text prompt. This includes two key aspects: Subject Match, which examines whether the scene's appearance, background, and lighting align with the prompt, and Motion Match, which verifies that the actions of objects and characters correspond correctly to the described actions.
Visual Quality: Focuses on the motion quality and frame consistency of the video. It includes Frame Consistency, ensuring stability of objects and environments across frames; Motion Completeness, which assesses whether there’s enough motion for dynamic prompts; Motion Naturalness, evaluating the realism of movements, particularly in human actions; and an Overall Quality assessment that determines the best visual quality based on motion and consistency.
Realness and Aesthetics: Addresses the realism and aesthetic appeal of the generated content. This includes Realness, which measures how convincingly the video mimics real-life footage or cinematic scenes, and Aesthetics, which evaluates visual appeal through factors like composition, lighting, color schemes, and overall style.

Automated Metrics

Although human evaluation is crucial, automated metrics are used to complement these assessments. In generative video models, metrics such as Fréchet Video Distance (FVD) and Inception Score (IS) have been commonly applied. However, Meta acknowledges the limitations of these metrics, particularly their weak correlation with human judgment in video generation tasks.
Fréchet Video Distance (FVD): FVD measures how close a generated video is to real videos in terms of distribution, but it often falls short when evaluating motion and visual consistency across frames. While useful for large-scale comparisons, FVD lacks sensitivity to subtleties in realness and text alignment.
Inception Score (IS): IS evaluates image generation models by measuring the diversity and quality of images, but it struggles with temporal coherence in video generation, making it a less reliable metric for this task.

Necessity of Human Evaluations

One of the main findings in evaluating generative video models is that human judgment remains the most reliable and nuanced way to assess video quality. Temporal coherence, motion quality, and alignment to complex prompts require a level of understanding that automated metrics have yet to fully capture. For this reason, human evaluations are a significant component of Movie Gen Bench’s evaluation system, ensuring that feedback is as reliable and comprehensive as possible.

Source: Movie Gen: A Cast of Media Foundation Models

Movie Gen Bench Use Cases

The Movie Gen Bench is an important tool for advancing research in generative AI, particularly in the media generation domain. With its release, future models will be held to a higher standard, requiring them to perform well across a broad range of categories, not just in isolated cases.

Potential applications of the Movie Gen models and benchmarks include:

Film and Animation: Generating realistic video sequences and synchronized audio for movies, short films, or animations.
Video Editing Tools: Enabling precise video edits through simple textual instructions.
Personalized Video: Creating customized videos based on user input, whether for personal use or marketing.
Interactive Storytelling: Generating immersive video experiences for virtual or augmented reality platforms.
By providing a benchmark for both video and audio generation, Meta is paving the way for a new era of AI-generated media that is more realistic, coherent, and customizable than ever before.

Accessing Movie Gen Bench

To access Movie Gen Bench, interested users and researchers can find the resources on its official GitHub repository. This repository includes essential components for evaluating the Movie Gen models, specifically the Movie Gen Video Bench and Movie Gen Audio Bench. The Movie Gen Video Bench is also available on Hugging Face.

Movie Gen Bench: Key Takeaways

Comprehensive Evaluation Framework: Meta’s Movie Gen Bench sets a new standard for evaluating generative AI models in video and audio, emphasizing fair and rigorous assessments through comprehensive benchmarks.
Advanced Capabilities: The Movie Gen models feature a 30-billion parameter model for video and a 13-billion parameter model for audio. By using Flow Matching for efficiency and Temporal Autoencoder (TAE) for capturing temporal dynamics, these models generate realistic videos that closely align with text prompts, marking significant advancements in visual fidelity and coherence.
Robust Evaluation Metrics: Human evaluations, combined with automated metrics, provide a nuanced understanding of generated content, ensuring thorough assessments of text alignment, visual quality, and aesthetic appeal.
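To make the Fréchet Video Distance discussed under Automated Metrics more concrete, here is a minimal sketch of the underlying Fréchet distance between two sets of feature vectors. It is not Meta's evaluation code. It assumes each feature set is roughly Gaussian and, to stay within the Python standard library, it also assumes a diagonal covariance, which the full metric does not. In practice the features would come from a pretrained video network; the tiny lists below are made-up placeholders, and the function name is hypothetical.

```python
import math
from statistics import fmean, pvariance


def diagonal_frechet_distance(real_features, generated_features):
    """Squared Frechet distance between two feature sets.

    Assumption: each set is modelled as a Gaussian with a DIAGONAL covariance,
    so the distance reduces to a sum over feature dimensions of
    (mean gap)^2 + (standard deviation gap)^2.
    """
    dims = len(real_features[0])
    distance = 0.0
    for d in range(dims):
        real_column = [row[d] for row in real_features]
        gen_column = [row[d] for row in generated_features]
        mean_gap = fmean(real_column) - fmean(gen_column)
        std_gap = math.sqrt(pvariance(real_column)) - math.sqrt(pvariance(gen_column))
        distance += mean_gap ** 2 + std_gap ** 2
    return distance


# Placeholder 2-dimensional "features" for two real and two generated clips.
real = [[0.0, 1.0], [2.0, 3.0]]
generated = [[1.0, 1.0], [3.0, 3.0]]
score = diagonal_frechet_distance(real, generated)
```

A lower score means the two feature distributions are closer. Because the score only compares summary statistics of whole sets, two videos with very different motion quality can contribute almost identically, which is one way to see why the metric can disagree with human judgment.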

Oct 21 2024

5 M

CoTracker3: Simplified Point Tracking with Pseudo-Labeling by Meta AI

Point tracking is essential in computer vision for tasks like 3D reconstruction, video editing, and robotics. However, tracking points accurately across video frames, especially with occlusions or fast motion, remains challenging. Traditional models rely on complex architectures and large synthetic datasets, limiting their performance in real-world videos.

Meta AI’s CoTracker3 addresses these issues by simplifying previous trackers and improving data efficiency. It introduces a pseudo-labeling approach, allowing the model to train on real videos without annotations, making it more scalable and effective for real-world use. This blog provides a detailed overview of CoTracker3, explaining how it works, its innovations over previous models, and the impact it has on point tracking.

What is CoTracker3?

CoTracker3 is the latest point tracking model from Meta AI, designed to track multiple points in videos even when those points are temporarily obscured or occluded. It builds on the foundation laid by earlier models such as TAPIR and CoTracker, while significantly simplifying their architecture.

Source: CoTracker3: Simpler and Better Point Tracking by Pseudo-Labelling Real Videos

The key innovation in CoTracker3 is its ability to leverage pseudo-labeling, a semi-supervised learning approach that uses real, unannotated videos for training. This approach, combined with a leaner architecture, enables CoTracker3 to outperform previous models like BootsTAPIR and LocoTrack, all while using 1,000 times fewer training videos.

Evolution of Point Tracking: From TAPIR to CoTracker3

Early Developments in Point Tracking

The first breakthroughs in point tracking came from models like TAPIR and CoTracker, which leveraged neural networks to track points across video frames. TAPIR introduced the idea of using a global matching stage to improve tracking accuracy over long video sequences, refining point tracks by comparing them across multiple frames.
This led to significant improvements in tracking, but the model’s reliance on complex components and synthetic training data limited its scalability.

CoTracker’s Joint Tracking

CoTracker introduced a transformer-based architecture capable of tracking multiple points jointly. This allowed the model to handle occlusions more effectively by using the correlations between tracks to infer the position of hidden points. CoTracker’s joint tracking approach made it a robust model, particularly in cases where points temporarily disappeared from view.

For more information on CoTracker, read the blog Meta AI’s CoTracker: It is Better to Track Together for Video Motion Prediction.

Simplification with CoTracker3

While TAPIR and CoTracker were both effective, their reliance on synthetic data and complex architecture left room for improvement. CoTracker3 simplifies the architecture by removing unnecessary components, such as the global matching stage, and streamlining the tracking process. These changes make CoTracker3 not only faster but also more efficient in its use of data.

How CoTracker3 Works

Simplified Architecture

Unlike previous models that used complex modules for processing correlations between points, CoTracker3 employs a multi-layer perceptron (MLP) to handle 4D correlation features. This change reduces computational overhead while maintaining high performance.

Another key feature is CoTracker3’s iterative update mechanism, which refines point tracks over several iterations. Initially, the model makes a rough estimate of the points’ positions and then uses a transformer to refine these estimates by updating the tracks iteratively across frames. This allows the model to progressively improve its predictions.

Handling Occlusions with Joint Tracking

One of CoTracker3's standout features is its ability to track points that become occluded or temporarily hidden in the video.
This is made possible through cross-track attention, which allows the model to track multiple points simultaneously. By using the information from visible points, CoTracker3 can infer the positions of occluded points, making it particularly effective in challenging real-world scenarios where objects move in and out of view.

Two Modes: Online and Offline

CoTracker3 can operate in two modes: online and offline. In the online mode, the model processes video frames in real time, making it suitable for applications that require immediate feedback, such as robotics. In offline mode, CoTracker3 processes the entire video sequence in one go, tracking points forward and backward in time. This mode is particularly useful for long-term tracking or when occlusions last for several frames, as it allows the model to reconstruct the trajectory of points that disappear and reappear in the video.

Pseudo-Labeling: Key to CoTracker3’s Efficiency

The Challenge of Annotating Real Videos

One of the biggest hurdles in point tracking is the difficulty of annotating real-world videos. Unlike synthetic datasets, where points can be labeled automatically, real videos require manual annotation, which is time-consuming and prone to errors. As a result, many previous tracking models have relied on synthetic data for training. However, these models often perform poorly when applied to real-world scenarios due to the gap between synthetic and real-world environments.

CoTracker3’s Pseudo-Labeling Approach

CoTracker3 addresses this issue with a pseudo-labeling approach. Instead of relying on manually annotated real videos, CoTracker3 generates pseudo-labels using existing tracking models trained on synthetic data. These pseudo-labels are then used to fine-tune CoTracker3 on real-world videos. By leveraging real data in this way, CoTracker3 can learn from the complexities and variations of real-world scenes without the need for expensive manual annotation.
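The pseudo-labeling idea can be sketched in a few lines. The snippet below is a toy illustration, not the CoTracker3 training code: the "teachers" are simple stand-in functions rather than pretrained trackers, a "video" is reduced to a list of per-frame (dx, dy) shifts, and choosing one teacher at random per video is an assumption of this sketch. All function names are hypothetical.

```python
import random


def teacher_exact(video, queries):
    """Stand-in teacher: moves each query point by the accumulated frame shift."""
    tracks = []
    for x, y in queries:
        path, cx, cy = [], x, y
        for dx, dy in video:
            cx, cy = cx + dx, cy + dy
            path.append((cx, cy))
        tracks.append(path)
    return tracks


def teacher_rounded(video, queries):
    """Second stand-in teacher: same tracks, snapped to whole pixels."""
    exact = teacher_exact(video, queries)
    return [[(round(px), round(py)) for px, py in path] for path in exact]


def make_pseudo_labels(videos, queries, teachers, seed=0):
    """Label unannotated videos with tracks predicted by frozen teachers."""
    rng = random.Random(seed)
    dataset = []
    for video in videos:
        teacher = rng.choice(teachers)    # assumption: one random teacher per video
        tracks = teacher(video, queries)  # predictions are used as training labels
        dataset.append({"video": video, "queries": queries, "tracks": tracks})
    return dataset


# Two unannotated toy "videos" and two query points to follow.
videos = [[(1, 0), (1, 0)], [(0, 2)]]
queries = [(0, 0), (5, 5)]
pseudo_labeled = make_pseudo_labels(videos, queries, [teacher_exact, teacher_rounded])
```

The resulting `pseudo_labeled` list plays the role of an annotated dataset: a student tracker would then be fine-tuned to reproduce the stored tracks, with no human labeling involved.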
This approach significantly reduces the amount of data required for training. For example, CoTracker3 uses 1,000 times fewer real videos than models like BootsTAPIR, yet it achieves superior performance.

Read the paper available on arXiv authored by Nikita Karaev, Iurii Makarov, Jianyuan Wang, Natalia Neverova, Andrea Vedaldi and Christian Rupprecht: CoTracker3: Simpler and Better Point Tracking by Pseudo-Labelling Real Videos.

Performance and Benchmarking

Tracking Accuracy

CoTracker3 has been tested on several point tracking benchmarks, including TAP-Vid and Dynamic Replica, and consistently outperforms previous models like TAPIR and LocoTrack. One of its key strengths is its ability to accurately track occluded points, thanks to its cross-track attention and iterative update mechanism.

In particular, CoTracker3 excels at handling occlusions, which have traditionally been a challenge for point trackers. This makes it an ideal solution for real-world applications where objects frequently move in and out of view.

Efficiency and Speed

In addition to its accuracy, CoTracker3 is also faster and more efficient than many of its predecessors. Its simplified architecture allows it to run 27% faster than LocoTrack, one of the fastest point trackers to date. Despite its increased speed, CoTracker3 maintains high performance across a range of video analysis tasks, from short clips to long sequences with complex motion and occlusions.

Data Efficiency

CoTracker3’s most notable advantage is its data efficiency. By using pseudo-labels from a relatively small number of real videos, CoTracker3 can outperform models like BootsTAPIR, which require millions of videos for training. This makes CoTracker3 a more scalable solution for point tracking, especially in scenarios where access to large annotated datasets is limited.

Applications of CoTracker3

The ability of CoTracker3 to track points accurately, even in challenging conditions, opens up new possibilities in several fields.
3D Reconstruction

CoTracker3 can be used for high-precision 3D reconstruction of objects or scenes from video footage. Its ability to track points across frames with minimal drift makes it particularly useful for industries like architecture, animation, and virtual reality, where accurate 3D models are essential.

Robotics

In robotics, CoTracker3’s online mode allows robots to track objects in real time, even as they move through dynamic environments. This is critical for tasks such as object manipulation and autonomous navigation, where accurate and immediate feedback is necessary.

Video Editing and Special Effects

For video editors and visual effects artists, CoTracker3 offers a powerful tool for tasks such as motion tracking and video stabilization. Its ability to track points through occlusions ensures that effects like digital overlays or camera stabilization remain consistent throughout the video, even when the tracked object moves in and out of view.

CoTracker3’s Failure Cases

While CoTracker3 excels in tracking points across a variety of scenarios, it faces challenges when dealing with featureless surfaces. For instance, the model struggles to track points on surfaces like the sky or bodies of water, where there are few distinct visual features for the algorithm to lock onto.

Source: CoTracker3: Simpler and Better Point Tracking by Pseudo-Labelling Real Videos

In such cases, the lack of texture or identifiable points leads to unreliable or lost tracks. These limitations highlight scenarios where CoTracker3's performance may degrade, especially in environments with minimal visual detail.

How to Implement CoTracker3?

CoTracker3 is available on GitHub and can be explored through a Colab demo or on Hugging Face Spaces. Getting started is simple: upload your .mp4 video or select one of the provided example videos.
Conclusion

CoTracker3 marks a significant advancement in point tracking, offering a simplified architecture that delivers both improved performance and greater efficiency. By eliminating unnecessary components and introducing a pseudo-labeling approach for training on real videos, CoTracker3 sets a new standard for point tracking models. Its ability to track points accurately through occlusions, combined with its speed and data efficiency, makes it a versatile tool for a wide range of applications, from 3D reconstruction to robotics and video editing.

Meta AI’s CoTracker3 demonstrates that simpler models can achieve superior results, paving the way for more scalable and effective point tracking solutions in the future.

Oct 18 2024

5 M

Unifying AI Data Toolstack: How to Streamline Your AI Workflows

With data becoming an indispensable asset for organizations, streamlining data processes is crucial for effective collaboration and decision-making. An online survey by Gartner found that 92% of businesses plan to invest in artificial intelligence (AI) tools worldwide.

While the modern data stack allows a company to use multiple tools for developing AI-based solutions, its lack of structure and complexity make it challenging to operate. A more pragmatic approach is the unified data stack, which consolidates and integrates different platforms into a single framework. This method allows for scalability, flexibility, and versatility across multiple use cases.

In this article, we will discuss what a unified AI data tool stack is, its benefits, components, implementation steps, and more to help you unify your AI toolkit efficiently.

What is an AI Data Toolstack?

An AI data tool stack is a collection of frameworks and technologies that streamline the data life cycle from collection to disposal. It allows for efficient use of enterprise data assets, increasing the scalability and flexibility of AI initiatives.

Figure: Data Lifecycle

Like a traditional tech stack, the AI data tool stack can consist of three layers: the application layer, model layer, and infrastructure layer.

Application Layer: The application layer sits at the top of the stack and comprises interfaces and tools that allow users to interact with a data platform. Its goal is to enable teams to make data-driven decisions using robust data analytics solutions. It can consist of dashboards, business intelligence (BI), and analytics tools that help users interpret complex data through intuitive visualizations.
Model Layer: This layer includes all the computing resources and tools required for preparing training data to build machine learning (ML) models. It can consist of popular data platforms for annotating and curating data.
The layer may also offer a unified ecosystem that helps data scientists automate data curation with AI models for multiple use cases.
Infrastructure Layer: This layer forms the foundation of the AI data stack, which consists of data collection and storage solutions to absorb and pre-process extensive datasets. It can also include monitoring pipelines to evaluate data integrity across multiple data sources. Cloud-based data lakes, warehouses, and integration tools fall under the infrastructure layer.

Challenges of a Fragmented Data Tool Stack

Each layer of the AI data tool stack requires several tools to manage data for AI applications. Organizations can choose a fragmented tool stack with disparate solutions or a unified framework. However, managing a fragmented stack is more challenging. The list below highlights the issues associated with a fragmented solution.

Integration Issues: Integration between different tools can be challenging, leading to data flow and processing bottlenecks.
Poor Collaboration: Teams working on disparate tools can cause data inconsistencies due to poor communication between members.
Learning Curve: Each team must learn to use all the tools in the fragmented stack, making the training and onboarding process tedious and inefficient.
Tool Sprawl: A fragmented data tool stack can have multiple redundancies due to various tools serving the same purpose.
High Maintenance Costs: Maintaining a fragmented tool stack means developing separate maintenance procedures for each platform, which increases downtime and lowers productivity.

Due to these problems, organizations should adopt a unified platform to improve AI workflows.

Benefits of Unifying AI Data Tool Stack

A unified AI data tool stack can significantly improve data management and help organizations with their digital transformation efforts. The list below highlights a few benefits of unifying data platforms into a single framework.
Automation: Unified data tool stacks help automate data engineering workflows through extract, transform, and load (ETL) pipelines. The in-built validation checks ensure incoming data is clean and accurate, requiring minimal human effort. Enhanced Data Governance: Data governance comprises policies and guidelines for using and accessing data across different teams. A unified tool stack offers an integrated environment to manage access and monitor compliance with established regulations. Better Collaboration: Unifying data tools helps break data silos by allowing teams from different domains to use data from a single and shared source. It improves data interpretability and enables team members to distribute workloads more efficiently. Reduced Need to Switch Between Tools: Users can perform data-related tasks on a single platform instead of switching between tools. This approach increases productivity and mitigates integration issues associated with different tools. Learn how to build a data-centric pipeline to boost ML model performance Components of an AI Data Tool Stack Earlier, we discussed how the AI data tool stack consists of three layers, each containing diverse toolsets. The following sections offer more detail regarding the tool types an AI data stack may contain. Data Collection and Ingestion Tools Data collection and ingestion begin the data lifecycle. The ingestion tools help collect multiple data types, including text, images, videos, and other forms of structured data. They contain data connectors integrating various data sources with an enterprise data storage platform. The process can take place in real time, where the tools continuously transfer a data stream into a storage repository. In contrast, if the information flow is significant, they may fetch information in batches at regular intervals. ETL pipelines such as Talend and Apache NiFi help extract and transfer data to data lakehouses, data warehouses, or relational databases. 
These storage frameworks may include cloud-based solutions like Amazon AWS, Google Cloud, or Microsoft Azure. Data Preprocessing and Transformation Pipelines The model layer may contain data preprocessing and transformation tools that fix data format inconsistencies, outliers, and other anomalies in raw data. Such tools offer complex pipelines with built-in checks that compute several quality metrics. They compare these metrics against predefined benchmarks and notify relevant teams if data samples do not meet the desired quality standards. During this stage, transformation frameworks may apply aggregation, normalization, and segmentation techniques to make the data usable for model-building. Data Annotation Platforms As highlighted earlier, the model layer can also contain data annotation tools to label unstructured data such as images, text, and video footage. Labeling platforms help experts prepare high-quality training data to build models for specific use cases. For example, image annotation platforms can help experts label images for computer vision (CV) tasks, including object detection, classification, and segmentation. The tools offer multiple labeling techniques for different data types, such as bounding boxes, polygons, named entity recognition, and object tracking. Some providers offer solutions with AI-based labeling that help automate the annotation process. In addition, annotation solutions can compute metrics to evaluate labeling quality. The function lets experts identify and resolve labeling errors before feeding data with incorrect labels to model-development pipelines. Model Development Frameworks After preparing the training data, the next stage involves building and deploying a model using relevant development frameworks. These frameworks may include popular open-source ML libraries such as PyTorch and TensorFlow or more specialized tools for creating complex AI platforms. 
The tools may also offer features to log model experiments and perform automated hyperparameter tuning for model optimization. Monitoring and Analytics Solutions The next step includes tools for monitoring and analyzing data from the production environment after model deployment. These solutions help developers identify issues at runtime by processing customer data in real time. For example, they may compute data distributions to detect data drift or bias and track model performance metrics like accuracy and latency. They may also perform predictive analytics using ML algorithms to notify teams of potential issues before they occur. Monitoring resource usage can also be a helpful feature of these tools. The functionality can allow developers to assess memory utilization, CPU usage, and workload distribution. Read our blog to find out about the top 6 data management tools for computer vision How to Select Unified Tools? Building a unified data tool stack requires organizations to invest in compatible tools that help them perform data management tasks efficiently. Below are a few factors to consider when choosing the appropriate tool for your use case. Scalability: The tools must handle changing data volumes and allow enterprises to scale seamlessly according to business needs and customer expectations. Functionality: Select frameworks that help you manage data across its entire lifecycle. They must offer advanced features to ingest, process, transform, and annotate multiple data types. Ease of Use: Search for solutions with intuitive user interfaces, interactive dashboards, and visualizations. This will speed up the onboarding process for new users and allow them to use all the relevant features. Security: Ensure the tools provide robust, industry-standard security protocols to protect data privacy. They must also have flexible access management features to allow relevant users to access desired data on demand. 
Integration: Choose tools compatible with your existing IT infrastructure and data sources. You can find tools that offer comprehensive integration capabilities with popular data solutions. Cost-effectiveness: Consider the upfront and maintenance costs of third-party tools and in-house frameworks. While in-house solutions provide more customizability, they are challenging to develop and may require the organization to hire an additional workforce. Steps to Unify Data Tool Stack Unifying a data tool stack can be lengthy and iterative, involving multiple stakeholders from different domains. The steps below offer some guidelines to streamline the unification process. Identify Gaps in Current Tool Usage: Start by auditing existing tools and identify redundancies and inefficiencies. For instance, it may be challenging to transfer raw data from a data repository to a modeling framework due to integration issues. You can assess how such situations impact productivity, collaboration, and data quality. Develop an AI Workflow: Define your desired AI workflow, including everything from the data collection phase to model deployment and production monitoring. This will help you identify the appropriate tools required for each stage in the workflow. Select the Required Tools: The earlier section highlighted the factors you must consider when choosing tools for your data stack. Ensure you address all those factors when building your tool stack. Integrate and Migrate: Integrate the new tool stack with your backend infrastructure and begin data migration. Schedule data transfer jobs during non-production hours to ensure the migration process does not disrupt existing operations. Monitor and Scale: After the migration, you can deploy monitoring tools to determine model and data quality using pre-defined key performance indicators (KPIs). If successful, you can scale the system by adding more tools and optimizing existing frameworks to meet growing demand. 
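The first step above, auditing existing tools for redundancies and gaps, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the stage names, the `audit_tool_stack` helper, and the placeholder tool names are all hypothetical, and a real audit would draw on your own inventory.

```python
from collections import defaultdict

# Hypothetical lifecycle stages a unified stack should cover.
STAGES = ["ingestion", "preprocessing", "annotation", "modeling", "monitoring"]


def audit_tool_stack(inventory, stages=STAGES):
    """Return (redundant, gaps) for a mapping of tool name -> stages it covers.

    redundant: stages served by more than one tool (possible tool sprawl)
    gaps: stages that no tool in the inventory covers
    """
    tools_by_stage = defaultdict(list)
    for tool, covered in inventory.items():
        for stage in covered:
            tools_by_stage[stage].append(tool)
    redundant = {s: sorted(t) for s, t in tools_by_stage.items() if len(t) > 1}
    gaps = [s for s in stages if s not in tools_by_stage]
    return redundant, gaps


# Placeholder inventory; replace with the tools your teams actually use.
inventory = {
    "tool_a": ["ingestion", "preprocessing"],
    "tool_b": ["preprocessing"],
    "tool_c": ["annotation"],
    "tool_d": ["annotation", "modeling"],
}
redundant, gaps = audit_tool_stack(inventory)
print("Redundant stages:", redundant)
print("Uncovered stages:", gaps)
```

In this toy inventory, preprocessing and annotation are each served by two tools, while monitoring is not covered at all, which is the kind of finding that feeds the workflow and tool-selection steps.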
Encord: A Unified Platform for Computer Vision Encord is an end-to-end data-centric AI platform for managing, curating, annotating and evaluating large-scale datasets for CV tasks. Encord Annotate: Includes basic and advanced features for labeling image data for multiple CV use cases. Encord Active: Supports active learning pipelines for evaluating data quality and model performance. Encord Index: A data management system that allows AI teams to visualize, sort, and control their data. Key Features Scalability: Encord lets you scale AI projects by supporting extensive datasets. You can also create multiple datasets to manage larger projects and upload up to 200,000 frames per video at a time. Functionality: The platform consists of multiple features to filter and slice datasets in the Index Explorer and export for labeling in one click. It also supports deep search, filtering, and metadata analysis. You can also build nested relationship structures in your data schema to improve the quality of your model output. Ease-of-Use: Encord offers an easy-to-use, no-code UI with powerful search functionality for quick data discovery. Users can provide queries in everyday language to search for images and use relevant filters for efficient data retrieval. Data Security: The platform is compliant with major regulatory frameworks, such as the General Data Protection Regulation (GDPR), System and Organization Controls 2 (SOC 2 Type 1), AICPA SOC, and Health Insurance Portability and Accountability Act (HIPAA) standards. It also uses advanced encryption protocols to protect data privacy. Integrations: Encord lets you connect with your native cloud storage buckets and programmatically control data workflows. It offers an advanced Python SDK and API to facilitate easy export into JSON and COCO formats. G2 Review Encord has a rating of 4.8/5 based on 60 reviews. Users like the platform’s ontology feature, which helps them define categories for extensive datasets. 
In addition, its collaborative features and granular annotation tools help users improve annotation accuracy. Unifying AI Data Tool Stack: Key Takeaways With an effective AI data tool stack, organizations can boost the performance of their AI applications and adapt quickly to changing user expectations. Below are a few critical points regarding a unified AI data tool stack. Benefits of an AI Data Tool Stack: A unified framework helps organizations automate data-related workflows, improve data governance, unify data silos, and reduce context-switching. Components of an AI Data Tool Stack: Components correspond to each stage of the data lifecycle and include tools for data ingestion, pre-processing, transformation, annotation, and monitoring. Encord for Computer Vision: Encord is an end-to-end CV data platform that offers a unified ecosystem for curating, annotating, and evaluating unstructured data, including images and videos. FAQs What is Unified Data Analytics? Unified data analytics integrates all aspects of data processing to deliver data-driven insights from analyzing extensive datasets. Why is unified data essential? Organizations can automate data workflows, increase collaboration, and enhance data governance with a unified data framework. How do unified data analytics tools create actionable insights? Unified data analytics consolidates the data ingestion, pre-processing, and transformation stages into a single workflow, making data usable for model-building and complex analysis. What are the best practices for integrating AI tools into a unified data tool stack? Best practices include identifying gaps in the existing tool stack, establishing data governance, and implementing monitoring solutions to evaluate the system’s efficiency. How can integrating AI tools improve data management across different business units? The integration allows different business units to get consistent data from multiple sources. 
They can quickly share and use the standardized data from various domains to conduct comprehensive analyses.
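The monitoring section of this article mentions computing data distributions to detect data drift. As a minimal sketch of that idea, the snippet below compares a reference sample with a production sample using the Population Stability Index (PSI). The choice of PSI, the `psi` helper, the bin count, and the commonly quoted 0.2 alert threshold are all assumptions made for illustration; the article does not prescribe a specific drift metric.

```python
import math


def psi(reference, production, bins=10, eps=1e-6):
    """Population Stability Index between two non-empty numeric samples.

    Bin edges are taken from the reference sample; production values that
    fall outside that range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref, prod = histogram(reference), histogram(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


reference = [i / 100 for i in range(100)]         # spread over [0, 1)
production = [0.5 + i / 200 for i in range(100)]  # shifted toward [0.5, 1)

score = psi(reference, production)
# 0.2 is a widely used rule of thumb, not a universal standard.
print("drift suspected" if score > 0.2 else "no significant drift", round(score, 3))
```

A monitoring job could run a check like this per feature on a schedule and notify the relevant team when the score crosses the chosen threshold.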

Oct 16 2024

5 M

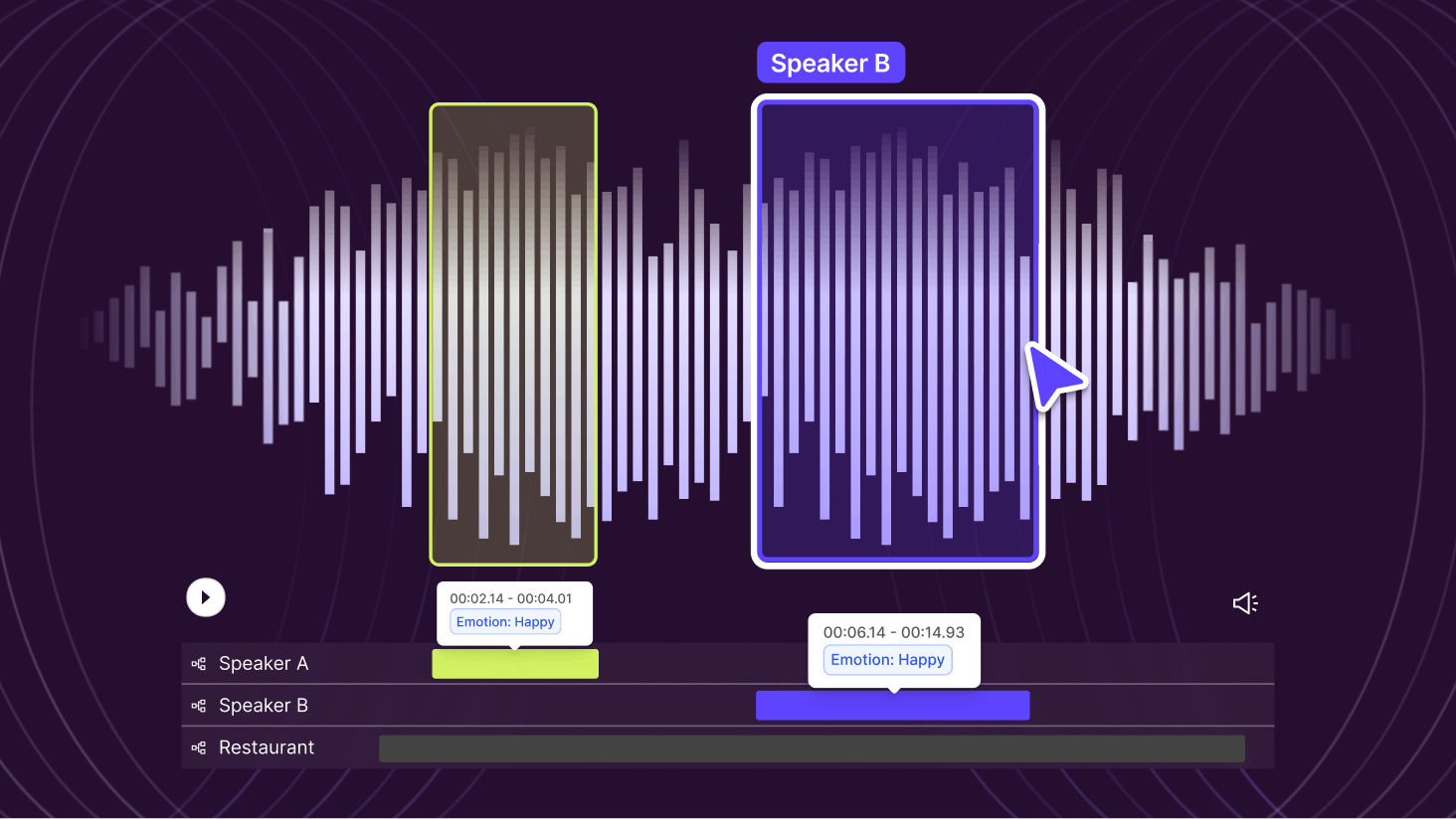

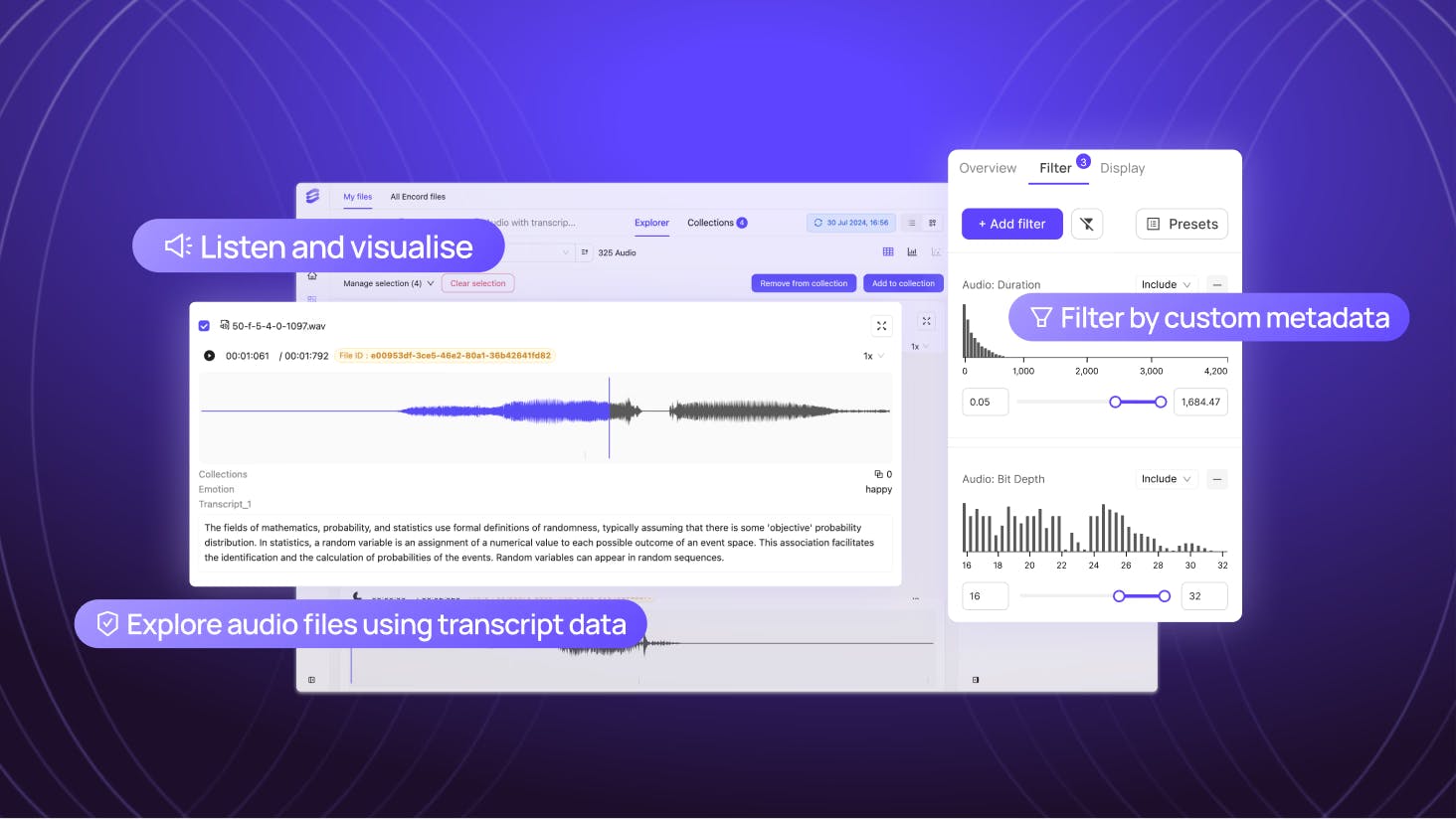

Annotate Audio Data In Encord

From refining speech recognition systems to classifying environmental sounds and detecting emotions in voice recordings, accurately annotated data forms the backbone of high-quality Audio AI. Announcing Encord’s Audio Annotation Capabilities We are excited to introduce Encord’s new audio annotation capability, specifically designed to enable effective annotation workflows for AI teams working with any type and size of audio dataset. Built with flexibility and user collaboration in mind, Encord’s comprehensive label editor offers a complete solution for annotating a wide range of audio data types and use cases. Flexible Classification of Audio Attributes Within the Encord label editor, users can accurately classify multiple attributes within the same audio file using customizable hotkeys or the intuitive user interface. Labelers can also adjust attribute classes with precision down to the millisecond. Built to support a wide range of annotation requirements, the audio label editor enables teams to annotate sound events in audio files, such as distinct noises, and to classify emotions, language, and speakers in speech tracks, among other use cases. Efficient Editing and Review Process Encord offers a robust review platform that simplifies the process of reviewing and editing annotations. Instead of focusing solely on attributes, users can edit specific time ranges and classification types, allowing for more targeted revisions. This feature is particularly valuable for projects involving long-form or complex audio files, where pinpointing specific sections for correction can significantly speed up the workflow. Layered and Overlapping Annotations The Encord label editor can handle overlapping annotations. Whether you're annotating multiple speakers in a conversation or different instruments in a musical composition, Encord allows you to annotate various layers of audio data simultaneously. 
This ensures that multiple valuable annotations are captured in the same file and complex audio events are annotated in detail. Integrated Collaboration Tools For teams working on large-scale audio projects, Encord’s platform offers unified collaboration features. Multiple annotators and reviewers can work simultaneously on the same project, facilitating a smoother, more coordinated workflow. The platform’s interface enables users to track changes and progress, reducing the likelihood of errors or duplicated efforts. Encord’s Commitment To Keeping Pace With Industry Advancements As the demand for audio and multimodal AI continues to grow, Encord’s audio feature set is designed to meet the evolving needs of pioneering AI teams in the field. Some of the latest industry trends, and how Encord’s capabilities align with them, include: Multimodal AI Models The rise of multimodal AI models, which combine audio with text and vision modalities, has significantly increased the need for well-annotated, high-quality audio data. Encord’s ability to classify multiple attributes, handle overlapping classifications within audio files, and support complex datasets makes it an ideal tool for professionals developing multimodal systems. Models like OpenAI’s GPT-4o, Meta’s SeamlessM4T, and many others that combine speech and text in tasks such as translation rely heavily on accurately annotated audio data for training. SOTA AI Models Transform And Create Audio Data Recent foundation models such as OpenAI’s Whisper and Google’s AudioLM achieve breakthrough performance on a number of tasks that can accelerate audio curation and annotation workflows. AI teams can use Encord Agents to integrate these new models, as well as their own, and orchestrate automated audio transcription, pre-labeling and quality control to significantly improve the efficiency and quality of their audio data pipelines. 
Emotion and Sentiment Analysis With the growing emphasis on audio as a medium to interpret emotion and sentiment, particularly in industries like healthcare, customer service, and entertainment, accurate audio annotation is crucial. Encord’s platform allows users to classify nuanced emotions and sentiments within voice recordings, supporting the development of models capable of understanding and interpreting human emotions. Annotate Audio Data on Encord As AI and machine learning projects increasingly incorporate audio data, the tools to annotate and manage that data must evolve. Encord’s audio annotation capabilities are designed to help AI teams streamline their data workflows, enhance collaboration, and manage the complexities of large audio datasets. Whether you’re working on speech recognition, sound classification, or sentiment analysis, Encord provides a flexible, user-friendly platform that adapts to the needs of any audio and multimodal AI project regardless of complexity or size.
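To make the idea of layered, overlapping annotations with millisecond precision more concrete, here is a small sketch of how such labels could be represented and checked for overlap. The `AudioAnnotation` class and its field names are hypothetical and chosen for illustration; they are not Encord's label schema or SDK.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class AudioAnnotation:
    """One labeled time range in an audio file (hypothetical structure)."""
    label: str
    start_ms: int  # inclusive start, in milliseconds
    end_ms: int    # exclusive end, in milliseconds

    def overlaps(self, other):
        # Two half-open ranges overlap when each starts before the other ends.
        return self.start_ms < other.end_ms and other.start_ms < self.end_ms


def overlapping_pairs(annotations):
    """Return the label pairs whose time ranges overlap."""
    return [
        (a.label, b.label)
        for a, b in combinations(annotations, 2)
        if a.overlaps(b)
    ]


annotations = [
    AudioAnnotation("speaker_1", 0, 4_200),
    AudioAnnotation("speaker_2", 3_500, 9_000),
    AudioAnnotation("door_slam", 8_950, 9_300),
    AudioAnnotation("music", 12_000, 20_000),
]
print(overlapping_pairs(annotations))
```

Here the two speakers talk over each other between 3.5 s and 4.2 s, and the door slam overlaps the end of the second speaker's turn, so both pairs are reported while the music segment stands alone.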

Oct 11 2024

5 M

Vision Fine-Tuning with OpenAI's GPT-4: A Step-by-Step Guide

GPT-4o, previously known primarily for its text and vision capabilities in generative AI, has gained an exciting feature in its latest update: fine-tuning capabilities for vision. This allows users to tailor the AI model to their unique image-based tasks, enhancing its multimodal abilities for specific use cases. In this blog, we’ll walk through how you can perform vision fine-tuning using OpenAI’s tools and API, covering the technical steps and considerations. What is Vision Fine-Tuning? Before diving into the "how," let’s clarify the "what." Fine-tuning refers to taking a pre-trained model, like OpenAI’s GPT-4o model, and further training it on a specialized dataset to perform a specific task. Traditionally, fine-tuning was focused on text-based tasks such as sentiment analysis or named entity recognition in large language models (LLMs). However, with GPT-4o’s ability to handle both text and images, you can now fine-tune the model to excel at tasks involving visual data. Multimodal Models Multimodal models can understand and process multiple types of input simultaneously. In GPT-4o’s case, this means it can interpret both images and text, making it a valuable tool for various applications, such as: Image classification: Fine-tuning GPT-4o to recognize custom visual patterns (e.g., detecting logos, identifying specific objects). Object detection: Enhancing GPT-4o’s ability to locate and label multiple objects within an image. Image captioning: Fine-tuning the model to generate accurate and detailed captions for images. Why Fine-Tune GPT-4o for Vision? GPT-4o is a powerful generalist model, but in specific domains, like medical imaging or company-specific visual data, a general model might not perform optimally. Fine-tuning allows you to specialize the model for your unique needs. For example, a healthcare company could fine-tune the GPT-4o model to interpret X-rays or MRIs, while an e-commerce platform could tailor the model to recognize its catalog items in user-uploaded photos. 
Fine-tuning also offers several key advantages over prompting: Higher Quality Results: Fine-tuning can yield more accurate outputs, particularly in specialized domains. Larger Training Data: You can train the model on significantly more examples than can fit in a single prompt. Token Savings: Shorter prompts reduce the number of tokens needed, lowering the overall cost. Lower Latency: With fine-tuning, requests are processed faster, improving response times. By customizing the model through fine-tuning, you can extract more value and achieve better performance for domain-specific applications. Setting Up Fine-Tuning for Vision in GPT-4 Performing vision fine-tuning is a straightforward process, but there are several steps to prepare your training dataset and environment. Here’s what you need: Prerequisites OpenAI API access: To begin, you’ll need API access through OpenAI’s platform. Ensure that your account is set up, and you’ve obtained your API key. Labeled dataset: Your dataset should contain both images and associated text labels. These could be image classification tags, object detection coordinates, or captions, depending on your use case. Data quality: High-quality, well-labeled datasets are critical for fine-tuning. Ensure that the images and labels are consistent and that there are no ambiguous or mislabeled examples. You can also leverage GPT-4o in your data preparation process. Watch the webinar: Using GPT-4o to Accelerate Your Model Development. Dataset Preparation The success of fine-tuning largely depends on how well your dataset is structured. Let’s walk through how to prepare it. Formatting the Dataset To fine-tune GPT-4 with images, the dataset must follow a specific structure, typically using JSONL (JSON Lines) format. In this format, each entry should pair an image with its corresponding text, such as labels or captions. Images can be included in two ways: as HTTP URLs or as base64-encoded data URLs. 
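As a rough sketch of how such entries might be assembled programmatically, the snippet below builds one training example per image and serializes the examples as JSON Lines. The helper names (`to_data_url`, `build_example`, `to_jsonl`) are hypothetical, and combining the question and the image in a single user message is an assumption made for brevity; treat OpenAI's fine-tuning documentation as the authority on the exact schema.

```python
import base64
import json


def to_data_url(image_bytes, mime_type="image/jpeg"):
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_example(system_prompt, question, image_url, answer):
    """Pair one image (HTTP URL or data URL) with its expected answer."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(example) + "\n" for example in examples)


example = build_example(
    "You are an assistant that identifies uncommon cheeses.",
    "What is this cheese?",
    "https://upload.wikimedia.org/wikipedia/commons/3/36/Danbo_Cheese.jpg",
    "Danbo",
)
print(to_jsonl([example]), end="")
```

For local images, read the file in binary mode and pass the result of `to_data_url` as the image URL; the output of `to_jsonl` can then be written to a `.jsonl` file for upload.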
Here’s a sample structure (expanded here for readability; in the JSONL file, each example occupies a single line):

    {
      "messages": [
        { "role": "system", "content": "You are an assistant that identifies uncommon cheeses." },
        { "role": "user", "content": "What is this cheese?" },
        { "role": "user", "content": [ { "type": "image_url", "image_url": { "url": "https://upload.wikimedia.org/wikipedia/commons/3/36/Danbo_Cheese.jpg" } } ] },
        { "role": "assistant", "content": "Danbo" }
      ]
    }

Dataset Annotation Annotations are the textual descriptions or labels for your images. Depending on your use case, these could be simple captions or more complex object labels (e.g., “bounding boxes” for object detection tasks). Use Encord to make sure your annotations are accurate and aligned with the task you're fine-tuning for. Uploading the Dataset You can upload the dataset to OpenAI’s platform through their API. If your dataset is large, ensure that it is stored efficiently (e.g., using cloud storage) and referenced properly in the dataset file. OpenAI’s documentation provides tools for managing larger datasets and streamlining the upload process. Fine-Tuning Process Now that your dataset is prepared, it’s time to fine-tune GPT-4o. Here’s a step-by-step breakdown of how to do it. Initial Setup First, install OpenAI’s Python package if you haven’t already:

    pip install openai

With your API key in hand and dataset prepared, you can now start a fine-tuning job by calling OpenAI’s API. Here’s a sample Python script to kick off the fine-tuning:

    from openai import OpenAI

    # The client reads the API key from the OPENAI_API_KEY environment variable.
    client = OpenAI()

    client.fine_tuning.jobs.create(
        training_file="file-abc123",  # ID of the uploaded training file
        model="gpt-4o-2024-08-06"     # snapshot this guide names for vision fine-tuning
    )

Hyperparameter Optimization Fine-tuning is sensitive to hyperparameters. Here are some key ones to consider: Learning Rate: A smaller learning rate allows the model to make finer adjustments during training. If your model is overfitting (i.e., it’s performing well on the training data but poorly on test data), consider lowering the learning rate. 
Batch Size: A larger batch size allows the model to process more images at once, but this requires more memory. Smaller batch sizes provide noisier gradient estimates, but they can help in avoiding overfitting. Number of Epochs: More epochs allow the model to learn from the data repeatedly, but too many can lead to overfitting. Monitoring performance during training can help determine the optimal number of epochs. You can also get started right away in OpenAI’s fine-tuning dashboard by selecting the base model as ‘gpt-4o-2024-08-06’ from the base model drop-down. Introducing vision to the fine-tuning API Monitoring and Evaluating Fine-Tuned Models After initiating the fine-tuning process, it’s important to track progress and evaluate the model’s performance. OpenAI provides tools for both monitoring and testing your model. Tracking the Fine-Tuning Job You can query the OpenAI API to check the status of your fine-tuning job:

    from openai import OpenAI

    client = OpenAI()

    # Replace the placeholder with the ID returned when the job was created.
    job = client.fine_tuning.jobs.retrieve("your-fine-tuning-job-id")
    print(job.status)

The response reports the job’s status, including whether it has completed successfully; training metrics such as loss are reported in the job’s events, which you can list with client.fine_tuning.jobs.list_events. Keep an eye on the training loss to ensure that the model is learning effectively. Evaluating Model Performance Once the fine-tuning job is complete, you’ll want to test the model on a validation set to see how well it generalizes to new data. Because the fine-tuned model is a chat model, send the image itself as part of the message rather than a file path in the prompt text. Here’s how you can do that:

    response = client.chat.completions.create(
        model="your-fine-tuned-model",
        messages=[
            {"role": "user", "content": [
                {"type": "text", "text": "Provide a caption for this image."},
                # Placeholder URL; a base64-encoded data URL also works.
                {"type": "image_url", "image_url": {"url": "https://example.com/path/to/image.jpg"}},
            ]},
        ],
        max_tokens=50,
    )
    print(response.choices[0].message.content)

Evaluate the model’s output based on metrics like accuracy, F1 score, or BLEU score (for image captioning tasks). If the model’s performance is not satisfactory, consider revisiting the dataset or tweaking hyperparameters. 
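For classification-style tasks, the accuracy and F1 metrics mentioned above can be computed with a few lines of plain Python. This is a minimal sketch: the helper names and the toy labels are made up for illustration, and macro-averaging is just one of several ways to aggregate per-class F1.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that exactly match the reference label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy validation set: reference labels vs. labels parsed from model output.
y_true = ["danbo", "brie", "brie", "gouda"]
y_pred = ["danbo", "brie", "gouda", "gouda"]

print(f"accuracy: {accuracy(y_true, y_pred):.2f}")
print(f"macro F1: {macro_f1(y_true, y_pred):.2f}")
```

In practice, `y_pred` would come from running the fine-tuned model over a held-out validation set and normalizing its text output into labels before scoring.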
For a more comprehensive evaluation, including visualizing model predictions and tracking performance across various metrics, consider using Encord Active. This tool allows you to visually inspect how the model is interpreting images and pinpoint areas for further improvement, helping you refine the model iteratively. Deploying Your Fine-Tuned Model Once your model has been successfully fine-tuned, you can integrate it into your application. OpenAI’s API makes it easy to deploy models in production environments. Example Use Case 1: Autonomous Vehicle Navigation Grab used vision fine-tuning to enhance its mapping system by improving the detection of traffic signs and lane dividers using just 100 images. This led to a 20% improvement in lane count accuracy and a 13% improvement in speed limit sign localization, automating a process that was previously manual. Introducing vision to the fine-tuning API Example Use Case 2: Business Process Automation Automat fine-tuned GPT-4o with screenshots to boost its robotic process automation (RPA) performance. The fine-tuned model achieved a 272% improvement in identifying UI elements and a 7% increase in accuracy for document information extraction. Introducing vision to the fine-tuning API Example Use Case 3: Enhanced Content Creation Coframe used vision fine-tuning to improve the visual consistency and layout accuracy of AI-generated web pages by 26%. This fine-tuning helped automate website creation while maintaining high-quality branding. Introducing vision to the fine-tuning API Availability and Pricing of Fine-Tuning GPT-4o Vision fine-tuning for GPT-4o is available to all developers on paid usage tiers, supported by the latest model snapshot, gpt-4o-2024-08-06. You can upload datasets using the same format as OpenAI’s Chat endpoints. Currently, OpenAI offers 1 million free training tokens per day through October 31, 2024. 
After this period, training costs are $25 per 1M tokens, with inference costs of $3.75 per 1M input tokens and $15 per 1M output tokens. Pricing is based on token usage, with image inputs tokenized similarly to text. You can estimate the cost of your fine-tuning job using the formula: (base cost per 1M tokens ÷ 1M) × tokens in input file × number of epochs. For example, training 100,000 tokens over three epochs with gpt-4o-mini would cost around $0.90 after the free period ends. Note that this figure implies a lower gpt-4o-mini training rate of $3 per 1M tokens; at the $25 per 1M tokens quoted above for GPT-4o, the same job would cost about $7.50. Vision Fine-Tuning: Key Takeaways Vision fine-tuning in OpenAI’s GPT-4o opens up exciting possibilities for customizing a powerful multimodal model to suit your specific needs. By following the steps outlined in this guide, you can apply GPT-4o to vision-based tasks like image classification, captioning, and object detection. Whether you’re in healthcare, e-commerce, or any other field where visual data plays a crucial role, GPT-4o’s ability to be fine-tuned for your specific use cases allows for greater accuracy and relevance in your applications.
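The cost formula from the pricing section is simple enough to wrap in a small helper for quick estimates. In this sketch the function name is hypothetical; the $25 rate is the GPT-4o training price quoted in this guide, while the $3 rate for gpt-4o-mini is an assumption inferred from the $0.90 example, so check current pricing before relying on either.

```python
def estimate_training_cost(tokens_in_file, epochs, price_per_million_usd):
    """(base cost per 1M tokens / 1M) x tokens in input file x number of epochs."""
    return price_per_million_usd / 1_000_000 * tokens_in_file * epochs


TOKENS = 100_000
EPOCHS = 3

# $25 per 1M training tokens: the GPT-4o rate quoted in this guide.
gpt_4o_cost = estimate_training_cost(TOKENS, EPOCHS, 25.0)

# $3 per 1M training tokens: assumed gpt-4o-mini rate, inferred from the
# $0.90 example rather than stated directly in this guide.
gpt_4o_mini_cost = estimate_training_cost(TOKENS, EPOCHS, 3.0)

print(f"gpt-4o:      ${gpt_4o_cost:.2f}")
print(f"gpt-4o-mini: ${gpt_4o_mini_cost:.2f}")
```

Because cost scales linearly with both token count and epochs, halving either one halves the estimate, which makes it easy to budget a small pilot run before committing to a full dataset.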

Oct 09 2024

5 M

Apple’s MM1.5 Explained

The MM1 model was an impressive milestone in the development of multimodal large language models (MLLMs), demonstrating robust multimodal capabilities with a general-purpose architecture. However, as the demand for more specialized tasks grew in the field of artificial intelligence, so did the need for multimodal models that could scale efficiently while excelling at fine-grained image and text tasks. Enter MM1.5, a comprehensive upgrade. MM1.5 scales from smaller model sizes (1B and 3B parameters) up to 30B parameters, introducing both dense and mixture-of-experts (MoE) variants. While MM1 was successful, MM1.5’s architecture and training improvements allow it to perform better even at smaller scales, making it highly efficient and versatile for deployment across a variety of devices. But the real story of MM1.5 is in its data-centric approach. By carefully curating data throughout the training lifecycle, MM1.5 improves in areas like OCR (optical character recognition), image comprehension, image captioning and video processing, making it an ideal AI system for both general and niche multimodal tasks. Key Features of MM1.5 OCR and Text-Rich Image Understanding MM1.5 builds on the latest trends in high-resolution image comprehension, supporting arbitrary image aspect ratios and image resolutions up to 4 Megapixels. This flexibility allows it to excel at extracting and understanding embedded text in images with high fidelity. The model leverages a carefully selected dataset of OCR data throughout the training process to ensure it can handle a wide range of text-rich images. By including both public OCR datasets and high-quality synthetic captions, MM1.5 optimizes its ability to read and interpret complex visual text inputs, something that remains a challenge for many open-source models. MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-Tuning Visual Referring and Grounding Another major upgrade in MM1.5 is its capability for visual referring and grounding. 
This involves not just recognizing objects or regions in an image but linking them to specific references in the text. For instance, when presented with an image and a set of instructions like "click the red button," MM1.5 can accurately locate and highlight the specific button within the image. This fine-grained image understanding is achieved by training the model to handle both text prompts and visual inputs, such as bounding boxes or points in an image. It can generate grounded responses by referencing specific regions in the visual data. MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-Tuning While multimodal AI models like OpenAI’s GPT-4, LLaVA-OneVision and Microsoft’s Phi-3-Vision rely on predefined prompts to refer to image regions, MM1.5 takes a more flexible approach by dynamically linking text output to image regions during inference. This is particularly useful for applications like interactive image editing, mobile UI analysis, and augmented reality (AR) experiences. Multi-image Reasoning and In-Context Learning MM1.5 is designed to handle multiple images simultaneously and reason across them. This capability, known as multi-image reasoning, allows the model to perform tasks that involve comparing, contrasting, or synthesizing information from several visual inputs. For example, given two images of a city skyline taken at different times of the day, MM1.5 can describe the differences, reason about lighting changes, and even predict what might happen in the future. This ability is a result of large-scale interleaved pre-training, where the model learns to process sequences of multiple images and corresponding text data, ultimately improving its in-context learning performance. MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-Tuning MM1.5 also demonstrates strong few-shot learning capabilities, meaning it can perform well on new tasks with minimal training data. 
This makes it adaptable to a wide range of applications, from social media content moderation to medical imaging analysis. MM1.5 Model Architecture and Variants MM1.5 retains the same core architecture as MM1 but with improvements in scalability and specialization. The models range from 1B to 30B parameters, with both dense and mixture-of-experts (MoE) versions. Dense models, available in 1B and 3B sizes, are particularly optimized for deployment on mobile devices while maintaining strong performance on multimodal tasks. The key components of MM1.5 include a CLIP-based image encoder that processes high-resolution images (up to 4 Megapixels) and uses dynamic image splitting for efficient handling of various aspect ratios. The vision-language connector (C-Abstractor) integrates visual and textual data, ensuring smooth alignment between the two modalities. Finally, the decoder-only Transformer architecture serves as the backbone, processing multimodal inputs and generating language outputs. MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-Tuning For more specialized applications, MM1.5 offers two variants: MM1.5-Video: Designed for video understanding, this variant can process video data using either training-free methods (using pre-trained image data) or supervised fine-tuning on video-specific datasets. This makes MM1.5-Video ideal for applications like video surveillance, action recognition, and media analysis. MM1.5-UI: Tailored for understanding mobile user interfaces (UIs), MM1.5-UI excels at tasks like app screen comprehension, button detection, and visual grounding in mobile environments. With the increasing complexity of mobile apps, MM1.5-UI offers a robust solution for developers seeking to enhance app interaction and accessibility features. 
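The dynamic image splitting mentioned above can be pictured with a small sketch: pick a tile grid whose shape roughly matches the image's aspect ratio, then cut the image into that grid so each tile can be encoded separately. This is a generic illustration of aspect-ratio-aware tiling, not Apple's implementation; the function names, the `max_tiles` budget, and the tie-breaking rule are all assumptions.

```python
def choose_grid(width, height, max_tiles=9):
    """Pick (rows, cols) with rows * cols <= max_tiles that best matches the aspect ratio.

    Ties are broken in favor of more tiles, i.e. higher effective resolution.
    """
    target = width / height
    best = None
    for rows in range(1, max_tiles + 1):
        for cols in range(1, max_tiles // rows + 1):
            error = abs(cols / rows - target)
            candidate = (error, -(rows * cols), rows, cols)
            if best is None or candidate < best:
                best = candidate
    return best[2], best[3]


def tile_boxes(width, height, rows, cols):
    """Return (left, top, right, bottom) pixel boxes covering the image in row-major order."""
    xs = [round(c * width / cols) for c in range(cols + 1)]
    ys = [round(r * height / rows) for r in range(rows + 1)]
    return [
        (xs[c], ys[r], xs[c + 1], ys[r + 1])
        for r in range(rows)
        for c in range(cols)
    ]


rows, cols = choose_grid(2000, 1000)  # a wide 2:1 image
boxes = tile_boxes(2000, 1000, rows, cols)
print(rows, cols, len(boxes))
print(boxes[0], boxes[-1])
```

A wide image ends up with more columns than rows, so no tile is badly stretched when resized to the encoder's fixed input size; a real system would typically also encode a downscaled overview of the whole image alongside the tiles.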
To learn more about the architecture of MM1, read the paper MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training, or for a brief overview read the blog MM1: Apple’s Multimodal Large Language Models (MLLMs).

MM1.5 Training Method: Data-Centric Approach

One of the key differentiators of MM1.5 from its predecessor is its data-centric training approach. This strategy focuses on carefully curating data mixtures at every stage of the training process to optimize the model’s performance across a variety of tasks. The training pipeline consists of three major stages: large-scale pre-training, continual pre-training, and supervised fine-tuning (SFT).

MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-Tuning

Large-Scale Pre-Training

At this stage, MM1.5 is trained on massive datasets consisting of 2 billion image-text pairs, interleaved image-text documents, and text-only data. By optimizing the ratio of these data types (with a heavier focus on text-only data), MM1.5 improves its language understanding and multimodal reasoning capabilities.

Continual Pre-Training

This stage introduces high-resolution OCR data and synthetic captions to further enhance MM1.5’s ability to interpret text-rich images. Through ablation studies, the MM1.5 team found that combining OCR data with carefully generated captions boosts the model’s performance on tasks like document understanding and infographic analysis.

Supervised Fine-Tuning (SFT)

Finally, MM1.5 undergoes supervised fine-tuning using a diverse mixture of public datasets tailored to different multimodal capabilities. These datasets are categorized into groups like general, text-rich, visual referring, and multi-image data, ensuring balanced performance across all tasks. Through dynamic high-resolution image encoding, MM1.5 efficiently processes images of varying sizes and aspect ratios, further enhancing its ability to handle real-world inputs.
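The notion of tuning a data mixture ratio can be sketched in a few lines of Python. The snippet below draws training examples from three source types according to fixed weights. The source names, the weights in `MIXTURE`, and the helper `sample_sources` are illustrative placeholders, not the ratios or code used by the MM1.5 team.

```python
import random
from collections import Counter

# Hypothetical mixture weights for the three pre-training data types.
# These numbers are placeholders chosen for illustration only.
MIXTURE = {
    "image_text_pairs": 0.5,
    "interleaved_docs": 0.1,
    "text_only": 0.4,
}


def sample_sources(mixture, n, seed=0):
    """Draw n data-source names with probability proportional to their weight."""
    rng = random.Random(seed)  # seeded so the draw is reproducible
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


# Count how often each source is selected over 10,000 draws.
counts = Counter(sample_sources(MIXTURE, 10_000))
```

In a real pipeline each drawn name would select which dataset the next batch comes from, and ablations would compare model quality across different weight settings.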
For more information, read the research paper published by Apple researchers on arXiv: MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-Tuning.

Performance of MM1.5

MM1.5 performs strongly across several benchmarks, particularly in text-rich image understanding and visual referring and grounding tasks. With high-quality OCR data and a dynamic image-splitting mechanism, it outperforms many models in tasks like DocVQA and InfoVQA, which involve complex document and infographic comprehension. Its multi-image reasoning capability allows MM1.5 to compare and analyze multiple images, performing well on tasks like NLVR2. In visual referring tasks, such as RefCOCO and Flickr30k, MM1.5 accurately grounds text to specific image regions, making it highly effective for real-world applications like AR and interactive media.

MM1.5: Methods, Analysis & Insights from Multimodal LLM Fine-Tuning

MM1.5 achieves competitive performance even at smaller model scales (1B and 3B parameters), which makes it suitable for resource-constrained environments without sacrificing capability.

Real-World Applications of MM1.5

The model’s ability to understand and reason with text-rich images is particularly strong, making it an excellent choice for document analysis, web page comprehension, and educational tools. MM1.5’s multi-image reasoning and video understanding capabilities open up possibilities in fields like media production, entertainment, and remote sensing. The model can also be deployed in mobile applications, offering developers tools to improve user interfaces, enhance accessibility, and streamline interaction with digital content.

MM1.5: Key Takeaways

Enhanced Multimodal Capabilities: MM1.5 excels in text-rich image understanding, visual grounding, and multi-image reasoning.
Scalable Model Variants: Offers dense and mixture-of-experts models from 1B to 30B parameters, with strong performance even at smaller scales.
Data-Centric Training: Uses optimized OCR and synthetic caption data for continual pre-training, boosting accuracy in text-heavy tasks.
Specialized Variants: Includes MM1.5-Video for video understanding and MM1.5-UI for mobile UI analysis, making it adaptable for various use cases.
Efficient and Versatile: Performs competitively across a wide range of benchmarks, suitable for diverse applications from document processing to augmented reality.

Oct 07 2024

5 M

NVLM 1.0: NVIDIA's Open-Source Multimodal AI Model

NVIDIA has released a family of frontier-class multimodal large language models (MLLMs) designed to rival the performance of leading proprietary models like OpenAI’s GPT-4 and open-source models such as Meta’s Llama 3.1. In this blog, we’ll break down what NVLM is, why it matters, how it compares to other models, and its potential applications across industries and research.

What is NVLM?

NVLM, short for NVIDIA Vision Language Model, is an AI model developed by NVIDIA that belongs to the category of Multimodal Large Language Models (MLLMs). These models are designed to process multiple types of data together, primarily text and images, enabling them to understand and generate both textual and visual content. NVLM 1.0, the first iteration of this model family, represents a significant advancement in the field of vision-language models, bringing frontier-class performance to real-world tasks that require a deep understanding of both modalities.

At its core, NVLM combines the power of large language models (LLMs), traditionally used for text-based tasks like natural language processing (NLP) and code generation, with the ability to interpret and reason over images. This fusion of text and vision allows NVLM to tackle a broad array of complex tasks that go beyond what a purely text-based or image-based model could achieve.

NVLM: Open Frontier-Class Multimodal LLMs

Key Features of NVLM

State-of-the-Art Performance

NVLM is built to compete with the best, both in the proprietary and open-access realms. It achieves strong performance on vision-language benchmarks, including tasks like Optical Character Recognition (OCR), natural image understanding, and scene text comprehension. This puts it in competition with leading proprietary artificial intelligence models like GPT-4V and Claude 3.5 as well as open-access models such as InternVL 2 and LLaVA.
Improved Text-Only Performance After Multimodal Training

One of the most common issues with multimodal models is that their text-only performance tends to degrade after being trained on vision tasks. However, NVLM improves on its text-only tasks even after integrating image data. This is due to the inclusion of a curated, high-quality text dataset during the supervised fine-tuning stage, which keeps the text reasoning capabilities of NVLM robust while enhancing its vision-language understanding.

This feature is especially important for applications that rely heavily on both text and image analysis, such as academic research, coding, and mathematical reasoning. By maintaining, and even improving, its text-based abilities, NVLM becomes a highly versatile tool that can be deployed in a wide range of settings.

NVLM’s Architecture: Key Features

Decoder-Only vs Cross-Attention Models

NVLM introduces three architectural options: NVLM-D (Decoder-only), NVLM-X (Cross-attention-based), and NVLM-H (Hybrid). Each of these architectures is optimized for different tasks:

Decoder-only models treat image tokens the same way they process text token embeddings, which simplifies the design and unifies the way different types of data are handled. This approach shines in tasks that require reasoning with both text and images simultaneously.

Cross-attention transformer models process image tokens separately from text tokens, allowing for more efficient handling of high-resolution images. This is particularly useful for tasks involving fine details, such as OCR or document understanding.

Hybrid models combine the strengths of both approaches, using cross-attention for handling high-resolution images and decoder-only processing for reasoning tasks. This architecture balances computational efficiency with powerful multimodal reasoning capabilities.
Dynamic High-Resolution Image Processing

Instead of processing an entire image at once (which can be computationally expensive), NVLM breaks the image into tiles and processes each tile separately. It then uses a novel 1-D "tile tagging" system so that the model knows where each tile fits within the overall image.

This dynamic tiling mechanism boosts performance on tasks like OCR, where high-resolution and detailed image understanding are critical. It also enhances reasoning tasks that require the model to understand the spatial relationships within an image, such as chart interpretation or document understanding.

NVLM’s Training: Key Features

High-Quality Training Data

The training process is split into two main phases: pretraining and supervised fine-tuning. During pretraining, NVLM uses a diverse set of high-quality datasets, including captioning, visual question answering (VQA), OCR, and math reasoning in visual contexts. By focusing on task diversity, NVLM can handle a wide range of tasks effectively, even when trained on smaller datasets.

Supervised Fine-Tuning

After pretraining, NVLM undergoes supervised fine-tuning (SFT) using a blend of text-only and multimodal datasets. This stage incorporates specialized datasets for tasks like OCR, chart understanding, document question answering (DocVQA), and more. The SFT process is critical for ensuring that NVLM performs well in real-world applications. It not only enhances the model’s ability to handle complex vision-language tasks but also prevents the degradation of text-only performance, which is a common issue in other models.
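The 1-D tile tagging idea from the dynamic high-resolution section can be illustrated with a short Python sketch: each tile's image tokens are preceded by a text tag that identifies the tile, so the flattened sequence still carries position information. The helper name `tag_tiles` and the exact tag strings (`<tile_global>`, `<tile_1>`, and so on) are assumptions made for this example rather than a confirmed description of NVIDIA's code.

```python
def tag_tiles(tile_tokens, global_tokens=None):
    """Flatten per-tile token lists into one sequence with 1-D tile tags.

    Hypothetical helper: the tag strings below are assumed for illustration.
    `tile_tokens` is a list of token lists, one per tile, in row-major order.
    `global_tokens` optionally holds tokens for a downscaled overview image.
    """
    sequence = []
    if global_tokens is not None:
        # The overview of the whole image gets its own tag.
        sequence.append("<tile_global>")
        sequence.extend(global_tokens)
    for index, tokens in enumerate(tile_tokens, start=1):
        # A tag before each tile tells the model which tile follows.
        sequence.append(f"<tile_{index}>")
        sequence.extend(tokens)
    return sequence


# Two tiles with placeholder tokens, plus one overview token.
tagged = tag_tiles([["a", "b"], ["c"]], global_tokens=["g"])
```

In a real model the placeholder strings would be image embeddings and the tags would be tokenized text, but the interleaving pattern is the same.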
Find the research paper on arXiv: NVLM: Open Frontier-Class Multimodal LLMs by NVIDIA’s AI research team: Wenliang Dai, Nayeon Lee, Boxin Wang, Zhuoling Yang, Zihan Liu, Jon Barker, Tuomas Rintamaki, Mohammad Shoeybi, Bryan Catanzaro, and Wei Ping.

NVLM vs Other SOTA Vision Language Models

NVLM competes directly with some of the biggest names in AI, including GPT-4V and Llama 3.1. So how does it stack up?

NVLM: Open Frontier-Class Multimodal LLMs

GPT-4V: While GPT-4V is known for its strong multimodal reasoning capabilities, NVLM achieves comparable results, particularly in areas like OCR and vision-language reasoning. Where NVLM outperforms GPT-4V is in maintaining (and even improving) text-only performance after multimodal training.

Llama 3-V: Llama 3-V also performs well in multimodal tasks, but NVLM’s dynamic high-resolution image processing gives it an edge in tasks that require fine-grained image analysis, like OCR and chart understanding.

Open-Access Models: NVLM also outperforms other open-access vision models, like InternVL 2 and LLaVA, particularly in vision-language benchmarks and OCR tasks. Its combination of architectural flexibility and high-quality training data gives it a significant advantage over other models in its class.

Applications of NVLM

NVLM: Open Frontier-Class Multimodal LLMs

Here are some of the real-world applications of NVLM based on its capabilities:

Healthcare: NVLM could be used to analyze medical images alongside text-based patient records, providing a more comprehensive understanding of a patient’s condition.
Education: In academic settings, NVLM can help with tasks like diagram understanding, math problem-solving, and document analysis, making it a useful tool for students and researchers alike.
Business and Finance: With its ability to process both text and images, NVLM could streamline tasks like financial reporting, where the AI could analyze both charts and written documents to provide summaries or insights.
Content Creation: NVLM’s understanding of visual humor and context (like memes) could be used in creative industries, allowing it to generate or analyze multimedia content.

How to Access NVLM?

NVIDIA is open-sourcing the NVLM-1.0-D-72B model, which is the 72 billion parameter decoder-only version. The model weights are currently available for download, and the training code will be made public to the research community soon. To enhance accessibility, the model has been adapted from Megatron-LM to the Hugging Face platform for easier hosting, reproducibility, and inference.

Find the NVLMd-1.0-72b model weights on Hugging Face.

Oct 07 2024

5 M

Top 10 Multimodal Use Cases