Encord Blog

Playing videos is easy, pausing them is actually very difficult

The core of Encord's offering is building fast and intuitive object detection, segmentation, and classification video annotation tools to build training datasets for computer vision models.

Just as items can move across different frames in the video, we have to make sure that we store the correct coordinates of individual labels corresponding to the correct frame number in the video.

You might think, “duh,” but this is a serious and complicated problem to solve. We consistently have companies switching to our platform after they have had to throw away months' worth of manual annotation from other commercial tools not purpose-built for video or from expensive labeling teams on open-source tooling. All because they realized that labels were misaligned from the corresponding frames due to a myriad of issues caused by rendering video data in modern web browsers.

This is how we approached the problem: We embed videos via the HTML <video> element. When a user pauses the video to draw a label, we query the current frame number of the video from the relevant DOM element and store the data in our backend. Whenever the client needs the label for a review process, to train or apply machine learning algorithms, or to download the labels for their internal tools, they get the correct data.

That is the point when we realized we had solved all our clients’ problems and retired to the Caribbean forever.

While this scenario is what we wished for, this is unfortunately not the way that the <video> element works. The problem is that it does not allow you to seek a specific frame but only a specific timestamp.

This would not be a problem if seeking to a specific frame were simply a matter of seeking to

timestamp_x = frame_x * (1 / frames_per_second)

which works unless:

- There is a variable frame rate in the video.

- There are other unknown complications.
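For a constant frame rate, the formula above can be sketched as follows. This is only an illustration: the function names are hypothetical, frames are assumed to be zero-indexed, and `Fraction` is used so that rates such as 24000/1001 do not pick up floating-point rounding.

```python
import math
from fractions import Fraction


def frame_to_timestamp(frame_number: int, fps: Fraction) -> Fraction:
    """Start time, in seconds, of a zero-indexed frame at a constant frame rate."""
    return frame_number * (1 / fps)


def timestamp_to_frame(timestamp: Fraction, fps: Fraction) -> int:
    """Zero-indexed frame that should be on screen at `timestamp` seconds."""
    return math.floor(timestamp * fps)


# 24000/1001 is the exact rate usually rounded to 23.98 fps.
ntsc_film = Fraction(24000, 1001)
start_of_frame_100 = frame_to_timestamp(100, ntsc_film)
```

The rest of this article is about the cases where the browser does not follow this mapping.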

Variable frame rates in a video file

When talking about videos with variable frame rates one might think that only time travelers or magicians might need those.

While they are uncommon, there seems to be a use case, especially with dashcams producing them. We assume that dashcams are trying to save frames as fast as they can; with different processes grabbing the dashcam CPU’s attention, this might sometimes mean faster and sometimes slower writes.

Okay, these exist, let’s see later if we can support those.

Other unknown complications with annotating video content

When we looked into what else can go wrong, we opened up a can of worms so unpleasant that we decided against adding an appropriately graphical gif here (you’re welcome).

While we thought we could apply our magic formula of

timestamp_x = frame_x * (1 / frames_per_second)

whenever there is no variable frame rate, we quickly realized that different media players can in some circumstances show a different number of frames or stretch/shorten the length of specific frames. This is especially true for the media player behind Chromium-based browsers (e.g. Google Chrome).

With the help of our friend FFmpeg, we can check the metadata of a video and the metadata of each individual frame in the video on our server. That way we can actually verify the true timestamp at which a frame is meant to be played. However, we cannot access this metadata from the <video> element. We also cannot reproduce the same timestamp heuristics in our server as there are in the browser. Therefore, there is a frame synchronization issue between the frontend and our backend.
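As a rough sketch of the server-side check, the snippet below asks ffprobe (which ships with FFmpeg) for the presentation timestamp of every video packet and sorts them into display order. The helper names and the sample report are made up for illustration, and the `packets`/`pts_time` layout is assumed to be what ffprobe's JSON writer emits for `-show_entries packet=pts_time`.

```python
import json
import subprocess
from typing import List


def probe_video_packets(path: str) -> str:
    """Run ffprobe on `path` and return its JSON report of video packet timestamps."""
    command = [
        "ffprobe", "-v", "error",
        "-select_streams", "v:0",
        "-show_entries", "packet=pts_time",
        "-of", "json",
        path,
    ]
    result = subprocess.run(command, check=True, capture_output=True, text=True)
    return result.stdout


def frame_start_times(ffprobe_json: str) -> List[float]:
    """Presentation timestamps in display order (packets are stored in decode order)."""
    packets = json.loads(ffprobe_json).get("packets", [])
    return sorted(float(p["pts_time"]) for p in packets if "pts_time" in p)


# Hand-written sample report, so the parser can be tried without a video file.
sample_report = (
    '{"packets": [{"pts_time": "0.000000"}, '
    '{"pts_time": "0.083417"}, {"pts_time": "0.041708"}]}'
)
times = frame_start_times(sample_report)
```

The index of each entry in `times` is the frame number as seen by the backend.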

Possible Solution

What we know so far is:

- In Chrome, we can only seek the frame of a video by providing a timestamp.

- We found out that Chrome will sometimes move between frames at incorrect timestamps.

- We do need to ensure that labels are stored with the correct frame number (as shown by FFmpeg on our server).

In short, there is a problem to solve. Let’s look at possible solutions.

Unpacking a video into images

One trivial solution is to use our friend FFmpeg to unpack a video into a directory of images with the frame number as part of the image filename. A command like

$ ffmpeg -i my-video.mp4 my-video-images/$filename%03d.jpg

would make that possible. Then we could upload the images in the frontend, and when the user navigates around frames, we display the corresponding image.

This would mean we might have no proper support for video playback on our frontend, which can make videos feel clunky. The bigger issue is that we would blow up the storage size of videos on our platform. The whole point of videos is the compression of images. A 10-minute video such as this one in full HD takes about 112MB of storage as a video but needs 553MB of storage as extracted images.

We see the storage requirements of clients hitting terabytes and continuing to grow, so we decided against this approach.

Using web features that allow seeking specific frames

We were not the first developer team that had this problem. You can read more discussions here.

Given the frustration of other developers, there are now experimental web features, all with their own advantages and disadvantages. Some of them are

- The HTMLMediaElement.seekToNextFrame() method.

- Using WebCodecs for more fine-grained control.

- The HTMLVideoElement.requestVideoFrameCallback() method.

If you are reading this article and any of those has landed and become properly supported with API backward compatibility guarantees in the browser, we suggest you do one of the following:

- Celebrate, close this article, and use one of those to solve frame synchronization issues.

- Celebrate and continue reading this article as a history lesson.

We decided against using experimental features due to possible API breakages in newer browser versions. Aligning labels with the correct frames is at the core of our platform, and we felt a more robust solution would be appropriate.

Finding out when the browser media player acts up and reacting accordingly

Spoiler alert: This is what we decided to do to solve these issues.

We felt that by finding out which type of videos are causing problems, we would a.) gain valuable experience in the world of video formats and media players in our developer team and b.) be able to offer an informed solution to our clients to guarantee frame synchronization in our platform.

To find problems quicker, we have built an internal browser-based testing tool that takes two inputs:

- the frames per second (fps) of the video

- the video itself

It then embeds the video with the <video> element and increments the timestamp with very small steps. On each step, it takes a screenshot of the currently displayed frame and compares this to the previous screenshot. If the images differ we can guarantee that the browser is displaying a new frame. We then record the timestamp at which that happened.

The tool flags whether the timestamps of the start of each frame are as expected, given the fps of the video.
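The stepping-and-comparing logic can be sketched as below. This is not the internal tool itself: `render` stands in for "seek the <video> element and take a screenshot", the `tolerance` parameter is an assumption, and the fake player exists only to make the example runnable.

```python
import bisect
from typing import Callable, List, Tuple


def detect_frame_starts(
    render: Callable[[float], bytes], duration: float, step: float
) -> List[float]:
    """Step through the video and record each timestamp where the picture changes."""
    starts = [0.0]
    previous = render(0.0)
    for i in range(1, int(duration / step) + 1):
        timestamp = i * step
        current = render(timestamp)
        if current != previous:  # a different screenshot means a new frame
            starts.append(timestamp)
            previous = current
    return starts


def flag_unexpected_starts(
    starts: List[float], fps: float, tolerance: float
) -> List[Tuple[int, float, float]]:
    """Return (frame, expected, observed) for frames that start at the wrong time."""
    flagged = []
    for frame, observed in enumerate(starts):
        expected = frame / fps
        if abs(observed - expected) > tolerance:
            flagged.append((frame, expected, observed))
    return flagged


# Fake 25 fps player whose first frame is stretched from 0.04 s to 0.06 s.
true_starts = [0.0, 0.06, 0.10, 0.14]


def fake_player(timestamp: float) -> bytes:
    index = bisect.bisect_right(true_starts, timestamp + 1e-9) - 1
    return bytes([index])


observed = detect_frame_starts(fake_player, duration=0.16, step=0.001)
flagged = flag_unexpected_starts(observed, fps=25.0, tolerance=0.005)
```

Here every frame after the first is flagged, because the stretched first frame shifts all later frame starts by the same amount.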

Videos with audio

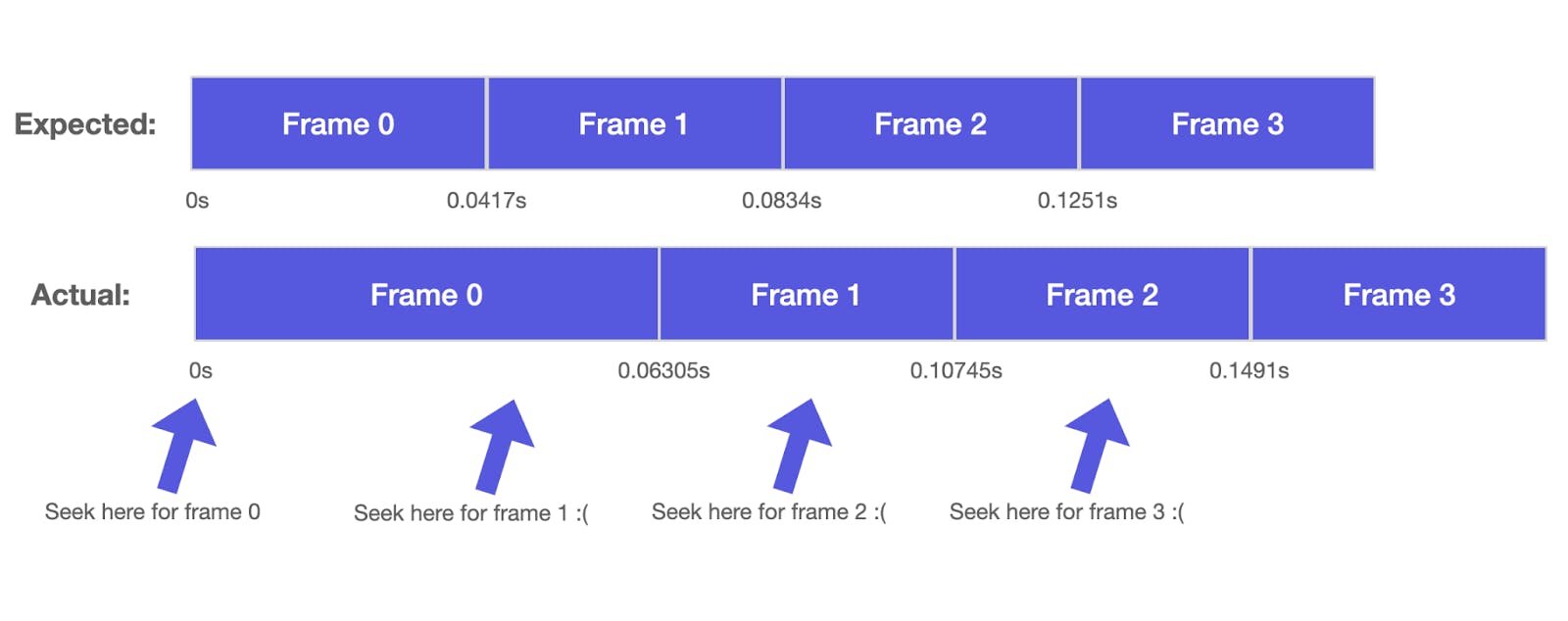

One pattern that we consistently saw with our testing tool was that in many videos, the very first frame was displayed for longer than it should have been.

A frame rate of about 23.98 fps is a common video standard. This means every frame would last for about 0.0417 seconds, but in many videos, the first frame was 0.06305 seconds long, just over 1.5x the expected length. If the first frame is stretched, that means that all the labels of the entire video will be off by one frame.
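To see why a stretched first frame shifts every label, the sketch below seeks to the naive timestamp of each frame and checks which frame a player with a 0.06305-second first frame would have on screen. It is a simplified model (all later frames are assumed to keep their normal length), and the names are hypothetical.

```python
import bisect
from typing import List


def displayed_frame(timestamp: float, frame_starts: List[float]) -> int:
    """Index of the frame on screen at `timestamp`, given each frame's start time."""
    return bisect.bisect_right(frame_starts, timestamp) - 1


fps = 23.98
frame_length = 1 / fps    # about 0.0417 seconds
stretched_first = 0.06305  # observed length of the first frame

# Frame 0 starts at 0; frame 1 only starts once the stretched first frame ends.
actual_starts = [0.0] + [stretched_first + k * frame_length for k in range(9)]

# Seek to the naive timestamp of frames 0..5 and see what is really displayed.
shown = [displayed_frame(n * frame_length, actual_starts) for n in range(6)]
stretch_ratio = stretched_first / frame_length
```

In this model, seeking to the timestamp for frame n shows frame n - 1 for every n after the first, which is the off-by-one error described above.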

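We inspected the packets with this command (as written, `-select_streams v` lists only video packets; `-select_streams a` lists the audio packets instead):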

$ ffprobe -show_packets -select_streams v -of json video.mp4

And found that for the problematic videos, we would see audio frames with a negative timestamp. “Timestamp” here refers to the time that is reported from the metadata of the video from FFmpeg tools - not the timestamp that we seek in the <video> element. If the media player decides to play at least part of the negative audio frames (and Chromium decides to do so), it is forced to stretch the first frame for longer than its usual display time to display a frame while those negative audio frames are playing.
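A check for such packets could look roughly like this. It assumes the JSON comes from `ffprobe -show_packets -of json` run so that audio packets are included, and that each packet carries `codec_type` and `pts_time` fields; the function name and sample report are illustrative only.

```python
import json


def count_negative_packets(ffprobe_json: str, codec_type: str) -> int:
    """Count packets of one stream type whose presentation timestamp is below zero."""
    packets = json.loads(ffprobe_json).get("packets", [])
    return sum(
        1
        for packet in packets
        if packet.get("codec_type") == codec_type
        and float(packet.get("pts_time", 0)) < 0
    )


# Hand-written sample: one audio packet starts before time zero.
sample_report = json.dumps(
    {
        "packets": [
            {"codec_type": "audio", "pts_time": "-0.023220"},
            {"codec_type": "audio", "pts_time": "0.000000"},
            {"codec_type": "video", "pts_time": "0.000000"},
            {"codec_type": "video", "pts_time": "0.041708"},
        ]
    }
)
negative_audio = count_negative_packets(sample_report, "audio")
negative_video = count_negative_packets(sample_report, "video")
```

Passing `"video"` as the codec type gives the same check for video packets.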

The <video> element has a muted attribute which we tried enabling in our video player, so Chrome would not have to stretch the first frame. Unfortunately, while the audio was being ignored, the first frame was still stretched.

We then tried removing the audio frames from the video with

$ ffmpeg -i video-with-audio.mp4 -c:v copy -an video-no-audio.mp4

All this does is copy the video frames and drop the audio frames into a new video called `video-no-audio.mp4`. We then uploaded the video to our testing tool, and voila - the problem was fixed, and the frames were displayed with the same timestamps as in the “Expected” row.

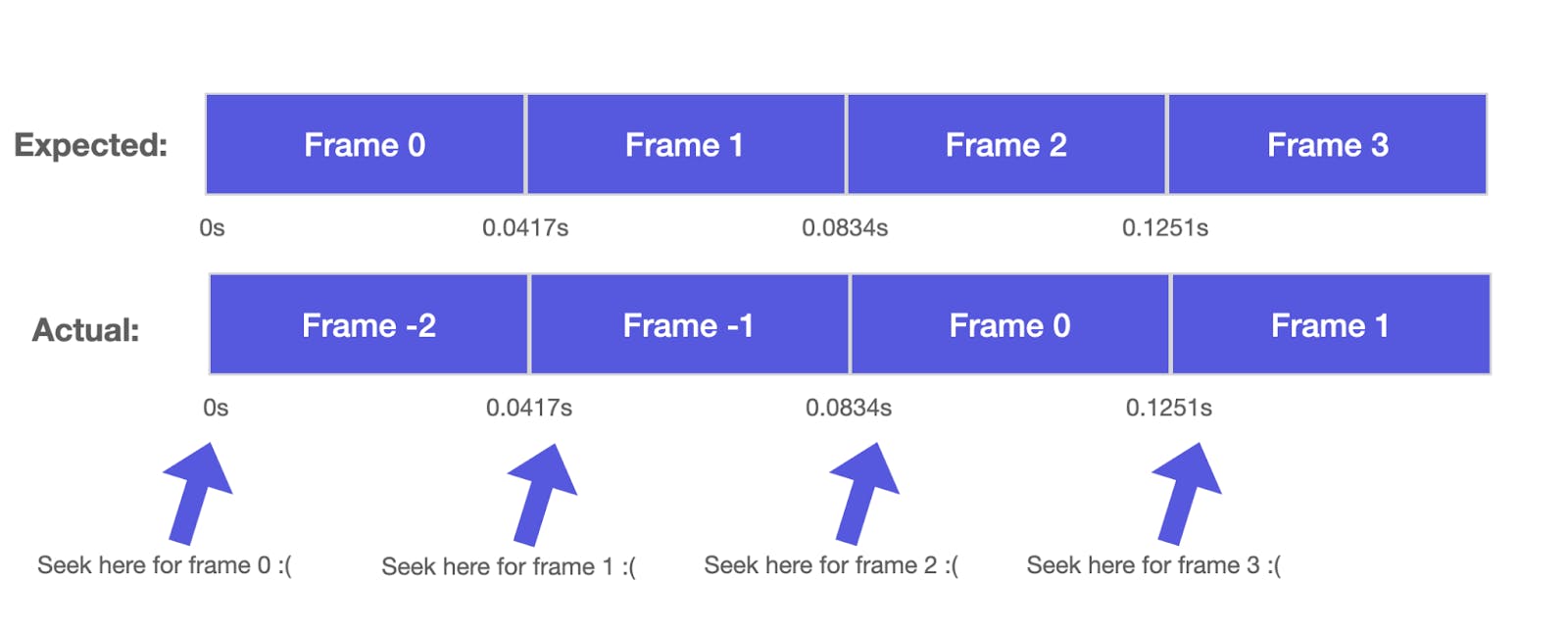

Ghost frames

We coined this spooky term when stumbling upon a video where we found video frames with a negative timestamp in the metadata. Why do such videos exist? It could come from trimming a video where key frames or “intra frames” are kept around from negative timestamps. You can read more about the different types of frames in a video here.

When playing back this video with different media players, we noticed that they all would decide to display anywhere between none and all of the frames with negative timestamps.

Given that there was no way to deterministically say how many of those were displayed, we decided that we would need to remove all those frames by re-encoding the video.

Re-encoding is the process of unpacking a video into all of its image frames and packing it up into a video again. While doing that, you can also choose to drop corrupted frames, such as frames with negative timestamps.

With mp4 files, this command could look like:

$ ffmpeg -err_detect aggressive -fflags discardcorrupt -i video.mp4 -c:v libx264 -movflags faststart -an -tune zerolatency re-encoded-video.mp4

- -err_detect aggressive reports any errors with the videos to us for debugging purposes

- -fflags discardcorrupt removes corrupted packets

- -c:v libx264 encodes the video with the H.264 coding format (used for mp4)

- -movflags faststart is recommended for videos being played in the browser; it puts video metadata to the start of the video so playback can start immediately as the video is buffering.

- -an removes audio frames, in case they would be problematic

- -tune zerolatency is recommended for fast encoding.

Re-encoding the video with this command would remove all of the frames with negative timestamps, removing this problem.
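If the re-encode is triggered from a backend service rather than typed by hand, the same command can be assembled as an argument list. This is a minimal sketch with hypothetical function names; it only repeats the flags listed above and assumes `ffmpeg` is on the PATH.

```python
import subprocess
from typing import List


def build_reencode_command(source: str, destination: str) -> List[str]:
    """Argument list for the re-encode command shown above."""
    return [
        "ffmpeg",
        "-err_detect", "aggressive",   # input option: report errors
        "-fflags", "discardcorrupt",   # input option: drop corrupted packets
        "-i", source,
        "-c:v", "libx264",             # encode video as H.264
        "-movflags", "faststart",      # metadata at the start of the file
        "-an",                         # drop audio
        "-tune", "zerolatency",
        destination,
    ]


def reencode(source: str, destination: str) -> None:
    """Run the re-encode and raise CalledProcessError if ffmpeg fails."""
    subprocess.run(build_reencode_command(source, destination), check=True)


command = build_reencode_command("video.mp4", "re-encoded-video.mp4")
```

Passing a list instead of a shell string avoids quoting problems with file names that contain spaces.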

Variable frame rates

Coming back to the videos with variable frame rates, we saw two options to deal with them:

- Pass a map of the frame number to the timestamp from the backend to the frontend to seek the correct time.

- Re-encode the video forcing a constant frame rate.

Given our experience with some unexpected behavior around videos with audio frames or videos with ghost frames, we did not want to speculate anything about the browser not stretching/squeezing frame lengths in variable frame rate videos. Therefore, we ended up going with option 2.

We used a similar re-encoding FFmpeg command as from above, just with the -vsync cfr flag added. This would ensure that FFmpeg figures out a sensible constant frame rate given the frames that it has seen before. Given that it needs to squeeze frames of a variable rate into a constant rate, this means that some frames will then be duplicated or dropped altogether. We felt this tradeoff was fair given that it lets us ensure data integrity.
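The duplicate-or-drop effect can be illustrated with a toy model. This is not FFmpeg's actual algorithm: it simply assumes that each tick of the constant-rate output shows the most recent source frame, and the function name and timestamps are invented for the example.

```python
import bisect
from typing import List


def resample_to_cfr(source_times: List[float], fps: float) -> List[int]:
    """For each constant-rate output tick, the index of the source frame shown."""
    if not source_times:
        return []
    output_count = int(round(source_times[-1] * fps)) + 1
    picks = []
    for tick in range(output_count):
        timestamp = tick / fps
        # Latest source frame that has started by this tick (small epsilon for rounding).
        index = bisect.bisect_right(source_times, timestamp + 1e-9) - 1
        picks.append(max(index, 0))
    return picks


# Variable frame rate source: a burst of frames, then a long gap.
source_times = [0.0, 0.1, 0.15, 0.2, 0.5]
picks = resample_to_cfr(source_times, fps=10.0)
dropped = sorted(set(range(len(source_times))) - set(picks))
duplicated = sorted({i for i in picks if picks.count(i) > 1})
```

In this example the frame at 0.15 seconds never lands on an output tick and is dropped, while the frame at 0.2 seconds is repeated to fill the gap.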

Closing thoughts

To recap, we found a frame synchronization issue in how we see the number of frames in a video. In our backend, we could reliably look into the metadata of the video using FFmpeg to find out the exact timestamp of a frame. In the browser, we could only seek a frame via a timestamp, but we needed to deal with multiple issues where the browser would not be reliable in translating a timestamp into the exact frame number within that video.

In retrospect, we are glad that we decided to look into the individual frame synchronization issues that would arise from different videos instead of just unpacking the video into images. Our dev team has now built a solid understanding of video encoding standards, different behaviors of media players, possible problems with those, and how to provide the right solutions.

We now report to clients any potential issues ahead of annotation time and offer them a solution with the click of a button. We can also give them enough context on why exactly they have to re-encode certain videos.
