Contents

Definitions, general principles & prohibited practices

Risk-based obligations for developers of AI systems

Governance and enforcement

December amendments and ratification

Final remarks

Encord Blog

What the European AI Act Means for You, AI Developer [Updated December 2023]

TL;DR AI peeps, brace for impact! The EU AI Act is hitting the stage with the world's first-ever legislation on artificial intelligence. Imagine GDPR but for AI. Say 'hello' to legal definitions of 'foundation models' and 'general-purpose AI systems' (GPAI). The Act rolls out a red carpet of dos and don'ts for AI practices, mandatory disclosures, and an emphasis on 'trustworthy AI development.' The wild ride doesn't stop there - we've got obligations to follow, 'high-risk AI systems' to scrutinize, and a cliffhanger ending on who's the new AI sheriff in town. Hang tight; it's a whole new world of AI legislation out there!

The European Parliament recently voted to adopt the EU AI Act, marking the world's first piece of legislation on artificial intelligence. The legislation intends to ban systems with an "unacceptable level of risk" and establish guardrails for developing and deploying AI systems into production, particularly in limited-risk and high-risk scenarios, which we’ll get into later. Like GDPR (oh, don't we all love the "Accept cookies" banners), which took about two years from adoption (14 April 2016) until enforceability (25 May 2018), the legislation will have to pass through final negotiations between the various EU institutions (so-called 'trilogues') before we have more clarity on concrete timelines for enforcement.

As an AI product developer, the last thing you probably want to be spending time on is understanding and complying with regulations (you should've considered that law degree after all, huh), so I decided to stay up all night reading through the entirety of the Artificial Intelligence Act - yes, all 167 pages - and outlining the key points to keep an eye on as you embark on bringing your first AI product to market.

We've collated all the key resources you need to learn about the EU AI Act, including our latest webinar, here. The main pieces of the legislation and corresponding sections that I'll cover in this piece are:

- Definitions, general principles & prohibited practices - Article 3/4/5

- Fixed-purpose and general-purpose AI systems and provisions - Article 3/28

- High-risk AI classification - Articles 6/7

- High-risk obligations - Title III (Chapter III)

- Transparency obligations - Article 52

- Governance and enforcement - Title VI/VII

It's time to grab that cup of ☕ and get ready for some legal boilerplate crunching 🤡

Definitions, general principles & prohibited practices

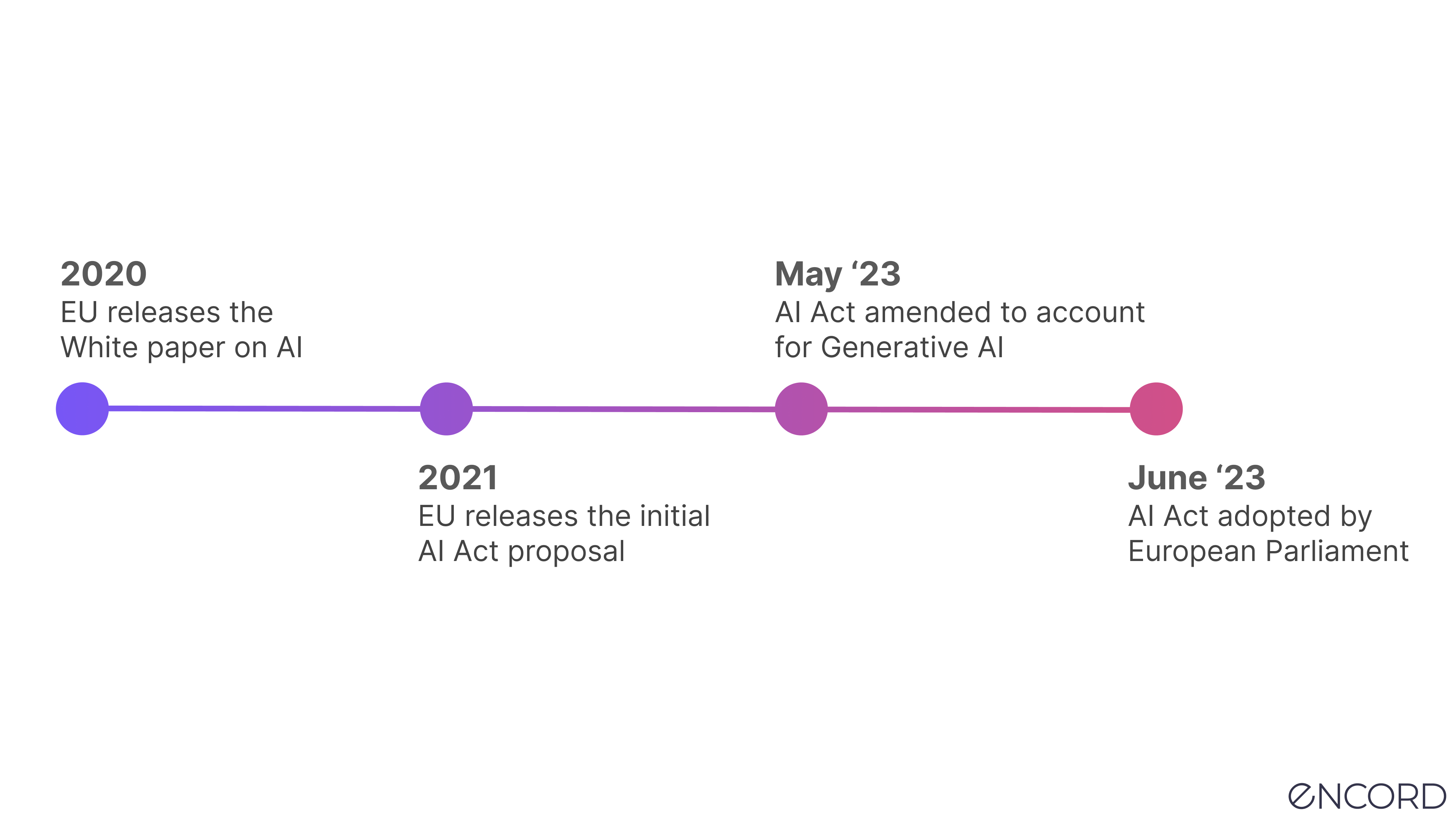

As with most EU legislation, the AI Act has passed through a set of committees on its way to adoption. The European Commission first brought forward the Act on 21 April 2021, and the European Parliament's work on it has been led by two bodies - specifically, the Internal Market and Consumer Protection ('IMCO') and Civil Liberties, Justice, and Home Affairs ('LIBE') committees - which seem to have an even greater fondness for long-winded acronyms than developers.

Now that we've got that settled, let's move on to some legal definitions of AI 🎉

In addition to defining 'artificial intelligence systems' (a definition that has been left deliberately neutral in order to cover techniques that are not yet known or developed), lawmakers distinguish between 'foundation models' and 'general-purpose AI systems' (GPAI), terms adopted in the more recent versions to cover the development of models that can be applied to a wide range of tasks (as opposed to fixed-purpose AI systems). Article 3(1) of the draft act states that ‘artificial intelligence system’ means:

...software that is developed with [specific] techniques and approaches and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.

Notably, the IMCO & LIBE committees have aligned their definition of AI with the OECD's definition and proposed the following definitions of GPAI and foundation models in their article:

(1c) 'foundation model' means an AI model that is trained on broad data at scale, is designed for the generality of output, and can be adapted to a wide range of distinctive tasks

(1d) 'general-purpose AI system' means an AI system that can be used in and adapted to a wide range of applications for which it was not intentionally and specifically designed

These definitions encompass both closed-source and open-source technology. The definitions are important as they determine what bucket you fall into and thus what obligations you might have as a developer. In other words, the set of requirements you have to comply with is different if you are a foundation model developer than if you are a general-purpose AI system developer, and different again if you are a fixed-purpose AI system developer.

The GPAI and foundation model definitions might appear remarkably similar since a GPAI can be interpreted as a foundation model and vice versa. However, there's some subtle nuance in how the terms are defined. The difference between the two concepts focuses specifically on training data (foundation models are trained on 'broad data at scale') and adaptability. Additionally, generative AI systems fall into the category of foundation models, meaning that providers of these models will have to comply with additional transparency obligations, which we'll get into a bit later.

The text also includes a set of general principles and banned practices that both fixed-purpose and general-purpose AI developers - and even adopters/users - must adhere to. Specifically, the language adopted in Article 4 expands the definitions to include general principles for so-called 'trustworthy AI development.' It encapsulates the spirit that all operators (i.e., developers and adopters/users) make their best effort to develop ‘trustworthy’ AI systems (I won’t list all the requirements for being considered trustworthy, but they can be found here).

In the spirit of the human-centric European approach, the most recent version of the legislation that ended up going through adoption also includes a list of strictly prohibited practices (so-called “unacceptable risk”) in AI development, for example, developing biometric identification systems for use in certain situations (e.g., kidnappings or terrorist attacks), biometric categorization, predictive policing, and using emotion recognition software in law enforcement or border management.

Risk-based obligations for developers of AI systems

Now, this is where things get interesting and slightly heavy on the legalese, so if you haven't had your second cup of coffee yet, now is a good time.

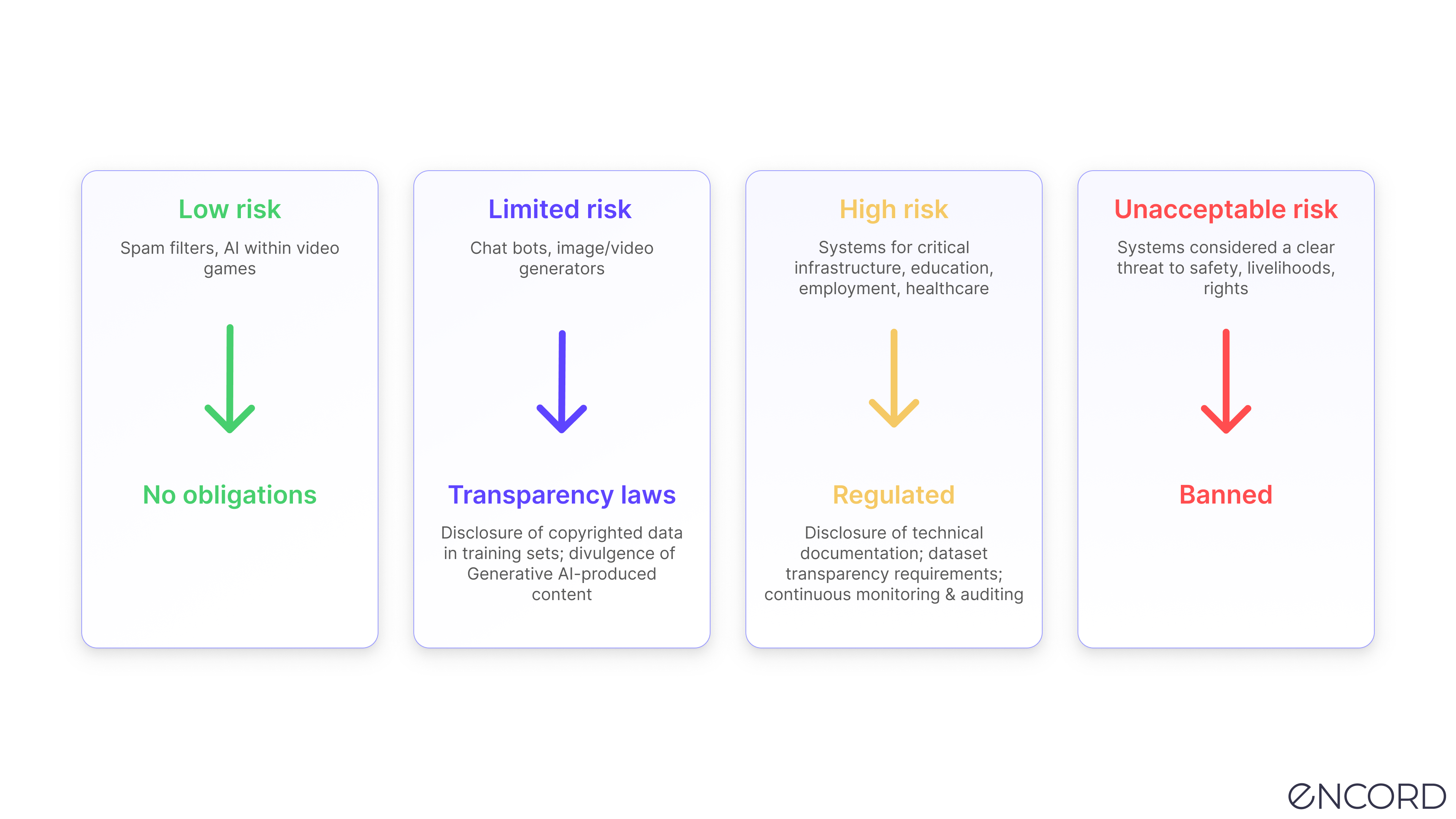

Per the text, any AI developer selling services in the European Union, or the EU internal market as it is known, must adhere to general, high-risk, and transparency obligations under a "risk-based" approach to regulation. This risk-based approach means that the set of legal requirements (and thus, legal intervention) you are subject to depends on the type of application you are developing, and whether you are developing fixed-purpose AI systems, GPAI, a foundation model, or generative AI. The main thing to call out is the different risk-based "bucket categories", which fall into minimal/no-risk, high-risk, and unacceptable-risk categories, with an additional ‘limited risk’ category for AI systems that carry specific transparency obligations (e.g., generative AI like GPT):

- Minimal/no-risk AI systems (e.g., spam filters and AI within video games) will be permitted with no restrictions.

- Limited-risk AI systems (e.g., image/text generators) are subject to additional transparency obligations.

- High-risk AI systems (e.g., recruitment, medical devices, and recommender systems used by social media platforms - I’ve included an entire section on what constitutes high-risk AI systems later in the post, so stay tuned) are allowed but subject to compliance with AI requirements and conformity assessments - more on that later.

- Unacceptable risk systems, which we touched on before, are prohibited.
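To make the four buckets concrete, here is a minimal Python sketch that maps a use case to its tier and the headline consequence. The `RiskTier` enum, the `EXAMPLE_TIERS` lookup, and the `describe` helper are all hypothetical names made up for illustration; the table only contains examples mentioned in this post, and a real classification needs a proper legal assessment.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal/no-risk"
    LIMITED = "limited risk"
    HIGH = "high-risk"
    UNACCEPTABLE = "unacceptable risk"


# Headline consequence of each tier, paraphrased from the list above.
CONSEQUENCE = {
    RiskTier.MINIMAL: "permitted with no restrictions",
    RiskTier.LIMITED: "permitted, subject to additional transparency obligations",
    RiskTier.HIGH: "permitted, subject to AI requirements and conformity assessments",
    RiskTier.UNACCEPTABLE: "prohibited",
}

# Hypothetical lookup table built only from examples mentioned in this post.
EXAMPLE_TIERS = {
    "spam filter": RiskTier.MINIMAL,
    "video game AI": RiskTier.MINIMAL,
    "image generator": RiskTier.LIMITED,
    "text generator": RiskTier.LIMITED,
    "recruitment": RiskTier.HIGH,
    "medical device": RiskTier.HIGH,
    "predictive policing": RiskTier.UNACCEPTABLE,
}


def describe(use_case: str) -> str:
    """Return a one-line summary of the tier and consequence for a use case."""
    tier = EXAMPLE_TIERS.get(use_case)
    if tier is None:
        return f"{use_case}: not in the example table, needs a legal assessment"
    return f"{use_case}: {tier.value} -> {CONSEQUENCE[tier]}"


print(describe("recruitment"))
```

Anything missing from the table deliberately falls through to "needs a legal assessment" rather than defaulting to minimal risk.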

We’ve constructed the below decision tree based on our current understanding of the regulation, and what you’ll notice is that there is a set of “always required” obligations for general-purpose AI system, foundation model, and generative AI developers irrespective of whether they are deemed high-risk or not.

Foundation model developers will have to do things like disclose how much compute went into the model (total training time, model size, compute power, and so on), measure the energy consumed in training it, and - similar to high-risk AI system developers - conduct conformity assessments, register the model in a database, carry out technical audits, and so on. If you’re a generative AI developer, you’ll also have to document and disclose any use of copyrighted training data.

Fixed and general-purpose AI developers (Article 3/28)

Most AI systems will not be high-risk and so carry no mandatory obligations (Titles IV, IX), which is why the provisions and obligations for developers mainly centre around high-risk systems. However, the Act envisages the creation of “codes of conduct” to encourage developers of non-high-risk AI systems to voluntarily apply the mandatory requirements.

The developers building high-risk fixed and certain types of general-purpose AI systems must comply with a set of rules, including an ex-ante conformity assessment, as mentioned above, alongside other extensive requirements such as risk management, testing, technical robustness, appropriate training data, etc. Articles 8 to 15 in the Act list all requirements, which are too lengthy to recite here. As an AI developer, you should pay particular attention to Article 10 concerning data and data governance. Take, for example, Article 10 (3):

Training, validation and testing data sets shall be relevant, representative, free of errors and complete.

As a data scientist, you can probably appreciate how difficult it will be to prove compliance 💩
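To get a feel for what evidence you might collect here, below is a rough Python sketch that reports two simple indicators for a labelled dataset: rows with missing fields (a proxy for "complete") and class shares (a proxy for "representative"). The function name `dataset_report`, its parameters, and the 5% default threshold are all assumptions of this example; nothing in the Act prescribes these checks, and passing them would not by itself demonstrate compliance with Article 10.

```python
from collections import Counter


def dataset_report(rows, label_key, required_keys, min_class_share=0.05):
    """Return simple completeness and representation indicators for a dataset.

    rows is a list of dicts; min_class_share is an arbitrary, illustrative threshold.
    """
    # Completeness: indices of rows where a required field is missing or empty.
    incomplete = [
        i for i, row in enumerate(rows)
        if any(row.get(key) in (None, "") for key in required_keys)
    ]
    # Representation: share of each label among the rows that carry a label.
    labels = Counter(
        row[label_key] for row in rows if row.get(label_key) not in (None, "")
    )
    labelled = sum(labels.values())
    shares = {label: n / labelled for label, n in labels.items()} if labelled else {}
    underrepresented = sorted(
        label for label, share in shares.items() if share < min_class_share
    )
    return {
        "rows": len(rows),
        "incomplete_rows": incomplete,
        "class_shares": shares,
        "underrepresented": underrepresented,
    }


rows = [
    {"text": "a", "label": "cat"},
    {"text": "", "label": "cat"},
    {"text": "c", "label": "dog"},
    {"text": "d", "label": "cat"},
]
report = dataset_report(rows, "label", ["text", "label"], min_class_share=0.3)
print(report)
```

Checks like these cover only a small part of "relevant, representative, free of errors and complete", which is rather the point: the hard part is everything a script cannot measure.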

Separately, the conformity assessment (in case you have to do one - see decision tree above) stipulates that you must register the system in an EU-wide database before placing it on the market or putting it into service. You’re not off the hook if you’re a provider based outside the EU and selling into it - in that case, you have to appoint an authorised representative to ensure the conformity assessment and establish a post-market monitoring system.

On a more technical legalese point (no, you’re not completely off the 🪝if you are an AI platform selling models via API or deploying an open-source model), the AI Act mandates that GPAI providers actively support downstream operators in achieving compliance by sharing all necessary information and documentation regarding an AI model for general-purpose AI systems. However, the provision stipulates that if a downstream provider employs any GPAI system in a high-risk AI context, they will bear the responsibility as the provider of 'high-risk AI systems'. So, suppose you're running a model off an AI platform or via an API and deploying it in a high-risk environment as the downstream deployer. In that case, you're liable - not the upstream provider (i.e., the AI platform or API in this example). Phew.

Providers of foundation models (Article 28b)

The lawmakers seem to have opted for a stricter approach to foundation models (and, by extension, generative AI systems) than to fixed-purpose systems and GPAI, as there is no notion of a minimal/no-risk system. Specifically, foundation model developers must comply with obligations related to risk management and data governance, and must have the robustness of the foundation model vetted by independent experts. These requirements mean foundation models must undergo extensively documented analysis, testing, and vetting - similar to high-risk AI systems - before developers can deploy them into production. Who knows, 'AI foundation model auditor' might become the hottest job of the 2020s.

As with high-risk systems, EU lawmakers demand foundation model providers implement a quality management system to ensure risk management and data governance. These providers must furnish the pertinent documents for up to 10 years after launching the model. Additionally, they are required to register their foundation models on the EU database and disclose the computing power needed alongside the total training time of the model.
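As a rough illustration of the record-keeping this implies, here is a Python sketch of a record that tracks the disclosures mentioned above and works out how long the documents need to be kept. The `FoundationModelRecord` class and its field names are hypothetical; the Act does not define a schema, and the only numbers taken from the text are the 10-year retention period and the two disclosure items (computing power and total training time).

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class FoundationModelRecord:
    name: str
    launch_date: date
    training_hours: float          # total training time
    compute_description: str       # computing power used, in whatever unit you report
    registered_in_eu_database: bool = False

    def retention_until(self, years: int = 10) -> date:
        """Date until which the documentation should stay available."""
        target_year = self.launch_date.year + years
        try:
            return self.launch_date.replace(year=target_year)
        except ValueError:
            # Launched on 29 February and the target year is not a leap year.
            return self.launch_date.replace(year=target_year, day=28)

    def open_items(self) -> list:
        """List the disclosure items that are still missing from this record."""
        items = []
        if not self.registered_in_eu_database:
            items.append("register model in the EU database")
        if self.training_hours <= 0:
            items.append("record total training time")
        if not self.compute_description.strip():
            items.append("record computing power used")
        return items


record = FoundationModelRecord("demo-model", date(2024, 2, 29), 0, "")
print(record.retention_until(), record.open_items())
```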

Providers of generative AI models (Article 28b 4)

As an addendum to the requirements for foundation model developers, generative AI providers must disclose that content (text, video, images, and so on) has been artificially generated or manipulated under the transparency obligations outlined in Article 52 (which provides the official definition for deep fakes, exciting stuff) and also implement adequate safeguards against generating content in breach of EU law.

Moreover, generative AI models must "make publicly available a summary disclosing the use of training data protected under copyright law."

Ouch, we're in for some serious paperwork ⚖️

High-risk AI systems and classifications (Articles 6/7)

Given its importance in the regulation, I've included the formal definition of high-risk AI systems for posterity. Here goes!

High-risk systems are AI products that pose significant threats to health, safety, or the fundamental rights of persons, requiring compulsory conformity assessments to be undertaken by the provider. A system is considered high-risk when both of the following conditions are fulfilled:

(a) the AI system is intended to be used as a safety component of a product or is itself a product covered by the Union harmonization legislation listed in Annex II;

(b) the product whose safety component is the AI system, or the AI system itself as a product, is required to undergo a third-party conformity assessment with a view to the placing on the market or putting into service of that product pursuant to the Union harmonization legislation listed in Annex II.

Annex II includes a list of all the directives that regulate things like medical devices, heavy machinery, the safety of toys, and so on.

Furthermore, the text explicitly provides provisions for considering AI systems in the following areas as always high-risk (Annex III):

- Biometric identification and categorization of natural persons

- Management and operation of critical infrastructure

- Education and vocational training

- Employment, workers management, and access to self-employment

- Access to and enjoyment of essential private services and public services and benefits

- Law enforcement

- Migration, asylum, and border control management

- Administration of justice and democratic processes
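The two routes into the high-risk bucket can be sketched as a small Python check. This is a simplification under stated assumptions: conditions (a) and (b) are treated as having to hold together, the area strings are shortened labels for the Annex III list above, and `is_high_risk` is a hypothetical helper rather than anything defined by the Act.

```python
from typing import Optional

# Shortened labels for the Annex III areas listed above.
ANNEX_III_AREAS = frozenset({
    "biometric identification and categorization",
    "critical infrastructure",
    "education and vocational training",
    "employment and workers management",
    "essential private and public services",
    "law enforcement",
    "migration, asylum, and border control",
    "justice and democratic processes",
})


def is_high_risk(
    annex_ii_product_or_safety_component: bool,
    needs_third_party_assessment: bool,
    area: Optional[str] = None,
) -> bool:
    """Return True if either route into the high-risk category applies."""
    # Route 1: conditions (a) and (b) under the Annex II legislation.
    annex_ii_route = (
        annex_ii_product_or_safety_component and needs_third_party_assessment
    )
    # Route 2: the system operates in one of the Annex III areas.
    annex_iii_route = area in ANNEX_III_AREAS
    return annex_ii_route or annex_iii_route


print(is_high_risk(False, False, area="law enforcement"))
```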

A ChatGPT-generated joke about high-risk AI systems is in order (full disclosure: this joke was created by a generative model).

Q: Why did all the AI systems defined by the AI Act form a support group?

A: Because they realized they were all "high-risk" individuals and needed some serious debugging therapy!

Lol.

Governance and enforcement

Congratulations! You've made it through what we at Encord think are the most pertinent sections of the 167-page document to familiarise yourself with. However, many unknowns exist about how the AI Act will play out. The relevant legal definitions and obligations remain vague, raising questions about how effective enforcement will be in practice.

For example, what does 'broad data at scale' in the foundation model definition mean? It'll mean different things to Facebook AI Research (FAIR) than it does to smaller research labs and some of the recently emerged foundation model startups like Anthropic, Mistral, etc.

The ongoing debate on enforcement revolves around the limited powers of the proposed AI Office, which is intended to play a supporting role in providing guidance and coordinating joint investigations (Title VI/VII). Meanwhile, the European Commission is responsible for settling disputes among national authorities regarding dangerous AI systems, adding to the complexity of determining who will ultimately police compliance and ensure obligations are met.

What is clear is that the fines for non-compliance can be substantial - up to €35M or 7% of total worldwide annual turnover (depending on the severity of the offence).
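For a sense of scale, here is a back-of-the-envelope Python sketch of that ceiling. It assumes the cap is whichever of the two figures is higher, which the sentence above does not spell out, and it ignores the lower tiers that apply to less severe offences. The function name `max_fine_eur` is made up for this example.

```python
FIXED_CAP_EUR = 35_000_000      # the EUR 35M figure quoted above
TURNOVER_SHARE_PERCENT = 7      # the 7% of worldwide annual turnover quoted above


def max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Upper bound of the fine, assuming the higher of the two figures applies."""
    turnover_based = worldwide_annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


print(max_fine_eur(1_000_000_000))
```

Under that assumption, the turnover-based figure takes over once annual turnover exceeds €500M.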

December amendments and ratification

On December 9th, after nearly 15 hours of negotiations and an almost 24-hour debate, the European Parliament and EU countries reached a provisional deal outlining the rules governing the use of artificial intelligence in the AI Act. The negotiations primarily focused on unacceptable-risk and high-risk AI systems, with concerns about excessive regulation impacting innovation among European companies.

Unacceptable-risk AI systems will still be banned in the EU, with the Act specifically prohibiting emotion recognition in workplaces and the use of AI that exploits individuals' vulnerabilities, among other practices. Remote biometric identification (RBI) systems are generally banned, although there are limited exceptions for their use in publicly accessible places for law enforcement purposes.

High-risk systems will adhere to most of the key principles previously laid out in June, and detailed above, with a heightened emphasis on transparency. However, there are exceptions for law enforcement in cases of extreme urgency, allowing bypassing of the ‘conformity assessment procedure.’

Another notable aspect is the regulation of GPAIs, which is divided into two tiers. Tier 1 applies to all GPAIs and includes requirements such as maintaining technical documentation for transparency, complying with EU copyright law, and providing summaries of the content used for training. Note that transparency requirements do not apply to open-source models and those in research and development that do not pose systemic risk. Tier 2 sets additional obligations for GPAIs with systemic risk, including conducting model evaluations, assessing and mitigating systemic risk, conducting adversarial testing, reporting serious incidents to the Commission, ensuring cybersecurity, and reporting on energy efficiency.
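The two-tier structure can be sketched as a simple checklist builder in Python. The obligation strings are paraphrased from the paragraph above; the function `gpai_obligations` and its flags are hypothetical, and the sketch only flags that the transparency exemption may apply rather than deciding which individual items it removes.

```python
TIER_1 = (
    "maintain technical documentation",
    "comply with EU copyright law",
    "provide a summary of the content used for training",
)

TIER_2 = (
    "conduct model evaluations",
    "assess and mitigate systemic risk",
    "conduct adversarial testing",
    "report serious incidents to the Commission",
    "ensure cybersecurity",
    "report on energy efficiency",
)


def gpai_obligations(systemic_risk: bool, open_source: bool = False,
                     research_only: bool = False) -> dict:
    """Build a checklist of obligations for a general-purpose AI model."""
    # The exemption is described for open-source and R&D models without systemic risk.
    exemption = (open_source or research_only) and not systemic_risk
    return {
        "tier_1": list(TIER_1),
        "tier_2": list(TIER_2) if systemic_risk else [],
        "transparency_exemption_may_apply": exemption,
    }


print(gpai_obligations(systemic_risk=False, open_source=True))
```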

There were several other notable amendments, including the promotion of sandboxes to facilitate real-world testing and support for SMEs. Most of the measures will come into force in two years, but prohibitions will take effect after six months and GPAI model obligations after twelve months. The focus now shifts to the Parliament and the Council, with the Parliament’s Internal Market and Civil Liberties committees due to vote on the agreement, which is expected to be mostly a formality.
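If you want to put the staggered timeline into a planning calendar, a small Python sketch like the one below will do. The entry-into-force date used here is a placeholder, not the real date (which was not known at the time of writing), and `add_months` and `application_dates` are helper names invented for this example.

```python
import calendar
from datetime import date

# Offsets in months, taken from the paragraph above.
MILESTONES = {
    "prohibitions": 6,
    "GPAI model obligations": 12,
    "most other measures": 24,
}


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of the target month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def application_dates(entry_into_force: date) -> dict:
    """Map each milestone to the date it would start to apply."""
    return {
        name: add_months(entry_into_force, offset)
        for name, offset in MILESTONES.items()
    }


# Placeholder date purely for illustration.
for name, when in application_dates(date(2025, 1, 1)).items():
    print(f"{name}: {when.isoformat()}")
```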

Final remarks

On a more serious note, the EU AI Act is an unprecedented step toward the regulation of artificial intelligence, marking a new era of accountability and governance in the realm of AI. As AI developers, we now operate in a world where considerations around our work's ethical, societal, and individual implications are no longer optional but mandated by law.

The Act brings substantial implications for our practice, demanding an understanding of the regulatory landscape and a commitment to uphold the principles at its core. As we venture into this new landscape, the challenge lies in navigating the complexities of the Act and embedding its principles into our work.

In a landscape as dynamic and rapidly evolving as AI, the Act serves as a compass, guiding us towards responsible and ethical AI development. The task at hand is by no means simple - it demands patience, diligence, and commitment from us. However, it is precisely through these challenges that we will shape an AI-driven future that prioritizes the rights and safety of individuals and society at large.

We stand at the forefront of a new era, tasked with translating this legislation into action. The road ahead may seem daunting, but it offers us an opportunity to set a new standard for the AI industry, one that champions transparency, accountability, and respect for human rights. As we step into this uncharted territory, let us approach the task with the seriousness it demands, upholding our commitment to responsible AI and working towards a future where AI serves as a tool for good.
