Encord Blog

Why Encord Is the Best Choice for Video Annotation: Stop Annotating Frame by Frame

Video annotation isn’t just image annotation at scale. It’s a different challenge that traditional annotation tools cannot support.

These frame-based tools weren’t designed for the complexity of video; they treat it as a sequence of disconnected images. That has a direct impact on your model performance and ROI, making annotation slow, error-prone, and costly.

Encord changes that. Built with native video support, Encord delivers seamless, intelligent annotation workflows that scale. Whether you're working in surgical AI, robotics, or autonomous vehicles, teams choose Encord to annotate faster and at scale to build more accurate models.

Keep reading to learn why teams like SDSC, Automotus, and Standard AI rely on Encord to stay ahead.

The Problem with Frame-by-Frame Annotation

Frame-by-frame annotation is common with open-source tools and other annotation tooling that is not video native. These tools break videos down into frames, leaving each frame to be annotated as an individual image. This has negative consequences for the AI data pipeline.

First, it can cause a fragmented workflow. Annotators must work on individual images, losing temporal continuity. Plus, object tracking becomes manual and tedious as each video yields many frames.

There is also a higher risk of inconsistency across annotations. When annotating frame by frame, some frames may be missed because of the sheer volume and the level of detail needed. Inconsistent annotations can also arise when proper context is missing. For example, bounding box drift can occur when each bounding box is drawn independently: objects often change shape and size between the start and end of a video, and with manual annotation, boxes may shift, lag behind, or fluctuate.

Additionally, a lack of temporal awareness degrades quality. When annotators cannot see how frames relate to one another over time, the result is noisy labels, extra QA effort, and, most detrimentally, poor model performance.
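To make the drift problem concrete, here is a minimal sketch of a QA check that flags frames where an object's box overlaps poorly with its box in the previous labeled frame. It is an illustrative heuristic, not a description of how Encord detects drift; the `(x, y, w, h)` box format, the function names, the `min_iou` threshold, and the sample track are all assumptions made for this example.

```python
def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def flag_jitter(track, min_iou=0.5):
    """Return frames whose box overlaps the previous labeled box by less than min_iou.

    `track` maps frame index -> (x, y, w, h) for a single object.
    """
    frames = sorted(track)
    return [
        cur
        for prev, cur in zip(frames, frames[1:])
        if iou(track[prev], track[cur]) < min_iou
    ]


# Made-up track: the box jumps 7 pixels to the right at frame 2.
track = {
    0: (0, 0, 10, 10),
    1: (1, 0, 10, 10),
    2: (8, 0, 10, 10),
    3: (9, 0, 10, 10),
}
suspect_frames = flag_jitter(track)
print(suspect_frames)
```

A sudden drop in overlap is only a hint: a fast-moving object produces the same signal as a misplaced box, so flagged frames still need human review.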

Finally, it increases both time and cost. Frame-by-frame annotation is more labor-intensive for annotators and reviewers alike, as each video produces thousands of frames. With video-native tools, many of these repeated tasks can be automated instead.

What Makes Encord Different for Video Annotation

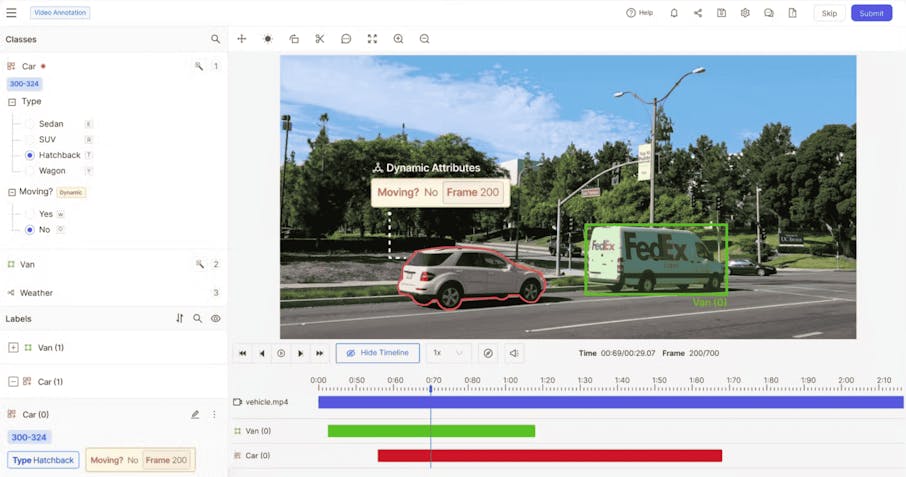

Using a video-native platform like Encord directly mitigates these challenges. A tool with native video support allows for keyframe interpolation, timeline navigation, and real-time preview, all of which drive greater efficiency and accuracy when developing AI at scale.

Built natively for video annotation

Within Encord, video is rendered natively, allowing users to annotate and navigate across a timeline. Annotators can annotate directly on full-length videos, not broken-up frames. This not only saves time but also mitigates the risk of missed frames and bounding box drift. Video annotation within the platform also supports playback with object persistence across time. To further improve efficiency across the AI data pipeline, Encord’s real-time collaboration tools can be used on entire video sequences.

Advanced object tracking & automation

Encord also offers AI-assisted tracking that automatically follows an object through frames once it has been labeled in a single frame or keyframe. Encord uses SAM2 to predict the position of the bounding box across subsequent frames. This supports re-identification even when objects temporarily disappear (e.g., under occlusion). It reduces the time spent redrawing objects in each frame and helps maintain temporal consistency.

The platform also features interpolation, model-assisted labeling, and active learning. Interpolation is a semi-automated method where the annotator marks an object at key points, and the platform fills in the labels between them by calculating smooth transitions. This leads to massive time savings and avoids annotator fatigue, without losing accuracy.
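As a rough illustration of what keyframe interpolation does, the sketch below fills in boxes between hand-drawn keyframes using plain linear interpolation. This is a simplified stand-in, not Encord's actual algorithm; the `Box` type, the function names, and the sample coordinates are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixels (hypothetical type for this sketch)."""
    x: float
    y: float
    w: float
    h: float


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + t * (b - a)


def interpolate_boxes(keyframes: dict[int, Box]) -> dict[int, Box]:
    """Return a box for every frame from the first to the last keyframe."""
    frames = sorted(keyframes)
    labels = {f: keyframes[f] for f in frames}  # keyframes are kept as drawn
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        for frame in range(start + 1, end):
            t = (frame - start) / (end - start)
            labels[frame] = Box(
                lerp(a.x, b.x, t),
                lerp(a.y, b.y, t),
                lerp(a.w, b.w, t),
                lerp(a.h, b.h, t),
            )
    return labels


# Two hand-drawn keyframes, ten frames apart (made-up values).
keyframes = {0: Box(10, 20, 50, 40), 10: Box(110, 20, 50, 60)}
labels = interpolate_boxes(keyframes)
print(len(labels), labels[5])
```

Here two drawn boxes yield labels for 11 frames. Linear interpolation only suits roughly constant motion between keyframes; when an object accelerates or turns, the annotator adds another keyframe at that point.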

Additionally, the active learning integration uses a feedback loop that selects frames for human annotation. Encord Active flags frames or video segments where model predictions are low-confidence, and annotators are guided to prioritize these clips. As a result, the model learns from informative samples, not redundant ones.
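The selection step of such a feedback loop can be sketched as simple confidence thresholding. This is a generic uncertainty-sampling example, not the Encord Active API; the function name, the `threshold` and `budget` parameters, and the confidence values are assumptions for illustration.

```python
from typing import Dict, List, Optional


def select_uncertain_frames(
    confidences: Dict[int, float],
    threshold: float = 0.6,
    budget: Optional[int] = None,
) -> List[int]:
    """Return frames with model confidence below `threshold`, least confident first.

    `confidences` maps frame index -> the model's confidence for that frame.
    `budget` optionally caps how many frames are sent to annotators.
    """
    flagged = [frame for frame, conf in confidences.items() if conf < threshold]
    # Sort by confidence, breaking ties by frame index for a stable order.
    flagged.sort(key=lambda frame: (confidences[frame], frame))
    return flagged if budget is None else flagged[:budget]


# Made-up per-frame confidences from a hypothetical model.
confidences = {0: 0.95, 1: 0.40, 2: 0.91, 3: 0.15, 4: 0.55}
review_queue = select_uncertain_frames(confidences)
top_two = select_uncertain_frames(confidences, budget=2)
print(review_queue, top_two)
```

Frames the model is already confident about are skipped, so annotation effort goes to the samples most likely to change the model.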

Maintain temporal context

Temporal context is critical for accurate video annotation as it relates to how objects and scenes change over time. With temporal labeling built into the UI, users can annotate events, transitions, or behaviors, such as a person running or a car braking.

In Encord, annotators can view and annotate frames in relation to previous and future ones. With this timeline navigation and visualisation, users can view object annotations over a video’s entire duration. This provides more context on where an object appears or changes and it is helpful for labeling intermittent objects.

Additionally, this view helps track label persistence across frames, rather than labels that are created per frame. This reduces redundant work, supports smooth object tracking, and avoids annotation drift. Finally, annotators and reviewers can play the full annotated video back to verify consistency across frames.
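One way to picture a timeline view of an intermittent object is to collapse the frames in which it is labeled into contiguous ranges. The sketch below does that for a single object; the function name and the sample frame indices are hypothetical, and this is not a description of Encord's internal data model.

```python
def visible_ranges(frames):
    """Collapse frame indices into sorted (first_frame, last_frame) ranges."""
    ranges = []
    for frame in sorted(set(frames)):
        if ranges and frame == ranges[-1][1] + 1:
            # Frame continues the current range, so extend it.
            ranges[-1] = (ranges[-1][0], frame)
        else:
            # Gap in the timeline, so start a new range.
            ranges.append((frame, frame))
    return ranges


# Made-up example: an object that leaves and re-enters the scene twice.
labeled_frames = [0, 1, 2, 3, 10, 11, 12, 20]
timeline = visible_ranges(labeled_frames)
print(timeline)
```

Each tuple corresponds to one bar on a timeline, so a reviewer can see at a glance where an object appears, disappears, and returns.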

Why Other Platforms Fall Short

| Capability | Encord | Traditional Tools | Why It Matters |
| --- | --- | --- | --- |
| Native Video Support | ✅ | ❌ (frame-based only) | Enables real-time playback and annotation in context across the full video sequence. |
| AI-assisted Object Tracking | ✅ | ⚠️ (limited) | Automates tracking of objects across frames, reducing drift and manual effort. |
| Temporal Context Visualization | ✅ | ❌ | View how objects evolve over time to ensure consistent, temporally aware labeling. |
| Collaborative Video Review | ✅ | ❌ | Supports scalable workflows with reviewers, labelers, and audit trails. |
| Interpolation & Automation | ✅ | ⚠️ | Fill in annotations between keyframes automatically, boosting speed and consistency. |
| Playback & Timeline Tools | ✅ | ❌ | Annotators can scrub through video, track object lifespan, and validate visually. |
| Support for Long Sequences | ✅ | ❌ (performance drops at scale) | Optimized for high-resolution, long-duration video data without lag. |
| Human-in-the-loop QA Tools | ✅ | ❌ | Built-in tools for review, correction, and quality control at scale. |

Summary: Traditional platforms were designed for image annotation and retrofitted for video. Encord was purpose-built for video.

The ROI of Using Encord for Video Annotation

Seamless, efficient, and intelligent video annotation workflows drive both direct ROI and model accuracy. The investment in a video-native annotation tool pays off through speed, accuracy, and scalability.

Faster training & deployment

As the platform supports smart interpolation, auto-generated labels, and label persistence across a video timeline, it reduces annotation time significantly.

What does this mean for ROI? Faster training data pipelines mean faster model development and a shorter time to market.

Increased model accuracy

Frame-synced video annotation leads to better data quality through contextual, consistent annotation, because automated video labeling does not skip frames. Additionally, contextual, timeline-based annotation helps ensure that intermittent objects, such as those that move in and out of the video, are labeled accurately. Plus, the more accurate the initial annotations are, the fewer QA cycles and the less rework are needed.

Greater ability to scale production

Scale is what ensures you dominate the market and competition. With the ability to annotate thousands of video files with collaborative tools, templates, and automation, scaling becomes a simple next step.

However, scaling also requires a larger, more in-sync team, which is why multi-user team support, audit trails, and version control are all key features.

Key Takeaways

Video annotation does not scale easily with traditional or open-source data annotation tools. The key reason is that they break videos into frames, which requires tedious, mistake-prone frame-by-frame annotation.

For deploying precise computer vision models at scale, however, using a video-native platform is key. Encord supports keyframe interpolation, timeline navigation, and real-time preview, which drive greater efficiency, accuracy, and ultimately ROI.

Encord supports smart interpolation, auto-generated labels, and label persistence, reducing annotation time significantly. Faster training data pipelines mean faster model development and a shorter time to market. And with frame sync and automated video labeling, higher-quality training data can be produced faster without compromising accuracy or model performance.

Encord enhances the efficiency of data curation and annotation by providing integrated tools that streamline these processes. This includes features that speed up the curation step, facilitate rapid annotation, and ensure thorough evaluation and quality assurance, ultimately making the data flow as efficient as possible.

Encord offers various features for video data analysis, including the ability to filter videos by specific properties, inspect frames based on analytics, and tag relevant frames. Users can also view whole video thumbnails and utilize natural language search to find specific assets within the video content.

Encord provides a front-end interface that allows users to visualize and filter through vast amounts of data easily. This capability enables teams to identify the most relevant subsets of data for annotation, ensuring that the focus remains on diverse edge cases and high-priority items.

Encord focuses on scalable data annotation solutions by leveraging a combination of internal and external annotators. This enables us to handle high volumes of data effectively, providing consistent quality and timely turnaround for projects that require thousands of samples per day.

Encord provides a secure platform that allows users to distribute data for annotation to both internal and external annotators without physically transferring the data. This ensures that sensitive information remains protected while still enabling collaborative annotation efforts.

Encord provides a comprehensive data annotation platform that not only supports various annotation tasks, such as object detection, but also offers data curation and sourcing services. This allows users to harmonize and prepare their data effectively for machine learning purposes.

Encord offers a native video approach that allows for more efficient processing of video data. This method streamlines the workflow, making it easier to manage large datasets and enhancing productivity by eliminating the delays typically associated with frame-by-frame analysis.

Using Encord for video understanding projects provides teams with robust data annotation tools, facilitating accurate labeling and efficient data management. This can significantly improve the effectiveness of machine learning models and the overall analysis process.

All annotation algorithms run in the cloud, which ensures quick processing and accessibility from anywhere. This cloud-based approach allows users to leverage powerful algorithms for tasks like object tracking without needing extensive local computing resources.

Encord is versatile and can support various annotation projects, including those related to ADAS (Advanced Driver Assistance Systems) and other types of data annotation. This flexibility makes it a suitable partner for a range of annotation requirements.