
Contents

What is Named Entity Recognition?

How NER Works

Labels and Tagging Schemes in NER

Approaches to NER

Evaluation Metrics for NER

Tools to Transform Data for NER

How Encord helps in NER data annotation

Challenges in NER

Key Takeaways


What Is Named Entity Recognition? Selecting the Best Tool to Transform Your Model Training Data


What is Named Entity Recognition?

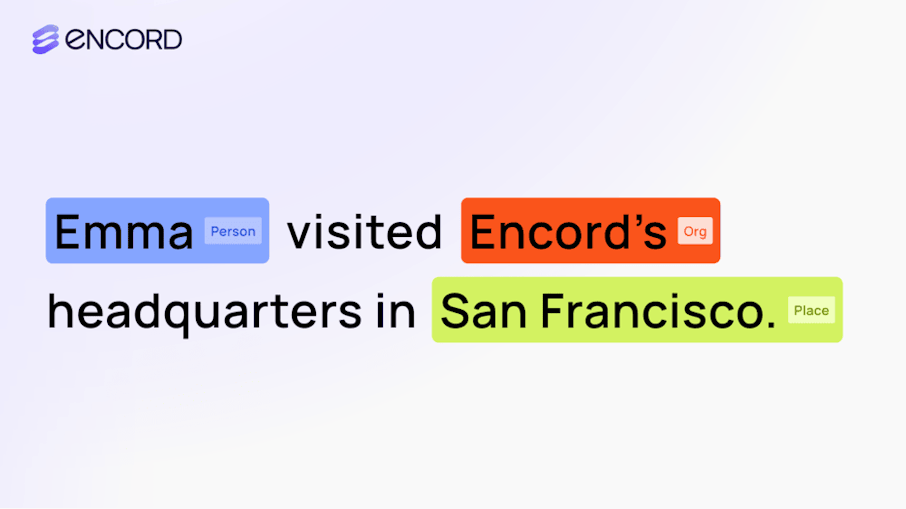

Named Entity Recognition (NER) is a fundamental task in Natural Language Processing (NLP) that involves locating and classifying named entities mentioned in unstructured text into predefined categories such as names, organizations, locations, dates, quantities, percentages, and monetary values. NER serves as a foundational component in various NLP applications, including information extraction, question answering, machine translation, and sentiment analysis.

At its core, NER processes textual data to identify and categorize key information. For example, in a sentence that mentions Apple, the U.K., and $1 billion, an NER system should recognize "Apple" as an Organization (ORG), "U.K." as a Geopolitical entity (GPE), and "$1 billion" as a Monetary value (MONEY).

Named Entity Recognition (NER) Example

How NER Works

The NER process identifies and classifies key information (entities) in text into predefined categories such as names, organizations, locations, dates, and more. The following are the general steps of the NER process:

Step #1: Text Input

The process begins with raw text data that needs to be analyzed.

Step #2: Text Preprocessing

This step prepares the text for analysis by performing the following operations.

Tokenization

Splitting the text into individual units called tokens (words, punctuation, etc.).

Part-of-Speech Tagging

Assigning grammatical tags to each token to understand its role in the sentence.

Step #3: Feature Extraction

Deriving relevant features from the tokens to assist the NER model in making accurate predictions.

- Contextual Features: Considering surrounding words to understand the context.

- Orthographic Features: Examining capitalization, punctuation, and numerical patterns.

- Lexical Features: Utilizing dictionaries or gazetteers to match known entity names.
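The three feature families above can be sketched as a per-token feature function, of the kind typically passed to a CRF. This is a minimal illustration and does not reproduce any particular library's feature set. The gazetteer contents, the `<BOS>`/`<EOS>` boundary markers, and the function names are all hypothetical.

```python
# Hypothetical gazetteer (lexical resource) mapping lowercase names to entity types.
GAZETTEER = {"apple": "ORG", "london": "GPE"}


def word_shape(token: str) -> str:
    """Orthographic shape: uppercase -> X, lowercase -> x, digit -> d (e.g. "Apple" -> "Xxxxx")."""
    shape = []
    for ch in token:
        if ch.isupper():
            shape.append("X")
        elif ch.islower():
            shape.append("x")
        elif ch.isdigit():
            shape.append("d")
        else:
            shape.append(ch)  # keep punctuation unchanged
    return "".join(shape)


def token_features(tokens: list[str], i: int) -> dict:
    """Build a feature dictionary for tokens[i]."""
    token = tokens[i]
    return {
        # Orthographic features: capitalization, digits, shape
        "is_title": token.istitle(),
        "is_upper": token.isupper(),
        "has_digit": any(ch.isdigit() for ch in token),
        "shape": word_shape(token),
        # Contextual features: neighboring words, with sentence-boundary markers
        "prev_word": tokens[i - 1].lower() if i > 0 else "<BOS>",
        "next_word": tokens[i + 1].lower() if i + 1 < len(tokens) else "<EOS>",
        # Lexical feature: gazetteer lookup
        "gazetteer_type": GAZETTEER.get(token.lower(), "NONE"),
    }


if __name__ == "__main__":
    tokens = "Apple opened an office in London".split()
    for i, tok in enumerate(tokens):
        print(tok, token_features(tokens, i))
```

A model never sees the raw word on its own. It sees this dictionary, so capitalization, context, and dictionary membership can all contribute evidence.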

Step #4: Model Application

Applying a trained NER model to classify each token (or group of tokens) into predefined entity categories.

- Machine Learning Models: Using algorithms like Conditional Random Fields (CRFs) or neural networks trained on annotated datasets.

- Rule-Based Systems: Employing handcrafted rules and patterns for specific entity types.

Step #5: Entity Classification

Assigning labels to tokens based on the model's predictions.

Step #6: Post-Processing

Refining the output to handle nested entities, resolve ambiguities, and ensure consistency. This step can determine the correct entity type when a token could belong to multiple categories (for example, "Jordan" can refer to a country or a brand). It can also identify nested entities (entities within entities), such as a person's name within an organization name.

Step #7: Output Generation

Producing the final annotated text with entities highlighted or in a structured format like JSON or XML.

Labels and Tagging Schemes in NER

Labels in NER

In NER, labels are the categories assigned to words or phrases identified as named entities within a piece of text. These labels indicate the type of entity detected, such as a person, organization, location, or date. The labeling process allows unstructured text to be converted into structured data, which can be used for various applications like information retrieval, question answering, and data analysis.

The set of labels used in NER can vary depending on the specific application, domain, or dataset. However, some standard labels are widely used across different NER systems:

| Label | Description | Example |
| --- | --- | --- |
| Person (PER) | Names of people or fictional characters. | "Albert Einstein," "Marie Curie," "Sherlock Holmes." |
| Organization (ORG) | Names of companies, institutions, agencies, or other groups of people. | "Google," "United Nations," "Harvard University." |
| Location (LOC) | Names of geographical places such as cities, countries, mountains, rivers. | "Mount Everest," "Nile River," "Paris." |
| Geo-Political Entity (GPE) | Geographical regions that are also political entities. | "United States," "Germany," "Tokyo." |
| Date | Expressions of calendar dates or periods. | "January 1, 2022," "the 19th century," "2010-2015." |
| Time | Specific times within a day or durations. | "5 PM," "midnight," "two hours." |
| Money | Monetary values, often accompanied by currency symbols. | "$100," "€50 million," "1,000 yen." |
| Percent | Percentage expressions. | "50%," "3.14%," "half." |
| Facility (FAC) | Buildings or infrastructure. | "Eiffel Tower," "JFK Airport," "Golden Gate Bridge." |
| Product | Objects, vehicles, software, and other products. | "iPhone," "Boeing 747," "Windows 10." |
| Event | Named occurrences such as wars, sports events, disasters. | "World War II," "Olympics," "Hurricane Katrina." |
| Work of Art | Titles of books, songs, paintings, movies. | "Mona Lisa," "To Kill a Mockingbird," "Star Wars." |
| Language | Names of languages. | "English," "Mandarin," "Spanish." |
| Law | Legal documents, treaties, acts. | "The Affordable Care Act," "Treaty of Versailles." |
| NORP (Nationality, Religious, or Political Group) | Nationalities, religious groups, or political affiliations. | "American," "Christians," "Democrat." |

For example, in a sentence mentioning Bill Gates, Paul Allen, and Microsoft, "Bill Gates" and "Paul Allen" are recognized and classified as PERSON entities, and "Microsoft" is classified as ORG (organization).

Tagging Schemes in NER

In addition to the entity labels, NER systems often use tagging schemes to indicate the position of words within entities. The most common schemes are:

BIO Tagging (Begin, Inside, Outside)

| Tags | Description |
| --- | --- |
| B-XXX | Beginning of an entity of type XXX |
| I-XXX | Inside (continuation) of an entity of type XXX |
| O | Outside any named entity |

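To make the BIO scheme concrete, the sketch below converts entity spans given as token indices into BIO tags. The `(start, end_exclusive, label)` span format and the example sentence are illustrative assumptions, and the function assumes the spans do not overlap.

```python
def spans_to_bio(num_tokens: int, spans: list[tuple[int, int, str]]) -> list[str]:
    """Convert non-overlapping (start, end_exclusive, label) token spans into BIO tags."""
    tags = ["O"] * num_tokens  # every token starts outside any entity
    for start, end, label in spans:
        tags[start] = f"B-{label}"  # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"  # remaining tokens continue it
    return tags


tokens = ["Marie", "Curie", "worked", "in", "Paris"]
tags = spans_to_bio(len(tokens), [(0, 2, "PER"), (4, 5, "LOC")])
print(list(zip(tokens, tags)))
# [('Marie', 'B-PER'), ('Curie', 'I-PER'), ('worked', 'O'), ('in', 'O'), ('Paris', 'B-LOC')]
```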

IOBES Tagging (Inside, Outside, Begin, End, Single)

| Tags | Description |
| --- | --- |
| B-XXX | Beginning of an entity of type XXX |
| I-XXX | Inside (continuation) of an entity of type XXX |
| E-XXX | End of an entity of type XXX |
| S-XXX | Single-token entity |
| O | Outside any named entity |

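IOBES adds only one piece of information: where each entity ends. That means it can be derived mechanically from well-formed BIO tags. Below is a minimal sketch, assuming the input is valid BIO (every entity starts with `B-`):

```python
def bio_to_iobes(tags: list[str]) -> list[str]:
    """Convert well-formed BIO tags to IOBES tags."""
    out = []
    for i, tag in enumerate(tags):
        if tag == "O":
            out.append(tag)
            continue
        prefix, label = tag.split("-", 1)
        next_tag = tags[i + 1] if i + 1 < len(tags) else "O"
        continues = next_tag == f"I-{label}"  # does the entity go on after this token?
        if prefix == "B":
            out.append(f"B-{label}" if continues else f"S-{label}")  # S- marks a single-token entity
        else:
            out.append(f"I-{label}" if continues else f"E-{label}")  # E- marks the last token
    return out


print(bio_to_iobes(["B-PER", "I-PER", "O", "O", "B-LOC"]))
# ['B-PER', 'E-PER', 'O', 'O', 'S-LOC']
```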

IOB2

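IOB2 is the name often used for the BIO scheme described above. In IOB2, every entity begins with a B- tag, even if it immediately follows another entity of the same type. It differs from the older IOB1 scheme, in which I- is used for all entity tokens and B- appears only to separate two adjacent entities of the same type.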

For example, in a sentence that mentions Apple and the U.K., "Apple" is tagged as the beginning of an organization (B-ORG), and "U.K." is tagged as the beginning of a location (B-LOC).
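The difference between IOB2 and the older IOB1 convention can be shown with a small converter. In IOB1, `I-` is used for all entity tokens and `B-` appears only when an entity directly follows another entity of the same type. This is a sketch, and the tag sequence in the usage line is illustrative.

```python
def iob1_to_iob2(tags: list[str]) -> list[str]:
    """Rewrite IOB1 tags so that every entity starts with a B- tag (IOB2)."""
    out = []
    prev = "O"
    for tag in tags:
        if tag.startswith("I-"):
            label = tag[2:]
            # An I- tag that does not continue an entity of the same type starts a new entity.
            if prev == "O" or prev[2:] != label:
                tag = f"B-{label}"
        out.append(tag)
        prev = tag
    return out


# "Apple invests in the U.K." in IOB1: neither entity follows one of the same type, so both use I-.
print(iob1_to_iob2(["I-ORG", "O", "O", "O", "I-LOC"]))
# ['B-ORG', 'O', 'O', 'O', 'B-LOC']
```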

BIOES (Beginning, Inside, Outside, End, Single)

BIOES is another name for the IOBES scheme above, with the letters reordered. It extends BIO with End and Single tags for more precise boundary detection.

| Tags | Description |
| --- | --- |
| B- (Beginning) | First token of a multi-token entity. |
| I- (Inside) | Tokens inside a multi-token entity. |
| E- (End) | Last token of a multi-token entity. |
| S- (Single) | Single-token entity. |
| O (Outside) | Tokens not part of any entity. |

For example, in a sentence mentioning Tesla and SolarCity, both "Tesla" and "SolarCity" are single-token entities and are tagged S-ORG.
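In the other direction, a model's BIOES output has to be decoded back into entity spans. Below is a minimal sketch that assumes end-exclusive token offsets and simply discards malformed sequences (for example, an `E-` tag with no matching `B-`).

```python
def bioes_to_spans(tags: list[str]) -> list[tuple[int, int, str]]:
    """Decode BIOES tags into (start, end_exclusive, label) spans, skipping malformed sequences."""
    spans = []
    open_span = None  # (start, label) of an entity begun with B- and not yet closed
    for i, tag in enumerate(tags):
        if tag == "O":
            open_span = None
            continue
        prefix, label = tag.split("-", 1)
        if prefix == "S":
            spans.append((i, i + 1, label))
            open_span = None
        elif prefix == "B":
            open_span = (i, label)
        elif prefix == "I":
            if open_span is None or open_span[1] != label:
                open_span = None  # I- without a matching B-: discard
        elif prefix == "E":
            if open_span is not None and open_span[1] == label:
                spans.append((open_span[0], i + 1, label))
            open_span = None
    return spans


tokens = ["Tesla", "acquired", "SolarCity"]
for start, end, label in bioes_to_spans(["S-ORG", "O", "S-ORG"]):
    print(" ".join(tokens[start:end]), label)
# Tesla ORG
# SolarCity ORG
```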

Domain-Specific Labels

In specialized domains, additional labels may be used to capture domain-specific entities. For example, biomedical NER uses labels such as Gene/Protein, Disease, Chemical, and Drug.

Similarly, financial NER uses labels such as Financial Instrument, Market Index, and Economic Indicator.

Approaches to NER

Various approaches have been developed for NER. The most popular ones are described below.

Rule-Based Methods

Rule-based NER systems rely on manually specified linguistic rules and patterns to identify entities. These rules often utilize regular expressions, dictionaries (gazetteers), and part-of-speech tagging to detect predefined entity types. For example, a rule might specify that a capitalized word followed by "Inc." or "Ltd." should be classified as an organization. While rule-based methods can achieve high precision in specific domains, they often suffer from limited recall and are not easily scalable to diverse or evolving datasets. Additionally, developing and maintaining these rules can be labor-intensive and may not generalize well to new or informal text sources.
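The "capitalized word followed by Inc. or Ltd." rule described above, combined with a gazetteer lookup, can be sketched with Python's `re` module. The regular expression, the suffix list, and the location gazetteer are hypothetical and deliberately simple. That simplicity also shows the recall problem: an organization without a suffix, or a city missing from the list, is never found.

```python
import re

# Hypothetical rule: one or more capitalized words followed by a company suffix.
ORG_PATTERN = re.compile(r"\b(?:[A-Z][\w&-]*\s)+(?:Inc|Ltd|Corp)\.")
# Hypothetical gazetteer of location names.
LOCATIONS = {"Paris", "London", "Tokyo"}


def rule_based_ner(text: str) -> list[tuple[int, int, str]]:
    """Return (start, end_exclusive, label) character spans found by the rules."""
    entities = [(m.start(), m.end(), "ORG") for m in ORG_PATTERN.finditer(text)]
    for name in LOCATIONS:
        # Whole-word dictionary match
        for m in re.finditer(rf"\b{re.escape(name)}\b", text):
            entities.append((m.start(), m.end(), "LOC"))
    return sorted(entities)


text = "Acme Widgets Ltd. opened an office in Paris."
for start, end, label in rule_based_ner(text):
    print(text[start:end], label)
# Acme Widgets Ltd. ORG
# Paris LOC
```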

Machine Learning-Based Methods

Machine learning approaches involve training statistical models on annotated datasets to automatically recognize entities. Algorithms such as Conditional Random Fields (CRFs) and Support Vector Machines (SVMs) have been commonly used in this context. These models learn to identify entities based on features extracted from the text, such as word shapes, context words, and syntactic information. Machine learning methods generally offer better adaptability to different domains compared to rule-based systems and can handle a wider variety of entity types. However, they require substantial amounts of labeled training data and may still struggle with recognizing entities in noisy or informal text.
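After a CRF has learned its weights, it makes predictions with Viterbi decoding. This step finds the tag sequence with the highest total of per-token (emission) scores plus tag-to-tag (transition) scores. The sketch below shows only this decoding step. The scores are made-up numbers standing in for a trained model, and training is not shown. The example also shows how forbidding the `O` -> `I-PER` transition prevents an invalid BIO sequence that a per-token argmax would produce.

```python
def viterbi(emissions, transitions, tags):
    """Return the highest-scoring tag sequence.

    emissions[i][t]: score of tag t at position i.
    transitions[(a, b)]: score of moving from tag a to tag b; missing pairs are forbidden (-inf).
    """
    neg_inf = float("-inf")
    best = [{t: emissions[0][t] for t in tags}]  # best[i][t]: best path score ending in t at i
    back = [{}]  # back[i][t]: previous tag on that best path
    for i in range(1, len(emissions)):
        best.append({})
        back.append({})
        for t in tags:
            prev_tag, score = max(
                ((p, best[i - 1][p] + transitions.get((p, t), neg_inf)) for p in tags),
                key=lambda pair: pair[1],
            )
            best[i][t] = score + emissions[i][t]
            back[i][t] = prev_tag
    # Trace back from the best final tag
    path = [max(tags, key=lambda t: best[-1][t])]
    for i in range(len(emissions) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return list(reversed(path))


TAGS = ["O", "B-PER", "I-PER"]
# Every transition is allowed (score 0.0) except O -> I-PER, which is invalid in BIO.
TRANSITIONS = {(a, b): 0.0 for a in TAGS for b in TAGS if (a, b) != ("O", "I-PER")}
# Made-up emission scores for two tokens. Per-token argmax would give O, I-PER (invalid).
EMISSIONS = [
    {"O": 1.0, "B-PER": 0.9, "I-PER": 0.0},
    {"O": 0.0, "B-PER": 0.5, "I-PER": 1.0},
]
print(viterbi(EMISSIONS, TRANSITIONS, TAGS))  # ['B-PER', 'I-PER']
```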

Deep Learning-Based Methods

Deep learning-based methods use neural networks to capture complex patterns in data. Models such as Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformers (e.g., BERT) have been widely applied to NER. These models can automatically learn feature representations from raw text, reducing the need for manual feature engineering. Deep learning-based NER systems have achieved state-of-the-art performance across various datasets and languages. However, they require large amounts of training data and computational resources, and their performance can be sensitive to the quality of the data.

Hybrid Approaches

Hybrid NER systems combine elements of rule-based, machine learning, and deep learning methods to use the advantages of each. For example, a hybrid system might use rule-based techniques to preprocess text and identify obvious entities, followed by a machine learning model to detect more complex cases. Alternatively, deep learning models can be supplemented with domain-specific rules to improve accuracy in specialized fields. Hybrid approaches aim to balance precision and recall while maintaining flexibility across different domains and text types.
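The first hybrid pattern described above trusts high-precision rules and lets a model fill in the rest. It can be sketched as a span-merging step. This assumes both components return `(start, end_exclusive, label)` character spans. The "rules win on overlap" policy is one possible choice, not a standard.

```python
def overlaps(a: tuple[int, int, str], b: tuple[int, int, str]) -> bool:
    """True if two end-exclusive spans share at least one character."""
    return a[0] < b[1] and b[0] < a[1]


def merge_predictions(rule_spans, model_spans):
    """Keep every rule match; add model spans only where no rule fired."""
    merged = list(rule_spans)
    for span in model_spans:
        if not any(overlaps(span, rule) for rule in rule_spans):
            merged.append(span)
    return sorted(merged)


# Character spans for "Acme Widgets Ltd. opened an office in Paris."
rules = [(0, 17, "ORG")]
model = [(0, 4, "PER"), (38, 43, "LOC")]
print(merge_predictions(rules, model))  # [(0, 17, 'ORG'), (38, 43, 'LOC')]
```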

Each of these approaches has its own set of trade-offs concerning accuracy, scalability, and resource requirements. The choice of method often depends on the specific application, the availability of labeled data, and the computational resources at hand.

Evaluation Metrics for NER

Evaluating an NER model is essential to measure how accurately it identifies and classifies entities. Evaluation typically focuses on Precision, Recall, and F1-Score, which are calculated by comparing the predicted entities with the actual (ground-truth) entities in the dataset. In the common exact-match setting, a predicted entity counts as correct only if both its boundaries and its type match a ground-truth entity.

Precision

Precision measures the proportion of entities predicted by the model that are correct. High precision indicates that the model makes fewer false positive errors.

Recall

Recall measures the proportion of actual entities that are correctly identified by the model. High recall indicates that the model successfully captures most of the relevant entities.

F1-Score

The F1-Score is the harmonic mean of Precision and Recall, providing a single score that balances the two. A high F1-Score indicates that the model achieves both good precision and good recall.

Evaluating an NER Model

Consider the following example:

Ground Truth (Actual Entities):

| Entity | Type |
| --- | --- |
| Apple Inc. | ORGANIZATION |
| San Francisco | LOCATION |
| March 2025 | DATE |

Model Prediction:

| Entity | Prediction |
| --- | --- |
| Apple Inc. | ORGANIZATION ✅ (True Positive) |
| San Francisco | LOCATION ✅ (True Positive) |
| office | LOCATION ❌ (False Positive) |
| March 2025 | Not Detected ❌ (False Negative) |

Calculation:

| Outcome | Count |
| --- | --- |
| True Positives (TP) | 2 (Apple Inc., San Francisco) |
| False Positives (FP) | 1 (office) |
| False Negatives (FN) | 1 (March 2025) |

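Metrics:

$$\text{Precision} = \frac{TP}{TP + FP} = \frac{2}{2 + 1} \approx 0.67$$

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{2}{2 + 1} \approx 0.67$$

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} = 2 \times \frac{0.67 \times 0.67}{0.67 + 0.67} \approx 0.67$$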
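The worked example above can be reproduced in a few lines. For readability, this sketch represents entities as `(text, label)` pairs. A real evaluator would compare character or token offsets so that two mentions of the same string are counted separately. Matching is exact: a prediction counts only if both the span and the type agree.

```python
def entity_prf(gold, predicted):
    """Exact-match entity-level precision, recall and F1 over (text, label) pairs."""
    gold_set, pred_set = set(gold), set(predicted)
    tp = len(gold_set & pred_set)  # predicted and correct
    fp = len(pred_set - gold_set)  # predicted but wrong
    fn = len(gold_set - pred_set)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [("Apple Inc.", "ORGANIZATION"), ("San Francisco", "LOCATION"), ("March 2025", "DATE")]
predicted = [("Apple Inc.", "ORGANIZATION"), ("San Francisco", "LOCATION"), ("office", "LOCATION")]
p, r, f1 = entity_prf(gold, predicted)
print(f"{p:.2f} {r:.2f} {f1:.2f}")  # 0.67 0.67 0.67
```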

Tools to Transform Data for NER

Transforming data for NER involves converting raw text into a structured, annotated format suitable for model training. Various tools are available for this task, each offering different features to support the process. The following tools help transform data for NER:

Encord

Encord is an AI data development platform for managing, curating and annotating large-scale text and document datasets, as well as evaluating LLM performance. AI teams can use Encord to label document and text files containing text and complex images and assess annotation quality using several metrics. The platform has robust cross-collaboration functionality across:

- Encord Index: Unify petabytes of unstructured data from multiple fragmented data sources to one platform for streamlined data management and curation. Index enables unparalleled visibility into very large document datasets using embedding-based natural language search and metadata filters, so teams can explore and curate the right data to be labeled and used for AI model training and fine-tuning.

- Encord Annotate: Leverage SOTA AI-assisted labeling workflows and flexibly set up complex ontologies to efficiently and accurately label large-scale document and text datasets for training, fine-tuning and aligning AI models at scale.

- Encord Active: Evaluate and validate AI models to surface, curate, and prioritize the most valuable data for training and fine-tuning to supercharge AI model performance. Leverage automatic reporting on metrics like mAP, mAR, and F1 Score. Combine model predictions, vector embeddings, visual quality metrics and more to automatically reveal errors in labels and data.

NER annotation in Encord (Source)

Doccano

Doccano is an open-source, user-friendly annotation tool for text labeling tasks, including NER. It has the following features:

- Intuitive interface for labeling text spans.

- Support for sequence labeling (NER), text classification, and translation tasks.

- Collaborative annotation for teams.

- Export options for labeled data in formats like JSON, JSONL, or CSV, compatible with frameworks like spaCy.

Prodigy

Prodigy is a commercial, Python-based annotation tool designed for machine learning workflows, and it can be used for NER annotation. It has the following features:

- Active learning to prioritize uncertain samples for annotation.

- Seamless integration with spaCy models.

- Support for manual annotation, model-in-the-loop annotation, and rule-based labeling.

- Flexible export formats for training data.
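Active learning of the kind listed above usually relies on uncertainty sampling. Below is a minimal sketch of one common variant, least-confidence sampling. It assumes the model reports a probability for its top prediction on each token. This is not a description of how Prodigy itself selects examples, and the example ids and scores are made up.

```python
def least_confident(predictions: list[tuple[str, list[float]]], k: int) -> list[str]:
    """Return the ids of the k examples whose least-confident token is lowest.

    predictions: (example_id, per-token top-label probabilities); each list must be non-empty.
    """
    scored = sorted((min(probs), example_id) for example_id, probs in predictions)
    return [example_id for _, example_id in scored[:k]]


batch = [
    ("doc-a", [0.99, 0.97, 0.98]),
    ("doc-b", [0.95, 0.51, 0.90]),
    ("doc-c", [0.88, 0.93, 0.91]),
]
print(least_confident(batch, k=2))  # ['doc-b', 'doc-c']
```

Sending annotators the examples the model is least sure about tends to yield more useful training signal per label than sampling at random.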

Snorkel

Snorkel is a data programming platform for programmatically labeling and transforming training data. It supports many annotation tasks, including NER. It has the following features:

- Create labeling functions to annotate data programmatically.

- Combines weak supervision signals to generate probabilistic labels.

- Scalable and suitable for large datasets.
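The labeling-function idea can be sketched in plain Python. Snorkel itself learns a label model that weights labeling functions by their estimated accuracy. The sketch below instead uses simple majority voting as a stand-in, and it does not use Snorkel's API. All three labeling functions are hypothetical.

```python
from collections import Counter

ABSTAIN = None


def lf_company_suffix(tokens, i):
    # A token directly before "Inc." or "Ltd." is probably part of an organization name.
    next_token = tokens[i + 1] if i + 1 < len(tokens) else ""
    return "ORG" if next_token in {"Inc.", "Ltd."} else ABSTAIN


def lf_known_city(tokens, i):
    # Tiny hypothetical gazetteer of city names.
    return "LOC" if tokens[i] in {"Paris", "London"} else ABSTAIN


def lf_lowercase(tokens, i):
    # Lowercase words are rarely named entities in edited English text.
    return "O" if tokens[i].islower() else ABSTAIN


LABELING_FUNCTIONS = [lf_company_suffix, lf_known_city, lf_lowercase]


def majority_vote(tokens):
    """Combine labeling-function votes per token; tokens with no votes stay ABSTAIN."""
    labels = []
    for i in range(len(tokens)):
        votes = [lf(tokens, i) for lf in LABELING_FUNCTIONS]
        votes = [v for v in votes if v is not ABSTAIN]
        labels.append(Counter(votes).most_common(1)[0][0] if votes else ABSTAIN)
    return labels


print(majority_vote(["Acme", "Ltd.", "opened", "in", "Paris"]))
# ['ORG', None, 'O', 'O', 'LOC']
```

The `None` for "Ltd." shows a coverage gap: no function fired on that token, so additional labeling functions would be needed.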

Snorkel NER annotation (Source)

spaCy

spaCy is a popular NLP library in Python that also provides tools for training and evaluating NER models. It has the following features:

- Pre-trained models for entity recognition.

- Supports custom NER annotation and training pipelines.

- Integration with Prodigy for annotation tasks.

spaCy NER example (Source)

OpenNLP

Apache OpenNLP is a machine learning toolkit for processing natural language text, including NER. It has the following features:

- Pre-trained models for NER in multiple languages.

- Tools for training custom NER models using labeled data.

- Support for tokenization, sentence segmentation, and other preprocessing tasks.

NER in OpenNLP (Source)

Stanza

Stanza is a Python NLP library developed by the Stanford NLP Group. It supports multilingual NER and provides several NER models. It has the following features:

- Pre-trained NER models for multiple languages.

- Easy integration with Python workflows.

Stanza NER example (Source)

Spark NLP

Spark NLP is a scalable NLP library built on Apache Spark and suited to distributed computing. It also supports NER. It has the following features:

- Pre-trained NER models for large-scale text processing.

- Supports training custom models for NER tasks.

- Integration with other Spark-based tools.

Spark NLP example (Source)

How Encord helps in NER data annotation

Encord supports various data types, including text, making it suitable for NER annotation tasks. It helps in managing, annotating, and iterating on training data for machine learning tasks. Here is how Encord helps in the NER annotation:

Intuitive Annotation Interface

Encord offers a user-friendly text annotation interface that makes it easy to label text spans as entities. Annotators highlight specific words or phrases directly in the text and assign them entity labels, such as PERSON, LOCATION, ORGANIZATION, DATE, or any other custom tag defined in the ontology.

Ontology Management

Encord allows you to define a clear and structured ontology for your NER project. This ontology ensures consistent labeling and defines the entity types and their attributes. Users can create custom ontologies for specific projects or industries. This flexibility ensures that the annotation schema aligns with the requirements of domain-specific NER tasks.

Collaborative Annotation and Review

Encord supports team-based annotation projects. It allows multiple annotators to work on the same dataset while maintaining consistency. It enables project managers or reviewers to check and approve annotations using built-in review workflows. It supports multi-stage review processes to help ensure high-quality labels.

Model-Assisted Annotation

Encord integrates with pre-trained models or custom machine learning (ML) models to assist annotators by providing pre-annotations. Annotators can validate, correct, or refine these predictions, significantly reducing manual workload. In Encord you can import a pre-trained NER model (e.g., spaCy, Hugging Face Transformers) and use the model to generate initial predictions on raw text. Annotators review and validate these suggestions, correcting any inaccuracies.

Multi-Modality Support

The Encord platform supports annotation of different types of data, including images, videos, and multimodal datasets. This is particularly useful for cross-domain projects where text is tied to visual data. For example, in medical applications, entities like SYMPTOM and DIAGNOSIS can be annotated in patient text reports alongside CT scans or X-rays. Similarly, for multimedia data, named entities extracted from speech transcriptions in videos can be linked to visual metadata in Encord.

Export and Integration

Encord makes it easy to export annotated data in formats compatible with popular NLP frameworks and tools such as spaCy, Hugging Face Transformers, TensorFlow and many more. Supported formats include JSON, CSV, and JSONL (ideal for training spaCy models). This makes it straightforward to feed the annotated data into model training pipelines.
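Annotation tools typically store spans as character offsets, while many training pipelines expect one tag per token. The sketch below shows that alignment step. It assumes a hypothetical JSON record with `text` and `entities` fields, which is not any specific tool's export schema, and uses a simple regex tokenizer. Spans that do not line up with token boundaries are not handled here, so a real pipeline should validate alignment.

```python
import json
import re


def char_spans_to_bio(text, spans):
    """Align (start, end_exclusive, label) character spans to regex tokens as BIO tags."""
    tokens, tags = [], []
    for m in re.finditer(r"\w+|[^\w\s]", text):  # words, or single punctuation marks
        tokens.append(m.group())
        tag = "O"
        for start, end, label in spans:
            if m.start() >= start and m.end() <= end:  # token lies fully inside the span
                tag = ("B-" if m.start() == start else "I-") + label
                break
        tags.append(tag)
    return tokens, tags


# Hypothetical exported record; the field names are assumptions.
record = json.loads('{"text": "Marie Curie lived in Paris.", "entities": [[0, 11, "PER"], [21, 26, "LOC"]]}')
tokens, tags = char_spans_to_bio(record["text"], record["entities"])
print(list(zip(tokens, tags)))
```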

Challenges in NER

NER can accurately identify entities such as names, organizations, and locations in unstructured text, but it still faces several challenges:

Ambiguity

Ambiguity arises when a word or phrase can have multiple meanings depending on its context. NER models can struggle to correctly classify such entities, especially in the absence of sufficient context. There are two main types of ambiguity:

- Lexical Ambiguity: Words that can belong to multiple categories (e.g., person, organization, or location).

- Contextual Ambiguity: Entities that require surrounding text to determine their exact type.

Example:

"Jordan" used as a place name: refers to a location (the country).

"Jordan" in "Jordan shoes": refers to an organization (a brand name).

Context-sensitive words require language models capable of understanding relationships in the text. Traditional rule-based models struggle with ambiguous entities due to limited contextual awareness.

Nested Entities

Nested entities occur when one entity is embedded within another, creating hierarchical structures. This challenge is common in domains like legal, biomedical, or financial text.

Example:

University of California, Berkeley: Organization (outer entity).

Berkeley: Location (nested entity within the organization name).

Traditional NER models often assume that entities do not overlap, leading to errors when an entity is nested. Nested structures require advanced models that can handle multiple layers of entities (e.g., transformer-based approaches or dependency parsers).
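The limitation is easy to see with spans. A flat BIO sequence assigns exactly one tag per token, so "Berkeley" cannot be both `I-ORG` (part of the university name) and `B-LOC`. A span-based representation can keep both. Below is a sketch that finds nested pairs among end-exclusive token spans. The span list is illustrative.

```python
def nested_pairs(spans: list[tuple[int, int, str]]) -> list[tuple[tuple, tuple]]:
    """Return (outer, inner) pairs where inner lies inside outer (end-exclusive token offsets)."""
    return [
        (outer, inner)
        for outer in spans
        for inner in spans
        if outer != inner and outer[0] <= inner[0] and inner[1] <= outer[1]
    ]


tokens = ["University", "of", "California", ",", "Berkeley"]
spans = [(0, 5, "ORG"), (4, 5, "LOC")]  # the whole name, and "Berkeley" inside it
for outer, inner in nested_pairs(spans):
    print(" ".join(tokens[outer[0]:outer[1]]), "contains", " ".join(tokens[inner[0]:inner[1]]))
```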

Entity Boundary Detection

Entity boundary detection involves identifying the exact start and end positions of an entity. Errors can occur when entities contain compound phrases or when boundaries are unclear.

Example:

Correct entity: "Eric Adams" -> PERSON

Incorrect boundary: "New York City Mayor Eric" -> partial extraction (includes the title and misses "Adams")

Compound entities or multi-word entities can confuse models. Entity boundaries may vary depending on language structure and dataset consistency.
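Boundary errors are why some evaluation schemes distinguish exact matches from partial overlaps. Below is a minimal sketch of that distinction, assuming end-exclusive token offsets. Under strict exact-match scoring, as in the precision and recall calculation earlier, a partial match counts as both a false positive and a false negative.

```python
def match_type(gold: tuple[int, int, str], pred: tuple[int, int, str]) -> str:
    """Compare a predicted span with a gold span, both (start, end_exclusive, label)."""
    if pred == gold:
        return "exact"
    overlaps = pred[0] < gold[1] and gold[0] < pred[1]
    if overlaps and pred[2] == gold[2]:
        return "partial"  # right type, wrong boundaries
    return "no match"


tokens = ["New", "York", "City", "Mayor", "Eric", "Adams"]
gold = (4, 6, "PER")  # "Eric Adams"
for pred in [(4, 6, "PER"), (0, 5, "PER")]:
    print(" ".join(tokens[pred[0]:pred[1]]), "->", match_type(gold, pred))
# Eric Adams -> exact
# New York City Mayor Eric -> partial
```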

Domain-Specific Entities

NER models trained on general-purpose corpora (like CoNLL-2003) often fail to identify entities in domain-specific text, such as medical, legal, or financial documents.

Example:

Entities: "metformin" -> (MEDICATION), "Type 2 diabetes" -> (DIAGNOSIS)

General-purpose models may not recognize "metformin" or "Type 2 diabetes" as entities.

Entities in specialized domains require custom tagging schemas and training data. Annotating large domain-specific datasets is time-consuming and expensive.

Language and Morphological Variations

NER models may face challenges with languages that have complex grammatical structures, lack capitalization cues, or feature multiple inflected forms of words.

Example:

Sentence: "steve jobs was the co-founder of apple inc."

Challenge: Models relying on capitalization may miss "steve jobs" as a PERSON.

Some languages (e.g., German, Finnish) have inflected words, where entity names can change forms depending on usage. Standard NER models trained on English datasets may struggle with non-English text without additional training.

Key Takeaways

- NER identifies and classifies entities like Person, Organization, Location, and Date in text.

- The NER process involves text preprocessing, feature extraction, and contextual analysis using models.

- NER uses tagging schemes like BIO (Begin-Inside-Outside) to mark entity boundaries.

- NER tools help annotate training data for models. Popular tools include Encord, Prodigy, and Doccano.

- NER is used in information extraction, chatbots, customer feedback analysis, and healthcare and in many other applications.

- Tools like Encord simplify annotation, making it easier to build accurate NER models.


Frequently asked questions

Named Entity Recognition (NER) is a Natural Language Processing (NLP) technique used to identify and classify named entities in unstructured text into predefined categories such as Person, Organization, Location, Date, and more.

Tagging schemes define how entities are marked in text.

NER is used in various applications such as:

Information Extraction: Extracting key information from text.

Chatbots: Understanding user queries.

Customer Feedback Analysis: Analyzing opinions and reviews.

Healthcare: Identifying medical terms and patient details.

NER is critical for structuring unstructured data, enabling downstream tasks like information retrieval, machine translation, and sentiment analysis.

Encord automates the annotation process through pre-labeling workflows, which help address challenges related to quality, time, and cost. The platform allows integration of different open-source large language models (LLMs) for initial pre-labeling, followed by a review stage where another LLM acts as a judge before human verification. This multi-stage approach maximizes efficiency and ensures high-quality annotations.

Encord offers a robust annotation platform designed for enterprise needs, providing features that go beyond basic labeling. While open source tools may be suitable for initial stages, Encord is built to handle scaling challenges and offers enhanced support and reliability, making it ideal for teams looking to transition from early testing to production-level workloads.

Encord offers advanced functionalities that can enhance the efficiency of your annotation processes. By utilizing Encord's platform, you can leverage scalability, consistency, and access to specialized annotators, which can be a strategic advantage over maintaining in-house solutions.

Encord includes various features designed to enhance the speed and efficiency of the annotation process, such as streamlined workflows, automated tools, and the ability to manage multiple annotation projects simultaneously, making it easier to keep up with growing data demands.

Encord assists in the extraction of meaningful information from unstructured data through an efficient data pipeline. This involves organizing the data into accessible formats for NLP models and leveraging standardized dictionaries to collect concepts and relations.

Encord supports various text-based annotations, including named entity recognition, keyword tagging, and structured data extraction. This versatility allows users to tailor their annotation processes to meet specific project requirements and improve model accuracy.

Encord provides a variety of annotation tools, including custom-built solutions that can be tailored to specific project requirements. Users can also leverage open-source tools, enhancing the platform's flexibility and adaptability for diverse annotation tasks.

Encord allows users to make real-time adjustments to their annotation workflows, accommodating changes in taxonomy and definitions as needed. This flexibility ensures that the annotations remain aligned with evolving project requirements and objectives.

Encord includes features like pre-labeling with user-specific models and tools for making adjustments post-pre-labeling. These functionalities are designed to improve the speed and accuracy of the annotation process, ultimately enhancing model quality.

Yes, Encord's platform allows organizations to transition from 100% manual annotation to automated processes gradually. By leveraging AI and machine learning, teams can improve efficiency and reduce the manual workload over time.