
Contents

Why Speaker Diarization Matters in Audio and AI

Speaker Identification vs Diarization

Applications of Speaker Diarization

Criteria to evaluate Speaker Diarization

How Encord is Used for Speaker Diarization

Key Takeaways

Encord Blog

What is Speaker Diarization?


Imagine you are listening to the recording of an important team meeting that you missed. The conversation flows naturally: different voices chime in, ideas bounce back and forth, questions are asked and answered. But as the minutes tick by, you grow frustrated and find yourself asking:

“Wait, was it the client or the product manager who raised that concern?”

Without knowing who said what, it's just a sea of words. Now imagine if the recording could automatically tell you who said each line.

Suddenly, the conversation has structure, clarity, and meaning. That is speaker diarization: a technology that teaches machines to separate and label the voices in an audio stream, much as your brain does in real life.

Speaker diarization is the process of partitioning an audio stream into homogeneous segments according to the identity of the speaker. In simple terms, it answers the question "Who spoke when?"

Speaker diarization (Source)

This technology is important for analyzing multi-speaker audio recordings such as meetings, phone calls, interviews, podcasts, and even surveillance audio. Speaker diarization segments an audio signal and clusters the segments so that each part is associated with a unique speaker. It does not require prior knowledge of the speakers' identities, and in general it does not need to know the number of speakers in advance either. The typical output of a speaker diarization system is a list of time-stamped segments, each tagged with an anonymous label such as "Speaker 1" or "Speaker 2".

It essentially adds structure to unstructured audio, providing metadata that can be used for further analysis, indexing, or transcription.
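As a concrete illustration, diarization output is often stored in the RTTM (Rich Transcription Time Marked) format used in NIST evaluations and accepted by many diarization toolkits: one `SPEAKER` line per segment, giving the file ID, channel, onset, duration, and speaker label. The minimal Python sketch below writes segments in that layout. The function name `to_rttm`, the file ID `meeting`, and the labels `speaker_1`/`speaker_2` are hypothetical; the labels use underscores because RTTM fields are whitespace-separated.

```python
def to_rttm(file_id, segments):
    """Format (start_s, end_s, label) segments as RTTM SPEAKER lines."""
    lines = []
    for start, end, label in sorted(segments):
        # Fields: type file channel onset duration ortho stype name conf slat
        lines.append(
            f"SPEAKER {file_id} 1 {start:.2f} {end - start:.2f} "
            f"<NA> <NA> {label} <NA> <NA>"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical meeting: one speaker for 0-130 s, another for 130-240 s.
    segments = [(0.0, 130.0, "speaker_1"), (130.0, 240.0, "speaker_2")]
    print(to_rttm("meeting", segments))
```

Each line records an onset and a duration rather than an end time, so the second segment above is written as starting at 130.00 s and lasting 110.00 s.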

Why Speaker Diarization Matters in Audio and AI

In our increasingly audio-driven world, from smart assistants and call centers to podcasts and meetings, it is not enough for machines to just hear what is being said. They also need to understand who is speaking. Speaker diarization adds this critical layer of intelligence, making audio easier to understand, organize, and work with across numerous real-world applications. Here are some of the main reasons it matters.

- Enhances Speech Recognition: In Automatic Speech Recognition (ASR), speaker diarization improves transcript quality by associating text with individual speakers. This makes the transcript more readable and contextually meaningful, especially in conversations where speakers overlap.

- Boosts Conversational AI Systems: Conversational AI systems (such as virtual assistants or call center bots) benefit from diarization because it helps them understand user intent in multi-speaker conversations. It lets them differentiate between users and agents and respond more appropriately.

- Critical in Meeting Summarization: Speaker diarization is essential for generating intelligent meeting notes. It enables systems to group speech by speaker, which is important for action-item tracking, sentiment analysis, and speaker-specific summaries.

- Privacy and Security: In surveillance and legal audio analysis, speaker diarization helps isolate individual speakers for identity verification, anomaly detection, or behavior analysis, often without needing to know who each speaker is.

- Content Indexing and Search: Speaker diarization enables better indexing and retrieval of audio content for media houses, podcasts, and broadcasting companies. Users can search based on speaker turns or speaker-specific quotes.

Speaker Identification vs Diarization

Speaker identification and speaker diarization both analyze who is speaking in an audio clip, but they solve different problems and are used in different scenarios. Let's look at the difference between the two.

What is Speaker Identification?

In speaker identification, a person's voice in an audio recording is recognized and matched to a real identity. In other words, it answers the question "Who is speaking right now?"

Speaker Identification (Source)

Speaker identification is a supervised task in which a pre-enrolled list of known speakers with voice samples is required. The system matches the speaker’s voice against the list and identifies them. Speaker identification systems typically work by extracting voice features and comparing them to stored voice profiles. The system knows the possible speakers ahead of time.
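The matching step can be sketched as a nearest-profile search over speaker embeddings. This is a minimal Python illustration, not a production method: the three-dimensional vectors stand in for real speaker embeddings (such as x-vectors produced by a neural network), and the names `identify` and `enrolled` and the 0.7 cosine-similarity threshold are hypothetical choices. Returning `None` below the threshold models a voice that matches nobody in the database.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def identify(embedding, enrolled, threshold=0.7):
    """Return the enrolled name whose profile is most similar, or None if no
    profile reaches the (hypothetical) similarity threshold."""
    best_name, best_score = None, -1.0
    for name, profile in enrolled.items():
        score = cosine(embedding, profile)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


if __name__ == "__main__":
    # Toy 3-D "voice profiles"; real embeddings typically have hundreds of dimensions.
    enrolled = {"Alice": [0.9, 0.1, 0.0], "Bob": [0.1, 0.9, 0.2]}
    print(identify([0.85, 0.15, 0.05], enrolled))  # Alice
    print(identify([0.0, 0.0, 1.0], enrolled))     # None
```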

For example, imagine a voice-controlled security system at home. When a user says, “Unlock the door,” the system not only recognizes the command but also checks who said it. If it matches the voice to an authorized user, the door unlocks. Here, the system is identifying the user's voice by comparing it to known voices it has in its database.

What is Speaker Diarization?

In speaker diarization, the different voices in an audio recording are separated and labeled without necessarily knowing who the speakers are. It answers the question "Who spoke when?"

Speaker Diarization (Source)

Speaker diarization is an unsupervised task that does not need prior enrollment of speaker data. It simply separates the audio into segments and assigns labels like "Speaker 1", "Speaker 2", and so on. The system therefore doesn't know who the speakers are.
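By contrast with identification, diarization has no enrolled profiles to compare against, so it groups segments by similarity and invents labels as it goes. The sketch below uses simple greedy threshold clustering, a simplified stand-in for the agglomerative or spectral clustering that practical systems typically use. The toy embeddings, the 0.8 threshold, and the function name `diarize` are illustrative assumptions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def diarize(embeddings, threshold=0.8):
    """Give each segment embedding an anonymous label ("Speaker 1", ...) by
    greedy threshold clustering: join the most similar existing cluster if it
    is similar enough, otherwise open a new cluster."""
    centroids, counts, labels = [], [], []
    for emb in embeddings:
        scores = [cosine(emb, c) for c in centroids]
        if scores and max(scores) >= threshold:
            k = scores.index(max(scores))
            counts[k] += 1
            # Update the running mean of the matched cluster.
            centroids[k] = [c + (e - c) / counts[k] for c, e in zip(centroids[k], emb)]
        else:
            centroids.append(list(emb))
            counts.append(1)
            k = len(centroids) - 1
        labels.append(f"Speaker {k + 1}")
    return labels


if __name__ == "__main__":
    segment_embeddings = [[1.0, 0.0], [0.0, 1.0], [0.95, 0.05], [0.1, 0.9]]
    print(diarize(segment_embeddings))
    # ['Speaker 1', 'Speaker 2', 'Speaker 1', 'Speaker 2']
```

Note that the labels only say which segments sound alike; nothing in the output says who "Speaker 1" actually is.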

For example, suppose you have a recording of a team meeting for which you want to create a transcript. You do not care about matching voices to specific names; you just want to understand the flow of conversation and know when one speaker stopped and another started. So the system outputs a transcript in which each turn is labeled "Speaker 1", "Speaker 2", and so on.

You can now read the transcript with clear speaker turns, even if you don’t know who the actual speakers are.

| Feature | Speaker Identification | Speaker Diarization |
|---|---|---|
| Goal | Recognize who is speaking | Determine when each speaker speaks |
| Requires Known Voices? | Yes (pre-enrolled database of known speakers) | No (unknown speakers are fine) |
| Output | Speaker name or ID (e.g., "Alice", "Bob") | Speaker labels (e.g., "Speaker 1", "Speaker 2") |
| Use Case | Voice authentication, voice assistants, surveillance | Meeting transcription, podcast analysis, call recordings |
| Type of Task | Supervised | Unsupervised |
| Example Output | “Voice matched to: John Smith” | “0:00–2:10: Speaker 1, 2:10–4:00: Speaker 2” |

Speaker identification is used when there is a need to verify or recognize who is speaking, such as in voice-based login systems, forensic voice matching, or personalized voice assistants.

Speaker diarization, on the other hand, is used when there is a need to analyze conversations with multiple people, such as transcribing meetings, analyzing group discussions, or organizing podcast interviews.

In real-world applications, these two techniques are often used together. For example, in a customer service call, speaker diarization can first separate the customer and agent voices. Then, speaker identification can confirm which agent handled the call, allowing for quality review and personalization.

Applications of Speaker Diarization

Speaker diarization plays an important role in audio understanding by breaking conversations down into "who spoke when", even when the speakers are not known in advance. The following are key real-world applications of speaker diarization.

Meeting Transcription and Summarization

In corporate settings, meetings often involve multiple people contributing ideas, sharing updates, and making decisions. Speaker diarization helps separate speaker voices, making transcriptions clearer and summaries more meaningful. For example, a team might use a meeting transcription tool such as Otter.ai or Microsoft Teams, which applies speaker diarization to tag each speaker's contribution. Team members can then see who said what, and the tool can generate action items per speaker, making it easy for absent participants to review the discussion.

Otter.ai transcription (Source)

Call Center Analytics

Customer service calls usually involve two speakers: the agent and the customer. By separating who is talking, speaker diarization makes it possible to monitor conversations for agent performance, customer satisfaction, and service issues. For example, a telecom company's customer service center can diarize its support call recordings. The system then analyzes whether the agent followed the troubleshooting script, whether the customer sounded frustrated (detected through emotion analysis on the customer's segments), and how much time the agent spoke versus the customer. This helps improve customer service quality.
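Measuring how much time the agent and the customer spoke reduces to summing segment durations per label. A minimal sketch, assuming the diarized segments have already been mapped to the hypothetical labels `agent` and `customer`. Overlapping speech is counted for each speaker, so the shares are relative to total speaker time rather than to the call length.

```python
from collections import defaultdict


def talk_time(segments):
    """Map each speaker label to (seconds spoken, share of total speaker time)."""
    totals = defaultdict(float)
    for start, end, speaker in segments:
        totals[speaker] += end - start
    speech = sum(totals.values())
    return {spk: (secs, secs / speech) for spk, secs in totals.items()}


if __name__ == "__main__":
    call = [(0.0, 30.0, "agent"), (30.0, 40.0, "customer"), (40.0, 50.0, "agent")]
    for speaker, (secs, share) in talk_time(call).items():
        print(f"{speaker}: {secs:.0f} s ({share:.0%})")
```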

Observe.AI uses diarization in customer-agent calls to measure agent speaking time, detect interruptions, track emotional tone per speaker, and improve coaching for call center agents based on how well they interact with customers.
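One simple way to flag interruptions from diarization output is to look for a speaker starting while another speaker's segment is still running. The function below is an illustrative heuristic, not Observe.AI's method; the name `find_interruptions` and the 0.2-second minimum overlap (meant to ignore small boundary jitter) are assumptions.

```python
def find_interruptions(segments, min_overlap=0.2):
    """Return (time, interrupter, interrupted) tuples for every segment that
    starts while a different speaker's earlier segment is still running."""
    events = []
    ordered = sorted(segments)
    for i, (start, end, speaker) in enumerate(ordered):
        for prev_start, prev_end, prev_speaker in ordered[:i]:
            overlap = min(end, prev_end) - start
            if prev_speaker != speaker and prev_start < start and overlap >= min_overlap:
                events.append((start, speaker, prev_speaker))
    return events


if __name__ == "__main__":
    call = [(0.0, 5.0, "agent"), (4.0, 8.0, "customer"), (8.0, 10.0, "agent")]
    print(find_interruptions(call))  # [(4.0, 'customer', 'agent')]
```

The nested loop is quadratic in the number of segments, which is fine for a sketch but worth replacing with a sweep over sorted boundaries for long recordings.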

Observe AI speaker diarization (Source)

Broadcast Media Processing

News broadcasts, interviews, and talk shows involve multiple speakers. Diarization is used to automatically label and separate speech segments for archiving, searching, subtitling, or content moderation. For example, during a televised political debate, speaker diarization automatically segments speech between Candidate A, Candidate B, and the moderator. Later, when a journalist searches for "closing statement by Candidate A", the system can retrieve it quickly because it knows who spoke when. Veritone Media applies speaker diarization to radio talk shows and TV interviews so that archives can be searched by speaker.

Podcast and Audiobook Indexing

Podcasts and audiobooks often feature multiple hosts, guests, or characters. Speaker diarization helps index content by speaker, making long audio recordings easy to search and navigate. For example, a podcast episode features three hosts discussing technology. Speaker diarization allows listeners to jump directly to Host 2's thoughts on AI and view a timeline showing when each speaker talks. This makes podcasts more interactive and searchable, like chapters in a book. Descript applies speaker diarization to podcasts so that users can edit episodes easily, for example by removing filler words or editing a specific guest's section without disturbing the flow.

Courtroom Proceedings and Legal Documentation

In legal settings, accurate attribution of who spoke is critical. Speaker diarization helps transcripts properly record testimony, objections, and judicial rulings with far less manual effort. For example, during a court trial, speaker diarization can help distinguish between instructions from the judge, arguments from a defense attorney, and testimony from a witness. This produces the clean transcript needed for official legal records and appeals, supporting fairness and accountability. Verbit specializes in legal transcription and uses speaker diarization to automatically separate attorneys, judges, and witnesses in court recordings, helping produce official court transcripts with clear speaker attribution.

Health and Therapy Session Monitoring

In mental health counseling and therapy, speaker diarization can help therapists analyze sessions, track patient participation, and even assess changes in patient speech patterns over time. For example, a psychologist records therapy sessions with consent. Speaker diarization can show that the patient spoke 60% of the time, answering open-ended questions from the therapist. Over months, the analysis reveals that the patient started speaking longer and more confidently, which is a sign of progress. Eleos Health records therapy sessions (with client consent) and diarizes who is speaking, therapist or client. It analyzes engagement metrics such as speaking ratios, pauses, and emotional markers, helping therapists understand client progress over time.

Eleos Health records therapy sessions (Source)

Speaker diarization can be used in many other applications across various domains. It has become a critical enabler for making audio and voice-driven systems more intelligent, personal, and practical. From automating meeting notes and customer service analytics to powering smarter healthcare systems and legal services, speaker diarization plays a foundational role wherever "who is speaking" matters.

Criteria to evaluate Speaker Diarization

Once a speaker diarization system is built, its performance needs to be evaluated. Evaluation checks how accurately the system splits speech into segments and labels each segment with the correct speaker over time. Three metrics are commonly used to evaluate speaker diarization.

Diarization Error Rate (DER)

The Diarization Error Rate (DER) is the traditional and most widely used metric for evaluating the performance of speaker diarization systems. DER measures the fraction of the total reference speech time that the system labels incorrectly. It is computed as the sum of three types of errors:

- False alarms (FA) - speech detected where none exists

- Missed speech - speech present but not detected

- Speaker confusion - speech correctly detected but attributed to the wrong speaker

The formula for DER is:

$$\text{DER} = \frac{\text{FA} + \text{Missed speech} + \text{Speaker confusion}}{\text{Total reference speech time}}$$

To ensure fair speaker label matching between the system output and the ground truth, the Hungarian algorithm is used to find the best one-to-one mapping between hypothesis speakers and reference speakers. Additionally, the evaluation allows for a 0.25-second "no-score collar" around reference segment boundaries to account for annotation inconsistencies and timing errors by human annotators. This collar means that slight boundary mismatches are not penalized. While DER is widely accepted, it has some limitations. DER can exceed 100% if the system makes severe errors, and dominant speakers may disproportionately affect the score. Therefore, while DER is highly correlated with overall system performance, it sometimes fails to reflect fairness across all speakers.
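To make the computation concrete, here is a minimal frame-based DER sketch in Python. It discretizes time into 10 ms frames (an assumed resolution), finds the speaker mapping that maximizes overlap, and counts missed speech, false alarms, and confusion per frame, with overlapping speakers counted individually. Two simplifications to note: the mapping is found by brute force over permutations instead of the Hungarian algorithm, which is only practical for a handful of speakers, and no forgiveness collar is applied. All function names are illustrative.

```python
from itertools import permutations

FRAME = 0.01  # assumed frame length in seconds (10 ms)


def to_frames(segments, n_frames):
    """For each frame, the set of speaker labels active in it."""
    frames = [set() for _ in range(n_frames)]
    for start, end, speaker in segments:
        for i in range(round(start / FRAME), round(end / FRAME)):
            frames[i].add(speaker)
    return frames


def der(reference, hypothesis):
    """Frame-based DER for (start_s, end_s, label) segments, without a collar."""
    n = round(max(end for _, end, _ in reference + hypothesis) / FRAME)
    ref_frames, hyp_frames = to_frames(reference, n), to_frames(hypothesis, n)
    refs = sorted({spk for _, _, spk in reference})
    hyps = sorted({spk for _, _, spk in hypothesis})

    # overlap[h][r]: number of frames where hypothesis h and reference r co-occur
    overlap = {h: {r: 0 for r in refs} for h in hyps}
    for rf, hf in zip(ref_frames, hyp_frames):
        for h in hf:
            for r in rf:
                overlap[h][r] += 1

    # Best one-to-one mapping by brute force (a stand-in for the Hungarian
    # algorithm); None pads the pool so extra hypothesis speakers stay unmapped.
    pool = refs + [None] * max(0, len(hyps) - len(refs))
    best = max(
        permutations(pool, len(hyps)),
        key=lambda perm: sum(overlap[h][r] for h, r in zip(hyps, perm) if r is not None),
    )
    mapping = dict(zip(hyps, best))

    miss = false_alarm = confusion = total = 0
    for rf, hf in zip(ref_frames, hyp_frames):
        n_ref, n_hyp = len(rf), len(hf)
        n_correct = sum(1 for h in hf if mapping[h] in rf)
        total += n_ref
        miss += max(0, n_ref - n_hyp)
        false_alarm += max(0, n_hyp - n_ref)
        confusion += min(n_ref, n_hyp) - n_correct
    return (miss + false_alarm + confusion) / total


if __name__ == "__main__":
    reference = [(0.0, 2.0, "A"), (2.0, 4.0, "B")]
    hypothesis = [(0.0, 2.5, "spk1"), (2.5, 4.0, "spk2")]
    print(f"DER = {der(reference, hypothesis):.1%}")  # DER = 12.5%
```

In the example, the hypothesis moves the speaker change from 2.0 s to 2.5 s, so 0.5 s of the 4 s of reference speech is attributed to the wrong speaker, giving a DER of 12.5%.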

Jaccard Error Rate (JER)

The Jaccard Error Rate (JER) was introduced in the DIHARD II evaluation to overcome some of the shortcomings of DER. JER aims to equalize the contribution of each speaker to the overall error, treating all speakers fairly regardless of how much they talk. Instead of calculating a global error over all time segments, JER first calculates a per-speaker error rate and then averages these rates over the reference speakers. For each reference speaker, the error is the sum of that speaker's false alarm and missed speech time, divided by the total duration of the union of the speaker's reference segments and the system segments mapped to that speaker. It is mathematically expressed by:

$$\text{JER} = \frac{1}{N} \sum_{i=1}^{N} \frac{\text{FA}_i + \text{MISS}_i}{\text{TOTAL}_i}$$

where N is the number of reference speakers. Importantly, speaker confusion errors that appear in DER are reflected in the false alarm component in the JER calculation. Unlike DER, JER is bounded between 0% and 100%, making it more interpretable. However, if a subset of speakers dominates the conversation, JER tends to report higher error rates than DER. JER provides a balanced, speaker-centric evaluation method that complements DER.
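A JER sketch in Python is shown below. To keep it short, the reference-to-system speaker mapping is passed in rather than computed (in practice it is the optimal one-to-one mapping), time is discretized into assumed 10 ms frames, and an unpaired reference speaker scores an error of 1. All names are illustrative.

```python
FRAME = 0.01  # assumed frame length in seconds (10 ms)


def active_frames(segments, speaker):
    """Set of frame indices in which `speaker` is talking."""
    frames = set()
    for start, end, spk in segments:
        if spk == speaker:
            frames.update(range(round(start / FRAME), round(end / FRAME)))
    return frames


def jer(reference, hypothesis, mapping):
    """JER given a reference -> hypothesis speaker mapping (missing = unpaired)."""
    errors = []
    for r in sorted({spk for _, _, spk in reference}):
        ref = active_frames(reference, r)
        h = mapping.get(r)
        hyp = active_frames(hypothesis, h) if h is not None else set()
        false_alarm = len(hyp - ref)  # system time not belonging to this speaker
        miss = len(ref - hyp)         # this speaker's time the system did not attribute
        errors.append((false_alarm + miss) / len(ref | hyp))  # TOTAL_i = union
    return sum(errors) / len(errors)


if __name__ == "__main__":
    reference = [(0.0, 2.0, "A"), (2.0, 4.0, "B")]
    hypothesis = [(0.0, 2.5, "spk1"), (2.5, 4.0, "spk2")]
    print(f"JER = {jer(reference, hypothesis, {'A': 'spk1', 'B': 'spk2'}):.1%}")  # JER = 22.5%
```

With reference speakers A (0 to 2 s) and B (2 to 4 s) and a system that places the change at 2.5 s, speaker A's error is 0.5/2.5 = 0.2 and speaker B's is 0.5/2.0 = 0.25, so JER is 22.5%.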

Word-Level Diarization Error Rate (WDER)

WDER is a metric designed to evaluate the performance of systems that jointly perform Automatic Speech Recognition (ASR) and Speaker Diarization (SD). Unlike traditional metrics that assess errors based on time segments, WDER focuses on the accuracy of speaker labels assigned to each word in the transcript. This word-level evaluation is particularly relevant for applications where both the content of speech and the identity of the speaker are crucial, such as in medical consultations or legal proceedings.

It is computed as:

$$\text{WDER} = \frac{\text{SIS} + \text{CIS}}{S + C}$$

where

- SIS (Substitutions with Incorrect Speaker tokens): The number of words where the ASR system incorrectly transcribed the word and assigned it to the wrong speaker.

- CIS (Correct words with Incorrect Speaker tokens): The number of words correctly transcribed by the ASR system but assigned to the wrong speaker.

- S (Substitutions): The total number of words where the ASR system incorrectly transcribed the word.

- C (Correct words): The total number of words correctly transcribed by the ASR system.

This metric specifically evaluates the accuracy of speaker assignments for words that were either correctly or incorrectly recognized by the ASR system. However, it does not account for insertions or deletions, because these errors have no aligned pair of reference and hypothesis words to compare. Therefore, WDER should be considered alongside the traditional Word Error Rate (WER) to obtain a comprehensive understanding of system performance.
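Given an ASR alignment, WDER is a simple count. This sketch assumes the alignment has already been computed and that hypothesis speaker labels have already been mapped onto reference labels; each input tuple (a hypothetical format) holds a reference word, its reference speaker, the aligned hypothesis word, and its hypothesized speaker. Insertions and deletions are simply left out of the input, matching the definition above.

```python
def wder(aligned):
    """WDER = (SIS + CIS) / (S + C) over aligned (ref_word, ref_speaker,
    hyp_word, hyp_speaker) tuples; insertions and deletions are excluded."""
    sis = cis = s = c = 0
    for ref_word, ref_speaker, hyp_word, hyp_speaker in aligned:
        wrong_speaker = ref_speaker != hyp_speaker
        if ref_word == hyp_word:  # correctly recognized word
            c += 1
            cis += int(wrong_speaker)
        else:  # substitution
            s += 1
            sis += int(wrong_speaker)
    return (sis + cis) / (s + c)


if __name__ == "__main__":
    aligned = [
        ("hello", "A", "hello", "A"),
        ("there", "A", "their", "B"),  # substitution, wrong speaker (SIS)
        ("how", "B", "how", "A"),      # correct word, wrong speaker (CIS)
        ("are", "B", "are", "B"),
    ]
    print(wder(aligned))  # 0.5
```

In the example, one of the three correct words and the single substitution carry the wrong speaker, so WDER = (1 + 1) / (1 + 3) = 0.5.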

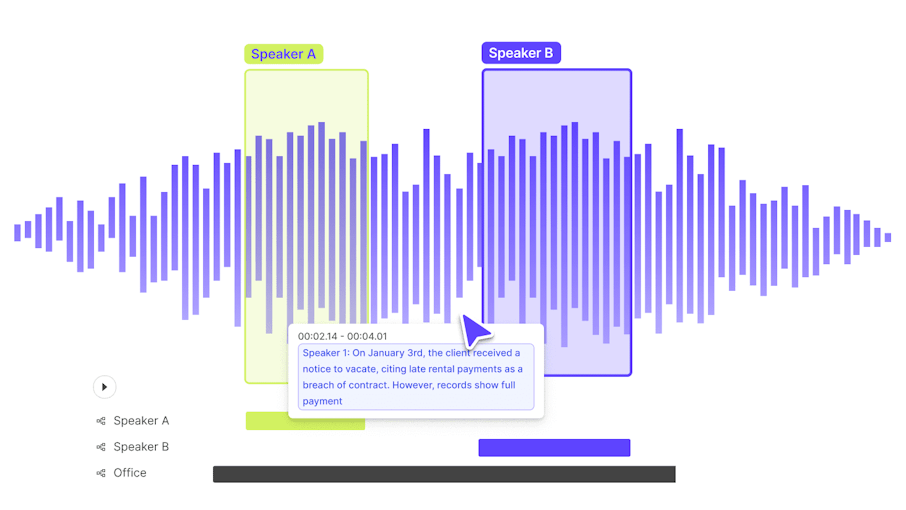

How Encord is Used for Speaker Diarization

Encord is a comprehensive multimodal AI data platform that facilitates efficient management, curation, and annotation of large-scale unstructured datasets, including audio files. Its audio annotation tool is well suited to complex tasks like speaker diarization, which involves segmenting audio recordings to identify and label individual speakers. The following features make Encord useful for annotating data for speaker diarization.

Encord Audio Annotation (Source)

Precise Temporal Annotation

Encord allows annotators to label audio segments with millisecond-level precision. This is important for accurately marking the start and end times of each speaker's turn.

Support for Overlapping Speech

In real-world scenarios like meetings or interviews, speakers often talk over each other. The Encord platform supports overlapping annotations, enabling annotators to label multiple speakers talking simultaneously. This helps models trained on such data handle crosstalk and interruptions effectively.

Layered Annotations

Beyond identifying who spoke when, Encord allows for layered annotations, where additional information such as speaker emotion, language, or background noise can be tagged alongside speaker labels.

AI-Assisted Pre-Labeling

Encord integrates with state-of-the-art models such as OpenAI's Whisper and Google's AudioLM. These models can generate preliminary transcriptions and labels, which annotators can then review and refine, reducing manual effort.

Collaborative Annotation Environment

The Encord platform is designed for team collaboration, allowing multiple annotators and reviewers to work on the same project simultaneously. Features like real-time progress tracking, change logs, and review workflows help maintain consistency and quality across large annotation projects.

Scalability and Integration

Encord supports various audio formats, including WAV, MP3, FLAC, and EAC3, and integrates with cloud storage solutions like AWS, GCP, and Azure. This flexibility allows organizations to scale their annotation efforts efficiently and integrate Encord into their existing data pipelines.

Key Takeaways

- Speaker diarization separates an audio recording into segments based on who is speaking, answering "Who spoke when?" without needing to know their identities.

- Speaker diarization adds structure to audio, improves transcript quality, and enhances conversational AI.

- Speaker identification matches a voice to a known person, while diarization only separates and labels speakers without requiring pre-known identities.

- Speaker diarization is used in meetings, call centers, podcasts, legal transcription, media broadcasting, and healthcare monitoring to organize and analyze conversations.

- Speaker diarization systems are evaluated using metrics like DER, JER, and WDER.

- Encord helps streamline audio annotation for building speaker diarization models.


Frequently asked questions

Speaker diarization is the process of segmenting an audio recording based on who is speaking, effectively answering the question: "Who spoke when?" It separates the audio into labeled chunks, like "Speaker 1", "Speaker 2", etc., even when the speakers are unknown.

Speaker Identification determines who is speaking by matching the voice to a known profile (e.g., "This is Alice").

Speaker Diarization simply segments and labels the speakers without identifying them (e.g., "Speaker 1", "Speaker 2").

Yes. It’s an unsupervised process and does not require prior enrollment of known speaker voices. It just distinguishes between different speakers in the audio and labels them generically.

Yes. For example, in a call center, diarization can separate agent and customer voices, and identification can determine which specific agent handled the call. Combining both adds depth and personalization to audio analysis.

Encord provides robust features for audio annotation, catering specifically to tasks like diarization and automatic speech recognition (ASR) in various languages, including Arabic. The platform facilitates efficient audio labeling through streamlined workflows that can accommodate the unique requirements of Arabic language processing.

Encord offers robust data annotation capabilities that allow for the acquisition of channel-separated audio data. This feature enables users to create datasets with two speakers discussing various topics over separate channels, ensuring a diverse range of accents and conversational energy levels for improved model training.

Encord's platform facilitates precise audio labeling through advanced annotation tools and quality assurance processes. By leveraging a collaborative approach, Encord can help identify and correct human errors in labels, which is crucial for improving the performance of speaker diarization systems.

Yes, Encord includes capabilities for noise reduction and audio processing, such as voice signature recognition and beamforming. These features enhance the clarity of audio tracks, allowing users to separate dialogues even when multiple speakers are communicating simultaneously.

Yes, Encord supports multimodal data annotation, which is vital for handling various data types. Our platform is equipped to manage complex annotation tasks, such as speaker diarization for audio data, allowing you to annotate time ranges and improve your product offerings.

Yes, speaker names can be changed during the annotation process. This is done by defining the speaker name as an attribute in the ontology, allowing users to update the name while annotating without needing to return to the setup.

In Encord, foreign speech and unintelligible speech can be flagged using a checklist interface. Users can specify when these attributes are present, ensuring comprehensive annotations that meet your project's requirements.