Contents

AI-Assisted Surgical Robotics

Preoperative planning

Intraoperative guidance

Postoperative analysis and training

Encord Blog

The State of AI in Surgical Robotics


In 1985, the PUMA 560 robotic arm made history by assisting the team at Memorial Medical Center during a stereotactic brain biopsy, marking one of the earliest recorded instances of robotic-assisted surgery and astonishing the world. Fast forward to today: surgical robotic systems support surgeons across a growing array of medical interventions, assisting in ways few people imagined a few decades ago.

Over the past eight years alone, the Robotically-Assisted Surgical (RAS) Devices market has expanded from $800 million in 2015 to well over $3 billion today. From prominent healthcare organizations to cutting-edge research institutes, from rapidly growing startups to non-profit initiatives, diverse teams are busy developing innovative surgical robotic systems. Their goal is to enhance surgical efficiency, improve precision and, ultimately, deliver better outcomes for patients.

Recent leaps in computer vision have further spurred this growth: artificial intelligence is rapidly entering the operating room, enabling these systems to better perceive and interpret visual information in real time and to aid surgeons with a wider range of tasks.

This article explores the landscape of AI applications in surgical video analysis, some of the key innovators in the space, and the role of high-quality training data in the development of AI-assisted surgical systems.

AI-Assisted Surgical Robotics

Companies like Intuitive Surgical, creator of the Da Vinci Surgical System, led the way in the 1990s: Da Vinci was first cleared by the FDA for visualization and tissue retraction in 1997, and in 2000 it became the first robotic system cleared for general laparoscopic surgery. With over 6,000 robots installed worldwide and over $6 billion in annual revenue, Intuitive has dominated the surgical robotics industry for the better part of the last 20 years, transforming the industry and enabling patient outcomes that were previously impossible. Yet 2019 marked the start of the expiry of some of its patents, and with it, a wave of new entrants and innovators.

The use of AI-assisted techniques in robotics now spans preoperative planning, intraoperative guidance, and postoperative care, and has advanced significantly thanks to close collaboration among surgeons, programmers, and scientists.

Let’s discuss some of the major real-world applications and teams working in this field — starting with preoperative planning.

Preoperative planning

Preoperative (pre-op) planning includes a range of workstreams, from visualizing the steps of the operation to planning navigation and improving precision. Machine learning and computer vision are used in pre-op planning in many ways: rapidly analyzing patients' tabular and visual data (such as medical records or scans), planning precise trajectories, optimizing incision sites, and gaining more insight into potential complications.

Surgical planning begins with processing and fusing various medical imaging modalities, such as CT, MRI, and ultrasound scans, to generate a comprehensive 3D model of the patient's anatomy. Computer vision algorithms and deep learning models then analyze this visual data to surface recommendations and the risks associated with different surgical steps. Algorithms also help surgeons identify and segment specific anatomical structures and regions of interest in the imaging data (such as organs, blood vessels, abnormalities, and other critical structures within the 3D model). This segmentation is crucial for surgical planning because it provides a clear visualization of the target area. From here, surgeons can explore different surgical approaches and plan the optimal trajectory for instruments and incisions, assessing risk by quantifying the distance or overlap between the planned surgical path and nearby structures.
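
The distance-based risk check in the last step can be made concrete with a little geometry. The sketch below is a hypothetical illustration, not any vendor's planning software: it assumes the planned instrument path is a straight segment from an entry point to a target, that each segmented structure has been exported as a list of 3D points in millimetres (for example, voxel centres from a segmentation mask), and that a 5 mm safety margin is acceptable. `point_to_segment_distance`, `assess_trajectory`, and `margin_mm` are illustrative names.

```python
import math
from typing import Dict, List, Sequence

Point3 = Sequence[float]  # (x, y, z) in millimetres, in an assumed patient coordinate frame


def point_to_segment_distance(p: Point3, a: Point3, b: Point3) -> float:
    """Shortest distance from point p to the line segment a -> b."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:  # degenerate path: entry and target coincide
        return math.dist(p, a)
    # Project p onto the infinite line, then clamp so the closest point stays on the segment.
    t = sum(ap[i] * ab[i] for i in range(3)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)


def assess_trajectory(
    entry: Point3,
    target: Point3,
    structures: Dict[str, List[Point3]],
    margin_mm: float = 5.0,  # hypothetical safety margin
) -> Dict[str, dict]:
    """Report the closest approach of a straight planned path to each segmented structure."""
    report = {}
    for name, points in structures.items():
        min_dist = min(point_to_segment_distance(p, entry, target) for p in points)
        report[name] = {"min_distance_mm": min_dist, "violates_margin": min_dist < margin_mm}
    return report
```

For example, with the entry point at the origin, the target 100 mm along the z-axis, and a vessel point 3 mm to the side of the path, the vessel is reported at 3.0 mm and flagged. Working with point samples keeps the sketch simple; checking full meshes or masks for overlap would be a natural refinement.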

Pre-op data can also be combined with intraoperative data to achieve surgical outcomes not otherwise possible.

One of the most innovative end-to-end platforms is Paradigm™ by Proprio Vision, which just a few days ago announced the successful completion of the world's first light field-enabled spine surgery. Using an array of advanced sensors and cameras, Paradigm captures high-definition multimodal images during surgery and integrates them with preoperative scans to give surgeons real-time mapping of the anatomy. Beyond augmenting navigation during a procedure, Paradigm also collects large amounts of pre-op and intra-op data to inform future surgical decision-making and improve surgical efficiency and accuracy.

Another end-to-end robotic system is Senhance, by Asensus Surgical, which in 2021 was cleared by the FDA for general surgeries. Senhance allows surgeons to create simulations for preoperative planning, while also providing real-time data for intraoperative guidance and generating insightful analytics for postoperative performance assessments and care.

Intraoperative guidance

A recent report by Bain & Company found that over 50% of surgeons surveyed made use of robotic systems in some capacity during general surgeries.

During procedures, where even a slight hand tremor can cause significant harm, image-guided surgery is becoming a requirement. Here, computer vision is often used for instrument tracking and object recognition, whose outputs feed AI models that monitor the procedure and generate guidance, or warnings in case of anomalies such as excessive bleeding or tissue damage.

AI-assisted systems allow surgical robots to locate and follow the movement of surgical instruments, ensuring they are precisely positioned and maneuvered. They can also be used to identify critical structures and masses in the video footage, providing augmented guidance to the surgeon in real time.
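
Following an instrument over time usually means linking per-frame detections into consistent identities. The sketch below is a hypothetical, deliberately simplified version of that idea (production trackers typically add motion models such as Kalman filters, in the style of SORT): it greedily matches each instrument's bounding box in a new frame to the previous frame's boxes by intersection over union (IoU). It assumes an upstream detector already supplies boxes as `(x1, y1, x2, y2)` pixel tuples; `GreedyIoUTracker` and its 0.3 threshold are illustrative choices.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, from an assumed detector


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Assigns stable IDs to instrument boxes by greedy frame-to-frame IoU matching."""

    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self.tracks: Dict[int, Box] = {}
        self._next_id = 0

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        # Score every (track, detection) pair and consider the best overlaps first.
        pairs = sorted(
            ((iou(box, det), tid, j)
             for tid, box in self.tracks.items()
             for j, det in enumerate(detections)),
            reverse=True,
        )
        used_tracks, used_dets = set(), set()
        new_tracks: Dict[int, Box] = {}
        for score, tid, j in pairs:
            if score < self.iou_threshold:
                break  # remaining pairs overlap too little to be the same instrument
            if tid in used_tracks or j in used_dets:
                continue
            new_tracks[tid] = detections[j]
            used_tracks.add(tid)
            used_dets.add(j)
        # Unmatched detections start new tracks.
        for j, det in enumerate(detections):
            if j not in used_dets:
                new_tracks[self._next_id] = det
                self._next_id += 1
        # Tracks without a matching detection in this frame are dropped.
        self.tracks = new_tracks
        return dict(new_tracks)
```

Each call to `update` returns a mapping from a stable track ID to that instrument's current box, which downstream logic (for example, a proximity warning) could consume frame by frame. This sketch drops a track after a single missed frame; a real tracker would usually tolerate brief occlusions.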

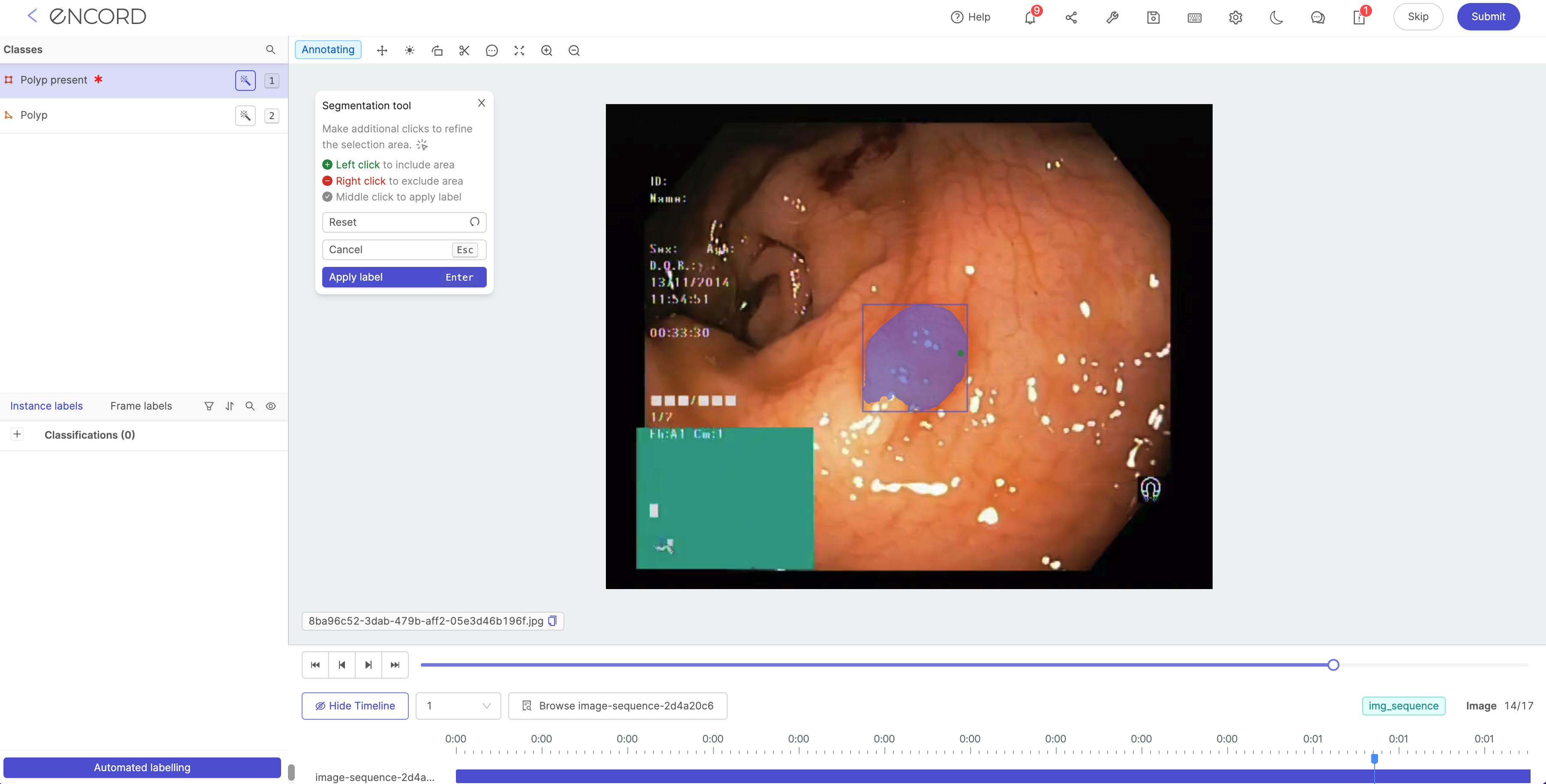

Model-assisted annotations of polyps in the Encord training data platform

General and Minimally Invasive Surgery (MIS)

Robotic-assisted devices are increasingly common in minimally invasive surgery (MIS). The primary objective of MIS is to reduce trauma to the patient's body. The incisions are smaller and often serve as entry points, or ports, through which specialized instruments and a camera known as a laparoscope enter the body. The laparoscope feeds back real-time video, allowing surgeons to view internal structures on a monitor and be guided through the procedure.

MIS employs long, thin instruments with articulating tips that can be maneuvered through the small incisions.

Systems like Dexter (by Distalmotion) are currently used for routine gynecology, urology, and general surgery procedures in Europe. “Surgeons can choose to operate entire procedures robotically, or they can leverage the ability to easily switch between the robotic and laparoscopic modalities to perform specialized tasks such as stapling with their preferred and trusted instruments,” Distalmotion CEO Michael Friedrich said in a recent press release announcing their upcoming US expansion.

Another promising platform is Maestro (built by Moon Surgical), which sits at the intersection of robotic-assisted and conventional surgery: acting as a robotic surgical assistant, it augments the precision and control of laparoscopic surgery, increasing the dexterity of the surgeon's own hands. Just this month, Moon Surgical announced the successful completion of the first 10 laparoscopic surgeries with its Maestro system in France. The procedures, bariatric and abdominal surgeries, were performed by laparoscopic surgeons Dr. Benjamin Cadière and Dr. Georges Debs, who said that the platform provided them with stability and precision that are difficult to match with human assistance.

Many different procedure types are benefiting from innovation in robotic surgical devices. A few examples are:

- Orthopedic Surgery. Orthopedic surgery treats musculoskeletal conditions and disorders, mostly relating to bones and joints. With deep learning and computer vision, surgeons can build a pre-op model to plan patient-specific implants and the precise alignment of bones and joints, and then use a robotic arm to achieve optimal placement during surgery. Stryker, the maker of the MAKO surgical assistant, is one of the pioneers in this space: MAKO turns a CT scan of a patient's joint into a 3D model, measures soft tissue balance, and, during surgery, helps ensure that placement is optimized to the patient's anatomy. Ganymed Robotics is another innovator in orthopedic robotics. The Paris-based startup's team of computer vision and deep learning imaging experts has built a tool that leverages multimodal sensors to improve hard tissue surgery, starting with total knee arthroplasty (TKA).

- Robotic Bronchoscopy. Bronchoscopy helps evaluate and diagnose lung conditions, obtain samples of tissue or fluid, and remove foreign bodies. During a robotic bronchoscopy, the doctor uses a controller to operate a robotic arm, which guides a catheter (a thin, flexible, maneuverable tube equipped with a camera, light, and shape-sensing technology) through the patient’s airways. Noah Medical received FDA clearance earlier this year for its Galaxy System™, a computer vision powered lung navigation system that improves visualization and access during robotic bronchoscopy.

- Microsurgery. Microsurgery uses high-powered microscopes and precision instruments to perform intricate procedures on tiny structures within the body, such as blood vessel, nerve, and tissue repairs. These surgeries operate on anatomical structures that are often invisible to the naked eye, and the surgeons performing them undergo extensive training to develop exceptional hand-eye coordination. A handful of computer vision powered systems are being built to improve the outcomes of these delicate surgeries, like MUSA-3, the microsurgery robot by Microsure, which allows surgeons to use a joystick to control instrument positioning during lymphatic surgery. The system is optimized for tremor-filtered, high-precision movements and uses high-definition on-screen displays to enable real-time image analysis during these exceptionally delicate procedures. The Microsure team raised a €38m Series B earlier this month as they eye FDA clearance in the US and the CE mark in Europe.
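
Tremor filtering and motion scaling are generic teleoperation techniques, and the article does not describe how MUSA-3 implements them, so the sketch below is only an illustration under stated assumptions. It applies a first-order low-pass filter to hand-controller positions, attenuating physiological hand tremor (typically around 8 to 12 Hz) while passing slower intentional motion, and then scales the filtered displacement down so that large hand movements become small instrument movements. `TremorFilter`, the 1 kHz sample rate, the 2 Hz cutoff, and the 10:1 scaling are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


class TremorFilter:
    """First-order low-pass filter plus motion scaling for hand-controller positions."""

    def __init__(self, sample_rate_hz: float, cutoff_hz: float = 2.0, motion_scale: float = 0.1):
        dt = 1.0 / sample_rate_hz
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # time constant of the equivalent RC filter
        self.alpha = dt / (rc + dt)  # smoothing factor in (0, 1); smaller means stronger filtering
        self.motion_scale = motion_scale  # e.g. 0.1: 10 mm of hand motion -> 1 mm at the instrument
        self._filtered: Optional[Vec3] = None
        self._origin: Optional[Vec3] = None

    def step(self, hand_pos: Sequence[float]) -> Vec3:
        """Consume one controller sample; return the scaled instrument displacement from the start."""
        if self._filtered is None:
            # First sample: initialise the filter state and the reference origin.
            self._filtered = (hand_pos[0], hand_pos[1], hand_pos[2])
            self._origin = self._filtered
        else:
            # Exponential smoothing: move a fraction alpha of the way toward the new sample.
            self._filtered = tuple(f + self.alpha * (h - f) for f, h in zip(self._filtered, hand_pos))
        return tuple(self.motion_scale * (f - o) for f, o in zip(self._filtered, self._origin))
```

With these parameters, a pure 10 Hz oscillation is reduced to roughly a fifth of its amplitude before scaling, while a steady 10 mm hand movement settles to a 1 mm instrument displacement. A first-order filter was chosen for brevity; it also adds some lag to intentional motion, which is one reason real systems may use sharper filters.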

Postoperative analysis and training

Successful patient outcomes depend on what happens before, during, and after the time spent in the operating room. AI surgical systems are valuable in postoperative analysis: surgeons can review the procedure to understand areas for improvement, identify potential health risks for the patient, and share insights to align expectations. Video data can also help train new surgeons and support education and knowledge sharing within the academic surgery community.

Annotated surgical videos capture information about critical procedures and can inform students about effective surgical practices or the risks of specific techniques. AI systems can also assess surgical performance by monitoring live video feeds and comparing a surgeon’s technique with those used in similar previous procedures. Such systems can record custom metrics, such as an operation’s total duration, patient satisfaction, and post-operative complications, establishing benchmarks and a shared understanding of performance.
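
The benchmarking idea can be sketched in a few lines. The example below is hypothetical: it assumes procedure records carrying a type label, a duration in minutes, and a count of post-operative complications (the `ProcedureRecord` fields and the `benchmark` function are illustrative, not a real schema or product API), and it compares a new case against earlier cases of the same type using a z-score for duration plus a simple complication rate.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ProcedureRecord:
    procedure_type: str
    duration_min: float
    complications: int  # number of post-operative complications recorded


def benchmark(new: ProcedureRecord, history: List[ProcedureRecord]) -> Dict[str, float]:
    """Compare one procedure against earlier procedures of the same type."""
    peers = [r for r in history if r.procedure_type == new.procedure_type]
    if len(peers) < 2:
        raise ValueError("need at least two earlier procedures of the same type")
    durations = [r.duration_min for r in peers]
    mean = statistics.mean(durations)
    stdev = statistics.stdev(durations)  # sample standard deviation
    return {
        "peer_count": len(peers),
        "mean_duration_min": mean,
        # Standard deviations slower (positive) or faster (negative) than comparable procedures.
        "duration_z_score": (new.duration_min - mean) / stdev if stdev > 0 else 0.0,
        # Share of peers that took at least as long as this procedure.
        "pct_peers_at_least_as_long": 100.0 * sum(d >= new.duration_min for d in durations) / len(durations),
        # Share of peer procedures with one or more complications.
        "peer_complication_rate": sum(r.complications > 0 for r in peers) / len(peers),
    }
```

Duration on its own is a crude proxy for performance; a real system would likely also adjust for case complexity and patient factors, which this sketch ignores.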

A leader in this space is Orsi Academy, a Belgian training and research community that helps train medical professionals in new AI-driven techniques, such as computer vision for analyzing surgical videos, surgical data science for performance evaluation, and 3D printing and simulation to help surgeons better understand and view specific body parts and surgical sites. Just a few days ago, Orsi Academy announced that its augmented reality tool (developed by Orsi Innotech) had enabled surgeons at Erasmus Medical Center to perform the world's first robot-assisted lobectomy using augmented reality, a major milestone for AI-assisted surgery. During this surgery, a virtual overlay of the tumor, blood vessels, and airways was projected over the camera image of the patient’s lung and rendered with real-time AI-assisted detection of the robotic instruments. This allows surgeons to find their way inside the patient’s body more safely and effectively.

Medical annotation refers to the process of labeling medical images and videos, such as X-rays, CT and MRI scans, surgical video footage, etc.

Video annotation provides experts with rich training datasets for building computer vision models that can understand, analyze, and predict video content.

AI-enabled surgical videos and robots can help surgeons with pre-operative planning, offer valuable intra-operative insights, and help improve post-operative patient outcomes by recommending treatments and opportunities for performance improvements.

Standard annotation techniques include marking phases in a surgical procedure, drawing bounding boxes and polygons to highlight critical anatomical structures, semantic segmentation to identify surgical instruments, and keypoint annotation to detect tumors and other medical conditions.

Yes, Encord is designed to facilitate collaboration with medical device companies by allowing for the annotation of specific surgical techniques. This ensures that the instruments are properly tagged and aligned with the respective techniques, enhancing the overall quality and applicability of the annotated data.

Encord provides a robust annotation platform that supports object detection for surgical devices, helping to ensure that instruments are not misplaced during procedures. This capability aids in optimizing the placement of items on tray tables, ultimately improving efficiency and safety in operating theatres.

Encord provides tools that facilitate the annotation of chain of thought tasks, where users can annotate what a robot was supposed to plan and reason during specific key frames or throughout a video. This iterative approach allows for continuous improvement in the annotation process and model training.

Encord can assist with a wide range of projects in the medical AI space, including 2D and 3D imaging tasks. Its capabilities support the development of AI models for various applications, ensuring that teams have the right tools to scale their initiatives effectively.

Encord supports the integration of AI tools by providing advanced annotation and data management capabilities. These features enable teams to develop, test, and refine AI algorithms that can improve the accuracy and consistency of pathology and endoscopy assessments.

Encord actively participates in events such as Surgical AI Day by showcasing its features and engaging in industry talks. The platform's involvement helps foster collaboration and provides opportunities for users to learn more about its capabilities.

Yes, Encord is designed to scale annotation efforts efficiently, allowing teams to handle larger volumes of data without compromising quality. Our platform includes tools that facilitate rapid turnaround and streamline the annotation process.