
Contents

What is Multimodal Deep Learning?

Benefits of Multimodal Datasets in Computer Vision

Top 10 Multimodal Datasets

Key Takeaways: Multimodal Datasets

Encord Blog

Top 10 Multimodal Datasets


Multimodal datasets are the digital equivalent of our senses. Just as we use sight, sound, and touch to interpret the world, these datasets combine various data formats—text, images, audio, and video—to offer a richer understanding of content.

Think of it this way: if you tried to understand a movie just by reading the script, you'd miss out on the visual and auditory elements that make the story come alive. Multimodal datasets provide those missing pieces, allowing AI to catch subtleties and context that would be lost if it were limited to a single type of data.

Another example is analyzing medical images alongside patient records. This approach can reveal patterns that might be missed if each type of data were examined separately, which can lead to more accurate disease diagnosis. It's like assembling multiple puzzle pieces to create a clearer, more comprehensive picture.

In this blog, we've gathered the best multimodal datasets, with links to each data source. These datasets are crucial for multimodal deep learning, which integrates multiple data sources to improve performance in tasks such as image captioning, sentiment analysis, medical diagnostics, video analysis, speech recognition, emotion recognition, autonomous driving, and cross-modal retrieval.

What is Multimodal Deep Learning?

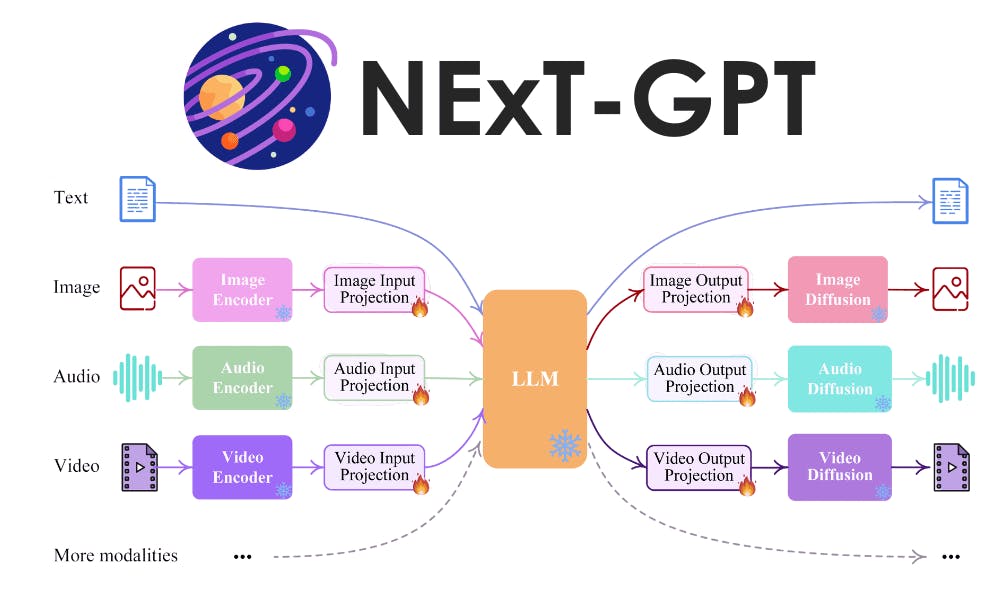

Multimodal deep learning, a subfield of machine learning, uses deep learning techniques to analyze and integrate data from multiple sources and modalities, such as text, images, audio, and video, simultaneously. This approach exploits complementary information across data types to improve model performance, enabling tasks like richer image captioning, audio-visual speech recognition, and cross-modal retrieval.
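One common way to combine modalities is early fusion: per-modality feature vectors are concatenated into one joint vector before a shared model head scores it. The sketch below is a toy illustration only; the function names (`early_fusion`, `linear_head`) and all feature and weight values are hypothetical, and a real system would use learned encoders and a trained head.

```python
from typing import List


def early_fusion(image_feat: List[float], text_feat: List[float]) -> List[float]:
    """Concatenate per-modality feature vectors into one joint representation."""
    return image_feat + text_feat


def linear_head(features: List[float], weights: List[float], bias: float = 0.0) -> float:
    """A toy linear scoring head applied to the fused vector (hypothetical)."""
    if len(features) != len(weights):
        raise ValueError("feature and weight dimensions must match")
    return sum(f * w for f, w in zip(features, weights)) + bias


# Made-up features standing in for image-encoder and text-encoder outputs.
image_feat = [0.2, 0.8]
text_feat = [0.5, -0.1, 0.3]
fused = early_fusion(image_feat, text_feat)
score = linear_head(fused, weights=[1.0, 0.5, 2.0, 1.0, -1.0])
```

Because the head sees both modalities at once, it can learn interactions between image and text features; the trade-off is that every modality must be present at inference time.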

Benefits of Multimodal Datasets in Computer Vision

Multimodal datasets significantly enhance computer vision applications by providing richer and more contextual information. Here's how:

- By combining visual data with other modalities and data sources like text, audio, or depth information, models can achieve higher accuracy in tasks such as object detection, image classification, and image segmentation.

- Multimodal models can be less susceptible to noise or variation in any single modality. For instance, combining visual and textual data can help overcome challenges like occlusions or ambiguous image content.

- Multimodal datasets allow models to learn deeper semantic relationships between objects and their context. This enables more sophisticated tasks like visual question answering (VQA) and image generation.

- Multimodal datasets open up possibilities for novel applications in computer vision, large language models, augmented reality, robotics, text-to-image generation, VQA, NLP, and medical image analysis.

- By integrating information from different modalities, models can better understand the context of visual data, leading to more capable, human-like multimodal models, including vision-enabled large language models.

Top 10 Multimodal Datasets

Encord E-MM1 Dataset

The E-MM1 open-source dataset is the world's largest multimodal AI dataset, with 107 million groups spanning five modalities: images, video, text, audio, and 3D point clouds. It was built to help AI models make richer inferences across data types rather than processing each modality in isolation.

E-MM1 comprises a large pre-training pool (more than 100M five-tuples) assembled automatically via state-of-the-art language retrieval. The Encord ML team then created a post-training subset of 1 million groups using diversity-aware sampling and human-rated match quality, as well as a gold-standard evaluation set, built with five-rater consensus, for zero-shot classification of 3D point clouds against audio files.

Along with the dataset, the Encord team built a baseline retrieval model, EBind, similar to the CLIP (Contrastive Language-Image Pre-training) approach developed by OpenAI. EBind extends this idea to five modalities, with one encoder trained per modality, which makes it well suited to resource-constrained environments. It is available open-source on GitHub.
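The core idea behind CLIP-style models is a contrastive objective: embeddings of matching pairs from two modalities are pulled together and mismatched pairs pushed apart. Below is a minimal pure-Python sketch of the generic symmetric InfoNCE loss associated with CLIP. It is not EBind's training code, and we make no claim about EBind's exact recipe; the temperature of 0.07 is an assumed default, and the toy "audio" and "point cloud" embeddings are made up.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def similarity_matrix(a: List[Vector], b: List[Vector]) -> List[List[float]]:
    """Cosine similarity between every embedding in `a` and every embedding in `b`."""
    a_n = [normalize(v) for v in a]
    b_n = [normalize(v) for v in b]
    return [[sum(x * y for x, y in zip(u, w)) for w in b_n] for u in a_n]


def cross_entropy_on_diagonal(logits: List[List[float]]) -> float:
    """Mean cross-entropy where row i's correct class is column i (the matching pair)."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(emb_a: List[Vector], emb_b: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: item i in modality A should match item i in modality B."""
    sims = similarity_matrix(emb_a, emb_b)
    logits = [[s / temperature for s in row] for row in sims]
    logits_t = [list(col) for col in zip(*logits)]  # the B -> A direction
    return 0.5 * (cross_entropy_on_diagonal(logits) + cross_entropy_on_diagonal(logits_t))


# Toy embeddings standing in for the outputs of two modality encoders.
audio = [[1.0, 0.0], [0.0, 1.0]]
points_aligned = [[0.9, 0.1], [0.1, 0.9]]
points_swapped = [[0.1, 0.9], [0.9, 0.1]]

aligned_loss = contrastive_loss(audio, points_aligned)
swapped_loss = contrastive_loss(audio, points_swapped)
```

Correctly paired embeddings yield a much lower loss than swapped ones. Once encoders are trained this way, cross-modal retrieval reduces to ranking candidates by cosine similarity in the shared space.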

- Research Paper: EBind: A Practical Approach to Space Binding

- Authors: Jim Broadbent, Felix Cohen, Frederik Hvilshøj, Eric Landau, Eren Sasoglu

- Dataset Size: 537,852 total files

- Licence: ODC Attribution License (ODC-By)

- Access Links: E-MM1 Website

Flickr30K Entities Dataset

The Flickr30K Entities dataset is an extension of the popular Flickr30K dataset, designed to advance research in automatic image description and in understanding how language refers to objects in images. It provides more detailed annotations for image-text understanding tasks.

Flickr30K Entities builds on the Flickr30K dataset, which contains 31K+ images collected from Flickr. Each image is associated with five crowd-sourced captions describing its content, and Flickr30K Entities adds bounding box annotations for all entities (people, objects, etc.) mentioned in those captions.

Flickr30K Entities helps researchers build better vision-language models for image captioning, where a model not only describes the image content but also pinpoints the location of the entities it describes. It also supports research on grounded language understanding, a machine's ability to understand language in relation to the physical world.
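Grounding models trained on Flickr30K Entities are commonly evaluated on phrase localization: a phrase counts as correctly localized when the predicted box overlaps the ground-truth box with an intersection-over-union (IoU) of at least 0.5. The sketch below computes IoU for boxes given as `(x_min, y_min, x_max, y_max)`. The record layout, caption, and coordinates are hypothetical and do not mirror the dataset's actual annotation files.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def is_localized(pred: Box, gt: Box, threshold: float = 0.5) -> bool:
    """A phrase is considered grounded if IoU meets the threshold."""
    return iou(pred, gt) >= threshold


# Hypothetical record: a caption, its entity phrases, and one ground-truth box per phrase.
record = {
    "caption": "A man in a red shirt rides a bike",
    "phrases": {"A man": (48, 30, 210, 400), "a bike": (60, 220, 260, 420)},
}
prediction = (50, 40, 200, 390)  # made-up model output for the phrase "A man"
correct = is_localized(prediction, record["phrases"]["A man"])
```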

- Research Paper: Flickr30k Entities: Collecting Region-to-Phrase Correspondences for Richer Image-to-Sentence Models

- Authors: Bryan A. Plummer, Liwei Wang, Chris M. Cervantes, Juan C. Caicedo, Julia Hockenmaier, and Svetlana Lazebnik

- Dataset Size: 31,783 real-world images, 158,915 captions (5 per image), approximately 275,000 bounding boxes, 44,518 unique entity instances.

- Licence: The dataset typically follows the original Flickr30k dataset licence, which allows for research and academic use on non-commercial projects. However, you should verify the current licensing terms as they may have changed.

- Access Links: Bryan A. Plummer Website

Visual Genome

The Visual Genome dataset is a multimodal dataset, bridging the gap between image content and textual descriptions. It offers a rich resource for researchers working in areas like image understanding, VQA, and multimodal learning.

Visual Genome combines two modalities. The visual component consists of over 108,000 real-world images, drawn from the intersection of the MS-COCO and YFCC100M collections. The textual component consists of extensive annotations for each image: objects, attributes, relationships, region captions, and question-answer pairs.

Because it is multimodal, the dataset supports deeper image understanding (identifying the meaning of, and relationships between, objects in a scene beyond simple object detection), VQA (answering questions that require reasoning about the visual content), and multimodal learning from both visual and textual data.
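Visual Genome's annotations form a scene graph: objects carry attributes, and relationships link a subject object to an object object through a predicate. The sketch below is a simplified in-memory representation; the class names (`SceneObject`, `SceneGraph`) and the example scene are hypothetical and do not follow the official JSON schema.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class SceneObject:
    name: str
    attributes: List[str] = field(default_factory=list)


@dataclass
class SceneGraph:
    objects: List[SceneObject]
    relationships: List[Tuple[int, str, int]]  # (subject index, predicate, object index)

    def triples(self) -> List[Tuple[str, str, str]]:
        """Resolve index-based relationships into readable (subject, predicate, object) triples."""
        return [(self.objects[s].name, p, self.objects[o].name) for s, p, o in self.relationships]

    def find(self, predicate: str) -> List[Tuple[str, str, str]]:
        """All triples using a given predicate, e.g. for answering 'who is riding what?'."""
        return [t for t in self.triples() if t[1] == predicate]


# A made-up scene: a tall man riding a brown horse that stands on a grassy field.
graph = SceneGraph(
    objects=[
        SceneObject("man", ["tall"]),
        SceneObject("horse", ["brown"]),
        SceneObject("field", ["grassy"]),
    ],
    relationships=[(0, "riding", 1), (1, "standing on", 2)],
)
```

Storing relationships by index keeps two identical object names (say, two "horse" objects) distinct, which matters when a question refers to one specific instance.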

- Research Paper: Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations

- Authors: Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A. Shamma, Michael S. Bernstein, Fei-Fei Li

- Dataset Size: 108,077 real-world images, 5.4 million region descriptions, 1.7 million question-answer pairs, 3.8 million object instances, 2.8 million attributes, 2.3 million relationships

- Licence: Visual Genome by Ranjay Krishna is licensed under a Creative Commons Attribution 4.0 International License.

- Access Links: Visual Genome Dataset at Hugging Face

MuSe-CaR

MuSe-CaR (Multimodal Sentiment Analysis in Car Reviews) is a multimodal dataset specifically designed for studying sentiment analysis in the "in-the-wild" context of user-generated video reviews.

MuSe-CaR combines three modalities to capture sentiment in car reviews: text (the spoken content of each review, captured in the video recordings), audio (vocal qualities such as tone, pitch, and emphasis, which reveal emotional aspects beyond the words themselves), and video (facial expressions, gestures, and overall body language, which provide additional cues to the reviewer's sentiment).

MuSe-CaR aims to advance research in multimodal sentiment analysis by providing a rich dataset for training and evaluating models capable of understanding complex human emotions and opinions expressed through various modalities.
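A simple way to combine the three modalities is late fusion: a separate model scores each modality, and the scores are merged with a weighted average. The sketch assumes hypothetical per-modality sentiment scores in [-1, 1] and hand-picked weights; neither comes from the MuSe-CaR paper. When a modality is missing (for example, the reviewer's face is off-screen), it is skipped and the remaining weights are renormalized, one way to realize the robustness benefit of multimodal data.

```python
from typing import Dict


def late_fusion(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-modality sentiment scores.

    Modalities absent from `scores` are skipped and the remaining weights
    are renormalized so they still sum to 1.
    """
    available = {m: w for m, w in weights.items() if m in scores}
    total = sum(available.values())
    if total <= 0:
        raise ValueError("no modality with positive weight is available")
    return sum(scores[m] * w for m, w in available.items()) / total


# Hypothetical weights and per-modality predictions for one review segment.
weights = {"text": 0.4, "audio": 0.3, "video": 0.3}
all_modalities = late_fusion({"text": 0.6, "audio": 0.2, "video": -0.1}, weights)
no_video = late_fusion({"text": 0.6, "audio": 0.2}, weights)
```

Late fusion is easy to extend and tolerates missing inputs, but unlike early fusion it cannot learn fine-grained interactions, such as sarcasm where positive words come with a negative tone.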

- Research Paper: The Multimodal Sentiment Analysis in Car Reviews (MuSe-CaR) Dataset: Collection, Insights and Improvements

- Authors: Lukas Stappen, Alice Baird, Lea Schumann, Björn Schuller

- Dataset Size: 40 hours of user-generated video material with more than 350 reviews and 70 host speakers (as well as 20 overdubbed narrators) from YouTube.

- Licence: End User Licence Agreement (EULA)

- Access Links: MuSe Challenge Website

CLEVR

CLEVR, which stands for Compositional Language and Elementary Visual Reasoning, is a synthetic multimodal dataset designed to test how well machine learning models can perform complex reasoning about visual scenes using both visual information and natural language.

CLEVR combines two modalities: visual and textual. The visual modality consists of rendered 3D scenes, each with a simple background and a set of objects with distinct properties such as shape (cube, sphere, cylinder), size (large, small), color (gray, red, blue, etc.), and material (rubber, metal). The textual modality consists of natural language questions about each scene. These questions require models not only to "see" the objects but also to understand their properties and relationships in order to answer accurately.

CLEVR is primarily a diagnostic benchmark, but the skills it tests matter in domains such as robotics: spatial reasoning about relationships between objects (e.g., "Which object is in front of the blue rubber cube?"), counting and comparing objects with specific properties (e.g., "How many small spheres are there?"), and logical reasoning that combines the scene and the question to reach an answer that isn't directly visible (e.g., "The rubber object is entirely behind a cube. What color is it?").
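CLEVR questions come with functional programs, chains of simple steps that, executed over the scene, produce the answer. The sketch below runs simplified programs over a hand-made toy scene. The helpers (`filter_attr`, `count`, `unique`, `query`) are our own hypothetical re-implementation, loosely modeled on CLEVR's filter, count, and query style, not the dataset's executor; spatial relations such as "in front of" are omitted because they require object coordinates.

```python
from typing import Any, Callable, Dict, List

Obj = Dict[str, str]

# A hand-made toy scene (hypothetical); real CLEVR scenes also store 3D coordinates.
scene: List[Obj] = [
    {"shape": "sphere", "size": "small", "color": "red", "material": "metal"},
    {"shape": "sphere", "size": "small", "color": "blue", "material": "rubber"},
    {"shape": "cube", "size": "large", "color": "blue", "material": "rubber"},
    {"shape": "cylinder", "size": "small", "color": "gray", "material": "metal"},
]


def filter_attr(attr: str, value: str) -> Callable[[List[Obj]], List[Obj]]:
    """Keep only objects whose `attr` equals `value`."""
    return lambda objs: [o for o in objs if o[attr] == value]


def count(objs: List[Obj]) -> int:
    return len(objs)


def unique(objs: List[Obj]) -> Obj:
    """Require exactly one matching object, as a definite noun phrase implies."""
    if len(objs) != 1:
        raise ValueError(f"expected exactly one object, found {len(objs)}")
    return objs[0]


def query(attr: str) -> Callable[[Obj], str]:
    return lambda obj: obj[attr]


def run_program(program: List[Callable[[Any], Any]], objects: List[Obj]) -> Any:
    """Apply each step to the previous step's output, starting from the full scene."""
    result: Any = objects
    for step in program:
        result = step(result)
    return result


# "How many small spheres are there?"
how_many = run_program([filter_attr("size", "small"), filter_attr("shape", "sphere"), count], scene)

# "What color is the large rubber cube?"
what_color = run_program(
    [filter_attr("size", "large"), filter_attr("material", "rubber"),
     filter_attr("shape", "cube"), unique, query("color")],
    scene,
)
```

Because every answer has an explicit program, errors can be traced to the specific step (filtering, counting, querying) a model gets wrong, which is what makes CLEVR useful as a diagnostic.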

- Research Paper: CLEVR: A Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning

- Authors: Justin Johnson, Bharath Hariharan, Laurens van der Maaten, Fei-Fei Li, Larry Zitnick, Ross Girshick

- Dataset Size: 100,000 images, 864,986 questions, 849,980 answers, and 85,000 scene graph annotations and functional program representations.

- Licence: Creative Commons CC BY 4.0 licence.

- Access Links: Stanford University CLEVR Page

InternVid

InternVid is a relatively recent multimodal dataset designed for video understanding and generation tasks, including training generative models. It focuses on the video-text modality pair, combining a large collection of videos of everyday scenes and activities with detailed captions describing the content, actions, and objects in each video.

InternVid aims to support various video-related tasks such as video captioning, video understanding, video retrieval and video generation.

- Research Paper: InternVid: A Large-scale Video-Text Dataset for Multimodal Understanding and Generation

- Authors: Yi Wang, Yinan He, Yizhuo Li, Kunchang Li, Jiashuo Yu, Xin Ma, Xinhao Li, Guo Chen, Xinyuan Chen, Yaohui Wang, Conghui He, Ping Luo, Ziwei Liu, Yali Wang, LiMin Wang, Yu Qiao

- Dataset Size: The InternVid dataset contains over 7 million videos lasting nearly 760K hours, yielding 234M video clips accompanied by detailed descriptions totaling 4.1B words.

- Licence: The InternVid dataset is licensed under the Apache License 2.0

- Access Links: InternVid Dataset at Hugging Face

MovieQA

MovieQA is a multimodal dataset designed specifically for the task of video question answering (VideoQA) using text and video information.

MovieQA combines three components: video, text, and question-answer pairs. The dataset consists of clips from a variety of movies, accompanied by subtitles or transcripts that provide textual descriptions of the spoken dialogue and on-screen actions.

Each video clip is paired with multiple questions that require understanding both the visual content of the video and the textual information from the subtitles/transcript to answer accurately.

MovieQA evaluates how well a model understands the actions, interactions, and events in a video clip. Models can use textual information such as subtitles or transcripts to complement their visual understanding and answer questions that require information from both modalities.
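MovieQA questions are multiple choice, with five candidate answers per question. To make the task concrete, here is a toy text-only baseline that picks the answer sharing the most content words with the subtitles and question. This is our own illustrative heuristic, not a method from the MovieQA paper; the stopword list and the example dialogue are made up. It ignores the video entirely, which is exactly the gap a multimodal model is meant to close.

```python
import re
from typing import List, Set

# A tiny, hypothetical stopword list; a real baseline would use a fuller one.
STOPWORDS = {"the", "a", "an", "to", "of", "is", "in", "and", "he", "she", "it",
             "what", "why", "does", "do", "his", "her"}


def content_words(text: str) -> Set[str]:
    """Lowercase word tokens with stopwords removed."""
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS


def answer_by_overlap(subtitles: str, question: str, options: List[str]) -> int:
    """Index of the option sharing the most content words with subtitles + question."""
    context = content_words(subtitles) | content_words(question)
    scores = [len(content_words(option) & context) for option in options]
    return max(range(len(options)), key=lambda i: scores[i])  # ties go to the first option


# Made-up example in a five-option multiple-choice format.
subtitles = "I'm going to the bank to withdraw money before the trip."
question = "Why does he go to the bank?"
options = [
    "To meet his brother",
    "To withdraw money for a trip",
    "To open an account",
    "To rob it",
    "To apply for a job",
]
predicted = answer_by_overlap(subtitles, question, options)
```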

- Research Paper: MovieQA: Understanding Stories in Movies through Question-Answering

- Authors: Makarand Tapaswi, Yukun Zhu, Rainer Stiefelhagen, Antonio Torralba, Raquel Urtasun, Sanja Fidler

- Dataset Size: This dataset consists of 15,000 questions about 400 movies with high semantic diversity.

- Licence: Unknown

- Access Links: Dataset at Metatext

MSR-VTT

MSR-VTT, which stands for Microsoft Research Video to Text, is a large-scale multimodal dataset designed for training and evaluating models on the task of automatic video captioning. The primary focus of MSR-VTT is to train models that can automatically generate captions for unseen videos based on their visual content.

MSR-VTT combines two modalities: videos and text descriptions. The videos are web clips covering a diverse range of categories and activities, and each clip is paired with multiple natural language captions describing its content, actions, and objects.

MSR-VTT's scale allows models to learn robust video representations and generate more accurate, descriptive captions. The variety of categories helps models generalize to unseen video content, and multiple captions per video provide a richer description of each clip.
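Beyond captioning, MSR-VTT is widely used as a text-to-video retrieval benchmark, where results are reported as Recall@K: the fraction of caption queries whose correct video appears among the top K retrieved videos. The sketch assumes a precomputed caption-by-video similarity matrix in which caption i's ground-truth video is video i; the scores are made up, and with several captions per video you would map each caption to its video ID instead of relying on the diagonal.

```python
from typing import List


def recall_at_k(sims: List[List[float]], k: int) -> float:
    """Fraction of caption queries whose ground-truth video ranks in the top k.

    sims[i][j] is the similarity between caption i and video j; the
    ground-truth video for caption i is assumed to be video i.
    """
    hits = 0
    for i, row in enumerate(sims):
        # Rank = number of videos scored strictly higher than the correct one (ties favor it).
        rank = sum(1 for score in row if score > row[i])
        if rank < k:
            hits += 1
    return hits / len(sims)


# Made-up similarity scores for 3 captions x 3 videos.
sims = [
    [0.9, 0.1, 0.3],
    [0.2, 0.4, 0.8],
    [0.1, 0.2, 0.7],
]
r1 = recall_at_k(sims, k=1)
r2 = recall_at_k(sims, k=2)
```

Here caption 1 ranks its correct video second, so it counts as a hit for Recall@2 but not Recall@1.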

- Research Paper: MSR-VTT: A Large Video Description Dataset for Bridging Video and Language

- Authors: Jun Xu, Tao Mei, Ting Yao, Yong Rui

- Dataset Size: A large video captioning dataset with 10,000 clips (38.7 hours) and 200,000 descriptions. It covers diverse categories and, at the time of its release, had more sentences and a larger vocabulary than comparable datasets. Each clip has around 20 captions written by human annotators.

- Licence: Unknown

- Access Links: Dataset at Kaggle

VoxCeleb2

VoxCeleb2 is a large-scale multimodal dataset designed for speaker recognition and other audio-visual analysis tasks. It combines two modalities: audio, consisting of speech recordings from thousands of individuals, and the corresponding video clips of the speakers, from which visual features such as faces can be extracted.

VoxCeleb2 primarily focuses on speaker recognition, which involves identifying or verifying a speaker based on their voice. However, the audio-visual nature of the dataset also makes it useful for face recognition and for tasks that combine voice and face cues.
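Speaker verification on VoxCeleb is usually reported as the equal error rate (EER), the operating point where the false-accept rate equals the false-reject rate. A minimal sketch follows, assuming each trial has already been scored (for example, cosine similarity between two speaker embeddings). The scores are invented, and sweeping the observed scores as thresholds is a simple approximation, not the official evaluation script.

```python
from typing import List, Tuple


def equal_error_rate(target: List[float], impostor: List[float]) -> Tuple[float, float]:
    """Return (EER, threshold) by trying every observed score as a threshold.

    target: scores for trials where both recordings come from the same speaker.
    impostor: scores for trials with different speakers.
    A trial is accepted when its score >= threshold.
    """
    best_gap, best_eer, best_t = float("inf"), 1.0, 0.0
    for t in sorted(set(target + impostor)):
        far = sum(s >= t for s in impostor) / len(impostor)  # false-accept rate
        frr = sum(s < t for s in target) / len(target)       # false-reject rate
        if abs(far - frr) < best_gap:
            best_gap, best_eer, best_t = abs(far - frr), (far + frr) / 2, t
    return best_eer, best_t


# Made-up similarity scores for same-speaker and different-speaker trials.
same_speaker = [0.9, 0.8, 0.7, 0.4]
different_speaker = [0.1, 0.3, 0.5, 0.2]
eer, threshold = equal_error_rate(same_speaker, different_speaker)
```

Lowering the threshold trades false rejects for false accepts; EER summarizes that trade-off in a single number, which is why it is the usual headline metric for verification.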

- Research Paper: VoxCeleb2: Deep Speaker Recognition

- Authors: Joon Son Chung, Arsha Nagrani, Andrew Zisserman

- Dataset Size: VoxCeleb2 is a large-scale dataset containing over 1 million utterances for 6,112 celebrities, extracted from videos uploaded to YouTube.

- Licence: VoxCeleb2 metadata is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

- Access Links: The VoxCeleb2 Dataset

VaTeX

VaTeX (Video And TEXt) is a multimodal dataset designed specifically for research on video-and-language tasks.

VaTeX combines two modalities: a collection of videos depicting various activities and scenes, and text descriptions of each video in both English and Chinese. Some English-Chinese caption pairs are parallel translations, which enables research on video-guided machine translation.

VaTeX supports several research areas related to video and language, such as multilingual video captioning (generating captions for videos in multiple languages), video-guided machine translation (using the video as context to improve translation accuracy), and video understanding (analyzing the meaning of video content beyond simple object recognition).

- Research Paper: VaTeX: A Large-Scale, High-Quality Multilingual Dataset for Video-and-Language Research

- Authors: Xin Wang, Jiawei Wu, Junkun Chen, Lei Li, Yuan-Fang Wang, William Yang Wang

- Dataset Size: The dataset contains over 41,250 videos and 825,000 captions in both English and Chinese.

- Licence: The dataset is under a Creative Commons Attribution 4.0 International License.

- Access Links: VATEX Dataset

WIT

WIT, which stands for Wikipedia-based Image Text, is a large-scale dataset designed for image-text retrieval and other multimedia learning applications.

WIT combines two modalities: a massive collection of unique images from Wikipedia, and text descriptions for each image extracted from the corresponding Wikipedia article. These descriptions provide information about the content depicted in the image.

WIT primarily focuses on tasks involving the relationship between images and their textual descriptions. Key applications include image-text retrieval (retrieving images from a text query), image captioning (generating captions for unseen images), and multilingual learning (connecting images to text descriptions in many languages).

- Research Paper: WIT: Wikipedia-based Image Text Dataset for Multimodal Multilingual Machine Learning

- Authors: Krishna Srinivasan, Karthik Raman, Jiecao Chen, Michael Bendersky, Marc Najork

- Dataset Size: WIT contains a curated set of 37.6 million entity-rich image-text examples with 11.5 million unique images across 108 Wikipedia languages.

- Licence: This data is available under the Creative Commons Attribution-ShareAlike 3.0 Unported licence.

- Access Links: Google Research dataset on GitHub

Key Takeaways: Multimodal Datasets

Multimodal datasets, which blend information from diverse sources such as text, images, audio, and video, provide a more comprehensive representation of the world. This fusion allows AI models to learn complex patterns and relationships across modalities, improving performance in tasks like image captioning, video understanding, and sentiment analysis, and moving AI toward a more human-like understanding of, and interaction with, the world.

These datasets are driving significant advances across many fields, from image and video analysis to more effective human-computer interaction. As the technology matures, multimodal datasets are likely to play a central role in shaping the future of AI, enabling smarter, more intuitive systems that better understand and interact with our multifaceted world.

