Announcing our Series C with $110M in total funding. Read more →.

Contents

What is Human Pose Estimation (HPE)?

Why use Human Pose Estimation in Computer Vision?

Top 15 Free Pose Estimation Datasets

Rapid Human Poes Estimation Computer Vision Model Training and Deployment

Human Pose Estimation Frequently Asked Questions (FAQs)

Encord Blog

15 Best Free Datasets for Human Pose Estimation in Computer Vision

Human Pose Estimation (HPE) is a way of capturing 2D and 3D human movements using labels and annotations to train computer vision models. You can apply object detsection, bounding boxes, pictoral structure framework (PSF), and Gaussian layers, and even using convolutional neural networks (CNN) for segmentation, detection, and classification tasks.

Human Pose Estimation (HPE) is a powerful way to use computer vision models to track, annotate, and estimate movement patterns for humans and animals.

It takes enormous computational powers and sophisticated algorithms, such as trained Computer vision models, plus accurate and detailed annotations and labels, for a computer to understand and track what the human mind can do in a fraction of a second.

An essential part of training models and making them production-ready is getting the right datasets. One way is to use open-source human pose estimation and detection image and video datasets for machine learning (ML) and computer vision (CV) models.

This article covers the importance of open-source datasets for human pose estimation and detection and gives you more information on 15 of the top free, open-source pose estimation datasets.

What is Human Pose Estimation (HPE)?

HPE is a computer vision-based approach to annotations that identifies and classifies joints, features, and movements of the human body.

Pose estimation is used to capture the coordinates for joints or facial features (e.g., cheeks, eyes, knees, elbows, torso, etc.), also known as keypoints. The connection between two keypoints is a pair.

Not every keypoint connection can form a pair. It has to be a logical and significant connection, creating a skeleton-like outline of the human body. Once these labels have been applied to images or videos, then the labeled datasets can be used to train computer vision models.

Images and videos for human pose estimation are either:

- crowd-sourced,

- found online from multiple sources (such as TV shows and movies),

- taken in studios using models,

- taken from CCTV images, or

- synthetically generated.

3D models, such as those used by designers and artists, are also useful for 3D human pose estimation. Quality is crucial, so high-resolution are more effective at providing accurate joint positions when capturing human activities and movements.

There are a number of ways to approach human pose estimation:

- Classical 2D: Using a pictorial structure framework (PSF) to capture and predict the joints of a human body.

- Histogram Oriented Gaussian (HOG): One way to overcome some of the 2D PSF limitations, such as the fact that the method often fails to capture limbs and joints that aren’t visible in images.

- Deep learning-based approach: Building on the traditional approaches, convolutional neural networks (CNN) are often used for greater precision and accuracy and are more effective for segmentation, detection, and classification tasks. Deep learning using CNNs is often more useful for 3D human pose estimation, multi-person pose estimation, and real-time multi-person detection. Here is the paper that sparked the movement to CNNs and deep learning for HPE (known as DeepPose).

To find out more, read our Complete Guide to Pose Estimation for Computer Vision.

To find out more, read our Complete Guide to Pose Estimation for Computer Vision. Why use Human Pose Estimation in Computer Vision?

Pose estimation and detection are challenging for machine learning and computer vision models. Humans move dynamically, and human activities involve fluid movements. People are often in groups, too, so you’ve got even more challenges with multi-person pose estimation compared to single-person pose estimation when attempting keypoint detection.

Other factors and objects in images and videos can add layers of complexity to this movement, such as clothing, fabric, lighting, arbitrary occlusions, the viewing angle, background, and whether there’s more than one person or animal being tracked in a video.

Computer vision models that use human pose estimation datasets are most commonly applied in healthcare, sports, retail, security, intelligence, and military settings. In any scenario, it’s crucial to train an algorithmically-generated model on the broadest possible range of data, accounting for every possible use and edge case required to achieve the project's goals.

One way to do this, especially when timescales or budgets are low, is to use free, open-source image or video-based datasets. Open-source datasets are almost always as close to training and production-ready as possible, with annotations, keypoint detection, and labels already applied.

Plus, if you need to source more data, you could use several open-source datasets or blend these images and videos with proprietary ones or even synthetic, AI-generated augmentation images. As with any computer vision model, the quality, accuracy, and diversity of images and videos within a dataset can significantly impact a project's outcomes.

Let's dive into the top 15 free, open-source human pose estimation datasets.

Top 15 Free Pose Estimation Datasets

Here are 15 of the best human pose estimation, configuration, and tracking datasets you can use for training computer vision models.

MPII Human Pose Dataset

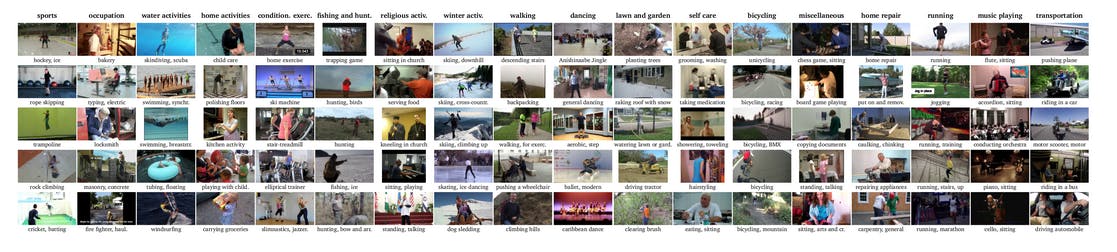

The MPII Human Pose dataset is “a state of the art benchmark for evaluation of articulated human pose estimation.” It includes over 25,000 images of 40,000, with annotations covering 410 human movements and activities.

Images were extracted from YouTube videos, and the dataset includes unannotated frames preceding and following the movement within the annotated frames. The test set data includes richer annotation data, body movement occlusions, and 3D head and torso orientations.

A team of data scientists at the Max Planck Institute for Informatics in Germany and Stanford University in America created this dataset.

3DPW - 3D Poses in the Wild

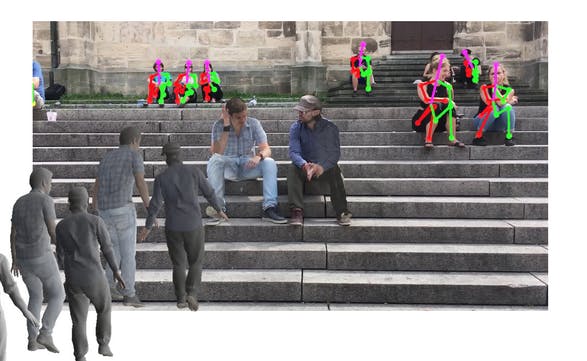

The 3D Poses in the Wild dataset (3DPW) used IMUs and video footage from a moving phone camera to accurately capture human movements in public.

This dataset includes 60 video sequences, 3D and 2D poses, 3D body scans, and people models that are all re-poseable and re-shapeable.

3DPW was also created at the Max Planck Institute for Informatics, with other data scientists supporting it from the Max Planck Institute for Intelligent Systems, and TNT, Leibniz University of Hannover.

LSPe - Leeds Sports Pose Extended

The Leeds Sports Pose extended dataset (LSPe) contains 10,000 sports-related images from Flickr, with each image containing up to 14 joint location annotations. This makes the LSPe database useful for HPE scenarios where more joint data points improve the outcomes of training models.

Accuracy is not guaranteed as this was done using Amazon Mechanical Turk as a Ph.D. project in 2016, and AI-based (artificial intelligence) annotation tools are more advanced now. However, it’s a useful starting point for anyone training in a sports movement-based computer vision model.

DensePose-COCO

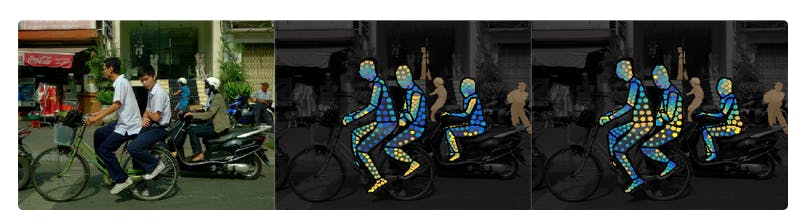

DensePose-COCO is a “Dense Human Pose Estimation In The Wild” dataset containing 50,000 manually annotated COCO-based images (Common Objects in Context).

Annotations in this dataset align dense “correspondences from 2D images to surface-based representations of the human body” using the SMPL model and SURREAL textures for annotations and manual labeling.

As the name suggests, this dense pose dataset includes thousands of multi-person images because they’re more challenging than single-person images. With multiple people there are even more body joints to label; hence the application of bounding boxes, object detection, image segmentation, and other methods to capture multi-person pose estimation in this dataset.

DensePose-COCO is a part of the COCO and Mapillary Joint Recognition Challenge Workshop at ICCV 2019.

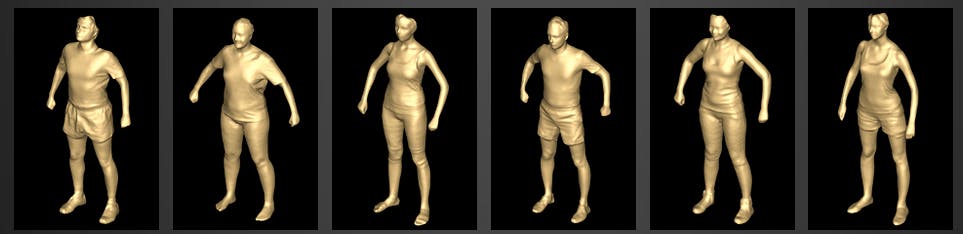

AMASS: Archive of Motion Capture as Surface Shapes

AMASS is “a large and varied database of human motion that unifies 15 different optical marker-based mocap datasets by representing them within a common framework and parameterization.”

It includes 3D and 4D body scans, 40 hours of motion data, 300 human subjects, and over 11,000 human motions and movements, all carefully annotated.

AMASS is another open-source dataset that started as an ICCV 2019 challenge, created by data scientists from the Max Planck Institute for Intelligent Systems.

VGG Human Pose Estimation Datasets

The VGG Human Pose Estimation Datasets include a range of annotated videos and frames from YouTube, the BBC, and ChatLearn.

It includes over 70 videos containing millions of frames, with manually annotated labels covering an extensive range of human upper-body poses.

This vast HPE dataset was created by a team of researchers and data scientists, with grants provided by EPSRC EP/I012001/1 and EP/I01229X/1.

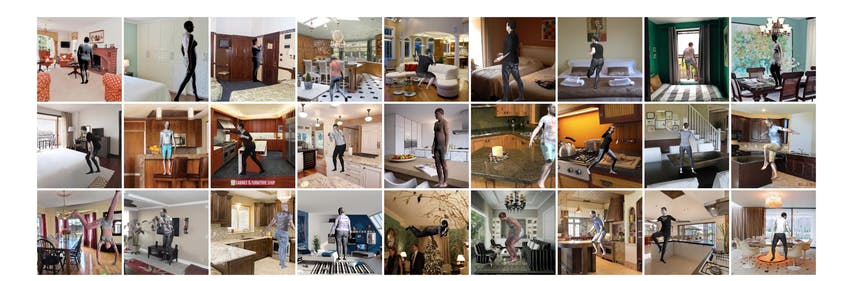

SURREAL Dataset

This dataset contains 6 million video frames of Synthetic hUmans foR REAL tasks (SURREAL).

SURREAL is a “large-scale person dataset to generate depth, body parts, optical flow, 2D/3D pose, surface normals ground truth for RGB video input.” It now contains optical flow data.

CMU Panoptic Studio Dataset

The Panoptic Studio dataset is a “Massively Multiview System for Social Motion Capture.”

It’s a pose estimation dataset designed to capture and accurately annotate human social interactions. Annotations and labels were applied to over 480 synchronized video streams, capturing and labeling the 3D structure of movement of people engaged in social interactions.

This dataset was created by researchers at Carnegie Mellon University following an ICCV 2015, with funding from the National Science Foundation.

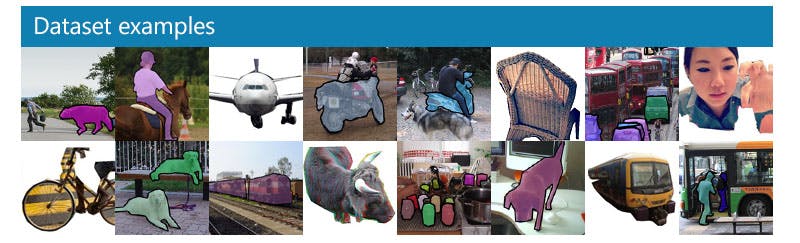

COCO (Common Objects in Context)

COCO is one of the most widely-known and popular human pose estimation datasets containing over 330,000 images (220,000 labeled), 1.5 million object instances, 250,000 with keypoints, and at least five captions per image.

COCO is probably one of the most accurate and varied open-source pose estimation datasets and a useful part of the training process for computer vision HPE-based models.

It was put together by numerous collaborators, including data scientists from Caltech and Google, and is sponsored by Microsoft, Facebook, and other tech giants with an interest in AI. Here is the research paper for more information.

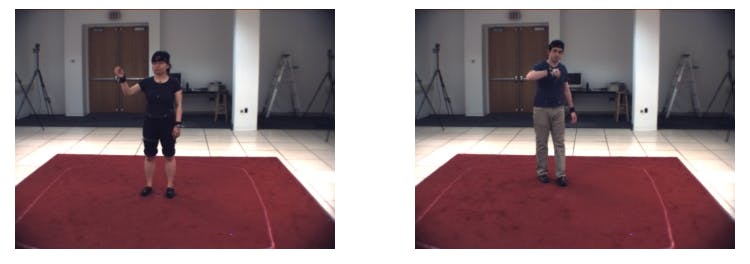

HumanEva

The HumanEva Dataset now contains two human pose estimation datasets, I and II. Both were put together by data scientists and other collaborators at the Max Planck Institute for Intelligent Systems, with support from Microsoft, Disney Research, and the Toyota Technological Institute at Chicago.

HumanEva-I

HumanEva-I includes seven calibrated video sequences (greyscale and color), with four subjects performing a range of actions (walking, jogging, moving, etc.) captured using 3D motion body pose cameras.

Both HumanEva-I and HumanEva-II were designed to answer the following questions about human pose estimation:

- What and how do design algorithms affect human pose estimation tracking performance, and to what extent?

- How to test algorithmic models to see which are the most effective?

- How to find solutions to the main unsolved problems in human pose estimation?

HumanEva-II

HumanEva-II is an extension of the original database, containing more images, subjects, and movements, with the aim of overcoming some of the limitations. It’s worth downloading both and the baseline algorithm code if you’re going to use this to train a computer vision model.

Human3.6M

One of the largest open-source human pose estimation datasets is Humans3.6M containing, as the name suggests, 3.6 million 3D human poses and images.

It was put together with a team of 11 actors performing 17 different scenarios (walking, talking, taking a photo, etc.) and includes pixel-level annotations for 24 body parts/joints for every image.

Human3.6M was created by the following data scientists and researchers: Catalin Ionescu, Dragos Papava, Vlad Olaru, and Cristian Sminchisescu. Here is the research paper for more information and other published papers where this dataset has been referenced.

FLIC (Frames Labelled in Cinema)

FLIC is an open-source dataset taken from Hollywood movies, containing 5003 images that include around 20,000 verifiable people. It was created using a state-of-the-art person detector on every tenth frame across 30 movies.

With a crowd-sourced labeling team from Amazon Mechanical Turk, 10 upper-body movement and joint annotations and labels were applied to every image. Labels were rejected if the person in an image was occluded or non-frontal.

FLIC was introduced and put together as part of the research for the following paper: MODEC: Multimodal Decomposable Models for Human Pose Estimation

300-W (300 Faces In-the-Wild)

One of the most significant challenges in human pose estimation is automatic facial detection “in the wild” for computer vision models.

300 Faces In-the-Wild (300-W) was an academic challenge to benchmark “facial landmark detection in real-world datasets of facial images captured in the wild.” It was held back in 2013 and has been repeated since, in conjunction with International Conference on Computer Vision (ICCV) and the Image and Vision Computing Journal.

If you want to test computer vision models on this dataset, you can download it here: [part1][part2][part3][part4]. Annotations were applied using the Multi-PIE [1] 68 points markup. Although the license isn’t for commercial use.

300-W was organized and coordinated by the Intelligent Behaviour Understanding Group (iBUG) at Imperial College London.

Labeled Face Parts in the Wild

The Labeled Face Parts in-the-Wild (LFPW) dataset includes 1,432 facial images pulled from simple web-based searches and annotated with 29 labels.

It was introduced by Peter N. Belhumeur et al. in the following research paper: Localizing Parts of Faces Using a Consensus of Exemplars.

Rapid Human Poes Estimation Computer Vision Model Training and Deployment

One of the most cost and time-effective ways to develop and deploy human pose estimation models for computer vision is to use an AI-assisted active learning platform, such as Encord.

At Encord, our active learning platform for computer vision is used by many sectors - including healthcare, manufacturing, utilities, and smart cities - to annotate human pose estimation videos and accelerate their computer vision model development.

Human Pose Estimation Frequently Asked Questions (FAQs)

What is Human Pose Estimation?

Human Pose Estimation (HPE) is a way of capturing 2D and 3D human movements using labels and annotations to train computer vision models. You can apply object detection, bounding boxes, pictoral structure framework (PSF), and Gaussian layers, and even using convolutional neural networks (CNN) for segmentation, detection, and classification tasks.

The aim of pose estimation is to create a skeleton-like outline of the human body or a keypoint representation of joints, movements, and facial features.

One way to achieve production-ready models is to use free, open-source datasets, such as the 15 we’ve covered in this article.

What are the best datasets for 3D pose estimation?

Most of the pose estimation datasets in this article can be used for 3D HPE. Some of the best and most diverse include Humans3.6M, COCO, and the CMU Panoptic Studio Dataset.

What are the best datasets for pose estimation in crowded environments?

Capturing data and applying labels for computer vision models when people are in crowds is more challenging. It takes more computational power to train a model for a multi-person pose estimation task, and naturally, more time and work is involved in labeling multiple people in images.

To make this easier, there are open-source datasets that include people in crowded environments, such as AMASS, VGG, and FLIC.

What is top-down human pose estimation?

The top-down approach for human pose estimation is normally used for multi-person images and videos. It starts with detecting which object is a person, estimating where the relevant body parts are (that will be labeled), and then calculating a pose for every human in the labeled images.

Ready to improve the performance of your human pose estimation computer vision projects?

Sign-up for an Encord Free Trial: The Active Learning Platform for Computer Vision, used by the world’s leading computer vision teams.

AI-assisted labeling, model training & diagnostics, find & fix dataset errors and biases, all in one collaborative active learning platform, to get to production AI faster. Try Encord for Free Today.

Want to stay updated?

- Follow us on Twitter and LinkedIn for more content on computer vision, training data, and active learning.

- Join our Discord channel to chat and connect.

Explore the platform

Data infrastructure for multimodal AI

Explore product

Frequently asked questions

Yes, Encord addresses the critical gap between generating lidar data and transforming it into actionable outputs. The platform assists in the annotation and management of lidar datasets, enabling users to create building information models (BIM) and other outputs that provide valuable insights for decision-making in various built environment applications.

Encord offers comprehensive annotation solutions for both camera data and lidar data, which are essential for ground truthing exercises in autonomous driving systems. This allows teams to ensure high-quality labeled data, crucial for tasks like lane detection and object detection.

Encord provides robust features for annotating various sensor modalities including lidar and camera data. The platform supports multiple annotation types such as cuboids and 3D point cloud annotations, enabling teams to effectively process complex data for machine learning models.

While Encord does not provide datasets directly, it is open to collaborating and can potentially offer referrals to organizations that have datasets, particularly in the environmental domain. This can aid users in acquiring additional data to complement their own.

Encord utilizes advanced skeleton keypoint tracking to manage complex motions in video annotations. This technique allows users to label moving subjects accurately, even in challenging scenarios where the subject may be occluded or performing intricate movements.

Encord is designed to efficiently manage large datasets, including 3D LiDAR data and high-resolution aerial imagery. The platform streamlines the annotation process, enabling teams to work with extensive data sets without sacrificing performance or usability.

Yes, Encord is capable of supporting both 2D and 3D pose estimation. This allows users to develop independent projects for segmentation and pose estimation, ultimately leading to more accurate modeling and analysis of subjects in various environments.

Encord can indeed support pre-training of models using action-free data. Users can upload and annotate 2D video data to help build foundational understanding before moving on to more complex tasks, making it a versatile choice for model development.

Best practices for anonymizing datasets in Encord include blurring faces and ensuring compliance with data privacy policies. It’s important to balance data protection with the needs of annotators, who may require clear images for effective training.

Yes, Encord can utilize public datasets or data from drone service provider partners to showcase examples of lidar measurements, enhancing the platform's usability and providing valuable insights for users.