Encord Blog

The Critical Role of Computer Vision in Cancer Treatment

This post is about hope–the hope that machine learning and computer vision can bring to physicians treating cancer patients.

Because cancer kills approximately 10 million people each year, I expect most readers to have known someone who died from or experienced the disease. After decades of research and clinical trials, it remains one of the world’s leading causes of death. It is also an extremely taxing disease to endure, with many patients experiencing terrible pain.

When it comes to treatment, about 60 percent of patients undergo some form of chemotherapy. Chemotherapy can and does save lives, but it's accompanied by hair loss, fatigue, and vomiting (which films show) as well as swollen limbs, bruising, and peripheral neuropathy (which they don’t). There’s a bleak expression that sums up the chemo experience: “Kill the cancer before the chemo kills you.”

New treatments, such as immunotherapy, might turn out to be game changers for a cancer prognosis, but most doctors will tell you that the best news a cancer patient can hear is four little words: “We caught it early.”

I know firsthand the power of those words. They caught my father’s cancer early. At a late-stage diagnosis, his odds of a five-year survival would have been less than 30 percent. Caught early, that rate jumped to well over 90 percent.

When doctors catch cancer early, survival rates increase tremendously: at later stages, the cancer has metastasized, spreading throughout the body, which makes effective treatment more difficult.

When it comes to cancer, an ounce of prevention is worth a pound of cure–and that’s where the hope comes in.

Using Machine Learning and Computer Vision to Prevent Late-Stage Cancer Diagnosis

Machine learning and AI technologies are advancing rapidly and bring with them a tremendous amount of hope for early cancer detection and diagnosis.

Physicians already use medical imaging and AI to detect abnormalities in tissue growth. After being trained on large datasets, computer vision models can perform object detection and categorisation tasks to help doctors identify abnormalities in polyps and tissues and to discern whether tumours are malignant or benign.

Because computer vision gives clinicians an extra pair of eyes, these models also have the potential to catch subtle indications of abnormalities even when doctors aren’t looking for cancer. That can give doctors a huge amount of diagnostic power, even outside their speciality area.

For instance, if a GP scanned a patient for gallstones, she could also feed the scan to a computer vision model that’s running an algorithm to detect abnormalities in the surrounding regions of the body. If the model noticed anything abnormal, the GP could alert the patient, and the patient could see a specialist even though they haven’t yet experienced any external symptoms from the tumour. Such proactive and preventative care has major implications for catching cancer early. For many cancers, and especially for those where there is no regular screening, external symptoms such as weight loss, pain, and fatigue often correlate with the progression of the disease, meaning that by the time a patient has cause to see a specialist, it could already be too late for effective treatment.

Because computer vision can enable doctors of any speciality to scan for early signs of cancer, these models also have the potential to democratise healthcare for those living in rural areas and developing nations. The best cancer doctors in the world help to curate, train, and review these algorithms, so the models apply their expertise when looking at patient data. With this technology, hospitals anywhere can provide patients with the expertise of a best-in-class oncologist. While the world has a limited number of high-quality oncologists, these algorithms scale far more easily, meaning that the expertise of these doctors will no longer be reserved for patients receiving care from the world’s leading hospitals and research institutions.

Building the Medical Imaging and Diagnosis Tools of the Future

Companies across the globe are working to build these diagnostic tools, and, to do so, they need to train their computer vision models to the highest standards.

When building a computer vision model for medical diagnosis, the most important factor is the quality of the ground truth. The training data must meet the same standard as work a doctor would have signed off on. The annotations must be accurate and informative, and the distribution of the data must be well-balanced so that the algorithm learns to find its target outcome in examples that represent a variety of real-world scenarios. For instance, having demographic variety in the dataset is extremely important. An algorithm trained only on data from college students of a certain ethnic background would not reflect the balance of the real world, so the model wouldn’t be able to make accurate predictions when run on data collected from people of varying demographics.
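As a rough illustration of what checking that balance could look like, here is a minimal Python sketch. It assumes each scan comes with a metadata record containing a demographic field. The field name `age_band`, the helper names, and the 10 percent threshold are all hypothetical choices for this example, not part of any particular tool.

```python
from collections import Counter


def group_shares(records, key):
    """Return each group's share of the dataset for one metadata field."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(records, key, min_share=0.10):
    """List groups whose share of the dataset falls below min_share."""
    shares = group_shares(records, key)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical metadata: 18 scans from one age band, 1 each from two others.
scans = (
    [{"age_band": "18-29"}] * 18
    + [{"age_band": "30-49"}]
    + [{"age_band": "50+"}]
)

# Groups that make up less than 10 percent of the dataset.
print(underrepresented(scans, "age_band"))
```

A check like this only sees groups that appear in the data at all, so a team would still need to compare the result against the population the model is meant to serve.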

Building these models also requires a lot of collaboration between doctors and machine learning engineers and between multiple doctors. This collaboration helps ensure that the model is being designed to answer the appropriate questions for the real-world scenario faced by the end-user and that it is learning to make predictions from practising on high-quality training data.

Without the expertise of both data scientists and clinicians during the design and training phases, the resulting model won’t be very effective. Designing clinical-grade algorithms requires the input of both sets of stakeholders. Machine learning engineers need to work closely with physicians because physicians are the end-users. By consulting with them, the engineers gain access to a full set of nuanced questions that must be answered for the product to achieve maximum effectiveness in a real-world use case.

However, doctors need to be able to work closely with each other, too, so that they can perform thorough workflow reviews. A workflow review has two parts: the ground truth review and the model review. For the ground truth review, doctors check the accuracy of the machine-produced annotations on which the model will be trained. For the model review, doctors check the model’s outputs to measure its performance and make sure that its predictions are accurate. Having multiple doctors with different levels of experience and expertise perform workflow reviews and verify the model’s outputs in multiple ways helps ensure the accuracy of its predictions. Often, regulatory bodies like the FDA require that teams building medical models have at least three doctors performing workflow reviews.
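To give a sense of what a model review can measure, here is a minimal Python sketch that compares a model’s outputs with doctor-approved labels. It assumes the simplest possible setup, one yes/no label per scan, and the function and variable names are hypothetical. Real reviews typically look at richer outputs, such as segmentation masks, and at more than two metrics.

```python
def sensitivity_specificity(truth, predicted):
    """Compare model predictions with doctor-approved labels.

    Both arguments are equal-length sequences of booleans, where True
    means "abnormality present". Returns (sensitivity, specificity);
    either value is None if it is undefined for the given data.
    """
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t and p)          # abnormal, flagged
    fn = sum(1 for t, p in pairs if t and not p)      # abnormal, missed
    tn = sum(1 for t, p in pairs if not t and not p)  # normal, not flagged
    fp = sum(1 for t, p in pairs if not t and p)      # normal, flagged
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


# Hypothetical review set of five scans.
approved = [True, True, True, False, False]
model_output = [True, True, False, False, True]
sens, spec = sensitivity_specificity(approved, model_output)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

For early detection, sensitivity is the number to watch most closely, because each missed abnormality is a patient who does not hear “we caught it early.”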

Machine learning engineers might be surprised to learn how often doctors disagree with one another when making a diagnosis; however, this difference of opinion is another reason why it’s important for engineers to consult with multiple doctors. If you work with just one doctor when training your model, the algorithm is only fit to that doctor’s opinion. To achieve the highest-quality model, engineers need to accommodate multiple layers of review and multiple opinions and arbitrate across all of them for the best result. Doctors in turn need tools that are tailored to them and their workflows, tools that allow them to create precise annotations.
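One simple way to picture arbitrating across several opinions is a majority vote that escalates a case whenever agreement is too low. The Python sketch below assumes each doctor assigns one label per scan. The function name `arbitrate` and the two-thirds threshold are hypothetical, and real workflows may weight reviewers by seniority or send every disagreement to a senior reviewer.

```python
from collections import Counter


def arbitrate(labels, min_agreement=2 / 3):
    """Combine several doctors' labels for one scan.

    Returns (label, agreement) when the most common label reaches
    min_agreement, otherwise (None, agreement) so that the case can be
    escalated for further review.
    """
    if not labels:
        raise ValueError("at least one label is required")
    label, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    if agreement >= min_agreement:
        return label, agreement
    return None, agreement


# Two of three doctors agree: the majority label is accepted.
print(arbitrate(["malignant", "malignant", "benign"]))

# All three disagree: no consensus, so the scan is flagged for review.
print(arbitrate(["malignant", "benign", "uncertain"]))
```

Keeping the agreement score alongside the label preserves a record of how contested each annotation was, which is useful when auditing the ground truth later.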

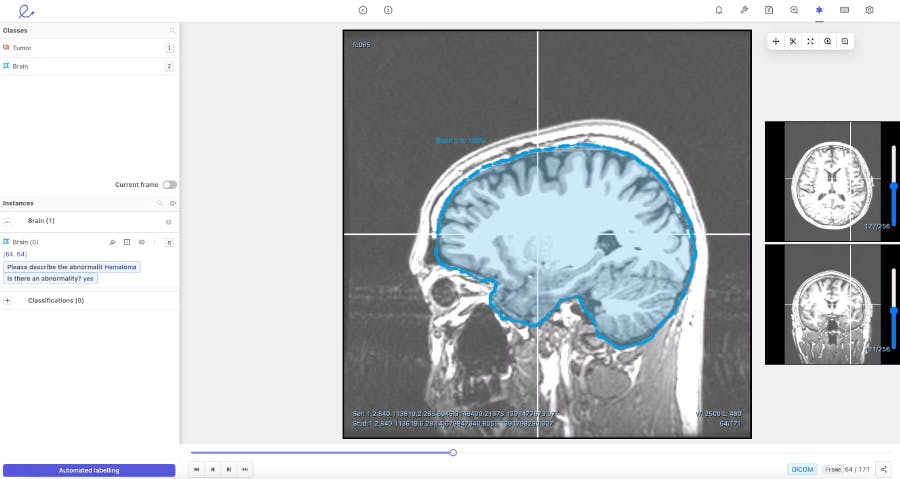

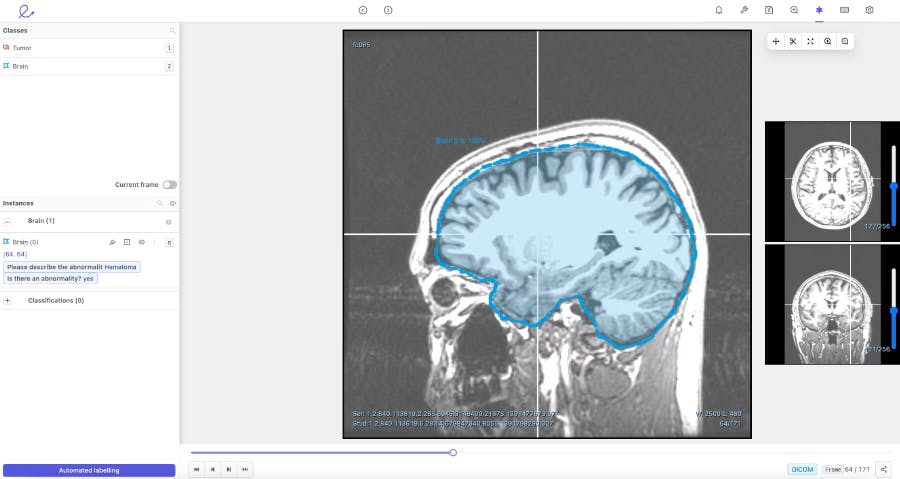

To facilitate the review process and help doctors annotate more efficiently, we at Encord developed our DICOM annotation tool, the first comprehensive annotation tool with native 3D annotation capabilities designed for medical AI. It is built to handle multiple medical imaging modalities, including CT, X-ray, and MRI. Our DICOM tool combines training and running automated models with human supervision to review and refine labels. When it comes to ground truth review, the tool improves efficiency, reduces costs, and increases accuracy, making it an asset for time-pressed doctors and cost-conscious hospitals.

When building our DICOM 3D-image annotation tool, we consulted with physicians at King’s College, academics at Stanford, and ML experts at AI radiology companies building these types of medical vision models. As a result, we knew that our platform needed to have a flexible interface that enabled collaboration between clinical teams and data science teams. It had to be able to support multiple reviews, facilitate a consensus across different opinions, and perform quality assessments to check the ground truth of the algorithm automating data labelling.

We developed our DICOM annotation tool to augment and replace the manual labelling process that makes AI development expensive, time-consuming, and difficult to scale. Currently, most AI development relies on outsourcing data to human labellers, including clinicians. The human error that arises during this process means doctors have to waste time reviewing and correcting labels. With the DICOM image annotation tool, we hope to save physicians valuable time by giving them the right tools and by reducing their burden of manual labelling.

It’s an upstream approach, but creating our DICOM image annotation tool is our way of contributing to early cancer detection and prevention. Organisations that use the tool can increase the speed at which computer vision models can enter production and become viable for use in a medical environment.

The Future of AI and Medicine

The commercial adoption of medical AI will revolutionise healthcare in ways we can’t yet imagine.

With this technology, we can accelerate medical research by 100x. Think about how medical research was performed in the era before computers. Doctors and researchers had to write notes in paper spreadsheets and perform analysis using slide rules. Each step was immensely time-consuming. Computers, though not designed specifically for medical research, transformed the way research was done because of their power and universal reach.

Computer vision and machine learning will do the same.

Biological research and technology will advance in tandem, and working together, machine learning engineers and clinicians will be able to offer people preventative care rather than forcing them to wait until something serious arises that warrants treatment.

Preventing a disease is faster, cheaper, and safer than treating it. We don’t yet have the AI tools to implement this vision at scale, but, with the rise of data-centric AI and advancements in data annotation, machine learning, and computer vision, we will get there.

Using the power of medical AI, we can transform our medical systems from focusing on “sick care” to focusing on “health care”. In doing so, we can spare people from both the life-or-death consequences of receiving a cancer diagnosis “too late” and the human suffering that accompanies current treatment methods.

Like I said, it’s a story of hope. And it’s just beginning.
