Contents

Video Labeling for Computer Vision Models

Advantages of annotating video

Video annotation use cases

What is the role of a video annotator?

Video annotation techniques

Different methods to annotate

How to annotate a video for computer vision model training

Video annotation tools

Best practices for video annotation

Conclusion

Encord Blog

The Full Guide to Video Annotation for Computer Vision

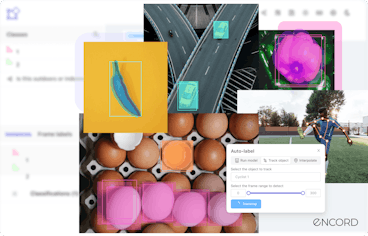


Written by

Frederik Hvilshøj

Computer vision has many applications that use video as their data, such as self-driving cars, pose estimation and medical imaging. Video annotation therefore plays a crucial part in training computer vision models.

Annotating images is a relatively simple and straightforward process. Video data labeling, on the other hand, is an entirely different beast! It adds a layer of complexity, but you can extract more information from it if you know what you are doing and use the right tools.

In this guide, we’ll start by explaining what video annotation is, along with its advantages and use cases. Then we’ll look at the fundamental elements of video annotation and how to annotate a video. Finally, we’ll look at video annotation tools and discuss best practices to improve video annotation for your computer vision projects.

Video Labeling for Computer Vision Models

To train computer vision AI models, video data is annotated with labels or masks. This can be done manually or, in some cases, with AI-assisted video labeling. Labels can be used for everything from simple object detection to identifying complex actions and emotions.

Video annotation tools help manage these large datasets while ensuring high accuracy and consistency in the process of labeling.

Video annotation vs. Image annotation

As one might expect, video and image annotation are similar in many respects, but there are also considerable differences between the two. Let’s discuss the three major ones:

Data

Compared to images, video has a more intricate data structure, which is also why it can provide more information per unit of data.

For example, the image shown doesn’t provide any information on the direction in which the vehicles are moving. A video, on the other hand, would provide not only the direction but also enough information to estimate their speed relative to other objects in the scene. Annotation tools allow you to add this extra information to your dataset for training ML models.
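To make the direction-and-speed point concrete, here is a minimal sketch that derives both from the same object's bounding boxes in two annotated frames. The function names, the `(x_min, y_min, x_max, y_max)` pixel box format and the frame rate are illustrative assumptions, and the speed is in pixels per second, not real-world units, unless the camera is calibrated.

```python
import math


def box_center(box):
    """Center (cx, cy) of an (x_min, y_min, x_max, y_max) box in pixels."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


def motion_between_frames(box_a, box_b, frame_a, frame_b, fps):
    """Estimate direction (degrees) and speed (pixels/second) of one object
    from its boxes in two annotated frames (hypothetical helper)."""
    if frame_b <= frame_a:
        raise ValueError("frame_b must come after frame_a")
    (xa, ya), (xb, yb) = box_center(box_a), box_center(box_b)
    dx, dy = xb - xa, yb - ya
    seconds = (frame_b - frame_a) / fps
    speed = math.hypot(dx, dy) / seconds
    # Image y grows downward, so negate dy to get an angle measured
    # counter-clockwise from the positive x-axis.
    direction = math.degrees(math.atan2(-dy, dx))
    return direction, speed


# A car moves 60 px right and 80 px up over 15 frames of a 30 fps clip.
direction, speed = motion_between_frames(
    (100, 200, 140, 240), (160, 120, 200, 160), frame_a=0, frame_b=15, fps=30
)
print(round(direction, 1), round(speed, 1))
```

A single image gives you only one of these boxes, so neither quantity can be computed from it.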

Video annotation can also use data from previous frames to locate an object that is partially obscured (occluded). In a single image, this information would be lost.

Annotation process

Video annotation adds a level of difficulty compared to image annotation: while labeling, one must synchronize and keep track of objects in various states between frames. Automating this tracking can make the process much quicker.
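One common way to automate this bookkeeping is to carry object IDs forward by matching each box in a new frame to the most-overlapping box in the previous frame. The sketch below uses greedy intersection-over-union (IoU) matching. It is an illustration under assumptions (hypothetical function names, an arbitrary 0.3 IoU threshold, `(x_min, y_min, x_max, y_max)` boxes), not how any particular annotation tool implements tracking.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(prev_tracks, new_boxes, threshold=0.3):
    """Greedily match boxes in the current frame to track IDs from the previous frame.

    prev_tracks: dict mapping track_id -> box in the previous frame.
    new_boxes: list of boxes in the current frame.
    Returns a dict mapping index in new_boxes -> track_id; unmatched boxes are
    omitted and would start new tracks.
    """
    pairs = sorted(
        ((iou(box, new), tid, j)
         for tid, box in prev_tracks.items()
         for j, new in enumerate(new_boxes)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    matches, used_tracks = {}, set()
    for score, tid, j in pairs:
        if score < threshold:
            break  # pairs are sorted, so every remaining pair overlaps even less
        if tid in used_tracks or j in matches:
            continue
        matches[j] = tid
        used_tracks.add(tid)
    return matches


prev = {1: (10, 10, 50, 50), 2: (100, 100, 140, 140)}
current = [(104, 102, 144, 142), (12, 11, 52, 51), (300, 300, 320, 320)]
print(associate(prev, current))
```

Greedy matching is simple and fast; an optimal assignment (for example the Hungarian algorithm) can do better when many objects overlap.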

Accuracy

While labeling images, it is essential to use the same annotation for the same object throughout the dataset, which can be difficult and error-prone. Video, on the other hand, provides continuity across frames, limiting the possibility of errors. During annotation, tools can help you keep context throughout the video, which in turn helps you track an object across frames. This makes labels more consistent and accurate than image labeling typically allows, which can lead to more accurate predictions from the machine learning model.
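Continuity across frames also makes consistency easy to check automatically. For example, once every object carries a track ID, a track labeled with two different classes is almost certainly an error. The sketch below flags such tracks. The `(frame_index, track_id, class_label)` tuple format and the function name are hypothetical choices for illustration.

```python
from collections import defaultdict


def inconsistent_tracks(annotations):
    """Return {track_id: set_of_labels} for tracks labeled with more than one class.

    annotations: iterable of (frame_index, track_id, class_label) tuples.
    """
    labels = defaultdict(set)
    for _frame, track_id, label in annotations:
        labels[track_id].add(label)
    return {tid: found for tid, found in labels.items() if len(found) > 1}


annotations = [
    (0, "car-1", "car"), (1, "car-1", "car"), (2, "car-1", "truck"),
    (0, "ped-1", "pedestrian"), (1, "ped-1", "pedestrian"),
]
print(inconsistent_tracks(annotations))
```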

Computer vision applications still rely heavily on images to train machine learning models, and in some use cases, such as object detection or pixel-by-pixel segmentation, annotated images are preferred. But image annotation is a tedious and expensive process, so if you are building a dataset from scratch, let’s look at some of the advantages of video annotation over image data collection and annotation.

Advantages of annotating video

Video annotation can be more time-consuming than image annotation, but with the right tool it provides added functionality for efficient model building. Here are some of the benefits that annotated videos provide:

Ease of data collection

As you know, a few seconds of video contain many individual frames, so a video of an entire scene can contain enough data to build a robust model. Annotation also becomes easier because you do not need to annotate every frame. For an object that moves predictably, labeling the first and last frames in which it appears can be enough; the annotations for the in-between frames can then be interpolated.
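Here is a minimal sketch of that interpolation, assuming the object moves linearly and keeps its shape between two labeled keyframes. The function name and the `(x_min, y_min, x_max, y_max)` box format are illustrative assumptions. Annotation tools may use more sophisticated tracking, but linear interpolation is the basic idea.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate (x_min, y_min, x_max, y_max) boxes between two keyframes.

    Returns {frame_index: box} for every frame from start_frame to end_frame inclusive.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must be after start_frame")
    span = end_frame - start_frame
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at the first keyframe, 1.0 at the last
        boxes[frame] = tuple(a + t * (b - a) for a, b in zip(start_box, end_box))
    return boxes


# Two hand-labeled keyframes produce labels for all five frames.
boxes = interpolate_boxes(0, (0, 0, 10, 10), 4, (40, 20, 50, 30))
print(boxes[2])
```

Only frames 0 and 4 were labeled by hand here; frames 1 to 3 come for free and only need a quick visual check.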

Temporal context

Video data provides information in the form of motion, which static images cannot give ML models. For example, labeling a video can provide information about an object while it is occluded. It gives the ML model temporal context by helping it understand the movement of objects and how they change over time.

This helps developers improve network performance by implementing techniques like temporal filters and Kalman filters. Temporal filters help ML models filter out misclassifications based on the presence or absence (occlusion) of specific objects in adjacent frames. Kalman filters use information from previous frames to estimate the most likely location of an object in subsequent frames.
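The sketch below shows simple textbook versions of both ideas. These are not the method of any specific tool or model. `temporal_majority_filter` replaces each frame's predicted class with the majority class in a small window of neighboring frames. `ConstantVelocityKalman1D` tracks one coordinate (for example, a box center's x) with a constant-velocity model, so it keeps predicting a position through frames where the object is occluded. The class and function names, window size and noise variances are arbitrary assumptions; tracking x and y would use two instances.

```python
from collections import Counter


def temporal_majority_filter(labels, window=2):
    """Replace each frame's label with the most common label within `window`
    frames on either side, suppressing isolated misclassifications."""
    smoothed = []
    for i in range(len(labels)):
        neighborhood = labels[max(0, i - window): i + window + 1]
        smoothed.append(Counter(neighborhood).most_common(1)[0][0])
    return smoothed


class ConstantVelocityKalman1D:
    """Kalman filter with state [position, velocity] and position-only measurements."""

    def __init__(self, position, process_var=1.0, measurement_var=4.0):
        self.p, self.v = float(position), 0.0
        # Entries of the symmetric 2x2 covariance [[a, b], [b, d]];
        # the large d reflects an unknown initial velocity.
        self.a, self.b, self.d = measurement_var, 0.0, 100.0
        self.q, self.r = process_var, measurement_var

    def predict(self, dt=1.0):
        """Advance one step: x' = F x and P' = F P F^T + Q, with F = [[1, dt], [0, 1]]."""
        self.p += dt * self.v
        a, b, d = self.a, self.b, self.d
        self.a = a + 2 * dt * b + dt * dt * d + self.q
        self.b = b + dt * d
        self.d = d + self.q
        return self.p

    def update(self, measured_position):
        """Correct the prediction with an observed position (H = [1, 0])."""
        residual = measured_position - self.p
        s = self.a + self.r
        k0, k1 = self.a / s, self.b / s  # Kalman gain
        self.p += k0 * residual
        self.v += k1 * residual
        a, b, d = self.a, self.b, self.d
        self.a, self.b, self.d = (1 - k0) * a, (1 - k0) * b, d - k1 * b
        return self.p


print(temporal_majority_filter(["car", "car", "truck", "car", "car"], window=1))

# x-center of an object moving about 2 px per frame; None marks an occluded frame.
kf = ConstantVelocityKalman1D(position=10.0)
for z in [12.0, 14.0, None, 18.0]:
    predicted = kf.predict()
    if z is not None:
        kf.update(z)
    print(round(predicted, 2), round(kf.p, 2))
```

During the occluded frame, the filter has no measurement to correct it, so its prediction alone stands in for the missing detection.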

Practical functionality

Since annotated videos provide fine-grained information for ML models to work with, they can lead to more accurate results. They also depict real-world scenarios more faithfully than images and can therefore be used to train more advanced ML models. So, video datasets are more practical in terms of functionality.

Video annotation use cases

Now that we understand the advantages of annotated video datasets, let’s briefly discuss how they help in real-world applications of computer vision.

Autonomous vehicles

The ML models for autonomous vehicles rely heavily on labeled videos to understand their surroundings. They are mainly used to identify objects on the street and other vehicles around the car, and they also help in building collision braking systems. These datasets are not only used in building autonomous vehicles; they can also be used to monitor driving in order to prevent accidents, for example, by monitoring the driver’s condition or detecting unsafe driving behavior.

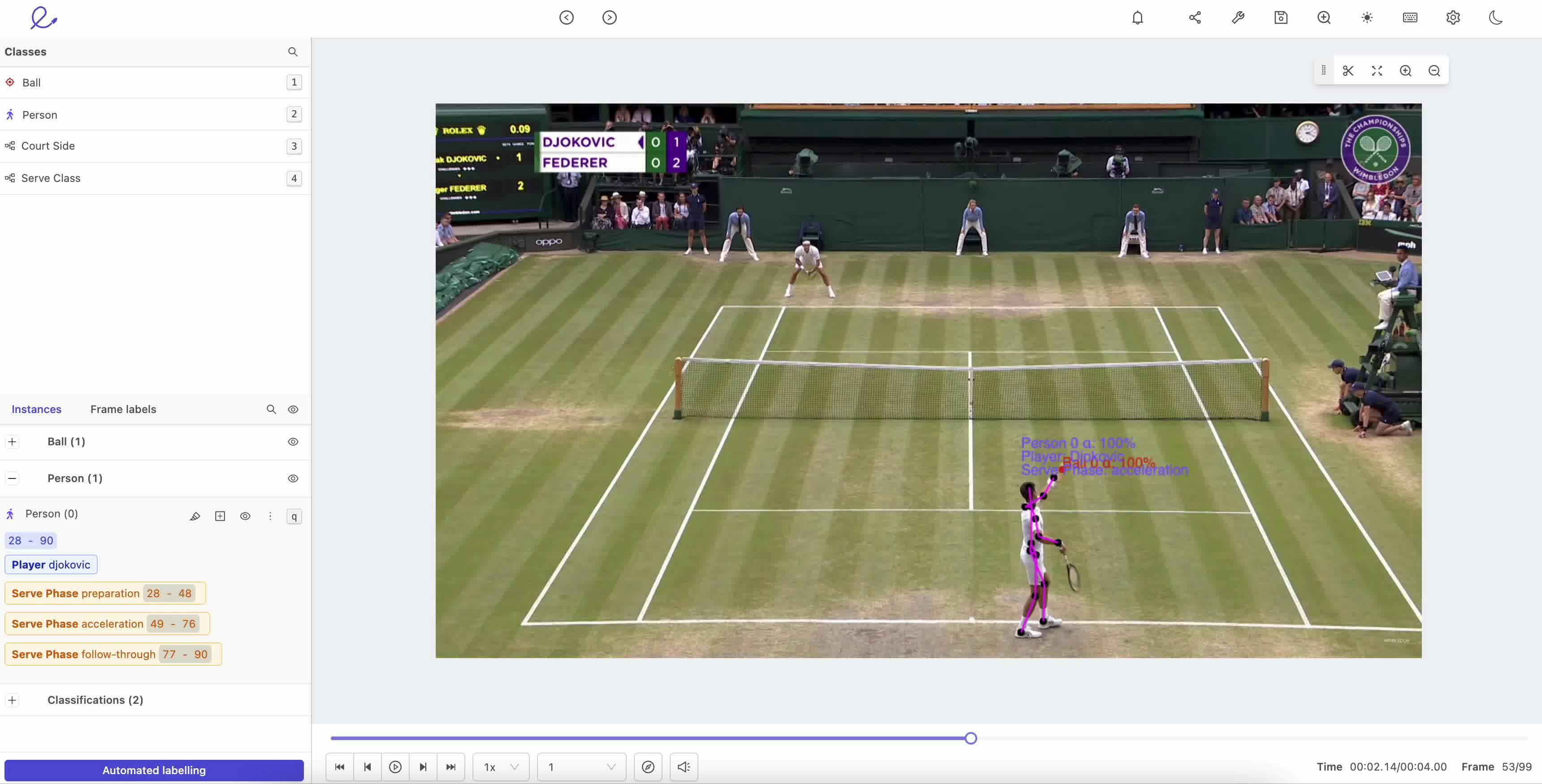

Pose estimation

Robust pose estimation has a wide range of applications, such as tracking body parts in gaming, augmented and virtual reality, and human-computer interaction. When building a robust pose estimation model from images, one can face challenges that arise from the high variability of human visual appearance, due to viewing angle, lighting, background, the different sizes of body parts, and so on. A precisely annotated video dataset allows the ML model to identify the person in each frame and track them and their motion in subsequent frames. This in turn helps train the model to track human activities and estimate poses.

Traffic surveillance

Cities around the world increasingly rely on smart traffic management systems to improve traffic conditions, and with growing populations, smart traffic management is becoming ever more necessary. Annotated videos can be used to build ML models for traffic surveillance. These systems can detect accidents and quickly alert the authorities. They can also help relieve congestion by routing traffic onto different roads.

Medical Imaging

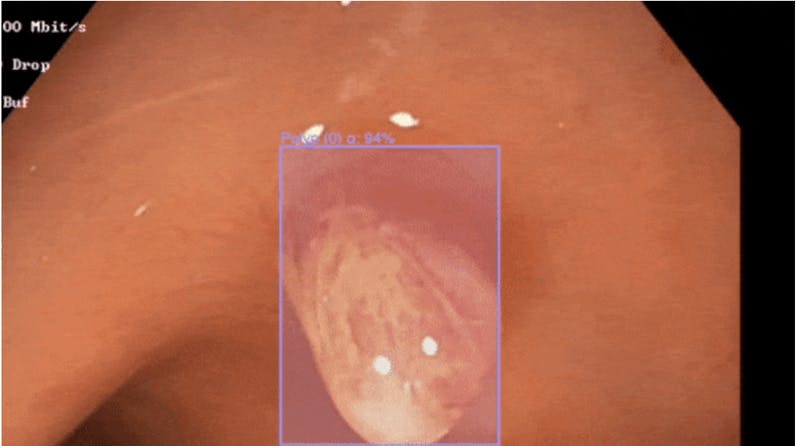

Machine learning is making its way into medical science, and many diagnoses rely on videos. To use this data for diagnosis with ML models, it first needs to be annotated. For example, in endoscopy, doctors have to go through videos to detect abnormalities. This process can be sped up by annotating these videos and training ML models on them. Such models can run live detection of abnormalities and act as the doctor’s assistant. This can also improve accuracy, since the model provides a second check on detections. For deeper insight into how video annotation helps doctors in the field of gastroenterology, take a look at our blog Pain Relief for Doctors Labeling Data.

In the field of medical diagnostics, high-precision annotations of medical images are crucial for building reliable machine learning models. In order to understand the importance of robust and effective medical image annotation and its use in the medical industry in detail, please read our blog Introduction to medical image labeling for machine learning.

Though the use cases discussed here mainly focus on object detection and segmentation tasks in computer vision, note that the use cases of video datasets are not limited to these tasks.

While annotating videos rather than images has several benefits, and video datasets have many use cases of their own, the process is still laborious and difficult. The person responsible for annotating these videos must know how to use the right tools and workflows.

What is the role of a video annotator?

The role of a video annotator is to add labels and tags to the video dataset that has been curated for the specific task. These labeled datasets are used for training the ML models. The process of adding labels to data is known as annotation and it helps the ML models in identifying specific objects or patterns in the dataset.

The best course of action if you are new to the process is to learn about video annotation techniques. This will help in understanding and using the ideal type of annotation for the specific task. Let’s first understand the different processes of annotating videos and then dive deeper into different methods to annotate a video.

Video annotation techniques

There are two main methods for annotating videos:

- Single frame annotation

This is the more traditional method of labeling. The video is divided into distinct frames, or images, which are labeled individually. This method is chosen when the videos contain little dynamic object movement and the dataset is smaller than conventional publicly available datasets. Otherwise, it is time-consuming and expensive, because a large video dataset means annotating a huge number of individual images.

- Multiframe or stream annotation

In this method, the annotator labels objects as video streams using data annotation tools, i.e., the object and its coordinates are tracked frame by frame as the video plays. This method of video annotation is significantly quicker and more efficient, especially when there is a lot of data to process, and objects are tagged with greater accuracy and consistency. With the growing use of video annotation tools, the multiframe approach has become more widespread.
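A quick way to see why single frame annotation gets expensive is to count the frames it produces. The sketch below computes which frame indices a single-frame workflow would export for labeling, given a clip's duration, frame rate and an optional sampling stride. The function name and the numbers are hypothetical; in practice the frames themselves would be decoded with a video library such as OpenCV.

```python
def frames_to_label(duration_s, fps, sample_every_n=1):
    """Indices of the frames a single-frame workflow would export and label."""
    total_frames = int(round(duration_s * fps))
    return list(range(0, total_frames, sample_every_n))


# A one-minute clip at 30 fps.
all_frames = frames_to_label(60, 30)
every_tenth = frames_to_label(60, 30, sample_every_n=10)
print(len(all_frames), len(every_tenth))
```

Even sampling every tenth frame of a single one-minute clip leaves 180 images to label by hand. This is the workload that multiframe annotation, with keyframes and interpolation, is designed to reduce.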

Video labeling tools now include features that automate the continuous-frame (multiframe) method, which makes it even easier and helps maintain continuity. This is how it works: frame-by-frame machine learning algorithms track objects and their positions automatically, preserving the continuity and flow of information. The algorithms evaluate the pixels in the previous and next frames and predict the motion of the pixels in the current frame. This information lets the algorithms detect a moving object that appears at the beginning of the video, disappears for a few frames, and then reappears later.

The task for which the dataset has been curated is essential to understand in order to pick the right annotation methods. For example, in human pose estimation, you need to use the keypoint method for labeling the joints of humans. Using a bounding box for it would not provide the ML model with enough data to identify each joint. So let’s learn more about different methods to annotate your videos!

Different methods to annotate

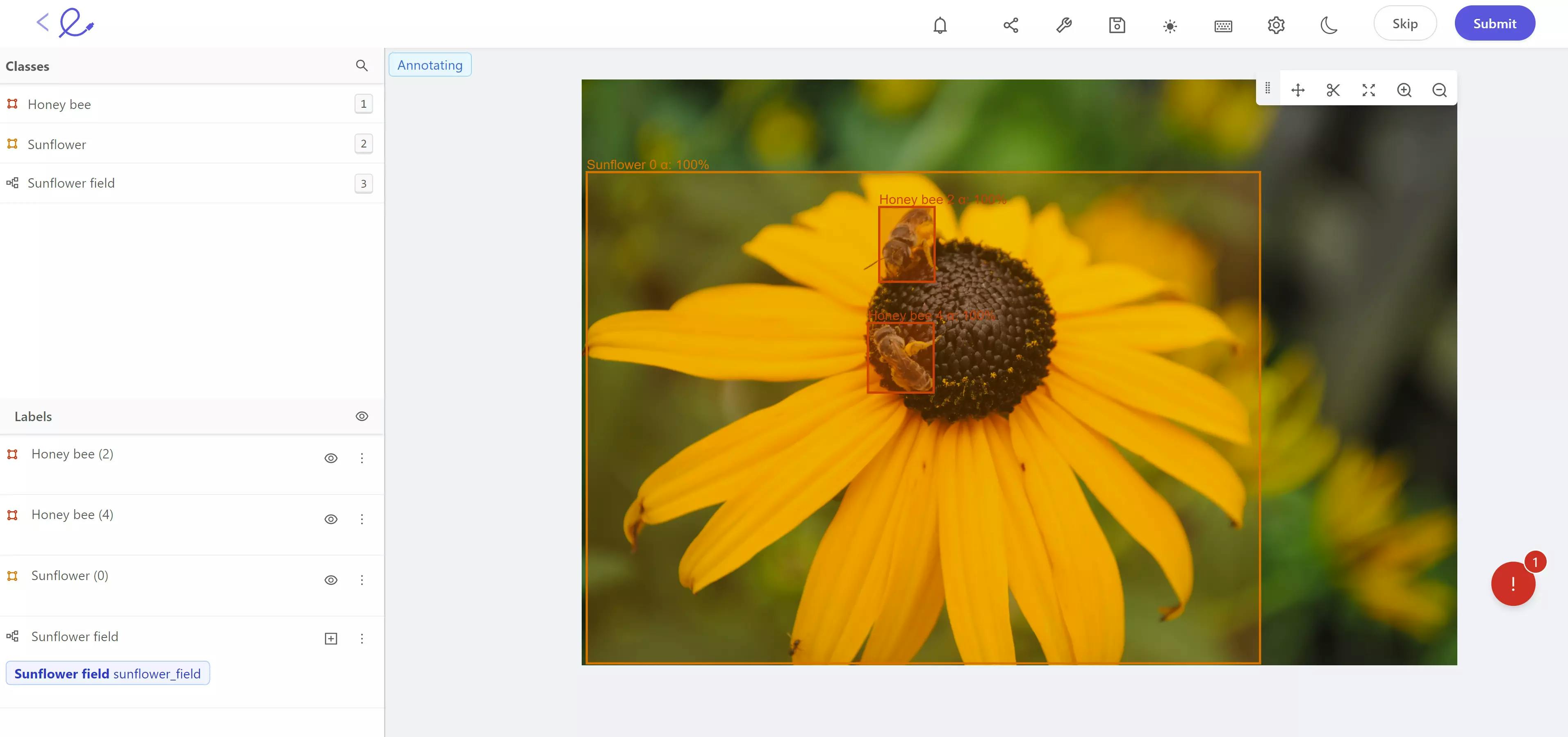

Bounding boxes

Bounding boxes are the most basic type of annotation: you surround the object of interest with a rectangular frame. They work well for objects where including some background inside the box will not interfere with training or with the ML model’s interpretation. Bounding boxes are mainly useful for object detection, as they capture the location and size of the object of interest in the video. For rectangular objects, they provide precise information; if the object of interest has any other shape, polygons should be preferred.
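A bounding box is usually stored as four numbers, but formats differ. COCO, for instance, stores `[x, y, width, height]`, while Pascal VOC stores corner coordinates. The sketch below converts between the two conventions and normalizes coordinates by the frame size so labels stay valid if the video is resized. The function names are illustrative, not part of any specific tool.

```python
def xywh_to_xyxy(box):
    """(x_min, y_min, width, height) -> (x_min, y_min, x_max, y_max)."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


def _clip01(value):
    """Clamp a value to the [0, 1] range."""
    return min(max(value, 0.0), 1.0)


def normalize_xyxy(box, image_width, image_height):
    """Scale pixel corners to [0, 1] so the box is resolution-independent,
    clipping any part of the box that falls outside the frame."""
    x_min, y_min, x_max, y_max = box
    return (
        _clip01(x_min / image_width), _clip01(y_min / image_height),
        _clip01(x_max / image_width), _clip01(y_max / image_height),
    )


box = xywh_to_xyxy((320, 180, 640, 360))  # a box in a 1280x720 frame
print(box, normalize_xyxy(box, 1280, 720))
```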

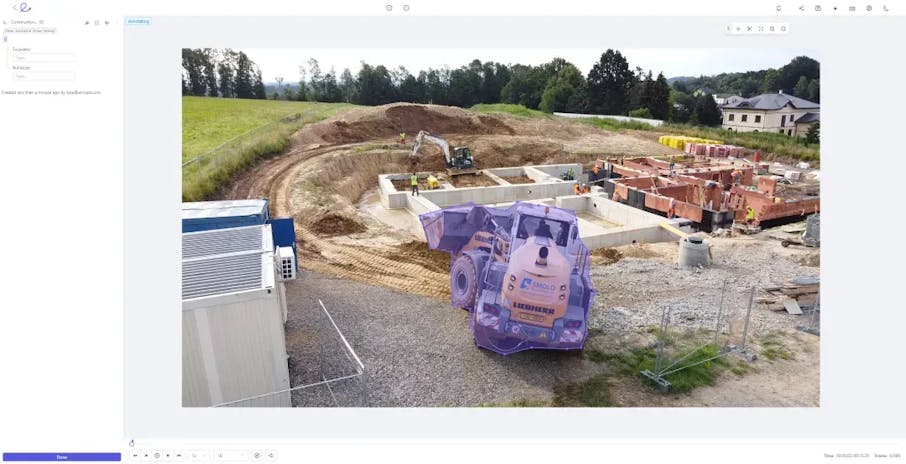

Annotating an image with bounding boxes in the Encord platform

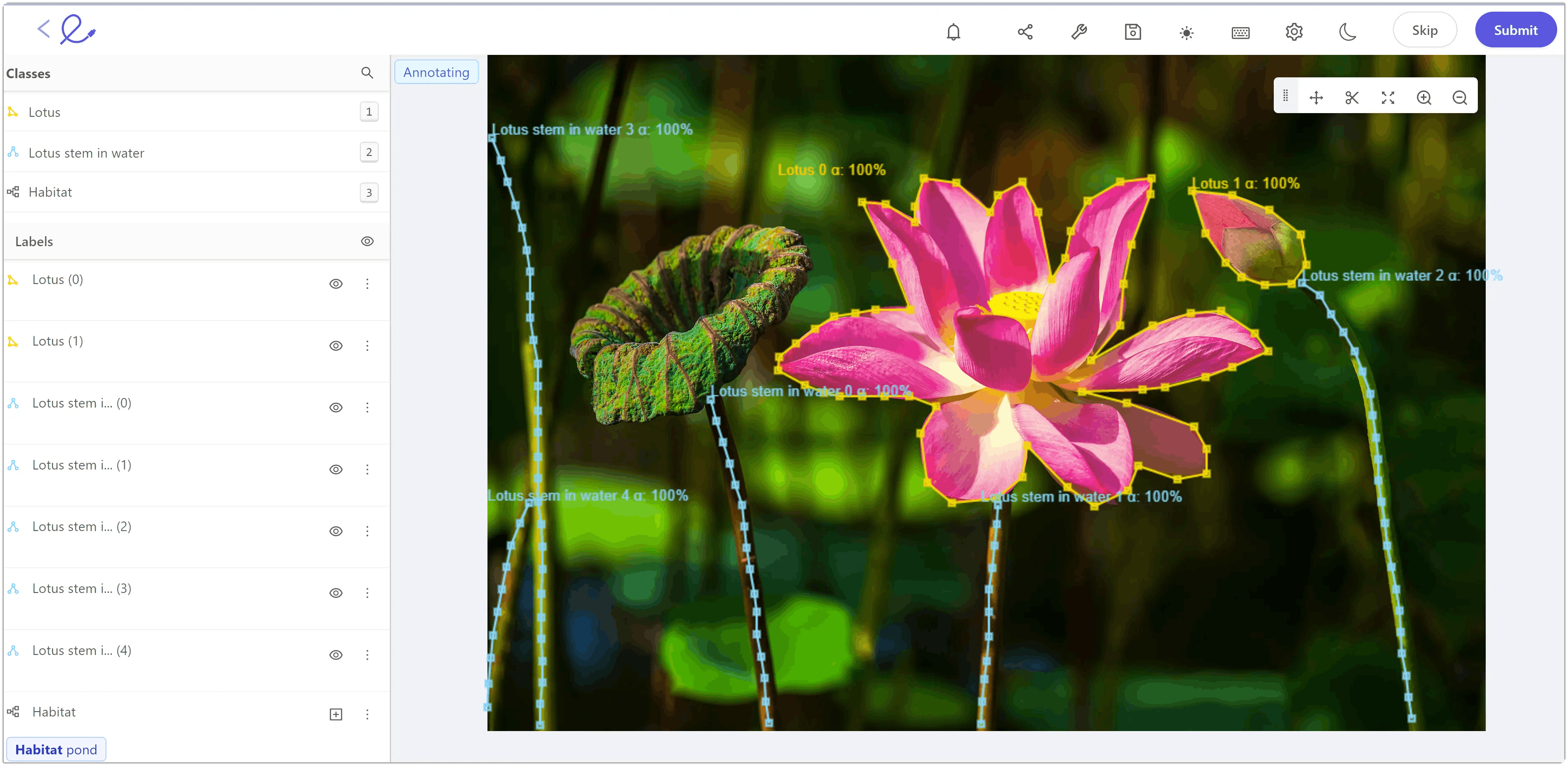

Polygons

Polygons are used when the object of interest has an irregular shape. They are also useful when no background elements should be included in the label.

This process of annotating through polygons can be tiresome for large datasets. But with automated segmentation features in the annotation tools, this can get easier.
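To see how much background a box can include compared to a polygon, you can compare their areas. The sketch below computes a polygon's area with the shoelace formula and its tightest enclosing box. The function names and the L-shaped example are illustrative assumptions.

```python
def polygon_area(points):
    """Area enclosed by a simple polygon given as [(x, y), ...] (shoelace formula)."""
    n = len(points)
    if n < 3:
        return 0.0
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def polygon_bbox(points):
    """Tightest (x_min, y_min, x_max, y_max) box around the polygon."""
    xs, ys = zip(*points)
    return (min(xs), min(ys), max(xs), max(ys))


# An L-shaped object: the polygon hugs it, while a box would include background.
l_shape = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)]
area, bbox = polygon_area(l_shape), polygon_bbox(l_shape)
print(area, bbox)
```

Here the enclosing box covers 12 square units, while the object covers only 6, so half of the box would be background.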

Polylines

Polylines are essential in video datasets for labeling line-like objects that are static by nature but whose position changes from frame to frame as the camera moves. For example, in autonomous vehicle datasets, roads are annotated using polylines.

Polygon and polyline annotation in the Encord platform

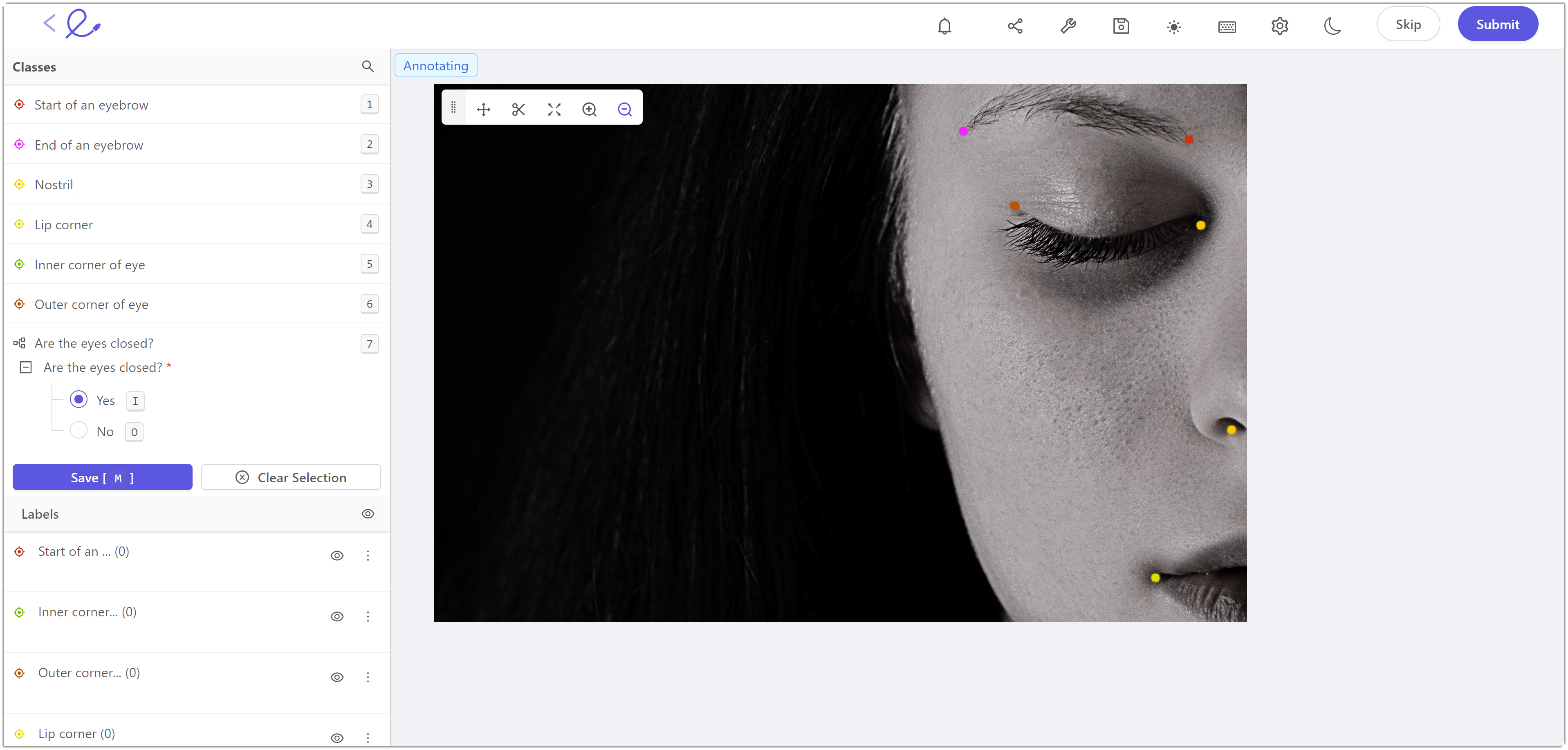

Keypoints

Keypoints are helpful for annotating objects of interest whose geometry is not essential for training the ML model. They pinpoint the landmarks of the objects of interest. For example, in pose estimation, keypoints are used to label the landmarks, or joints, of the human body. Here, the keypoints represent the human skeleton and can be used to train models to interpret or monitor the motion of humans in videos.
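The sketch below shows how keypoint labels can be turned into motion features, and how they can be sanity-checked. It stores named keypoints per frame, defines a hypothetical skeleton as a list of edges, computes the elbow angle in each frame, and measures bone lengths, which should stay roughly constant across frames if the annotations are consistent. The keypoint names, skeleton and coordinates are illustrative assumptions.

```python
import math

# A hypothetical, minimal skeleton: the edges ("bones") between named keypoints.
SKELETON_EDGES = [("shoulder", "elbow"), ("elbow", "wrist")]


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def bone_lengths(keypoints):
    """Length of every skeleton edge for one frame's keypoints."""
    return {edge: math.dist(keypoints[edge[0]], keypoints[edge[1]])
            for edge in SKELETON_EDGES}


# Keypoints annotated on two consecutive frames (pixel coordinates).
frames = [
    {"shoulder": (100, 100), "elbow": (100, 150), "wrist": (100, 200)},
    {"shoulder": (100, 100), "elbow": (100, 150), "wrist": (150, 150)},
]
for kp in frames:
    print(round(joint_angle(kp["shoulder"], kp["elbow"], kp["wrist"]), 1),
          bone_lengths(kp))
```

In this example, the arm bends from straight (180 degrees) to a right angle (90 degrees), while both bone lengths stay at 50 pixels.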

Keypoint annotation in Encord

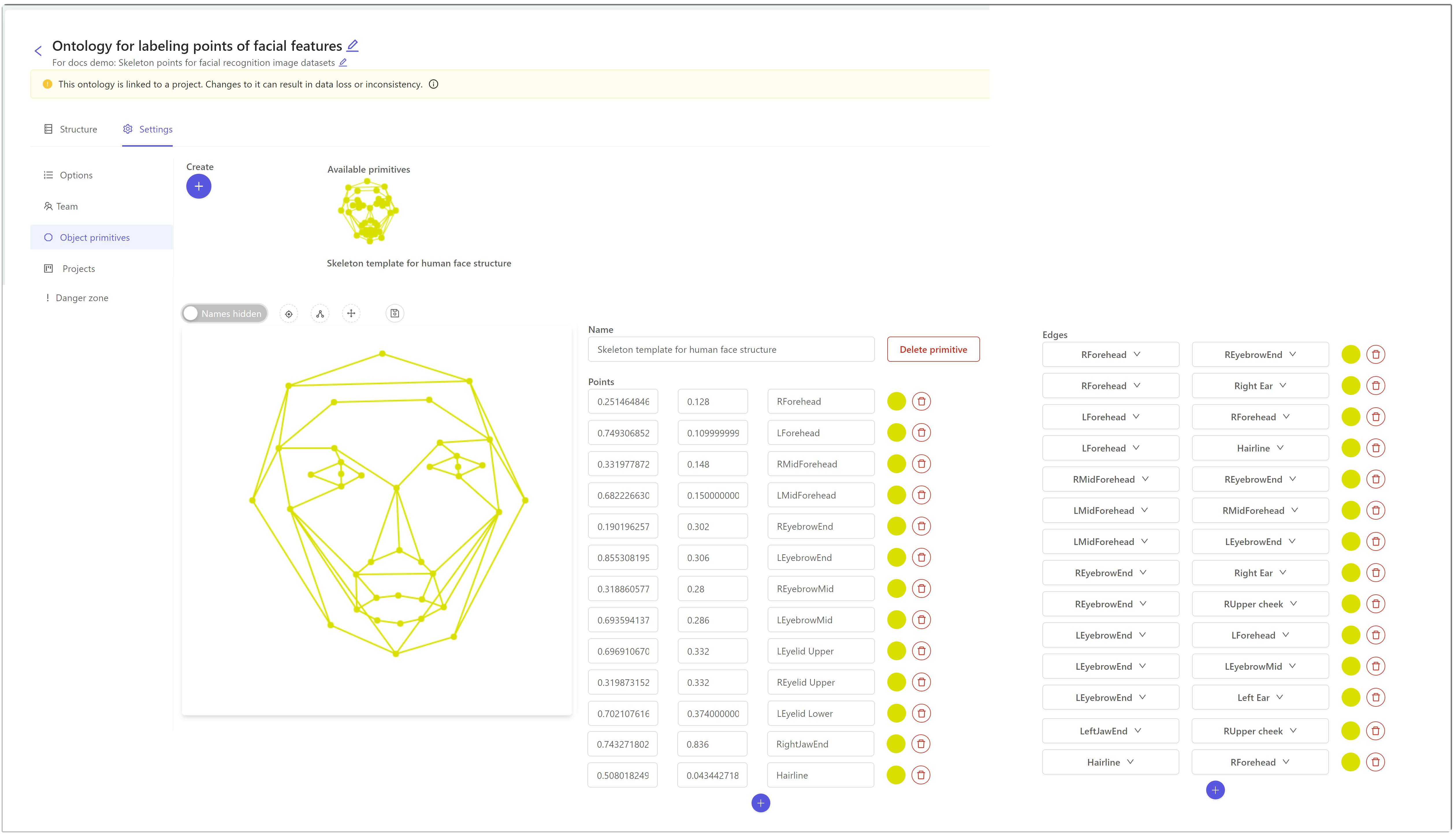

Primitives

Primitives, also known as skeleton templates, are used for specialized annotations of template shapes such as 3D cuboids and rotated bounding boxes. They are particularly useful for labeling objects whose 3D structure must be captured from the video. Primitives are very helpful for annotating medical videos.
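Template shapes like these are typically described by a few parameters rather than free-form points. The sketch below assumes a common parameterization: center, size and rotation angle (yaw). It expands a rotated box into its four corners and a 3D cuboid into its eight corners. This parameterization is an illustrative assumption and is not Encord's primitive format.

```python
import math


def rotated_box_corners(cx, cy, width, height, angle_deg):
    """Corners of a box centered at (cx, cy), rotated counter-clockwise by angle_deg.

    Uses the math convention (y up); flip the angle's sign for image coordinates,
    where y points down.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_w, half_h = width / 2, height / 2
    corners = []
    for dx, dy in [(-half_w, -half_h), (half_w, -half_h),
                   (half_w, half_h), (-half_w, half_h)]:
        # Rotate each corner offset around the center.
        corners.append((cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t))
    return corners


def cuboid_corners(cx, cy, cz, length, width, height, yaw_deg):
    """Eight corners of a 3D cuboid: a rotated footprint extruded along z."""
    footprint = rotated_box_corners(cx, cy, length, width, yaw_deg)
    z_low, z_high = cz - height / 2, cz + height / 2
    return [(x, y, z_low) for x, y in footprint] + [(x, y, z_high) for x, y in footprint]


for x, y in rotated_box_corners(0, 0, 4, 2, 90):
    print(round(x, 2), round(y, 2))
print(len(cuboid_corners(0, 0, 1, 4, 2, 2, 30)))
```

Storing five or seven parameters instead of raw corners keeps the shape rigid, which is why template shapes are convenient when an object's size should not drift between frames.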

Creating a skeleton template (primitives) in Encord

Now that we have understood the fundamentals of video annotation, let us see how to annotate a video!

How to annotate a video for computer vision model training

Even though video annotation is efficient, it can still be tedious for the annotator given the sheer number of videos in a dataset. That’s why a well-designed video annotation pipeline helps streamline the task for annotators. The pipeline should include the following components:

1. Define Objectives

Before starting the annotation process, it is essential to explicitly define the project’s goal. The curated dataset and the objective of the ML model should be accounted for before the start of the annotation process. This ensures that the annotation process supports the building of a robust ML model.

2. Choose the right tool or service

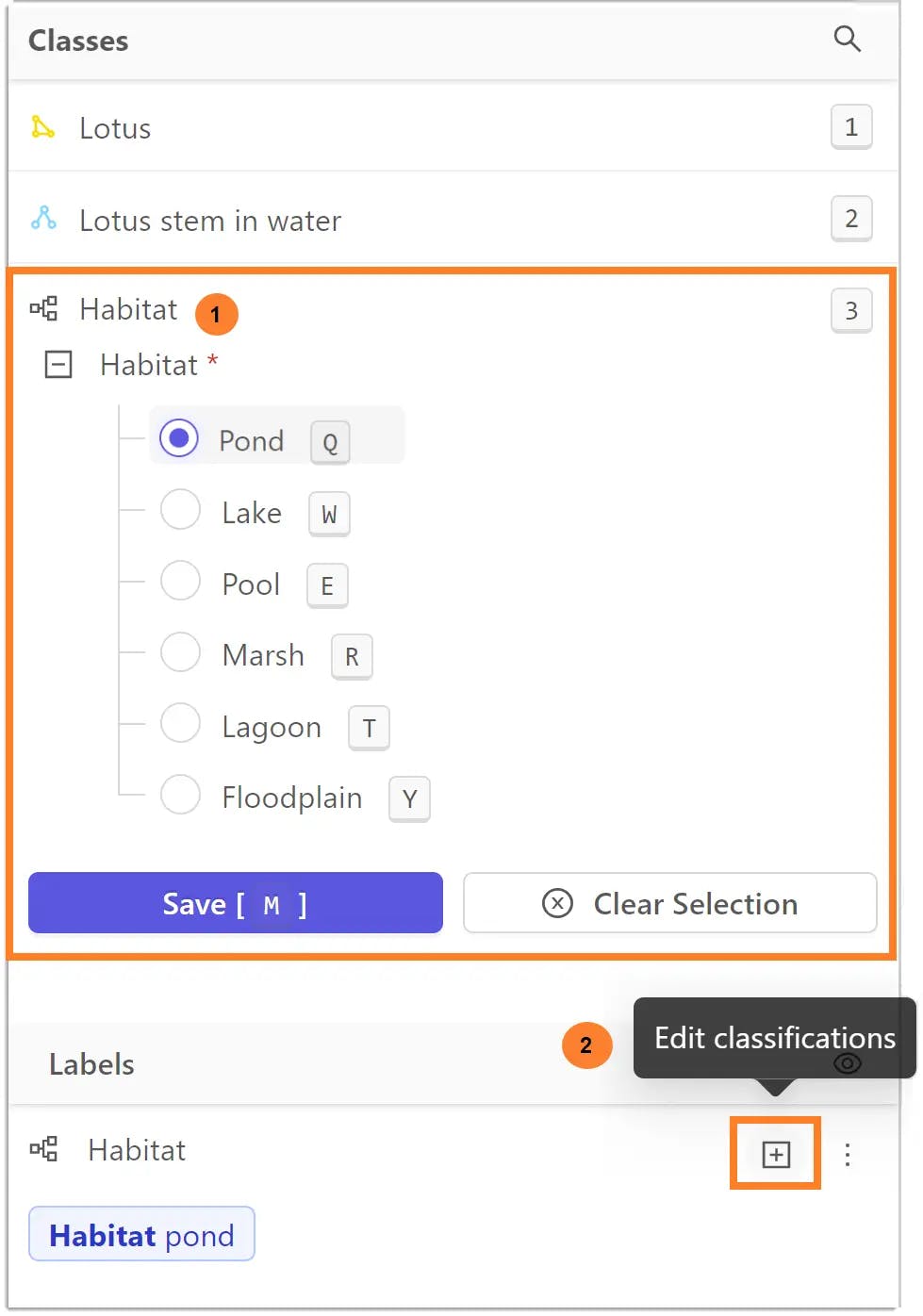

The type of dataset and the techniques you are going to use should be considered while choosing the video annotation tool. The tool should contain the following features for ease of annotation:

- Advanced video handling

- Easy-to-use annotation interface

- Dynamic and event-based classification

- Automated object tracking and interpolation

- Team and project management

To learn more about the features to look for in a video annotation tool, you can read the blog 5 features you need in a video annotation tool.

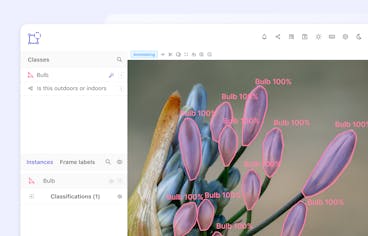

Label classifications in Encord

3. Review the annotation

Annotations should be reviewed periodically to ensure that the dataset is labeled as required. When annotating large datasets, some objects are likely to be annotated incorrectly or missed, and reviewing the annotations at regular intervals helps catch these errors. Annotation tools provide operations dashboards to incorporate review into your data pipeline, and these pipelines can be automated for continuous and elastic data delivery at scale.
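One simple way to make a review step measurable is to have a reviewer relabel a sample of frames and compare the results with the annotator's boxes. The sketch below counts an annotation as agreeing when both sides labeled the object and their boxes overlap with an IoU of at least 0.5. It also lists the disagreements to send back for fixing. The `(frame_index, track_id)` keys, the function names and the 0.5 threshold are assumptions for illustration, not a specific tool's review logic.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def review_against_reference(annotated, reference, threshold=0.5):
    """Compare annotator boxes with a reviewer's reference boxes.

    Both arguments map (frame_index, track_id) -> (x_min, y_min, x_max, y_max).
    Returns (agreement_rate, keys_to_fix); a key needs fixing if it is missing on
    either side or its two boxes overlap with IoU below `threshold`.
    """
    keys = set(annotated) | set(reference)
    to_fix = sorted(
        key for key in keys
        if key not in annotated
        or key not in reference
        or iou(annotated[key], reference[key]) < threshold
    )
    agreement = 1 - len(to_fix) / len(keys) if keys else 1.0
    return agreement, to_fix


annotated = {(0, "car-1"): (10, 10, 50, 50), (0, "car-2"): (60, 60, 100, 100),
             (1, "car-1"): (12, 10, 52, 50)}
reference = {(0, "car-1"): (10, 10, 50, 50), (0, "car-2"): (80, 80, 120, 120),
             (1, "car-1"): (12, 10, 52, 50), (1, "car-2"): (62, 62, 102, 102)}
print(review_against_reference(annotated, reference))
```

Tracking this agreement rate over time is one way to tell whether annotation quality is improving or drifting.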

Video annotation tools

There are a number of video annotation platforms available; some are paid, while others are free.

The paid annotation platforms are mainly used by machine learning and data operations teams who are working on commercial computer vision projects. In order to deal with large datasets and manage the whole ML lifecycle, you need additional support from all the tools you are using in your project. Here are some of the features Encord offers which aren’t found in free annotation tools:

- Powerful ontology features to support complex sub-classifications of your labels

- Render and annotate videos and image sequences of any length

- Support for all annotation types: boxes, polygons, polylines, keypoints and primitives.

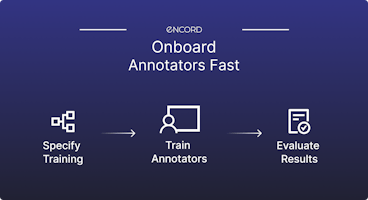

- Customizable review and annotation pipelines to monitor the performance of your annotators and automatically allocate labeling tasks

- Ability to automate annotation using Encord’s micro-model approach

There are also video annotation tools which are free. They are suitable for academics, ML enthusiasts, and students who are looking for solutions locally and have no intention of scaling the solution.

So, let’s look at a few open-source video annotation tools for labeling your data for computer vision and data science projects.

CVAT

CVAT is a free, open-source, web-based tool for labeling data for computer vision. It supports the primary tasks of supervised learning: object detection, classification and image segmentation.

Features

- Offers four basic annotation techniques: boxes, polygons, polylines and points

- Offers semi-automated annotation

- Supports interpolation of shapes between keyframes

- Web-based and collaborative

- Easy to deploy. Can be installed in a local network using Docker but is difficult to maintain as it scales

LabelMe

LabelMe is an annotation tool for digital images. It is written in Python and uses Qt for its graphical interface.

Features

- Videos should be converted into images for the annotation

- Offers basic annotation techniques: polygon, rectangle, circle, line, and point

- Image flag annotation for classification and cleaning

- Annotations are saved in JSON format, and can be converted to the VOC and COCO formats that are widely used for experimentation

Diffgram

Diffgram is an open-source platform providing annotation, catalog and workflow services to create and maintain your ML models.

Features

- Its interface offers fast annotation of high-resolution, high-frame-rate video and multiple sequences

- Annotations can be automated

- Simplified human review pipelines to increase training data and project management efficiency

- Store the dataset virtually; unlimited storage for their enterprise product

- Easy ingestion of predicted data

- Offers automated error highlighting to ease the process of debugging and fixing issues.

Best practices for video annotation

In order to use your video datasets to train a robust and precise ML model, you have to ensure that the labels on the data are accurate. Choosing the right annotation technique is important and should not be overlooked. Other than this, there are a few things to consider while annotating video data.

So, how do you annotate effectively?

For those who want to train their computer vision models, here are some tips for video annotators.

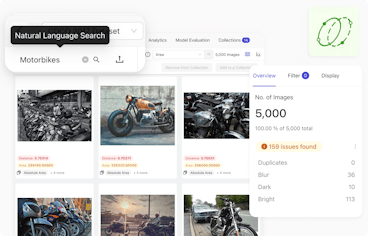

Quality of the dataset

The quality of the dataset is crucial for any ML model building. The dataset curated should be cleaned before starting the annotation process. The low quality and duplicate data should be identified and removed so that it doesn’t affect your model training adversely.
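Consecutive video frames are often nearly identical, so near-duplicates are a common source of redundant data. One widely used technique for finding them is average hashing. Each frame is shrunk to a small grayscale grid, each pixel becomes one bit depending on whether it is brighter than the mean, and frames whose bit patterns differ in only a few positions are flagged. The sketch below assumes frames have already been downscaled (for example, to 8x8) with an image library. The function names and the `max_distance` threshold are illustrative assumptions.

```python
def average_hash(gray):
    """Perceptual (average) hash of a small grayscale frame given as a list of rows.

    Each bit records whether a pixel is brighter than the frame's mean.
    """
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    return tuple(p > mean for p in pixels)


def hamming(h1, h2):
    """Number of positions where two hashes differ."""
    return sum(a != b for a, b in zip(h1, h2))


def find_near_duplicates(frames, max_distance=2):
    """Index pairs (i, j) of frames whose hashes differ by at most max_distance bits."""
    hashes = [average_hash(f) for f in frames]
    return [
        (i, j)
        for i in range(len(hashes))
        for j in range(i + 1, len(hashes))
        if hamming(hashes[i], hashes[j]) <= max_distance
    ]


frame_a = [[10, 10, 200, 200] for _ in range(4)]
frame_b = [[12, 11, 198, 205] for _ in range(4)]  # same scene, slight brightness change
frame_c = [[200, 200, 10, 10] for _ in range(4)]  # different content
print(find_near_duplicates([frame_a, frame_b, frame_c]))
```

Exact duplicates can be caught more cheaply by hashing the raw bytes, but average hashing also catches frames that differ only by noise or slight lighting changes.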

If an annotation tool is being used then you have to ensure that it uses lossless frame compression so that the tool doesn’t degrade the quality of the dataset.

Using the right labels

Annotators need to understand how the dataset will be used to train the ML model. If the project goal is object detection, objects need to be labeled with bounding boxes or another technique that captures their coordinates. If the goal is to classify objects, the class labels should be defined in advance and then applied.

Organize the labels

It is important to use customized label structures with accurate labels and metadata, so that objects are not incorrectly classified after the manual annotation work is complete. The label structures, and the classes that objects can belong to, should therefore be defined in advance.
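Defining the label structure up front also means labels can be validated automatically. The sketch below uses a small hypothetical ontology that fixes, for each class, the allowed annotation shape and attribute values. It then reports anything that does not fit. The dictionary layout, class names and function name are illustrative assumptions, not Encord's ontology format.

```python
# A hypothetical, predefined label structure: each class has one allowed shape
# and optional attributes with a fixed set of allowed values.
ONTOLOGY = {
    "car": {"shape": "bounding_box", "attributes": {"occluded": {True, False}}},
    "pedestrian": {"shape": "bounding_box",
                   "attributes": {"pose": {"standing", "walking", "sitting"}}},
    "lane": {"shape": "polyline", "attributes": {}},
}


def validate_label(label, ontology=ONTOLOGY):
    """Return a list of problems with one label dict; an empty list means it is valid."""
    spec = ontology.get(label.get("class"))
    if spec is None:
        return [f"unknown class {label.get('class')!r}"]
    problems = []
    if label.get("shape") != spec["shape"]:
        problems.append(f"class {label['class']!r} expects shape {spec['shape']!r}")
    for name, value in label.get("attributes", {}).items():
        allowed = spec["attributes"].get(name)
        if allowed is None:
            problems.append(f"unexpected attribute {name!r}")
        elif value not in allowed:
            problems.append(f"invalid value {value!r} for attribute {name!r}")
    return problems


print(validate_label({"class": "car", "shape": "bounding_box",
                      "attributes": {"occluded": True}}))
print(validate_label({"class": "pedestrian", "shape": "polygon",
                      "attributes": {"pose": "running"}}))
```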

Use of interpolation and keyframes

While annotating videos, you may come across objects that move predictably and don’t change shape throughout the video. In these cases, it is important to identify the keyframes that contain enough information to describe the object’s motion. Once you have found them, you do not need to label the whole video; you can label the keyframes and interpolate the annotations in between. This speeds up the process while maintaining quality, so the sooner you find these keyframes in your video, the faster the annotation process.
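If you already have a dense track for an object (for example, from an automatic tracker), one way to pick keyframes is to keep only the frames needed for linear interpolation to reproduce every box within a tolerance. The sketch below does this with recursive splitting in the style of the Ramer-Douglas-Peucker line-simplification algorithm. The function name, the per-coordinate pixel tolerance and the example track are illustrative assumptions.

```python
def select_keyframes(boxes, tolerance=2.0):
    """Pick keyframe indices so that linear interpolation between consecutive
    keyframes reproduces every box within `tolerance` pixels per coordinate.

    boxes: list of (x_min, y_min, x_max, y_max), one per frame.
    """
    def interpolation_error(start, end, frame):
        t = (frame - start) / (end - start)
        predicted = [a + t * (b - a) for a, b in zip(boxes[start], boxes[end])]
        return max(abs(p - actual) for p, actual in zip(predicted, boxes[frame]))

    def split(start, end):
        # Keyframes strictly between start and end.
        if end - start < 2:
            return []
        worst = max(range(start + 1, end),
                    key=lambda f: interpolation_error(start, end, f))
        if interpolation_error(start, end, worst) <= tolerance:
            return []
        return split(start, worst) + [worst] + split(worst, end)

    if len(boxes) < 2:
        return list(range(len(boxes)))
    return [0] + split(0, len(boxes) - 1) + [len(boxes) - 1]


# An object moves right 10 px per frame for 5 frames, then stops.
track = [(min(f, 5) * 10, 0, min(f, 5) * 10 + 20, 20) for f in range(11)]
print(select_keyframes(track))
```

For this track, only frames 0, 5 and 10 need to be kept as keyframes: the start, the moment the object stops, and the end.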

User-friendly video annotation tool

In order to create precise annotations which will, later on, be used for training ML models, annotators require powerful user-friendly annotation tools. The right tool would make this process easier, cost-effective and more efficient. Annotation tools offer many features which can help to make the process simpler.

For example, some tools offer auto-annotation features such as auto-segmentation. Manually annotating segmentations in video datasets is more time-consuming than labeling classes or drawing bounding boxes for object detection. With auto-segmentation, the annotator only needs to draw a rough outline over the object of interest, and the tool automatically “snaps” to the object’s contours, saving the annotator time.

Similarly, there are many features a video annotation tool has which are built to help the annotators. While choosing the tool it is also essential to look at features such as automation, which align with the goal of the annotation and would make the process more efficient.

Conclusion

Computer vision systems are built around images and videos, so if you are working on a computer vision project, it is essential to understand your data and how it was created before building the model. I’ve discussed the differences between using images and videos, and the advantages of using videos for your ML model. Then we took a deeper dive into video annotation and its techniques, and briefly discussed the tools available. Lastly, we looked at a few best practices for video annotation.

If you’re looking for an easy to use video annotation tool that offers complex ontology structures and streamlined data management dashboards, get in touch to request a trial of Encord.


Related blogs

The Python Developer's Toolkit for PDF Processing

PDFs (Portable Document Format) are a ubiquitous part of our digital lives, from eBooks and research papers to invoices and contracts. For developers, automating PDF processing can save time and boost productivity.

🔥Fun Fact: While PDFs may appear to contain well-structured text, they do not inherently include paragraphs, sentences, or even words. Instead, a PDF file is only aware of individual characters and their placement on the page.🔥

This characteristic makes extracting meaningful text from PDFs challenging. The characters forming a paragraph are indistinguishable from those in tables, footers, or figure descriptions. Unlike formats such as .txt files or Word documents, PDFs do not contain a continuous stream of text. A PDF document is composed of a collection of objects that collectively describe the appearance of one or more pages. These may include interactive elements and higher-level application data. The file itself contains these objects along with associated structural information, all encapsulated in a single self-contained sequence of bytes.

In this comprehensive guide, we’ll explore how to process PDFs in Python using various libraries. We’ll cover tasks such as reading, extracting text and metadata, creating, merging, and splitting PDFs.

Prerequisites

Before diving into the code, ensure you have the following:

- Python installed on your system

- Basic understanding of Python programming

- Required libraries: PyPDF2, pdfminer.six, ReportLab, and PyMuPDF (fitz)

You can install these libraries using pip:

```
pip install PyPDF2 pdfminer.six reportlab PyMuPDF
```

Reading PDFs with PyPDF2

PyPDF2 is a pure-Python library used for splitting, merging, cropping, and transforming pages of PDF files. It can also add custom data, viewing options, and passwords to PDF files.
Code Example

Here we are reading a PDF and extracting text from it:

```python
import PyPDF2

def extract_text_from_pdf(file_path):
    with open(file_path, 'rb') as file:
        reader = PyPDF2.PdfReader(file)
        text = ''
        # Concatenate the extracted text of every page
        for page in reader.pages:
            text += page.extract_text()
    return text

# Usage
file_path = 'sample.pdf'
print(extract_text_from_pdf(file_path))
```

Extracting Text and Metadata with pdfminer.six

pdfminer.six is a tool for extracting information from PDF documents, focusing on getting and analyzing the text data.

Code Example

Here’s how to extract text and metadata from a PDF:

```python
from pdfminer.high_level import extract_text
from pdfminer.pdfparser import PDFParser
from pdfminer.pdfdocument import PDFDocument

def extract_text_with_pdfminer(file_path):
    return extract_text(file_path)

def extract_metadata(file_path):
    with open(file_path, 'rb') as file:
        parser = PDFParser(file)
        doc = PDFDocument(parser)
        # doc.info is a list of metadata dictionaries and may be empty
        return doc.info[0] if doc.info else {}

# Usage
file_path = 'sample.pdf'
print(extract_text_with_pdfminer(file_path))
print(extract_metadata(file_path))
```

Creating and Modifying PDFs with ReportLab

ReportLab is a robust library for creating PDFs from scratch, allowing for the addition of various elements like text, images, and graphics.

Code Example

To create a simple PDF:

```python
from reportlab.lib.pagesizes import letter
from reportlab.pdfgen import canvas

def create_pdf(file_path):
    c = canvas.Canvas(file_path, pagesize=letter)
    # Coordinates are in points, measured from the bottom-left corner of the page
    c.drawString(100, 750, "Hello from Encord!")
    c.save()

# Usage
create_pdf("test.pdf")
```

To modify an existing PDF, you can use PyPDF2 in conjunction with ReportLab.
Manipulating PDFs with PyPDF2

Code Example for Merging PDFs

```python
from PyPDF2 import PdfMerger

def merge_pdfs(pdf_list, output_path):
    merger = PdfMerger()
    for pdf in pdf_list:
        merger.append(pdf)
    merger.write(output_path)
    merger.close()

# Usage
pdf_list = ['file1.pdf', 'file2.pdf']
merge_pdfs(pdf_list, 'merged.pdf')
```

Code Example for Splitting PDFs

```python
from PyPDF2 import PdfReader, PdfWriter

def split_pdf(input_path, start_page, end_page, output_path):
    reader = PdfReader(input_path)
    writer = PdfWriter()
    # Pages are zero-indexed and end_page is exclusive
    for page_num in range(start_page, end_page):
        writer.add_page(reader.pages[page_num])
    with open(output_path, 'wb') as output_pdf:
        writer.write(output_pdf)

# Usage: write the first two pages to a new file
split_pdf('merged.pdf', 0, 2, 'split_output.pdf')
```

Code Example for Rotating Pages

```python
from PyPDF2 import PdfReader, PdfWriter

def rotate_pdf(input_path, output_path, rotation_degrees=90):
    reader = PdfReader(input_path)
    writer = PdfWriter()
    for page in reader.pages:
        # The rotation angle must be a multiple of 90 degrees
        page.rotate(rotation_degrees)
        writer.add_page(page)
    with open(output_path, 'wb') as output_pdf:
        writer.write(output_pdf)

# Usage
input_path = 'input.pdf'
output_path = 'rotated_output.pdf'
rotate_pdf(input_path, output_path, 90)
```

Extracting Images from PDFs using PyMuPDF (fitz)

PyMuPDF (also known as fitz) allows for advanced operations like extracting images from PDFs.
Code Example

Here is how to extract images from PDFs:

```python
import fitz  # PyMuPDF

def extract_images(file_path):
    pdf_document = fitz.open(file_path)
    for page_num in range(len(pdf_document)):
        page = pdf_document.load_page(page_num)
        images = page.get_images(full=True)
        for image_index, img in enumerate(images):
            xref = img[0]  # cross-reference number of the image object
            base_image = pdf_document.extract_image(xref)
            image_bytes = base_image["image"]
            image_ext = base_image["ext"]
            with open(f"image{page_num+1}_{image_index}.{image_ext}", "wb") as image_file:
                image_file.write(image_bytes)

# Usage
extract_images('sample.pdf')
```

If you're extracting images from PDFs to build a dataset for your computer vision model, be sure to explore Encord, a comprehensive data development platform designed for computer vision and multimodal AI teams.

Conclusion

Python provides a powerful toolkit for PDF processing, enabling developers to perform a wide range of tasks from basic text extraction to complex document manipulation. Libraries like PyPDF2, pdfminer.six, and PyMuPDF offer complementary features that cover most PDF processing needs.

When choosing a library, consider the specific requirements of your project. PyPDF2 is great for basic operations, pdfminer.six excels at text extraction, and PyMuPDF offers a comprehensive set of features including image extraction and table detection.

As you get deeper into PDF processing with Python, explore the official documentation of these libraries for more advanced features and optimizations (I have linked them in this blog!). Remember to handle exceptions and edge cases, especially when dealing with large or complex PDF files.

Jul 17 2024


Video Data Curation Guide for Computer Vision Teams

Video data curation in computer vision shares similarities with the meticulous editing process of a film director, where each frame is carefully chosen to create a compelling narrative. Much like a director crafts a story, video data curation involves collecting, organizing, and preparing raw video data to optimize the training and performance of machine learning models.

For example, well-curated dashcam footage is essential for training self-driving car models to accurately detect pedestrians, vehicles, road signs, and other objects. Conversely, models trained on poorly curated data can exhibit biases and blind spots that compromise their real-world performance. This process goes beyond ensuring data quality; it directly impacts the accuracy and efficiency of models designed for facial recognition, object detection, and automated video tagging.

This article is a comprehensive guide to curating video data (selecting representative frames, accurately annotating objects, and ensuring balanced datasets) to set the stage for building quality training data for high-performance computer vision models.

Importance of Video Data Curation in Computer Vision

The significance of video data curation in computer vision (CV) cannot be overstated. With the exponential growth in video data fueled by advancements in digital technology and the proliferation of video content platforms, effectively managing this data becomes crucial. Data curation helps improve model performance by ensuring that the data used for training ML algorithms is high-quality, well-annotated, and representative of diverse scenarios and environments.

For instance, consider a self-driving car that fails to detect pedestrians in low-light conditions because its training data lacks sufficient nighttime footage. This example highlights the critical role of data curation in ensuring the robustness and reliability of computer vision applications.
Curation involves various techniques, such as selecting the most relevant and informative video frames, annotating these frames with accurate labels, and organizing the data to facilitate efficient processing and analysis. It helps reduce noise in the data, such as irrelevant frames or poorly labeled information, leading to better model accuracy and robustness.

Furthermore, data curation optimizes data for specific computational models and applications. For instance, embeddings (numerical representations of videos that capture their semantic content) can be generated and used with clustering or nearest neighbor search to group similar videos by content. This approach not only aids efficient data retrieval and handling but also improves training by grouping similar instances.

Systematic data curation addresses data diversity, volume, and annotation issues, which makes it essential to CV projects.

Advantages of Video Data Curation

Video data curation offers numerous advantages that improve the development and deployment of robust CV models. Here are some of the key benefits:

Improved Model Performance: Carefully curated data, free from errors and inconsistencies, leads to more accurate and reliable models.

Reduced Training Time: Selecting only relevant, high-quality data makes the training process more efficient, saving time and resources.

Enhanced Generalization: Curation ensures that the data represents a wide range of scenarios, environments, and edge cases, improving the model's ability to generalize to new, unseen data.

Increased Reproducibility: Well-documented curation processes make it easier to reproduce and validate results, promoting transparency and trust in the research.
Cost Savings: Identifying and eliminating low-quality or irrelevant data early in the process helps avoid costly mistakes and rework later on.

Components of Video Curation

Video data curation encompasses various techniques to enhance the quality, organization, and accessibility of video data. Here are some of the key components:

Figure: Different techniques for video curation

Analyzing Motion

Scene Cut Detection: Identifying transitions between scenes or shots in a video is crucial for summarization and indexing tasks. Methods like frame differencing (calculating pixel-by-pixel differences between consecutive frames), histogram analysis (detecting changes in visual content by comparing color histograms), or ML models (learning the patterns that signal a cut) can achieve this.

Optical Flow: This technique estimates the apparent motion of objects, surfaces, or edges between consecutive frames. It helps identify and track moving objects, distinguish between static and dynamic scenes, and segment content for further analysis or editing.

Figure: RAFT model, optical flow using deep learning

Detecting scene cuts remains challenging due to motion blur, compression artifacts, and intricate editing techniques that seamlessly blend scenes. However, advances in algorithms and computational capabilities are gradually mitigating these issues, improving the reliability of scene cut detection.

Enriching Content

Synthetic Captioning: Generating textual descriptions of video content is essential for accessibility and content retrieval. Modern models like CoCa and VideoBLIP can automatically generate captions summarizing a video's visual content.

Text Overlay Detection (OCR): Optical Character Recognition (OCR) technology is used to identify and extract text that appears over videos, such as subtitles, credits, or annotations.
This information can be used for indexing, searching, and content management.

Assessing Relevance

CLIP-based Scoring: The CLIP model, developed by OpenAI, can assess the relevance of video content to textual descriptions. This technique is valuable for content retrieval and recommendation systems, ensuring videos align with user queries or textual prompts, and it can greatly improve the user experience on platforms that rely heavily on content discovery.

While powerful, CLIP-based scoring faces challenges, such as the substantial computational resources the model requires, especially when processing large volumes of video data. These models also need ongoing refinement to handle diverse and nuanced video content effectively.

CLIP can also be applied to more complex video tasks, such as action classification and recognition across different environments, including recognizing activities that were not part of its training data. This adaptability makes CLIP-based scoring a robust tool for video analytics across varied applications.

Video Data Curation Process

Video data curation for computer vision involves several critical steps, each contributing to effectively managing, annotating, and storing video data. This process ensures that the data is not only accessible but also ready for developing and training machine learning models. Here's a detailed look at each step in the video data curation process.
Figure: Video Data Curation Process

Video Selection and Acquisition

The first step in video data curation is selecting and acquiring relevant content. This involves identifying and collecting video data from various sources that align with the specific objectives of a computer vision project.

For instance, Encord allows you to ingest data by integrating different cloud platforms, using the SDK to upload data programmatically, or importing data from local storage through the UI. Here is how you can import video datasets from your local storage to Index, the data management component of Encord:

Figure: Encord Index walkthrough: uploading local data

Data Management

Effective data management is crucial for handling large volumes of video data and facilitating team collaboration. Encord's platform provides comprehensive tools to optimize these processes, including:

Dataset Versioning: Seamlessly manage changes and iterations of video datasets.

Advanced Filtering: Enhance searchability and retrievability of specific data points.

Tagging: Categorize and organize video data for better structure and navigation.

These features ensure that large video datasets remain manageable, accessible, and conducive to data-driven decision-making and CV workflows.

Figure: Encord Index walkthrough: Add files to the dataset

Data Annotation and Labeling

Data annotation involves labeling and categorizing content within video frames, preparing the data for computer vision applications. Tools like Encord Annotate support various annotation types, such as:

Bounding Boxes: Defining the location and extent of objects in a frame.

Polygons: Outlining the precise shape of objects.

Key Points: Marking specific points of interest, such as facial landmarks.

By adding this metadata to video frames, annotation makes the data interpretable for computer vision models, enhancing the accuracy of tasks like object detection and tracking.
For example, annotated video data can enable an autonomous vehicle to accurately identify and locate pedestrians, vehicles, and road signs in real time.

Here's a walkthrough of how Index natively integrates with Annotate to create a Project to annotate the dataset:

Figure: Encord Index integrates natively with Encord Annotate

Encord's automated labeling features (e.g., using SAM, object tracking, and auto-segmentation tracking) speed up your annotation. And with Active (soon coming to Index), you can pre-label data with ML-assisted algorithms. This is especially valuable for tasks like image segmentation and object detection, where these tools can automatically infer complex shapes from simple user interactions.

Data Storage

Managing the large file sizes associated with high-quality video content requires robust, scalable storage solutions. Encord Index is a data lake designed to meet the extensive data preservation needs of video. Encord generally offers large-capacity storage options that accommodate current volumes and scale to meet future demands, along with efficient retrieval when needed. See our best practices documentation for guidelines on preserving and using your data on Encord.

Data Permissions and Access Control

Ensuring the security of sensitive video data is paramount, which requires strict control over who can access it. Data management platforms often include tools for setting granular user roles and permissions and for encrypting data to maintain privacy. Encord provides robust user management capabilities that allow detailed access control, helping safeguard data against unauthorized access.

Figure: Encord Index walkthrough: Data permissions and access control

By following these key steps in video data curation, organizations can ensure that their video datasets are well-organized, securely stored, and optimally prepared for developing cutting-edge computer vision applications.
A well-designed curation workflow enables the creation of accurate, robust models that can drive significant value across industries and use cases.

Factors to Consider for Effective Video Curation

Effective video curation is a multifaceted process that requires careful consideration of several key factors: descriptive metadata, long-term accessible formats, copyright and permissions, data volume, video format, and software compatibility. By addressing these factors holistically, curators can ensure that video content is well-managed, easily discoverable, and preserved for future use.

Descriptive Metadata

Descriptive metadata plays a crucial role in video curation by enhancing the searchability and discoverability of video content. It includes information that describes video assets for identification and discovery, such as:

Unique Identifiers: Alphanumeric codes that uniquely identify each video asset.

Physical/Technical Attributes: Format, duration, resolution, codec, etc.

Bibliographic Attributes: Title, creator, subject, keywords, description, etc.

Effective metadata management, including controlled vocabularies and metadata standards, ensures consistency and interoperability across systems, which makes video content easily retrievable and usable.

Figure: Encord Index walkthrough: Descriptive metadata

Long-term Accessible Video Formats

It is vital to select the right video formats for long-term accessibility:

Choose video formats known for stability and longevity (e.g., MOV, WebM, MPEG-4 with the H.264 codec).

Consider uncompressed or losslessly compressed formats for archival purposes.

Avoid proprietary formats that may become obsolete.

Copyright and Permissions

Navigating copyright and permissions is a significant aspect of video curation.
It involves understanding the legal framework around video content, including copyright laws, fair use provisions, and licensing agreements. Curators must ensure that video content is used and distributed within legal boundaries, which often requires permissions or licenses from copyright holders. For example, a curator might need a commercial use license from the copyright owner before including a video clip in a monetized online course.

Data Volume

The sheer volume of video data presents storage, management, and retrieval challenges. Curators must implement strategies to handle large datasets efficiently, such as using data curation tools for categorization, tagging, and indexing. Cloud storage solutions can also provide scalable, cost-effective options for managing growing video collections. Effective data volume management keeps video content organized and accessible.

Video Format

The choice of video format affects the quality, compatibility, and preservation of video content. Curators must consider factors like compression, bit rates, and codecs when selecting formats. Using formats that balance quality with file size and compatibility is crucial for effective video curation.

Compatibility with the Existing Software Ecosystem

Ensuring compatibility with the existing software ecosystem is essential for seamless video curation workflows. This includes compatibility with video editing tools, digital asset management (DAM) systems, and archival software. Curators must select video formats and curation tools that integrate well with the organization's existing software infrastructure.

By carefully evaluating and addressing these key factors, curators can develop robust video curation strategies that optimize the value and longevity of their video assets.
Effective video curation not only ensures the preservation and accessibility of video content but also unlocks its potential for reuse and repurposing in various contexts, from research and education to creative production and cultural heritage.

Conclusion

Video data curation is indispensable in computer vision, ensuring that video data is well-prepared for training accurate and efficient models. Key takeaways include:

1. Significance: Curation enhances model performance by improving data quality, reducing noise, and optimizing data for specific tasks.

2. Process: Curation involves video selection, data management, annotation and labeling, storage, and access control.

3. Techniques: Techniques such as scene cut detection, optical flow, synthetic captioning, text overlay detection with OCR, and CLIP-based relevance scoring play crucial roles in enriching and organizing video data.

4. Considerations: Factors like descriptive metadata, long-term accessible formats, copyright, data volume, video format, and software compatibility are essential for successful curation.

Understanding and applying these principles can unlock the full potential of video data for computer vision applications. Effective curation streamlines the development of robust models and ensures the long-term preservation and accessibility of valuable video assets.
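Of the techniques summarized in the takeaways, scene cut detection is the simplest to illustrate. The sketch below is a minimal, hypothetical implementation of the two classical approaches, frame differencing and histogram comparison, using only NumPy. It assumes grayscale frames given as 2-D arrays with values from 0 to 255; the function names and thresholds are illustrative choices rather than part of Encord or any specific library, and real footage would need tuned thresholds (or a learned model) to cope with motion blur and gradual transitions.

```python
import numpy as np


def frame_difference_scores(frames):
    """Mean absolute pixel difference between each pair of consecutive frames."""
    stack = np.asarray(frames, dtype=np.float64)
    diffs = np.abs(np.diff(stack, axis=0))
    # Average over every axis except the frame axis (height, width, and any channels).
    return diffs.mean(axis=tuple(range(1, diffs.ndim)))


def histogram_difference_scores(frames, bins=32):
    """L1 distance between normalized intensity histograms of consecutive frames (range 0 to 2)."""
    hists = []
    for frame in frames:
        counts, _ = np.histogram(frame, bins=bins, range=(0, 256))
        hists.append(counts / counts.sum())
    hists = np.array(hists)
    return np.abs(np.diff(hists, axis=0)).sum(axis=1)


def detect_cuts(scores, threshold):
    """Indices of frames that start a new shot (scores[i] compares frames i and i + 1)."""
    return [i + 1 for i, score in enumerate(scores) if score > threshold]


# Usage: a synthetic clip with five dark frames followed by five bright frames.
rng = np.random.default_rng(0)
dark = [np.clip(20 + rng.normal(0, 2, (8, 8)), 0, 255) for _ in range(5)]
bright = [np.clip(200 + rng.normal(0, 2, (8, 8)), 0, 255) for _ in range(5)]
clip = dark + bright

print(detect_cuts(frame_difference_scores(clip), threshold=30.0))  # [5]
print(detect_cuts(histogram_difference_scores(clip), threshold=1.0))  # [5]
```

Frame differencing reacts to any large pixel change, including fast camera motion, while histogram comparison ignores where pixels moved and only tracks the overall intensity distribution, which is why the two are often combined.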

Jun 04 2024


Dataset Distillation: Algorithm, Methods and Applications

As the world becomes more connected through digital platforms and smart devices, a flood of data is straining organizations' ability to comprehend it and extract the relevant information for sound decision-making. In 2023 alone, users generated 120 zettabytes of data, and reports project the volume to approach 181 zettabytes by 2025.

While artificial intelligence (AI) is helping organizations leverage data for valuable insights, the ever-increasing volume and variety of data require more sophisticated AI systems that can process real-time data. However, such real-time systems are challenging to deploy because extensive data points stream constantly from multiple sources.

Several solutions are emerging to deal with large data volumes. Dataset distillation is a promising one: it transfers the knowledge in a large dataset into a few synthetic data points, so that a model trained on those few samples can approach the performance of one trained on the full data.

This article discusses dataset distillation, its methods, algorithms, and applications in detail to help you understand this new and exciting paradigm for model development.

What is Dataset Distillation?

Dataset distillation is a technique that compresses the knowledge of large-scale datasets into smaller, synthetic datasets, allowing models to be trained with less data while achieving performance similar to models trained on the full datasets.

This approach was proposed by Wang et al. (2020), who distilled the 60,000 training images of the MNIST dataset into a small set of synthetic images, achieving 94% accuracy on the LeNet architecture.

The idea builds on Geoffrey Hinton's knowledge distillation method, in which a sophisticated teacher model transfers knowledge to a less sophisticated student model. However, unlike knowledge distillation, which focuses on reducing model complexity, dataset distillation reduces the size of the training dataset while preserving the key features needed for model training.
A notable example by Wang et al. involved compressing the MNIST dataset into a distilled dataset of ten images, demonstrating that models trained on this reduced dataset achieved performance similar to those trained on the full set. This makes dataset distillation a good option when storage or computational resources are limited.

Dataset distillation differs from core-set or instance selection, where a subset of data samples is chosen using heuristics or active learning. While core-set selection also aims to reduce dataset size, it may lead to suboptimal results because its reliance on heuristics can overlook key patterns. Dataset distillation, by contrast, creates a smaller dataset that retains critical information, offering a more efficient and reliable approach to model training.

Benefits of Dataset Distillation

The primary advantage of dataset distillation is its ability to encapsulate the knowledge and patterns of a large dataset in a smaller, synthetic one, which dramatically reduces the number of samples required for effective model training. This provides several key benefits:

Efficient Training: Dataset distillation streamlines the training process, allowing data scientists and model developers to optimize models with fewer training samples. This reduces the computational load and accelerates training compared to using the full dataset.

Cost-effectiveness: The reduced size of distilled data leads to lower storage costs and fewer computational resources during training. This can be especially valuable for organizations with limited resources or those needing scalable solutions.

Better Security and Privacy: Since distilled datasets are synthetic, they do not directly contain the sensitive or personally identifiable records of the original data. This significantly reduces the risk of data breaches and privacy concerns, providing a safer environment for model training.
Faster Experimentation: The smaller size of distilled datasets allows for rapid experimentation and model testing. Researchers can quickly iterate over different model configurations and test scenarios, speeding up the model development cycle and reducing time to market.

Dataset Distillation Methods

Multiple algorithms exist to generate synthetic examples from large datasets. Below, we discuss the four main methods used for distilling data: performance matching, parameter matching, distribution matching, and generative techniques.

Performance Matching

Performance matching optimizes a synthetic dataset so that training a model on it yields the same performance as training on the larger original dataset. The method by Wang et al. (2020) is an example of performance matching.

Parameter Matching

Zhao et al. (2021) first introduced the idea of parameter matching for dataset distillation. The method trains a single network on both the original and the distilled dataset and optimizes the distilled data so that the network's training parameters stay consistent across the two during training.

Distribution Matching

Distribution matching creates synthetic data with statistical properties similar to those of the original dataset. This method uses metrics like Maximum Mean Discrepancy (MMD) or Kullback-Leibler (KL) divergence to measure the distance between data distributions and optimizes the synthetic data accordingly. By aligning distributions, it ensures that the synthetic dataset retains the key statistical patterns of the original data.

Generative Methods

Generative methods train generative adversarial networks (GANs) to generate synthetic datasets that resemble the original data. The technique involves training a generator to obtain latent factors or embeddings that resemble those of the original dataset.
Additionally, this approach benefits storage and resource efficiency, as users can generate synthetic data on demand from latent factors or embeddings.

Dataset Distillation Algorithm

While the above methods broadly categorize the approaches used for dataset condensation, multiple learning algorithms exist within each approach to obtain distilled data. Below, we discuss eight algorithms for distilling data and the categories to which they belong.

1. Meta-learning-based Method

The meta-learning-based method belongs to the performance-matching category. It treats the pixels of the synthetic samples as learnable parameters and minimizes a loss, such as cross-entropy, that measures how well a model trained on the synthetic samples performs on the original data.

The algorithm uses bi-level optimization. An inner loop takes a single gradient-descent step on the model using the distilled dataset, and an outer loop evaluates the updated model on the original data to compute a loss.

It starts by initializing a random set of distilled samples and a learning rate hyperparameter. It also samples a random set of initial model parameters from a probability distribution.

After updating the parameter set with a single gradient-descent step on the distilled samples, the algorithm evaluates the updated parameters on the original dataset to compute the validation loss. The process repeats for multiple training steps, backpropagating the loss through the update step to refine the distilled dataset.

For a linear loss function, Wang et al. (2020) show that the number of distilled data samples should at least equal the number of features of a single sample in the original dataset to obtain optimal results. In computer vision (CV), where the features are each image's pixels, this implies that the number of distilled images should be at least the number of pixels in a single image.

Zhao et al. (2021) also demonstrate how to improve generalization performance using a Differentiable Siamese Augmentation (DSA) technique. The method applies crop, cutout, flip, scale, rotate, and color jitter transformations to raw data before using it to synthesize new samples.

2. Kernel Ridge Regression-Based Methods

The meta-learning-based method can be inefficient because it backpropagates errors through the entire inner-loop training process. This makes the technique difficult to scale, since the outer loop optimization step requires significant GPU memory.

The alternative is kernel ridge regression (KRR), which replaces the inner loop with a closed-form kernel ridge regression solution. This turns the inner problem into a convex one and avoids iterative inner loop optimization.

The method uses the neural tangent kernel (NTK) to optimize the distilled dataset. The NTK is a kernel that describes how a neural network's outputs evolve as its parameters are trained by gradient descent. In the infinite-width limit, the NTK stays constant during training, so it characterizes the network's behavior throughout training and at convergence.

Since the NTK captures the training dynamics of wide neural nets, the dataset distilled using the NTK is more robust and approximates the original dataset more accurately.

3. Single-step Parameter Matching

In single-step parameter matching, also called gradient matching, a network is trained on the distilled and original datasets for a single step. The method then matches the resulting gradients, pushing the distilled data to closely mimic the original samples.

Figure: Single-step parameter matching

After the distilled dataset is updated based on a single training step, the network re-trains on the updated distilled data to regenerate gradients. Using a suitable similarity metric, a loss function computes the distance between the gradients from the distilled and original datasets.

Lee et al. (2022) improve the method by developing a loss function that learns class-discriminative features. They average the gradients over all classes to measure distance.
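To make the gradient-matching idea concrete, here is a minimal NumPy sketch. It is not the implementation from Zhao et al. (2021) or Lee et al. (2022): as a simplifying assumption, it swaps the neural network for a linear regression model with a squared-error loss so the gradients can be written by hand, uses a squared Euclidean distance between gradients, and keeps hypothetical random synthetic targets fixed while learning only the synthetic inputs. Practical implementations typically use a neural network, automatic differentiation, and a layer-wise distance. As in gradient matching, fresh model weights are sampled at every step so the synthetic data must reproduce the real gradients across many model states.

```python
import numpy as np


def linreg_grad(X, y, w):
    """Gradient of the loss 0.5 * mean((X @ w - y) ** 2) with respect to the weights w."""
    return X.T @ (X @ w - y) / len(y)


def matching_loss_and_grad(X_syn, y_syn, X_real, y_real, w):
    """Distance ||g_syn - g_real||^2 between weight gradients, and its gradient w.r.t. X_syn."""
    n = len(y_syn)
    e = linreg_grad(X_syn, y_syn, w) - linreg_grad(X_real, y_real, w)
    r = X_syn @ w - y_syn  # residuals of the model on the synthetic data
    # Chain rule through g_syn = X_syn.T @ (X_syn @ w - y_syn) / n
    grad_X = (2.0 / n) * (np.outer(r, e) + np.outer(X_syn @ e, w))
    return float(e @ e), grad_X


def distill_by_gradient_matching(X_real, y_real, n_syn=10, steps=2000, lr=0.01, seed=0):
    """Learn n_syn synthetic inputs whose training gradients mimic those of the real data."""
    rng = np.random.default_rng(seed)
    d = X_real.shape[1]
    X_syn = rng.normal(size=(n_syn, d))
    y_syn = rng.normal(size=n_syn)  # hypothetical choice: fixed random targets
    for _ in range(steps):
        w = rng.normal(size=d)  # a freshly sampled "model" at every step
        _, grad_X = matching_loss_and_grad(X_syn, y_syn, X_real, y_real, w)
        X_syn = X_syn - lr * grad_X
    return X_syn, y_syn


# Usage: distill 1,000 samples of a 5-feature regression problem into 10 synthetic ones.
rng = np.random.default_rng(1)
X_real = rng.normal(size=(1000, 5))
y_real = X_real @ np.array([1.0, -2.0, 0.5, 0.0, 3.0]) + 0.1 * rng.normal(size=1000)
X_syn, y_syn = distill_by_gradient_matching(X_real, y_real)
print(X_syn.shape)  # (10, 5)
```

In practice, you would then train the model of interest on `(X_syn, y_syn)` and compare it with a model trained on the full data to judge how much of the original information survived distillation.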
A common problem with gradient matching is that a network's parameters tend to overfit the synthetic data because of its small size. Kim et al. (2022) propose a solution that performs the optimization using a network trained on the original dataset: the method first trains a network on the larger original dataset and then performs gradient matching using synthetic data.

Zhang et al. (2022) also use model augmentation to create a pool of models with weight perturbations. They distill data using multiple models from the pool to obtain a highly generalized synthetic dataset with only a few optimization steps.

4. Multi-step Parameter Matching

Multi-step parameter matching, also called matching training trajectories (MTT), trains a network on the synthetic and original datasets for multiple steps and matches the final parameter sets. The method improves on single-step parameter matching, which ignores errors that can accumulate later in training when the network trains on synthetic data. By minimizing the loss between the end results, MTT promotes consistency throughout the entire training process.

Figure: MTT

It also includes a normalization step, which improves performance by ensuring that the magnitude of the parameters across different neurons during later training epochs does not dominate the similarity computation. One improvement removes parameters that are difficult to match from the loss function when the similarity between the parameters of the original and distilled datasets falls below a certain threshold.

5. Single-layer Distribution Matching

Single-layer distribution matching optimizes a distilled dataset by ensuring the embeddings of the synthetic and original datasets are close. The method uses the embeddings generated by the last linear layer before the output layer and minimizes a metric measuring the distance between the embedding distributions.
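One simple way to measure the distance between embedding distributions is to compare each class's mean embedding. The NumPy sketch below illustrates that idea under stated assumptions rather than reproducing any paper's implementation: a single randomly initialized tanh layer stands in for the network's embedding layer (the `embed` function is hypothetical), a fresh embedder is sampled at every step, and the loss is the squared distance between the mean embeddings of the real and synthetic samples of a class, which equals the squared MMD with a linear kernel on the embeddings.

```python
import numpy as np


def embed(X, W):
    """Hypothetical embedder: one tanh layer standing in for a network's penultimate layer."""
    return np.tanh(X @ W)


def class_mean_loss_and_grad(X_syn, X_real, W):
    """Squared distance between mean embeddings of one class, and its gradient w.r.t. X_syn."""
    H_syn = embed(X_syn, W)
    diff = H_syn.mean(axis=0) - embed(X_real, W).mean(axis=0)
    # d tanh(z)/dz = 1 - tanh(z)^2; averaging over n_syn rows contributes the 1/n_syn factor
    grad = (2.0 / len(X_syn)) * ((diff[None, :] * (1.0 - H_syn ** 2)) @ W.T)
    return float(diff @ diff), grad


def distill_by_distribution_matching(real_by_class, n_syn=5, embed_dim=32, steps=500, lr=0.1, seed=0):
    """Learn n_syn synthetic samples per class whose mean embeddings match the real ones."""
    rng = np.random.default_rng(seed)
    d = next(iter(real_by_class.values())).shape[1]
    syn = {c: rng.normal(size=(n_syn, d)) for c in real_by_class}
    for _ in range(steps):
        # A fresh random embedder each step, so the match must hold across many embeddings.
        W = rng.normal(size=(d, embed_dim)) / np.sqrt(d)
        for c, X_real in real_by_class.items():
            _, grad = class_mean_loss_and_grad(syn[c], X_real, W)
            syn[c] = syn[c] - lr * grad
    return syn


# Usage: two Gaussian classes in 5-D, 300 samples each, distilled to 5 samples per class.
rng = np.random.default_rng(1)
real = {0: rng.normal(loc=-1.0, size=(300, 5)), 1: rng.normal(loc=1.0, size=(300, 5))}
synthetic = distill_by_distribution_matching(real)
print({c: X.shape for c, X in synthetic.items()})  # {0: (5, 5), 1: (5, 5)}
```

Because each class is matched independently and no bi-level optimization is involved, this style of method is usually much cheaper per step than the meta-learning or trajectory-matching approaches.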
Figure: Single-layer Distribution Matching

Using the mean vector of embeddings for each class is a straightforward way to ensure that synthetic data retains the distributional features of the original dataset.

6. Multi-layer Distribution Matching

Multi-layer distribution matching extends the single-layer approach by extracting features from real and synthetic data at each layer of a neural network except the last. The objective is to match features in each layer for a more robust representation.

In addition, the technique uses another classifier function to learn discriminative features between different classes. The objective is to maximize the probability of correctly detecting a specific class based on the actual data sample, synthetic sample, and mean class embedding. The technique combines this discriminative loss with the loss from the distance function to compute an overall loss for updating the synthetic dataset.

7. GAN Inversion

Zhao et al. (2022) use GAN inversion to obtain latent factors from the real dataset and use these latent features to generate synthetic data samples.

Figure: GANs

The generator used for GAN inversion is a pre-trained network that the researchers initialize using the latent set representing real images. Next, a feature extractor network computes the relevant features of real images and of synthetic samples created by the generator network. Optimization then minimizes the distance between the features of real and synthetic images to train the generator network.

8. Synthetic Data Parameterization

Parameterizing synthetic data helps users store data more efficiently without losing information from the original data. The problem arises when synthetic data is stored in its raw format: if storage capacity is limited and the synthetic dataset is relatively large, keeping it raw can be inefficient, while storing only a few synthetic samples may result in information loss.
Figure: Synthetic Data Parameterization

The solution is to convert a sufficient number of synthetic data samples into latent features using a learnable differentiable function. Once learned, the function lets users regenerate synthetic samples without storing a large synthetic dataset.

Deng et al. (2022) propose Addressing Matrices that learn representative features of all classes in a dataset. Each row of the matrix corresponds to the features of a particular class. Users can extract a class-specific feature from the matrix and learn a mapping function that converts the features into a synthetic sample. They can then store the matrix and the mapping function instead of the actual samples.

Performance Comparison of Data Distillation Methods

Liu et al. (2023) report a comprehensive performance analysis of different data distillation methods on multiple benchmark datasets. The table below reports their results.

Table: Performance results

DD refers to the meta-learning-based algorithm, DC is dataset condensation through gradient matching, DSA is differentiable Siamese augmentation, DM is distribution matching, MTT is matching training trajectories, and FRePO is Feature Regression with Pooling, which falls under KRR.

FRePO performs strongly on MNIST and Fashion-MNIST and achieves state-of-the-art performance on CIFAR-10, CIFAR-100, and Tiny-ImageNet.

Dataset Distillation Applications

Since dataset distillation shrinks the data while preserving training performance, it helps with multiple computationally intensive tasks. Below, we discuss seven use cases for dataset distillation: continual learning, federated learning, neural architecture search, privacy and robustness, recommender systems, medicine, and fashion.

Continual Learning

Continual learning (CL) trains machine learning (ML) models incrementally using small batches from a data stream.
Unlike in traditional supervised learning, the models cannot access previous data while learning patterns from new data. This leads to catastrophic forgetting, where the model forgets previously learned knowledge.

Dataset distillation helps by synthesizing representative samples from previous data. These distilled samples act as a form of "memory" for the model and are often used in techniques like knowledge replay or pseudo-rehearsal, ensuring that past knowledge is retained while training on new information.

Federated Learning

Federated learning trains models on decentralized data sources, such as mobile devices. This preserves privacy, but frequently communicating model updates between devices and the central server incurs high bandwidth costs.

Dataset distillation offers a solution by generating a small synthetic dataset on each device that represents the essence of the local data. Transmitting these distilled datasets for central model aggregation reduces communication costs while maintaining performance.

Neural Architecture Search (NAS)

NAS is a method for finding the optimal network architecture from a large pool of candidates. This process is computationally expensive, especially with large datasets, because it involves training many candidate architectures.

Dataset distillation provides a faster solution. By training and evaluating models on distilled data, NAS can quickly identify promising architectures before a more comprehensive evaluation on the full dataset.

Privacy and Robustness

Training a network on distilled data can help prevent data privacy breaches and make the model robust to adversarial attacks.

Dong et al. (2022) show how dataset distillation relates to differential privacy and how synthetic data samples are irreversible, making it difficult for attackers to extract real information. Similarly, Chen et al. (2022) demonstrate that dataset distillation can help generate high-dimensional synthetic data that ensures differential privacy at low computational cost.
Recommender Systems

Recommender systems use massive datasets generated from user activity to offer personalized suggestions in domains such as retail, entertainment, and healthcare. However, as real datasets keep growing, these systems suffer from high latency and increased security risks. Dataset distillation offers a cost-effective alternative, since the system can train on a small synthetic dataset and still generate accurate recommendations. Distillation can also speed up fine-tuning of the large language models (LLMs) used in modern recommendation frameworks by using synthetic data samples instead of the entire dataset.

Medicine

Anonymization is a critical requirement when processing medical datasets. Dataset distillation offers a straightforward solution by letting experts work with synthetic medical images that retain the knowledge in the original dataset while preserving data privacy. Li et al. (2022) use performance and parameter matching to create synthetic datasets. They also apply label distillation, which replaces the one-hot label vector for each class with a soft label.

Fashion

Distilled image samples often contain unique, aesthetically pleasing patterns that designers can use on clothing items. Cazenavette et al. (2022) apply data distillation to an image dataset to generate synthetic samples with exotic textures for use in clothing designs.

Distilled image patterns

Similarly, Chen et al. (2022) use dataset distillation to build a fashion compatibility model that extracts embeddings from designer and user-generated clothing items with convolutional networks.

Fashion Compatibility Model

The model learns embeddings from clothing images and uses dataset distillation to obtain relevant features. It also employs an attention-based mechanism to measure how compatible designer items are with user-generated fashion trends.
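The label distillation mentioned in the medicine example can be illustrated with a small sketch. Instead of a one-hot vector, each distilled sample carries a soft label, a probability distribution over classes, and the model is trained with cross-entropy against that distribution. The helper names and numbers below are hypothetical, and this is not Li et al.'s implementation; in a real label-distillation pipeline the soft-label logits would themselves be optimized during distillation, a step this pure-Python sketch leaves out.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution (numerically stable)."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(label, num_classes):
    """Hard label: all probability mass on a single class."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def cross_entropy(target, probs):
    """Cross-entropy H(target, probs); works for both hard and soft targets."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)


if __name__ == "__main__":
    # Hypothetical model prediction for one distilled image over three classes.
    prediction = softmax([2.0, 1.0, 0.1])

    hard_label = one_hot(0, 3)
    # Hypothetical learned label logits: mostly class 0, partly similar to class 1.
    soft_label = softmax([2.0, 1.2, -1.0])

    print("hard-label loss:", cross_entropy(hard_label, prediction))
    print("soft-label loss:", cross_entropy(soft_label, prediction))
```

A soft label lets a single synthetic image express how much it resembles several classes at once, which is one commonly cited reason label distillation can pack more information into a small synthetic dataset.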
Dataset Distillation: Key Takeaways

Dataset distillation is an evolving research field with great promise for applying AI in industrial domains such as healthcare, retail, and entertainment. Below are a few key points to remember about dataset distillation.

Data vs. Knowledge Distillation: Dataset distillation maps the knowledge in a large dataset to a small synthetic dataset, while knowledge distillation trains a small student model using a larger teacher network.

Data Distillation Methods: The primary distillation methods are parameter matching, performance matching, distribution matching, and generative approaches.

Dataset Distillation Algorithms: Current algorithms include meta-learning, kernel ridge regression, gradient matching, matching training trajectories, single- and multi-layer distribution matching, and GAN inversion.

Dataset Distillation Use Cases: Dataset distillation can substantially improve continual and federated learning frameworks, neural architecture search, recommender systems, medical diagnosis, and fashion-related tasks.
