How to use SAM to Automate Data Labeling in Encord

As data ops, machine learning (ML), and annotation teams know, labeling training data from scratch is time-intensive and often requires expert labeling teams and review stages. Manual data labeling can quickly become expensive, especially for teams still developing best practices or annotating large amounts of data.

Speeding up the data labeling process without sacrificing quality can be a challenge; this is where automation comes in.

Incorporating automation into your workflow is one of the most effective ways to produce high-quality data fast.

Meta recently released the Segment Anything Model (SAM), an open-source Visual Foundation Model (VFM) with strong zero-shot segmentation abilities that can support auto-segmentation workflows.

First, here is a brief overview of how SAM works.

How does SAM work?

SAM’s architecture is based on three main components:

- An image encoder (a Vision Transformer pre-trained as a masked autoencoder)

- A prompt encoder (for sparse prompts such as points and boxes, and dense prompts such as masks)

- A lightweight mask decoder

The image encoder processes input images to extract features, while the prompt encoder encodes user-provided prompts. These prompts specify which objects to segment in the image. The mask decoder combines information from both encoders to generate pixel-level segmentation masks.
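The toy Python sketch below shows how the three components divide the work. It is not SAM. The "encoder" only rescales pixel intensities, and a simple region-growing rule stands in for the learned mask decoder. All names are hypothetical. What it does mirror is the data flow described above: the expensive image encoding runs once, and every new prompt reuses it.

```python
from typing import List, Tuple

Image = List[List[int]]            # grayscale intensities, 0-255
Embedding = List[List[float]]
Mask = List[List[int]]             # 1 = object, 0 = background
Point = Tuple[int, int]            # (row, col) of a click


def encode_image(image: Image) -> Embedding:
    """Toy stand-in for the heavy image encoder; run once per image.

    SAM uses a vision transformer here; this just rescales intensities to [0, 1].
    """
    return [[px / 255.0 for px in row] for row in image]


def encode_prompt(points: List[Point], height: int, width: int) -> List[Point]:
    """Toy stand-in for the prompt encoder: validates the clicked positions."""
    for r, c in points:
        if not (0 <= r < height and 0 <= c < width):
            raise ValueError(f"click {(r, c)} is outside the image")
    return list(points)


def decode_mask(embedding: Embedding, prompt: List[Point], tol: float = 0.2) -> Mask:
    """Toy stand-in for the mask decoder: grows a 4-connected region from each
    click over pixels whose value is within `tol` of the clicked pixel's value."""
    h, w = len(embedding), len(embedding[0])
    selected = set()
    for seed in prompt:
        ref = embedding[seed[0]][seed[1]]
        region, stack = {seed}, [seed]
        while stack:
            r, c = stack.pop()
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if (0 <= nr < h and 0 <= nc < w and (nr, nc) not in region
                        and abs(embedding[nr][nc] - ref) <= tol):
                    region.add((nr, nc))
                    stack.append((nr, nc))
        selected |= region
    return [[1 if (r, c) in selected else 0 for c in range(w)] for r in range(h)]


image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 10, 10],
]
embedding = encode_image(image)  # expensive step, done once per image
bright = decode_mask(embedding, encode_prompt([(0, 2)], 3, 4))
dark = decode_mask(embedding, encode_prompt([(2, 0)], 3, 4))  # reuses the embedding
```

Caching the image embedding is what keeps interactive prompting cheap. In the real model, only the small prompt encoder and mask decoder run again after each click.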

This approach enables SAM to efficiently segment objects in images based on user prompts, making it adaptable to a wide range of segmentation tasks in computer vision.

Given this new release, Encord is excited to introduce the first SAM-powered auto-segmentation tool to help teams generate high-quality segmentation masks in seconds.

MetaAI’s SAM x Encord Annotate

The integration of MetaAI's SAM with Encord Annotate provides users with a powerful tool for automating labeling tasks. By leveraging SAM's capabilities within the Encord platform, users can streamline the annotation process and achieve precise segmentations across different file formats. This integration enhances efficiency and accuracy in labeling workflows, empowering users to generate high-quality annotated datasets effortlessly.

Create quality masks with our SAM-powered auto-segmentation tool

Whether you're new to labeling data for your model or have several models in production, our new SAM-powered auto-segmentation tool can help you save time and streamline your labeling process. To maximize the benefits of this tool, follow these steps:

Set up Annotation Project

Set up your image annotation project by attaching your dataset and ontology.

Activate SAM

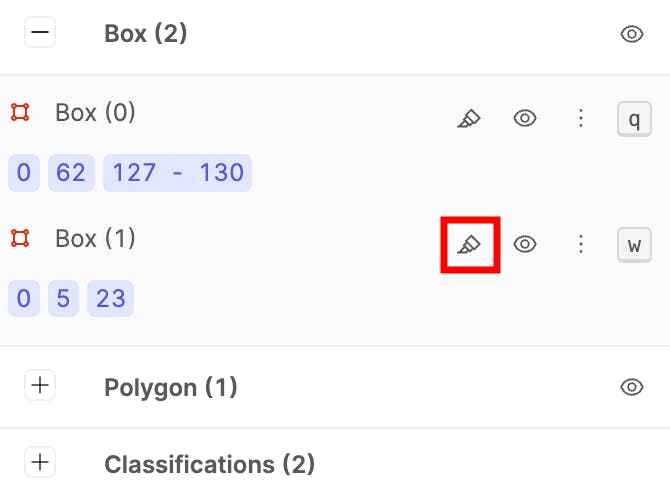

Click the icon within the Polygon or Bounding box class in the label editor to activate SAM. Alternatively, use the Shift + A keyboard shortcut to toggle SAM mode.

Create Labels for Existing Instances

Navigate to the desired frame. Click "Instantiate object" next to the instance, or press the instance's hotkey. Press Shift + A on your keyboard to enable SAM.

Segment the Image with SAM

Click the area of the image or frame you want to segment. A pop-up indicates that auto-segmentation is running. Alternatively, click and drag your cursor to select the part of the image you want to segment.

Include/Exclude Areas from Selection

After the prediction, the segmented part of the image or frame is highlighted in blue. Left-click to add areas to the selection or right-click to exclude them. To start over, click Reset in the SAM tool pop-up.
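Each interaction in the steps above corresponds to a SAM prompt. A left-click is a foreground point, a right-click is a background point, and a click-and-drag is a box. The sketch below is a hypothetical session object, not Encord's implementation. It collects those gestures into the `point_coords`, `point_labels`, and `box` arguments accepted by `SamPredictor.predict()` in Meta's `segment_anything` package. That package uses (x, y) pixel coordinates, label 1 for foreground and 0 for background, and boxes in (x_min, y_min, x_max, y_max) order.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class PromptState:
    """Hypothetical accumulator for the clicks made during one SAM session.

    Points are (x, y) pixel coordinates; the box is (x_min, y_min, x_max, y_max).
    """

    points: List[Tuple[float, float]] = field(default_factory=list)
    labels: List[int] = field(default_factory=list)
    box: Optional[Tuple[float, float, float, float]] = None

    def left_click(self, x: float, y: float) -> None:
        # Include this area: foreground points carry label 1.
        self.points.append((x, y))
        self.labels.append(1)

    def right_click(self, x: float, y: float) -> None:
        # Exclude this area: background points carry label 0.
        self.points.append((x, y))
        self.labels.append(0)

    def drag(self, x0: float, y0: float, x1: float, y1: float) -> None:
        # Normalize the drag into corner order, whichever way the cursor moved.
        self.box = (min(x0, x1), min(y0, y1), max(x0, x1), max(y0, y1))

    def reset(self) -> None:
        # Equivalent of the Reset button: discard every prompt.
        self.points.clear()
        self.labels.clear()
        self.box = None

    def to_prompt(self) -> Dict[str, Optional[list]]:
        # Prompt types that were not used are passed as None.
        return {
            "point_coords": [list(p) for p in self.points] or None,
            "point_labels": list(self.labels) or None,
            "box": list(self.box) if self.box is not None else None,
        }
```

The real predictor expects NumPy arrays, so each non-None list would need converting, for example with `numpy.array(...)`, before being passed to `predict()`. Re-running the prediction after every click is what makes the include/exclude loop feel interactive.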

Confirm Label

Once the correct section is highlighted, click "Apply Label" in the SAM pop-up or press Enter to confirm. The result is a label that outlines the selected area as a bounding box or polygon, depending on the class you chose.
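SAM itself returns a pixel mask. Turning that mask into a bounding-box label comes down to finding the extent of its foreground pixels. The following is a minimal sketch, not Encord's conversion code. It uses inclusive pixel indices, and `bbox_to_xywh` is a hypothetical helper for tools that expect a width and height. A polygon outline would instead need contour tracing, for example OpenCV's `findContours`, which is not shown here.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]


def mask_to_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Tightest (x_min, y_min, x_max, y_max) box around the mask's 1-pixels.

    Coordinates are inclusive pixel indices; returns None for an empty mask.
    """
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not ys:
        return None
    xs = [x for row in mask for x, value in enumerate(row) if value]
    return min(xs), min(ys), max(xs), max(ys)


def bbox_to_xywh(box: Tuple[int, int, int, int]) -> Tuple[int, int, int, int]:
    """Convert an inclusive corner box to (x, y, width, height)."""
    x0, y0, x1, y1 = box
    return x0, y0, x1 - x0 + 1, y1 - y0 + 1
```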

Integrate an AI-assisted Micro-model to make labeling even faster

While AI-assisted tools such as SAM-powered auto-segmentation can be great for teams just getting started with data labeling, teams who follow more advanced techniques like pre-labeling can take automation to the next level with micro-models.

Pre-labels generated by your own model or a Micro-model can significantly increase labeling efficiency. As the model improves with each iteration, labeling costs decrease, allowing teams to focus their manual labeling efforts on edge cases or areas where the model performs less well. The result is faster, less expensive labeling and better model performance.
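One common way to focus manual effort on edge cases is to route pre-labels by model confidence. The sketch below is an assumption about how such a split could work, not Encord's pre-labeling workflow. `PreLabel`, `triage`, and the 0.8 threshold are hypothetical. Confident predictions go to a quick review queue, and the rest go to annotators.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PreLabel:
    item_id: str       # hypothetical identifier of the image or frame
    confidence: float  # model score in [0, 1]


def triage(prelabels: List[PreLabel], threshold: float = 0.8) -> Tuple[List[str], List[str]]:
    """Split pre-labels into a quick-review queue and a manual-labeling queue.

    Confident predictions only need a reviewer to accept or correct them;
    low-confidence ones (often edge cases) go to annotators.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    review, manual = [], []
    for p in prelabels:
        (review if p.confidence >= threshold else manual).append(p.item_id)
    return review, manual
```

As the model improves between iterations, more items clear the threshold and land in the cheaper review queue.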

Check out our case study to learn more about our pre-labeling workflow, powered by AI-assisted labeling, and how one of our customers increased their labeling efficiency by 37x using AI-assisted Micro-models.

Try our auto-segmentation tool on an image labeling project or start using model-assisted labeling today.

If you want to fine-tune SAM for your computer vision project, start by carefully considering your task requirements and dataset characteristics. Fine-tuning lets you tailor SAM to your project's needs. It takes advantage of SAM's promptability and its ability to adapt to new image distributions, so that it segments your images accurately and efficiently.

Key Takeaways

Meta's Segment Anything Model (SAM) is a powerful open-source Visual Foundation Model (VFM) that can substantially speed up automated segmentation workflows in computer vision and ML projects.

AI-assisted labeling can reduce labeling costs and improve with each iteration. Our SAM-powered auto-segmentation tool and AI-assisted labeling workflow are available to all customers. We're excited for our users to see how automation can significantly reduce costs and increase labeling efficiency.

Ready to improve the performance and scale your data operations, labeling, and automated annotation?

AI-assisted labeling, model training & diagnostics, find & fix dataset errors and biases, all in one collaborative active learning platform, to get to production AI faster. Try Encord for Free Today.

Segment Anything Model (SAM) in Encord Frequently Asked Questions (FAQs)

What is a Segmentation Mask?

A segmentation mask assigns a label to each pixel in an image, identifying objects or regions of interest. For a single object, this is typically a binary image in which the object's pixels are marked with 1 and all other pixels with 0. Masks are used in computer vision for object detection and image segmentation, and for training machine learning models for image recognition.
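Polygon labels, such as those produced by the SAM tool's polygon output, can be converted back into the binary masks described above. The following is a minimal sketch under stated assumptions. Vertices are (x, y) pixel coordinates, and a pixel counts as inside when its center passes the even-odd (ray-casting) test. Other tools may use different rasterization rules, so pixels along the edges can differ.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixel units


def polygon_to_mask(polygon: List[Point], height: int, width: int) -> List[List[int]]:
    """Rasterize a polygon into a binary mask (1 = inside, 0 = outside).

    A pixel is inside if its center (x + 0.5, y + 0.5) passes the even-odd
    test: a ray cast to the right crosses the polygon's edges an odd number of times.
    """
    mask = [[0] * width for _ in range(height)]
    n = len(polygon)
    for y in range(height):
        cy = y + 0.5
        for x in range(width):
            cx = x + 0.5
            inside = False
            for i in range(n):
                x1, y1 = polygon[i]
                x2, y2 = polygon[(i + 1) % n]
                # Only edges that straddle the ray's height can cross it;
                # this also skips horizontal edges, avoiding division by zero.
                if (y1 > cy) != (y2 > cy):
                    x_cross = x1 + (cy - y1) * (x2 - x1) / (y2 - y1)
                    if cx < x_cross:
                        inside = not inside
            mask[y][x] = 1 if inside else 0
    return mask
```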

How Does the SAM-powered Auto-segmentation Work?

Combining SAM with Encord Annotate gives users an auto-segmentation tool backed by powerful ontologies, an interactive editor, and broad media support. SAM can segment objects it has never seen before (zero-shot) from simple prompts such as clicked points or a bounding box. Encord Annotate uses SAM to annotate many media types, including images, videos, satellite data, and DICOM data.

If you want to better understand SAM’s inner workings and importance, please refer to the SAM explainer.

Can I use SAM in Encord for Bounding Boxes?

Yes. Encord’s auto-segmentation feature supports several annotation types, including bounding boxes, polylines, and keypoints. Encord also uses SAM to annotate images, videos, and specialized data types such as satellite (SAR), DICOM, NIfTI, CT, X-ray, PET, ultrasound, and MRI files.

For more information on auto-segmentation for medical imaging computer vision models, please refer to the documentation.

Can I Fine-tune SAM?

SAM's image encoder is a large vision transformer with a very high parameter count. When fine-tuning, it is usually more practical to focus on the mask decoder, which is lightweight and therefore simpler, faster, and more memory-efficient to train. Please read Encord’s tutorial on how to fine-tune Segment Anything.

You can find the Colab Notebook with all the code you need to fine-tune SAM here. Keep reading if you want a fully working solution out of the box!
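Fine-tuning the mask decoder needs a loss that compares predicted masks with ground-truth masks. Dice loss is a common choice for this. The original SAM training combined dice loss with focal loss. The sketch below is a plain-Python illustration of dice loss on nested lists. It is not the code from Encord's tutorial. The `eps` smoothing term is an assumption that keeps the loss defined when both masks are empty.

```python
from typing import List

Grid = List[List[float]]


def dice_loss(pred: Grid, target: Grid, eps: float = 1e-6) -> float:
    """1 - Dice coefficient between predicted mask probabilities and a binary ground truth.

    `eps` keeps the ratio defined when both masks are empty.
    """
    p = [v for row in pred for v in row]
    t = [v for row in target for v in row]
    if len(p) != len(t):
        raise ValueError("pred and target must have the same number of pixels")
    intersection = sum(a * b for a, b in zip(p, t))
    return 1.0 - (2.0 * intersection + eps) / (sum(p) + sum(t) + eps)
```

In the `segment_anything` package, the `Sam` model exposes `image_encoder`, `prompt_encoder`, and `mask_decoder` submodules. Decoder-only fine-tuning in PyTorch typically means setting `requires_grad = False` on the encoders' parameters and passing only `sam.mask_decoder.parameters()` to the optimizer. The same loss would then be computed on tensors rather than lists.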

Can I try SAM in Encord for free?

Yes. Encord has integrated its ontologies, interactive editor, and versatile data type support with SAM, so you can use SAM's auto-annotation capability to segment many types of data. To access the free trial, log in to our platform, or contact our team to get started 😀

- Segmentation in data labeling involves partitioning an image into distinct regions corresponding to objects or features of interest, typically achieved by outlining object boundaries with pixel-level accuracy.

- The Segment Anything Model is open source under the Apache 2.0 license.

- SAM outputs segmentation masks for the regions indicated by a prompt. For an ambiguous prompt, such as a single click, it can return several candidate masks, each with a predicted quality score.

- SAM has several limitations. It can miss fine structures, occasionally generates small disconnected components, and produces less crisp boundaries than more specialized methods. Although SAM processes each prompt in real time, its end-to-end speed is not real-time when a heavy image encoder is used. It is also not yet clear how to design simple prompts for tasks such as semantic and panoptic segmentation.

- The best image classification model depends on factors like task requirements, dataset characteristics, and performance metrics. Commonly used models include ResNet, VGG, Inception, and EfficientNet, each with its advantages and suitability for specific tasks.

- Segmentation can be more challenging than object detection as it requires pixel-level accuracy in delineating object boundaries, whereas object detection focuses on identifying objects without necessarily providing precise boundary information.

- The main advantage of segmentation is its ability to provide detailed object boundaries within an image, facilitating precise object localization and enabling more accurate analysis and understanding of image content.

- Anomaly detection identifies deviations or outliers from expected patterns in data, while segmentation partitions an image into distinct regions corresponding to objects or features. Anomaly detection focuses on identifying irregularities, while segmentation focuses on delineating objects.

- SAM offers efficient segmentation that adapts to a variety of tasks and image distributions. Its key attributes include support for diverse annotation types in Encord (e.g., Polygon, Bounding box), promptability that enables zero-shot transfer, and training on SA-1B, a dataset of over 1 billion masks on 11 million images.
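One limitation listed above is the occasional disconnected component, and it is often handled with a simple post-processing step. The sketch below keeps only the largest 4-connected region of a binary mask. This is a generic cleanup technique, not something SAM or Encord is described here as doing. Whether discarding the smaller pieces is appropriate depends on whether your objects can legitimately appear split, for example by occlusion.

```python
from collections import deque
from typing import List, Tuple

Mask = List[List[int]]


def keep_largest_component(mask: Mask) -> Mask:
    """Return a copy of `mask` containing only its largest 4-connected region of 1s.

    If two regions tie for largest, the one found first (row-major scan) is kept.
    """
    h = len(mask)
    w = len(mask[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    largest: List[Tuple[int, int]] = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search collects one connected component.
            component: List[Tuple[int, int]] = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) > len(largest):
                largest = component
    cleaned = [[0] * w for _ in range(h)]
    for y, x in largest:
        cleaned[y][x] = 1
    return cleaned
```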