
Contents

Understanding Text Annotation

Types of Text Annotation

Text Classification

Part-of-Speech (POS) Tagging

Coreference Resolution

Dependency Parsing

Semantic Role Labeling (SRL)

Temporal annotation

Intent annotation

The Role of a Text Annotator

Advanced Text Annotation Techniques

Practical Applications of Text Annotation

Enhancing Text Data Quality with Encord

Key Takeaways

Encord Blog

How to Enhance Text AI Quality with Advanced Text Annotation Techniques


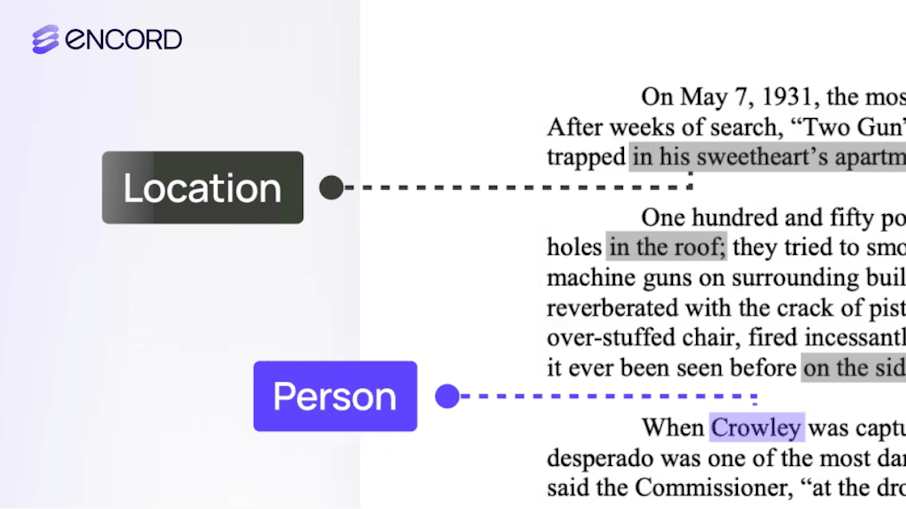

Understanding Text Annotation

Text annotation, in Artificial Intelligence (particularly in Natural Language Processing), is the process of labeling text data so that machine learning models can learn from it. It involves identifying and labeling specific components or features in text, such as entities, sentiments, or relationships, to train AI models effectively. This process converts raw, unstructured text into a structured, machine-readable format.

Text Annotation (Source)

Types of Text Annotation

The types of text annotation vary depending on the specific NLP task. Each type focuses on a particular aspect of text in order to structure data for AI models. The main types are described below.

Named Entity Recognition (NER)

In Named Entity Recognition (NER), entities in a text are identified and classified into predefined categories such as people, organizations, locations, and dates. NER is used to extract key information from text, for example the names of people, places, or companies that a user mentions.

Example:

In the following text:

The annotations are:

"Hawaii" → LOCATION

"1961" → DATE

Sentiment Annotation

In sentiment annotation, text is labeled with emotions or opinions such as positive, negative, or neutral. It may also include fine-grained sentiments like happiness, anger, or frustration. Sentiment annotation supports applications such as analyzing customer feedback or product reviews and monitoring brand reputation on social media.

Example:

For the following text:

The sentiment annotation is as follows:

Text Classification

In text classification, predefined categories or labels are assigned to entire text documents or segments. Text classification is used in applications like spam detection in emails or categorizing news articles by topic (e.g., politics, sports, entertainment).

Example:

For the following text:

The text classification annotation is as follows:

Part-of-Speech (POS) Tagging

In Part-of-Speech tagging, each word in a sentence is annotated with its grammatical role, such as noun, verb, adjective, or adverb. POS tagging is used, for example, in building grammar correction tools.

Example:

For the following text:

The part-of-speech tags are as follows:

"dog" → NN (Noun, singular or mass)

"barked" → VBD (Verb Past Tense)

"loudly" → RB (Adverb)

Coreference Resolution

In coreference resolution, pronouns or phrases are identified and linked to the entities they refer to within a text. Coreference resolution helps conversational AI systems maintain context in dialogue, and it improves summarization by linking all references to the same entity.

Example:

For the following text:

The annotation would be as follows:

Here, "Sarah" and "She" are labeled as follows:

"She" → Anaphor

Dependency Parsing

In dependency parsing, the grammatical structure of a sentence is analyzed to establish relationships between "head" words and their dependents. The result is a dependency tree in which nodes represent words and directed edges denote dependencies, showing how the words connect to convey meaning. Dependency parsing is used in language translation systems and text-to-speech applications, among others.

Example:

For the following text:

The dependency relationships would be as follows:

Nominal Subject (nsubj): "boy" is the subject performing the action of "eats."

Determiner (det): "The" specifies "boy."

Direct Object (dobj): "apple" is the object receiving the action of "eats."

Determiner (det): "an" specifies "apple."
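The relations above can be sketched as a small data structure. This is a minimal illustration, not the output of any particular parser: the sentence "The boy eats an apple" is inferred from the listed relations, and the `Dependency` type and helper functions are hypothetical names. Words are used as node identifiers only because each word appears once here; real toolkits typically use token indices.

```python
from typing import NamedTuple


class Dependency(NamedTuple):
    relation: str   # dependency label, e.g. "nsubj"
    head: str       # the governing word
    dependent: str  # the word that depends on the head


# Assumed sentence, inferred from the relations listed above.
sentence = "The boy eats an apple"
dependencies = [
    Dependency("nsubj", "eats", "boy"),
    Dependency("det", "boy", "The"),
    Dependency("dobj", "eats", "apple"),
    Dependency("det", "apple", "an"),
]


def find_root(deps: list[Dependency]) -> str:
    """Return the only word that acts as a head but is never a dependent."""
    roots = {d.head for d in deps} - {d.dependent for d in deps}
    if len(roots) != 1:
        raise ValueError(f"expected exactly one root, found {sorted(roots)}")
    return roots.pop()


def children(deps: list[Dependency], head: str) -> list[str]:
    """List the direct dependents of a head word, in annotation order."""
    return [d.dependent for d in deps if d.head == head]


root = find_root(dependencies)
print(root, children(dependencies, root))
```

Walking from the root through `children` recovers the tree: the verb governs its subject and object, and each noun governs its determiner.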

Semantic Role Labeling (SRL)

Semantic Role Labeling (SRL) is a process in Natural Language Processing (NLP) that involves identifying the predicate-argument structures in a sentence to determine "who did what to whom," "when," "where," and "how." By assigning labels to words or phrases, SRL captures the underlying semantic relationships, providing a deeper understanding of the sentence's meaning.

Example:

In the sentence

SRL identifies the following components:

Agent (Who): "Mary" (the seller)

Theme (What): "the book" (the item being sold)

Recipient (Whom): "John" (the buyer)

This analysis clarifies that Mary is the one performing the action of selling, the book is the object being sold, and John is the recipient of the book. By assigning these semantic roles, SRL helps in understanding the relationships between entities in a sentence, which is essential for various natural language processing applications.
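One way to store such an annotation is as a predicate frame with named roles. The sketch below is illustrative only: the `Frame` type, the predicate value "sold", and the question-word mapping are assumptions made for this example, not a standard SRL schema.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    predicate: str         # the verb that anchors the frame
    roles: dict[str, str]  # role name -> text span filling that role


# Roles taken from the example above; the predicate "sold" is assumed.
frame = Frame(
    predicate="sold",
    roles={"Agent": "Mary", "Theme": "the book", "Recipient": "John"},
)

# Hypothetical mapping from question words to role names.
QUESTION_TO_ROLE = {"who": "Agent", "what": "Theme", "whom": "Recipient"}


def answer(frame: Frame, question_word: str) -> str:
    """Look up the span that answers a who/what/whom question."""
    role = QUESTION_TO_ROLE[question_word.lower()]
    return frame.roles[role]


for word in ("Who", "What", "Whom"):
    print(word, "->", answer(frame, word))
```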

Temporal annotation

In temporal annotation, temporal expressions (such as dates, times, durations, and frequencies) in text are identified. This process enables machines to understand and process time-related information, which is crucial for applications like event sequencing, timeline generation, and temporal reasoning.

Key components of temporal annotation:

- Temporal Expression Recognition: Identifying phrases that denote time, such as "yesterday," "June 5, 2023," or "two weeks ago."

- Normalization: Converting these expressions into a standard, machine-readable format, often aligning them with a specific calendar date or time.

- Temporal Relation Identification: Determining the relationships between events and temporal expressions to understand the sequence and timing of events.

Example:

Consider the sentence:

The temporal annotation would be:

| Step | Result |
| --- | --- |
| Temporal expressions identified | "March 15, 2023"; "two weeks later" |
| Normalization | "March 15, 2023" → 2023-03-15; "two weeks later" → 2023-03-29 |
| Temporal relations | The event "conference" is linked to 2023-03-15; the event "next meeting" is linked to 2023-03-29. |
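The normalization step in this example can be reproduced with Python's standard `datetime` module. This is a minimal sketch: the function names are hypothetical, the relative expression is handled only for the "N weeks later" case, and `%B` month parsing assumes an English locale.

```python
from datetime import date, datetime, timedelta


def normalize_absolute(expression: str) -> date:
    """Parse an expression such as 'March 15, 2023' into a calendar date."""
    return datetime.strptime(expression, "%B %d, %Y").date()


def normalize_weeks_later(anchor: date, weeks: int) -> date:
    """Resolve 'N weeks later' relative to an already normalized anchor date."""
    return anchor + timedelta(weeks=weeks)


conference = normalize_absolute("March 15, 2023")
next_meeting = normalize_weeks_later(conference, 2)

print(conference.isoformat())    # 2023-03-15
print(next_meeting.isoformat())  # 2023-03-29
```

Relative expressions can only be normalized once their anchor is known, which is why the relation between "two weeks later" and the conference date has to be annotated as well.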

Several standards have been developed to guide temporal annotation:

- TimeML: A specification language designed to annotate events, temporal expressions, and their relationships in text.

- ISO-TimeML: An international standard based on TimeML, providing guidelines for consistent temporal annotation.

Intent annotation

In Intent annotation, also known as intent classification, the underlying purpose or goal behind a text is identified. This technique enables machines to understand what action a user intends to perform. This is essential for applications like chatbots, virtual assistants, and customer service automation.

Example:

Consider the user input:

The identified intent is:

In this example, the system recognizes that the user's intent is to book a flight which allows the system to proceed with actions related to flight reservations.

The Role of a Text Annotator

A text annotator plays an important role in the development, refinement, and maintenance of NLP systems and other text-based machine learning models.

The core responsibility of a text annotator is to enrich raw textual data with structured labels, tags, or metadata that make it usable by machine learning models. Because these models rely heavily on examples to learn patterns (such as language structure, sentiment, entities, or intent), they must be given consistent, high-quality annotations. The annotator's job is to ensure that training sets are accurate, consistent, and reflective of the complexities of human language.

Key responsibilities include:

- Data Labeling: Assigning precise labels to text elements, including identifying named entities (e.g., names of people, organizations, locations) and categorizing documents into specific topics.

- Content Classification: Organizing documents or text snippets into relevant categories to facilitate structured data analysis.

- Quality Assurance: Reviewing and validating annotations to ensure consistency and accuracy across datasets.
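Consistency between annotators can be quantified as part of quality assurance. The article does not prescribe a metric; the sketch below uses Cohen's kappa, a common agreement measure for two annotators, as one possible choice. The function name and sample labels are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Fraction of items on which the two annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random with their own label rates.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

A value of 1 means perfect agreement and 0 means agreement no better than chance, so a low score is a signal to revisit the annotation guidelines.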

Advanced Text Annotation Techniques

Modern generative AI models and associated tools have greatly expanded and streamlined text annotation. They can accelerate the annotation process and reduce the manual effort it requires. Some advanced text annotation techniques are described below.

Zero-Shot and Few-Shot Annotation with Large Language Models

Zero-shot and few-shot learning enable text annotators to generate annotations without requiring thousands of manually labeled examples. Annotators provide natural language instructions, examples, or prompts to an LLM, which classifies text or tags entities based on its pre-training and the guidance given in the prompt.

For example, in zero-shot annotation, a text annotator describes the annotation task and categories to the LLM (e.g., “Label each sentence as ‘Positive,’ ‘Negative,’ or ‘Neutral’”). The LLM then annotates text based on its internal understanding.

Similarly, in few-shot annotation, the text annotator provides a few examples of annotated data (e.g., 3-5 sentences with their corresponding labels), and the LLM uses these examples to infer the labeling scheme. It then applies this understanding to new, unseen text.
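A few-shot prompt of this kind can be assembled with plain string handling. The sketch below only builds the prompt text; it does not call any model, and the function name, the "Text:/Label:" layout, and the sample sentences are assumptions chosen for illustration.

```python
def build_few_shot_prompt(
    instruction: str,
    examples: list[tuple[str, str]],
    new_text: str,
) -> str:
    """Combine an instruction, labeled examples, and one unlabeled text."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The final entry is left unlabeled so the model completes it.
    lines.append(f"Text: {new_text}")
    lines.append("Label:")
    return "\n".join(lines)


# Hypothetical labeled examples supplied by the annotator.
examples = [
    ("The delivery arrived a day early.", "Positive"),
    ("The screen cracked within a week.", "Negative"),
    ("The package contains two cables.", "Neutral"),
]

prompt = build_few_shot_prompt(
    "Classify each sentence as Positive, Negative, or Neutral.",
    examples,
    "Customer support never replied to my email.",
)
print(prompt)
```

Passing an empty `examples` list yields a zero-shot prompt with the same layout.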

Prompt Engineering for Structured Annotation

LLMs respond to natural language instructions. Prompt engineering involves carefully designing the text prompt given to these models to improve the quality, consistency, and relevance of the generated annotations. An instruction template provides the model with a systematic set of instructions describing the annotation schema. For example: “You are an expert text annotator. Classify the following text into one of these categories: {Category A}, {Category B}, {Category C}. If unsure, say {Uncertain}.”
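The instruction template above can be filled programmatically, and the model's reply can be checked against the allowed categories. This is a sketch under stated assumptions: the template wording follows the example in the text, while `render_prompt`, `parse_label`, and the fallback behavior are hypothetical, and no model is actually called.

```python
TEMPLATE = (
    "You are an expert text annotator. Classify the following text into one of "
    "these categories: {categories}. If unsure, say {fallback}.\n\nText: {text}"
)


def render_prompt(text: str, categories: list[str], fallback: str = "Uncertain") -> str:
    """Fill the instruction template with the schema and the text to annotate."""
    return TEMPLATE.format(categories=", ".join(categories), fallback=fallback, text=text)


def parse_label(raw_response: str, categories: list[str], fallback: str = "Uncertain") -> str:
    """Map a model reply onto an allowed label; anything unexpected becomes the fallback."""
    cleaned = raw_response.strip().strip(".\"'").casefold()
    for label in [*categories, fallback]:
        if cleaned == label.casefold():
            return label
    return fallback


categories = ["Politics", "Sports", "Entertainment"]
print(render_prompt("The home team won in extra time.", categories))
print(parse_label(" sports. ", categories))                  # Sports
print(parse_label("It might be about money.", categories))  # Uncertain
```

Validating replies this way keeps free-form model output from leaking into the dataset as an unintended label.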

Using Generative AI to Assist with Complex Annotation Tasks

Some annotation tasks (like relation extraction, event detection, or sentiment analysis with complex nuances) can be challenging. Generative AI can break these tasks into simpler steps, provide explanations, and highlight text segments that justify certain labels. For instance, annotators can instruct an LLM to first identify entities (e.g., people, places, organizations) and then determine the relationships between them. The LLM can also summarize longer text before annotation, so that the annotator can focus on the relevant sections, which speeds up human-in-the-loop processes.

Integration with Annotation Platforms

Modern annotation platforms and MLOps tools are integrating generative AI features to assist annotators. For example, they allow an LLM to produce initial annotations, which annotators then refine. Over time, these corrections feed into active learning loops that improve model performance.

For example, the active learning and model-assisted workflows in Encord can be adapted for text annotation. When an LLM provides draft annotations, human annotators can quickly correct its mistakes, and those corrections help the model learn and improve. Other tools, such as Label Studio or Prodigy, can bring LLM outputs directly into the annotation interface, making the model’s suggestions easy to accept, modify, or reject.
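The accept, modify, or reject loop can be sketched independently of any platform. The code below is a generic illustration and does not use the Encord, Label Studio, or Prodigy APIs; the `Item` type, the function names, and the sample drafts (standing in for model output) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    text: str
    draft_label: str                   # suggested by a model (hard-coded here)
    final_label: Optional[str] = None  # set once a human has reviewed the item


def review(item: Item, human_label: Optional[str] = None) -> Item:
    """Accept the draft when the reviewer gives no label, otherwise override it."""
    item.final_label = human_label if human_label is not None else item.draft_label
    return item


def corrections(items: list[Item]) -> list[tuple[str, str]]:
    """Collect reviewed items where the human disagreed with the draft."""
    return [
        (item.text, item.final_label)
        for item in items
        if item.final_label is not None and item.final_label != item.draft_label
    ]


items = [
    Item("Great battery life.", draft_label="Positive"),
    Item("Arrived broken, very disappointed.", draft_label="Neutral"),
]
review(items[0])                          # reviewer accepts the draft
review(items[1], human_label="Negative")  # reviewer overrides the draft

print(corrections(items))
```

The corrected pairs are the examples most worth feeding back into training, since they mark exactly where the model's drafts were wrong.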

Practical Applications of Text Annotation

Text annotation can be used in various domains. Following are some examples of text annotation to enhance applications, improve data understanding, and provide better end-user experiences.

Healthcare

The healthcare industry generates vast amounts of text data every day consisting of patient records, physician notes, pathology reports, clinical trial documentation, insurance claims, and medical literature. However, these documents are often unstructured, making it difficult to use them for analytics, research, or clinical decision support. Text annotation makes this unstructured data more accessible and useful. Following are some examples:

- In Electronic Health Record (EHR) analysis, medical entities such as symptoms, diagnoses, medications, dosages, and treatment plans in a patient’s EHR are identified and annotated. Once annotated, these datasets enable algorithms to automatically extract critical patient information.

- A model might highlight that a patient with diabetes (diagnosis) is taking metformin (medication) and currently experiences fatigue (symptom). This helps physicians quickly review patient histories, ensure treatment adherence, and detect patterns that may influence treatment decisions.

E-Commerce

E-commerce platforms handle large amounts of customer data such as product descriptions, user-generated reviews, Q&A sections, support tickets, chat logs, and social media mentions. Text annotation helps structure this data, enabling advanced search, personalized recommendations, better inventory management, and improved customer service.

For example, in product categorization and tagging, product titles and descriptions are annotated with attributes such as category, brand, material, style, or size. Annotated product information allows recommendation systems to group similar items and suggest complementary products. For instance, if a product is tagged as “women’s sports shoes,” the recommendation engine can show running socks or athletic apparel. This improves product discovery, making it easier for customers to find what they’re looking for, which in turn can increase sales and customer satisfaction.

Sentiment Analysis

Sentiment analysis focuses on determining the emotional tone of text. Online reviews, social media posts, comments, and feedback forms contain valuable insights into customer feelings, brand perception, and emerging trends. Annotating this text with sentiment labels (positive, negative, neutral) enables models to gauge public opinion at scale.

For example, in brand reputation management, user tweets, blog comments, and forum posts are annotated as positive, negative, or neutral toward the brand or a product line. By analyzing aggregated sentiment over time, companies can detect negative spikes that indicate PR issues or product defects. They can then take rapid corrective measures, such as addressing a manufacturing flaw or releasing a statement. This helps maintain a positive brand image, guides marketing strategies, and improves customer trust.
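Aggregating annotated sentiment over time can be as simple as computing the share of negative items per day. In this minimal sketch the records, the date strings, and the 0.5 threshold are illustrative assumptions rather than recommended values.

```python
from collections import defaultdict


def negative_share_by_day(records: list[tuple[str, str]]) -> dict[str, float]:
    """Compute the fraction of items labeled 'negative' for each day."""
    totals: dict[str, int] = defaultdict(int)
    negatives: dict[str, int] = defaultdict(int)
    for day, label in records:
        totals[day] += 1
        if label == "negative":
            negatives[day] += 1
    return {day: negatives[day] / totals[day] for day in sorted(totals)}


def flag_spikes(shares: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Return the days whose negative share exceeds the threshold."""
    return [day for day, share in shares.items() if share > threshold]


# Hypothetical annotated posts as (ISO date, sentiment label) pairs.
records = [
    ("2024-01-01", "positive"),
    ("2024-01-01", "negative"),
    ("2024-01-01", "positive"),
    ("2024-01-02", "negative"),
    ("2024-01-02", "negative"),
    ("2024-01-02", "neutral"),
]

shares = negative_share_by_day(records)
print(flag_spikes(shares))  # ['2024-01-02']
```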

Enhancing Text Data Quality with Encord

Encord offers a comprehensive document annotation tool designed to streamline text annotation for training LLMs. Key features include:

Text Classification

This feature allows users to assign predefined categories to entire documents or specific text segments, ensuring that data is systematically organized for analysis.

Text Classification (Source)

Named Entity Recognition (NER)

This feature of Encord enables the identification and labeling of entities such as names, organizations, dates, and locations within the text, facilitating structured data extraction.

Named Entity Recognition Annotation (Source)

Sentiment Analysis

This feature assesses and annotates the sentiment expressed in text passages, helping models understand the emotional context.

Sentiment Analysis Annotation (Source)

Question Answering

This feature helps annotate text to train models capable of responding accurately to queries based on the provided information.

QA Annotation (Source)

Translation

Under this feature, a free-text field enables labeling and translation of text. It supports multilingual data processing.

Text Translation (Source)

To accelerate the annotation process, Encord integrates state-of-the-art models such as GPT-4o and Gemini Pro 1.5 into data workflows. This integration allows for auto-labeling or pre-classification of text content, reducing manual effort and enhancing efficiency.

Encord's platform also enables the centralization, exploration, and organization of large document datasets. Users can upload extensive collections of documents, apply granular filtering by metadata and data attributes, and perform embeddings-based and natural language searches to curate data effectively.

By providing these robust annotation capabilities, Encord assists teams in creating high-quality datasets, thereby boosting model performance for NLP and LLM applications.

Key Takeaways

This article highlights the essential insights from text annotation techniques and their significance in natural language processing (NLP) applications:

- The quality of annotated data directly impacts the effectiveness of machine learning models.

- High-quality text annotation ensures models learn accurate patterns and relationships, improving overall performance.

- Establishing precise rules and frameworks for annotation ensures consistency across annotators.

- Annotation tools like Labelbox, Prodigy, or Encord streamline the annotation workflow.

- Generative AI models streamline advanced text annotation with zero-shot learning, prompt engineering, and platform integration, reducing manual effort and enhancing efficiency.

- Encord improves text annotation by integrating model-assisted workflows, enabling efficient annotation with active learning, collaboration tools, and scalable AI-powered automation.


Frequently asked questions

Text annotation is the process of labeling or tagging text data to make it understandable for machine learning models. It involves identifying components like entities, sentiments, and grammatical roles to transform unstructured text into structured data. This is crucial for training AI systems to accurately interpret and process human language.

A text annotator labels text data with metadata, such as categories, entities, or sentiments, to train AI models. Their role involves ensuring annotations are accurate, consistent, and reflective of language complexities. They also perform quality assurance and refine datasets for better machine learning outcomes.

The performance of AI models depends heavily on the quality of annotated data. Accurate and consistent annotations help models learn correct patterns, improving their ability to generalize and perform well on unseen data.

Encord supports a comprehensive quality assurance process within its platform, allowing users to review and adjust annotations easily. This includes features like timestamped transcriptions and the ability to modify labels directly as you play through the audio, ensuring accuracy and reliability in the annotation workflow.

Encord enhances the quality and speed of annotations by providing a unified platform that streamlines the entire annotation process. This means that annotators can work more efficiently, and the integration with existing data lakes allows for faster feedback and improvements to models based on high-quality labeled data.

Encord offers a robust annotation pipeline that enhances the quality of sports content annotations. By leveraging both manual tagging and AI-powered tagging, Encord ensures that the annotations are accurate and enriched with the necessary sports expertise, which is critical for creating personalized sports content.

Encord streamlines the quality assurance process by integrating annotation workflows directly within the platform, reducing the need for cumbersome back-and-forth between spreadsheets and data. This helps ensure that the quality of the annotations positively impacts the performance of machine learning models.

Yes, Encord includes mechanisms for quality control within the annotation workflow. This consists of audit logs that track performance metrics, including task completion times and accuracy, as well as consensus stages for subjective ratings, ensuring that data quality is maintained throughout the process.

Quality annotation is crucial in AI projects as it directly impacts model performance. Encord ensures high-quality annotations through a combination of robust review processes and tools that facilitate accurate labeling, allowing users to maintain control over the annotation quality.

Encord helps clients build better AI models faster by focusing on data curation, annotation, and model evaluation. By identifying edge cases where models may not perform well, Encord assists in finding and annotating critical moments in data to enhance overall model performance.

Encord supports auto labeling by leveraging advanced machine learning models that can generate high-quality annotations. With human quality control, users can ensure the accuracy of these annotations while minimizing manual labor, making the process efficient and cost-effective.

Encord leverages AI integration to enhance labeling efficiency by automating repetitive tasks, reducing errors, and speeding up the overall annotation process. This allows teams to focus on higher-level decision-making and improves the quality of their datasets.

Encord includes a quality assurance and quality control (QA/QC) process that integrates directly into the annotation workflow, helping to ensure high-quality outputs without the need for elaborate external mechanisms.