Contents

Why TTI-Eval?

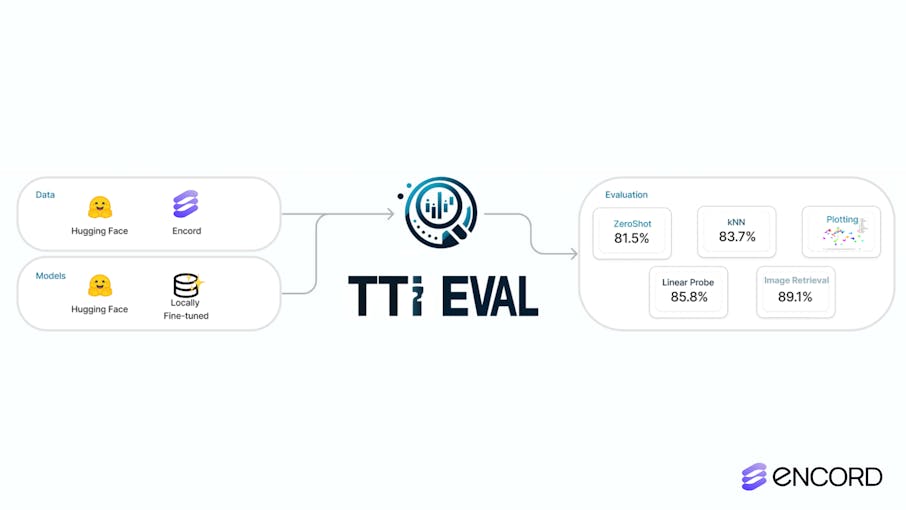

What is TTI-Eval?

How TTI-Eval Works

Key Features of TTI-Eval

Benefits of TTI-Eval in Data Curation

Example Results and Custom Models

Getting Started with TTI-Eval

Conclusion

Encord Blog

Introducing TTI-Eval: An Open-Source Library for Evaluating Text-to-Image Embedding Models

5 min read

In the past few years, computer vision and multimodal AI have come a long way, especially when it comes to text-to-image embedding models. Models such as CLIP from OpenAI can jointly embed images and text for powerful applications like natural language and image similarity search.

However, evaluating the performance of these models (and even custom embedding models) on custom datasets can be challenging. That's where TTI-Eval comes in.

We open-sourced TTI-Eval to help researchers and developers test their text-to-image embedding models on Hugging Face datasets or their own. With a straightforward and interactive evaluation process, TTI-Eval helps estimate how well different embedding models capture the semantic information within the dataset.

This article will help you understand TTI-Eval and get started evaluating your text-to-image (TTI) embedding models against custom datasets.

Why TTI-Eval?

Imagine you have a data lake full of company data and need to do sampling to get relevant data for a given task. One common sampling approach is to use image similarity search and natural language search to identify the data from the data lake.

You are likely looking for data with samples that look similar to the data you have in the production environment and those that hold the relevant semantic content.

To do this type of sampling, you would typically embed all the data within the datalake with, e.g., CLIP and perform image similarity and natural language searches on such embeddings.

A common question before investing all the required computing to embed all the data is, “Which model should I use?” It could be CLIP, an in-house vision model, or a domain-specific model like BioMedCLIP. TTI-Eval helps answer that question, particularly for the data you are dealing with.

What is TTI-Eval?

TTI-Eval's primary goal is to help you evaluate text-to-image embedding models (e.g., CLIP) against your datasets or those available on Hugging Face. By doing so, you can estimate how well a model will perform on a specific classification dataset.

One of our key motivations behind TTI-Eval is to improve the accuracy of natural language and image similarity search features, which are critical for Encord Index customers and users.

We used TTI-Eval internally at Encord to select the most suitable model for their similarity search feature. Since we have seen it work well, we decided to open-source it.

Instead of relying on off-the-shelf embedding models that may not be optimized for your specific use case, you can use TTI-Eval to evaluate the embeddings and determine their effectiveness for similarity searches.

How TTI-Eval Works

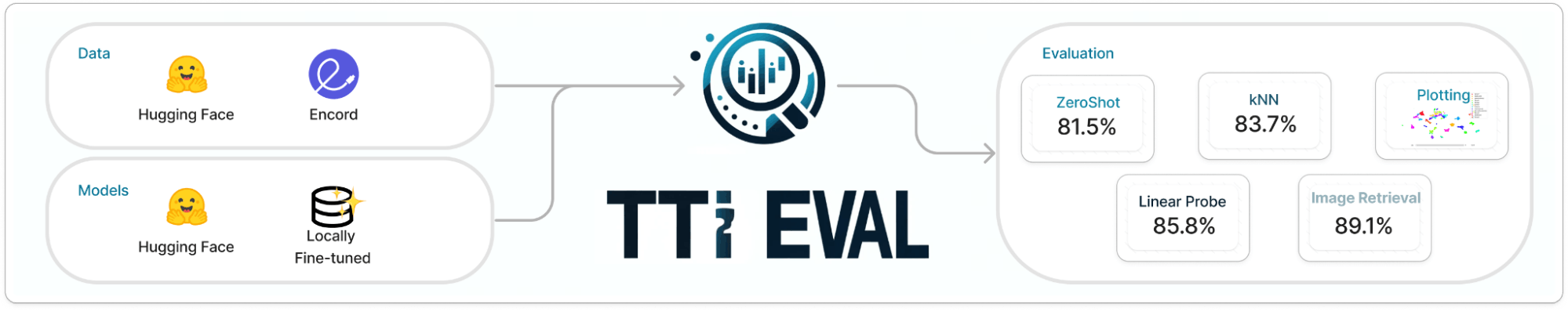

TTI-Eval follows a straightforward evaluation workflow:

- Link data from Hugging Face text-to-image datasets or Encord's classification ontologies to TTI-Eval.

- Connect your CLIP-style models from Hugging Face or custom fine-tuned models to TTI-Eval.

- TTI-Eval computes embeddings for each image in the provided dataset using the specified model.

- It calculates the benchmark based on the model’s classifications to assess the similarity among image embeddings and the text descriptions of each class.

- It also generates the accuracy metrics for text-to-image and image-to-image search scenarios.

Key Features of TTI-Eval

There are a few main things about TTI-Eval that make it a useful tool for developers and researchers:

- Generating custom embeddings from model-dataset pairs.

- Evaluating the performance of embedding models on custom datasets.

- Generating embedding animations to visualize performance.

Embeddings Generation

You can choose which models and datasets to use together to create embeddings, which gives you more control over the evaluation process.

Here’s how you can generate embeddings with known model and dataset pairs (CLIP, Alzheimer-MRI) from your command line with `tti-eval build`:

tti-eval build --model-dataset clip/Alzheimer-MRI --model-dataset bioclip/Alzheimer-MRI

Model Evaluation

TTI-Eval lets you choose which models and datasets to evaluate interactively to fully understand how well the embedding models work on the dataset.

Here’s how you can evaluate embeddings with known models and dataset pairs (bioclip, Alzheimer-MRI) from your command line with `tti-eval evaluate`:

tti-eval evaluate --model-dataset clip/Alzheimer-MRI --model-dataset bioclip/Alzheimer-MRI

Embeddings Animation

The library provides a visualization feature that enables users to visualize the reduction of embeddings from two models on the same dataset, which is useful for a comparative analysis.

To create 2D animations of the embeddings, use the CLI command `tti-eval animate`. You can select two models and a dataset for visualization interactively. Alternatively, you can specify the models and dataset as arguments. For example:

tti-eval animate clip bioclip Alzheimer-MRI

The animations will be saved at the location specified by the environment variable `TTI_EVAL_OUTPUT_PATH`. By default, this path corresponds to the `output` folder in the repository directory. Use the `-- interactive` flag to explore the animation interactively in a temporary session.

See the difference between CLIP and a fine-tuned CLIP variant on a dataset in an embedding space:

Visualizing CLIP vs. Fine-Tuned CLIP in embedding space.

Benefits of TTI-Eval in Data Curation

Through internal tests and early user adoption, we have seen how TTI-Eval helps teams curate datasets. By selecting the best embeddings, they know they work with the most relevant and high-quality data for their specific tasks.

Within Encord Active, TTI-Eval contributes to accurate model validation and label quality assurance by providing reliable estimates of class accuracy based on the selected embeddings.

Example Results and Custom Models

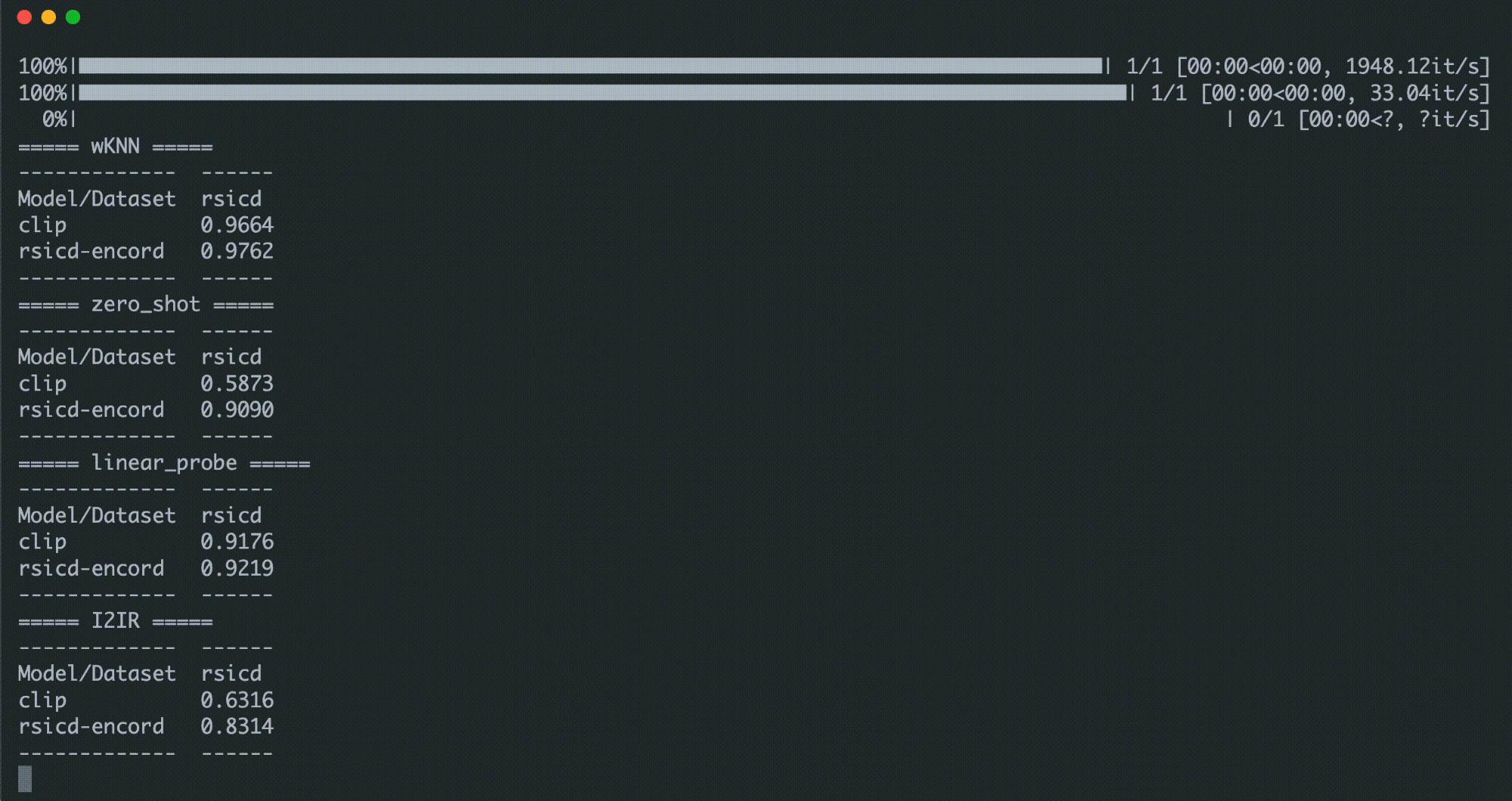

One example of where this `tti-eval` is useful is when testing different open-source models against different open-source datasets within a specific domain. Below, we focused on the medical domain.

We evaluated nine models (three of which are domain-specific) against four different medical datasets (skin-cancer, chest-xray-classification, Alzheimer-MRI, LungCancer4Types). Here’s the result:

The result of using TTI-Eval to evaluate different CLIP embedding models against four medical datasets.

The plot indicates that for multiple datasets [1, 3, 4], you can use any of the CLIP-based medical models for the medical datasets. However, there's no reason for the second dataset (`chest-xray-classification`) to use a larger and more expensive medical model since the results from smaller and cheaper models are comparable.

This helps you determine which model is ideal for your dataset and then

Getting Started with TTI-Eval

To get started with TTI-Eval in your Python notebook, follow these steps:

Step 1: Install the TTI-Eval library

Clone the repository:

git clone https://github.com/encord-team/text-to-image-eval.git

Navigate to the project directory:

cd text-to-image-eval

Install the required dependencies:

poetry shell

poetry install

Add environment variables:

export TTI_EVAL_CACHE_PATH=$PWD/.cache

export TTI_EVAL_OUTPUT_PATH=$PWD/output

export ENCORD_SSH_KEY_PATH=<path_to_the_encord_ssh_key_file>

Step 2: Define and instantiate the embeddings by specifying the model and dataset

Say we are using CLIP as the embedding model and the `Falah/Alzheimer_MRI` dataset:

from tti_eval.common import EmbeddingDefinition, Split

def1 = EmbeddingDefinition(model="clip", dataset="Alzheimer-MRI")

Step 3: Compute the embeddings of the dataset using the specified model

from tti_eval.compute import compute_embeddings_from_definition

embeddings = compute_embeddings_from_definition(def1, Split.TRAIN)

Step 4: Evaluate the model's performance against the dataset

from tti_eval.evaluation import I2IRetrievalEvaluator, LinearProbeClassifier, WeightedKNNClassifier, ZeroShotClassifier

from tti_eval.evaluation.evaluator import run_evaluation

evaluators = [ZeroShotClassifier, LinearProbeClassifier, WeightedKNNClassifier, I2IRetrievalEvaluator]

performances = run_evaluation(evaluators, [def1, def2])

Here’s the quickstart notebook to get started with TTI-Eval using Python.

We also prepared a CLI quickstart notebook guide that covers the basic usage of the CLI commands and their options for a quick way to test `tti-eval` without installing anything locally.

Conclusion

Our goal is for TTI-Eval to contribute significantly to the computer vision and multimodal AI community. We are actively working on developing tutorials to help you get the most out of TTI-Eval for evaluation purposes.

In the meantime, check out the TTI-Eval GitHub repository for more information, documentation, and notebooks to guide you. We are also actively working on tutorials to help you harness the full potential of TTI-Eval for evaluation purposes.

Explore the platform

Data infrastructure for multimodal AI

Explore product

Explore our products