Segment Anything Model (SAM)

Segment Anything Model (SAM) was introduced by Meta as the first foundation model for image segmentation, with impressive zero-shot inference. The Segment Anything project aims to democratize segmentation by introducing a new task, a new dataset, and a new model. SAM is trained on the Segment Anything 1-Billion mask dataset (SA-1B), the largest segmentation dataset released to date.

In the past, there were two main methods for addressing segmentation problems. The first was interactive segmentation, which could segment any type of object but required a human to guide the model by iteratively refining a mask. The second was automatic segmentation, which needed no guidance at inference time but could segment only pre-defined object categories and required a large number of manually labeled objects for training. Neither approach offered a general, fully automatic solution to segmentation. SAM combines both techniques in a single promptable model that can adapt to new tasks and domains, making it the first segmentation model to provide this kind of flexibility.

To learn more about the use of foundation models in computer vision, read the blog Visual Foundation Models Explained.

Segment Anything Model Architecture

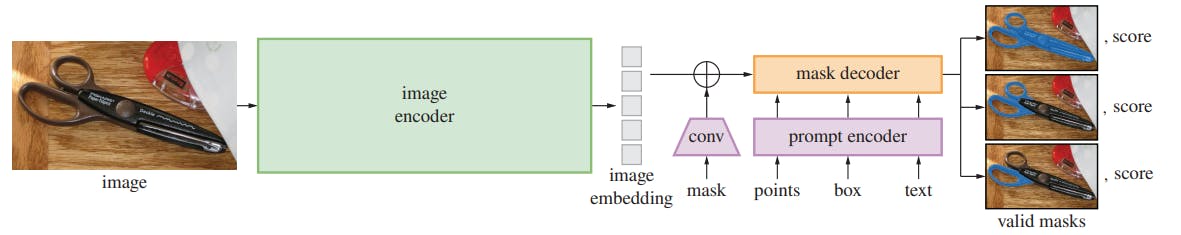

The Segment Anything model is split into two parts. The first is a featurization transformer block that takes an image and compresses it into a 256x64x64 feature tensor. These features are then passed to a decoder head that also accepts the model's prompts, whether a rough mask, labeled points, or a text prompt (note that text prompting was not released with the rest of the model).

Segment Anything model diagram. Source
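The 256x64x64 feature size can be reproduced with a little arithmetic. The sketch below assumes the settings of the publicly released SAM image encoder, which are not stated in this article: a 1024x1024 input, 16x16 pixel patches, and 256 output channels. The function name `sam_embedding_shape` is made up for illustration.

```python
def sam_embedding_shape(image_size: int = 1024,
                        patch_size: int = 16,
                        channels: int = 256) -> tuple:
    """Return (channels, height, width) of the encoder's feature map.

    The defaults are assumptions taken from the released SAM encoder:
    a square 1024-pixel input cut into 16x16 patches, 256 channels per patch.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    grid = image_size // patch_size  # number of patches along each side
    return (channels, grid, grid)


shape = sam_embedding_shape()
print(shape)  # (256, 64, 64)

# Compare how many numbers go in (RGB pixels) and come out (features).
input_values = 3 * 1024 * 1024
output_values = shape[0] * shape[1] * shape[2]
print(input_values / output_values)  # 3.0
```

Under these assumptions the encoder emits one 256-dimensional feature vector per 16x16 patch, so the embedding holds a third as many values as the raw RGB input.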

The Segment Anything architecture stands out because it assigns the heavy lifting of image featurization to a large transformer model and then trains a much lighter model on top. For deploying SAM to production, this makes for a smooth user experience: the featurization can run as inference on a backend GPU, while the smaller model runs in the web browser.
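The deployment pattern described above amounts to "encode once, decode many times". The following is a minimal sketch of that idea, not SAM itself: `InteractiveSegmenter`, `toy_encode`, and `toy_decode` are hypothetical stand-ins that only illustrate caching the expensive features so that each new prompt touches the cheap decoder alone.

```python
from typing import Callable, Dict, Hashable, List, Tuple

Embedding = List[float]   # stand-in for the 256x64x64 feature tensor
Point = Tuple[int, int]   # (x, y) click in image coordinates


class InteractiveSegmenter:
    """Encode each image once, then answer many prompts from cached features."""

    def __init__(self,
                 encode: Callable[[bytes], Embedding],
                 decode: Callable[[Embedding, Point], str]) -> None:
        self._encode = encode   # heavy step (the backend GPU in the text)
        self._decode = decode   # light step (the web browser in the text)
        self._cache: Dict[Hashable, Embedding] = {}
        self.encoder_calls = 0

    def segment(self, image_id: Hashable, image: bytes, click: Point) -> str:
        # Run the heavy encoder only the first time an image is seen.
        if image_id not in self._cache:
            self._cache[image_id] = self._encode(image)
            self.encoder_calls += 1
        return self._decode(self._cache[image_id], click)


# Toy stand-ins so the sketch runs without a model; they are NOT SAM.
def toy_encode(image: bytes) -> Embedding:
    return [b / 255.0 for b in image]


def toy_decode(embedding: Embedding, click: Point) -> str:
    return f"mask(features={len(embedding)}, click={click})"


segmenter = InteractiveSegmenter(toy_encode, toy_decode)
for click in [(10, 20), (30, 40), (50, 60)]:
    segmenter.segment("photo-1", b"\x00\x7f\xff", click)
print(segmenter.encoder_calls)  # 1: three prompts, one encoder pass
```

In a real deployment the cache would hold the embedding returned by the backend, and the decode step would be the lightweight prompt-conditioned model running client-side.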

If you want to know more about SAM, read the blog Meta AI's New Breakthrough: Segment Anything Model (SAM) Explained.