Customer Interview: Overcoming The Mental Torture of Manual Data Labelling

Dr. Bu Hayee, King’s College Hospital’s Director for Gastroenterology and Endoscopy, is a busy man, to put it mildly. As a practising physician and assistant professor, he has a number of competing interests: academic, strategic, research, and clinical.

In addition to supervising students and treating patients, he works closely with NHS England on their digital pathways and transformations, and he has a deep interest in how emerging technologies such as artificial intelligence and computer vision can increase the efficiency of patient care.

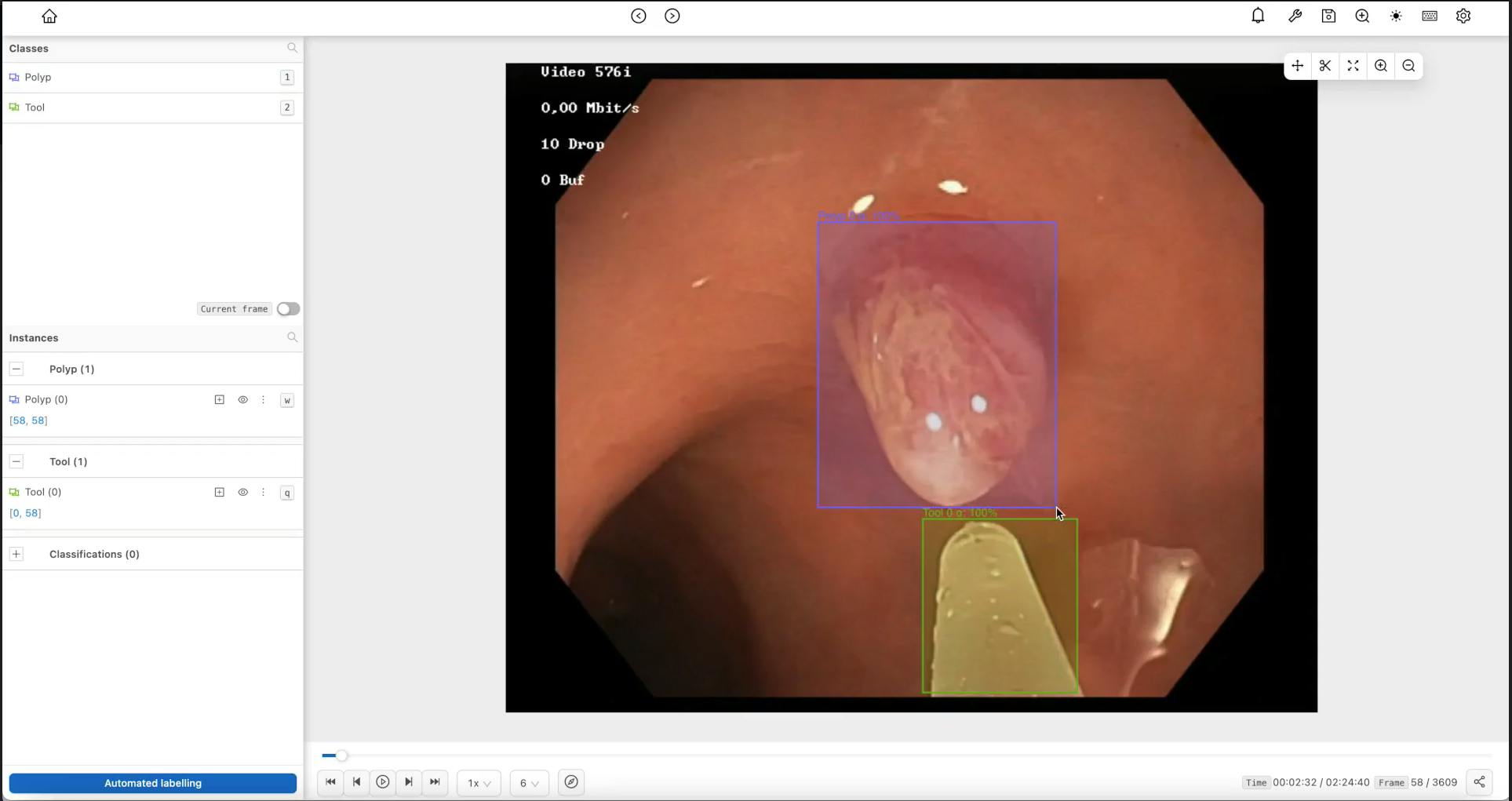

In 2020, Dr. Hayee helped supervise a collaboration between King’s College London and Encord, the platform for data-centric computer vision. The collaboration aimed to increase the speed at which doctors could annotate polyps in colonoscopy videos, which serve as training data for computer vision models that detect precancerous polyps.

Polyp detection in action

We recently sat down with Dr. Bu Hayee to hear more about his thoughts on the role of deep learning in medical diagnosis, his collaboration with Encord, and the changing landscape of medical AI.

The following interview has been edited for clarity and length.

How has medical AI evolved over the course of your career?

Dr. Hayee: It’s very new. We only began to see the practical application of medical AI in my speciality about three years ago. Endoscopy lends itself extremely well to computer vision because it’s a very image-oriented speciality. The diagnosis and evaluation of patients and the conditions they might have rely on image analysis, so computer vision is gaining a lot of traction in the field.

Although I have had a longstanding interest in machine learning and how it can be leveraged to provide better patient care, unfortunately, until very recently, these kinds of machine learning projects have been slow to deliver because training a model requires millions and millions of data points.

Preparing that data requires laborious image annotation and time-intensive quality control. These barriers make it difficult to move a model out of the research stage and into production. These roadblocks also mean that this technology has mostly been the domain of large industries as opposed to other, smaller actors that have been dissuaded from pursuing deep learning because of the challenges associated with training data curation and model development.

You mentioned data annotation as a laborious process. Could you describe what it’s like to annotate these images manually?

Dr. Hayee: It’s mental torture. Annotation is simply one of the most painful tasks that we have to perform in image-based research.

Labelling data is time-consuming, and it requires the attention of someone who understands the subject well enough to annotate the images. Reliable and highly accurate annotations are the bedrock of the machine learning process, so labelling has to be done properly. One option is to outsource the images to a company that provides annotation services, but since these annotators have no background in medicine, clinicians must educate them about the data, which is in itself a labour-intensive effort. Alternatively, a hospital can pay a highly trained medical professional with two postgraduate degrees to sit in front of a screen and click a mouse for hours, which is not an efficient or cost-effective use of time.

How did Encord’s tools change the image annotation experience for you?

Dr. Hayee: The built-in tools allowed us to rapidly annotate large numbers of data points. Before, using clinicians to annotate precancerous polyp videos had prohibitively high costs because of the large number of datasets needed to train the model. With Encord, we annotated the data over six times faster than using traditional methods.

That’s a fantastic result because at those rates we can still deploy highly qualified medical professionals to annotate data, but we don’t take up a huge amount of their time. Much of the task shifts to quality assurance, which is much quicker than annotating, because Encord’s micromodels automatically generated 97 percent of the labels. Rather than acting as annotators, we become the humans-in-the-loop who review the machine’s output, in this case labels, for accuracy.

Compared to other label assistance tools that we’ve worked with, Encord’s user experience is much smoother. The interface is intuitive, so it requires very little training to become a sort of “expert” at using all the functionality.

That’s what you want, right? The mark of a great product is in many ways its usability. For instance, you plug in your smartphone, it works, and you don’t need much training to operate it. That’s the kind of UX we’ve come to expect in the 21st century. When it comes to implementing medical AI, we’ll need that kind of experience because doctors aren’t programmers. We’re end users, and we don’t want to have to learn to code to use the product effectively. We want a product that complements our medical expertise and enhances our medical practice without requiring us to become computer science experts.

Encord's tools in action

What’s the greatest benefit that AI in general, and computer vision in particular, can bring to your field?

Dr. Hayee: In short, time: time for both patients and clinicians. We’ll be able to give patients real-time evaluations of what we’re seeing on the screen. We’ll be able to detect abnormalities visually with greater reliability and greater speed rather than having to wait for a microscopic confirmation. When it comes to patient care, physicians will have more time to dedicate to a care plan because we won’t have to depend on the current diagnostic pathways, such as waiting for the histology on a biopsy to come back from the lab before we make a care management plan.

AI will be incredibly useful in decision support. It’s not a decision replacement. The machine won’t replace the doctor and diagnose the patient itself, but it will provide a second pair of eyes, so to speak, for an already educated clinician. The machine can confirm the doctor's diagnosis, increasing the physician’s confidence so that they can make a management plan and treat the patient straight away. Alternatively, if the machine detects something different from what the doctor had thought, the doctor can take a bit more care, be a bit more cautious, and investigate a bit more thoroughly before making a diagnosis and devising a management plan. Both of those scenarios are positive in my book.

How do you think patients and medical professionals view adoption of AI for medical treatment?

Dr. Hayee: I think there’s some confusion around the technology’s role. Even calling this technology AI unnecessarily raises some hackles because a lot of people associate AI with The Terminator and I, Robot, two of the worst movies to have brought AI into the common consciousness because they perpetuate such a negative, unrealistic view of the technology and what it does.

When I talk to people about AI, I say: ‘Listen, these aren’t thinking machines or all-seeing eyes. They aren’t even as smart as a three-year-old child putting blocks into a hole. They’re not going to replace doctors or nurses.’

Medical professionals will become comfortable working daily with AI. In time, computer vision will become what our Texas Instruments calculators are to us now. It’ll make us better and faster at our jobs. I can do simple addition without my calculator, but the calculator augments what I do and improves my performance. That’s what AI is going to do for healthcare. The clinician will always be the person who decides, but the AI will give us additional information to make that decision, similar to how we make decisions about treatment based on blood test results.

You mentioned earlier that time was the greatest benefit that AI could provide to patients. Can you see AI eventually making a profound difference in early diagnosis for diseases?

Dr. Hayee: One would hope so.

Not catching a disease before it’s too late, or diagnosing a cancer at a late stage when an early diagnosis could have saved a life: those are the kinds of things that keep physicians up at night. They’re horrendous, and unfortunately, most of us have personal experiences of them. Physicians constantly wonder how they can keep such things from happening.

Right now, we don’t have enough proof to say with certainty that AI will be able to do that, but if you look logically at what AI purports to do, then hopefully it will only be a matter of time before we can definitively prove that AI is saving lives.

In healthcare, one of the biggest strengths and biggest weaknesses is the large amount of data that we generate. If we knew how to tap into it, I’m sure it could help us solve many of these problems. It could be a strategic asset that enables us to help our patients rather than just numbers on a page.

Products like Encord are going to help us do that because they’re allowing us to unlock the potential of that data faster and in a more democratising way. Not having to be a highly trained programmer to get started with an AI project or proposal opens all kinds of opportunities for innovation and new ideas from new actors. That’s got to be the way forward. If we're going to make progress, we can’t forever concentrate these efforts in big silos and big companies. We need to have more grassroots involvement, and products like Encord are going to help make that participation possible.
