Key Challenges in Video Annotation for Machine Learning

“Did you know? A 10-minute video at 30 frames per second has 18,000 frames, and each one needs careful labeling for AI training!”

Video annotation is essential for training AI models to recognize objects, track movements, and understand actions in videos, but it presents many challenges. This article explores those challenges and explains how tools like Encord help annotate video data faster and more accurately.

What is Video Annotation?

Video annotation is the process of labeling objects, actions, or events in video data so that machine learning (ML) models can recognize them when exposed to new video data. The process involves identifying and marking objects of interest across multiple frames with the help of annotation tools and different annotation types. The labeled (annotated) data serves as the foundation for training ML models to recognize, track, and understand patterns in video data. It is like giving machines a guidebook for understanding what is in the video.

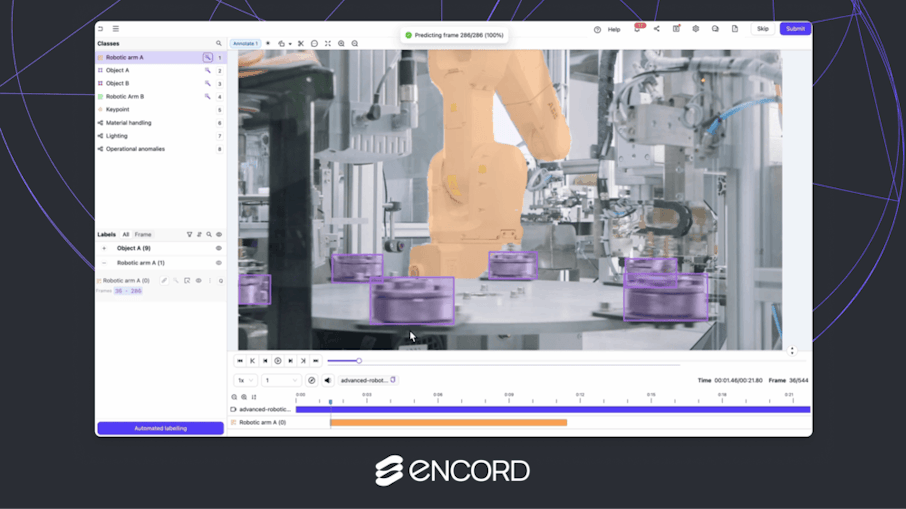

Video Annotation in Encord (Source)

Types of Video Annotations

Video annotation can take different forms depending on the specific use case. The following are the main methods used to annotate objects in a video frame.

Types of Annotations in Encord

Bounding Boxes Annotation

Bounding box annotation is a method of annotating an object using rectangular boxes. This type of annotation helps to identify and locate objects within an image or video. Bounding box annotation is used for tasks like object detection to recognize and locate multiple objects in a scene. It assists in training computer vision models to detect and track objects.

Bounding Box Annotation in Encord (Source)
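To make the idea concrete, here is a minimal sketch of how a bounding box label might be represented in code. The `BoundingBox` class and its field names are illustrative assumptions, not Encord's data model; the sketch assumes pixel coordinates with the origin at the top-left corner of the frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (origin at the top-left corner)."""
    label: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    def corners(self) -> tuple[float, float, float, float]:
        """Return (x_min, y_min, x_max, y_max)."""
        return (self.x, self.y, self.x + self.width, self.y + self.height)

    def contains(self, px: float, py: float) -> bool:
        """True if the point (px, py) lies inside or on the edge of the box."""
        x_min, y_min, x_max, y_max = self.corners()
        return x_min <= px <= x_max and y_min <= py <= y_max


# Hypothetical label for a car in a single frame.
car = BoundingBox(label="car", x=100, y=50, width=200, height=80)
print(car.corners())
print(car.contains(150, 60))
```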

Polygon Annotation

Polygon annotation is a method of annotating objects by drawing detailed and custom-shaped boundaries around them. Unlike bounding boxes, polygons can closely follow the contours of objects that have complex shapes. This type of annotation is particularly useful for tasks like detecting and segmenting objects with complex shapes, such as trees, buildings, or animals, in computer vision models.

Polygon annotation in Encord (Source)
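One way to see why polygons fit complex shapes more tightly than boxes is to compare areas. The sketch below uses the shoelace formula for the polygon's area and compares it with the area of the smallest axis-aligned box around the same points. The function names and the sample triangle are illustrative assumptions.

```python
def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def enclosing_box_area(points):
    """Area of the smallest axis-aligned box that contains every vertex."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# A triangular object: the box around it covers twice the object's area.
triangle = [(0, 0), (4, 0), (0, 3)]
print(polygon_area(triangle), enclosing_box_area(triangle))
```

The gap between the two numbers is background that a bounding box would label as part of the object.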

Keypoints Annotation

Keypoints annotation is the method of marking specific points of interest on an object such as the joints of a human body. These points help in identifying and tracking features of the object. Keypoint annotation is used to build computer vision applications like activity recognition, pose estimation, or facial expression analysis.

Keypoint Annotation in Encord (Source)
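As a small illustration of what keypoints make possible downstream, the sketch below computes the angle at a joint from three annotated points, which is a typical building block in pose analysis. The keypoint names and coordinates are made up for the example.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("outer keypoints must differ from the joint keypoint")
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against floating-point drift just outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


# Hypothetical keypoints for one arm in one frame (pixel coordinates).
pose = {"shoulder": (0, 0), "elbow": (1, 0), "wrist": (1, 1)}
print(joint_angle(pose["shoulder"], pose["elbow"], pose["wrist"]))
```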

Polyline Annotations

Polyline annotations are used to draw lines along objects like roads, lanes, or the paths of moving objects. Polyline annotation is used when we want to annotate non-closed shapes. This method is helpful for tasks such as lane detection in autonomous driving or tracking the movement of objects over time in video data.

Polyline Annotation in Encord
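A polyline is simply an ordered list of points that is not closed back on itself. As a minimal sketch, the function below measures the length of such a line, for example a lane marking, by summing its segments. The sample coordinates are illustrative.

```python
import math


def polyline_length(points):
    """Total length of an open polyline given as an ordered list of (x, y) points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Hypothetical lane marking traced with three vertices (pixel coordinates).
lane = [(0, 0), (3, 4), (3, 10)]
print(polyline_length(lane))
```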

Segmentation Mask Annotation

Segmentation mask annotation (also called bitmask annotation) is a method where each pixel in an image or video frame is labeled with a specific class, such as "car," "road," or "person." This pixel-level annotation provides a detailed understanding of the objects in an image or a video frame. It is used to build applications for scene understanding, medical imaging, or environmental monitoring.

Segmentation Mask (Bitmask) Annotation in Encord
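In code, a segmentation mask can be pictured as a grid with one class id per pixel. The sketch below uses a tiny 3x4 mask and counts the pixels per class. The class ids and names are assumptions made for the example, and real masks are usually stored as arrays or compressed encodings instead of nested lists.

```python
from collections import Counter

# Hypothetical mapping from class id to class name.
CLASS_NAMES = {0: "background", 1: "road", 2: "car"}

# A tiny 3x4 "frame": every pixel carries exactly one class id.
mask = [
    [0, 0, 2, 2],
    [1, 1, 2, 2],
    [1, 1, 1, 1],
]


def pixels_per_class(mask):
    """Count how many pixels belong to each class in the mask."""
    counts = Counter(class_id for row in mask for class_id in row)
    return {CLASS_NAMES[class_id]: n for class_id, n in counts.items()}


print(pixels_per_class(mask))
```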

Understanding the Complexity of Video Data

Video data is more complex than image or textual data because it has multiple dimensions, including spatial, temporal, and contextual information. This complexity makes it a challenging but essential component for artificial intelligence (AI) applications.

High Data Volume

Videos consist of sequences of frames (images) captured at high frame rates such as 30 or 60 frames per second. This generates a large amount of data even for short video clips. For example, a 10-second video at 30 fps has 300 frames to process and annotate. Higher resolutions, such as 4K, increase the data size and computational requirements. Videos require high storage capacity due to their size, and annotating them for machine learning requires advanced tools that can handle these large datasets efficiently.
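The arithmetic behind these numbers is easy to reproduce. The sketch below computes the frame count for a clip and a rough uncompressed size, assuming 8-bit RGB frames (3 bytes per pixel); real video files are compressed and much smaller, so treat the size as an upper bound on the raw pixel data.

```python
def frame_count(duration_seconds: float, fps: float) -> int:
    """Number of frames in a clip of the given length and frame rate."""
    return round(duration_seconds * fps)


def raw_size_bytes(frames: int, width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Uncompressed size of the frames, assuming 8-bit RGB by default."""
    return frames * width * height * bytes_per_pixel


short_clip = frame_count(10, 30)        # 10-second clip at 30 fps
long_clip = frame_count(10 * 60, 30)    # 10-minute clip at 30 fps
print(short_clip, long_clip)

# Raw pixel data for the 10-second clip at 1080p versus 4K, in gigabytes.
print(raw_size_bytes(short_clip, 1920, 1080) / 1e9)
print(raw_size_bytes(short_clip, 3840, 2160) / 1e9)
```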

Temporal Dimension

Unlike images, videos capture sequences of events over time, which adds a temporal layer of complexity. When annotating video, the relationships between successive frames must be understood to make sense of movements, interactions, or changes. For example, tracking a walking person involves understanding their motion across multiple frames. In applications like action recognition, temporal understanding is important for recognizing actions or events, such as detecting a vehicle slowing down or identifying two people shaking hands in surveillance footage.

Richness of Content

Videos often contain multiple objects interacting simultaneously. For example, in a traffic video you may see multiple pedestrians, vehicles, and cyclists. Annotating and understanding these interactions is complex, but it is important for applications like autonomous vehicles. Real-world videos also contain dynamic and unpredictable scenarios, such as changes in lighting or weather conditions, or even changes in the appearance of objects. This makes it challenging to maintain consistent annotations.

Object Motion and Tracking

Fast-moving objects in video can appear blurry in frames which makes it difficult to accurately detect and track them. For example, sports videos often have fast moving objects such as a ball traveling at high speed. Objects may also be partially or completely obscured by other objects which leads to complexity in detection or tracking. For example, a pedestrian walking behind a vehicle may be partially visible in certain frames.

As the points above show, video data is complex. Handling it requires advanced annotation tools and techniques, as well as powerful computational resources, to build machine learning models capable of capturing both spatial and temporal information. Because video is a key data source for AI applications, understanding and addressing this complexity is important for building effective AI solutions.

Key Challenges in Video Annotation

Video annotation is very important for training machine learning and computer vision models. However, annotating video is complex because video data is dynamic and multi-dimensional. The following are the key challenges in video annotation.

Scalability

Annotating video data requires labeling thousands to millions of frames, because videos often combine high resolution with high frame rates. This process is time-consuming and resource-intensive. For example, a 10-minute video at 30 frames per second (fps) contains 18,000 frames. Each frame must be labeled for objects, actions, or events, which could take days or weeks for a team of annotators. Manual annotation of such large-scale data becomes impractical without automated annotation tools, and it can lead to delays in project timelines or errors in the annotations.

Consistency Across Frames

Ensuring consistency in video data annotations across successive frames is difficult because the objects may change in appearance, size, or position. For example, a car driving into the frame may be labeled differently in terms of boundary size or position across multiple frames which may result in inconsistencies in annotations. This is a common issue when different annotators are working on the same project. Inconsistent annotations can result in poorly trained models with unreliable predictions.
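A simple automated check can catch some of these inconsistencies. The sketch below flags frames where a tracked object's box area changes sharply compared with the previous annotated frame. The `max_ratio` threshold of 1.5 and the sample track are assumptions chosen for illustration; a real project would tune the threshold to its footage.

```python
def flag_size_jumps(boxes, max_ratio=1.5):
    """Return frames whose box area differs from the previous annotated frame
    by more than max_ratio.

    boxes maps frame number -> (x, y, width, height).
    """
    flagged = []
    frames = sorted(boxes)
    for prev, cur in zip(frames, frames[1:]):
        prev_area = boxes[prev][2] * boxes[prev][3]
        cur_area = boxes[cur][2] * boxes[cur][3]
        if prev_area <= 0 or cur_area <= 0:
            flagged.append(cur)  # degenerate box: always worth a review
            continue
        ratio = max(prev_area, cur_area) / min(prev_area, cur_area)
        if ratio > max_ratio:
            flagged.append(cur)
    return flagged


# Hypothetical track of one car; frame 2 was drawn far too large.
track = {
    0: (100, 50, 40, 20),
    1: (104, 50, 41, 20),
    2: (108, 51, 80, 40),
    3: (112, 51, 42, 21),
}
print(flag_size_jumps(track))
```

Note that both the jump into the outlier (frame 2) and the jump back out of it (frame 3) are flagged, so a reviewer sees the whole suspicious stretch.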

Temporal Understanding

Temporal understanding in video annotation refers to the ability to analyze and interpret how objects, actions, or events change and move over time in a video. Unlike images, videos capture motion and sequences, so temporal understanding focuses on tracking these changes frame by frame. Annotating this temporal aspect is much more complex than labeling the static image data. For example, in a surveillance video, identifying "a person picking up an object" requires annotators to mark the entire sequence of frames where the action occurs and not just the key moments. If annotators mislabel actions or fail to annotate the entire sequence, it will reduce the ability of ML models to understand and recognize events.
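Marking "the entire sequence of frames" usually means turning a start and end time into a frame range. Here is a minimal sketch of that conversion, assuming frame `i` covers the time interval from `i / fps` up to (but not including) `(i + 1) / fps`. The function name is hypothetical.

```python
import math


def time_range_to_frames(start_s: float, end_s: float, fps: float):
    """Inclusive (first_frame, last_frame) covering the interval [start_s, end_s)."""
    if end_s <= start_s:
        raise ValueError("end_s must be greater than start_s")
    first = math.floor(start_s * fps)
    last = math.ceil(end_s * fps) - 1
    return first, last


# "A person picking up an object" from 2.0 s to 3.5 s in a 30 fps video.
first, last = time_range_to_frames(2.0, 3.5, 30)
print(first, last, last - first + 1)
```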

Handling Occlusions

Sometimes objects to be tracked become partially or fully occluded by other objects in video. This makes it hard to track and annotate such objects accurately. For example, in the image (center image) below the person on the right is partially occluded by the one on the left, making part of his body less visible. Annotators must predict his position in such cases. Incorrect labeling of occluded objects leads to incomplete data and reduces the ability of trained models to track objects in real-world scenarios.

Object Tracking during and after Occlusion (Source)
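One common way to estimate an occluded object's position is to interpolate between the last frame where it was clearly visible and the first frame where it reappears. The sketch below uses linear interpolation, which assumes roughly constant motion during the occlusion; this is a simple illustrative technique and not necessarily how any particular tool predicts positions.

```python
def interpolate_box(box_a, frame_a, box_b, frame_b, frame):
    """Linearly interpolate an (x, y, width, height) box between two keyframes."""
    if frame_a >= frame_b or not frame_a <= frame <= frame_b:
        raise ValueError("need frame_a < frame_b and frame_a <= frame <= frame_b")
    t = (frame - frame_a) / (frame_b - frame_a)
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))


# The person is visible at frames 10 and 20 but hidden in between.
visible_before = (0, 0, 10, 10)
visible_after = (20, 0, 10, 10)
print(interpolate_box(visible_before, 10, visible_after, 20, 15))
```

An annotator would still review the interpolated boxes, since objects often change speed or direction while they are hidden.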

Motion Blur and Poor Visibility

Objects in video that move fast may sometimes appear blurred. This makes it hard to define the boundaries or track such fast moving objects. For example, a fast moving ball in sports videos may appear as a streak which makes it challenging to annotate its exact position in a frame. Annotated data for such objects may lack precision which can affect the accuracy of models.

Fast moving Train causing a Motion Blur (Source)

Annotation Tools Limitations

Many existing annotation tools are not optimized for large-scale, complex video datasets and lack advanced automated annotation features. If an annotation tool does not support automated annotation, annotators must manually label objects in every frame of a video, which increases the workload. Inefficient tools slow down the annotation process and increase costs.

Cost and Expertise

Annotating video data is labor-intensive and requires skilled annotators. For example, annotating medical videos that consist of events such as surgical procedures requires domain-specific expertise to label tools, anatomy, and actions correctly. High costs and the need for specialized skills make video annotation less accessible for smaller projects or research groups.

Quality Assurance

Ensuring the accuracy of annotations across thousands of frames is a challenging task. Quality assurance becomes more difficult when multiple annotators with different skill levels are involved. For example, two annotators may label the same object differently in the same video, which leads to inconsistencies in the annotations. Poorly annotated data reduces the accuracy of ML models, so it is important to have strong quality control measures.
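A common way to quantify how much two annotators agree on a box is intersection over union (IoU): the overlap area divided by the combined area. The sketch below flags frames where agreement falls below a threshold or where only one annotator labeled the object. The 0.5 threshold and the sample labels are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, width, height) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def disagreements(labels_a, labels_b, threshold=0.5):
    """Frames where the annotators' boxes overlap too little, or only one labeled."""
    flagged = []
    for frame in sorted(set(labels_a) | set(labels_b)):
        if frame not in labels_a or frame not in labels_b:
            flagged.append(frame)
        elif iou(labels_a[frame], labels_b[frame]) < threshold:
            flagged.append(frame)
    return flagged


# Hypothetical labels for the same object from two annotators.
annotator_a = {0: (0, 0, 10, 10), 1: (0, 0, 10, 10), 2: (0, 0, 10, 10)}
annotator_b = {0: (1, 0, 10, 10), 1: (5, 0, 10, 10)}
print(disagreements(annotator_a, annotator_b))
```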

Real-Time Requirements

Some applications, such as autonomous driving or security surveillance, require annotations in real time to support quick decision-making. For example, annotating video data for autonomous cars requires labeling objects like pedestrians and traffic signals within milliseconds, because these objects move quickly and events unfold across only a few frames. Real-time annotation of such events requires advanced annotation tools.

How Encord helps in Video Annotations

Encord helps teams achieve high-quality video annotation with granular tooling, customizable workflows, and automated pipelines, including for complex computer vision annotation tasks. The following are some important features of the Encord platform that support high-quality annotation.

AI-Assisted Annotation Tools

Encord uses AI-assisted annotation to simplify and speed up the annotation process. Its automated object tracking feature follows objects across frames and helps keep annotations consistent. The platform also integrates the Segment Anything Model (SAM), which automatically segments objects in video frames. Encord’s AI-assisted labeling lets you use state-of-the-art (SOTA) foundation models as well as your own custom models to pre-label data, which means the models automatically suggest annotations for objects, actions, or events in your videos. With these models integrated directly into the workflow, annotators spend less time on manual labeling and more on refining the results.

AI-assisted labeling with SOTA foundation models (Source)

Comprehensive Annotation Capabilities

Encord offers a collection of annotation tools to meet the needs of different video annotation projects. It provides bounding box annotation for object detection tasks, a polygon annotation tool for irregularly shaped objects, and a keypoint annotation tool for pose estimation projects. Dynamic attributes in the Encord platform help ensure that annotations capture how an object changes over time in a video. For example, if you’re annotating a video of a car, you can track attributes such as its speed, color, or direction and update them as they change across frames. This is especially useful for videos where objects or their characteristics are not static. By capturing these changes, Encord helps create more detailed and accurate datasets, which is important for training advanced ML models for applications such as autonomous driving, activity recognition, or surveillance systems. The following image shows the annotation of a moving hen using a dynamic attribute.

Working with Dynamic Attributes in Encord (Source)

This capability makes Encord well suited to complex annotation needs.
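To illustrate the general idea of an attribute that changes over time, here is a minimal sketch that stores a value only at the frames where it changes and looks up the value in effect at any frame. The `DynamicAttribute` class is a hypothetical illustration and does not reflect Encord's internal data model or API.

```python
from bisect import bisect_right


class DynamicAttribute:
    """Hypothetical container: a value holds from the frame where it is set
    until the next frame where it changes."""

    def __init__(self):
        self._frames = []   # sorted frames at which the value changes
        self._values = []   # value starting at the matching frame

    def set(self, frame, value):
        i = bisect_right(self._frames, frame)
        if i > 0 and self._frames[i - 1] == frame:
            self._values[i - 1] = value   # overwrite an existing change point
        else:
            self._frames.insert(i, frame)
            self._values.insert(i, value)

    def value_at(self, frame):
        """Value in effect at the given frame, or None before the first change."""
        i = bisect_right(self._frames, frame)
        return self._values[i - 1] if i > 0 else None


# A car heads north, then turns east at frame 120.
direction = DynamicAttribute()
direction.set(0, "north")
direction.set(120, "east")
print(direction.value_at(60), direction.value_at(120), direction.value_at(500))
```

Storing only the change points keeps the annotation compact: two entries describe the attribute for every frame of the clip.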

Scalability for Large Video Datasets

Encord is built to handle large video datasets with ease and efficiency. It allows annotators to work on multiple videos at the same time which saves time and effort. Automated workflows make it simple to manage data by organizing tasks like importing, annotating, and exporting videos. The performance analytics feature offers clear insights into the progress and quality of annotations which helps teams to manage large-scale projects effectively.

Collaboration and Quality Assurance

Encord makes teamwork and quality control easier by offering features that help teams work together and ensure high-quality annotations. Teams can create custom workflows to suit their needs, which may include steps for annotating, reviewing, and approving data. Multiple team members can work on the same project at the same time, with real-time updates that keep everyone in sync. Encord also helps teams review and check annotations systematically so that they are accurate and consistent.

Advanced Features for Temporal Data

Encord is well suited to video data in which objects change over time. It provides a frame synchronization feature to keep annotations consistent across multiple frames, even when annotated objects are moving or the background is changing. Encord also supports time-series annotation, which means that you can annotate events or actions that happen over time, and action segmentation, which breaks continuous actions down into smaller sub-segments. These features help annotators get the most out of video data by focusing on how things change over time.

Video annotation using Encord (Source)

The image shows an annotation of a warehouse video using Encord. In this annotation Autonomous Mobile Robots (AMRs) and inventory are labeled with color-coded overlays for object detection and tracking. The timeline highlights active frames for each annotation, with options for automated labeling and manual adjustments.
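Action segmentation can be pictured as collapsing a per-frame label sequence into contiguous segments, much like the active ranges on a timeline. The sketch below does this with `itertools.groupby`; the labels are made up and the function is an illustration of the concept, not a description of how Encord implements it.

```python
from itertools import groupby


def labels_to_segments(frame_labels):
    """Collapse per-frame labels into (label, first_frame, last_frame) segments."""
    segments = []
    frame = 0
    for label, run in groupby(frame_labels):
        length = len(list(run))
        segments.append((label, frame, frame + length - 1))
        frame += length
    return segments


# One label per frame for a short hypothetical clip.
per_frame = ["walk", "walk", "pick", "pick", "pick", "walk"]
print(labels_to_segments(per_frame))
```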

Integration with Machine Learning Pipelines

Encord provides APIs and SDKs that make it simple to create workflow scripts. This helps to quickly develop effective data strategies. You can set up advanced pipelines and integrations in just minutes to save time and effort. Encord also provides various data export options and supports various formats for easy integration into training pipelines which are compatible with different ML frameworks.

Encord SDK (Source)
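As an example of what an export step for a training pipeline can look like, the sketch below converts per-frame bounding boxes into a COCO-style dictionary, a layout many detection frameworks can read. It deliberately uses only the Python standard library and does not call the Encord SDK; the function name, the input layout, and the frame file naming scheme are all assumptions for illustration.

```python
import json


def to_coco(video_name, width, height, boxes_by_frame, categories):
    """Build a COCO-style dict from frame -> [(category, x, y, w, h), ...]."""
    cat_ids = {name: i + 1 for i, name in enumerate(categories)}
    images, annotations = [], []
    for frame in sorted(boxes_by_frame):
        image_id = frame + 1
        images.append({
            "id": image_id,
            # Hypothetical naming scheme for extracted frames.
            "file_name": f"{video_name}_{frame:06d}.jpg",
            "width": width,
            "height": height,
        })
        for name, x, y, w, h in boxes_by_frame[frame]:
            annotations.append({
                "id": len(annotations) + 1,
                "image_id": image_id,
                "category_id": cat_ids[name],
                "bbox": [x, y, w, h],   # COCO boxes are [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
    return {
        "images": images,
        "annotations": annotations,
        "categories": [{"id": i, "name": n} for n, i in cat_ids.items()],
    }


coco = to_coco(
    "clip", 1920, 1080,
    {0: [("car", 100, 50, 200, 80)], 1: [("car", 104, 50, 200, 80)]},
    ["car", "person"],
)
print(json.dumps(coco, indent=2)[:200])
```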

In summary, Encord provides tools to address the challenges in video annotation discussed above, and thus helps in building advanced ML models that can recognize objects, actions, or events in video data.

Key Takeaways

Video annotation is crucial for training machine learning (ML) models to detect and track objects, actions, and events in videos. It powers applications like self-driving cars, surveillance systems, and activity recognition by providing well-labeled data. However, it comes with its own set of challenges.

Why Video Annotation Matters: Video annotation helps ML models understand and analyze video content to make it possible to recognize patterns, track movements, and detect events over time.

Challenges in Video Annotation: There are many challenges in video annotation such as:

- Videos contain a lot of frames which makes annotation time-consuming.

- Keeping annotations accurate and consistent across frames is hard.

- Occlusions (objects blocking each other), motion blur, and changes over time are difficult to annotate.

- Handling large datasets efficiently can be challenging.

- Not all tools can handle advanced annotation needs or real-time requirements.

How Encord Helps: Encord simplifies video annotation with AI-assisted tools, automated object tracking, and a variety of annotation options. It supports large datasets, allows easy integration with ML pipelines, and ensures high-quality results through workflow automation. This makes the process faster, more accurate, and scalable.

Video annotation is the process of labeling objects, actions, or events in video frames to help machine learning (ML) models understand and recognize them. It involves marking objects of interest across multiple frames using annotation tools, providing essential training data for AI models.

Video annotation enables AI models to detect and track objects, recognize actions, and understand events over time. It is essential for applications like autonomous driving, security surveillance, medical imaging, and activity recognition.

Bounding Box Annotation: Uses rectangular boxes to mark objects.

Polygon Annotation: Draws custom-shaped boundaries around objects for better accuracy.

Keypoint Annotation: Marks specific points on objects, such as human joints, for pose estimation.

Polyline Annotation: Draws lines for tracking paths, such as road lanes.

Segmentation Mask Annotation: Labels each pixel in a frame for detailed object identification.

High data volume, temporal understanding, occlusions, motion blur, consistency across frames

Challenges may arise when external annotators lack clarity on annotation tasks or do not utilize tools like SAM, leading to inefficiencies and reduced quality in the annotated images. Companies often find that in-house annotation allows for better control over quality and speed, especially when dealing with specific requirements.

Encord allows for extensive customization in labeling, enabling users to create custom labels at both the video and frame levels. For example, users can label an entire video with a general category, like 'critical conflict', while also tagging specific timestamps within the video for more detailed analysis.

Encord provides functionality for video annotation, which is particularly useful for teams looking to expand beyond image-based data. This capability enhances model training processes by allowing the use of diverse data types, ultimately improving the performance of AI models.

Yes, Encord's platform is designed to accommodate specialized annotation tasks, allowing filmmakers and other experts to contribute their insights on specific aspects of video content. This ensures that complex projects benefit from both general and specialized skill sets.

Yes, Encord offers highly customizable labeling parameters, allowing users to define specific attributes for different match events. Users can add fields for various classifications, such as whether a player is in possession of the ball or if a penalty is present.

Encord provides a platform that enables users to find, label, and validate video data with human annotators. This process is essential for training AI models to recognize specific objects or scenes within videos, ensuring accurate and effective model performance.

Yes, Encord supports video annotation and provides advanced features to facilitate automatic labeling of video data. The platform is designed to optimize the annotation process, helping users efficiently prepare their datasets for training AI models.

Encord provides tools to extract frames from videos for annotation, enabling users to focus on the most relevant frames for model processing. This is particularly useful for scenarios where specific user positioning or defect detection is critical.

Yes, Encord allows users to add custom tags to annotations. This includes creating specific classes for objects and assigning multiple choice options for attributes, such as breed, which facilitates detailed analysis and data management.

Yes, Encord is designed to support video native annotation, allowing users to efficiently label and annotate video data. This capability is particularly beneficial for projects requiring detailed analysis of video content.