Encord Blog

OpenAI’s DALL-E 3 Explained: Generate Images with ChatGPT

In the field of image generation, OpenAI continues to push the boundaries of what’s possible. On September 20th, 2023, Sam Altman announced DALL-E 3, OpenAI's newest text-to-image model.

Backed by Microsoft, OpenAI is using ChatGPT's surging popularity to maintain its lead in generative AI, a critical move given the escalating competition from industry titans like Google, with its Bard chatbot, and emerging disruptors like Midjourney and Stability AI.

DALL-E 3: What We Know So Far

DALL-E 3 is a text-to-image model built on DALL-E 2 and ChatGPT. It excels at translating textual descriptions into highly detailed and accurate images.

Watch the demo video for DALL-E 3!

While this powerful AI model is still in research preview, there's already a lot to be excited about. Here's a glimpse into what we know so far about DALL-E 3:

Eliminating Prompt Engineering

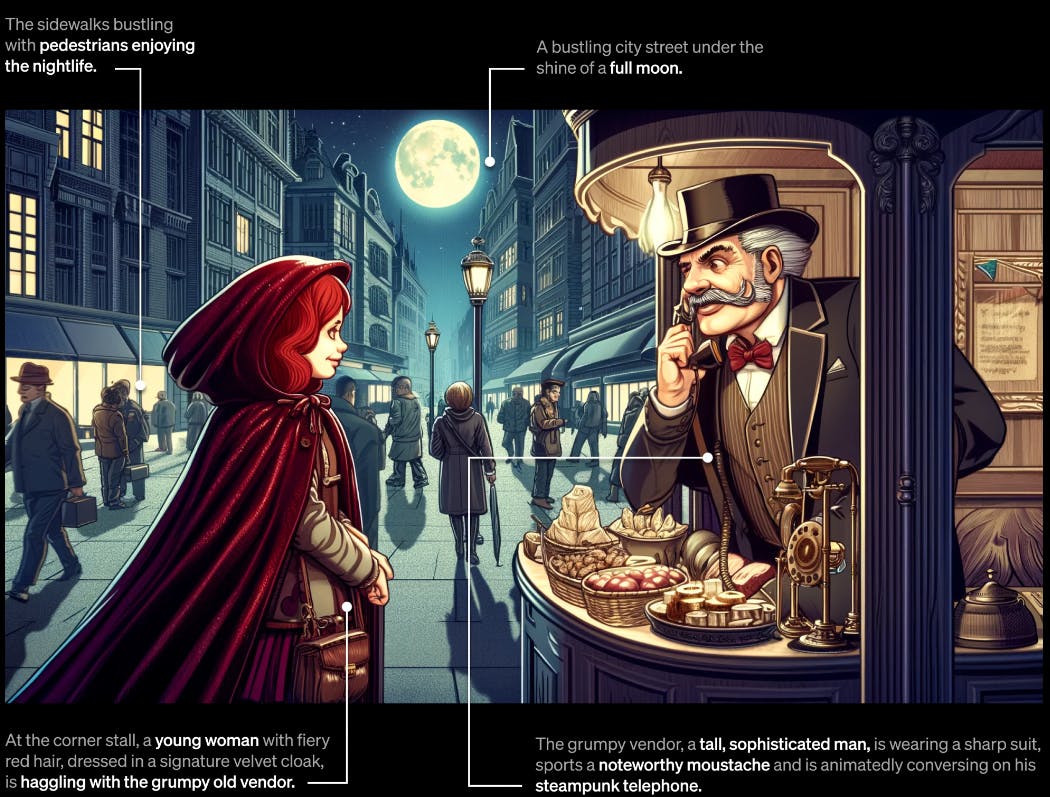

DALL-E 3 is set to redefine how we think about generating images from text. Modern text-to-image systems often ignore words or descriptions in a prompt, forcing users to learn prompt engineering to get the image they want. In contrast, DALL-E 3 is a significant step forward in generating images that adhere closely to the text provided, largely removing the need for prompt engineering.

Integrated with ChatGPT, DALL-E 3 acts as a creative partner, letting users bring their ideas to life by generating tailored images from prompts that range from simple sentences to detailed paragraphs.

Improved Precision

Earlier versions of DALL-E, like other generative AI models, had trouble interpreting complex text prompts and often blended two separate concepts into one when generating images. DALL-E 3 is designed to follow text prompts far more precisely, capturing more of their nuance and detail.

Focus on Ethical AI

OpenAI is acutely aware of the ethical considerations that come with image generation models. To address these concerns, DALL-E 3 incorporates safety measures that restrict the generation of violent, adult, or hateful content. Moreover, it has mitigations in place to avoid generating images of public figures by name, thereby safeguarding privacy and reducing the risk of misinformation.

OpenAI's commitment to ethical AI is further underscored by its collaboration with red teamers and domain experts. These partnerships aim to rigorously test the model and identify and mitigate potential biases, ensuring that DALL-E 3 is a responsible and reliable tool.

Just this week, OpenAI unveiled the "OpenAI Red Teaming Network," a program designed to recruit experts across diverse domains. The aim is to have these experts evaluate OpenAI's models, informing risk assessment and mitigation strategies throughout the entire lifecycle of model and product development.

Transparency

As AI-generated content becomes more prevalent, the need for transparency in identifying such content grows. OpenAI is actively researching ways to help people distinguish AI-generated images from those created by humans. They are experimenting with a provenance classifier, an internal tool designed to determine whether an image was generated by DALL-E 3. This initiative reflects OpenAI's dedication to transparency and responsible AI usage.

This latest iteration of DALL-E is scheduled for an initial release in early October, starting with ChatGPT Plus and ChatGPT Enterprise customers, with availability in OpenAI's Labs and through its API service to follow later in the autumn. OpenAI intends to roll out DALL-E 3 in phases but has not yet confirmed a specific date for a free public release.

When DALL-E 3 is launched, you'll discover an in-depth explanation article about it on Encord! Stay tuned!

Recommended Topics for Pre-Release Reading

To brace yourself for the release and help you dive right into it, here are some suggested topics you can explore:

Transformers

Transformers are foundational architectures in the field of artificial intelligence that changed the way machines process sequential data. Unlike recurrent models, which step through a sequence one element at a time, Transformers process all positions of a sequence in parallel, which makes them efficient to train on modern hardware. They use attention mechanisms to weigh the importance of different elements in a sequence, enabling tasks such as language translation, sentiment analysis, and image generation. Transformers have become the cornerstone of modern AI, underpinning advanced models like DALL-E and ChatGPT.
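To make the idea of attention concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration only, not how DALL-E or ChatGPT is implemented: the function names and toy vectors are made up for this example, and real Transformers add learned projection matrices, multiple heads, and many stacked layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    # Each query is handled independently of the others, which is why
    # real implementations can compute every position in parallel.
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        mixed = [sum(w * v[d] for w, v in zip(weights, values))
                 for d in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "token" vectors (hypothetical numbers, purely illustrative).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: the same vectors act as queries, keys, and values.
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence. The first token puts less weight on the second token, with which it shares no component, than on itself or the third.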

For more information about Vision Transformers read Introduction to Vision Transformers (ViT)

Foundation Models

Foundation models are the bedrock of contemporary artificial intelligence, representing a transformative breakthrough in machine learning. These models are pre-trained on vast datasets, equipping them with a broad understanding of language and knowledge. GPT-3 and DALL-E, for instance, are prominent foundation models developed by OpenAI. These models serve as versatile building blocks upon which more specialized AI systems can be constructed. After pre-training on extensive text data from the internet, they can be fine-tuned for specific tasks, including natural language understanding, text generation, and even text-to-image conversion, as seen in DALL-E 3. Their ability to generalize knowledge and adapt to diverse applications underscores their significance in AI's rapid advancement.

Foundation models have become instrumental in numerous fields, including large language models, AI chatbots, content generation, and more. Their capacity to grasp context, generate coherent responses, and perform diverse language-related tasks makes them invaluable tools for developers and researchers. Moreover, the flexibility of foundation models opens doors to creative and practical applications across various industries.

For more information about foundation models read The Full Guide to Foundation Models

Text-to-Image Generation

Text-to-image generation is a field of artificial intelligence that bridges the gap between textual descriptions and visual content creation. AI models in this domain use neural networks to translate written text into detailed images. These models interpret the textual input, capturing details, colors, and context to produce a matching visual representation. Text-to-image generation finds applications in art, design, content creation, and more, offering a powerful tool for bringing creative ideas to life. As the field continues to advance, it could change how we communicate and create visual content, opening up new possibilities for artists, designers, and storytellers.

Read the paper Zero-Shot Text-to-Image Generation by A. Ramesh et al. from OpenAI to understand how the original DALL-E generates images!


Written by

Nikolaj Buhl
