Customer Interview: Annotating and Reviewing Medical Imaging Data with Encord

Dr. Hamza Guzel, a radiologist with the Turkish Ministry of Health, is an expert at diagnosing and treating medical conditions using medical imaging technology. Guzel also works with Floy, a medical AI company developing technology that helps radiologists detect lesions, where he helps prepare the medical imaging data used to train Floy’s machine learning models. He supervises three junior radiologists, reviewing their annotations for accuracy and quality, and works on product development with Floy’s machine learning team.

In the Q&A below, Dr. Guzel answers questions about what it’s like to use Encord to review and annotate medical imaging data.

What other platforms and training data tools did you use before finding Encord?

We started with open source tools like ITK-SNAP and 3D Slicer before trying off-the-shelf commercial tools. We ran into different problems with each of these platforms, which kept us looking for a tool that could meet the needs of our annotators and reviewers. We want the annotation and review process to be as fast and easy as possible, but we also need the labels to be specific and precisely drawn.

What sort of problems did you run into with these labeling platforms?

In medical imaging annotation, we’re labeling millimetric objects, and the outlines of our annotations have to be precise, especially when we’re labeling to build AI detection systems. With the open source platforms, the annotators ran into problems with border resolution, including limits on how precisely they could draw the outer borders of objects.

The open source platforms were also hosted locally, offline, on the individual annotators’ computers. This wasn’t ideal because it didn’t allow for seamless collaboration between the three annotators and one reviewer working on the project.

But with the commercial off-the-shelf platforms, we ran into different issues. The first platform we tried wouldn’t let us organize patient data in a way that reflects a patient’s medical file. In radiology, one patient has a number of different series, and a series contains between 50 and 200 images. A patient may have five series, for instance, but it’s all still data that belongs to that one patient. That platform wouldn’t let us create individual patient folders containing multiple series, so the data was all mixed up.

Another off-the-shelf platform didn’t have this organizational problem, but it had different and more serious issues. It took a long time to open images. Medical images are large, often many gigabytes, and the platform struggled to stream them in a reasonable amount of time. Medical images are made up of multiple slices, and moving from one slice to the next was not a smooth experience. The more annotations we had made on a slice, the longer it took to bring up the next slice.

The bigger problem was that we had accuracy issues with the platform. In the end, we couldn’t trust it. Once, the annotations were deleted without explanation, and we lost about 40 hours of work. Another time, by chance, I checked a dataset before sending it to the machine learning team, and all of the annotations had shifted by a couple of millimeters. They no longer accurately identified the correct objects. If a model trains on mislabelled data, it will learn to make inaccurate predictions. That’s a disaster for a company developing medical AI.

Did using Encord solve these issues?

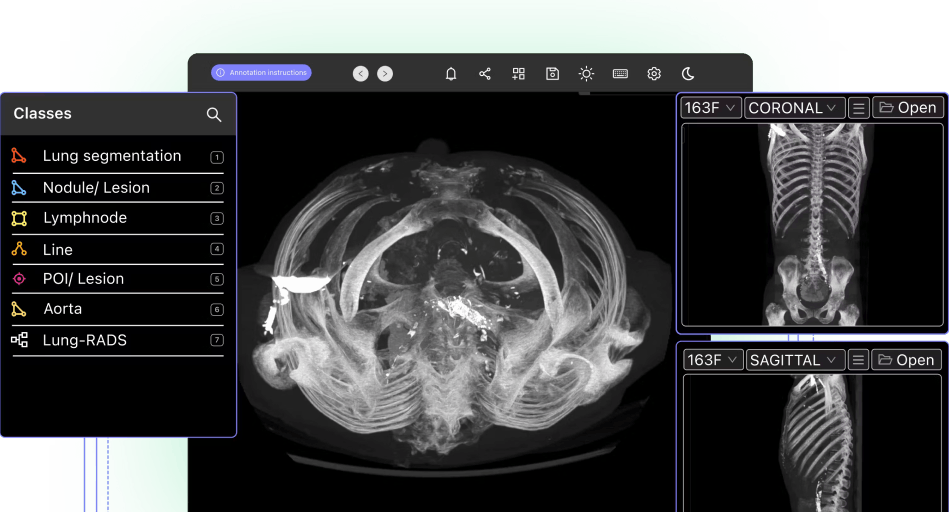

Yes, it did. The organizational issue was not a problem in Encord, and with Encord’s freehand annotation tool, we can label data however we want. We can decrease the distance between the dots on boundaries so that we can work at the millimeter scale we need to label lesions and other objects precisely. Labeling is also a smooth experience: it’s very easy to draw on the image and move from one image slice to another.

The time it takes to annotate an image varies depending on several things, including the modality and the difficulty of a patient’s case. That said, using the other platforms, annotating a simple series could take 15 minutes, while a complicated series, such as one with advanced cancer, could take an hour. In MRI, we have about 23 images per series; in CT, we have about 250, so for some CT cases it could take us four hours to label just one patient’s data.

Which of Encord’s labeling features do you and your team find particularly helpful?

The dark mode. We all use it, and we all love it.

Dark mode in Encord for viewing DICOM images in 3D

You can also create a label and keep using that same label as you move from slice to slice labeling the same object. If a patient has five lesions that need to be labeled, and each lesion appears on 10 slices, then you need to draw 50 annotations, but you don’t need 50 different identifying labels. You only need five, one for each lesion. Other platforms made us use 50 unique labels. Having only five labels is actually crucial for training the model, because the model needs to understand that a lesion is the same one even when it appears on multiple slices.
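To make the distinction concrete, here is a minimal sketch of why persistent object identities matter. It assumes a hypothetical in-memory representation; `SliceAnnotation`, `object_id`, `build_annotations`, and `group_by_object` are illustrative names, not Encord’s export format. Each outline drawn on a slice carries the ID of the object it belongs to, so five lesions across 10 slices yield 50 outlines but only five objects.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class SliceAnnotation:
    """One drawn outline on one slice, linked to a persistent object (hypothetical schema)."""
    object_id: str                       # one ID per lesion, reused on every slice
    slice_index: int                     # position of the slice within the series
    polygon: Tuple[Tuple[int, int], ...]  # outline vertices as (x, y) pixel pairs


def build_annotations(num_lesions: int, num_slices: int) -> List[SliceAnnotation]:
    """Create one placeholder outline per lesion per slice."""
    annotations = []
    for lesion in range(num_lesions):
        object_id = f"lesion-{lesion + 1}"
        for s in range(num_slices):
            # Placeholder square; real outlines would come from the annotator.
            square = ((0, 0), (1, 0), (1, 1), (0, 1))
            annotations.append(SliceAnnotation(object_id, s, square))
    return annotations


def group_by_object(annotations: List[SliceAnnotation]) -> Dict[str, List[int]]:
    """Collect the slice indices of every outline that belongs to the same object."""
    tracks: Dict[str, List[int]] = defaultdict(list)
    for ann in annotations:
        tracks[ann.object_id].append(ann.slice_index)
    return dict(tracks)


annotations = build_annotations(num_lesions=5, num_slices=10)
tracks = group_by_object(annotations)
print(len(annotations))  # 50 drawn outlines
print(len(tracks))       # 5 distinct objects
```

Grouping by a shared ID is what lets a training pipeline treat the outlines on different slices as views of one lesion rather than 50 unrelated objects.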

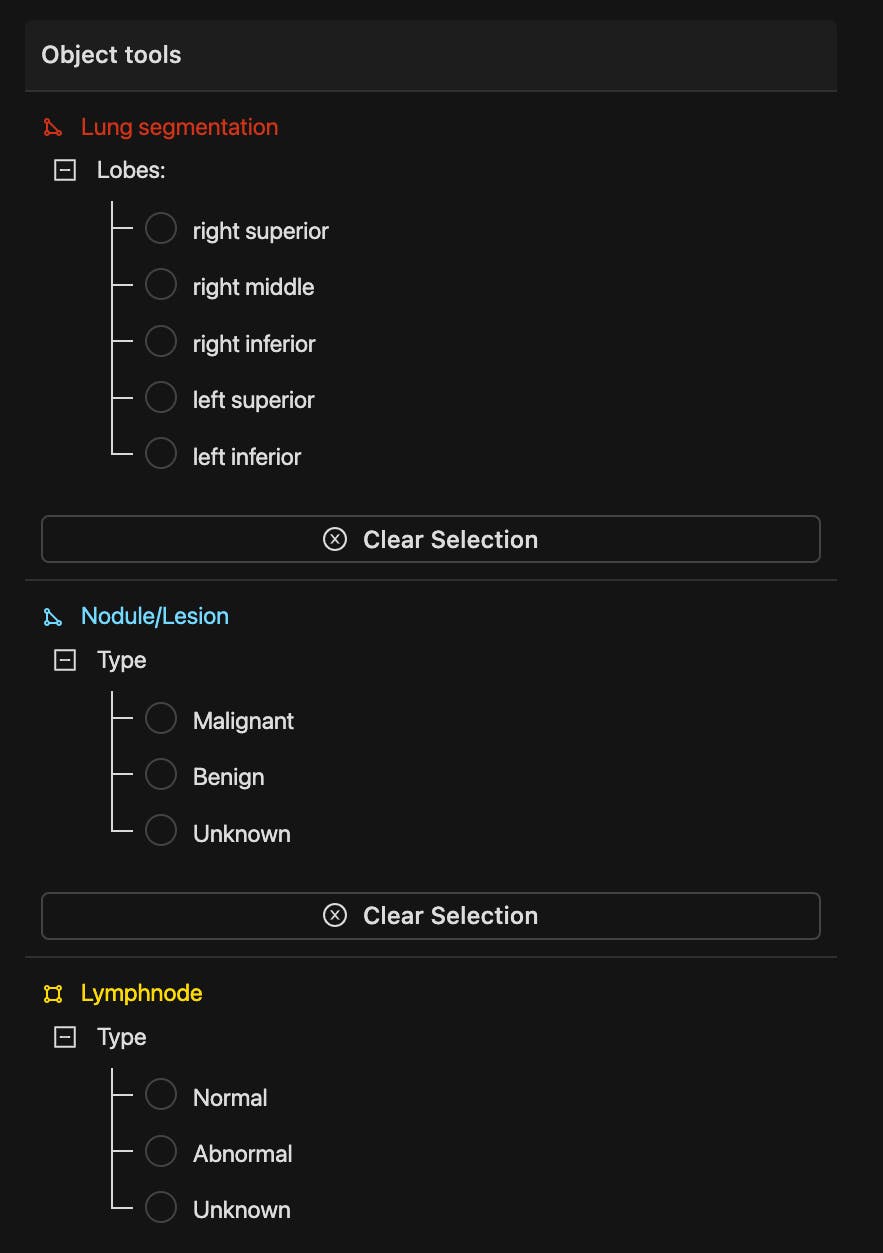

Flexible ontologies are also really useful. If you are creative, you can solve a lot of problems with them. For instance, before Encord, we used a spreadsheet to list series we wanted to exclude from training. Using a spreadsheet left us vulnerable to quality control issues because someone could accidentally change it. In Encord, we created an “exclude” ontology. Then, we wrote five different reasons for exclusion, such as “wrong image.” It worked perfectly. When we extracted these features, we could automatically see the excluded series.
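The advantage over a spreadsheet is that the exclusion decision travels with the labels themselves. As a minimal sketch, assuming a hypothetical export that maps each series ID to the reason selected from the “exclude” ontology (or `None` when the series is kept), downstream code can separate training data from excluded series without consulting a separate, editable file. The series IDs, the `split_series` helper, and the “other reason” value are illustrative; only “wrong image” comes from the interview.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical export: series ID -> exclusion reason chosen from the
# "exclude" ontology, or None if the series was not excluded.
series_labels: Dict[str, Optional[str]] = {
    "patient-01/series-1": None,
    "patient-01/series-2": "wrong image",
    "patient-02/series-1": None,
    "patient-02/series-2": "other reason",  # placeholder reason
}


def split_series(
    labels: Dict[str, Optional[str]],
) -> Tuple[List[str], Dict[str, str]]:
    """Separate series kept for training from excluded ones, keeping each reason."""
    keep: List[str] = []
    excluded: Dict[str, str] = {}
    for series_id, reason in labels.items():
        if reason is None:
            keep.append(series_id)
        else:
            excluded[series_id] = reason
    return keep, excluded


keep, excluded = split_series(series_labels)
print(keep)      # ['patient-01/series-1', 'patient-02/series-1']
print(excluded)  # {'patient-01/series-2': 'wrong image', 'patient-02/series-2': 'other reason'}
```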

Example of a radiology ontology in Encord

From a reviewer's perspective, which of Encord’s features are most useful?

The platform facilitates the best communication between annotator and reviewer that I’ve seen.

Annotators can leave notes for the reviewer while they are annotating, asking questions like “Could you check this lesion?” or “I’m not sure if this is cancer or not. Could you review?”

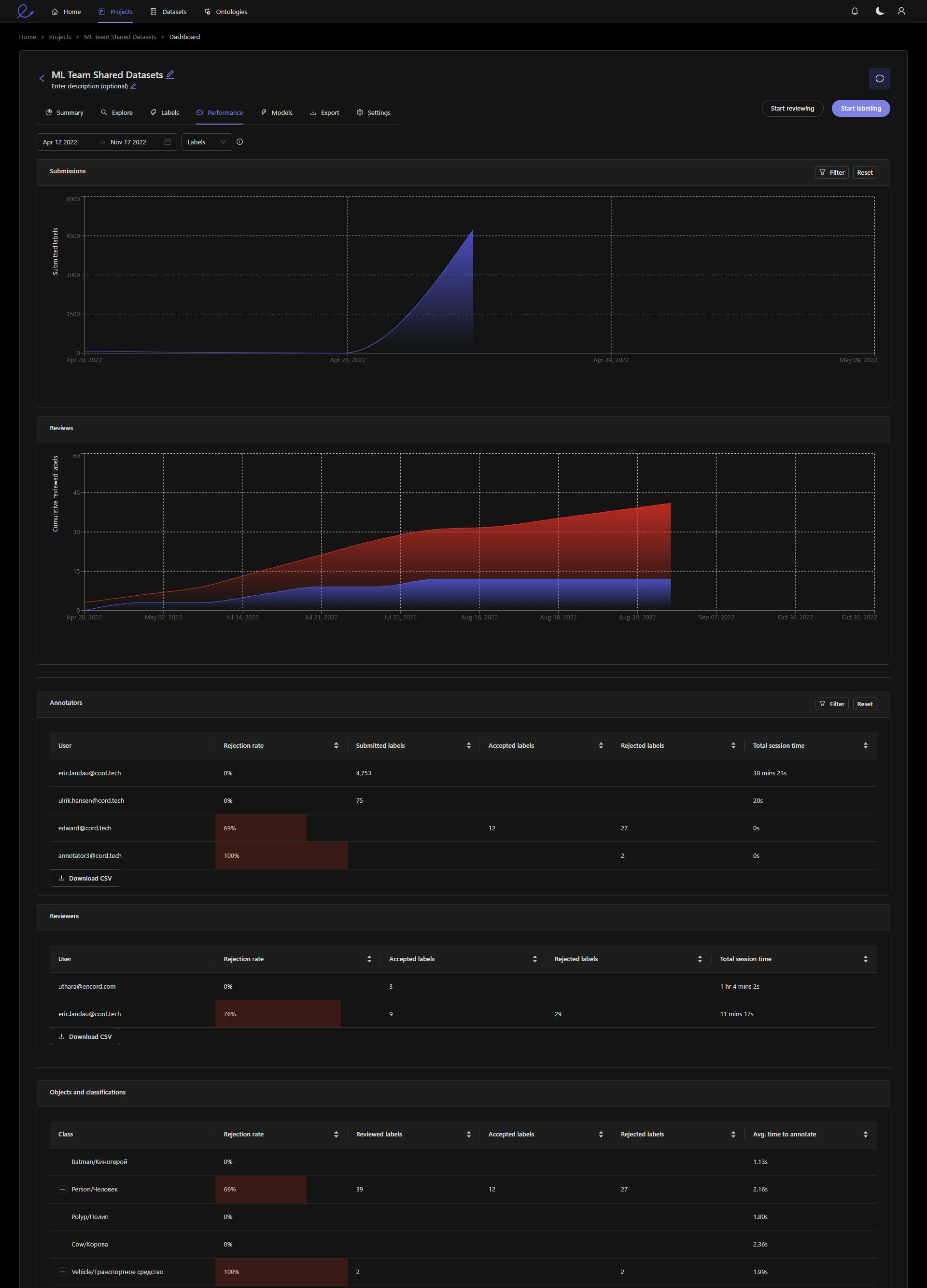

Other platforms only allowed the reviewer to approve or reject a label, but Encord’s review feature lets a reviewer provide feedback so that the annotator can read the note and change the label accordingly. That improves the process over time because the annotators learn. Early in a project, I might reject 25 percent of labels, but because I give the annotators reasons for the rejections, they improve, and for the rest of the project the label and review process goes much faster.

Encord also allows a reviewer to modify a label directly, something the other platforms didn’t allow either. The label and review process is time-intensive, and for smaller mistakes, I don’t want to reject an entire series and send it back through that whole process if I can fix the mistake quickly and send the data on to the machine learning team.

On other platforms, I actually had to use a workaround: I logged into the labelers’ accounts to fix their mistakes, because I had no way to change an annotation or show them how they could improve.

Annotator management dashboard in Encord

How do you collaborate with the machine learning team while using Encord’s platform?

The machine learning team extracts the images, annotations, and associated features. Another plus of using Encord is that annotators can write information inside the labels, such as the size of an aneurysm or the type of lesion, so the ML team can extract these features along with the ontologies and any notes the annotators made during the label and review process.

Once the images and features have been extracted, I collaborate with the machine learning team, analyzing the images and labels as well as the predictions the model makes during training. We are in continuous collaboration as they go through the machine learning process. They show me false positive and false negative results, and we look at the data and the annotations and try to figure out why these mistakes happened so that we can improve the model’s performance.

As a radiologist, what excites you about Encord’s technology?

Radiologists are using medical imaging devices much more often than they used to. Worldwide, the number of scans performed is increasing quickly. Even in my small hospital, we have 400 MRI or CT scans daily, and we only have three radiologists to review them all.

With these increases in volume, it’s not possible for radiologists to evaluate all the scans without help. For example, we currently have to outsource some reviews to external radiologists to ensure we don’t end up with a backlog.

Having AI that can help identify small lesions or pathologies is very useful for both patients and doctors. They both prefer it when an AI-powered tool provides a second opinion. I feel safer having this second pair of eyes check for lesions or a second brain perform calculations.

However, developing reliable and accurate AI tools requires a lot of well-labeled data, so annotating precisely, down to the pixel, is incredibly important. Having tools like Encord that make the label and review process go faster is important, but it’s even more important that I can trust Encord’s platform. When I label data precisely and with notes, I need to know that those details will reach the machine learning team exactly as I made them. That’s the only way they can develop technology capable of consistently making true positive and true negative predictions.
