Encord Blog

Closing the AI Production Gap With Encord Active

Written by

Eric Landau

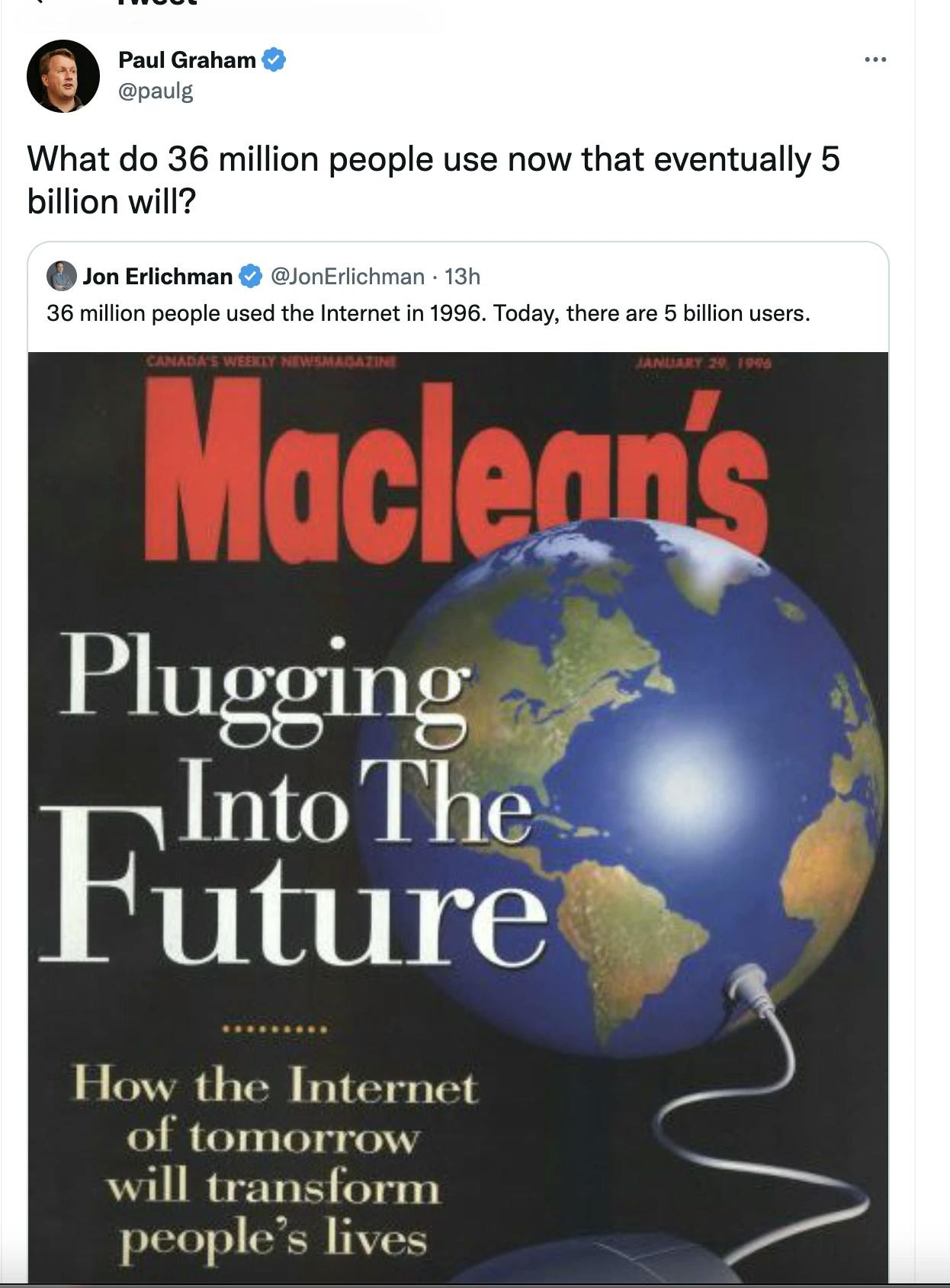

Recently there was a tweet by Paul Graham showing an old cover from Maclean’s magazine highlighting the “future of the internet”.

Graham poses the accompanying question: “What do 36 million people use now that eventually 5 billion people will?” At Encord we have long believed the answer to be artificial intelligence: AI appears to be at an inflection point similar to the one the internet reached in the 90s, poised for widespread adoption.

While the view that AI will be the next ubiquitous technology is not uncommon, it has never felt as plausible as it has over the last year, with the imagination-grabbing advancements in generative AI. These advancements have seen the rise of “foundational models”, high-capacity unsupervised AI systems that train over enormous swaths of data and consume millions of dollars of GPU power doing it.

TL;DR

Problem: There is an “AI production gap” between proof-of-concept and production models due to issues with AI model robustness, reliability, and explainability, caused by a lack of high-quality labels, model edge cases, and cumbersome iteration cycles for model re-training.

Solution: We are releasing a free open-source active learning toolkit, designed to help people building computer vision models improve their data, labels, and model performance.

Introduction

The success of foundational models is creating a duality in the AI world. On one side are foundational models, built by well-funded institutions with the GPU muscle to train over an internet’s worth of data; on the other are application-layer AI models, normally built with the traditional supervised learning paradigm, which requires labeled training data.

While the feats of these foundational models have been impressive, it is clear we are still in the very early days of the proliferation and value of application-layer AI, with numerous bottlenecks holding back wider adoption.

We started Encord a few years ago, originally to tackle one of the major blockers to this adoption: the data labeling problem. Over the years, working with many exciting AI companies, we have broadened our views on the blockers for later-stage AI development and for deploying models to production environments. In this post, we will discuss what we have learned from thousands of conversations with ML engineers, data operations specialists, business stakeholders, researchers, and software engineers, and how those lessons have culminated in the release of our newest open-source toolkit, Encord Active.

The Problem: Elon’s promise

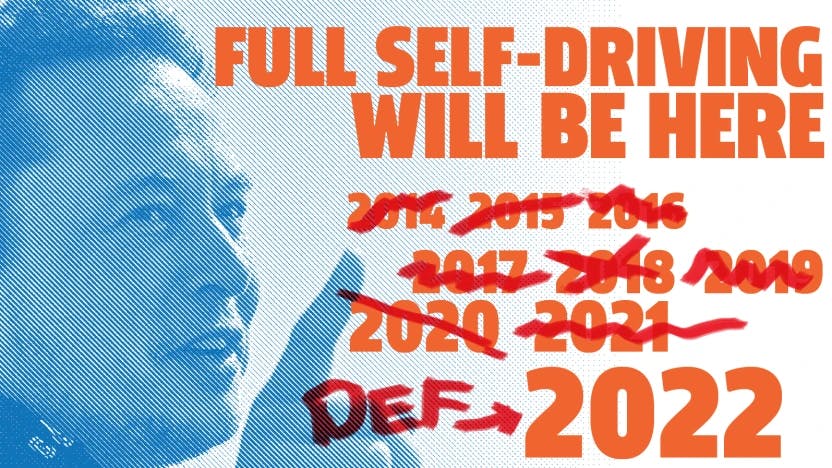

There is a famous YouTube sequence of Elon Musk promising, every year since 2014, that Tesla will deliver self-driving cars “next year”.

We are now at the start of 2023, and that promise still seems “one year” away. This demonstrates how the broader dream of production AI models that have transformative effects on the real world (self-driving cars, robot chefs, etc.) has been slower to materialize than expected, given the early promise and floods of investment.

This is a multi-faceted and complex issue, compounded by societal structures outside the tech industry (regulators, governments, industry, etc.) being slow to appreciate the second-order effects of adopting this technology. The more pernicious problem, however, lies within the technology itself. Promising proof-of-concept models that perform well on benchmark datasets in research environments have often struggled when they come into contact with real-world applications. This is the infamous AI production gap. Where does this gap come from?

The AI Production Gap

One of the main issues is that the set of requirements asked of AI applications rises precipitously when in contact with a production environment: robustness, reliability, explainability, maintainability, and much more stringent performance thresholds. An AI barista making a coffee is impressive in a cherry-picked demo video, but frustrating when spilling your Frappuccino 5 times in a row.

As such, the gap between “that’s neat” and “that’s useful” is much larger and more formidable than ML engineers had anticipated. The production gap can be attributed to a few high-level sub-components. Among others:

Slow shift to data-centricity:

Working with many AI practitioners, we have noticed a significant disconnect between academia and industry. Academics often focus on model improvements, working with fixed benchmark datasets and labels. They optimize the parts of the system that they have the most control over. Unfortunately, in practical use cases, these interventions have less leverage over the success of the AI application than taking a data-centric view.

Insufficient attention has been paid to data-centric problems such as data selection, data quality improvement, and label error reduction. While these elements matter little from a model-centric view with fixed training and validation datasets, they are crucial for the success of production models.

Lack of decomposability:

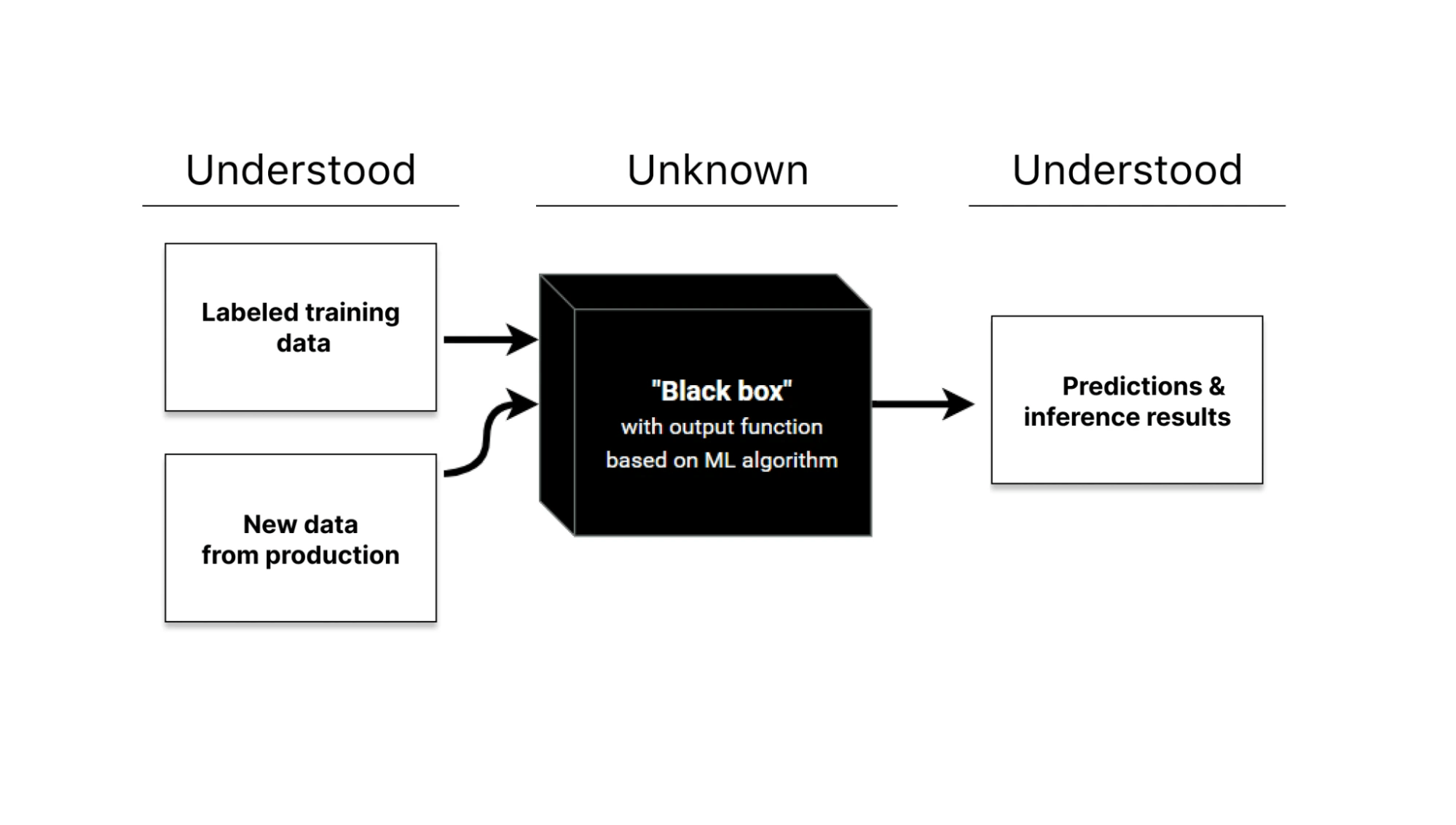

A disadvantage of deep learning methods compared to traditional software is that they cannot be taken apart into pieces for examination. Normal (but highly complex) software systems have composable parts that can be examined and tested independently. Stress testing individual components of a system is a powerful strategy for fortifying the whole. Benefits include the interpretability of system behavior and the ability to quickly isolate and debug errant pieces. Deep neural networks, for all their benefits, are billion-parameter meshes of opacity; you take them as they are and have little luck in inspecting and isolating pieces component-wise.

Insufficient evaluation criteria:

Exacerbating the lack of decomposability are the insufficient criteria we have to evaluate AI systems. Normal approaches just take global averages of a handful of metrics. Complex high-dimensional systems need sophisticated evaluation systems to meet the complexity of their intended domain. The tools to probe and measure performance are still nascent for models and almost completely non-existent for data and label quality, leaving a lack of visibility into the true quality of an AI system.

The above problems (and the lack of human-friendly tools to deal with them) have all contributed in their own way (again among others) to the AI production gap.

At Encord, we have been lucky to see how ML engineers across a multifaceted set of use cases have tackled these issues. The interesting observation was that they used very similar strategies even in very varied use cases. We have been helping these companies now for years, and based on that experience we have released Encord Active, a tool that is data-centric, decomposable, human-interaction focused, and improves evaluation.

How It Should Be Done

Before going into Encord Active, let’s go over how we’ve seen it done by the best AI companies. The gold standard of active learning is a stack built as a fully iterative pipeline, where every component is run with respect to optimizing the performance of the downstream model: data selection, annotation, review, training, and validation are done with an integrated logic rather than as disconnected units.

Counterintuitively, the best systems also have the most human interaction. They fully embrace the human-in-the-loop nature of iterative model improvement by opening up entry points for human supervision within each sub-process while also maintaining optionality for completely automated flows when things are working. The best stacks are thus iterative, granular, inspectable, automatable, and coherent.

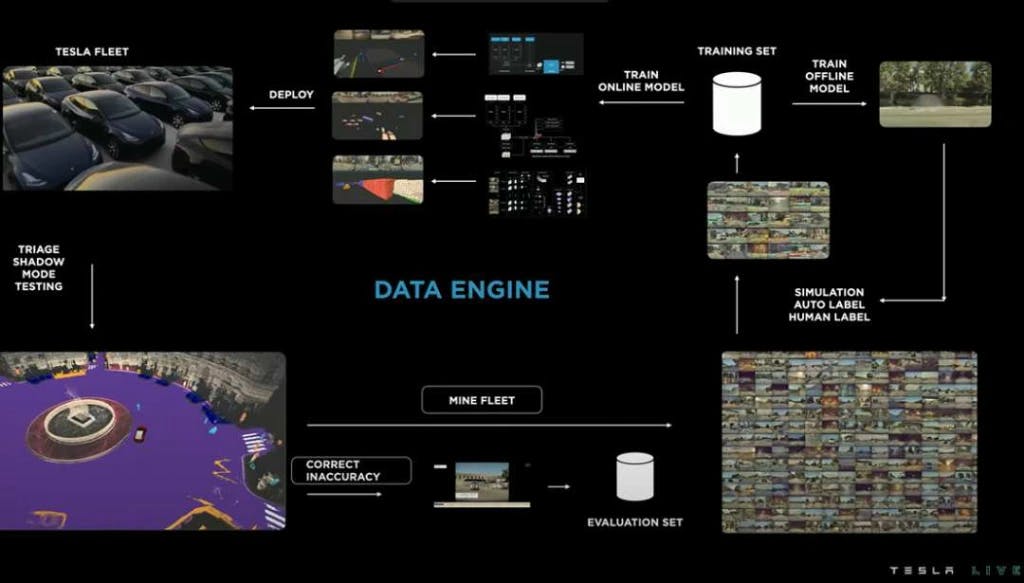

Last year, Andrej Karpathy presented Tesla’s Data Engine as their solution to bridge the gap, but where does that leave other start-ups and AI companies without the resources to build expensive in-house tooling?

Source: Tesla 2022 AI Day

Introducing Encord Active

Encountering the above problems and seeing the systems of more sophisticated players led us through a long winding path of creating various tools for our customers. We have decided to release them open source as Encord Active.

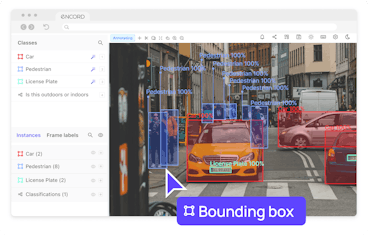

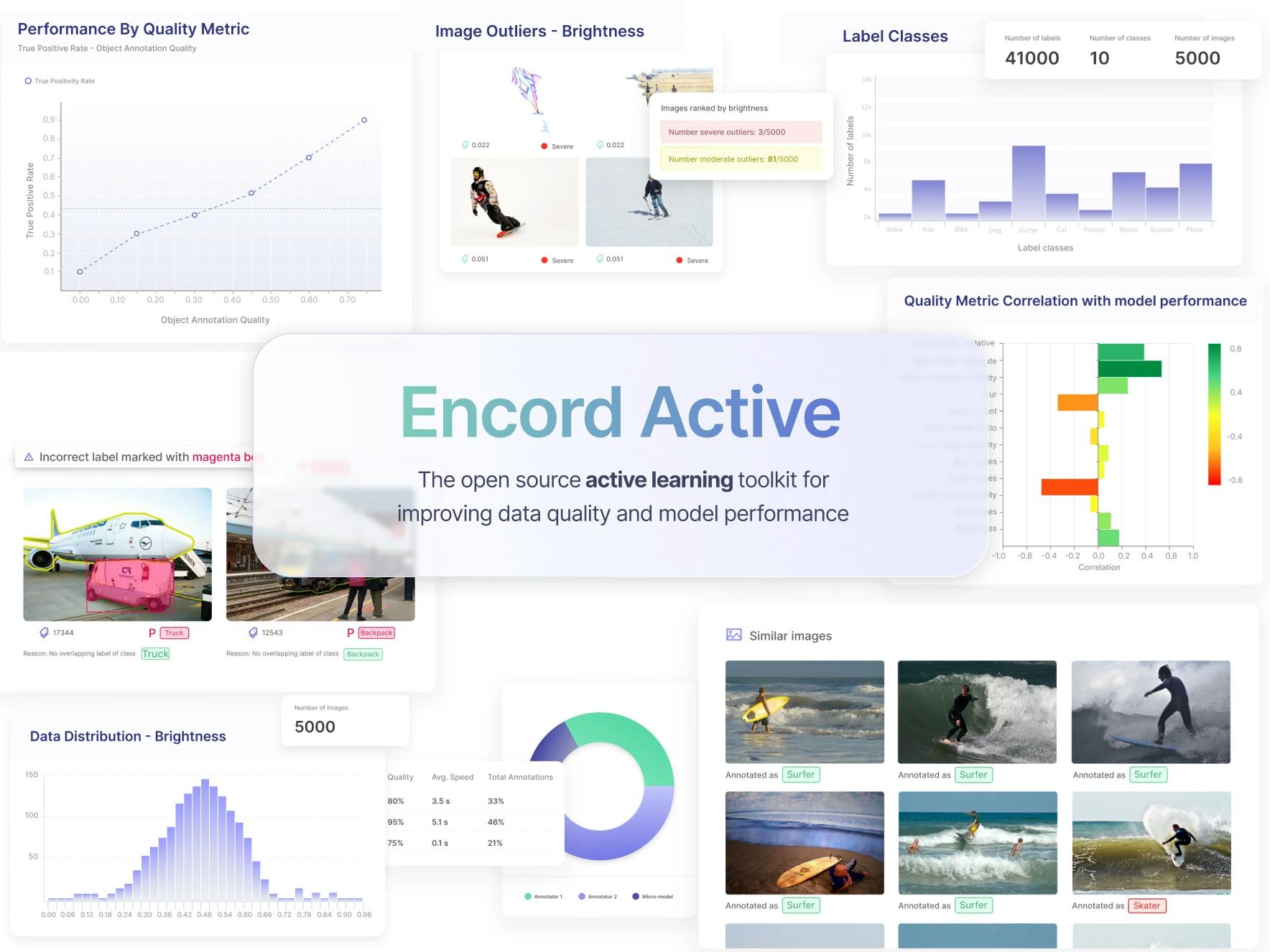

Loosely speaking, Encord Active is an active learning toolkit with visualizations, workflows, and, importantly, a library of what we call “quality metrics”. While not the only value-add of the toolkit, the quality metric library is one of our key contributions, so we will focus on it for the remainder of the post.

Quality Metrics

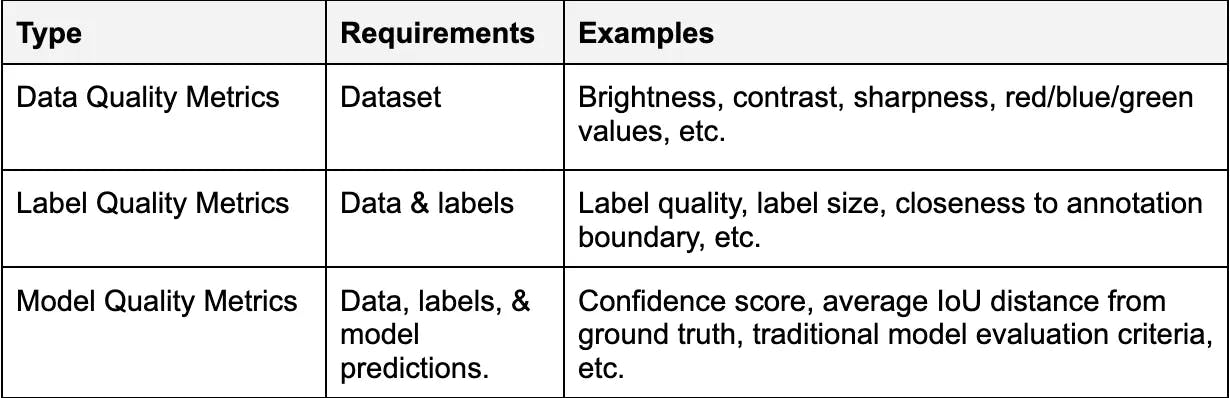

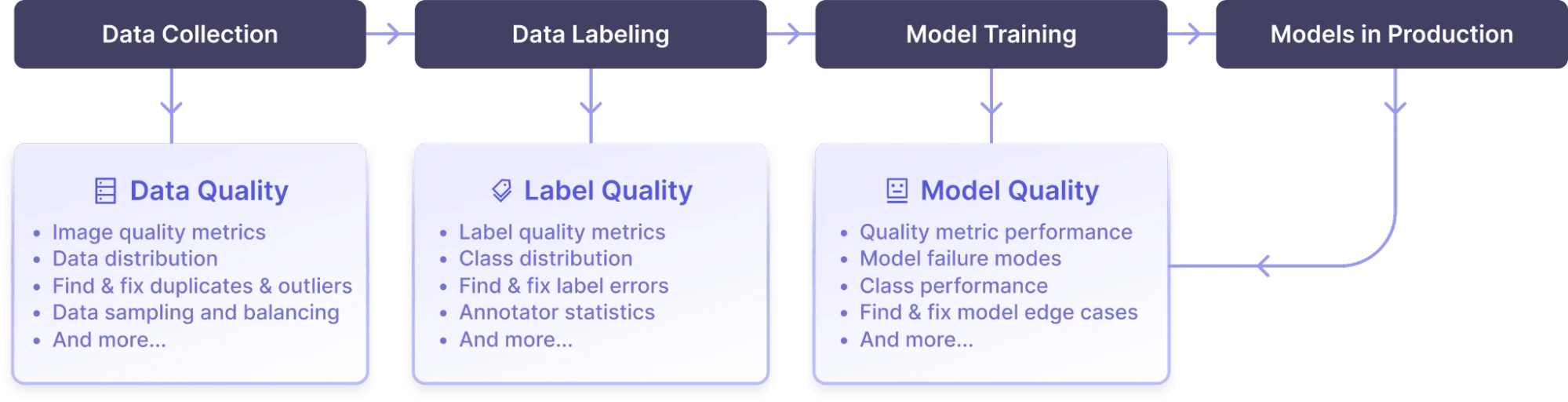

Quality metrics are additional parametrizations added onto your data, labels, and models; they are ways of indexing your data, labels, and models in semantically interesting and relevant ways. They come in three flavors:

It was also very important that Encord Active gives practical and actionable workflows for ML engineers, data scientists, and data operations people. We did not want to build only an insight-generating mechanism; we wanted to build a tool that could act as the command center for closing the full loop on concrete problems practitioners were encountering in their day-to-day model development cycle.

The way it works

Encord Active (EA) is designed to compute, store, inspect, manipulate, and utilize quality metrics for a wide array of functionality. It hosts a library of these quality metrics, and importantly allows you to customize by writing your own “quality metric functions” to calculate/compute QMs across your dataset.

Upload data, labels, and/or model predictions and it will automatically compute quality metrics across the library. These metrics are then returned in visualizations with the additional ability to incorporate them into programmatic workflows. We have adopted a dual approach such that you can interact with the metrics via a UI with visualizations, but also set them up in scripts for automated processes in your AI stack.
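To make the idea of a custom “quality metric function” concrete, here is a minimal Python sketch. It is not Encord Active’s API: the function names (`brightness`, `contrast`, `compute_metrics`), the `METRICS` registry, and the representation of an image as a nested list of grayscale values are all assumptions made for illustration. It only shows the general shape: a function maps one data item to a number, and a library of such functions is applied across a dataset.

```python
from statistics import mean

# Hypothetical quality metric functions: each maps one image to a single score.
# Images are assumed to be nested lists of grayscale pixel values in 0..255.

def brightness(image):
    """Mean pixel intensity, scaled to the range [0, 1]."""
    return mean(pixel for row in image for pixel in row) / 255


def contrast(image):
    """Spread between the darkest and brightest pixel, scaled to [0, 1]."""
    pixels = [pixel for row in image for pixel in row]
    return (max(pixels) - min(pixels)) / 255


# A tiny illustrative "library" of metrics; adding a custom one is one more entry.
METRICS = {"brightness": brightness, "contrast": contrast}


def compute_metrics(dataset):
    """Run every registered metric over every item and index scores by item name."""
    return {
        name: {metric: fn(image) for metric, fn in METRICS.items()}
        for name, image in dataset.items()
    }


dataset = {
    "dark": [[0, 10], [20, 30]],
    "bright": [[200, 255], [255, 250]],
}
scores = compute_metrics(dataset)
```

Once every item carries scores like these, the dataset can be sorted, filtered, or sliced by any metric, which is what the workflows in the rest of this post build on.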

With this approach, let’s return to the problems we listed earlier:

Slow shift to data-centricity:

EA is designed to help improve model performance along several different dimensions. The data-centric approaches it facilitates include, among others:

- Selecting the right data to use for data labeling, model training, and validation

- Reducing label error rates and label inconsistencies

- Evaluating model performance with respect to different subsets within your data

EA is designed to be useful across the entire model development cycle. The quality metric approach covers everything from prioritizing data during data collection, to debugging your labels, to evaluating your models.

The best demonstrations are with examples in the next section.

Decomposability:

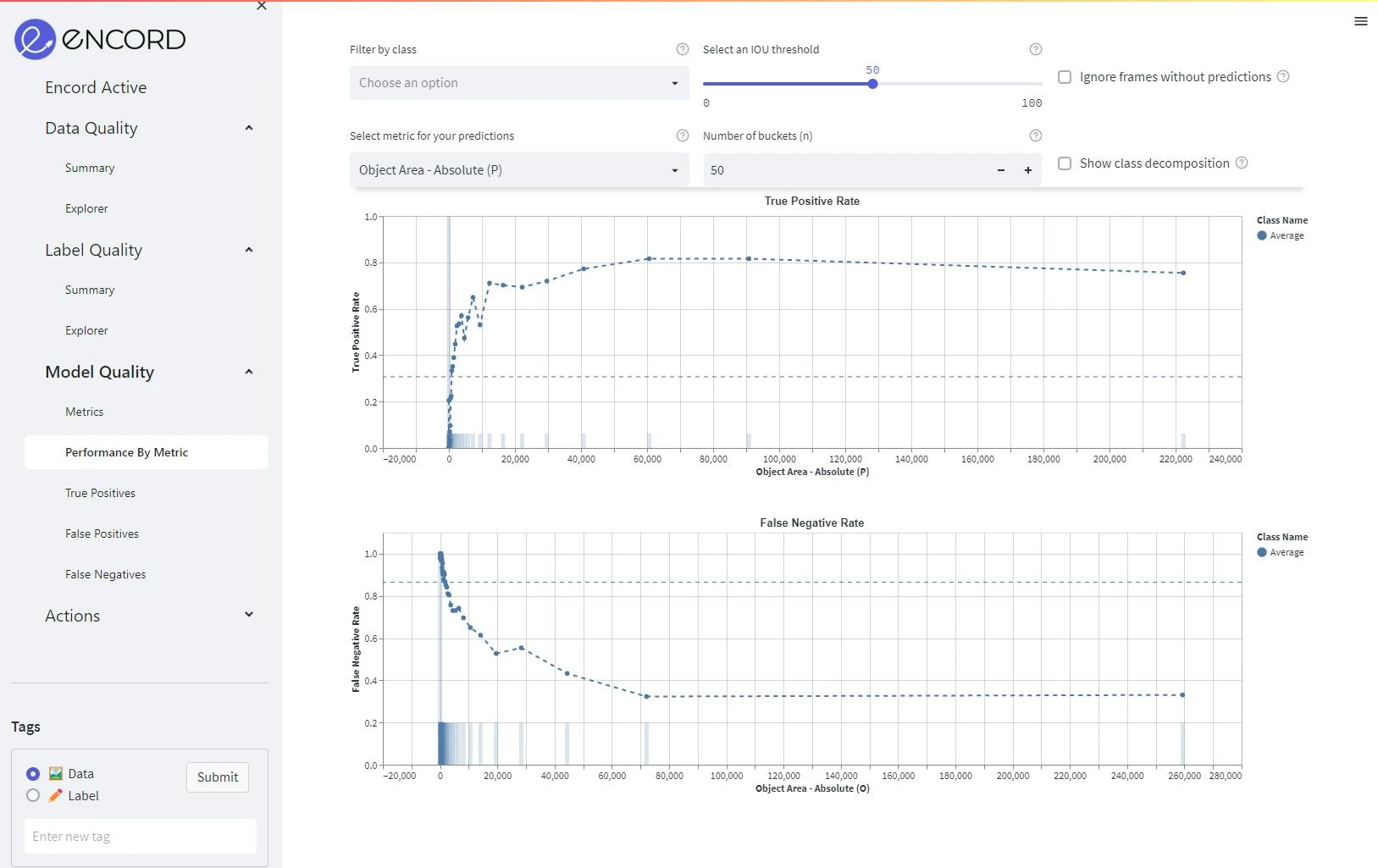

Until we have better tools to inspect the inner workings of neural networks, EA treats the decomposability problem by shifting decomposability both up and down the AI stack. Rather than factoring a model itself, quality metrics allow you to very granularly decompose your data, labels, and model performance. This kind of factorization is critical for identifying potential problems and then properly debugging them.

Insufficient evaluation criteria:

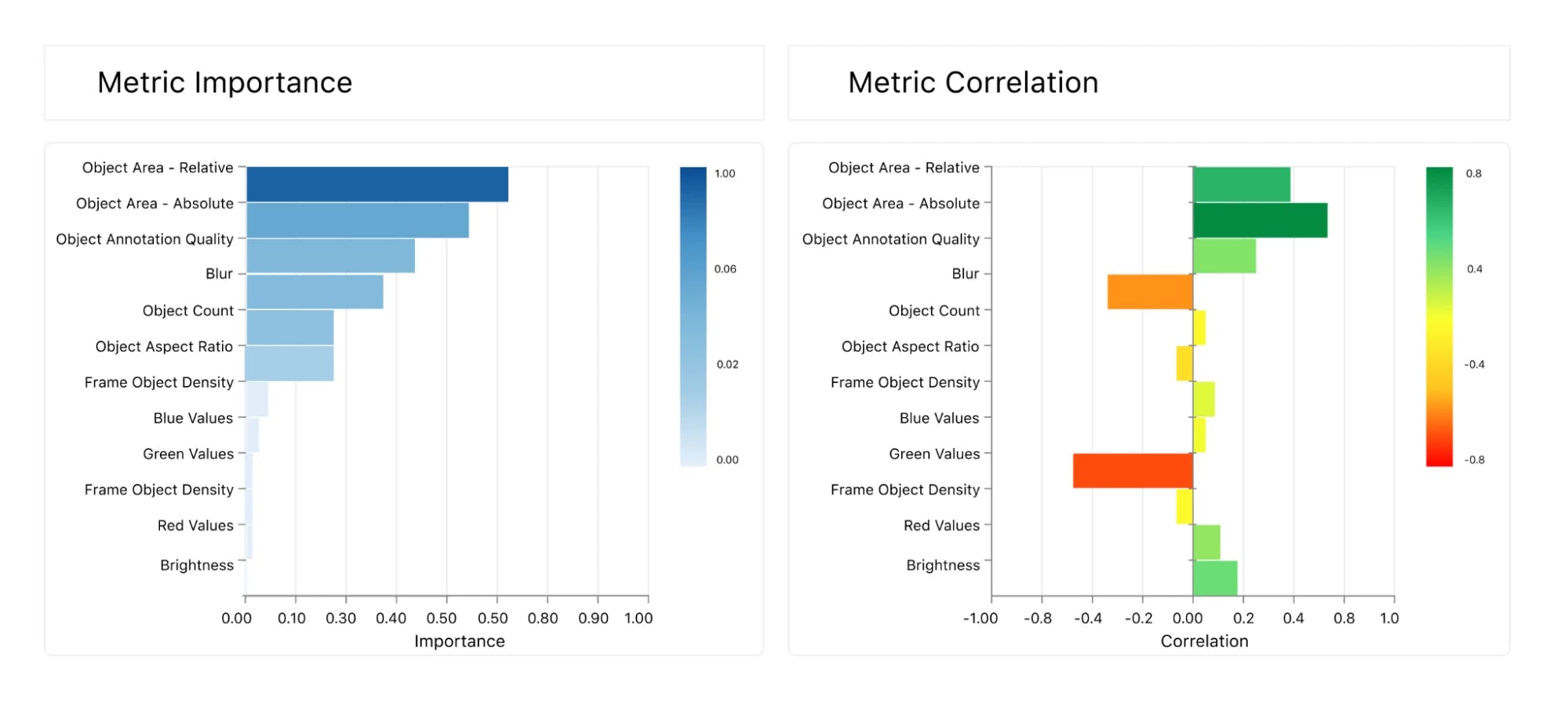

As a corollary to the above, EA allows for arbitrarily many and arbitrarily complex quality metrics to evaluate the performance of your model. Importantly, it breaks down the model performance as a function of the quality metrics automatically, guiding users to the metrics that are likely to be most impactful for model improvement.

Until we have both AGI AND the AI alignment problem solved, it remains critically important to keep humans in the loop for monitoring, improvement, development, and maintenance of AI systems. EA is designed with this in mind. The UI allows for quick visual inspection and tagging of data, while the programmatic interface allows for systematization and automation of workflows discovered by ML engineers and data operations people.

Choose Your Own Adventure: Example Use Cases

Data selection:

With EA you can run your previous model over a new dataset and set the inverse confidence score of the model as a quality metric. Sample the data weighted by this quality metric for entry into an annotation queue. Furthermore, you can use the pre-computed quality metrics to identify subsets of outliers to exclude before training or subsets to oversample. You can see a tutorial on data selection using the TACO dataset here.
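As a rough illustration of the sampling step, the sketch below picks items for an annotation queue with probability weighted by inverse confidence. It is a standalone example, not Encord Active code: the function names, the `confidences` dictionary, and the choice of `1 - confidence` as the “inverse confidence score” are assumptions. The weighted draw without replacement uses the Efraimidis-Spirakis key trick (rank items by `u ** (1 / weight)` for a uniform random `u`).

```python
import random


def inverse_confidence(confidence):
    """Assumed quality metric: higher when the model is less sure."""
    return 1.0 - confidence


def sample_for_annotation(confidences, k, seed=0):
    """Pick up to k item ids without replacement, weighted by inverse confidence."""
    rng = random.Random(seed)  # seeded so the queue is reproducible
    keyed = []
    for item_id, confidence in confidences.items():
        weight = inverse_confidence(confidence)
        if weight <= 0:
            continue  # fully confident predictions are never queued
        # Efraimidis-Spirakis: a larger weight pushes the key towards 1.
        keyed.append((rng.random() ** (1.0 / weight), item_id))
    keyed.sort(reverse=True)
    return [item_id for _, item_id in keyed[:k]]


# Hypothetical per-image confidences from a previous model run.
confidences = {
    "img_a": 0.99,
    "img_b": 0.55,
    "img_c": 0.20,
    "img_d": 1.00,
    "img_e": 0.70,
}
queue = sample_for_annotation(confidences, k=3, seed=42)
```

Sampling by weight, rather than simply taking the lowest-confidence items, keeps some diversity in the queue, so that a single confusing cluster of images does not crowd out everything else.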

Label error improvement:

You can use the “annotation quality” metric, which calculates the deviation of the class of a label from its nearest neighbors in an embedding space to identify which labels potentially contain errors. This additionally breaks down label error with respect to who annotated it, to help find annotators that need more training. If you upload your model predictions you can find high-confidence false positive predictions to identify label errors or missing labels.
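The nearest-neighbor idea can be sketched in a few lines of Python. This is one plausible reading of “deviation of the class of a label from its nearest neighbors”, not the actual implementation of the “annotation quality” metric: the function name `label_agreement`, the choice of Euclidean distance, and the toy 2-D embeddings are assumptions. Each label is scored by the fraction of its k nearest neighbors in embedding space that share its class, so low scores flag likely label errors.

```python
import math


def label_agreement(embeddings, labels, k=3):
    """For each item, the fraction of its k nearest neighbors with the same label."""
    scores = []
    for i, (point, label) in enumerate(zip(embeddings, labels)):
        # Indices of all other items, closest first (Euclidean distance).
        neighbors = sorted(
            (j for j in range(len(embeddings)) if j != i),
            key=lambda j: math.dist(point, embeddings[j]),
        )[:k]
        same = sum(1 for j in neighbors if labels[j] == label)
        scores.append(same / len(neighbors) if neighbors else 1.0)
    return scores


# Toy data: two tight clusters, with one "dog" label sitting in the "cat" cluster.
embeddings = [
    (0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
    (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (5.1, 5.1),
]
labels = ["cat", "cat", "cat", "dog", "dog", "dog", "dog", "dog"]

scores = label_agreement(embeddings, labels, k=3)
suspect = min(range(len(scores)), key=scores.__getitem__)  # lowest agreement
```

Grouping the same scores by annotator instead of by item would give the per-annotator breakdown mentioned above.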

Model performance analysis:

EA automatically breaks down your global model performance metrics and correlates them to each quality metric. This surfaces which quality metrics are important drivers of your model performance, and which potential subsets of the data your model is likely to perform worst in going forward.
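A simplified way to picture this breakdown: bucket the data by a quality metric and compute the performance metric per bucket instead of one global average. The sketch below is illustrative only; the function name `performance_by_metric`, the equal-width bucketing, and the toy brightness and correctness values are assumptions rather than how EA computes it.

```python
from collections import defaultdict


def performance_by_metric(metric_values, is_correct, n_buckets=3):
    """Accuracy per equal-width bucket of a quality metric (bucket 0 = lowest values)."""
    lo, hi = min(metric_values), max(metric_values)
    width = (hi - lo) / n_buckets or 1.0  # avoid a zero width when all values match
    hits = defaultdict(list)
    for value, ok in zip(metric_values, is_correct):
        # Clamp so the maximum value falls into the last bucket.
        bucket = min(int((value - lo) / width), n_buckets - 1)
        hits[bucket].append(ok)
    return {bucket: sum(v) / len(v) for bucket, v in sorted(hits.items())}


# Toy example: image brightness per sample, and whether the prediction was correct.
brightness = [0.10, 0.15, 0.20, 0.50, 0.55, 0.60, 0.90, 0.95, 1.00]
correct = [0, 0, 1, 1, 1, 0, 1, 1, 1]

global_accuracy = sum(correct) / len(correct)
by_bucket = performance_by_metric(brightness, correct, n_buckets=3)
```

In this toy data the global accuracy looks moderate, while the per-bucket view shows the errors concentrated in the darkest images, which is the kind of subset a single global average hides.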

Why Open Source

There was an observation we made working with late-stage AI companies that prompted us to release Encord Active open source. Many of the metrics companies use are often common, even for completely different vertical applications.

One of the strategies of a startup is to reduce the amount of redundant work that is being done in the world. Before Git’s common adoption, every software company was developing its own internal version control software. This amounted to tens of thousands of hours of wasted developer time that could be allocated to more productive activity. We believe the same is being done now with quality metrics for ML engineers.

Open sourcing Encord Active will remove the pain of people using notebooks to create redundant code and one-off scripts that many others are also developing and free up time for ML engineers to focus on improving their data and models in more interesting ways.

Encord Active is a new open-source tool, so please be patient with us. We have some of the leading AI companies in the world using Encord Active, but it is still very much a work in progress. We want to make it the best tool it can be, and we want it out in the world so that it can help as many AI companies as possible move forward.

If it works, we can in a small way contribute to one of the hardest things in the world: making an Elon Musk promise come true. Because AI delayed is not AI denied.

Want to test your own data and models?

“I want to start annotating” - Get a free trial of Encord here.

“I want to get started right away” - You can find Encord Active on GitHub here or try the quickstart Python command from our documentation.

“Can you show me an example first?” - Check out this Colab Notebook.

If you want to support the project you can help us out by giving a Star on GitHub ⭐

Want to stay updated?

- Follow us on Twitter and LinkedIn for more content on computer vision, training data, and active learning.

- Join the Slack community to chat and connect.

Power your AI models with the right data

Automate your data curation, annotation and label validation workflows.

Get startedWritten by

Eric Landau

Related blogs

Meet Shivant - Technical CSM at Encord

For today’s version of “Behind the Enc-urtain”, we sat down with Shivant, Technical CSM at Encord, to learn more about his journey and day-to-day role. Shivant joined the GTM team when it was a little more than a 10 person task force, and has played a pivotal role in our hypergrowth over the last year. In this post, we’ll learn more about the camaraderie he shares with the team, what culture at Encord is like, and the thrill of working on some pretty fascinating projects with some of today’s AI leaders. To start us off - could you introduce yourself to the readers, & share more about your journey to Encord? Of course! I’m originally from South Africa – I studied Business Science and Information Systems, and started my career at one of the leading advisory firms in Cape Town. As a Data Scientist, I worked on everything from technology risk assessments to developing models for lenders around the world. I had a great time - and learned a ton! In 2022 I was presented the opportunity to join a newly-launched program in Analytics at London Business School, one of the best Graduate schools in the world. I decided to pack up my life (quite literally!) and move to London. That year was an insane adventure – and I didn’t know at the time but it prepared me extremely well for what my role post-LBS would be like. It was an extremely diverse and international environment, courses were ever-changing and a good level of challenging, and, as the cliche goes, I met some of my now-best friends! I went to a networking event in the spring, where I met probably two dozen startups that were hiring – I think I walked around basically every booth, and actually missed the Encord one. [NB: it was in a remote corner!] As I was leaving I saw Lavanya [People Associate at Encord] and Nikolaj [Product Manager at Encord] packing up the booth. We started chatting and fast forward to today… here we are! What was something you found surprising about Encord when you joined? 
How closely everyone works together. I still remember my first day – my desk-neighbors were Justin [Head of Product Engineering], Eric [Co-founder & CEO] and Rad [Head of Engineering]. Coming from a 5,000 employee organization, I already found that insane! Then throughout the day, AEs or BDRs would pass by and chat about a conversation they had just had with a prospect – and engineers sitting nearby would chip in with relevant features they were working on, or ask questions about how prospects were using our product. It all felt quite surreal. I now realize we operate with extremely fast and tight feedback loops and everyone generally gets exposure to every other area of the company – it’s one of the reasons we’ve been able to grow and move as fast as we have. What’s your favorite part of being a Technical CSM at Encord? The incredibly inspiring projects I get to help our customers work on. When most people think about AI today they mostly think about ChatGPT but, beyond LLMs, companies are working on truly incredible products that are improving so many areas of society. To give an example – on any given day, my morning might start with helping the CTO of a generative AI scale-up improve their text-to-video model, be followed by a call with an AI team at a drone startup who is trying to more accurately detect carbon emissions in a forest, and end with meeting a data engineering team at a large healthcare org who’s working on deploying a more automated abnormality-detector for MRI scans. I can’t really think of any other role where I’d be exposed to so much of “the future”. It’s extremely fun. What words would you use to describe the Encord culture? Open and collaborative. We’re one team, and the default for everyone is always to focus on getting to the best outcome for Encord and our customers. 
Also, agile: the AI space we’re in is moving fast, and we’re able to stay ahead of it all and incorporate cutting-edge technologies into our platform to help our customers – sometimes a few days from it being released by Meta or OpenAI. And then definitely diverse: we’re 60 employees, from 34 different nationalities, which is incredibly cool. I appreciate being surrounded by people from different backgrounds, it helps me see things in ways I wouldn’t otherwise, and has definitely challenged a lot of what I thought was the norm. What are you most excited re. Encord or the CS team this year? There’s a lot to be excited about – this will be a huge year for us. We recently opened our San Francisco office to be closer to many of our customers, so I’m extra excited about having a true Encord base in the Bay area and getting to see everyone more regularly in person. We’re also going to grow the CS team past Fred & I for the first time! We’re looking for both Technical CSMs and Senior CSMs to join the team, both in London and in SF, as well as Customer Support Engineers and ML Solutions Engineers. On the topic of hiring… who do you think Encord would be the right fit for? Who would enjoy Encord the most? In my experience, people who enjoy Encord the most have a strong sense of self-initiative and ambition – they want to achieve big, important outcomes but also realize most of the work to get there is extremely unglamorous and requires no task being “beneath” them. They tend to always approach a problem with the intent of finding a way to get to the solution, and generally get energy from learning and being surrounded by other talented, extremely smart people. Relentlessness is definitely a trait that we all share at Encord. A lot of our team is made up of previous founders, I think that says a lot about our culture. See you at the next episode of “Behind the Enc-urtain”! And as always, you can find our careers page here😉

Jul 19 2024

5 M

Top 10 Data Annotation and Data Labeling Companies [2024]

With increasing reliance on computer vision (CV) systems in multiple industrial domains, the demand for robust data annotation solutions is rising exponentially. The most recent reports project the data annotation tools market to have a compound annual growth rate (CAGR) of 21.8% from 2024 to 2032. However, as several companies emerge offering annotation platforms and services, finding a cost-effective provider is challenging. While many platforms offer advanced annotation features, only a few meet the scalability and security requirements essential for enterprise-level CV applications. This article discusses the ten best video and image annotation companies in 2024 to help you with your search. The following lists the companies we think are driving the data annotation space: Encord iMerit Appen Label Your Data KeyMakr TrainingData SuperbAI Kili Technology Telus International SuperAnnotate CogitoTech LabelBox Top 12 Data Annotation and Data Labeling Companies Data annotation companies offering labeling solutions must meet stringent security and scalability requirements to match the high standards of the modern artificial intelligence (AI) space. Below are the twelve top companies, ranked based on the following factors: Data security protocols: Compliance with data security regulations and use of encryption algorithms. Scalability: The solution’s ability to handle large data volumes and variety. Collaboration: Tools allowing different team members to collaborate on projects. Ease of use: A user-friendly interface that is intuitive and easy to navigate. Supported data types: support for different modalities such as video, image, audio, and text. Automation: AI-based labeling for speeding up annotation processes. Other functionalities for streamlining the annotation workflow include integration with cloud services and advanced annotation methods for complex scenarios. 
Let’s explore each company's annotation platforms or services and see the key features based on the above factors to help you determine the most suitable option. Encord Encord is an end-to-end data platform that enables you to annotate, curate, and manage computer vision datasets through AI-assisted annotation features. It also provides intuitive dashboards to view insights on key metrics, such as label quality and annotator performance, to optimize workforce efficiency and ensure you build production-ready models faster. SOTA Model-assisted Labeling and Customizable Workflows with Encord Annotate Key Features Data security: Encord complies with the General Data Protection Regulation (GDPR), System and Organization Controls 2 (SOC 2), and Health Insurance Portability and Accountability Act (HIPAA) standards. It uses advanced encryption protocols to ensure data security and privacy. Scalability: The platform allows you to upload up to 500,000 images (recommended), 100 GB in size, and 5 million labels per project. You can also upload up to 200,000 frames per video (2 hours at 30 frames per second) for each project. See more guidelines for scalability in the documentation. Collaboration: You can create workflows and assign roles to relevant team members to manage tasks at different stages. User roles include admin, team member, reviewer, and annotator. Ease-of-use: Encord Annotate offers an intuitive user interface (UI) and an SDK to label and manage annotation projects. Supported data types: The platform lets you annotate images, videos (and image sequences), DICOM, and Mammography data. Supported annotation methods: Encord supports multiple annotation methods, including classification, bounding box, keypoint, polylines, and polygons. Automated labeling: The platform speeds up the annotation with automation features, including: - Segment Anything Model (SAM) to automatically create labels around distinct features in all supported file formats. 
- Interpolation to auto-create instance labels by estimating where labels should be created in videos and image sequences. - Object tracking to follow entities within images based on pixel information enclosed within the label boundary. Integration: Integrate popular cloud storage platforms, such as AWS, Google Cloud, Azure, and Open Telekom Cloud OSS, to import datasets. Best for Teams looking for an enterprise-grade image and video annotation solution to produce high-quality data for computer vision models. Pricing Encord has a pay-per-user pricing model with Starter, Team, and Enterprise options. Learn more about automated data annotation by reading our guide to automated data annotation. iMerit iMerit offers Ango Hub, a data annotation solution built on a generative AI framework that lets you build use-case-specific applications for autonomous vehicles, agriculture, and healthcare industries. iMerit Key Features Collaboration: The Ango Hub solution lets you add labelers and reviewers to customized workflows for managing annotation projects. Ease-of-use: The platform offers an intuitive UI to label items, requiring no coding expertise. Supported data types: Ango Hub supports audio, image, video, DICOM, text, and markdown data types. Supported labeling methods: The solution supports bounding boxes, polygons, polylines, segmentation, and tools for natural language processing (NLP). Integration: The platform features integrated plugins for automated labeling and machine learning models for AI-assisted annotations. Best for Teams searching for an integrated labeling platform for annotating text, video, and image data. Pricing Pricing information is not publicly available. Contact the team to get a quote. Appen Appen offers data annotation solutions for building large language models (LLMs) by providing a standalone labeling platform and data labeling services through expert linguists. 
Appen Key Features Workforce capacity: Appen’s managed services include more than a million specialists speaking over 200 languages across 170 countries. With the option to combine its platform with its services, the solution becomes highly scalable. Supported data types: Appen’s platform lets you label documents, images, videos, audio, text, and point-cloud data. Supported annotation methods: Labeling methods include bounding boxes, cuboids, lines, points, polygons, ellipses, segmentation, and classification. Instruction datasets: The company also offers domain-specific instruction datasets for training LLMs. Best for Teams looking for a hybrid solution for building multi-modal models for text and vision applications. Pricing Pricing is not publicly available. Label Your Data Label Your Data is a data annotation service provider offering video and image annotation services for CV and NLP applications. Label Your Data Key Features Data security: The company complies with ISO 27001, GDPR, and CCPA standards. Workforce capacity: Label Your Data builds a remote team of over 500 data annotators to speed up the annotation process. Supported data types: The solution supports image, video, point-cloud, text, and audio data. Supported labeling methods: CV methods include semantic segmentation, bounding boxes, polygons, cuboids, and key points. NLP methods include named entity recognition (NER), sentiment analysis, audio transcription, and text annotation. Best for Teams looking for a secure annotation service provider for completely outsourcing their labeling efforts. Pricing Label Your Data provides on-demand, short- and long-term plans. Keymakr Keymakr is an image and video annotation service provider that manages labeling processes through its in-house professional experts. Keymakr Key Features Labeling capacity: You can label up to 100,000 data items. Supported data types: The platform supports image, video, and point-cloud data. 
Supported labeling methods: Keymakr offers annotations that include bounding boxes, cuboids, polygons, semantic segmentation, key points, bitmasks, and instance segmentation. Smart assignment: The solution features a smart distribution to match relevant annotators with suitable tasks based on skillset. Performance tracking: Keymakr provides performance analytics to track progress and alert managers in case of issues. Data collection and creation: The company also offers services to create relevant data for your projects or collect it from reliable sources. Best for Beginner-level teams working CV projects, requiring data creation and annotation services. Pricing Pricing is not publicly available. TrainingData TrainingData is a Software-as-a-Service (SaaS) data labeling application for CV projects, featuring pixel-level annotation tools for accurate labeling. TrainingData Key Features Data security: The company provides a Docker image to run on your local network through a secure virtual private network (VPN) connection. Scalability: You can label up to 100,000 images. Collaboration: TrainingData’s platform lets you create projects and add relevant collaborators with suitable roles, including reviewer, annotator, and admin. Supported labeling methods: The platform offers multiple labeling tools, including a brush and eraser for pixel-accurate segmentation, bounding boxes, polygons, key points, and a freehand drawer for freeform contours. Integration: TrainingData integrates with any cloud storage service that complies with cross-origin resource sharing (CORS) policy. Best for Teams looking for an on-premises image annotation platform for segmentation tasks. Pricing TrainingData offers free, pro, and enterprise packages. SuperbAI SuperbAI offers multiple products for building AI models, including a data management platform, a labeling solution, and a tool for training, evaluating, and deploying models. 
SuperbAI Key Features Data security: SuperbAI complies with SOC standards and encrypts all data using Advanced Encryption Standard - 256 (AES-256). Collaboration: The platform offers access management tools and lets you invite team members as admins, labelers, and managers. Supported data types: SuperbAI supports images and videos in PNG, BMP, JPG, and MP4 formats. It also supports point-cloud data. Supported labeling methods: The solution supports all standard labeling methods, including bounding boxes, polylines, polygons, and cuboids. Integration: The platform integrates with Google Cloud, Azure, AWS, and Slack. Best for Teams looking for an integrated data management solution for training machine learning algorithms. Pricing SuperbAI offers starter and enterprise packages. Kili Technology Kili Technology offers an intuitive labeling platform to annotate data for LLMs, generative AI, and CV models with quality assurance features to produce error-free datasets. Kili Technology Key features Collaboration: The platform lets you assign multiple roles to team members, including reviewer, admin, manager, and labeler, to collaborate on projects through instructions and feedback. Ease-of-use: Kili offers a user-friendly UI for managing workflows, requiring minimal code. Supported labeling methods: The tool supports bounding boxes, optical character recognition (OCR), NERs, pose estimation, and semantic segmentation. Automation: Kili supports automated labeling through active learning and pre-annotations using ChatGPT and SAM. Best for Data scientists looking for a lightweight annotation solution for building generative AI applications. Pricing Pricing depends on the number of items you need to label. Telus International Telus International’s Ground Truth (GT) studio offers three platforms as part of a managed service to build training datasets for ML models. GT Manage helps with people and project management; GT Annotate lets you annotate image and video data. 
GT Data is a data creation and collection tool supporting multiple data types.

Telus International Key Features

Data security: GT Annotate complies with SOC 2 standards and implements two-factor authentication with firewall applications and intrusion detection for data security.
Collaboration: GT Manage features workforce management tools for optimal task distribution and quality control.
Supported data types: You can collect image, video, audio, text, and geo-location data using GT Data.
Supported labeling methods: GT Annotate supports bounding boxes, cuboids, polylines, and landmarks.

Best for

Teams looking for a complete AI solution for collecting, labeling, and managing raw data.

Pricing

Pricing information is not publicly available.

SuperAnnotate

SuperAnnotate offers a data labeling tool that lets you manage AI data through collaboration tools and annotation workflows while providing quality assurance features to ensure labeling accuracy.

SuperAnnotate Key Features

Collaboration: SuperAnnotate lets you create teams and assign relevant roles such as admin, annotator, and reviewer.
Ease-of-use: The platform has an easy-to-use UI.
Supported data types: SuperAnnotate supports image, video, text, and audio data.
Supported labeling methods: The platform has tools for categorization, segmentation, pose estimation, object tracking, sentiment analysis, and speech recognition.

Best for

Teams looking for an annotation solution to build generative AI applications.

Pricing

The platform offers free, pro, and enterprise versions.

Cogito

Cogito is a data labeling service provider that employs a large pool of human annotators to deliver annotations for generative AI, CV, content moderation, NLP, and data processing.

Cogito Key Features

Data security: Cogito complies with GDPR, SOC 2, HIPAA, CCPA, and ISO 27001 standards.
Supported data types: The platform supports image, video, audio, text, and point-cloud data.
Automation: Cogito uses AI-based algorithms to label large data volumes.

Best for

Startups looking for a company to outsource their AI operations.

Pricing

Pricing is not publicly available.

Labelbox

Labelbox offers multiple products for managing AI projects. Its data labeling platform allows you to annotate various data types for building vision and LLM applications.

Labelbox Key Features

Data security: Labelbox complies with several regulatory standards, including GDPR, CCPA, SOC 2, and ISO 27001.
Collaboration: Users can create projects and invite in-house labeling team members with relevant roles to manage the annotation workflow.
Ease-of-use: Labelbox has a user-friendly interface with a customizable labeling editor.
Automation: The platform supports model-assisted labeling (MAL) to import AI-based classifications for your data.
Integrability: Labelbox integrates with AWS, Azure, and Google Cloud to access data repositories quickly.

Best for

Teams looking for labeling solutions to build applications for e-commerce, healthcare, and financial services industries.

Pricing

Labelbox offers free, starter, and enterprise versions.

Still confused about whether to buy a tool or go for open-source solutions? Read some lessons from practitioners regarding build vs. buy decisions.

Data Annotation Companies: Key Takeaways

CV applications are driving the current industrial landscape by innovating in fields like medical imaging, robotics, and retail. However, CV’s rapid expansion into these domains calls for robust data annotation tools and services to build high-quality training data. Below are a few key points regarding data annotation companies in 2024.

Security is key: With data privacy regulations becoming stricter globally, companies offering annotation solutions must have compliance certifications to ensure data protection.
Scalability: Annotation companies should offer scalable tools to handle the ever-increasing data volume and variety.
Top annotation companies in 2024: SuperAnnotate, Encord, and Kili are the top 3 companies that provide robust labeling platforms and services.

Feb 23 2024

8 M

Why AI Is the Mother of All Unicorns

"Say you want to watch a movie. To choose, you'll want to know what movies others liked and, based on what you thought of other movies you've seen if this is a movie you'd like. You'll be able to browse that information. Then you select and get video on demand. Afterward, you can even share what you thought of the movie. But thinking of it only in terms of movies on demand trivializes the ultimate impact. The way we find information and make decisions will be changed. Think about how you find people with common interests, pick a doctor, and decide what book to read. Right now, reaching out to a broad range of people is hard. You are tied into the physical community near you. But in the new environment, because of how information is stored and accessed, that community will expand. This tool will be empowering, the infrastructure will be built quickly and the impact will be broad." - The Bill Gates Interview, Playboy Magazine, July 1994 Sound familiar? Asked what else the personal computer was supposed to do other than process documents, Bill Gates prophesied the changes brought about by the coming of the information age that modern-day tech giants have since realized. From video-on-demand and movie recommendations (Netflix) to the way we find information (Google) to how you find people with common interests (Facebook) and deciding what book to read (Amazon 1.0), Gates' vision of the transformation that the information age would bring about turned out in more ways than one could conceivably imagine at the dawn of the Internet revolution. The Coming of the AI Revolution Fast forward 20 years, the AI revolution has begun. It will fundamentally transform our world, much just like the advent of the atomic bomb, microprocessor, personal computer, and the Internet. If the wealth generated from the emergence of each of these technologies offers any indication, we are poised to witness an unprecedented accumulation of wealth. 
As with any prophesied significant platform shift, there's a real risk that it fails to materialize in a big way at a particular moment in time (e.g. Web3, Blockchain, Crypto, Metaverse) or that it takes much longer than anticipated to play out (admittedly, crypto can still find an actual use case). Until as recently as ten years ago, almost all AI systems failed to demonstrate significant value, and many still do not (e.g., purely logic-based AI systems and symbolic AI - the dominant paradigm from the 1950s to the mid-1990s - are still primarily research interests). We could be in for another AI hype cycle that may eventually fizzle. As an eternal optimist and founder of an AI company looking to raise a Series B in the not-too-distant future, I won't bother spelling out why AI is overrated. Instead, I'll argue why it will change the world. When I explain AI to my parents, I describe it as a new form of dynamic software built on answers, unlike traditional static software built on rules. Put simply, comparing AI to conventional software is like saying "show" instead of "tell." What's exciting about AI is that dynamic, answer-based software will enable us to create new products, applications, and systems that can solve problems that, until now, have been reserved for human cognition. Self-driving cars are the most obvious example - while traditional software can handle simple tasks like driving straight, building a fully autonomous vehicle would require an overwhelming number of static rules to cover even the basics of navigation. It is not a leap to believe that the total addressable market (TAM) of problems only solvable by human cognition is orders of magnitude larger than that of the problems for which we use traditional software. As AI can augment and - in some cases - replace humans, it can produce what I think of as "non-linear" productivity outcomes.
Here are a few contrived examples across various vertical use cases to illustrate the potential non-linearity of AI systems: Building a faster car to reduce the amount of attention required to drive from A to B (linear) vs. self-driving vehicle (non-linear) More efficient organization of leads and tasks in a CRM system with a slightly better UI for salespeople (linear) vs. AI talking avatars that allow for infinite scaling of the salesperson (non-linear) Improved diagnostic equipment that provides more detailed images for radiologists to analyze (linear) vs. AI-driven systems that scan medical images and highlight potential anomalies for doctors or even predict possible illnesses before symptoms manifest based on health data (non-linear) Better tractors and machinery to help farmers plant and harvest crops (linear) vs. drones and robots that monitor the health of individual plants, apply precise amounts of fertilizer or pesticide, and harvest crops with minimal human intervention (non-linear) A digital learning and education platform with improved video lectures and homework targeted specifically at programming (linear) vs. an adaptive chatbot that can be prompted to "explain this concept like I'm 12 years old" (non-linear) The digital learning example is interesting as it is playing out in real-time: Chegg, the education technology company, saw its stock price tumble 47% (down ~63% year-to-date) after admitting that ChatGPT was pressuring its subscriber growth, leading them to suspend their full-year outlook. You get the idea. Just as the Internet's value skyrocketed with evolving applications, tools, and increased user participation, so too will the AI sector's worth. Despite the Internet's basic components remaining similar to those of the early 1990s, its value has grown exponentially over 20 years due to expanded applications and user engagement. 
As more individuals and businesses embrace AI and develop applications, the supporting tools and infrastructure will improve. Increased data availability will also enhance product quality. This cyclical improvement will fuel exponential growth in AI. Undoubtedly, AI is poised to be the next major technological platform shift within the next 20 years. While previous technological revolutions, like the Internet or the Industrial Revolution, were monumental in reshaping societies and economies, AI encapsulates something far more profound: the essence of human cognition. The wealth generation from novel solutions to previously unsolvable problems, combined with the heightened productivity and efficiency across all sectors, implies that the economic impact of AI could dwarf that of all prior technological shifts. The emergence of AI will give birth to an unfathomable number of unicorns. Who Wins: Titans, Challengers, or Innovators? Ok, so AI will be huge, but who wins the biggest slice of the pie, and where will the most value be generated? While the Twitter VC community may have its own predictions, here are my thoughts on a potential outcome. I could of course be entirely wrong. Early winners like NVIDIA have already experienced a surge in their stock price, and investors believe that generative AI and LLM developers will be the next big thing, as evidenced by the high valuations and significant investment flowing into those companies. The landscape is already fiercely competitive, especially among "neo" foundation model/LLM providers (e.g., Cohere, Anthropic, Mistral). Given the high valuations and evolving competitive landscape, I question the viability of venture-scale returns for most of these new entrants. There is a limit to how many chatbots the market can absorb, after all. 
Additionally, OpenAI is also so far ahead (8 years of R&D and billions of queries via ChatGPT generating valuable RLHF data) that it will be difficult for any of these companies to catch up. Perhaps one or two will succeed, but for emerging LLM companies to truly thrive, they will likely need to uncover unique niches or pivot towards refining larger models using specialized, proprietary datasets for distinct needs and/or partnering with downstream application developers. Some corporate/venture combinations could also happen, where partnerships like OpenAI/MSFT, Anthropic/Google, and Cohere/Meta-type will combine distribution and data advantage with R&D expertise. Elad Gil made some interesting observations on this here. Separately, foundation model providers will likely realize lower margins in the first few years of operation, as they more closely resemble 'hardware-type companies' with significant upfront training expenses. However, this does not mean that developers who create applications using these large models won't achieve considerable margins even if the TAM of those markets is much smaller, for example, by offering specialized expertise, products, and other value-added services related to these models. Jasper is an example of a company that has done this perfectly - they've built a product that serves marketers, and just marketers, well. All things considered, the AI market will probably resemble that of the current software market in 20 years. There will be a few huge "Big AI" companies with over $100 billion in revenue (this could very well end up being the foundation model developers, but it could also be companies that we haven't even conceived of yet) and a diaspora of large companies focusing on specific applications (e.g., Stripe for payments, Uber for transportation, Figma for design - this could be Jasper for marketers, Cruise for autonomous vehicles, Viz AI for medical imagery, and so on). 
For context, Apple, Microsoft, Amazon, Meta, and Alphabet constitute ~$9 trillion of the NASDAQ's ~$22 trillion market cap. This is a substantial chunk, no doubt, but the total size of the pie is only going to get bigger as the AI market gets underway and secular trends in technology continue to reverberate. Why This Time is Different Technological revolutions often occur due to a convergence of pivotal factors, and the AI sector is currently experiencing such a juncture. Similar to the Internet's ascendance, which was enabled by ubiquitous personal computers and faster connectivity, the current AI boom is a product of simultaneous advances in computing power, vast data availability, and increasingly advanced models. Eric and I founded Encord at a pivotal moment when object detection models transitioned from often being erroneous and requiring highly controlled "sandbox"-type environments to delivering tangible ROI. Similarly, the release of ChatGPT marked a paradigm shift in how we approached natural language processing and understanding. Looking into the near future, I anticipate AI delving deeper into multi-modal applications, offering higher ROI and increasingly viable solutions to more complex problems, and even stepping into realms of human reasoning. In short, the market is just getting started. The value, revenue, TAM, etc., will naturally accrue as the complexity of the problems that we solve with AI increases. After all, it was impossible to stream a movie over your Internet connection 20 years ago, but now it's table stakes.

Aug 24 2023

4 M

Meet Denis - Full Stack Engineer at Encord

We sat down with Denis Gavrielov, Full Stack Engineer at Encord, to learn more about his day-to-day. Denis is an essential player on the Engineering team and has been a part of Encord's exciting journey from a small 8-person team to the dynamic startup it is today. He walks us through the highs and lows of his experiences, the camaraderie he shares with his team, and the thrill of working on some truly fascinating projects. If you've ever wondered what it's like to work at a startup like Encord, strap in and join us for this conversation!

Denis, first question. What inspired you to join Encord?

I applied about two years ago when the team was still getting started (we were eight people strong, all engineers!). At that time, I wasn't sure if I wanted to join a startup as I had been working in a larger company up until then (Bloomberg). I met most of the team throughout the interview process, and the more I spoke to everyone, the more I felt I'd really enjoy working with them. I also had a great first impression of the founders (Eric & Ulrik), and the more I spoke with them, the more I got a sense of how strong their skillset was and how unique an opportunity it would be. The combination of team, founders, and opportunity is what ultimately led me to join Encord. I still remember my first week sitting in our 10 m² office space in the middle of Soho without any meeting rooms or kitchen, quite different from today!

Very different from now indeed! What does a typical day as a full-stack engineer at Encord look like?

Every day is a bit different. Some people like to focus on back-end engineering and some like front-end work, and I like to work on both! Typically, I start by catching up with everyone's messages on Slack. I might have one or two meetings in the morning before starting to code. Throughout the day there's a lot of collaboration via impromptu short catch-ups in person or on Slack.
During these meetings, I often discuss architectural designs and problem-solving strategies with more senior team members. A significant component of my day is spent coding and solving problems.

And I guess you fit in lunch in between that as well, right?

That's true, a good lunch break is important for recharging! I usually grab lunch with a few colleagues, and if the weather is nice, like during the summer, we enjoy our lunch outside. On Fridays, the entire team gets together in the office for a company lunch. That's always fun and one of my favorite Encord traditions.

You mentioned teamwork and customer centricity. How would you describe the company culture at Encord more broadly?

The company culture is very collaborative. The founders and senior managers are encouraging and open to suggestions, so it's easy to make (good) decisions independently. We try to maintain a relaxed office culture focused on team success, where people are free to work in a manner that works best for them and for us as a team more broadly. People are always trying to help each other out and break down any silos that might arise. We've been very intentional about building the right environment, though, so luckily there are very few silos in the first place. For example, our engineering and commercial teams all work in one big room, and getting context or feedback is incredibly quick.

Another key part of our culture is being able to adapt quickly. Previously, I was working at Bloomberg in an infrastructure team, where we had to plan everything very precisely. Everything had to be correct from the start, and every decision would need to go through many layers of approval and planning sessions. Speed of progress was naturally quite different. Here at Encord, we are a nimble, rapid team. We move quickly and have been able to achieve things that teams many times our size have struggled with.
Customers tell us every week how much better our product is compared to the alternatives they're looking at. It is always very rewarding when we hear this feedback. What we aim for as a team is to be great: ship high-performing, industry-leading products quickly, get feedback from customers and prospects early, and consistently focus on building what customers and users really want. These are the principles that stand out to me most as an engineer.

Can you tell us a bit about a project that you're currently working on?

One of the projects I'm working on is an overhaul of our task management system. The core problem we're trying to solve is that our customers need a detailed overview and a flexible interface to control the annotation and review process for their data operations. The process can be complicated, involving multiple review stages and different levels of scrutiny. So we're developing a system to allow our clients to implement more complex workflows and be able to review the process even faster!

In terms of how I spend my time between collaborating and individual development, it varies heavily depending on what point of the project we're in. At the beginning, a large part of my time is spent brainstorming, talking through the objectives with other engineers and product managers, and listening in on client calls. After gathering ideas and refining our approach, I spend most of my time coding and focusing on the project, while still staying in sync with the rest of the team so that we keep moving quickly in the same direction.

Lastly, what advice would you give to someone considering working at Encord?

Many people reach out to me each week asking this, and there are a few things I think many people don't consider. Encord is obviously in a very exciting position: we're a strong team, in the right market (AI infrastructure), and have a clear vision of what the future will be like and what we need to build to get there.
Yet early-stage startups, especially ones moving very quickly, require working modes and dispositions that the vast majority of people are neither looking for nor comfortable with, and that's okay! If you don’t particularly enjoy collaborating on tasks, or you prefer being told very precisely/prescriptively what to do, or you're not at the stage in your career where you want to own processes, then I'd say Encord (and similar companies) is probably not the best fit. It really depends on what excites you. Here, you need to embrace pace, ownership, collaboration, and autonomy, often to degrees you may not have considered possible. These are key traits that, I think, have all made us successful in our roles. If you want to own problem areas and find solutions, if you like collaborating and prototyping MVPs quickly to get client feedback early, and if you pride yourself on making the right decisions with limited amounts of information, then Encord may be a good fit!

Thank you, Denis!

We have big plans for 2023 & are hiring across all teams. Find the roles we are hiring for here!

Aug 22 2023

5 M

Meet Mavis - Product Design Lead at Encord

Learn more about life at Encord from our Product Design Lead, Mavis Lok! Mavis Lok, or ‘Figma Queen’ as we like to call her, thrives on using innovation and creativity to enhance the user experience (UX) and user interface (UI) of our products. She listens closely to our customers’ needs, conducts user discovery, and translates insights into tangible and elegant solutions. You will find Mavis collaborating with various teams at Encord (from the Sales and Customer Success teams, to the Product and Engineering teams) to ensure that the product aligns with our business goals and user needs.

Hi, Mavis, first question is what inspired you to join Encord?

When I was planning the next steps in my career, I knew that I wanted to join an emerging and innovative tech startup. In the process, I stumbled upon Encord, with a pretty big vision of helping companies build better AI models with quality data, a problem that seemed ambitious and compelling. I had my first chat with Justin [Encord's Head of Product Engineering], and he gave me great insights into the role, the company, and the domain space, which tied nicely with my design experience and what I was looking for in my next role. I was evaluating many companies, and I made sure (and I'd recommend this to anyone reading!) to speak to as many employees from the company as I could meet. The more people I met from Encord, the more eager I became to join the team.

Could you tell me a little about what inspired you to pursue a career in product design?

Hah, great question! I was previously in creative advertising and was trained as a Creative/Art Director. During my free time, I would participate in advertising competitions where I would pitch ideas for brands, and I’d always maximize my design potential through digital-led ideas. That brought me to work as a Digital Designer and then as a Design Manager, where I got my first glimpse of what it was like to work closely with co-founders, engineers, and designers.
The company I was working at was going through a transition from an agency to a SaaS-type business model, and I found that many of the skills I'd developed were actually an edge for what product design requires. Having an impact in balancing business needs and product development challenges whilst creating products that are user-centric and delightful to use is why I love what I do every day.

How would you describe the company culture?

I think the people at Encord are what sets us apart. With a team of over 20 nationalities, it’s an incredible feeling to work in an environment where diversity of thought is encouraged. The grit, ambition, vision, and thoughtfulness of the team are why I enjoy being part of Encord.

What have been some of the highlights of working at Encord?

Encord has given me the space to throw light on the impact that design can bring to the company and build more meaningful relationships with the team and, of course, our customers. Another big highlight for me is practicing the notion of coming up with ideas rapidly whilst being able to identify the consequences of every design decision. Brainstorming creatively whilst thinking critically is something I hold dear in my creative/design life, so it’s definitely a highlight of my day-to-day at Encord. On a side note, Encord is also a fun place to work. Whether it is Friday lunches, monthly social activities, or company off-sites, there are plenty of opportunities to have a good time with the team.

Lastly, what advice would you give someone considering joining Encord?

The first thing I would say is you have to be authentic during the interview, and you should also genuinely care about the mission of the company because there is a lot of buzz around the AI space right now, and genuine interest lasts longer than hype. I would recommend reading our blogs on the website; it's a great place to start, as you can gain a lot of insight from them.
There you can learn more about our customers and explore where our space is headed.

We have big plans for 2023 & are hiring across all teams. Find the roles we are hiring for here.

Jul 17 2023

5 M

Meet Rad - Head of Engineering at Encord

At Encord, we believe in empowering employees to shape their own careers. The company fosters a culture of ‘trust and autonomy’, which encourages people to think creatively and outside the box when approaching challenges. We strongly believe that people are the pillars of the company. With employees from over 20 nationalities, we are committed to building a culture that supports and celebrates diversity. We want our people to be their authentic selves at work and to be driven to take Encord's mission forward.

Rad Ploshtakov was the first employee at Encord and is a testament to how quickly you can progress in a startup. He joined as a Founding Engineer after working as a Software Engineer in the finance industry, and is now our Head of Engineering.

Hi Rad! Tell us about yourself, how you ended up in Encord, and what you’re doing.

I was born and raised in Bulgaria. I moved to the UK to study for a master's in Computing (Artificial Intelligence and Machine Learning) at Imperial College London. I am also a former competitive mathematician and worked in trading as a developer, building systems that operate in single-digit microseconds. Then I joined Encord (or Cord, which is how we were known at the time!) as the first hire. I thought the space was really exciting, and Eric and Ulrik are an exceptional duo. I started off as a Founding Engineer and, as our team grew, transitioned to Head of Engineering about a year later. I am responsible for ensuring that as an engineering team we're working on what matters most for our current and future customers. I work closely with everyone to set the overall direction and incorporate values for the team. Nowadays, a lot of my time is also spent on hiring, and on helping build and maintain an environment in which everyone can do their best work.

What does a normal day at work look like for you?

Working in a startup means no two days are the same!
Generally, I would say that my day revolves around translating the co-founders' goals into actionable items for our team to work on. Communicating and providing guidance are two important aspects of my role. A typical day includes meeting with customers and prospects, reviewing code, and supporting different initiatives. Another big part is collaborating with other teams to understand what we want to build and how we are going to build it.

Can you tell us a bit about a project that you are currently working on?

Broadly speaking, a lot of my last few weeks have been spent supporting our teams as they set out and execute on their roadmaps. 2023 will be a huge year for us at Encord, and we're moving at a very fast pace, so a lot of my focus recently has been on setting us up for success. As for specific projects, I'm very excited about all the work our team is doing for our customers. For example, our DICOM annotation tool has recently been named the leading medical imaging annotation tool on the market, which is a huge testament to the work our team has poured into it over the last year. I remember hacking together a first version of our DICOM annotation tool in my first (admittedly mostly sleepless!) weeks at Encord, and seeing how far it's come in just a few months has been one of the most rewarding parts of my last year.

What stood out to you about the Encord team when you joined?

Many things. When I first met the co-founders (Eric & Ulrik), I was impressed by their unique insights into the challenges that lay ahead for computer vision teams. They can visualize strikingly clearly what the next decade will look like, while also being able to execute at mind-boggling speed in the moment, in that direction. I was also impressed by how smart, resourceful and driven they were.
By the time I joined, they had been able to build a revenue-generating business with dozens of customers, getting to understand deeply the problems that teams were facing and then iterating quickly to build solutions that not even they had thought about.

What is it like to work at Encord now?

It's a very exciting time to be at Encord. Our customer base has been scaling rapidly, and the feedback loop on the engineering cycle is very short, so we get to see the impact of our work very quickly, which is exciting. Going from building specs for a feature, to shipping it, showing it to our customers, and seeing them start to use it often happens in a span of just a few weeks. A big part of working at Encord is focusing a lot on our customers' success. We always seek out feedback, listen, and apply first principles to the challenges our customers are facing (as well as getting ahead of ones we know they'll be facing soon that they might not be thinking about yet!). Then we work on making the product better and better each day.

How would you describe the team at Encord now?

The best at what they do, and also hardworking, very collaborative and always helping and motivating each other. One of our core values is having a growth mentality, and each member of our team has come into the company and built things from the ground up. Everyone has a willingness to roll up their sleeves and make things happen to grow the company. One result of this is that it's okay to make mistakes: we are constantly iterating and trying to get 1% better each day.

We have big plans for 2023 & are hiring across all teams! ➡️ Click here to see our open positions

Mar 09 2023

3 M

How to Review Encord on G2

One of the most popular sites for researching business software is G2. It features reviews of almost any kind of software you can think of, letting people read reviews from their peers and work out which software solution is best for them. This also applies to the world of computer vision and machine learning.

And for us at Encord, we really want the computer vision community to know how good Encord is. That’s why we’re asking Encord users (whether you’re involved in annotation, data operations or machine learning) to head over to G2 and leave us a review. Not only does it help us get in front of a wider group of people, but it also helps the machine learning and computer vision community find out about Encord.

Leaving a review doesn’t take long. Follow the steps below and you’ll have your review up in no time!

Step 1: Registration

Follow this link and then click on the blue button that says ‘Continue to Login’.

You’ll be asked to sign in. If you’ve got a G2 account, use that. Otherwise, click on ‘Business Email’ or LinkedIn to create your account.

On the next screen, enter your first name and last name, choose a password and press ‘Create an Account’.

You’ll be sent to a page where you need to enter a verification code. This code will be sent to your email address. Find the email with the code, then come back to this page and enter it.

Once you’re in G2 you’ll need to set up your profile. Then you’ll be able to carry on submitting your review (if you need to come back to it, use this link to start the review process again).

Step 2: Submitting Your Review

Rate Encord on a scale of 1 - 10. These reviews are really important to us, so if you don’t feel we rate a 9 or a 10, please reach out to us first so we can address whatever problems you’re experiencing on the platform.

Then add a short title for your review and fill out the sections ‘what do you like best’ and ‘what do you dislike’. (Take a look at these positive reviews for inspiration.) You only need to enter 40 characters, but more is definitely better! And if you have any major dislikes, please speak to us first. We might be able to help or show you a feature you’ve missed.

Select your primary role when using Encord.

Once you’ve filled out these sections the button in the bottom right will turn green. Click on this. You’ll then see the following screen.

The more categories you select for the ‘For which purposes do you use Encord’ question, the more questions you’ll have to answer. So if you’ve only got time for one, please select ‘data labeling’.

For ‘What problems is Encord solving…’ you need to enter 160 characters, which is about two sentences.

For the next questions, if you don’t think we’re headed in the right direction or if you don’t think we deserve a 6 or 7, please talk to us. As with the other questions, we can work together to address whatever issue you’re having with ease of use or support. Then click on the button in the bottom right once it turns green.

On the next page, there are a couple of obligatory questions (marked with a red asterisk). The most complicated one is ‘Are you a current user of Encord?’. Your answer to this should be yes. You’ll then need to show that you’re a current user of Encord by uploading a screenshot of your account in Encord.

For the screenshot, open a new window in your browser and go to https://app.encord.com/settings (it may prompt you to log in to the platform). It should look like this (in dark mode).

Then take a screenshot of this screen. There are two ways to do this, depending on whether you’re using a PC or a Mac.

PC INSTRUCTIONS

1. Go to the Start menu, search for ‘Snipping Tool’ and click on the result.
2. Once you’re in the Snipping Tool, click the ‘New’ button in the top left.
3. Draw around the whole of the ‘Settings’ screen in Encord (see example screenshot below).
4. Then click the three dots in the top right corner of the Snipping Tool and save the snip to your desktop (or anywhere else you can find it).

MAC INSTRUCTIONS

1. Press and hold these three keys together: Shift, Command, and 4.
2. Drag the crosshair to select the area of the screen to capture, then let go.
3. The image will be saved to your Mac’s desktop.

To upload the screenshot you’ve taken, click on the blue button that says ‘Upload Screenshot’ and find the screenshot on your computer.

Once you’ve uploaded your screenshot, you can answer the remaining optional questions (there’s one obligatory question at the bottom). Once you’ve answered all the obligatory questions and uploaded your screenshot, the button in the bottom right will turn green.

On the next screen, there are a couple of obligatory questions, and the rest are optional. Company name is one obligatory question. Please make sure you enter the name of the company where you use Encord in the question ‘At which organization did you most recently use Encord?’ and then put the company’s website address in the question below so that G2 can find your company.

The next obligatory question is ‘What is your industry when using this product?’. Just start typing in here and you’ll see a list of industries that you can select from.

There are three obligatory questions at the bottom of the list. They’re self-explanatory but need to be answered before you can submit your review.

Once all the required questions have been answered, you’ll see two buttons appear in the bottom right. You don’t need to click on the green one; instead, click on the white one that says ‘Submit My Review’.

That’s it, you’re done! We really appreciate you taking the time to review Encord and tell the rest of the world how great the platform is. Even one positive review really helps us, so from the whole Encord team, thank you!

Feb 14 2023

5 M

Tech Startups are not Just About Tech: Relationships Matter too

Introduction

People often think that a technology company consists of…technology. Every day, subject-matter experts work together to build cutting-edge tech that will change lives and put their businesses ahead of the competition. Build great tech, and you will build a great tech company. Easy and simple.

This is, however, only a small part of the story. What often gets lost is that a technology company (or any company for that matter) consists of a set of relationships. Maintaining and servicing these relationships on a regular basis is just as important as maintaining and servicing the technology you build, if not more so. The relationships between the people who build the technology, the people who build the business, and the people who invest in the company are critical to whether the company succeeds or fails.

During Y Combinator, I spent a lot of time “doing things that don’t scale.” As a founder, you’re hands-on in everything, including daily problem solving for continuously evolving problems. After YC, however, when we began scaling Encord, I learned (partly because a mentor explained it to me) that as a founder, I needed to focus mostly on just two things. One, ensuring that the business has enough runway (whether from fundraising, revenue, or both) to keep going, and two, finding and retaining great people.

Ensuring a continuous inflow of funds and great people are two tasks that actually have a lot in common. Specifically, they both depend on building and maintaining relationships. So the question then becomes, ‘how do you build relationships?’

Remember you are in for the long haul

A lot of founders think that when an investor sends over a funding check, that’s the end of the relationship. In reality, that check marks the start of the relationship, and now it’s time to maintain and further cultivate it. Investors want to help. They want your company to be successful. Don’t take them for granted. Instead, make sure you keep them in the loop and hold yourself accountable to them by doing your best work and remaining open to feedback. If you maintain and nurture your investor relationships, those firms are likely to help you in future funding rounds.

While investor relationships are important, ultimately, a company should gain its funds from customers and not VCs. Buyers are the key to success, so you need to make sure your business solves a real problem, makes people happy, and creates a product or service that people want. A philosophy we learned to follow during YC and continue to follow now is to “bear-hug” your customers. If you have a product or service that people want, and you bear-hug your customers, your business will grow and generate revenue.

The parable of the elephant applies to your business

When building the strategy for a business, you need to stay close to the end users. To understand what your customers need and where your business fits within the larger ecosystem, you need to develop relationships with a variety of stakeholders. Hypotheses for solving customer problems don’t come from reading academic papers or journals but from talking to people. We talked to more than a thousand stakeholders, and we built our business thesis from what we learned in those conversations.

Predicting the distant future is difficult, so we think about how to combine more immediate customer needs with the next logical technological steps forward. Our data quality assessment tool is a great example of this synthesis: we heard the same problem from many stakeholders, and we realised that we could build on our existing technology to solve that problem.

We continue to talk to customers, prospective customers, AI practitioners, academics, big tech companies, corporates, researchers, clinicians, and other medical professionals. We talk to a diverse array of people because each type of person has a different view of Encord and the types of problems our technology can solve.

Much like the parable of the blind men and the elephant, different stakeholders focus on different parts of our product. One touches the tusk, while another touches the trunk. These groups might only know their part of the structure, and it’s our job to combine their varying perspectives and subjective experiences to create a coherent structure and vision for the technology that drives the business forward. We always have to keep our eyes on the whole elephant.

Relationships are critical to finding and retaining talent

Without a doubt, having great people on your team is the most important factor in making the company successful. Unfortunately, there’s no shortcut or industry secret for hiring great people. It’s a grind.

As an early-stage startup, don’t expect good people to just come to you. You’ve got to invest the time and resources into finding great people from the start. Search for people, and refine your judgment and intuition about the type of people who will thrive in your company. Learn from the mistakes you make.

Remember that the hiring process is not a one-way street. Yes, you interview people, but they also interview you. You need to sell the company and its vision. Generating excitement about the work your company is doing is important to attract people who believe in its mission. You can’t rest on your laurels and assume that the most talented people will choose your company over another offer.

Once you’ve hired people, share the context with new employees so they can solve problems on their own, but don’t micromanage them. Our philosophy is that if you’ve hired people you have to micromanage, then you’ve hired the wrong people. You should be happy to let new employees solve problems autonomously.

Take steps to build relationships with each employee and ensure that they understand their importance within an early-stage company. The odds are always against you as a startup, so just having talented people is often not enough. You also need them to be bought in. These earliest hires need to know and understand that they have a great impact on the trajectory of the company. That’s the best way to get the best from your employees.

Your problems are not unique. Leverage the experience of your network.

Remember, your company is not a unique snowflake within the larger business world. Many founders, including myself, begin to think that we’re on our own, struggling through a sea of never-before-encountered problems. In reality, other company leaders have already encountered and endured every issue you are facing. In fact, some leaders have encountered and endured them several times.

In all likelihood, 80 percent of the issues you’re facing are well-trodden, while only 20 percent are company-specific. If you keep up the connections that you have, you’ll be able to lean on a vast amount of industry expertise and business knowledge to tackle that 80 percent.

Stay in contact with your mentors and your friends. At Encord, we still talk to our YC group partners. They’re extremely busy, but they are an incredibly useful resource. Having friends and mentors who have experienced the things that you’re experiencing and have already solved the problems that you’re trying to solve is immensely valuable, so embrace your network. When you encounter a problem, ask if anyone else has run into the same one. You’ll find that your network is a hive of answers that can save you time and money.

Ready to automate and improve the quality of your data labeling? Sign up for an Encord Free Trial: The Active Learning Platform for Computer Vision, used by the world’s leading computer vision teams. AI-assisted labeling, model training & diagnostics, find & fix dataset errors and biases, all in one collaborative active learning platform, to get to production AI faster. Try Encord for Free Today.

Want to stay updated? Follow us on Twitter and LinkedIn for more content on computer vision, training data, and active learning. Join our Discord channel to chat and connect.

Nov 11 2022

5 M

Encord: The SOC 2 Compliant Platform for Computer Vision