Contents

What is Model Inference in Machine Learning?

Benefits of ML Model Inference

Real-World Use Cases of Model Inference

Limitations of Machine Learning Model Inference

Popular Tools for ML Model Inference

Model Inference in Machine Learning: Key Takeaways

Encord Blog

Model Inference in Machine Learning

Today, machine learning (ML)-based forecasting has become crucial across various industries. It plays a pivotal role in automating business processes, delivering personalized user experiences, gaining a competitive advantage, and enabling efficient decision-making. A key component that drives decisions for ML systems is model inference.

In this article, we will explain the concept of machine learning inference, its benefits, real-world applications, and the challenges that come with its implementation, especially in the context of responsible artificial intelligence practices.

What is Model Inference in Machine Learning?

Model inference in machine learning refers to the operationalization of a trained ML model, i.e., using an ML model to generate predictions on unseen real-world data in a production environment. The inference process includes processing incoming data and producing results based on the patterns and relationships learned during the machine learning training phase. The final output could be a classification label, a regression value, or a probability distribution over different classes.

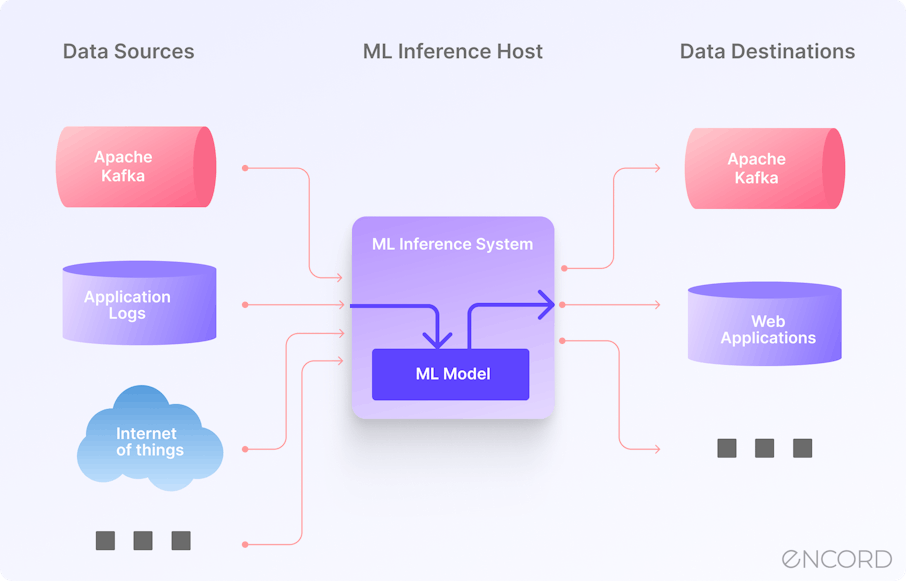

An inference-ready model is optimized for performance, efficiency, scalability, latency, and resource utilization. The model must be optimized to run efficiently on the chosen target platform so that it can handle large volumes of incoming data and generate predictions promptly. This requires selecting appropriate hardware or cloud infrastructure for deployment, along with a serving layer, typically called an ML inference server, that hosts the model and returns predictions.

There are two common ways of performing inference:

- Batch inference: Model predictions are generated for a chunk of accumulated observations at scheduled intervals. It is best suited for tasks that can tolerate higher latency, such as analyzing historical data.

- Real-time (online) inference: Predictions are generated on demand as soon as new data becomes available, with low latency. It is best suited for real-time decision-making in mission-critical applications.
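To make the distinction concrete, here is a minimal sketch in which the same toy scoring function serves both modes. The linear `toy_model`, the `run_batch_job` and `handle_request` helpers, and the chunk size are hypothetical stand-ins for illustration, not part of any particular serving framework.

```python
from typing import Iterable, Iterator, List, Sequence

# Hypothetical "trained" model: a fixed linear scorer standing in for a real model.
WEIGHTS = [0.4, -0.2, 0.1]
BIAS = 0.05


def toy_model(features: Sequence[float]) -> float:
    """Return a score for one observation."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def run_batch_job(rows: Iterable[Sequence[float]], chunk_size: int = 2) -> Iterator[List[float]]:
    """Batch inference: score accumulated rows chunk by chunk (e.g., in a nightly job)."""
    chunk: List[Sequence[float]] = []
    for row in rows:
        chunk.append(row)
        if len(chunk) == chunk_size:
            yield [toy_model(r) for r in chunk]
            chunk = []
    if chunk:  # flush the final, possibly smaller, chunk
        yield [toy_model(r) for r in chunk]


def handle_request(features: Sequence[float]) -> float:
    """Real-time inference: score a single observation as soon as it arrives."""
    return toy_model(features)


history = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0], [2.0, 0.0, 1.0]]
for scores in run_batch_job(history):
    print("batch:", scores)
print("online:", handle_request([1.0, 2.0, 3.0]))
```

The model is identical in both cases. What differs is when it is called and how many observations it sees per call, which is why batch jobs optimize for throughput and online services for per-request latency.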

To illustrate model inference in machine learning, consider an animal image classification task in which a trained convolutional neural network (CNN) classifies animal images into categories such as cats, dogs, birds, and horses. When a new image is fed into the model, its layers extract relevant features, such as edges, textures, and shapes, using the filters learned during training; no learning takes place at inference time. The final layer outputs a probability score for each category, and the category with the highest probability is taken as the model's prediction for that image, indicating whether it is a cat, dog, bird, or horse. Such a model can be valuable for applications including wildlife monitoring, pet identification, and content recommendation systems. Other common examples of machine learning model inference include predicting whether an email is spam, identifying objects in images, and determining sentiment in customer reviews.

Benefits of ML Model Inference

Let’s discuss in detail how model inference in machine learning impacts different aspects of business.

Real-Time Decision-Making

Decisions, not data, create value. Model inference facilitates real-time decision-making across several verticals, which is especially vital in critical applications such as autonomous vehicles, fraud detection, and healthcare. These scenarios demand immediate and accurate predictions to ensure safety, security, and timely action.

A couple of examples of how ML model inference facilitates decision-making:

- Real-time model inference on sensor data supports weather forecasting and early-warning systems, helping meteorologists, hydrologists, and geologists anticipate environmental hazards such as floods, storms, and earthquakes.

- In cybersecurity, ML models can flag likely malicious activity, enabling network intrusion detection systems to respond to threats and block unauthorized access.

Automation & Efficiency

Model inference significantly reduces the need for manual intervention and streamlines operations across various domains. It allows businesses to take immediate actions based on real-time insights. For instance:

- In customer support, chatbots powered by ML model inference provide automated responses to user queries, resolving issues promptly and improving customer satisfaction.

- In enterprise environments, ML model inference powers automated anomaly detection systems to identify, rank, and group outliers based on large-scale metric monitoring.

- In supply chain management, real-time model inference helps optimize inventory levels, ensuring the right products are available at the right time, thus reducing costs and minimizing stockouts.
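The anomaly-detection example above can be illustrated with a deliberately simple scorer. This sketch swaps in a z-score rule rather than a learned model. It scores each new metric value against statistics from a reference window, flags values beyond a threshold, and ranks the flagged points by severity. The threshold of 3.0 and the sample data are assumptions for illustration, and grouping of outliers is not shown.

```python
import statistics
from typing import List, Sequence, Tuple


def rank_outliers(reference: Sequence[float], incoming: Sequence[float],
                  threshold: float = 3.0) -> List[Tuple[int, float]]:
    """Return (index, z-score) pairs for incoming values with |z| > threshold, most severe first."""
    mean = statistics.fmean(reference)
    std = statistics.pstdev(reference)
    if std == 0:
        raise ValueError("reference window has zero variance")
    scored = [(i, (x - mean) / std) for i, x in enumerate(incoming)]
    flagged = [(i, z) for i, z in scored if abs(z) > threshold]
    return sorted(flagged, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical metric values: a stable reference window, then new observations.
reference = [10, 11, 9, 10, 10, 11, 9, 10]
print(rank_outliers(reference, [10, 14, 3, 10.5]))
```

Here the value 3 at index 2 deviates more from the reference than 14 at index 1, so it is ranked first.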

Personalization

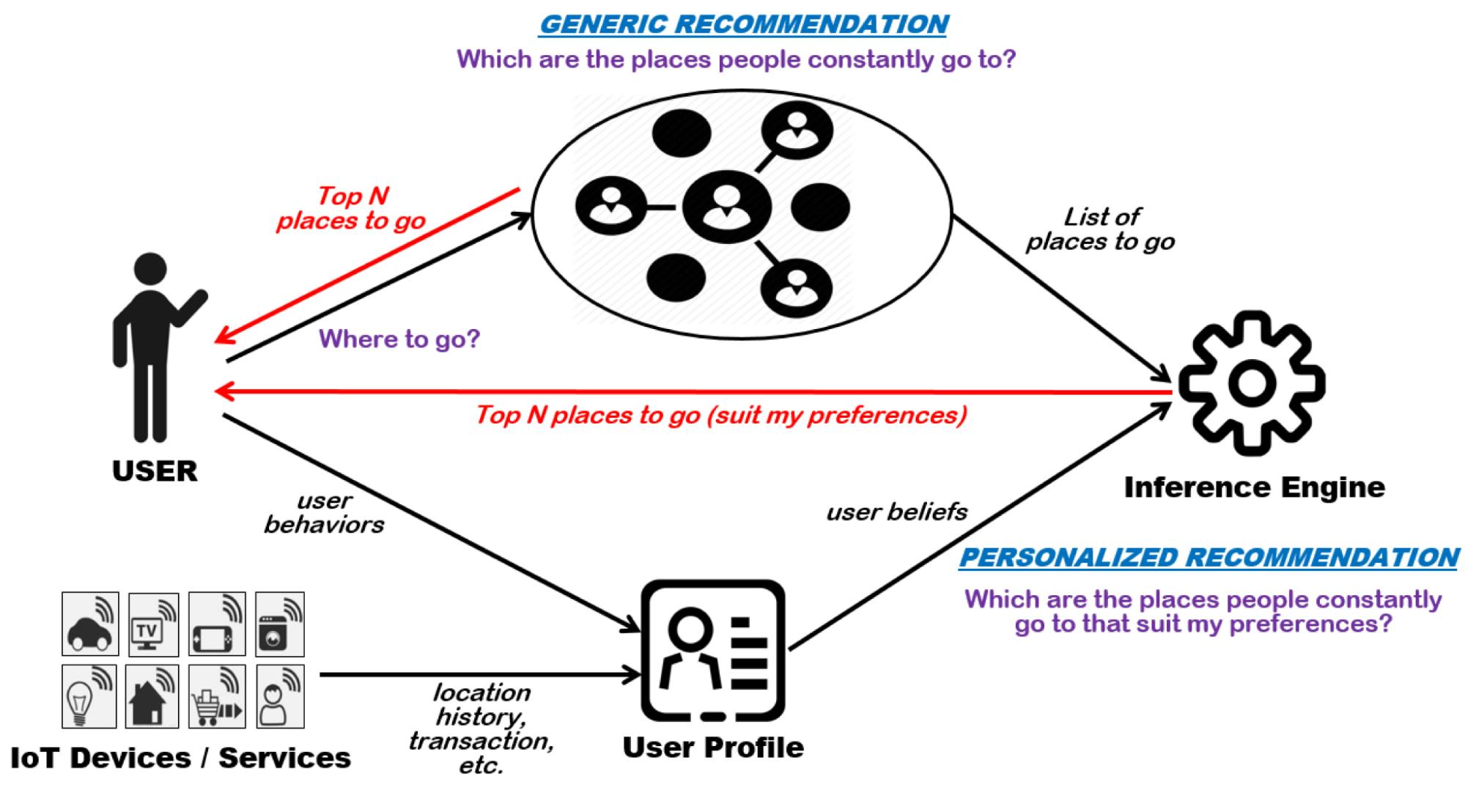

Personalized Recommendation System Compared to Traditional Recommendation

Model inference enables businesses to deliver personalized user experiences, catering to individual preferences and needs. For instance:

- ML-based recommendation systems, such as those used by streaming platforms, e-commerce websites, and social media platforms, analyze user behavior in real-time to offer tailored content and product recommendations. This personalization enhances user engagement and retention, leading to increased customer loyalty and higher conversion rates.

- Personalized marketing campaigns based on ML inference yield better targeting and improved customer response rates.
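At inference time, many recommenders reduce to scoring candidate items against a representation of the user and returning the top few. The sketch below assumes precomputed embedding vectors (the `user_vec` and `item_vecs` values are hypothetical; real systems learn them during training) and ranks items by cosine similarity, skipping items the user has already consumed.

```python
import heapq
import math
from typing import Dict, FrozenSet, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(user_vec: Sequence[float], item_vecs: Dict[str, Sequence[float]], k: int = 2,
              exclude: FrozenSet[str] = frozenset()) -> List[Tuple[str, float]]:
    """Score every candidate item and return the k most similar, skipping excluded items."""
    scores = ((item, cosine(user_vec, vec)) for item, vec in item_vecs.items() if item not in exclude)
    return heapq.nlargest(k, scores, key=lambda pair: pair[1])


# Hypothetical 3-dimensional embeddings.
user_vec = [0.9, 0.1, 0.0]
item_vecs = {
    "thriller_a": [1.0, 0.0, 0.0],
    "thriller_b": [0.8, 0.2, 0.1],
    "cooking_show": [0.0, 0.1, 1.0],
    "documentary": [0.3, 0.9, 0.1],
}
print(recommend(user_vec, item_vecs, k=2, exclude=frozenset({"thriller_a"})))
```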

Scalability & Cost-Efficiency

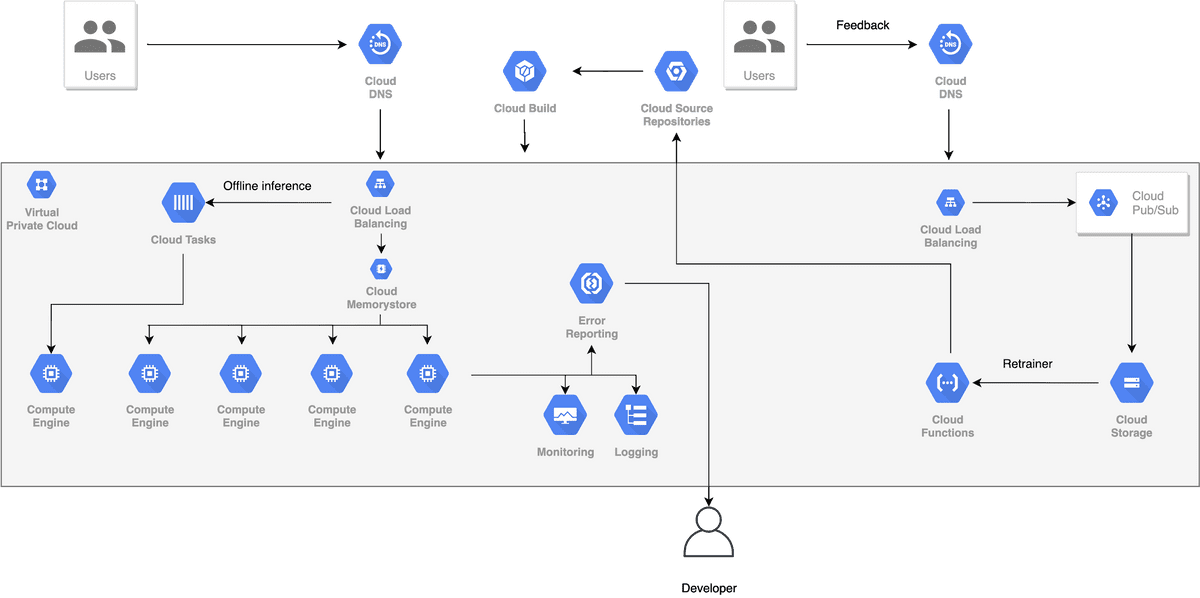

End-to-end Scalable Machine Learning Pipeline

By leveraging cloud infrastructure and hardware optimization, organizations can deploy ML applications cost-efficiently. Cloud-based model inference with GPU support allows organizations to scale with rapid data growth and changing user demands. Moreover, it eliminates the need for on-premises hardware maintenance, reducing capital expenditures and streamlining IT management.

Cloud providers also offer specialized hardware-optimized inference services at a low cost. Furthermore, on-demand serverless inference enables organizations to automatically manage and scale workloads that have low or inconsistent traffic.

With such flexibility, businesses can explore new opportunities and expand operations into previously untapped markets. Real-time insights and accurate predictions empower organizations to enter new territories with confidence, informed by data-driven decisions.

Real-World Use Cases of Model Inference

Model inference in machine learning finds extensive application across various industries, driving transformative changes and yielding valuable insights. Below, we delve into each real-world use case, exploring how model inference brings about revolutionary advancements:

Healthcare & Medical Diagnostics

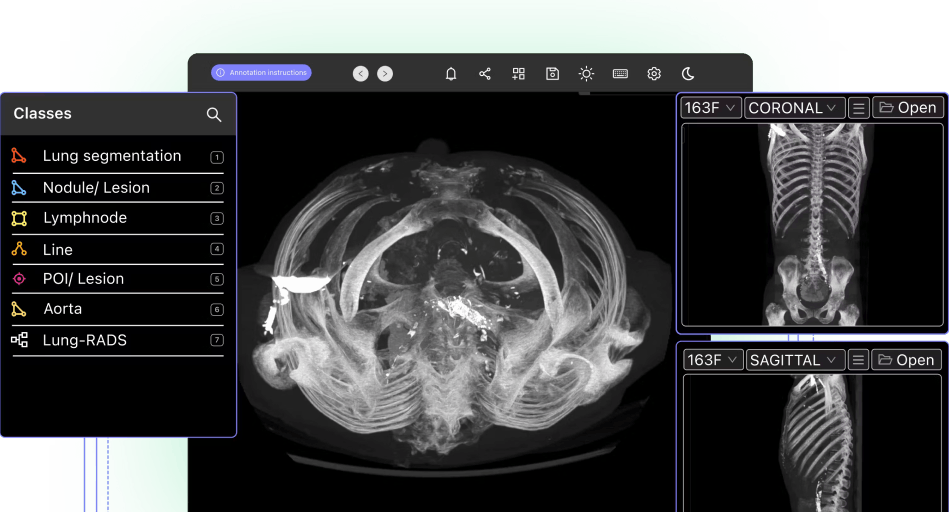

Model inference is revolutionizing medical diagnostics through medical image analysis and visualization. Trained deep learning models can accurately interpret medical images, such as X-rays, MRIs, and CT scans, to aid in disease diagnosis. By analyzing the intricate details in medical images, model inference assists radiologists and healthcare professionals in identifying abnormalities, enabling early disease detection and improving patient outcomes.

Real-time monitoring of patient vital signs using sensor data from medical Internet of Things (IoT) devices and predictive models helps healthcare professionals make timely interventions and prevent critical events. Natural Language Processing (NLP) models process electronic health records and medical literature, supporting clinical decision-making and medical research.

Natural Language Processing (NLP)

Model inference plays a pivotal role in applications of natural language processing (NLP), such as chatbots and virtual assistants. NLP models, often based on deep learning architectures like recurrent neural networks (RNNs), long short-term memory networks (LSTMs), or transformers, enable chatbots and virtual assistants to understand and respond to user queries in real-time. This technology is particularly valuable in contact center AI software, where it powers intelligent automation and enhances customer interactions.

By analyzing user input, NLP models can infer contextually relevant responses, simulating human-like interactions. This capability enhances user experience and facilitates efficient customer support, as chatbots can handle a wide range of inquiries and provide prompt responses 24/7.

Autonomous Vehicles

Model inference is the backbone of decision-making in computer vision applications such as autonomous driving and object detection. Trained machine learning models process data from sensors like LiDAR, cameras, and radar in real-time to make informed decisions on navigation, collision avoidance, and route planning.

In autonomous vehicles, model inference occurs rapidly, allowing vehicles to respond instantly to changes in their environment. This capability is critical for ensuring the safety of passengers and pedestrians, as the vehicle must continuously assess its surroundings and make split-second decisions to avoid potential hazards.

Fraud Detection

In the financial and e-commerce sectors, model inference is used extensively for fraud detection. Machine learning models trained on historical transaction data can quickly identify patterns indicative of fraudulent activities in real-time.

By analyzing incoming transactions as they occur, model inference can promptly flag suspicious transactions for further investigation or block fraudulent attempts. Real-time fraud detection protects businesses and consumers alike, minimizing financial losses and safeguarding sensitive information. As a result, model inference can be applied in horizontal and vertical B2B marketplaces as well as in the B2C sector.
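A typical real-time fraud check scores each incoming transaction and maps the score to an action. In this sketch the logistic-regression weights, the feature names, and the review/block thresholds are all hypothetical. A production system would load a trained model and tune the thresholds against the relative cost of false alarms and missed fraud.

```python
import math
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float          # transaction amount
    is_new_device: bool    # first time this device is seen for the account
    txns_last_hour: int    # recent transaction velocity


# Hypothetical weights standing in for a trained logistic-regression model.
WEIGHTS = {"amount": 0.002, "is_new_device": 1.5, "txns_last_hour": 0.4}
BIAS = -4.0
REVIEW_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.9


def fraud_probability(t: Transaction) -> float:
    z = (BIAS + WEIGHTS["amount"] * t.amount
         + WEIGHTS["is_new_device"] * float(t.is_new_device)
         + WEIGHTS["txns_last_hour"] * t.txns_last_hour)
    return 1.0 / (1.0 + math.exp(-z))


def decide(t: Transaction) -> str:
    """Map the model's score to an action: allow, flag for manual review, or block."""
    p = fraud_probability(t)
    if p >= BLOCK_THRESHOLD:
        return "block"
    if p >= REVIEW_THRESHOLD:
        return "review"
    return "allow"


print(decide(Transaction(amount=25.0, is_new_device=False, txns_last_hour=1)))   # allow
print(decide(Transaction(amount=1500.0, is_new_device=True, txns_last_hour=6)))  # block
```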

Environmental Monitoring

Model inference finds applications in environmental data analysis, enabling accurate and timely monitoring of environmental conditions. Models trained on historical environmental data, satellite imagery, and other relevant information can predict changes in air quality, weather patterns, and other environmental parameters.

By deploying these models for real-time inference, organizations can make data-driven decisions to address environmental challenges, such as air pollution, climate change, or natural disasters. The insights obtained from model inference aid policymakers, researchers, and environmentalists in developing effective strategies for conservation and sustainable resource management.

Financial Services

In the finance sector, ML model inference plays a crucial role in enhancing credit risk assessment. Trained machine learning models analyze vast amounts of historical financial data and loan applications to predict the creditworthiness of potential borrowers accurately.

Real-time model inference allows financial institutions to swiftly evaluate credit risk and make informed lending decisions, streamlining loan approval processes and reducing the risk of default. Algorithmic trading models use real-time market data to make rapid trading decisions, capitalizing on short-lived market opportunities.

Moreover, model inference aids in determining optimal pricing strategies for financial products. By analyzing market trends, customer behavior, and competitor pricing, financial institutions can dynamically adjust their pricing to maximize profitability while remaining competitive.

Customer Relationship Management

In customer relationship management (CRM), model inference powers personalized recommendations to foster stronger customer engagement, increase customer loyalty, and drive recurring business.

By analyzing customer behavior, preferences, and purchase history, recommendation systems based on model inference can suggest products, services, or content tailored to individual users. They contribute to cross-selling and upselling opportunities, as customers are more likely to make relevant purchases based on their interests.

Moreover, customer churn prediction models help businesses identify customers at risk of leaving and implement targeted retention strategies. Sentiment analysis models analyze customer feedback to gauge satisfaction levels and identify areas for improvement.

Predictive Maintenance in Manufacturing

Model inference is a game-changer in predictive maintenance for the manufacturing industry. By analyzing real-time IoT sensor data from machinery and equipment, machine learning models can predict equipment failures before they occur. This capability allows manufacturers to schedule maintenance activities proactively, reducing downtime and preventing costly production interruptions. As a result, manufacturers can extend the lifespan of their equipment and improve productivity and overall operational efficiency. Predictive maintenance is often a key feature of enterprise asset management (EAM) software, where it supports optimized asset management, and model inference is an increasingly important part of it.

Limitations of Machine Learning Model Inference

Model inference in machine learning brings numerous benefits, but it also presents various challenges that must be addressed for successful and responsible AI deployment. In this section, we delve into the key challenges and the strategies to overcome them:

Infrastructure Cost & Resource Intensity

Model inference can be resource-intensive, particularly for complex models and large datasets. Deploying models on different hardware components, such as CPUs, GPUs, TPUs, FPGAs, or custom AI chips, poses challenges in optimizing resource allocation and achieving cost-effectiveness. High computational requirements result in increased operational costs for organizations.

To address these challenges, organizations must carefully assess their specific use case and the model's complexity. Choosing the right hardware and cloud-based solutions can optimize performance and reduce operational costs. Cloud services offer the flexibility to scale resources as needed, providing cost-efficiency and adaptability to changing workloads.

Latency & Interoperability

Real-time model inference demands low latency to provide immediate responses, especially for mission-critical applications like autonomous vehicles or healthcare emergencies. In addition, models should be designed to run on diverse environments, including end devices with limited computational resources.

To address latency concerns, efficient machine learning algorithms and their optimization are crucial. Techniques such as quantization, model compression, and pruning can reduce the model's size and computational complexity, often with little or no loss in accuracy. Furthermore, using standardized model formats like ONNX (Open Neural Network Exchange) enables interoperability across different inference engines and hardware.
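Quantization is the easiest of these techniques to show in isolation. The sketch below implements symmetric, per-tensor post-training quantization of a small weight list to 8-bit integers in plain Python. Real toolchains (for example, in PyTorch, TensorFlow Lite, or ONNX Runtime) add calibration, per-channel scales, and integer compute kernels, none of which are modeled here; the weight values are made up.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto the integers [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


weights = [0.52, -1.27, 0.003, 0.9]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Storing each weight in one byte instead of four (float32) cuts weight memory roughly fourfold, while the rounding error per weight stays within half a quantization step (`scale / 2`). Very small weights, such as 0.003 here, can round to zero, which is one source of the accuracy loss that calibration tries to limit.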

Ethical Frameworks

Model inference raises ethical implications, particularly when dealing with sensitive data or making critical decisions that impact individuals or society. Biased or discriminatory predictions can have serious consequences, leading to unequal treatment. To ensure fairness and unbiased predictions, organizations must establish ethical guidelines in the model development and deployment process.

Promoting responsible and ethical AI practices involves fairness-aware training, continuous monitoring, and auditing of model behavior to identify and address biases. Model interpretability and transparency are essential to understanding how decisions are made, particularly in critical applications like healthcare and finance.
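One concrete check used in such audits is to compare the rate of positive predictions across groups, known as the demographic parity difference. It is only one of several fairness metrics, and which one is appropriate depends on the application. The group labels and predictions below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence


def positive_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Fraction of positive (1) predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0.0 means equal rates)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups))                 # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

Tracked over time on production predictions, a growing gap is a signal to investigate the model and its data, not proof of discrimination on its own.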

Transparent Model Development

Complex machine learning models can act as "black boxes," making it challenging to interpret their decisions. However, in critical domains like healthcare and finance, interpretability is vital for building trust and ensuring accountable decision-making.

To address this challenge, organizations should document the model development process, including data sources, preprocessing steps, and model architecture. Additionally, adopting explainable AI techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can provide insights into how the model arrives at its decisions, making it easier to understand and interpret its behavior.
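SHAP is built on Shapley values from cooperative game theory. As a sketch of that underlying idea (not the `shap` library's API or its approximation algorithms), the code below computes exact Shapley values for a small model by enumerating feature subsets, filling "absent" features from a single baseline input. The baseline-replacement choice and the toy `model` are assumptions, and exact enumeration is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def shapley_values(f: Callable[[Sequence[float]], float], x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values of f at x, with absent features set to their baseline values."""
    n = len(x)

    def value(subset: Set[int]) -> float:
        # Features in `subset` take their values from x; all others come from the baseline.
        return f([x[j] if j in subset else baseline[j] for j in range(n)])

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical model with an interaction term between features 0 and 1.
def model(z: Sequence[float]) -> float:
    return 2.0 * z[0] + 1.0 * z[1] + 0.5 * z[0] * z[1] - z[2]


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phis = shapley_values(model, x, baseline)
print(phis)
# Efficiency property: the contributions sum to f(x) - f(baseline).
print(sum(phis), model(x) - model(baseline))
```

The interaction term's contribution is split equally between features 0 and 1, which is the kind of attribution that makes Shapley-based explanations easier to interpret than raw model weights.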

Robust Model Training & Testing

During model training, overfitting is a common challenge, where the model performs well on the training data but poorly on unseen data. Overfitting can result in inaccurate predictions and reduced generalization.

To address overfitting, techniques like regularization, early stopping, and dropout can be applied during model training. Data augmentation is another useful approach, i.e., introducing variations in the training data to improve the model's ability to generalize on unseen data.
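Early stopping is straightforward to express: monitor a validation metric after each epoch and stop once it has not improved for a set number of epochs (the "patience"). The loop below is framework-agnostic. The `losses` list stands in for validation losses a real training loop would compute, and the patience and `min_delta` values are arbitrary choices for illustration.

```python
from typing import Iterable, Tuple


def early_stopping(val_losses: Iterable[float], patience: int = 3,
                   min_delta: float = 0.0) -> Tuple[int, float]:
    """Return (best_epoch, best_loss), stopping once `patience` epochs pass without improvement.

    An epoch counts as an improvement only if it beats the best loss by more than `min_delta`.
    A real training loop would also checkpoint the weights at `best_epoch` and restore them.
    """
    best_epoch, best_loss = -1, float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss = epoch, loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has plateaued or is rising: a sign of overfitting
    return best_epoch, best_loss


# Hypothetical validation curve: improves, then rises as the model starts to overfit.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60, 0.65]
print(early_stopping(losses, patience=3))  # (3, 0.5)
```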

Furthermore, the accuracy of model predictions heavily depends on the quality and representativeness of the training data. Addressing biased or incomplete data is crucial to prevent discriminatory predictions and ensure fairness.

Additionally, models must be assessed for resilience against adversarial attacks and input variations. Adversarial attacks involve intentionally perturbing input data to mislead the model's predictions. Robust models should be able to withstand such attacks and maintain accuracy.
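The fast gradient sign method (FGSM) is a standard way to generate such perturbations when testing robustness: nudge each input feature by a small ε in the direction that increases the model's loss. For a logistic-regression model with binary cross-entropy loss, the gradient with respect to the input has the closed form `(p - y) * w`, which keeps this sketch dependency-free. The weights, input, label, and ε are hypothetical.

```python
import math
from typing import List, Sequence


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))


def bce_loss(p: float, y: int) -> float:
    """Binary cross-entropy for a single example with label y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, eps: float) -> List[float]:
    """FGSM: x_adv = x + eps * sign(dLoss/dx), where dLoss/dx = (p - y) * w for this model."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * math.copysign(1.0, g) if g != 0 else xi for xi, g in zip(x, grad)]


w, b = [1.5, -2.0, 0.5], 0.1
x, y = [0.8, 0.2, 0.4], 1
x_adv = fgsm(w, b, x, y, eps=0.3)
p_clean, p_adv = predict_proba(w, b, x), predict_proba(w, b, x_adv)
print(round(p_clean, 3), round(p_adv, 3))
print(bce_loss(p_clean, y) < bce_loss(p_adv, y))  # True: the perturbation increased the loss
```

In this toy case a perturbation of 0.3 per feature is enough to push the predicted probability for the true class below 0.5. Measuring how accuracy degrades as ε grows is a simple robustness check.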

Continuous Monitoring & Retraining

Models may experience a decline in performance over time due to changing data distributions. Continuous monitoring of model performance is essential to detect degradation and trigger retraining when necessary.

Continuous monitoring involves tracking model performance metrics and detecting instances of data drift. When data drift is identified, models can be retrained on the updated data to ensure their accuracy and relevance in dynamic environments.
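One simple way to quantify drift in a single numeric feature is the population stability index (PSI), which compares the binned distribution of a reference sample (for example, training data) with that of recent production data. The equal-width binning, the bin count, and the alert threshold below are assumptions; 0.2 is a commonly quoted rule of thumb, not a universal standard.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index over equal-width bins spanning the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into the edge bins
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0) for empty bins

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


reference = [i / 100 for i in range(100)]      # roughly uniform on [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]  # same size, concentrated on [0.5, 1)
print(round(psi(reference, reference), 6))  # 0.0
print(psi(reference, shifted) > 0.2)        # True: would trigger a drift alert under the 0.2 rule
```

In a monitoring job, a check like this would run per feature on a schedule, with alerts feeding the retraining decision.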

Security & Privacy Protection

Model inference raises concerns about data and model security in real-world applications. Four commonly discussed types of attacks at inference time are membership inference attacks, model extraction attacks, property inference attacks, and model inversion attacks. Hence, the model and the sensitive data it processes must be protected from unauthorized access and potential breaches.

Ensuring data security involves implementing robust authentication and encryption mechanisms. Techniques like differential privacy and federated learning can enhance privacy protection in machine learning models. Additionally, organizations must establish strong privacy measures for handling sensitive data, adhering to regulations such as GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act), and to compliance frameworks such as SOC 2.
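Federated learning keeps raw data on the clients and shares only model updates. Its core aggregation step, federated averaging (FedAvg), is a sample-size-weighted mean of the client parameters. The sketch below shows only that step, with hypothetical client updates; it omits the communication, client sampling, and secure aggregation a real deployment needs.

```python
from typing import List, Sequence, Tuple


def federated_average(client_updates: Sequence[Tuple[Sequence[float], int]]) -> List[float]:
    """Weighted mean of client parameter vectors, weighted by each client's local sample count."""
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    avg = [0.0] * dim
    for params, n in client_updates:
        for j in range(dim):
            avg[j] += params[j] * n / total
    return avg


# Hypothetical updates: (parameters, number of local training samples) from three clients.
updates = [([1.0, 2.0], 100), ([3.0, 0.0], 300), ([2.0, 2.0], 100)]
print(federated_average(updates))
```

Weighting by sample count keeps a client with little data from pulling the global model as strongly as one with a lot. On its own, federated averaging does not give a formal privacy guarantee, which is why it is often combined with differential privacy.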

Disaster Recovery

In cloud-based model inference, robust security measures and data protection are essential to prevent data loss and ensure data integrity and availability, particularly for mission-critical applications.

Disaster recovery plans should be established to handle potential system failures, data corruption, or cybersecurity threats. Regular data backups, failover mechanisms, and redundancy can mitigate the impact of unforeseen system failures.
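At the application level, a failover mechanism can be as simple as retrying a primary inference endpoint and then falling back to a secondary one, such as a replica in another region. The `primary_region` and `secondary_region` functions here are hypothetical stand-ins for real network calls. A production client would also add timeouts, backoff between retries, and health checks.

```python
import logging
from typing import Callable, Optional, Sequence

Predictor = Callable[[Sequence[float]], float]


def predict_with_failover(features: Sequence[float], endpoints: Sequence[Predictor],
                          retries_per_endpoint: int = 2) -> float:
    """Try each endpoint in order, retrying a few times before failing over to the next one."""
    last_error: Optional[Exception] = None
    for endpoint in endpoints:
        for attempt in range(1, retries_per_endpoint + 1):
            try:
                return endpoint(features)
            except Exception as err:  # in practice, catch only transient errors (timeouts, 5xx)
                last_error = err
                logging.warning("endpoint %s failed (attempt %d): %s",
                                getattr(endpoint, "__name__", endpoint), attempt, err)
    raise RuntimeError("all inference endpoints failed") from last_error


def primary_region(features: Sequence[float]) -> float:
    raise ConnectionError("primary region unavailable")  # simulated outage


def secondary_region(features: Sequence[float]) -> float:
    return sum(features) / len(features)  # stand-in for a replica serving the same model


print(predict_with_failover([0.2, 0.4, 0.6], [primary_region, secondary_region]))
```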

Popular Tools for ML Model Inference

Data scientists, ML engineers, and AI practitioners typically use programming languages like Python and R to build AI systems. Python, in particular, offers a wide range of libraries and frameworks like scikit-learn, PyTorch, Keras, and TensorFlow.

Practitioners also employ tools like Docker and Kubernetes to enable the containerization of machine learning tasks. Additionally, APIs (Application Programming Interfaces) play a crucial role in enabling seamless integration of machine learning models into applications and services.
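As an illustration of the API pattern, the sketch below exposes a model over HTTP using only Python's standard library. The `/predict` route, the `{"instances": [...]}` request shape, and the toy `model` function are assumptions chosen for the example. A real service would more likely use a web framework or a dedicated serving tool, run inside a container, and add authentication.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import List, Sequence, Tuple


def model(features: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained model."""
    return sum(features)


def handle_predict(body: bytes) -> Tuple[int, bytes]:
    """Validate a JSON request of the form {"instances": [[...], ...]} and return (status, JSON body)."""
    try:
        payload = json.loads(body)
        instances: List[List[float]] = payload["instances"]
        predictions = [model([float(v) for v in row]) for row in instances]
    except (ValueError, KeyError, TypeError) as err:
        return 400, json.dumps({"error": str(err)}).encode()
    return 200, json.dumps({"predictions": predictions}).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8000) -> None:
    """Blocks forever; then try: curl -X POST localhost:8000/predict -d '{"instances": [[1, 2]]}'"""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping the request handling in a plain function (`handle_predict`) separate from the HTTP plumbing makes the contract easy to unit-test and to port to another framework later.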

There are several popular tools and frameworks available for model inference in machine learning:

- Amazon SageMaker: Amazon SageMaker is a fully managed service that simplifies model training and deployment on the Amazon Web Services (AWS) cloud platform. It allows easy integration with popular machine learning frameworks, enabling seamless model inference at scale.

- TensorFlow Serving: TensorFlow Serving is a dedicated library for deploying TensorFlow models for inference. It supports efficient and scalable serving of machine learning models in production environments.

- Triton Inference Server: Triton Inference Server, developed by NVIDIA, is an open-source inference server that can serve models from multiple frameworks (such as TensorRT, TensorFlow, PyTorch, and ONNX) on both GPUs and CPUs.
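To give a feel for how clients talk to the last two tools, the sketch below builds (but does not send) HTTP requests in the JSON formats documented for TensorFlow Serving's REST predict API and Triton's KServe v2 inference protocol. It assumes the default ports (8501 for TensorFlow Serving REST, 8000 for Triton HTTP); the model name `my_model` and the input tensor name `INPUT0` are hypothetical and must match the deployed model. SageMaker endpoints are usually called through the AWS SDK instead and are not shown.

```python
import json
import urllib.request
from typing import List, Sequence


def tf_serving_request(host: str, model_name: str, instances: Sequence[Sequence[float]],
                       port: int = 8501) -> urllib.request.Request:
    """Build a TensorFlow Serving REST predict request using the row-oriented "instances" format."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": [list(row) for row in instances]}).encode()
    return urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"}, method="POST")


def triton_request(host: str, model_name: str, input_name: str, batch: Sequence[Sequence[float]],
                   port: int = 8000) -> urllib.request.Request:
    """Build a Triton (KServe v2 protocol) inference request for a single FP32 input tensor."""
    flat: List[float] = [float(v) for row in batch for v in row]
    payload = {"inputs": [{"name": input_name, "shape": [len(batch), len(batch[0])],
                           "datatype": "FP32", "data": flat}]}
    url = f"http://{host}:{port}/v2/models/{model_name}/infer"
    return urllib.request.Request(url, data=json.dumps(payload).encode(),
                                  headers={"Content-Type": "application/json"}, method="POST")


req = tf_serving_request("localhost", "my_model", [[1.0, 2.0, 5.0]])
print(req.full_url)  # http://localhost:8501/v1/models/my_model:predict
# Against a running server: json.load(urllib.request.urlopen(req))["predictions"]
```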

Model Inference in Machine Learning: Key Takeaways

Model inference is a pivotal stage in the machine learning lifecycle. This process ensures that the trained models can be efficiently utilized to process real-time data and generate predictions.

Real-time model inference empowers critical applications that demand instant decision-making, such as autonomous vehicles, fraud detection, and healthcare emergencies. It offers a wide array of benefits, revolutionizing decision-making, streamlining operations, and enhancing user experiences across various industries.

While model inference brings numerous benefits, it also presents challenges that must be addressed for responsible AI deployment. These challenges include high infrastructure costs, ensuring low latency and interoperability, ethical considerations to avoid biased predictions, and model transparency for trust and accountability.

Organizations must prioritize ethical AI frameworks, robust disaster recovery plans, continuous monitoring, model retraining, and staying vigilant against inference-level attacks to ensure model accuracy, fairness, and resilience in real-world applications.

The future lies in creating a harmonious collaboration between AI and human ingenuity, fostering a more sustainable and innovative world where responsible AI practices unlock the full potential of machine learning inference.

Model inference in machine learning refers to the process of utilizing a trained machine learning model to make predictions or decisions on new, unseen data. It involves passing new data through the trained model to generate outputs, such as classification labels, regression values, or probability distributions, based on the model's learned parameters.

Model training is the initial phase of building a machine learning model. During training, the model learns from a labeled dataset, adjusting its internal parameters to minimize prediction errors. Model inference, on the other hand, occurs after training when the model is deployed and put into practical use to make predictions on new, unseen data.

The techniques of model inference involve deploying the trained model on an ML inference server or cloud environment to process real-time data and generate predictions. Techniques like quantization, model compression, and hardware optimization can be used to optimize the model for efficient inference. Additionally, model interpretability techniques, such as LIME and SHAP, provide insights into the model's decision-making process.

Model prediction refers to the output generated by a trained machine learning model when it processes new, unseen data. The model predicts the target variable's value based on its learned parameters and the input data.

Model inference encompasses the entire process of deploying a trained model to make predictions on new data, including data preprocessing, passing the data through the model, and generating predictions. Model prediction, on the other hand, specifically refers to the output produced by the model when it processes the new data.

An example of inference in machine learning is using a trained image classification model to identify the content of an image. When a new image is fed into the model, the model processes the image's features and predicts the class label, such as identifying whether the image contains a cat, dog, or bird.

To perform inference in machine learning, you need a trained model and new, unseen data. The steps involved include pre-processing the data to match the model's input format, passing the pre-processed data through the trained model, and obtaining the model's predictions as the final output. Inference can be done on an ML inference server, cloud platform, or locally on compatible hardware.
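The three steps can be sketched as a small pipeline. The normalization statistics, the field names `age` and `visits`, the two-class label set, and the logistic `forward` function are hypothetical placeholders. The point is that preprocessing at inference time must reuse exactly the transformations fitted on the training data (here, the saved mean and standard deviation) rather than recomputing them on incoming data.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical statistics saved from the training set; inference reuses them, never recomputes them.
TRAIN_MEAN = [50.0, 3.0]
TRAIN_STD = [10.0, 1.5]
WEIGHTS = [0.8, -1.2]
BIAS = 0.1
LABELS = ["negative", "positive"]


def preprocess(raw: Dict[str, float]) -> List[float]:
    """Step 1: order and scale raw fields to match the model's expected input format."""
    features = [raw["age"], raw["visits"]]
    return [(x - m) / s for x, m, s in zip(features, TRAIN_MEAN, TRAIN_STD)]


def forward(x: Sequence[float]) -> float:
    """Step 2: run the (toy, logistic) model to get P(positive)."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def postprocess(p: float, threshold: float = 0.5) -> Dict[str, object]:
    """Step 3: turn the probability into a label the application can act on."""
    return {"label": LABELS[int(p >= threshold)], "probability": round(p, 4)}


def infer(raw: Dict[str, float]) -> Dict[str, object]:
    return postprocess(forward(preprocess(raw)))


print(infer({"age": 65.0, "visits": 2.0}))
```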

Encord focuses on enabling rapid iteration and deployment by facilitating the annotation of diverse data. The platform allows users to efficiently manage and annotate large datasets, ensuring that models receive varied inputs, which is essential for improving performance in dynamic environments.

Encord streamlines the process of preparing datasets for machine learning model training by providing advanced annotation tools and ensuring data accuracy. The platform allows for quick iterations and adjustments, helping teams to efficiently refine their models with high-quality labeled data.

No, Encord does not offer a training environment for machine learning models. Instead, it focuses on the annotation of raw data and the design of annotation workflows. Once data is annotated, users can export it to their preferred training environments to develop and train their models.

Encord enables the productionization of machine learning models by facilitating quick deployment and management of these models across edge devices. Integration with platforms like AWS Greengrass allows for efficient updates and scalability, which is crucial for real-time applications.

Encord is designed to optimize the deployment process of machine learning models, even on constrained automotive hardware. The platform offers tools that help streamline model integration, ensuring that performance is maximized while accommodating the limitations of the hardware.

Encord emphasizes in-house training and evaluation to maintain control over the quality and effectiveness of its models. This approach allows for tailored regression testing and direct addressing of specific issues, ensuring models are optimized for real-world applications.

Yes, Encord facilitates the entire pipeline from data collection to proof of concept development. Users can leverage the platform to create, test, and validate machine learning models, ensuring they meet the specific requirements of automotive safety protocols like NCAP.

Encord utilizes internal tools to monitor and analyze data for edge cases, employing techniques such as embeddings to uncover potential discrepancies. This approach ensures that AI models are trained on comprehensive datasets that reflect the diversity of the population.

Encord plays a crucial role in building high-quality datasets that are essential for testing and deploying machine learning models. The platform aids in the transition to new model architectures, ensuring that the data used is both explainable and modular.

Encord primarily focuses on supporting other companies in training their models by providing data preparation and evaluation services. We partner with solutions like SageMaker and Vertex for the actual model training.