Contents

What is ML Monitoring?

What is ML Observability?

ML Monitoring vs. ML Observability: Overlapping Elements

ML Monitoring vs. ML Observability: Key Differences

Encord Active: Empowering Robust ML Development With Monitoring & Observability

ML Observability vs. ML Monitoring: Key Takeaways

Encord Blog

ML Monitoring vs. ML Observability

Picture this: you've developed an ML system that excels in domain X, performing task Y. It's all smooth sailing as you launch it into production, confident in its abilities. But suddenly, customer complaints start pouring in, signaling your once-stellar model is now off its game. Not only does this shake your company's reputation, but it also demands quick fixes. The catch? Sorting out these issues at the production level is a major headache. What could've saved the day? Setting up solid monitoring and observability right from the get-go to catch anomalies and outliers before they spiral out of control.

Fast forward to today, AI and ML are everywhere, revolutionizing industries by extracting valuable insights, optimizing operations, and guiding data-driven decisions. However, these advancements necessitate a proactive approach to ensure timely anomaly and outlier detection for building reliable, efficient, and transparent models. That's where ML monitoring and observability come to the rescue, playing vital roles in developing trustworthy AI systems.

In this article, we'll uncover the crucial roles of ML monitoring and ML observability in crafting dependable AI-powered systems. We'll explore their key distinctions and how they work together to ensure reliability in AI deployments.

What is ML Monitoring?

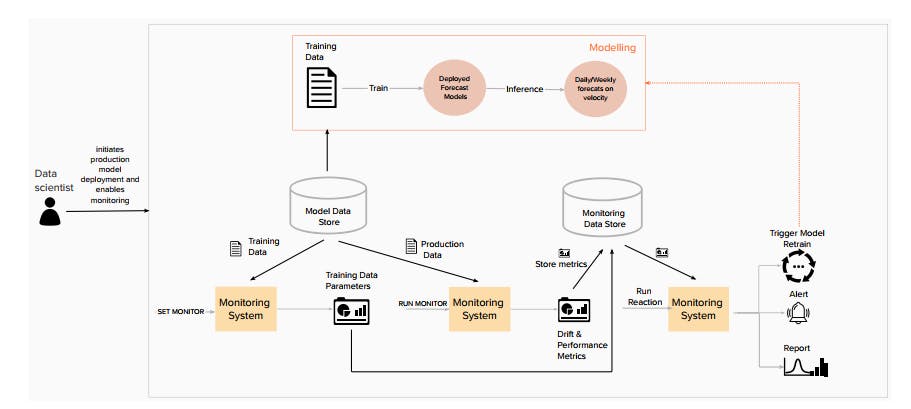

Machine learning monitoring refers to the continuous and systematic process of tracking a machine learning model’s performance, behavior, and health from development to production. It encompasses collecting, analyzing, and interpreting various metrics, logs, and data generated by ML systems to ensure optimal functionality and prompt detection of potential issues or anomalies.

ML monitoring detects and tracks:

- Metrics like accuracy, precision, recall, F1 score, etc.

- Changes in input data distributions, known as data drift

- Instances when a model's performance declines or degrades

- Anomalies and outliers in model behavior or input data

- Model latency

- Utilization of computational resources, memory, and other system resources

- Data quality problems in input datasets, such as missing values or incorrect labels, that can negatively impact model performance

- Bias and fairness of models

- Model versions

- Breaches and unauthorized access attempts
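To make the first item in the list above concrete, here is a minimal sketch of how a monitoring job might compute accuracy, precision, recall, and F1 score from a batch of labeled predictions. The function name `classification_metrics` and the sample labels are illustrative assumptions, not part of any specific tool.

```python
from collections import Counter


def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary task."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            counts["tp"] += 1
        elif pred == positive:
            counts["fp"] += 1
        elif truth == positive:
            counts["fn"] += 1
        else:
            counts["tn"] += 1

    tp, fp, fn, tn = (counts[k] for k in ("tp", "fp", "fn", "tn"))
    total = tp + fp + fn + tn
    # Guard every ratio against an empty denominator.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical batch of ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In practice these values would be logged per time window so that trends, rather than single numbers, drive decisions.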

Objectives

ML monitoring involves the continuous observation, analysis, and management of various aspects of ML systems to ensure they are functioning as intended and delivering accurate outcomes. It primarily focuses on the following:

- Model Performance Tracking: Helps machine learning practitioners and stakeholders understand how well a model fares with new data and whether the predictive accuracy aligns with the intended goals.

- Early Anomaly Detection: Involves continuously analyzing model behavior to promptly detect any deviations from expected performance. This early warning system helps identify potential issues, such as model degradation, data drift, or outliers, which, if left unchecked, could lead to significant business and operational consequences.

- Root-Cause Analysis: Identifies the fundamental reason behind issues in the model or ML pipeline, so that data scientists and ML engineers can debug and resolve them more efficiently.

- Diagnosis: Examines the identified issues to understand their nature and intricacies, which assists in devising targeted solutions for smoother debugging and resolution.

- Model Governance: Establishes guidelines and protocols to oversee model development, deployment, and maintenance. These guidelines ensure ML models are aligned with organizational standards and objectives.

- Compliance: Entails adhering to legal and ethical regulations. ML monitoring ensures that the deployed models operate within defined boundaries and uphold the necessary ethical and legal standards.
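As an illustration of the early anomaly detection objective above, the following sketch flags a daily metric that deviates strongly from its recent history using a simple z-score. This is one possible technique among many; the function name `is_anomalous`, the threshold of 3 standard deviations, and the accuracy values are all assumptions made for the example.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it lies more than `z_threshold` standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily accuracy values from the past week.
daily_accuracy = [0.91, 0.92, 0.90, 0.91, 0.93, 0.92, 0.91]

normal_day = is_anomalous(daily_accuracy, 0.90)  # within the usual range
bad_day = is_anomalous(daily_accuracy, 0.78)     # far below the usual range
print(normal_day, bad_day)
```

A z-score check is easy to reason about but assumes the metric is roughly stable; seasonal metrics usually need a more careful baseline.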

Importance of Monitoring in Machine Learning

ML monitoring is significant for several reasons, including:

- Proactive Anomaly Resolution: Early detection of anomalies through ML monitoring enables data science teams to take timely actions and address issues before they escalate. This proactive approach helps prevent significant business disruptions and customer dissatisfaction, especially in mission-critical industries.

- Data Drift Detection: ML monitoring helps identify data drift, where the input data distribution shifts over time. If a drift is detected, developers can take prompt action to update and recalibrate the model, ensuring its accuracy and relevance to the changing data patterns.

- Continuous Improvement: ML monitoring ensures iterative model improvement by providing feedback on model behavior. This feedback loop supports refining ML algorithms and strategies, leading to enhanced model performance over time.

- Risk Mitigation: ML monitoring helps mitigate risks associated with incorrect predictions or erroneous decisions, which is especially important in industries such as healthcare and finance, where model accuracy is critical.

- Performance Validation: Monitoring provides valuable insights into how models perform in production environments, helping ensure that they continue to deliver reliable results in real-world applications. Techniques such as cross-validation and A/B testing complement this by assessing model generalization and competence in dynamic settings.
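The data drift detection point above can be sketched with the Population Stability Index (PSI), one common way to compare a feature's production distribution against a reference sample. This is a minimal illustration, not the method of any particular tool: the function name, the choice of ten equal-width bins, and the synthetic data are assumptions.

```python
import math


def population_stability_index(reference, current, n_bins=10):
    """Compare two samples of one numeric feature using PSI.

    Bin edges are derived from the reference sample; values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant data

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


# Synthetic feature values: training-time sample vs. two production samples.
reference = [i / 100 for i in range(100)]
unchanged = list(reference)
shifted = [0.3 + i / 100 for i in range(100)]

psi_same = population_stability_index(reference, unchanged)
psi_shift = population_stability_index(reference, shifted)
print(psi_same, psi_shift)
```

Identical samples give a PSI of zero, and the value grows as the distributions diverge. Alert thresholds are usually chosen empirically; values around 0.1 to 0.25 are commonly quoted rules of thumb rather than a standard.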

What is ML Observability?

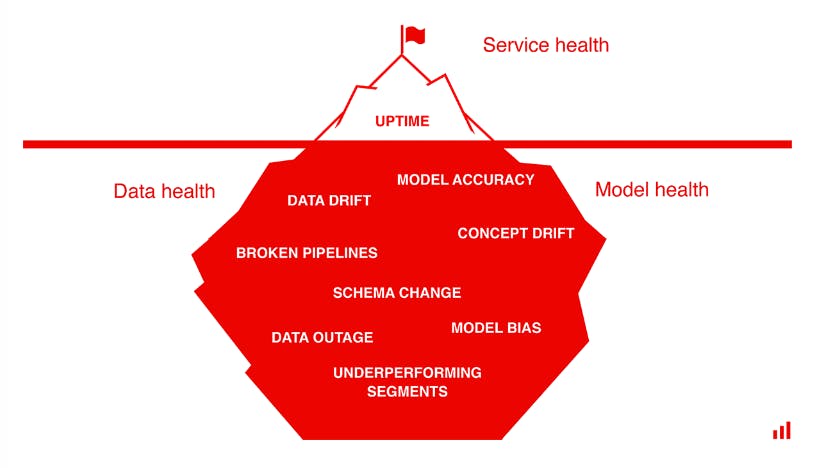

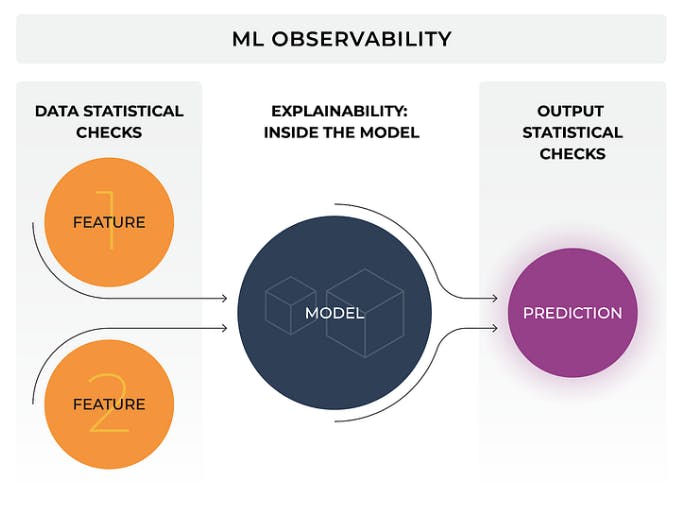

Machine learning observability is an important practice that provides insights into the inner workings of ML data pipelines and system well-being. It involves understanding decision-making, data flow, and interactions within the ML pipeline. As ML systems become more complex, so does observability due to multiple interacting components such as data pipelines, model notebooks, cloud setups, containers, distributed systems, and microservices.

ML observability provides visibility into:

- Model behavior during training, inference, and decision-making processes

- Data flow through the ML pipeline, including preprocessing steps and data transformations

- Feature importance and their contributions to model predictions

- Model profiling

- Model performance metrics, such as accuracy, precision, recall, and F1 score

- Utilization of computational resources, memory, and processing power by ML models

- Bias in ML models

- Anomalies and outliers in model behavior or data

- Model drift, data drift, and concept drift, which occur when model behavior, input data, or the relationship between inputs and targets changes over time

- Overall performance of the ML system, including response times, latency, and throughput

- Model error analysis

- Model explainability and interpretability

- Model versions and their performance using production data
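To illustrate the feature importance item in the list above, here is a small sketch of permutation importance: shuffle one feature at a time and measure how much accuracy drops. The toy model, which deliberately ignores its second feature, and the random data are hypothetical and exist only to make the example self-contained.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only feature j replaced.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model that only looks at feature 0."""
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
print(importances)
```

Shuffling feature 0 hurts accuracy substantially, while shuffling feature 1 changes nothing, which matches how the toy model was written. On a real model the same comparison hints at which inputs the predictions actually depend on.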

Objectives

The primary objectives of ML observability are:

- Transparency and Understandability: ML observability aims to provide transparency into the black-box nature of ML models. By gaining a deeper understanding of model behavior, data scientists can interpret model decisions and build trust in the model's predictions.

- Root Cause Analysis: ML observability enables thorough root cause analysis when issues arise in the ML pipeline. By tracing back the sequence of events and system interactions, ML engineers can pinpoint the root causes of problems and facilitate effective troubleshooting.

- Data Quality Assessment: ML observability seeks to monitor data inputs and transformations to identify and rectify data quality issues that may adversely affect model performance.

- Performance Optimization: With a holistic view of the system's internal dynamics, ML observability aims to facilitate the optimization of model performance and resource allocation to achieve better results.

Importance of Observability in Machine Learning

ML observability plays a pivotal role in AI and ML, offering crucial benefits such as:

- Continuous Improvement: ML observability offers insights into model behavior to help refine algorithms, update models, and continuously enhance their predictive capabilities.

- Proactive Problem Detection: ML observability continuously observes model behavior and system performance to address potential problems before they escalate.

- Real-time Decision Support: ML observability offers real-time insights into model performance, enabling data-driven decision-making in dynamic and rapidly changing environments.

- Building Trust in AI Systems: ML observability fosters trust in AI systems. Understanding how models arrive at decisions provides confidence in the reliability and ethics of AI-driven outcomes.

- Compliance and Accountability: In regulated industries, ML observability helps maintain compliance with ethical and legal standards. Understanding model decisions and data usage helps ensure models remain accountable and within regulatory bounds.

ML Monitoring vs. ML Observability: Overlapping Elements

Both monitoring and observability are integral components of MLOps that work in tandem to ensure the seamless functioning and optimization of ML models. Although they have distinct purposes, there are essential overlaps where their functions converge, boosting the overall effectiveness of the ML ecosystem.

Some of their similar elements include:

Anomaly Detection

Anomaly detection is a shared objective in both ML monitoring and observability. Monitoring systems and observability tools are designed to identify deviations in model behavior and performance that may indicate potential issues or anomalies.

Data Quality Control

Ensuring data quality is essential for robust ML operations, and both monitoring and observability contribute to this aspect. ML monitoring systems continuously assess the quality and integrity of input data, monitoring for data drift or changes in data distribution that could impact model performance. Similarly, observability tools enable data scientists to gain insights into the characteristics of input data and assess its suitability for training and inference.

Real-time Alerts

Real-time alerts are a shared feature of both ML monitoring and observability. When critical issues or anomalies are detected, these systems promptly trigger alerts, notifying relevant stakeholders for immediate action to minimize outages.
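A threshold-based alert of the kind described above might look like the following sketch. The `AlertRule` class, the metric names, and the threshold values are hypothetical; a real setup would route the resulting messages to a paging or chat system rather than printing them.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str
    threshold: float
    direction: str  # "below" or "above"

    def is_breached(self, value):
        if self.direction == "below":
            return value < self.threshold
        return value > self.threshold


def evaluate_rules(rules, snapshot):
    """Return one message per rule whose metric breaches its threshold."""
    alerts = []
    for rule in rules:
        value = snapshot.get(rule.metric)
        if value is not None and rule.is_breached(value):
            alerts.append(
                f"{rule.metric}={value} is {rule.direction} threshold {rule.threshold}"
            )
    return alerts


# Hypothetical rules and a snapshot of the latest metric values.
rules = [
    AlertRule("accuracy", 0.85, "below"),
    AlertRule("p95_latency_ms", 300, "above"),
]
snapshot = {"accuracy": 0.81, "p95_latency_ms": 120}

alerts = evaluate_rules(rules, snapshot)
for message in alerts:
    print(message)
```

Here only the accuracy rule fires, since latency stays under its limit.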

Continuous ML Improvement

ML monitoring and observability foster a culture of ongoing improvement in machine learning. Monitoring identifies issues like performance drops, prompting iterative model refinement. Observability offers insights into system behavior, aiding data-driven optimization and enhanced decisions.

Model Performance Assessment

Evaluating model performance is a fundamental aspect shared by both monitoring and observability. Monitoring systems track model metrics over time, allowing practitioners to assess performance trends and benchmark against predefined thresholds. Observability complements this by offering a comprehensive view of the ML pipeline, aiding in the identification of potential bottlenecks or areas of improvement that may affect overall model performance.

ML Monitoring vs. ML Observability: Key Differences

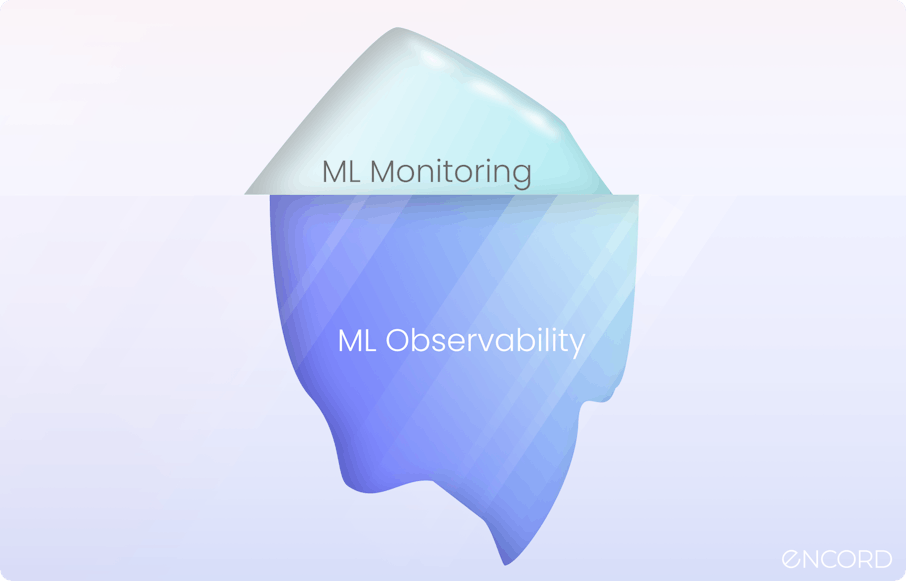

ML Monitoring vs. ML Observability

While ML monitoring and ML observability share common goals in ensuring effective machine learning operationalization, they differ significantly in their approaches, objectives, and the scope of insights they provide.

Area of Focus

The primary distinction lies in their focus. ML monitoring primarily answers "what" is happening within the ML system, tracking key metrics and indicators to identify deviations and issues.

On the other hand, ML observability delves into the "why" and "how" aspects, providing in-depth insights into the internal workings of the ML pipeline. Its goal is to provide a deeper understanding of the model's behavior and decision-making process.

Objectives

ML monitoring's main objective is to track model performance, identify problem areas, and ensure operational stability. It aims to validate that the model is functioning as expected and provides real-time alerts to address immediate concerns.

In contrast, ML observability primarily aims to offer holistic insights into the ML system's health. This involves identifying systemic issues and data quality problems, and shedding light on the broader implications of model decisions.

Approach

ML monitoring is a failure-centric practice, designed to detect and mitigate failures in the ML model. It concentrates on specific critical issues that could lead to incorrect predictions or system downtime.

In contrast, ML observability pursues a system-centric approach, analyzing the overall system health, including data flows, dependencies, and external factors that shape the system's behavior and performance.

Perspective

ML monitoring typically offers an external, high-level view of the ML model, focusing on metrics and performance indicators visible from the outside.

ML observability, on the other hand, offers a holistic view of the ML system inside and out. It provides insights into internal states, algorithmic behavior, and the interactions between various components, leading to an in-depth awareness of the system's dynamics.

Performance Analytics

ML monitoring relies on historical metrics data to analyze model performance and identify trends over time.

ML observability, in contrast, emphasizes real-time analysis, allowing data scientists and engineers to explore the model's behavior in the moment, thereby facilitating quicker and more responsive decision-making.

Use Case

ML monitoring is particularly valuable in scenarios where immediate detection of critical issues is essential, such as in high-stakes applications like healthcare and finance.

ML observability, on the other hand, shines in complex, large-scale ML systems where understanding the intricate interactions between various components and identifying systemic issues are crucial.

To exemplify this difference, consider a medical AI company analyzing chest X-rays. Monitoring might signal that a performance metric has declined over the past month. ML observability, meanwhile, can reveal that a new hospital joined the system, introducing different image sources and affecting features. This underscores the significance of systemic insights in intricate, large-scale ML systems.
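The chest X-ray scenario can be sketched in a few lines: an overall metric shows that something is wrong, while slicing the same predictions by source shows where. The records below are synthetic, and the field names `hospital`, `label`, and `prediction` are assumptions chosen for illustration.

```python
from collections import defaultdict


def accuracy_by_segment(records, segment_key):
    """Group prediction records by a metadata field and score each group."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [correct, total]
    for rec in records:
        bucket = totals[rec[segment_key]]
        bucket[0] += int(rec["prediction"] == rec["label"])
        bucket[1] += 1
    return {seg: correct / total for seg, (correct, total) in totals.items()}


# Synthetic prediction log: hospital "B" is the newly onboarded source.
records = (
    [{"hospital": "A", "label": 1, "prediction": 1}] * 9
    + [{"hospital": "A", "label": 0, "prediction": 1}] * 1
    + [{"hospital": "B", "label": 1, "prediction": 1}] * 5
    + [{"hospital": "B", "label": 1, "prediction": 0}] * 5
)

# Monitoring view: one aggregate number.
overall = sum(r["prediction"] == r["label"] for r in records) / len(records)

# Observability view: the same data broken down by source.
per_hospital = accuracy_by_segment(records, "hospital")
print(overall, per_hospital)
```

The aggregate accuracy hides the fact that one source performs well and the other does not; the per-hospital breakdown points the investigation at the new data source.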

Encord Active: Empowering Robust ML Development With Monitoring & Observability

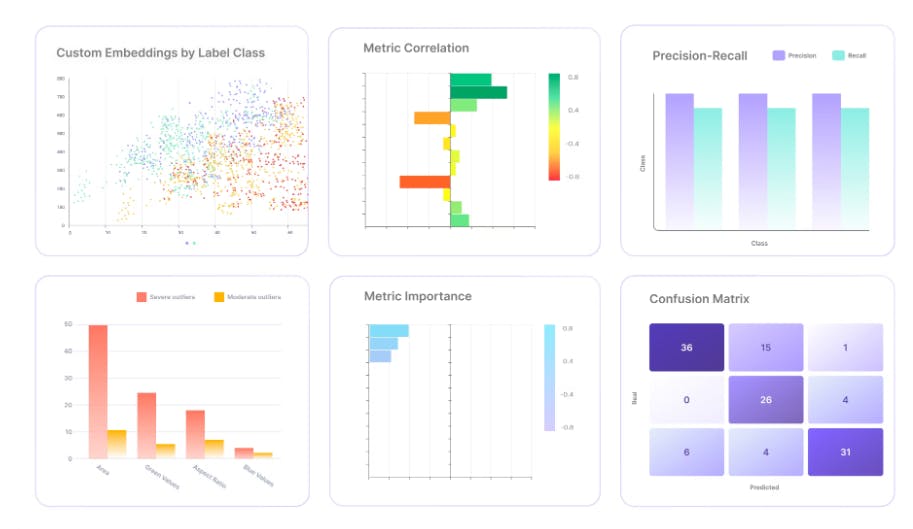

Encord Active is an open-source ML platform that is built to revolutionize the process of building robust ML models. With its comprehensive suite of end-to-end monitoring and observability features, Encord Active equips practitioners with the essential tools to elevate their ML development journey.

Prominent features include intuitive dashboards for performance assessment, which facilitate the monitoring of performance metrics and the visualization of feature distributions. Encord Active also offers automated robustness tests, bias detection to support ethical outcomes, and comprehensive evaluations for comparing models effectively. Additionally, auto-identification of labeling errors helps ensure reliable results.

ML Observability vs. ML Monitoring: Key Takeaways

- Effective ML monitoring and ML observability are crucial for developing and deploying successful machine learning models.

- ML monitoring components, such as real-time alerts and metrics collection, ensure continuous tracking of model performance and prompt issue identification.

- ML observability components, such as root cause analysis and model fairness assessment, provide detailed insights into the ML system's behavior and enable proactive improvements for long-term system reliability.

- The combination of ML monitoring and ML observability enables proactive issue detection and continuous improvement, leading to optimized ML systems.

- Together, ML monitoring and ML observability play a pivotal role in achieving system health, mitigating bias, and supporting real-time decision-making.

- Organizations can rely on both practices to build robust and trustworthy AI-driven solutions and drive innovation.

- Monitoring focuses on real-time tracking of specific metrics and immediate issue detection, while observability provides a more holistic understanding of the ML system's internal dynamics and interactions.

- Some aspects can be monitored without being observable in this sense: measurable metrics such as CPU usage or memory consumption are easy to track, but on their own they reveal little about the behavior and decision-making processes within an ML model.

- Conversely, model explainability, data quality trends, feature drift, and model robustness analysis are observable aspects of ML systems, but monitoring them in real time can be challenging because they are complex and resource-intensive.

- Neither practice replaces the other: model monitoring and observability are both essential and complement each other. Monitoring provides real-time insights and immediate issue detection, while observability offers a deeper understanding and proactive problem-solving capabilities.

- Incorporating monitoring and observability in the ML deployment strategy ensures the reliability and transparency of ML models and enhanced performance of the overall ML system.