
Encord Blog

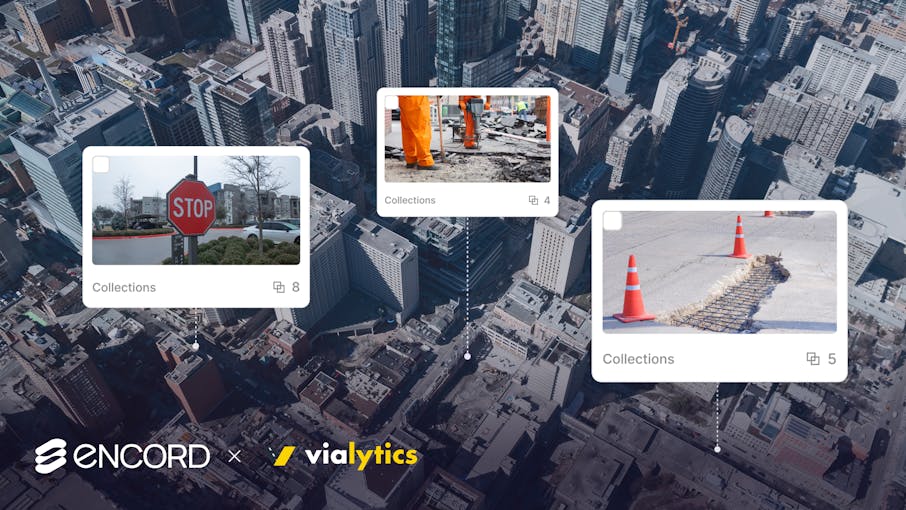

How vialytics transformed inconsistent road images into AI-powered Smart Cities


Real-world image data is inconsistent, messy, and often unpredictable; it is rarely as clean as the curated benchmarks researchers train on. For vialytics, building Smart City solutions with AI meant turning chaotic road imagery, captured at varying speeds and under changing weather and lighting, into production-grade computer vision models capable of precise, reliable outputs.

As vialytics scaled its operations to cover hundreds of cities, the team faced a unique challenge: how to manage, annotate, and process vast amounts of real-world data that didn’t resemble “perfect” inputs.

This is the story of how they scaled an AI data stack that absorbs that noise and still delivers consistent, robust computer vision performance for city infrastructure.

If you'd rather hear this directly from vialytics' Director of Data Science, you can watch his presentation here.

The challenges of real-world road images

vialytics’ AI-powered platform helps cities manage and repair infrastructure by using smartphone cameras mounted in municipal vehicles to capture imagery. These images are then analyzed to detect road defects, inventory traffic signs, and monitor lane markings. The core issue? The images aren’t taken in controlled environments; they come from real-world driving conditions, often with issues like:

- Poor lighting, extreme sun and shadow transitions

- Motion blur from fast driving

- Reflections from the vehicle’s bonnet

- Weather-related issues such as rain on windows

The inconsistency in image data posed significant challenges. Even the most sophisticated models can struggle with real-world noise. But vialytics knew that scaling AI systems for smart cities meant creating reliable outputs from imperfect inputs.

Starting with limited resources

When vialytics first began its journey, the team relied on GPU-powered laptops running self-hosted annotation tools, such as CVAT, and storing labeled data in folders on local drives. This setup worked at first, but quickly became unsustainable as the dataset grew.

A few key limitations held them back:

- Slow backend processes when adding new features or label classes.

- Crashes and downtime in self-hosted annotation tools like CVAT, which resulted in lost annotation work.

- Limited data management: large datasets stored as zip files made it difficult to inspect, query, or understand what was inside.

The team realized that as their data and annotation efforts expanded, they would need a more scalable, efficient system to handle and process real-world images consistently.

A shift to a unified data platform

The turning point came when vialytics adopted Encord to consolidate their data management and annotation workflow. With Encord, vialytics could manage, curate, and annotate images all in one place, significantly reducing friction in their data pipeline. Here’s how it helped them scale their data stack:

Increased data accessibility and visibility

By centralizing their data, vialytics moved away from unwieldy zip files and fragmented file storage. Encord allowed them to query, inspect, and manage large datasets efficiently, creating a streamlined way to handle everything from indexing to annotation. The ability to track and update data in real time meant that the team could rapidly iterate on their models without losing track of the raw data.
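To make "query and inspect" concrete, here is a minimal sketch of the kind of metadata index that replaces opaque zip archives. It is plain Python and not Encord's API; the `ImageRecord` fields (`city`, `weather`, `labels`), the helper names, and the toy records are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ImageRecord:
    """Hypothetical metadata for one captured road image."""
    image_id: str
    city: str
    weather: str  # e.g. "sun", "rain", "overcast"
    labels: list[str] = field(default_factory=list)


def query(records, **filters):
    """Return records whose attributes equal every given filter value."""
    return [
        r for r in records
        if all(getattr(r, key) == value for key, value in filters.items())
    ]


def label_counts(records):
    """Count annotations per class across a set of records."""
    return Counter(label for r in records for label in r.labels)


# Toy records for illustration only.
records = [
    ImageRecord("img-001", "Munich", "rain", ["pothole", "crack"]),
    ImageRecord("img-002", "Munich", "sun", ["crack"]),
    ImageRecord("img-003", "Boston", "rain", ["pothole"]),
]
rainy_munich = query(records, city="Munich", weather="rain")
print([r.image_id for r in rainy_munich])  # ['img-001']
print(label_counts(records))  # Counter({'pothole': 2, 'crack': 2})
```

Once images are described by queryable metadata, questions like "how many rainy-day potholes do we have?" become one-line filters instead of unpacking archives.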

Automated review and error correction

With an AI-driven platform, vialytics could ensure higher data quality through automated error detection. Disagreement mining, in which model predictions are compared against the labeled data to surface areas of high uncertainty or conflict, was key to finding mislabeled or problematic data points. These high-priority errors were then flagged for manual review, so problematic annotations were addressed quickly.
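Disagreement mining can be sketched for a simple classification setting: score each labeled sample by how much probability the model puts on classes other than the human label, then send the highest-scoring samples to review. The scoring rule, the `threshold` value, and the function names are assumptions for illustration, not vialytics' or Encord's implementation; for detection tasks the same idea would compare predicted and annotated boxes instead.

```python
def disagreement_score(probs: dict[str, float], label: str) -> float:
    """Probability mass the model puts on classes other than the human label."""
    return 1.0 - probs.get(label, 0.0)


def mine_disagreements(samples, threshold=0.5, top_k=None):
    """Rank labeled samples by model/label disagreement for manual review.

    samples: iterable of (sample_id, human_label, model_probs) tuples,
    where model_probs maps class name -> predicted probability.
    Returns (score, sample_id, human_label, model_top_class), highest score first.
    """
    flagged = []
    for sample_id, label, probs in samples:
        score = disagreement_score(probs, label)
        if score >= threshold:
            predicted = max(probs, key=probs.get)
            flagged.append((score, sample_id, label, predicted))
    flagged.sort(key=lambda item: item[0], reverse=True)
    return flagged if top_k is None else flagged[:top_k]


samples = [
    ("img-1", "pothole", {"pothole": 0.9, "crack": 0.1}),
    ("img-2", "crack", {"pothole": 0.8, "crack": 0.2}),  # likely mislabeled
    ("img-3", "crack", {"pothole": 0.4, "crack": 0.6}),
]
for score, sample_id, label, predicted in mine_disagreements(samples):
    print(sample_id, label, "->", predicted, round(score, 2))
# img-2 crack -> pothole 0.8
```

Lowering `threshold` widens the review queue; `top_k` caps it to what reviewers can realistically handle.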

Flexible annotation workflows

As the team grew and the data volume increased, maintaining consistent and accurate annotations across hundreds of thousands of images became a significant challenge.

Encord’s customizable workflows allowed vialytics to implement a two-stage review process, where data scientists and QA reviewers could work together on edge cases. The process was iterative, allowing annotation guidelines to evolve as new challenges emerged from the data itself.
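A two-stage review process like the one described can be modeled as a small state machine. This is a hypothetical sketch, not Encord's workflow configuration: the stage names and the rule that QA escalates edge cases to a data scientist are assumptions made for illustration.

```python
from enum import Enum, auto


class Stage(Enum):
    ANNOTATE = auto()
    QA_REVIEW = auto()
    DATA_SCIENCE_REVIEW = auto()  # second stage, reserved for edge cases
    DONE = auto()


def next_stage(stage: Stage, approved: bool = True, edge_case: bool = False) -> Stage:
    """Advance one task through a hypothetical two-stage review workflow.

    `approved` is the reviewer's verdict (ignored in the ANNOTATE stage);
    `edge_case` marks tasks that QA escalates to a data scientist.
    """
    if stage is Stage.ANNOTATE:
        return Stage.QA_REVIEW
    if stage is Stage.QA_REVIEW:
        if not approved:
            return Stage.ANNOTATE  # rejected: back to the annotator
        return Stage.DATA_SCIENCE_REVIEW if edge_case else Stage.DONE
    if stage is Stage.DATA_SCIENCE_REVIEW:
        return Stage.DONE if approved else Stage.ANNOTATE
    raise ValueError(f"task is already finished: {stage}")


# An ambiguous pothole-vs-crack case: QA approves it but escalates it.
stage = next_stage(Stage.ANNOTATE)                        # QA_REVIEW
stage = next_stage(stage, approved=True, edge_case=True)  # DATA_SCIENCE_REVIEW
stage = next_stage(stage, approved=True)                  # DONE
print(stage)  # Stage.DONE
```

Routing only escalated tasks to the second stage keeps data scientists focused on the ambiguous cases that tend to drive guideline changes.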

Handling the variability of real-world data

A core challenge of scaling AI in smart cities is dealing with the variability of real-world data. Every city has different infrastructure, road conditions, and even types of vehicles. vialytics found that it wasn't enough to simply scale their system; they had to ensure the model could handle a wide range of real-world scenarios. Here’s how they achieved that:

Managing different types of edge cases

vialytics’ platform needed to work with imagery that varied wildly in quality, captured under bright sunlight, cloudy skies, shadows, and reflections that made road conditions harder to detect. For example, glare from a car’s windshield or a truck passing at high speed could obscure road markings, making it difficult for models to identify road damage accurately.

To address this, vialytics designed a system that focused on continuously mining difficult-to-annotate examples from their dataset and retraining their models with these hard cases. This approach helped the system become more reliable and resilient to unpredictable data.
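The hard-case loop can be sketched as follows, assuming per-sample losses from the current model are available: rank samples by loss, keep the hardest fraction, and oversample them in the next training round. Loss-based ranking and simple repetition are one common choice, assumed here; the source does not say how vialytics scores difficulty, and the function names are hypothetical.

```python
def select_hard_examples(losses: dict[str, float], fraction: float = 0.1) -> list[str]:
    """Return the IDs of the hardest `fraction` of samples, ranked by model loss."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, int(len(losses) * fraction))
    return sorted(losses, key=losses.get, reverse=True)[:k]


def next_training_set(train_ids: list[str], losses: dict[str, float],
                      fraction: float = 0.1, repeat: int = 2) -> list[str]:
    """Oversample the hard examples by appending them `repeat` extra times."""
    hard = select_hard_examples(losses, fraction)
    return list(train_ids) + hard * repeat


losses = {"a": 0.1, "b": 2.0, "c": 0.5, "d": 1.2}  # per-sample loss from the current model
print(select_hard_examples(losses, fraction=0.5))  # ['b', 'd']
```

Repeating each cycle (train, score, mine, retrain) is what makes the mining "continuous": each new model defines the next set of hard cases.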

Ensuring consistent model performance across geographies

Road conditions in Germany may not match those in the United States, but vialytics needed their models to work across both. To address the geographical variability of road infrastructure, vialytics used a two-pronged approach:

- Country-specific models. Road signs that look similar can carry different meanings from one country to the next, so vialytics trained separate classifiers for each region to ensure accurate detection and classification.

- Unified models. For road damage (e.g., cracks or potholes), on the other hand, they found that a single global model generalized well, since the types of damage were relatively consistent regardless of the country (most of the time).

This combination of specialized and unified models allowed vialytics to strike the right balance between generalization and localization.
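One way to picture this split is a pipeline that always runs the shared damage model and picks the sign classifier by country. This is a hypothetical sketch: the model callables are stubs, and the country codes and class names are illustrative assumptions, not vialytics' taxonomy.

```python
from typing import Callable

Image = bytes                            # placeholder for an encoded frame
Detector = Callable[[Image], list[str]]  # returns detected class names


def make_pipeline(damage_model: Detector, sign_models: dict[str, Detector]):
    """Pair one global road-damage model with per-country sign classifiers."""
    def run(image: Image, country: str) -> dict[str, list[str]]:
        if country not in sign_models:
            raise KeyError(f"no sign classifier for country {country!r}")
        return {
            "damage": damage_model(image),         # shared across all countries
            "signs": sign_models[country](image),  # country-specific meaning
        }
    return run


# Stub models standing in for trained networks.
pipeline = make_pipeline(
    damage_model=lambda img: ["pothole"],
    sign_models={"DE": lambda img: ["DE:priority_road"], "US": lambda img: ["US:stop"]},
)
print(pipeline(b"...", "DE"))  # {'damage': ['pothole'], 'signs': ['DE:priority_road']}
```

Failing loudly for an unsupported country is a deliberate choice here: silently falling back to another country's sign classifier would produce confident but wrong meanings.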

Scaling without compromising on quality

As vialytics scaled, the volume of data they needed to handle exploded. With images pouring in from municipal fleets in multiple cities, the data pipeline had to scale without compromising on quality. Quality control measures were built into every stage of the process to ensure that image data stayed consistent across the entire pipeline:

- Annotation guidelines were continuously refined, incorporating feedback from difficult cases to improve accuracy.

- Automated data quality checks flagged inconsistencies in real time, so the team caught issues before they became widespread.
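Automated checks of this kind can be as simple as validating every annotation before it enters a dataset. The sketch below assumes axis-aligned bounding boxes in pixel coordinates and a fixed class taxonomy; the `Box` type, the checks chosen, and the class names are hypothetical, not vialytics' actual rules.

```python
from dataclasses import dataclass


@dataclass
class Box:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def check_annotation(boxes, width, height, allowed_labels):
    """Return a list of problems found in one image's boxes; empty means it passes."""
    problems = []
    for i, b in enumerate(boxes):
        if b.label not in allowed_labels:
            problems.append(f"box {i}: unknown label {b.label!r}")
        if b.x_max <= b.x_min or b.y_max <= b.y_min:
            problems.append(f"box {i}: zero or negative area")
        if b.x_min < 0 or b.y_min < 0 or b.x_max > width or b.y_max > height:
            problems.append(f"box {i}: outside the {width}x{height} image")
    return problems


taxonomy = {"pothole", "crack", "faded_marking"}
boxes = [Box("pothole", 100, 400, 180, 460), Box("manhole", 10, 10, 5, 40)]
for problem in check_annotation(boxes, 1920, 1080, taxonomy):
    print(problem)
# box 1: unknown label 'manhole'
# box 1: zero or negative area
```

Because each check returns readable messages rather than a single pass/fail flag, flagged items can go straight to a reviewer with the reason attached.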

vialytics learned that scaling data infrastructure is as much about data quality control as it is about processing power. By investing in tools that allowed them to continuously monitor and improve the quality of incoming data, vialytics ensured that their computer vision models could consistently deliver results.

The benefits of a unified AI data stack

With the newly implemented data stack, vialytics was able to turn a variable data stream into a robust AI system capable of powering smart-city applications. This led to:

- More reliable outputs: Models could now handle inconsistent imagery in a wide variety of conditions, making them suitable for real-world deployments in cities worldwide.

- Faster iteration cycles: The ability to monitor and annotate data in real time allowed vialytics to rapidly iterate and improve their models, shortening the feedback loop and accelerating time-to-market.

- Reduced annotation overhead: By automating key steps of the annotation process, vialytics reduced manual effort and focused human oversight on edge cases, ensuring data consistency across their datasets.
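A common way to concentrate human effort on edge cases is confidence-based routing of model pre-labels. The sketch below is an assumption, not a description of vialytics' pipeline: the 0.9 threshold is arbitrary, and in practice "auto-accepted" labels would typically still be spot-checked by sampling.

```python
def route_prelabels(predictions, accept_threshold=0.9):
    """Split model pre-labels into an auto-accept queue and a human-review queue.

    predictions: iterable of (sample_id, label, confidence) tuples.
    """
    auto_accepted, needs_review = [], []
    for sample_id, label, confidence in predictions:
        queue = auto_accepted if confidence >= accept_threshold else needs_review
        queue.append((sample_id, label))
    return auto_accepted, needs_review


preds = [("img-1", "crack", 0.97), ("img-2", "pothole", 0.55), ("img-3", "crack", 0.91)]
accepted, review = route_prelabels(preds)
print(len(accepted), "auto-accepted;", len(review), "sent to annotators")
# 2 auto-accepted; 1 sent to annotators
```

Raising `accept_threshold` trades annotation savings for safety; setting it to 1.0 sends everything to humans.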

Building the next generation of smart cities

Looking ahead, vialytics is focused on further scaling their data stack to incorporate even more diverse data types. With the rise of multimodal AI, which draws not just on images but also on sensor data from cameras, accelerometers, and other sources, they aim to expand their ability to automatically detect and interpret infrastructure conditions from a variety of data sources.

The lesson here is clear: to build robust, production-quality computer vision models at scale, it's not enough to just collect data. You need a scalable AI data stack that can handle, refine, and optimize that data at every step. For vialytics, it’s this combination of smart data management and continuous learning that has enabled their AI system to thrive in urban infrastructure.

Ready to scale your own AI data stack? Check out this interactive demo of Encord.
