
Contents

Key Applications of Computer Vision in Robotics

Benefits of Using Computer Vision in Robotics

Computer Vision in Robotics: Key Takeaways


Top 8 Applications of Computer Vision in Robotics

Remember Atlas? Boston Dynamics’s acrobatic humanoid robot that can perform somersaults, flips, and parkour with human-like precision. That’s a leading example of state-of-the-art robotics engineering powered by modern computer vision (CV) innovation. It features athletic intelligence, real-time perception, and predictive motion control.

Today, organizations are realizing the significant benefits of deploying intelligent robots. Their ability to understand the environment and adapt to changing situations makes them useful for laborious, repetitive, or hazardous tasks, such as:

- Industrial inspection to detect anomalies

- Remote investigation of critical situations like chemical or biological hazards

- Site planning and maintenance

- Warehouse automation

This article explores the applications of computer vision in robotics, their benefits, and the key challenges the industry faces today.

Key Applications of Computer Vision in Robotics

Autonomous Navigation and Mapping

Computer vision is pivotal in enabling robots to navigate complex environments autonomously. Robots that can perceive and understand their surroundings visually can make informed decisions and maneuver efficiently through intricate scenarios. Key use cases include:

- Autonomous vehicles and drones: Autonomous vehicles such as Waymo's process real-time data from cameras, LiDAR, and radar sensors to detect lane markings, pedestrians, and other vehicles, allowing them to navigate roads safely. Starship's delivery robots use computer vision to navigate sidewalks and deliver packages autonomously to customers' doorsteps. Drones, such as those from DJI, use CV for obstacle avoidance, object tracking, and precise aerial mapping, making them versatile tools in agriculture, surveying, and cinematography.

- Industrial automation solutions: In warehouse automation, computer vision-guided robots such as Amazon's Kiva robots locate and transport items, streamlining order fulfillment. In mining and construction, equipment such as Komatsu's uses computer vision to improve safety and productivity, enabling autonomous excavators, bulldozers, and compact track loaders engineered for tasks like digging, dozing, and moving materials.

Fully Autonomous Driver – The Waymo Driver
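The article does not describe how these systems plan a route, but the core idea can be sketched. The example below assumes the perception stack has already converted camera (or LiDAR) data into a 2D occupancy grid in which `1` marks an obstacle, and it finds the shortest obstacle-free route with breadth-first search. The grid, the `plan_path` function, and the 4-connected moves are hypothetical simplifications; real vehicles and drones use far more sophisticated mapping and planning.

```python
from collections import deque
from typing import List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) in the occupancy grid


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search over a 4-connected occupancy grid.

    grid[r][c] == 1 marks an occupied cell (an obstacle reported by perception);
    0 marks free space. Returns the shortest list of cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # parent of each visited cell; doubles as the visited set
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = current
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            nxt = (nr, nc)
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = current
                queue.append(nxt)
    return None


# Hypothetical 4x5 map: a wall across row 1 with a single gap on the right.
occupancy = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1],
]
path = plan_path(occupancy, start=(0, 0), goal=(3, 0))
print(path)
```

Breadth-first search is used here only because it is the simplest planner that guarantees a shortest path on an unweighted grid; weighted costs or continuous motion would call for a different algorithm.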

Object Detection and Recognition

Object recognition identifies and classifies objects based on visual information without specifying where they are, while object detection also localizes each object, typically returning a bounding box along with a class label. Key applications include:

- Inventory management: Mobile robots such as the RB-THERON enable efficient inventory tracking in warehouses, using object detection to update records autonomously, monitor inventory levels, and detect product damage.

- Healthcare services: Akara, a startup specializing in advanced detection techniques, has developed an autonomous mobile robot prototype that sanitizes hospital rooms and equipment, contributing to virus control.

- Home automation and security systems: Ring's home security systems, such as Ring video doorbells, use computer vision to provide real-time camera feedback for security and convenience. Amazon's Astro, a home robot with Alexa built in, combines AI, robotics, and computer vision for home interaction and monitoring, with features like navigation and visual recognition.
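To make the recognition-versus-detection distinction concrete, here is a minimal sketch. The `Detection` record, the `to_recognition` helper, and the example boxes are hypothetical. The sketch also computes intersection over union (IoU), a standard measure of how well a predicted box overlaps a ground-truth box; IoU is not discussed in the article and is included only to show that box coordinates carry information that labels alone do not.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Detection:
    """One detection: a class label, a confidence score, and an axis-aligned
    bounding box in pixel coordinates (x1, y1) top-left to (x2, y2) bottom-right."""
    label: str
    score: float
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two boxes: 0 means no overlap, 1 means identical."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def to_recognition(dets: Iterable[Detection]) -> List[str]:
    """Discard locations to get a recognition-style answer: which labels are present."""
    return sorted({d.label for d in dets})


pred = Detection("box", 0.92, 10, 10, 50, 50)    # hypothetical model output
truth = Detection("box", 1.0, 20, 20, 60, 60)    # hypothetical ground truth
print(round(iou(pred, truth), 3))                 # 0.391
print(to_recognition([pred, Detection("pallet", 0.8, 0, 60, 100, 120)]))  # ['box', 'pallet']
```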

Gestures and Human Pose Recognition

Gesture recognition allows computers to respond to nonverbal cues such as hand and body movements, while human pose tracking involves detecting and following key points, such as joints, on the human body. In 2014, Alexander Toshev and Christian Szegedy introduced DeepPose, a landmark in human pose estimation that used convolutional neural networks (CNNs) to regress body-joint positions directly. This groundbreaking work catalyzed a shift towards deep learning-based approaches in Human Pose Estimation (HPE) research.

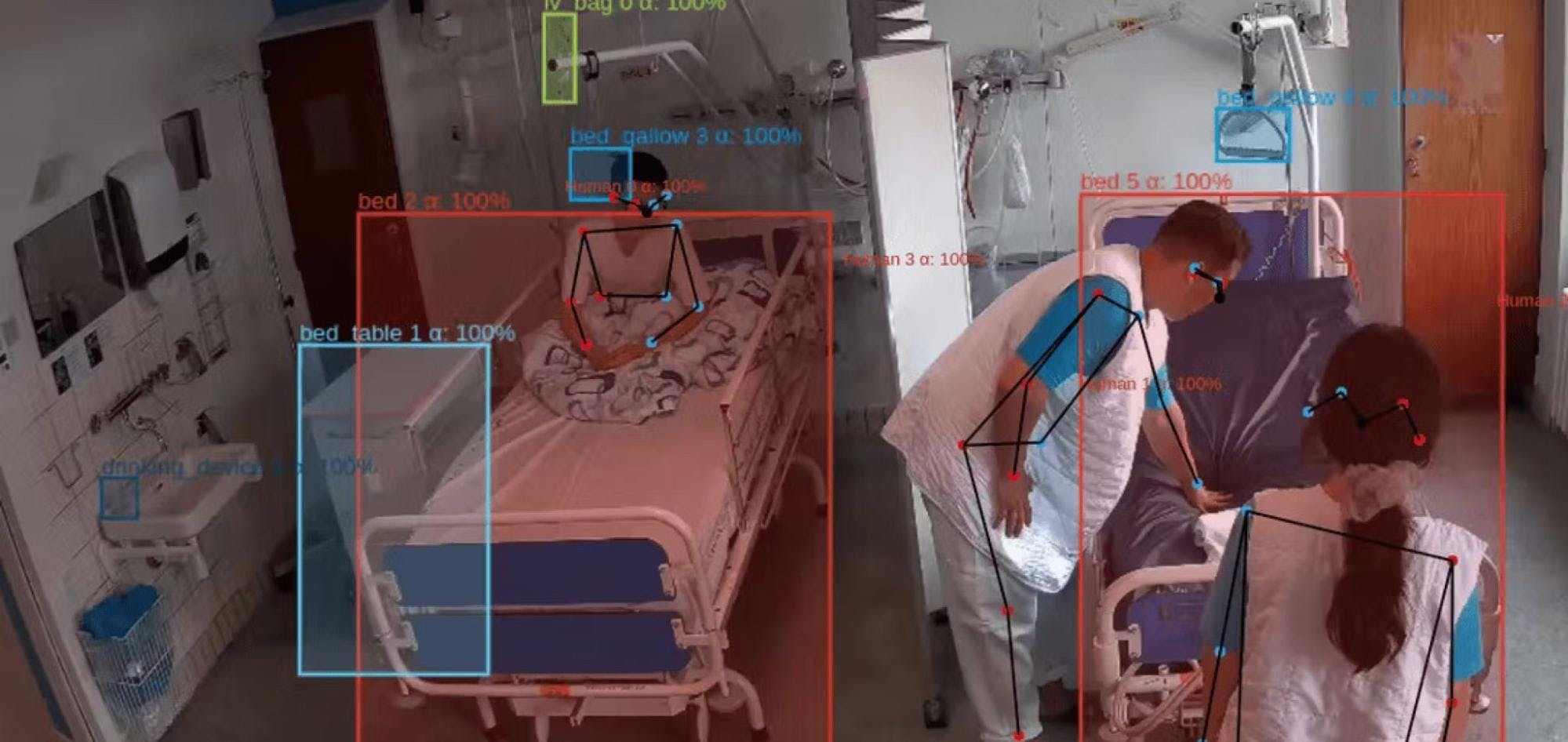

Pose recognition to prevent falls in care homes
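Once a pose estimator has produced key points, simple geometry can turn them into features a care-home system might monitor. The sketch below computes the angle at a joint and the tilt of the torso from vertical. It assumes 2D keypoints in image coordinates (y pointing down); the `FALL_TILT_DEG` threshold and the `looks_fallen` rule are hypothetical, and practical fall detection would combine such features over time, usually with a trained model.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in image pixels; y grows downward

FALL_TILT_DEG = 60.0  # hypothetical threshold; would need tuning on real data


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at keypoint b between segments b->a and b->c.

    For example, hip-knee-ankle gives the knee angle (about 180 for a straight leg).
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_theta))


def torso_tilt(shoulder_mid: Point, hip_mid: Point) -> float:
    """Tilt of the hip-to-shoulder line away from vertical, in degrees (0 = upright)."""
    dx = shoulder_mid[0] - hip_mid[0]
    dy = hip_mid[1] - shoulder_mid[1]  # positive when the shoulders are above the hips
    return math.degrees(math.atan2(abs(dx), dy))


def looks_fallen(shoulder_mid: Point, hip_mid: Point) -> bool:
    """Hypothetical single-frame rule: flag a torso tilted past FALL_TILT_DEG."""
    return torso_tilt(shoulder_mid, hip_mid) > FALL_TILT_DEG


# Hypothetical keypoints from a pose estimator, in pixel coordinates.
hip, knee, ankle = (100.0, 200.0), (100.0, 300.0), (100.0, 400.0)
print(joint_angle(hip, knee, ankle))                 # straight leg, about 180 degrees
print(looks_fallen((100.0, 100.0), (100.0, 200.0)))  # upright torso: False
print(looks_fallen((200.0, 200.0), (100.0, 200.0)))  # horizontal torso: True
```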

Recently, natural human-computer interaction methods like recognizing faces, analyzing movements, and understanding gestures have gained significant interest in many industries. For instance:

- Healthcare and rehabilitation: Pepper, SoftBank Robotics' humanoid robot, recognizes faces and emotions, assisting people with limited conversation skills. Socially assistive robots (SARs) offer verbal support and care for individuals with dementia. The ABLE Exoskeleton is a lightweight device designed for patients with spinal cord injuries. Award-winning health tech company Viz.ai uses Encord's platform to annotate faster and more accurately, which lets its clinical AI teams accelerate their workflows and review medical imaging swiftly.

- Retail and customer service: Retail robots like LoweBot equipped with gesture recognition can assist customers in finding products within the store. Customers can gesture or ask for help, and the robot can respond by providing directions and information about product locations. One of our global retail customers uses Encord's micro-model & interpolation modules to track and annotate different objects. They improved their labeling efficiency by 37% with up to 99% accuracy.

- Gaming and Entertainment: Microsoft Kinect, a motion-sensing input device, revolutionized gaming by allowing players to control games through body movements. Gamers can interact with characters and environments by moving their bodies, providing an immersive and engaging gaming experience.

Facial and Emotion Recognition

Facial and emotion recognition (ER) provide robots with the ability to infer and interpret human emotions, enhancing their interactions with people. Emotion models broadly fall into two categories:

- Categorical models, where emotions are discrete entities labeled with specific names, such as fear, anger, happiness, etc.

- Dimensional models, where emotions are characterized by continuous values along dimensions such as valence (how pleasant or unpleasant the emotion is) and intensity (often called arousal), typically plotted on two axes.
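The relationship between the two model families can be illustrated by mapping a point in a dimensional model back to a coarse categorical label. This is a minimal sketch, assuming valence and intensity are both scaled to [-1, 1] (negative intensity meaning low activation); the four quadrant labels are hypothetical and chosen only for illustration, not a standard mapping from any specific emotion model.

```python
def quadrant_label(valence: float, intensity: float) -> str:
    """Map a (valence, intensity) point to a coarse categorical label.

    Both inputs are assumed to be scaled to [-1, 1]. The labels are
    illustrative only; real systems may use finer or different categories.
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= intensity <= 1.0):
        raise ValueError("valence and intensity must lie in [-1, 1]")
    if valence >= 0:
        return "excited/happy" if intensity >= 0 else "calm/content"
    return "angry/afraid" if intensity >= 0 else "sad/bored"


# Hypothetical outputs of a dimensional emotion model.
for point in [(0.8, 0.6), (0.5, -0.7), (-0.7, 0.9), (-0.5, -0.4)]:
    print(point, "->", quadrant_label(*point))
```

Going the other way, from categories to continuous values, loses nothing in this sketch's direction but is ambiguous in reverse, which is one reason some systems keep the dimensional representation internally.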

ER systems draw on various input modalities, including facial expressions, voice, physiological signals, and body language, to build robust recognition models. Some advanced feature sets include:

- Brain activity: Various measurement systems, such as electroencephalography (EEG), are available for capturing brain activity.

- Thermal signals: Changes in emotional state redistribute blood flow through vasodilation, vasoconstriction, and emotional sweating. Because these changes alter skin temperature, infrared thermal cameras can detect them.

- Voice: People can naturally infer a speaker's emotional state from their voice. Emotional changes coincide with physiological changes, such as larynx position and vocal fold tension, that alter the voice, and acoustic emotion recognition models can use these variations to recognize emotion accurately.

Key applications of emotion recognition include:

- Companionship and mental health: A survey of 307 care providers in Europe and the United States revealed that 69% of physicians believe social robots can alleviate isolation, enhance companionship, and potentially benefit patients' mental health. For instance, social robots like ElliQ engage in thousands of user interactions, with a significant portion focused on companionship.

- Digital education: Emotion recognition tools can monitor students' emotional well-being. They can help identify emotional challenges such as frustration or anxiety, allowing for timely interventions and support. If signs of distress or anxiety are detected, the system can recommend counseling or provide resources for managing stress.

- Surveillance and interrogation: Emotion recognition in surveillance identifies suspicious behavior, assessing facial expressions and body language. In interrogations, it aids in understanding the emotional state of the subject.

Augmented and Virtual Reality

Augmented reality (AR) overlays digital content (images, videos, and 3D models) onto the real world via smartphones, tablets, or AR glasses. Virtual reality (VR), by contrast, replaces the real world with a computer-generated environment viewed through a headset. Both technologies are being adopted across numerous industries.

For instance:

- Education and training: Students use AR/VR apps for interactive learning at home. For instance, Google Arts & Culture extends learning beyond classrooms with AR/VR content for schools. zSpace offers AR/VR learning for K-12 education, career and technical education, and advanced sciences with all-in-one computers featuring built-in tracking and stylus support.

- Music and Live Events: VR music experiences have grown significantly, with companies like Wave and MelodyVR securing substantial funding based on high valuations. They offer virtual concerts and live performances, fostering virtual connections between artists and music enthusiasts.

- Manufacturing: As part of Industry 4.0, AR technology has transformed manufacturing processes. For instance, DHL used AR smart glasses for "vision picking" in the Netherlands, streamlining package placement on trolleys and improving order picking. AR remote assistance with headsets such as Microsoft HoloLens 2 is also making an impact; Mercedes-Benz, for example, uses HoloLens 2 for automotive service and repairs.

Agricultural Robotics

The UN predicts that the global population will increase from 7.3 billion today to 9.8 billion by 2050, driving up food demand and pressuring farmers. As urbanization rises, there is a growing concern about who will take on the responsibility of future farming.

Agricultural robots are boosting crop yields for farmers through various technologies, including drones, self-driving tractors, and robotic arms. These innovations are finding unique and creative uses in farming practices. Prominent applications include:

- Agricultural drones: Drones have a long history in farming, starting in the 1980s with aerial photography. Modern AI-powered drones have expanded their roles in agriculture, now used for 3D imaging, mapping, and crop and livestock monitoring. Companies like DJI Agriculture and ZenaDrone are leading in this field.

- Autonomous tractors: Because tractors are used year-round, they are prime candidates for autonomous operation. As the agricultural workforce shrinks and ages, autonomous tractors such as YANMAR's could help fill the labor gap the industry faces.

- Irrigation control: Climate change and global water scarcity are pressing issues. Water conservation is vital in agriculture, yet traditional methods often waste water. Precision irrigation with robots and calibrators minimizes waste by targeting individual plants.

- Autonomous sorting and packing: In agriculture, sorting and packing are labor-intensive tasks. To meet the rising demand for faster production, many farms employ sorting and packing robots. These robots, equipped with coordination and line-tracking technology, significantly speed up the packing process.

DJI Phantom 4 Shooting Images for Plant Stand Count
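Plant stand counting from drone imagery, as in the DJI Phantom 4 example above, and plant-level targeting for precision irrigation both start by separating plants from soil. Below is a minimal sketch of one widely used heuristic, the Excess Green index (ExG = 2g - r - b on chromaticity-normalized channels), followed by a count of connected plant regions. The threshold, the minimum blob size, and the toy image are hypothetical, and production pipelines typically use trained detectors on full-resolution imagery.

```python
from collections import deque
from typing import List, Tuple

RGB = Tuple[int, int, int]


def excess_green_mask(image: List[List[RGB]], threshold: float = 0.1) -> List[List[bool]]:
    """Mark pixels whose Excess Green index exceeds threshold.

    With chromatic coordinates r = R/(R+G+B) etc., ExG = 2g - r - b,
    which simplifies to (2G - R - B) / (R + G + B).
    """
    mask = []
    for row in image:
        mask_row = []
        for red, green, blue in row:
            total = red + green + blue
            if total == 0:
                mask_row.append(False)  # black pixel: no colour information
                continue
            mask_row.append((2 * green - red - blue) / total > threshold)
        mask.append(mask_row)
    return mask


def count_blobs(mask: List[List[bool]], min_pixels: int = 1) -> int:
    """Count 4-connected regions of True pixels that have at least min_pixels pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            seen[r0][c0] = True
            size, queue = 0, deque([(r0, c0)])
            while queue:  # flood-fill one plant region
                r, c = queue.popleft()
                size += 1
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if size >= min_pixels:
                count += 1
    return count


S = (120, 90, 60)  # hypothetical brownish soil pixel
P = (40, 160, 40)  # hypothetical green plant pixel
field = [
    [S, P, P, S, S, S],
    [S, P, S, S, P, S],
    [S, S, S, S, P, S],
    [S, S, S, S, S, S],
]
print(count_blobs(excess_green_mask(field)))  # 2 separate plants
```

The `min_pixels` parameter is a simple way to ignore specks of weed or noise; raising it to 3 in this toy field would leave only the larger plant.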

Space Robotics

Space robots operate in challenging space environments to support various space missions, including satellite servicing, planetary exploration, and space station maintenance. Key applications include:

- Planetary exploration: Robotic rovers have made significant discoveries on the Martian surface. For instance, NASA's Spirit rover and its twin, Opportunity, studied the history of climate and water at various locations on Mars. Planetary robot systems are also crucial in preparing for human missions to other planets: the Mars rovers have been used to test technologies intended for future human missions.

- Satellite repair and maintenance: Repair and maintenance operations are essential to satellites' longevity and performance, yet they present significant challenges that space robotics can help overcome. For instance, in 2020, MEV-1 completed an automated rendezvous and docking with Intelsat 901, a satellite that was not transmitting at the time, and extended its operational life by taking over its propulsion and attitude control. Moreover, NASA engineers are actively preparing to launch OSAM-1, an uncrewed spacecraft equipped with a robotic arm designed to reach and refurbish aging government satellites.

- Cleaning of space debris: Space debris, whether natural meteoroids or human-made artifacts, poses potential hazards to spacecraft and astronauts during missions. NASA assesses the population of objects smaller than 4 inches (10 centimeters) in diameter using specialized ground-based sensors and by examining returned satellite surfaces with advanced computer vision techniques.

Military Robotics

Military robotics is crucial in modern defense and warfare. Countries like Israel, the US, and China are investing heavily in AI and military robotics. These technologies enhance efficiency, reduce risks to soldiers, and enable missions in challenging environments. Key applications include:

- Unmanned Aerial Vehicles (UAVs): UAVs, commonly known as drones, are widely used for reconnaissance, surveillance, and target recognition. They provide real-time intelligence, surveillance, and reconnaissance (ISR) capabilities, enabling military forces to gather vital information without risking the lives of pilots.

- Surveillance: Robotic surveillance systems involving ground and aerial vehicles are vital for safeguarding crucial areas. The Pentagon, working with private contractors, has developed software that analyzes reconnaissance footage, highlighting flags, vehicles, and people and tracking objects of interest for a human analyst to review.

- Aerial refueling: Autonomous aerial refueling systems enable mid-air refueling of military aircraft, extending their operational range and mission endurance.

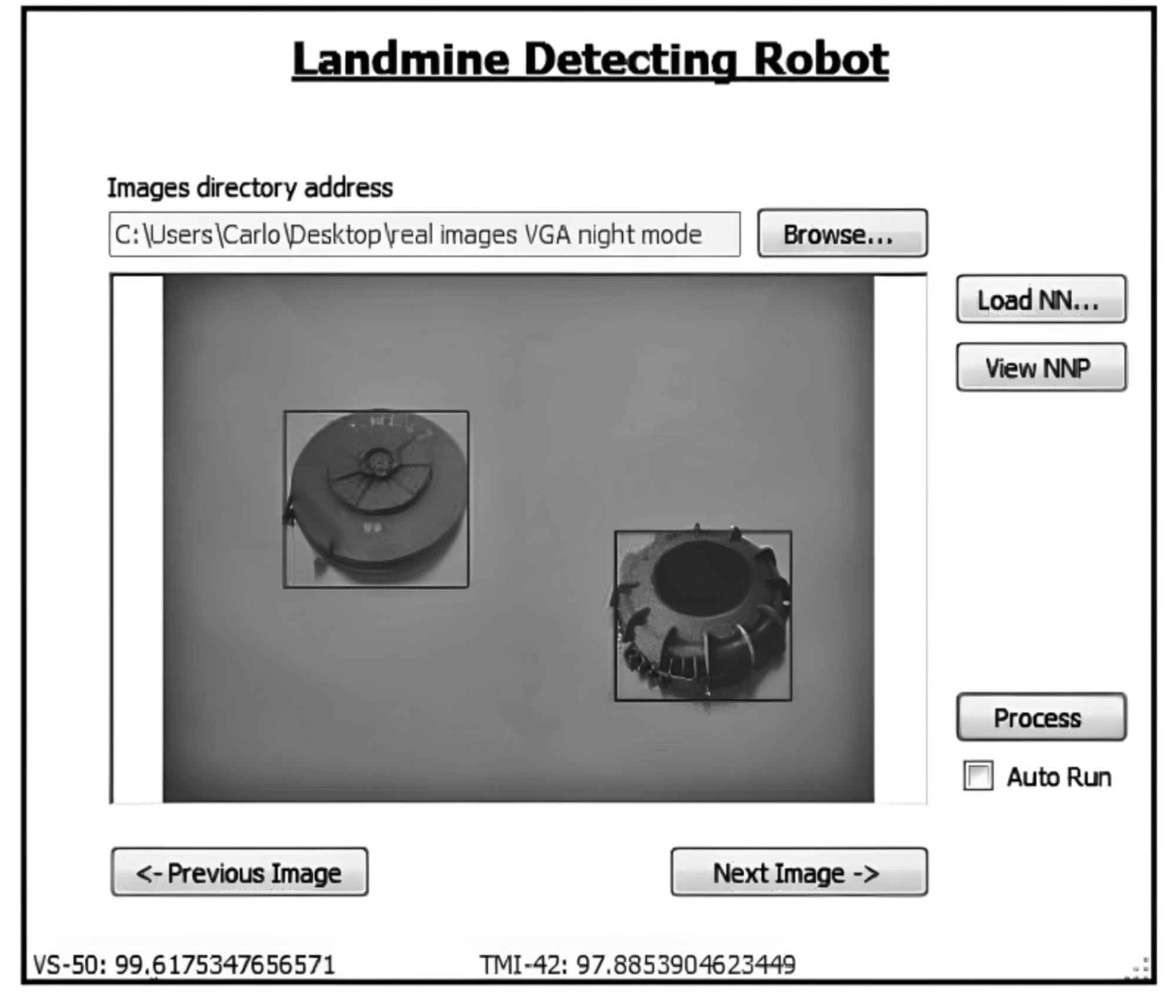

- Landmine removal: Robots equipped with specialized sensors and tools are used to detect and safely remove landmines and unexploded ordnance, reducing the threat to troops and civilians in conflict zones. For instance, Achkar and Owayjan of The American University of Science & Technology in Beirut have developed an AI model with a 99.6% identification rate on unobscured landmines.

VS-50 and TMI-42 Landmine Classification Using the Proposed Model

Now that we have covered various computer vision applications in robotics, let’s discuss some major benefits you can leverage across industries.

Benefits of Using Computer Vision in Robotics

Robots equipped with computer vision technology are transforming industries, yielding diverse benefits, such as:

- Improved productivity: Computer vision-based robotics enhances task efficiency, reduces errors, and saves time and resources. Machine vision systems can flexibly respond to changing environments and tasks. This boosts ROI through lower labor costs, and improves accuracy with long-term productivity gains.

- Task automation: Computer vision systems automate repetitive but complex tasks, freeing up humans for creative work. This speeds up cumbersome tasks for an improved time-to-market for products, increases business ROI, and boosts job satisfaction, productivity, and skill development.

- Better quality control: Robots with computer vision enhance quality control, reducing defects and production costs.

- Improved workplace safety: Robotic vision systems can detect hazards, react in real time, and autonomously handle risky tasks, reducing accidents and protecting workers.

Despite many benefits, CV-powered robots face many challenges when deployed in real-world scenarios. Let’s discuss them below.

What Are the Challenges of Implementing Computer Vision in Robotics?

Several critical challenges must be addressed for robotic computer vision to perform reliably and efficiently:

- Scalability: As robotic systems expand, scalability challenges arise. Scaling operations demand increased computing power, energy consumption, and hardware maintenance, often affecting cost-effectiveness and environmental sustainability.

- Camera placement and occlusion: Robot vision depends on stable, clear images, which in turn require accurate camera placement. Occlusion occurs when part of an object is hidden from the camera's view. Robots may encounter occlusion because of other objects, their own components blocking the view, or poorly placed cameras. To handle occlusion, robots often match the visible parts of an object to a known model and make assumptions about the hidden portions.

- Operating environment: Inadequate lighting hinders object detection, so a robot's operating environment must provide good contrast: the background should differ in color and brightness from the objects to be detected. Additionally, fast motion, such as objects moving on a conveyor, can blur images and reduce the CV model’s recognition and detection accuracy.

- Data quality and ethical concerns: Data quality is pivotal in ensuring ethical robot behavior. Biased or erroneous datasets can lead to discriminatory or unsafe outcomes. For instance, biased training data for facial recognition can result in racial or gender bias, raising ethical concerns about the fairness and privacy of AI applications in robotics.
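The conveyor blur described under operating environment can be estimated with simple arithmetic: blur in pixels is roughly object speed × exposure time ÷ the distance one pixel covers on the object. The sketch below assumes the object moves parallel to the image plane at constant speed; the function names and the belt speed, exposure, and field-of-view numbers are hypothetical.

```python
def motion_blur_pixels(speed_m_s: float, exposure_s: float,
                       field_of_view_m: float, sensor_pixels: int) -> float:
    """Approximate blur, in pixels, for an object moving parallel to the image
    plane at constant speed during a single exposure."""
    metres_per_pixel = field_of_view_m / sensor_pixels
    distance_moved_m = speed_m_s * exposure_s
    return distance_moved_m / metres_per_pixel


def max_exposure_s(speed_m_s: float, field_of_view_m: float,
                   sensor_pixels: int, max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps blur at or below max_blur_px."""
    return max_blur_px * (field_of_view_m / sensor_pixels) / speed_m_s


# Hypothetical conveyor: 0.5 m/s belt, 0.5 m field of view imaged across 1000 pixels.
blur = motion_blur_pixels(0.5, 0.010, 0.5, 1000)  # 10 ms exposure
print(f"blur at 10 ms: {blur:.1f} px")
print(f"max exposure for 1 px blur: {max_exposure_s(0.5, 0.5, 1000) * 1000:.2f} ms")
```

Under these assumptions, a 10 ms exposure smears the object across about 10 pixels, so the exposure would need to drop to roughly 1 ms to keep blur near one pixel. Shorter exposures admit less light, which ties the blur problem back to the lighting requirement above.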

Computer Vision in Robotics: Key Takeaways

- Computer vision enables robots to interpret visual data using advanced AI models, similar to human vision.

- Use cases of computer vision in robotics include autonomous navigation, object detection, gesture and human pose recognition, and facial and emotion recognition.

- Key applications of computer vision in robotics span autonomous vehicles, industrial automation, healthcare, retail, agriculture, space exploration, and the military.

- The benefits of using robotics with computer vision include improved productivity, task automation, better quality control, and enhanced data processing.

- Challenges in computer vision for robotics include scalability, occlusion, camera placement, operating environment, data quality, and ethical concerns.


Frequently asked questions

Computer vision in robotics and automation refers to using visual perception and artificial intelligence to enable robots and automated systems to understand and interpret visual information from the environment. It allows robots to make informed decisions, navigate autonomously, detect objects, recognize gestures and faces, and perform various tasks based on visual input.

Computer vision has a wide range of applications, including autonomous navigation, object detection, gesture recognition, facial recognition, augmented/virtual reality, agricultural robotics, space robotics, and military robotics.

An Autonomous Mobile Robot (AMR) is a self-guided robot equipped with sensors and software that allows it to navigate and operate in its environment without human intervention. W. Grey Walter's Elmer and Elsie, created in the late 1940s, were the pioneering autonomous robot pair, laying the foundation for early autonomous robotics.

A mobile manipulator has both mobility (like a mobile robot) and manipulation capabilities (using a robotic arm).

Yes, there are ethical considerations and challenges to using computer vision in robotics, including concerns about biased data leading to discriminatory outcomes, privacy issues with facial recognition, and the responsible use of AI in decision-making processes. Addressing these challenges is crucial for ensuring fair and safe robot behavior.

The critical components of a computer vision system in robotics include cameras or sensors for data capture, image processing algorithms, deep learning models for tasks like object detection and recognition, and hardware for computation and control.

An example of a robot vision application is autonomous navigation in self-driving cars and drones.

Computer vision is crucial in robotics because it allows robots to visually perceive and understand their environment. This capability enables them to navigate autonomously, recognize objects, interact with humans, and perform tasks precisely.

Yes, computer vision enhances robotic perception. It enables robots to understand information from their surroundings, facilitating object recognition, navigation and interaction with the environment.

Yes, computer vision algorithms provide robots with accurate and relevant visual data, improving decision-making.

Encord leverages lidar and camera technology to improve the safety and efficiency of its automation solutions. For example, by using these sensors, the system can detect potential obstacles, such as people entering the path of RGVs, and optimize the picking processes in layered systems, ensuring that items are accurately identified and picked without errors.

Encord focuses on improving computer vision capabilities and access to video data for robotics and AI projects. By collaborating with companies in the drone sector, we aim to optimize AI models using specific data to meet our customers' needs.

Encord's Orbit feature allows users to view data from the robot's perspective and interact with a digital twin of their site. This capability enables users to draw boxes around areas of interest, prompt questions, and receive insightful answers, streamlining the data collection process for robotics applications.

Encord provides robust annotation tools that facilitate the collection and organization of data, allowing teams to effectively label and train models for obstacle detection. By utilizing advanced features, users can enhance the reliability of ground plane classification, even under varying lighting conditions.

Encord's platform is built to complement existing robotics projects by providing tools that enhance annotation and data management. Teams can leverage Encord's capabilities to improve their vision pipelines, facilitating smoother integration and boosting overall productivity in robotics development.

Encord has successfully supported a variety of use cases in the robotics sector, including damage detection, object recognition, and sensor fusion. Our platform is adaptable to meet the specific needs of different projects, making it a valuable asset for robotics applications.

Encord provides a comprehensive annotation platform that supports various image formats, including 2D and 3D RGBD images. This allows teams to efficiently annotate data for training models, which is crucial for developing advanced perception systems in robotics.

Encord offers various modules that focus on scaling annotation pipelines for robotics customers. These include tools for distributing tasks, a user interface for operators to interact with, and analytics to monitor performance and efficiency in data production.

Yes, Encord is designed to meet the needs of robotics applications, particularly in areas such as warehouse robotics. Its capabilities in handling both 2D and 3D data make it a valuable tool for developing advanced machine learning models in this field.

Encord supports a variety of sensor modalities, including cameras and LiDAR. This ability to work with different types of sensors allows teams to effectively manage and annotate data collected from diverse sources in autonomous vehicle applications.