How to Diagnose and Improve Annotation Performance: Deep-Dive on Metrics, Workflows, and Quality

Training data quality often determines the success (or failure) of any machine learning (ML) initiative. Yet, many teams discover belatedly that annotation performance can become a significant and costly bottleneck. Without clear visibility into time spent, quality control, and feedback loops, labeling errors slip into production data and hamper the entire ML lifecycle.

This post compiles insights from a webinar with our Encord ML engineers and deployment strategists, who presented a real-world look at diagnosing annotation performance. We’ll walk through the importance of annotation analytics, show how to interpret the metrics and dashboards effectively, and discuss strategies for improving label quality and throughput at scale. By the end, you’ll have actionable steps to level up your annotation pipeline and build a more robust dataset for your models.

Introduction: Why We Need Annotation Analytics

It’s no secret that data labeling can be tedious and expensive. When you have a stream of images, videos, or text that must be annotated, even small inefficiencies multiply rapidly. For instance:

- A complex label schema can cause annotators to spend extra time on each task.

- Inconsistencies between reviewers can lead to back-and-forth rejections.

- Blurry data or trivial edge cases can waste hours of annotation time without contributing to the real value of your ML pipeline.

Unfortunately, many project owners run large annotation efforts “in the dark.” They might keep an eye on basic metrics—such as tasks completed per day—but rarely do they track fine-grained insights like per-annotator performance or how often certain label classes get rejected. As a result, bottlenecks and quality drift go undetected until the next training cycle exposes them in the form of poor model performance.

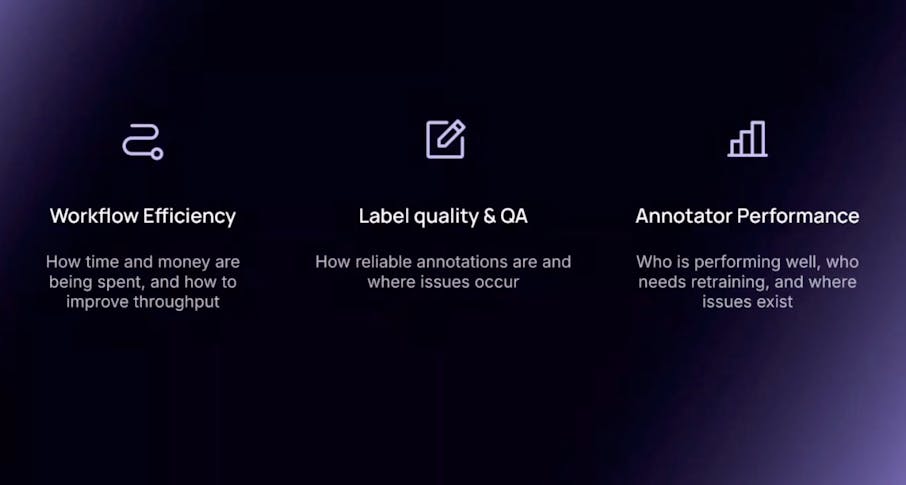

This is where annotation analytics comes in. By surfacing key information about throughput, label quality, and the feedback loops across your entire labeling workforce, you can identify issues early and fix them while the project is still in progress.

Key Metrics: Throughput, Quality, and Cost

Before diving into the how, let’s frame the top metrics you should be tracking in any annotation project.

1. Throughput (Time Spent per Task or Label)

- Why it matters: If one annotator takes twice as long per label as their peers, the project timeline and cost can balloon. A slowdown that affects everyone, on the other hand, may point to unexpected data complexity or an overly detailed instruction set.

- What to measure:

  - Average time per task (or per label).

  - Distribution of time (e.g., 90th percentile for outlier tasks that take significantly longer).

  - Time spent on each workflow stage (annotation vs. review vs. QA).
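
As a rough illustration, the throughput metrics above can be computed from any flat export of task timings. The sketch below assumes a hypothetical record format of `(task_id, workflow_stage, seconds_spent)`; the names `TASK_TIMES` and `summarize_time_by_stage` are made up for this example and are not part of any Encord API.

```python
from collections import defaultdict
from statistics import mean, quantiles

# Hypothetical export format: (task_id, workflow_stage, seconds_spent).
TASK_TIMES = [
    ("t1", "annotate", 100),
    ("t2", "annotate", 200),
    ("t3", "annotate", 300),
    ("t4", "annotate", 400),
    ("t5", "annotate", 500),
    ("t1", "review", 60),
    ("t2", "review", 120),
]


def summarize_time_by_stage(records):
    """Return {stage: {"mean": seconds, "p90": seconds}} per workflow stage."""
    by_stage = defaultdict(list)
    for _task_id, stage, seconds in records:
        by_stage[stage].append(seconds)

    summary = {}
    for stage, values in by_stage.items():
        # quantiles() needs at least two data points, so fall back to the
        # single observed value for stages with only one record.
        if len(values) > 1:
            p90 = quantiles(values, n=10, method="inclusive")[-1]
        else:
            p90 = values[0]
        summary[stage] = {"mean": mean(values), "p90": p90}
    return summary


if __name__ == "__main__":
    for stage, stats in summarize_time_by_stage(TASK_TIMES).items():
        print(f"{stage}: mean={stats['mean']:.0f}s p90={stats['p90']:.0f}s")
```

Reporting the 90th percentile next to the mean makes long-running outlier tasks visible even when the average looks healthy.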

2. Label Quality (Approval vs. Rejection Rate)

- Why it matters: High rejection rates and frequent rework cut into productivity and frustrate annotators and reviewers alike. When the labeling workforce is unsure about instructions, they are more likely to guess and get it wrong.

- What to measure:

  - The number of approved vs. rejected labels, broken down by annotator.

  - The rejection rate per class (e.g., is “car” harder to label than “pedestrian”?).

  - Trends in rejections over time (are we correcting issues or letting them persist?).
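
A minimal sketch of the per-annotator and per-class breakdowns, assuming a hypothetical review log of `(annotator, label_class, outcome)` tuples. The log format, the annotator names, and the `rejection_rate` helper are all illustrative.

```python
from collections import Counter

# Hypothetical review log: (annotator, label_class, outcome),
# where outcome is either "approved" or "rejected".
REVIEW_LOG = [
    ("ana", "car", "approved"),
    ("ana", "car", "rejected"),
    ("ana", "bus", "rejected"),
    ("ben", "car", "approved"),
    ("ben", "pedestrian", "approved"),
    ("ben", "bus", "approved"),
]


def rejection_rate(records, field):
    """Rejection rate grouped by `field` ("annotator" or "label_class")."""
    index = {"annotator": 0, "label_class": 1}[field]
    totals, rejected = Counter(), Counter()
    for record in records:
        key = record[index]
        totals[key] += 1
        if record[2] == "rejected":
            rejected[key] += 1
    return {key: rejected[key] / totals[key] for key in totals}


if __name__ == "__main__":
    print(rejection_rate(REVIEW_LOG, "annotator"))
    print(rejection_rate(REVIEW_LOG, "label_class"))
```

Running the same function over successive date ranges gives the trend view: a rate that stays flat after feedback suggests the underlying issue has not been addressed.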

3. Cost Drivers (Human Effort and Scalability)

- Why it matters: If your labeling cost is well above budget, you risk exhausting resources mid-project or cutting corners on data verification.

- What to measure:

  - The ratio of tasks completed vs. projected costs.

  - Opportunities to automate easy tasks (e.g., pre-labeling) so human reviewers can focus on complex edge cases.

4. Issues and Comments in Context

- Why it matters: Quantitative metrics tell part of the story, but rich context emerges from open-ended feedback. If multiple annotators raise concerns about particular images (e.g., “object partially occluded”), that might explain a spike in labeling time.

- What to measure:

  - The number and type of issues raised.

  - Most common comment categories (e.g., “blurry images,” “incorrect ontology label,” “missing bounding box”).

  - Frequency of issues per worker or label class.
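
If issues are already categorized, counting them takes only a few lines. This sketch assumes a hypothetical list of `(annotator, label_class, category)` records; the record shape and function names are placeholders.

```python
from collections import Counter

# Hypothetical issue records: (annotator, label_class, category).
ISSUES = [
    ("ana", "car", "blurry image"),
    ("ana", "pedestrian", "blurry image"),
    ("ben", "car", "missing bounding box"),
    ("ana", "car", "incorrect ontology label"),
    ("ben", "bus", "blurry image"),
]


def top_issue_categories(issues, limit=3):
    """Most common issue categories as (category, count) pairs."""
    return Counter(category for _, _, category in issues).most_common(limit)


def issues_per_annotator(issues):
    """Number of issues raised by each annotator."""
    return Counter(annotator for annotator, _, _ in issues)


def issues_per_class(issues):
    """Number of issues raised against each label class."""
    return Counter(label_class for _, label_class, _ in issues)


if __name__ == "__main__":
    print(top_issue_categories(ISSUES))
    print(issues_per_annotator(ISSUES))
    print(issues_per_class(ISSUES))
```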

Common Bottlenecks in Annotation Workflows

While every ML team has unique workflows, many share the same pitfalls. Below are three primary bottlenecks that often emerge:

1. Reviewer Drag and Quality Drift

Annotators might label images quickly, but if a single reviewer is tasked with verifying everything, the review stage becomes a bottleneck and a backlog builds up. Over time, the pressure to clear that backlog can push reviewers to lower their standards, which in turn lowers label quality.

How to spot it: Look for tasks piling up in the “review” stage, and keep an eye on any suspicious drop in the reviewer’s rejection notes over time.
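
One simple way to detect tasks piling up is to flag any day on which the review queue has grown several days in a row. The sketch below assumes you can obtain a daily count of tasks sitting in the review stage; the three-day streak is an arbitrary threshold chosen for illustration.

```python
def growing_backlog_days(review_queue_sizes, min_streak=3):
    """Return indices of days on which the review queue has grown for at
    least `min_streak` consecutive days."""
    flagged, streak = [], 0
    for day in range(1, len(review_queue_sizes)):
        if review_queue_sizes[day] > review_queue_sizes[day - 1]:
            streak += 1
        else:
            streak = 0
        if streak >= min_streak:
            flagged.append(day)
    return flagged


if __name__ == "__main__":
    # Hypothetical daily snapshots of the number of tasks awaiting review.
    queue = [10, 12, 11, 14, 18, 25, 24]
    print(growing_backlog_days(queue))
```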

2. Unclear or Overly Detailed Ontologies

A labeling ontology is meant to provide clarity on what should be labeled and how it should be labeled. However, if it is too complex, annotators will spend hours perfecting micro-details that might not matter for your eventual ML model.

How to spot it:

- Excessively long labeling times for certain classes.

- Rising frustration or confusion among the labelers.

- Inconsistent label shapes: e.g., some polygons have dozens of vertices, while others have just a few.
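
Inconsistent label shapes can be quantified with the spread of vertex counts per class. The following sketch uses the coefficient of variation (standard deviation divided by mean) as one possible measure; the `(label_class, vertex_count)` input format is an assumption, and what counts as "too inconsistent" is a judgment call for your project.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical polygon export: (label_class, vertex_count).
POLYGONS = [
    ("car", 6),
    ("car", 8),
    ("car", 40),
    ("car", 52),
    ("bus", 10),
    ("bus", 12),
]


def vertex_spread_by_class(polygons):
    """Coefficient of variation (std / mean) of vertex counts per class."""
    counts = defaultdict(list)
    for label_class, vertices in polygons:
        counts[label_class].append(vertices)
    return {cls: pstdev(values) / mean(values) for cls, values in counts.items()}


if __name__ == "__main__":
    for cls, spread in vertex_spread_by_class(POLYGONS).items():
        print(f"{cls}: coefficient of variation = {spread:.2f}")
```

A class whose spread is much higher than the others is a candidate for clearer guidance on how much detail a polygon needs.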

3. Mismatched Annotators and Data

Sometimes annotators are assigned data they’re not fully equipped to label. For example, if an annotator is fluent only in English and French but must transcribe audio in Spanish, they will be slow or inaccurate.

How to spot it:

- One annotator has significantly worse performance than peers on particular data segments.

- Issue logs referencing difficulties in understanding the data.

A Real-World Example with Encord’s Annotation Analytics

In our recent webinar, we showcased how Encord’s platform can surface many of these metrics in real time. Let’s walk through the highlights of that demonstration to show how you might diagnose bottlenecks and fix them.

Scenario: Self-Driving Car Dataset

We used an open-source dataset of road scenes, where the object classes included:

- Cars

- Trucks

- Buses

- Pedestrians

For each image, annotators drew polygons of varying fidelity around target objects.

Step 1: Investigating Time Spent per Task

Our first step was to open the analytics dashboard and filter for tasks within the relevant date range. We observed:

- Most tasks took around 5 minutes on average, consistent with our initial estimates.

- Some tasks took 10 minutes or more.

Digging deeper, we noticed a single annotator was repeatedly the one spending longer on tasks.

Step 2: Collaborator-By-Collaborator Breakdowns

Moving to the Collaborators tab, we looked at each team member’s total time spent and tasks completed. This revealed:

- The annotator had submitted the highest number of tasks.

- Her average time per task was roughly four times that of the other annotators.

At first glance, one might assume the annotator needs more training. However, we soon discovered the underlying causes were more nuanced.
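
The per-collaborator view from this step can be approximated offline from a list of submissions. This is a sketch under assumed inputs, `(annotator, seconds_spent)` per submitted task, with invented names and numbers chosen to mirror the pattern described above; it does not reproduce the dashboard's actual calculations.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical submissions: (annotator, seconds_spent) per submitted task.
SUBMISSIONS = [
    ("ana", 1200), ("ana", 1200), ("ana", 1200),
    ("ben", 300), ("ben", 300),
    ("cy", 280), ("cy", 320),
    ("dee", 300),
]


def collaborator_breakdown(submissions):
    """Tasks completed, mean time, and ratio to the team median per annotator."""
    times = defaultdict(list)
    for annotator, seconds in submissions:
        times[annotator].append(seconds)

    averages = {name: mean(values) for name, values in times.items()}
    # The median is less sensitive to a single slow annotator than the mean.
    team_median = median(averages.values())
    return {
        name: {
            "tasks": len(times[name]),
            "mean_seconds": averages[name],
            "ratio_to_team_median": averages[name] / team_median,
        }
        for name in times
    }


if __name__ == "__main__":
    for name, stats in collaborator_breakdown(SUBMISSIONS).items():
        print(name, stats)
```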

Step 3: Examining Label Classes and Rejection Rates

Looking at the “Labels” tab:

- We filtered by this annotator’s labels.

- The “car” and “pedestrian” classes had high volumes and moderate rejection rates.

- The “bus” and “truck” classes had higher rejection percentages, but the total number of those labels was smaller, so they weren’t the primary time sink.

When we removed the filter and looked at everyone’s labeling performance for “car,” the overall rejection rate was quite similar to this annotator’s. In other words, her performance in labeling “car” was not significantly worse than her peers’, so the slowdown was probably not just a matter of her needing more training.

Step 4: Identifying the Root Cause

We wanted to confirm whether the data distribution, rather than the annotator, might be the actual culprit. Specifically:

- Were this annotator’s “car” images especially complex?

- Did they contain more vehicles, or were these images blurrier or at unusual angles?

- Were polygons with high vertex counts (fine-grained segmentation) used for these images?

Real-time issue logs and comments pointed us in the right direction. The logs mentioned repeated concerns about image clarity and the size of bounding shapes:

- “Labels too large”: Suggesting objects were partially out of frame.

- “Wrong label”: Confusion over whether a blurry shape was a pedestrian or a cyclist.

As it turned out, this annotator’s set of tasks included many multi-vehicle scenes, which required more polygons per image and drove up annotation time. This points to a mismatch between the complexity of the data and a uniform ontology or, at the very least, an uneven distribution of tasks among annotators.
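
One way to check this kind of finding numerically is to normalize time by the number of labels instead of the number of tasks. The sketch below assumes hypothetical task records of `(annotator, seconds_spent, label_count)`; the figures are invented to show the pattern and are not taken from the webinar.

```python
# Hypothetical task records: (annotator, seconds_spent, label_count).
TASKS = [
    ("ana", 1200, 20),
    ("ana", 1100, 18),
    ("ben", 300, 5),
    ("ben", 280, 5),
]


def seconds_per_task(tasks):
    """Average seconds per task for each annotator."""
    totals = {}
    for annotator, seconds, _labels in tasks:
        spent, count = totals.get(annotator, (0, 0))
        totals[annotator] = (spent + seconds, count + 1)
    return {name: spent / count for name, (spent, count) in totals.items()}


def seconds_per_label(tasks):
    """Average seconds per label, skipping annotators with no labels."""
    totals = {}
    for annotator, seconds, labels in tasks:
        spent, count = totals.get(annotator, (0, 0))
        totals[annotator] = (spent + seconds, count + labels)
    return {name: spent / count for name, (spent, count) in totals.items() if count}


if __name__ == "__main__":
    print("per task: ", seconds_per_task(TASKS))
    print("per label:", seconds_per_label(TASKS))
```

In this made-up data the first annotator looks roughly four times slower per task, yet the per-label times are nearly identical, which is the signature of a harder data slice and not a slower annotator.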

Lessons Learned

- Time Spent is Nuanced: A single outlier annotator might not always be the cause. Their assigned data slice might be disproportionately harder.

- Class Distribution Matters: A class like “car” may appear frequently, so small inefficiencies multiply.

- Issue Logs are Gold: They provide feedback beyond raw metrics, showing where instructions or data might be the real issue.

Actionable Steps to Optimize Annotation Projects

With the above example in mind, let’s outline practical steps you can apply to your own labeling ops to increase efficiency, lower costs, and maintain quality.

1. Segment and Filter Data Before Assigning

- Why: Different subsets of data can vary in complexity, e.g., day vs. night scenes, stable vs. blurry frames, or standard bounding boxes vs. polygons.

- How:

  - Use basic heuristics (e.g., time of day, clarity level) to group data.

  - If you have a specialized domain (healthcare imaging, multilingual transcripts, etc.), confirm your annotators have the right expertise for each set.

  - Use an analytics tool or SDK to estimate label density or complexity (e.g., how many objects are typically in each image).
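
A minimal sketch of heuristic grouping, assuming each image already carries some precomputed metadata. The keys `is_night`, `blur_score`, and `object_count`, the bucket names, and both thresholds are hypothetical and would need tuning for a real dataset.

```python
from collections import defaultdict

# Arbitrary illustrative thresholds; tune them on your own data.
BLUR_LIMIT = 0.6
CROWDED_LIMIT = 10

# Hypothetical per-image metadata.
IMAGES = [
    {"id": "img1", "is_night": False, "blur_score": 0.1, "object_count": 3},
    {"id": "img2", "is_night": True, "blur_score": 0.2, "object_count": 4},
    {"id": "img3", "is_night": False, "blur_score": 0.8, "object_count": 2},
    {"id": "img4", "is_night": False, "blur_score": 0.3, "object_count": 14},
]


def complexity_bucket(image):
    """Assign an image to a coarse complexity bucket."""
    if image["blur_score"] > BLUR_LIMIT:
        return "needs_triage"  # may not be worth annotating at all
    if image["is_night"] or image["object_count"] > CROWDED_LIMIT:
        return "hard"
    return "standard"


def group_by_bucket(images):
    """Return {bucket: [image ids]} so each bucket can be assigned separately."""
    groups = defaultdict(list)
    for image in images:
        groups[complexity_bucket(image)].append(image["id"])
    return dict(groups)


if __name__ == "__main__":
    print(group_by_bucket(IMAGES))
```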

2. Calibrate Your Ontology to Project Goals

- Why: Overly detailed ontologies lead to annotation overhead that may not significantly boost model performance. Conversely, an ontology that’s too coarse may yield incomplete data.

- How:

  - Evaluate if certain classes are truly needed. Remove or merge classes that cause confusion if they’re rarely used by your ML model.

  - Confirm label types (bounding box, polygon, mask) align with your model type. For broad object detection, bounding boxes might suffice. For pixel-level segmentation tasks, polygons or masks are necessary.

  - Pilot the ontology with a small batch of data and compare annotation time vs. model performance improvements.

3. Leverage Reviewer Insights Early

- Why: Reviewers detect patterns (like systematic mistakes) that annotators might not notice. Early feedback can halt poor labeling trends from scaling up.

- How:

  - Ask reviewers to record short notes or flags whenever they reject a label.

  - Periodically remind reviewers to maintain high standards rather than expedite approvals if a backlog grows.

  - Monitor rejection numbers daily or weekly to detect sudden changes in acceptance rates.
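
Monitoring for sudden changes can be as simple as comparing each day's rejection rate with the average of the preceding few days. In this sketch the three-day window and the 10-percentage-point threshold are assumptions picked for illustration.

```python
from statistics import mean


def sudden_rate_changes(daily_rates, window=3, threshold=0.10):
    """Flag day indices whose rejection rate differs from the mean of the
    previous `window` days by more than `threshold`."""
    flagged = []
    for day in range(window, len(daily_rates)):
        baseline = mean(daily_rates[day - window:day])
        if abs(daily_rates[day] - baseline) > threshold:
            flagged.append(day)
    return flagged


if __name__ == "__main__":
    # Hypothetical daily rejection rates (fraction of reviewed labels rejected).
    rates = [0.20, 0.22, 0.21, 0.19, 0.05, 0.06]
    print(sudden_rate_changes(rates))
```

A sharp drop deserves as much attention as a spike: it can mean annotators improved, or that reviewers are approving faster to clear a backlog.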

4. Track Collaborator Performance Holistically

- Why: A single “bad actor” can torpedo your project’s average quality, but data complexity can also mislead us into blaming the annotator.

- How:

  - Use a per-annotator dashboard to see average time spent, average rejection rate, and tasks completed.

  - Cross-reference tasks with the data subset complexity (are some annotators labeling advanced data types?).

  - Reassign tasks or redistribute workloads if one annotator is systematically overloaded with challenging tasks.
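
For redistributing workloads, one common heuristic (not something the webinar prescribes) is greedy longest-first assignment: sort tasks by estimated effort and always hand the next one to the least-loaded annotator. The effort estimates and names below are hypothetical.

```python
import heapq


def balance_workload(task_estimates, annotators):
    """Greedy longest-first assignment.

    task_estimates: {task_id: estimated_minutes}
    Returns {annotator: [task_id, ...]}.
    """
    # Min-heap of (current_load, annotator) so the least-loaded pops first.
    heap = [(0, name) for name in annotators]
    heapq.heapify(heap)
    assignment = {name: [] for name in annotators}

    by_effort = sorted(task_estimates.items(), key=lambda item: item[1], reverse=True)
    for task_id, minutes in by_effort:
        load, name = heapq.heappop(heap)
        assignment[name].append(task_id)
        heapq.heappush(heap, (load + minutes, name))
    return assignment


if __name__ == "__main__":
    estimates = {"a": 20, "b": 18, "c": 5, "d": 5, "e": 4, "f": 4}
    print(balance_workload(estimates, ["ana", "ben"]))
```

This does not guarantee an optimal split, but it keeps a single annotator from ending up with all of the multi-object scenes.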

5. Use Commenting and Issue Logs to Provide Context

- Why: Qualitative data helps clarify the “why” behind unexpected metrics.

- How:

  - Encourage annotators to raise issues in real time when they’re confused by the instructions.

  - Tag any repeated issues to see if a pattern emerges (e.g., “blurry image,” “too many objects,” “ontology mismatch”).

  - Integrate these logs into your pipeline so that you can refine instructions, retrain annotators, or discard problematic data.
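
When comments arrive as free text, a keyword map is a crude but serviceable first pass at tagging. The map below is a hypothetical starting point built from the example tags above; real comments will need a richer vocabulary or manual review.

```python
from collections import Counter

# Hypothetical keyword-to-tag map; extend it as new patterns appear.
TAG_KEYWORDS = {
    "blurry image": ("blurry", "out of focus"),
    "too many objects": ("too many", "crowded"),
    "ontology mismatch": ("ontology", "wrong class", "no matching class"),
}


def tag_comment(comment):
    """Return every tag whose keywords appear in the comment."""
    text = comment.lower()
    tags = [
        tag
        for tag, keywords in TAG_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]
    return tags or ["untagged"]


def tag_counts(comments):
    """Count how often each tag appears across all comments."""
    return Counter(tag for comment in comments for tag in tag_comment(comment))


if __name__ == "__main__":
    comments = [
        "Image is blurry and crowded",
        "No matching class for this vehicle",
        "Blurry, cannot tell pedestrian from cyclist",
    ]
    print(tag_counts(comments))
```

Comments that fall into "untagged" are worth reading by hand, since they often reveal categories the map is missing.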

6. Iterate with Batch Updates and Training

- Why: Large-scale annotation projects shouldn’t be one-and-done. Iterating in small steps keeps dozens of hours from being wasted on the wrong approach.

- How:

  - Annotate in smaller batches, then measure performance after each batch.

  - Adjust instructions, clarifying the most common mistakes from the previous batch.

  - If you can, train preliminary models on partial data to see how the labels hold up, and share those results with annotation teams.

Ready to Take the Next Step?

Building a robust, scalable data annotation pipeline relies on more than mere instructions and deadlines. High-quality data results from balancing label fidelity with timely feedback, efficient assignment strategies, and continuous iteration. By tracking the right metrics (throughput, label quality, annotator performance, rejection trends, and issues) and by systematically investigating anomalies, you can pre-empt most major labeling pitfalls.

Annotation analytics are key to reining in project costs, maintaining strong label quality, and ensuring your ML model learns from consistent and accurate data. The example from our self-driving car dataset highlights how easy it is to blame an annotator for slow performance when the real problem might be with data distribution or an overly detailed labeling approach. Armed with the right analytics dashboards and a willingness to iterate, you can steer your annotation projects toward success.

Discover how Encord’s annotation analytics can help your team gain real-time visibility into throughput, rejection patterns, and more.
