Announcing our Series C with $110M in total funding. Read more →.

Contents

What is Data Labeling for Machine Learning and Computer Vision?

Challenges of Scaling Data Labeling Operations

6 Best Practices to Implement Scalable Data Labeling Operations

Scaling Data Labeling Operations: Key Takeaways

Encord Blog

How to Scale Data Labeling Operations

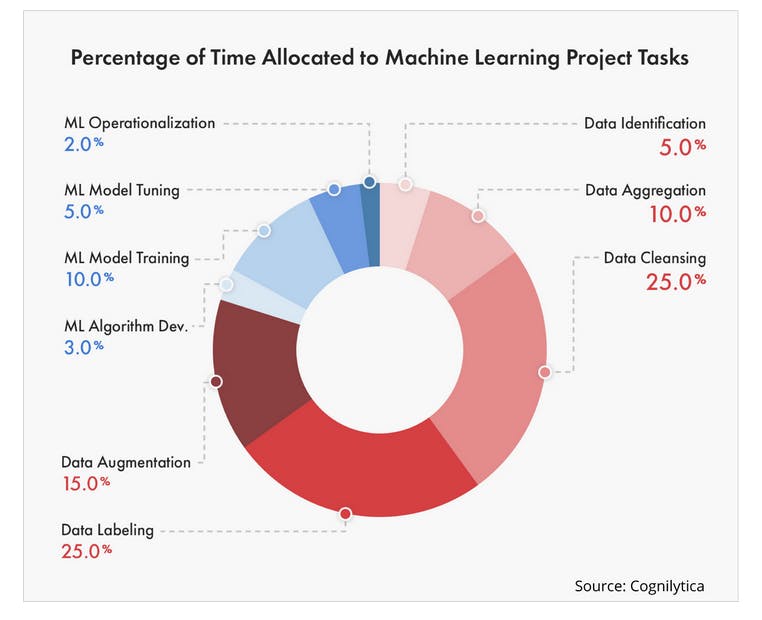

Data labeling operations are integral to the success of machine learning and computer vision projects. Data operation teams manage the entire end-to-end lifecycle of data labeling, including data sourcing, cleaning, and collaborating with ML teams to implement model training, quality assurance, and auditing workflows. Therefore to scale data labeling operations is crucial.

Behind the scenes, data operations teams ensure that artificial intelligence projects run smoothly.

As computer vision, machine learning, and deep learning projects scale and data volumes expand, it is critical that data ops teams grow, streamline, and adapt to meet the challenge of handling more labeling tasks.

In this article, we will cover 6 steps that data operations managers need to take to scale their teams and operational practices.

What is Data Labeling for Machine Learning and Computer Vision?

Data labeling or data annotation ⏤ the two terms that are often used synonymously, ⏤ is the act of applying labels and annotations to unlabeled data for the purpose of machine learning algorithms. Labels can be applied to various types of data, including images, video, text, and voice.

For the purpose of this article, we will focus on data labeling for computer vision use cases, in which labels are applied to images and videos to create high-quality training datasets for AI models.

Data labeling tasks could be as simple as applying a bounding box or polygon annotation with “cat” label or as complicated as microcellular labels applied to segmentations of tumors for a healthcare computer vision project.

Regardless of complexity, accuracy is essential in the labeling process to ensure high-quality training datasets and to optimize model performance.

Data labeling can be time-consuming and expensive. As such, companies must weigh the advantages and disadvantages of outsourcing or hiring in-house. While outsourcing is often more cost-effective, it comes with quality control concerns and data security risks. And, while in-house teams are expensive, they guarantee higher labeling quality and real-time insight into team members labeling tasks.

The quality of training data directly impacts the performance of machine learning algorithms.,, Ultimately, it comes down to the labeling quality, a responsibility entrusted to data labeling teams.

High-quality data requires a quality-centric data operations process with systems and management that can handle large volumes of labeling tasks for images or videos.

💡Find out more with Encord’s guides on How to choose the best datasets for machine learning and How to choose the right data for computer vision projects.

💡Find out more with Encord’s guides on How to choose the best datasets for machine learning and How to choose the right data for computer vision projects. Challenges of Scaling Data Labeling Operations

Data labeling is a time-consuming and resource-intensive function.

Data ops team members have to account for and manage everything from sourcing data to data cleaning, building and maintaining a data pipeline, quality assurance, and training a model using training, validation, and test sets.

Even with an automated data annotation tool, there is a lot for data ops managers to oversee.

There are several challenges that data labeling teams face when scaling:

- Project resources: Scaling requires additional resources and funding. Determining the best allocation of both can be a challenge

- Hiring and training: Hiring and training new team members require time and resources to align with project requirements and data quality standards. This forces teams to consider the options of outsourcing or managing teams in-house?

- Quality control: As the volume of data increases, maintaining How do we maintain high-quality labels becomes challenging.

- Workflow and data security: As data labeling tasks increase, it can be challenging to maintain data security, compliance, and audit trails.

- Annotation software: As image and video volumes increase, it can be challenging to manage projects. It is imperative to use the right tools, as teams can often benefit from the automation of data labeling tasks.

Let’s look at how to solve these challenges.

6 Best Practices to Implement Scalable Data Labeling Operations

Data operations teams are crucial for supporting data scientists and engineers.

Here are 6 best practices for managing and implementing data labeling operations at scale.

1. Design a workflow-centric process

Designing workflow-centric processes is crucial for any AI project. Data ops managers need to establish the data labeling project’s processes and workflows by creating standard operating procedures.

The support of senior leadership is vital to obtain the resources and budget to grow the data ops team, use the right tools, and employ a workforce for data labeling that can handle the volume needed.

2. Select an effective workforce for data labeling

To select the appropriate workforce for data labeling operations, there are three options available: an in-house team, outsourced labeling services, or a crowd-sourced labeling team.

The choice depends on several factors:

- Data volume

- Specialist knowledge

- Data security

- Cost considerations

- Management

In many cases, the benefits of using outsourced labeling service providers outweigh the associated risks and costs. In regulated sectors like healthcare, however, the use of in-house teams is often the only option given data security concerns and the highly specialized knowledge required.

Crowdsourcing through platforms like Amazon Mechanical Turk (MTurk) and SageMaker Ground Truth is another viable option for computer vision projects. Proper systems and processes, including workforce and workflow management and annotator training, are essential to the success of crowdsourcing or outsourcing.

3. Automate the data labeling process

Similar to the staffing question, there are three options for automating data labeling: in-house tools, open-source, or commercial annotation solutions such as Encord.

Open-source data labeling tools are suitable for projects with limited funding, such as academia or research, or for when a small team is building an MVP (minimum viable product) version of an AI model. These tools, however, often don’t meet the requirements for large-scale commercial projects.

Developing an in-house tool can be a time-consuming and costly endeavor, taking 9 to 18 months and involving significant R&D expenses.

In contrast, an off-the-shelf labeling platform can be quickly implemented. While pricing is higher than open-source (usually free for basic versions), it is cheaper than building an in-house data labeling tool.

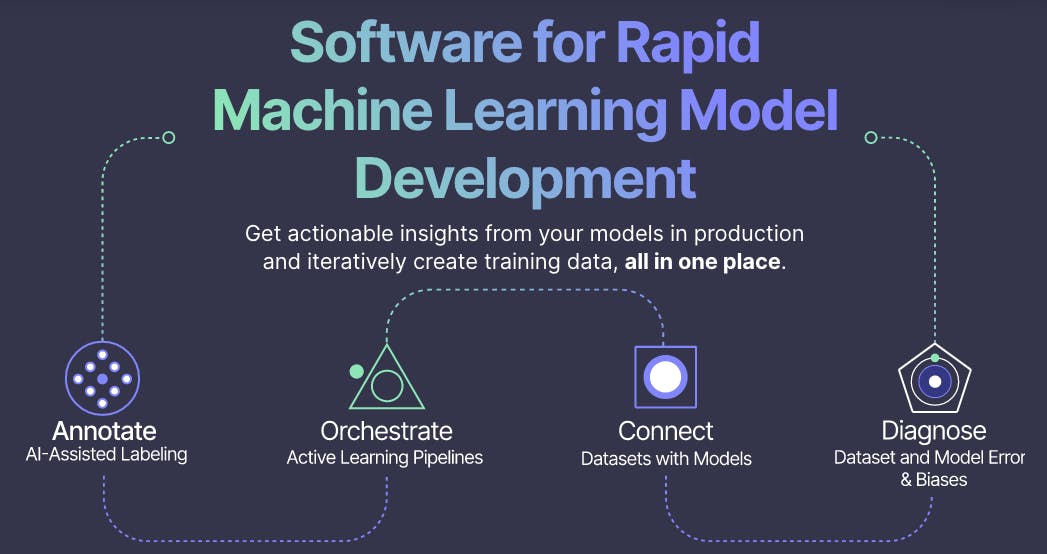

With an AI-assisted labeling and annotation platform, such as Encord, data ops teams can manage and scale the annotation workflows. The right tool also provides quality control mechanisms and training data-fixing solutions.

4. Leverage software principles for DataOps

Software development principles can be leveraged when scaling data labeling and training for a computer vision project.

Since data engineers, scientists, and analysts often engage in code-intensive tasks, integrating practices like continuous integration and delivery (CI/CD) and version control into data ops workflows is logical and advantageous.

5. Implement quality assurance (QA) iterative workflows

To ensure quality control and assurance at scale, it is crucial to establish a fast-moving and iterative process. One effective approach is to establish an active learning pipeline and dashboard. This allows data ops leaders to maintain tight control over quality at both a high-level and individual label level.

💡Here are our guides on 5 Ways to Improve The Quality of Data Labels and an Introduction to Quality Metrics in Computer Vision

💡Here are our guides on 5 Ways to Improve The Quality of Data Labels and an Introduction to Quality Metrics in Computer Vision 6. Ensure transparency and audibility in the data and labeling pipeline

Label transparency and audibility are essential throughout the data pipeline.

A clear, user-logged, and timestamped audit trail is critical for projects in secure or regulated sectors like healthcare where FDA compliance is required. With new AI laws likely to come into force worldwide in the next few years, a data labeling audit trail could also become mandatory for commercial AI models in non-regulated industries.

💡 Find out more with our Best Practice Guide for Computer Vision Data Operations Teams

💡 Find out more with our Best Practice Guide for Computer Vision Data Operations Teams Scaling Data Labeling Operations: Key Takeaways

High-quality training datasets are essential for optimizing model performance. The function of data operations teams is to ensure the labeling quality and labeling workflow are smooth and frictionless.

Follow these 6 best practices to scale your data operations properly:

- Design workflow-centric processes

- Select an effective workforce for data labeling

- Automate the data labeling process

- Leverage software principles for DataOps

- Implement QA iterative workflows

- Ensure transparency and audibility in the data and labeling pipeline

With an AI-powered annotation platform, data ops managers can oversee complex workflows, make annotation more efficient, and achieve labeling quality and productivity targets.

Sign-up for a free trial of Encord: The Data Engine for AI Model Development, used by the world’s pioneering computer vision teams.

Follow us on Twitter and LinkedIn for more content on computer vision, training data, and active learning.

Explore the platform

Data infrastructure for multimodal AI

Explore product

Frequently asked questions

Encord streamlines the data ingestion process by incorporating pre-labeling and automated labeling as part of the annotation flow. This allows users to efficiently prepare raw data for labeling by integrating machine-generated labels and automated quality assurance steps, ultimately enhancing the accuracy and speed of the labeling tasks.

Yes, Encord can support an incremental labeling pipeline, allowing users to sample and label images continuously. This capability is essential for teams looking to manage extensive datasets while ensuring that new objects are integrated smoothly into their training models.

Encord's editor supports a wide range of data modalities, including images, videos, and text documents such as PDFs. This flexibility allows users to perform various labeling tasks across different types of media, ensuring comprehensive coverage for all annotation needs.

Encord offers comprehensive support for ML ops pipelines, including pre-labeling and post-labeling needs. Our team has extensive experience in various use cases, enabling us to provide tailored insights and strategies that can optimize your machine learning workflows.

Encord allows users to set priority values for their data within workflows projects. This feature enables the most important data to be addressed first, ensuring that high-value labels are processed quicker, especially when utilizing Encord's labeling team.

Encord provides a robust platform designed to streamline data labeling operations, enabling teams to reduce costs and speed up deployment cycles. By leveraging our existing infrastructure, organizations can optimize their workflows and enhance efficiency.

Encord provides data labeling services that can scale with your needs. We can extract data from raw S3 buckets, draw bounding boxes over areas of interest, and organize the labeled data into clean S3 buckets according to your specifications.

Yes, Encord enables users to explore data at both the general data level and the individual label level. This dual approach allows for a more granular understanding of data distributions and facilitates targeted annotation efforts.

Encord ensures scalability by developing robust processes that can adapt to increasing data inflow from deployed cameras. Our team is prepared to handle the labeling needs of thousands of images as your operations expand.

Yes, Encord is built to handle large-scale data operations, making it suitable for organizations looking to expand their data infrastructure while entering new markets.