THE ENCORD ML TEAM PRESENTS

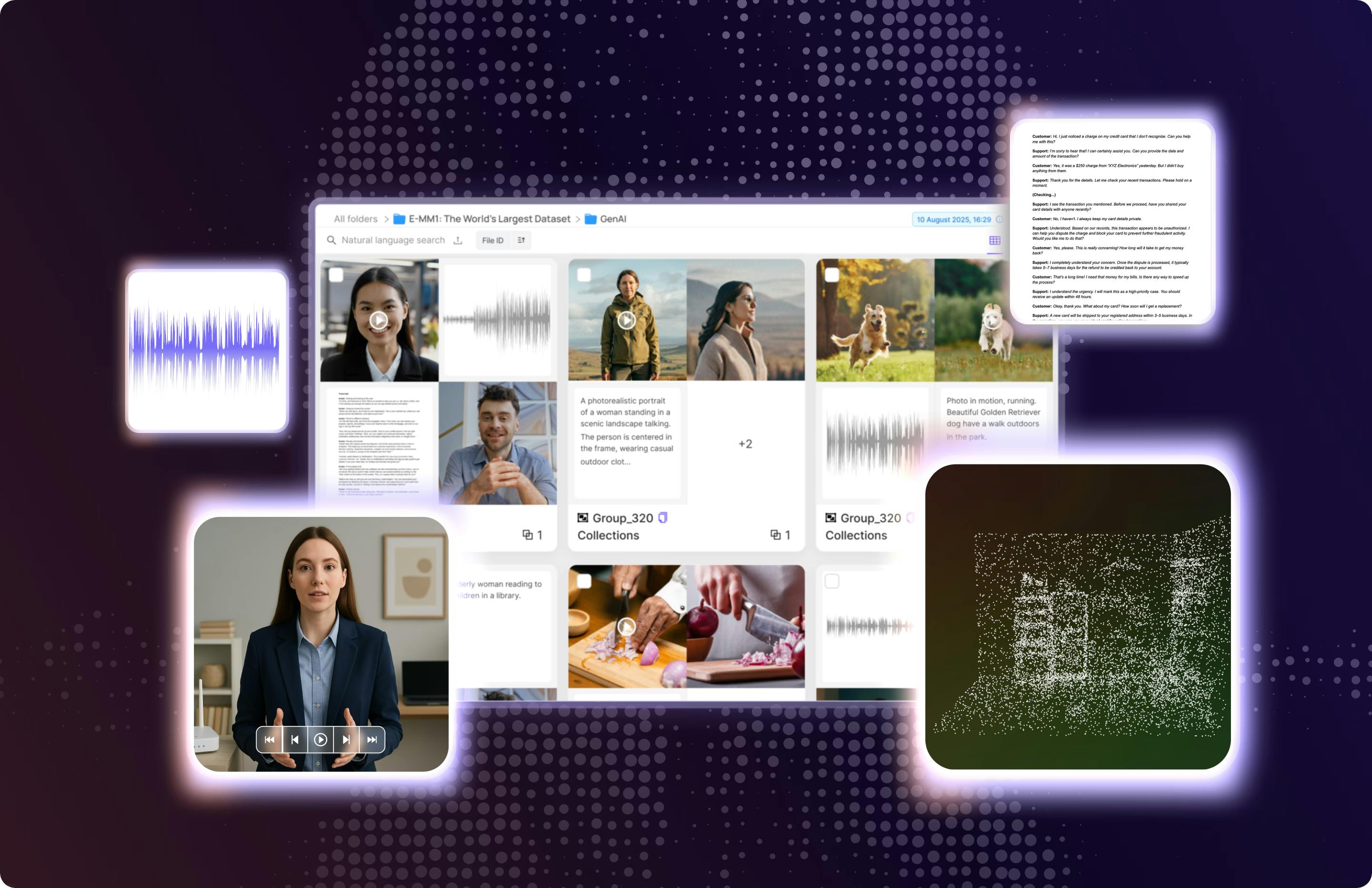

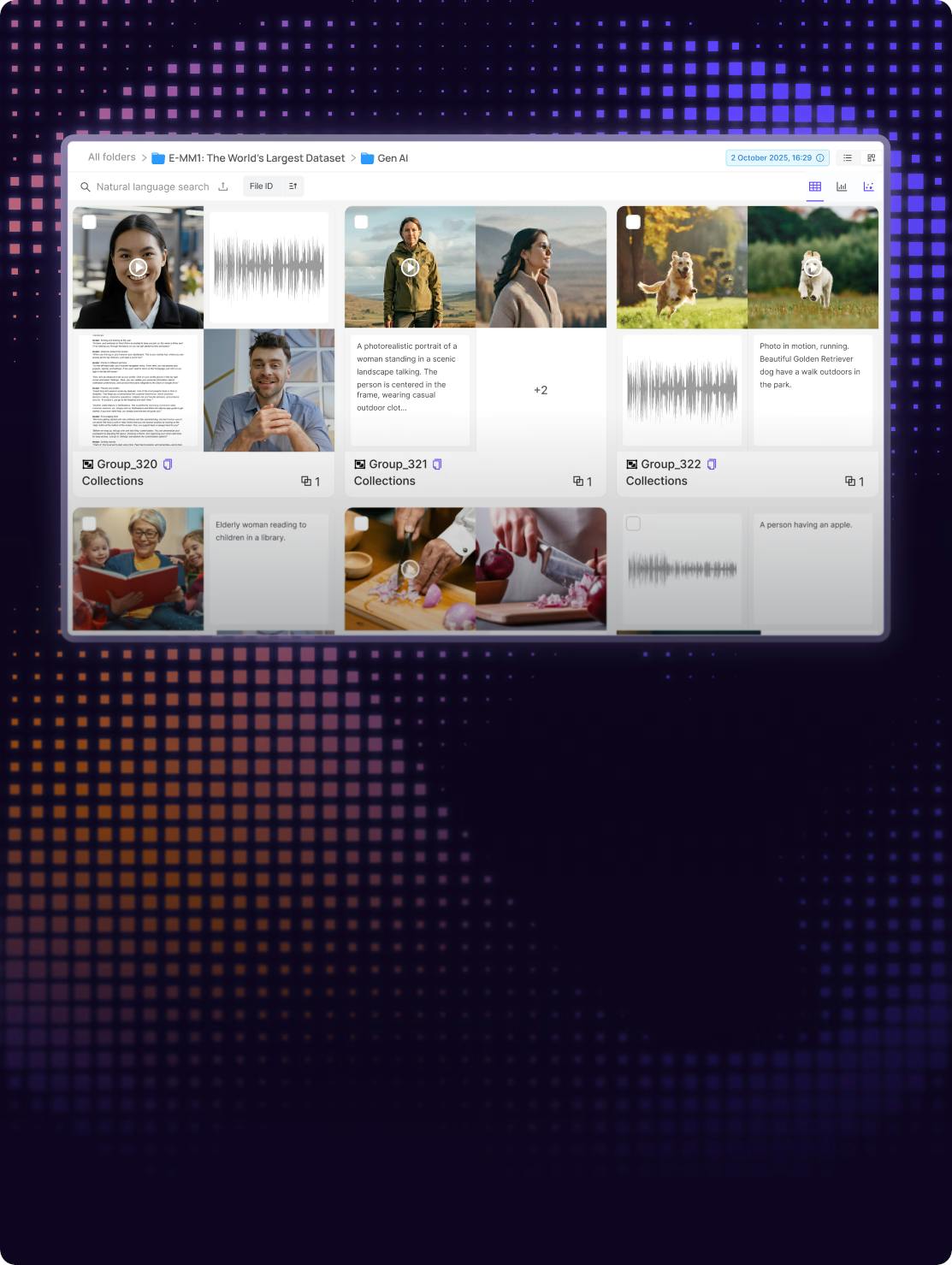

The World’s Largest Multimodal Dataset for Generative AI

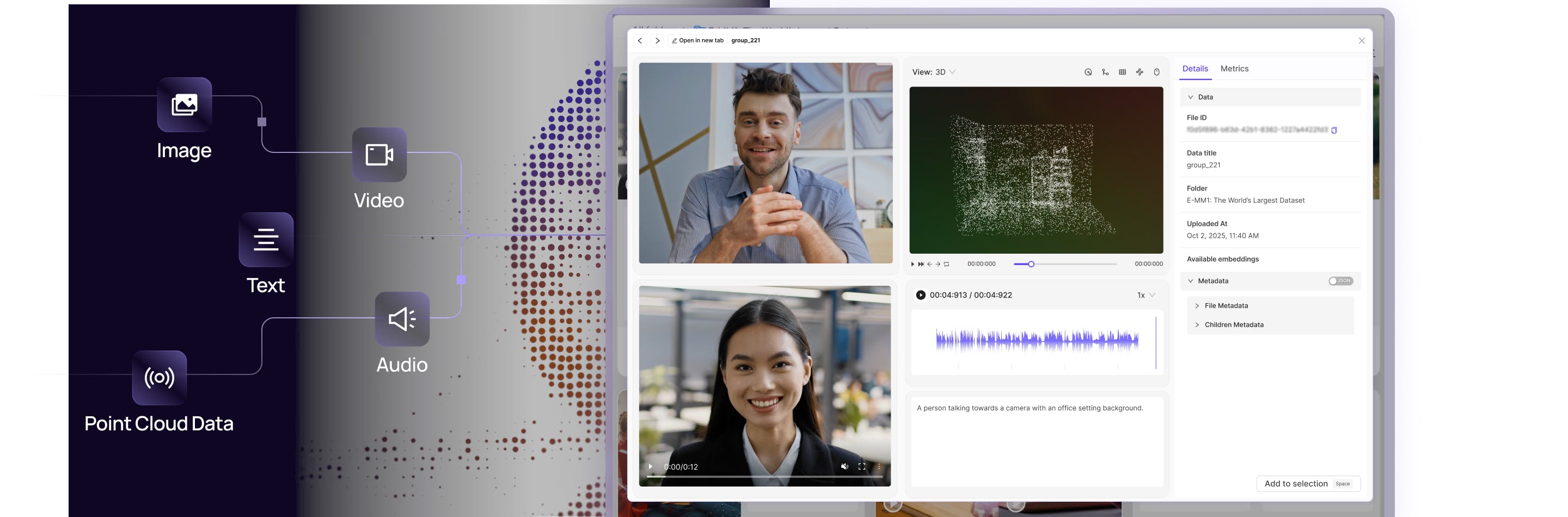

Encord has built a new, open-source dataset of images, video, text, audio, and point cloud embeddings for Generative AI teams to use. It is more than 10x the size of previous multimodal datasets.