Contents

Why 3D Object Detection Matters in Autonomous Driving

Sensor Modalities for 3D Object Detection

Model Architectures and Learning Paradigms

Datasets and Benchmarks

Deployment Challenges in Production ADAS

Encord Blog

3D Object Detection for Autonomous Vehicles: Models, Sensors, and Real-World Challenges


Autonomous vehicles move through physical space, so detecting and classifying objects is not enough; they also need spatial awareness. A vehicle must understand that the light at the intersection is red, and it must also keep enough distance from the car in front to come to a safe stop.

Therefore, 3D object detection is a cornerstone perception task for autonomous vehicles (AVs), enabling them to detect, classify, and track objects in space. Unlike 2D detection, which only localizes objects in the image plane, 3D detection provides a metric-scale understanding of the environment: where each object is, how large it is, and which way it is facing. This is critical for motion planning, collision avoidance, and decision-making.

This article dives deep into 3D object detection for autonomous vehicles, focusing on sensor modalities, model architectures, datasets, evaluation metrics, and deployment challenges.

If you are an ML or AI engineer working in ADAS and AV systems, this one is for you.

Why 3D Object Detection Matters in Autonomous Driving

In real-world driving, understanding where an object is in space matters just as much as knowing what it is. Let’s take the example of a stop sign. The system may be able to detect the sign, but what if it doesn’t know where to actually stop? The classification would no longer serve its purpose.

Or, in the case of adaptive cruise control, 3D detection gives the vehicle a precise estimate of the distance to the vehicle ahead, so it can choose an appropriate speed.

Accurate 3D detection enables:

- Precise distance and velocity estimation

- Safer path planning and control

- Robust obstacle avoidance in dense traffic

- Sensor fusion across camera, LiDAR, and radar

For ADAS features such as automatic emergency braking (AEB), adaptive cruise control (ACC), and lane keeping assist (LKA), even small localization errors can translate into unsafe behavior.
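To make the effect of a range error concrete, the sketch below compares the distance needed to stop with the gap to a stationary obstacle. The reaction time, deceleration, speed, and the 10% range overestimate are illustrative assumptions, not values from any ADAS specification.

```python
def stopping_distance(speed_mps: float,
                      reaction_s: float = 0.3,
                      decel_mps2: float = 6.0) -> float:
    """Distance covered during the reaction time plus constant-deceleration braking."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def can_stop_in_time(gap_m: float, speed_mps: float) -> bool:
    """True if the gap is at least the distance needed to stop."""
    return gap_m >= stopping_distance(speed_mps)


speed = 25.0                      # m/s, about 90 km/h (assumed)
true_gap = 58.0                   # metres to a stationary obstacle (assumed)
measured_gap = true_gap * 1.10    # detector overestimates range by 10% (assumed)

needed = stopping_distance(speed)
print(f"distance needed to stop: {needed:.1f} m")
print("true gap is sufficient:    ", can_stop_in_time(true_gap, speed))
print("measured gap is sufficient:", can_stop_in_time(measured_gap, speed))
```

Under these assumptions the vehicle needs a little under 60 m to stop. The true gap is too short, but the overestimated gap looks sufficient, so a planner trusting the detection would brake too late.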

Sensor Modalities for 3D Object Detection

Computer vision models for AVs and ADAS can be trained on image and video data alone. But when it comes to safe navigation, LiDAR and radar data are essential. Let’s explore the different modalities used for object detection in AVs.

LiDAR-Based Detection

LiDAR provides sparse but highly accurate depth measurements, making it the dominant sensing modality for 3D object detection in both research and production autonomous vehicle systems.

Camera-Based Detection

Camera-based 3D object detection is attractive for cost-sensitive ADAS systems, leveraging rich semantic information from RGB imagery but requiring learned depth inference.
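As a rough illustration of why camera pipelines depend on depth, the sketch below lifts a single pixel into 3D with the standard pinhole camera model. The intrinsics and the depth value are made up for the example; in a real system the depth would come from a learned depth estimator, which is not shown here.

```python
from typing import Tuple


def backproject_pixel(u: float, v: float, depth_m: float,
                      fx: float, fy: float,
                      cx: float, cy: float) -> Tuple[float, float, float]:
    """Lift pixel (u, v) with depth along the optical axis into the camera frame.

    Camera frame convention assumed here: x right, y down, z forward.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 1920x1080 camera.
fx = fy = 1000.0
cx, cy = 960.0, 540.0

# A pixel 200 px right of the principal point, predicted to be 20 m away.
point = backproject_pixel(1160.0, 540.0, 20.0, fx, fy, cx, cy)
print(point)
```

Applying this to every pixel of a predicted depth map yields the "pseudo-LiDAR" point cloud mentioned in the table below. Any error in the predicted depth scales the recovered x and y proportionally, which is why depth quality dominates camera-based 3D accuracy.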

Radar-Based Detection

Radar offers robustness in adverse weather conditions and provides direct velocity measurements, making it valuable for long-range perception in ADAS and AV systems.
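The "direct velocity measurement" comes from the Doppler effect: for a monostatic radar, the radial velocity is proportional to the measured frequency shift. A minimal sketch follows, assuming a 77 GHz carrier (a common automotive band) and the sign convention that a positive shift means an approaching target. The Doppler value itself is invented for the example.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def radial_velocity_mps(doppler_shift_hz: float,
                        carrier_hz: float = 77e9) -> float:
    """Radial velocity from Doppler shift: v = f_d * wavelength / 2.

    The factor of 2 accounts for the round trip of the radar signal.
    Positive result = approaching target (assumed convention).
    """
    wavelength_m = SPEED_OF_LIGHT_MPS / carrier_hz
    return doppler_shift_hz * wavelength_m / 2.0


velocity = radial_velocity_mps(5000.0)
print(f"{velocity:.2f} m/s")
```

Note that this gives only the velocity component along the line of sight. Recovering a full velocity vector requires tracking over time or fusion with other sensors.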

| Modality | LiDAR-Based Detection | Camera-Based Detection | Radar-Based Detection |
| --- | --- | --- | --- |
| Overview | Provides sparse but highly accurate depth measurements; dominant modality for 3D object detection in research and production AVs. | Cost-effective approach leveraging RGB imagery, requiring learned depth inference for 3D understanding. | Robust sensing modality that performs well in adverse weather and provides direct velocity measurements. |
| Common Representations | Raw point clouds (XYZ, intensity); voxelized point clouds; Bird’s Eye View (BEV) feature maps | Image-space features; multi-view feature volumes; predicted depth or pseudo-LiDAR | Range-Doppler maps; radar point detections; BEV radar feature maps |
| Typical Approaches / Models | VoxelNet, SECOND; PointPillars; PV-RCNN, CenterPoint | Depth estimation + 3D box regression; keypoint-based 3D detection; Transformer-based monocular/multi-view models | Classical clustering and tracking; learning-based radar detection; multi-modal fusion with camera or LiDAR |
| Strengths | High geometric and depth accuracy; lighting-invariant sensing | Low sensor cost; high semantic richness and spatial resolution | Robust to rain, fog, and low light; direct relative velocity estimation |

Model Architectures and Learning Paradigms

Anchor-Based vs Anchor-Free Detection

Anchor-based methods rely on a pre-defined set of 3D boxes (anchors) at different scales, aspect ratios, and orientations. During training, predictions are made relative to these anchors. They are well-studied, stable, and work well for object classes with consistent sizes, such as cars and pedestrians. However, they can miss irregularly sized or oriented objects.

Anchor-free models, on the other hand, predict object centers and dimensions directly, without pre-defined anchors. These methods are increasingly popular in both LiDAR and multi-view camera 3D detection. They simplify hyperparameter tuning and often generalise better to new object sizes and orientations, but they can be sensitive to object density in crowded scenes.
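"Predictions relative to anchors" usually means the network regresses residuals rather than absolute box parameters. The sketch below uses a residual encoding of the kind popularized by VoxelNet-style detectors. The anchor dimensions and the ground-truth box are illustrative assumptions, and real implementations differ in details such as how the angle is handled.

```python
import math
from typing import Tuple

# (x, y, z, length, width, height, yaw) in metres and radians
Box = Tuple[float, float, float, float, float, float, float]


def encode(gt: Box, anchor: Box) -> Box:
    """Turn a ground-truth box into regression targets relative to an anchor."""
    xg, yg, zg, lg, wg, hg, rg = gt
    xa, ya, za, la, wa, ha, ra = anchor
    diag = math.hypot(la, wa)  # normalizes the x/y offsets by anchor footprint
    return ((xg - xa) / diag, (yg - ya) / diag, (zg - za) / ha,
            math.log(lg / la), math.log(wg / wa), math.log(hg / ha),
            rg - ra)


def decode(residual: Box, anchor: Box) -> Box:
    """Invert encode(): recover an absolute box from predicted residuals."""
    dx, dy, dz, dl, dw, dh, dr = residual
    xa, ya, za, la, wa, ha, ra = anchor
    diag = math.hypot(la, wa)
    return (xa + dx * diag, ya + dy * diag, za + dz * ha,
            la * math.exp(dl), wa * math.exp(dw), ha * math.exp(dh),
            ra + dr)


car_anchor: Box = (20.0, 4.0, -1.0, 3.9, 1.6, 1.56, 0.0)   # assumed anchor
ground_truth: Box = (20.8, 3.7, -0.9, 4.3, 1.8, 1.5, 0.1)  # assumed label

targets = encode(ground_truth, car_anchor)
recovered = decode(targets, car_anchor)
print([round(t, 3) for t in targets])
```

The log-ratio size terms show why anchors suit consistently sized classes: the further an object's dimensions are from the anchor, the larger the residual the network has to regress.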

Source: Learning Salient Boundary Feature for Anchor-free Temporal Action Localization
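For the anchor-free case, a center-based detector typically predicts a heatmap over a grid and reads objects off its local maxima. The following is a minimal sketch of that peak-picking step on a hand-written heatmap; the threshold and the 3x3 neighbourhood are assumptions, and real detectors usually implement this with a max-pooling operation on tensors.

```python
from typing import List, Tuple


def find_peaks(heatmap: List[List[float]],
               threshold: float = 0.3) -> List[Tuple[int, int, float]]:
    """Return (row, col, score) for cells above threshold that are >= all neighbours."""
    rows, cols = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            score = heatmap[r][c]
            if score < threshold:
                continue
            neighbours = [heatmap[rr][cc]
                          for rr in range(max(0, r - 1), min(rows, r + 2))
                          for cc in range(max(0, c - 1), min(cols, c + 2))
                          if (rr, cc) != (r, c)]
            if all(score >= n for n in neighbours):
                peaks.append((r, c, score))
    return peaks


# Hypothetical center heatmap: one isolated object and two adjacent ones.
heatmap = [
    [0.0, 0.1, 0.0, 0.0, 0.0],
    [0.1, 0.9, 0.2, 0.0, 0.0],
    [0.0, 0.2, 0.1, 0.6, 0.7],
    [0.0, 0.0, 0.0, 0.5, 0.4],
]
peaks = find_peaks(heatmap)
print(peaks)
```

The cell scoring 0.6 is suppressed because its neighbour scores 0.7. If those were two distinct objects, one of them is lost, which illustrates the sensitivity to crowded scenes noted above.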

BEV-Centric Architectures

Bird’s Eye View (BEV) architectures convert sensor data into a top-down spatial representation, which simplifies geometric reasoning in 3D space. BEV makes fusion across sensors easier, improves object localization in metric space, and is dominant in production AV systems. However, these architectures require accurate calibration and projection, and performance can degrade with occluded or small objects.

Source: Vision-Centric BEV Perception: A Survey
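The top-down conversion can be sketched for the LiDAR case as a simple binning of points into ground-plane cells. The grid extent, cell size, and per-cell features (point count and maximum height) are assumptions chosen for illustration; learned BEV encoders compute much richer per-cell features.

```python
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]   # x forward, y left, z up, in metres
Cell = Tuple[int, int]


def points_to_bev(points: Iterable[Point],
                  x_range: Tuple[float, float] = (0.0, 40.0),
                  y_range: Tuple[float, float] = (-20.0, 20.0),
                  cell_m: float = 0.5) -> Dict[Cell, Dict[str, float]]:
    """Bin points into BEV cells, keeping a point count and max height per cell."""
    grid: Dict[Cell, Dict[str, float]] = {}
    for x, y, z in points:
        inside = x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]
        if not inside:
            continue  # drop points outside the region of interest
        ix = int((x - x_range[0]) // cell_m)
        iy = int((y - y_range[0]) // cell_m)
        cell = grid.setdefault((ix, iy), {"count": 0, "max_z": float("-inf")})
        cell["count"] += 1
        cell["max_z"] = max(cell["max_z"], z)
    return grid


cloud = [
    (10.2, 0.1, -1.0),    # two returns from the same object
    (10.4, 0.3, 0.5),
    (35.0, -19.9, 0.0),   # a far return near the grid edge
    (50.0, 0.0, 0.0),     # beyond the grid, ignored
]
bev = points_to_bev(cloud)
print(bev)
```

Because every sensor can be projected into the same metric grid, features from LiDAR, radar, and cameras can be stacked cell by cell, provided the calibration used for the projection is accurate.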

Transformers for 3D Detection

Transformers provide global context modeling and have started outperforming CNN-only pipelines in complex scenes. They enable strong global reasoning and improved occlusion handling but are computationally expensive and often need to be combined with BEV representations for efficiency.

Their main applications in 3D AV perception are:

- Multi-view camera fusion: Aggregates features from multiple cameras into a consistent 3D representation

- Spatio-temporal detection: Models object motion over time for improved tracking and prediction
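The core operation behind both applications is attention: a query (for example, a BEV cell) takes a weighted average of features from many tokens (for example, image patches from several cameras). Below is a minimal single-query, single-head sketch of scaled dot-product attention in plain Python, with tiny hand-written vectors standing in for learned features. It omits the learned projections, multiple heads, and positional encodings that real models use.

```python
import math
from typing import List, Tuple

Vector = List[float]


def attend(query: Vector, keys: List[Vector],
           values: List[Vector]) -> Tuple[Vector, List[float]]:
    """Scaled dot-product attention for one query; returns (output, weights)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)                            # subtract max for stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]          # softmax over tokens
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return output, weights


# One hypothetical BEV query attending over three camera tokens.
bev_query = [1.0, 0.0]
camera_keys = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]
camera_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

output, weights = attend(bev_query, camera_keys, camera_values)
print([round(w, 3) for w in weights])
```

Every query compares against every token, which is what gives transformers their global context and also their cost: the work grows with the product of the number of queries and tokens.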

Datasets and Benchmarks

High-quality datasets are critical for developing, training, and evaluating 3D object detection models in autonomous vehicles and ADAS systems. While public datasets provide valuable benchmarks, production-grade perception systems require scalable, high-fidelity annotated data that captures the long-tail edge cases encountered in real-world deployment.

Public Datasets

These remain essential for research, model comparison, and baseline evaluation:

- KITTI: Early benchmark dataset for LiDAR and camera-based 3D detection; small scale but well-annotated for cars, pedestrians, and cyclists.

- nuScenes: Multi-modal dataset including LiDAR, radar, and 360° camera coverage, annotated with 3D bounding boxes, velocity, and tracking information.

- Waymo Open Dataset: Large-scale LiDAR dataset covering urban and highway scenarios with highly diverse object instances.

- Argoverse 2: Designed for tracking and motion forecasting, with accurate 3D annotations and high-definition maps.
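Across these datasets, a 3D annotation boils down to a box with a class, a center, a size, an orientation, and sometimes a velocity. The dataclass below is a simplified, hypothetical schema for illustration only. It is not the format of any dataset listed above; nuScenes, for instance, stores orientation as a quaternion rather than a single yaw angle.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Box3D:
    """Simplified 3D box label (hypothetical schema, yaw-only orientation)."""
    label: str
    center: Tuple[float, float, float]      # x, y, z in metres
    size: Tuple[float, float, float]        # length, width, height in metres
    yaw: float                              # rotation about the up axis, radians
    velocity: Tuple[float, float] = (0.0, 0.0)  # vx, vy in m/s

    def bev_corners(self) -> List[Tuple[float, float]]:
        """Footprint corners in the ground plane, rotated by yaw."""
        cx, cy, _ = self.center
        length, width, _ = self.size
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        local = [(length / 2, width / 2), (length / 2, -width / 2),
                 (-length / 2, -width / 2), (-length / 2, width / 2)]
        return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in local]


car = Box3D(label="car", center=(10.0, 2.0, 0.0), size=(4.0, 2.0, 1.5), yaw=0.0)
print(car.bev_corners())
```

The footprint corners are the starting point for BEV overlap computations when comparing predictions against ground truth.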

Production-Grade Data with Encord

Public datasets provide a starting point, but real-world AV systems require proprietary datasets that reflect environmental diversity, sensor configurations, and rare edge cases.

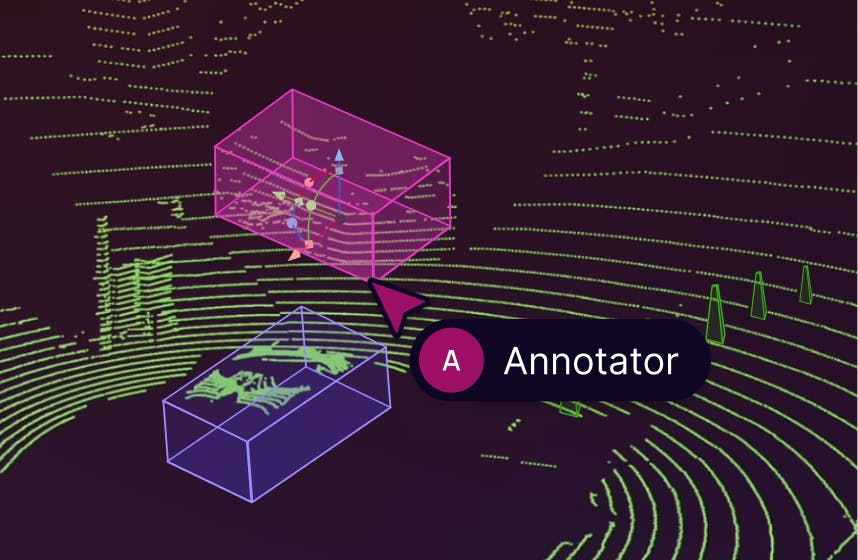

Encord enables engineers to scale high-quality annotation pipelines and annotate large volumes of LiDAR, radar, and multi-camera data efficiently. This includes customizing annotation schemas to track 3D bounding boxes, object velocity, lane information, and complex ADAS labels tailored to your system.

Encord also integrates active learning into the data pipeline with model-in-the-loop annotation to accelerate labeling while continuously improving dataset quality.

Explore why Human-in-the-Loop Is the Missing Link in Autonomous Vehicle Intelligence

With full versioning, QA pipelines, and metadata tracking, Encord helps create datasets that are auditable and production-ready. It supports label versioning via Active Collections and structured QA via workflows (including review and strict review). Encord also supports custom metadata schemas and recommends using them for data curation and discoverability.

By combining public benchmarks with Encord-powered datasets, AV teams can both compare against standard baselines and train perception models that handle real-world variability at scale.

Explore the 7 Best Data Labeling Platforms for 3D & LiDAR

Deployment Challenges in Production ADAS

Deploying AVs is one of the most challenging applications of physical AI because the safety implications are critical and, in most cases, everyday people are operating these systems.

Real-time inference constraints

ADAS and autonomous vehicle systems operate in highly dynamic, safety-critical environments, meaning that perception models must make accurate predictions within tens of milliseconds. This is essential for functions like braking, steering, and path planning. Achieving real-time inference often requires model-level and deployment-level optimizations, such as pruning, quantization, TensorRT acceleration, and feature compression in Bird’s Eye View representations.
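Of these optimizations, quantization is the easiest to show in a few lines. The sketch below applies symmetric per-tensor int8 quantization to a handful of made-up weights. It is a conceptual illustration only: it is not how TensorRT or any particular toolkit implements quantization, and production flows also calibrate activations.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map [-max_abs, max_abs] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values."""
    return [q * scale for q in quantized]


weights = [0.50, -1.27, 0.013, 0.0]          # hypothetical layer weights
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error bounded by half the scale. Whether that error is acceptable has to be checked against detection accuracy on a validation set.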

Sensor calibration drift

3D object detection models rely heavily on precise alignment between multiple sensors, including LiDAR, radar, and cameras. Even small drifts in intrinsic parameters (e.g., camera focal length) or extrinsic parameters (sensor-to-sensor alignment) can lead to significant errors in bounding box placement and distance estimation. Without proper calibration, ADAS features such as Lane Keeping Assist (LKA) or collision avoidance may become unreliable.
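A quick calculation shows how a small extrinsic error grows with distance. The sketch rotates a point straight ahead of the sensor by a yaw error and reports the resulting sideways displacement. The 0.5 degree drift is an assumed value for illustration.

```python
import math
from typing import Tuple


def apply_yaw(point_xy: Tuple[float, float], yaw_rad: float) -> Tuple[float, float]:
    """Rotate a ground-plane point about the sensor origin."""
    x, y = point_xy
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (x * c - y * s, x * s + y * c)


def lateral_error_m(range_m: float, yaw_error_deg: float) -> float:
    """Sideways shift of a point straight ahead caused by a yaw miscalibration."""
    _, y = apply_yaw((range_m, 0.0), math.radians(yaw_error_deg))
    return abs(y)


for range_m in (10.0, 50.0, 100.0):
    error = lateral_error_m(range_m, yaw_error_deg=0.5)
    print(f"{range_m:5.0f} m -> {error:.2f} m lateral error")
```

The error scales roughly linearly with range: under a tenth of a metre at 10 m, but close to 0.9 m at 100 m, which is enough to place a distant vehicle in the wrong part of its lane.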

Edge cases

ADAS must handle rare scenarios that are underrepresented in typical training datasets. Examples include atypical vehicles like tractors or scooters, pedestrians moving unpredictably, animals crossing roads, and partially occluded objects in complex environments. These edge cases often determine whether a system performs safely in real-world conditions. Addressing them requires targeted data collection and active learning pipelines that prioritize these instances.
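One common way to prioritize such instances is uncertainty sampling: send the frames the model is least sure about to annotators first. The sketch below ranks frames by the entropy of their predicted class probabilities. The frame names and probabilities are invented, and this is a generic heuristic rather than a description of any specific platform's active learning implementation.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy of a class distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(frame_probs: Dict[str, List[float]],
                        budget: int) -> List[str]:
    """Pick the `budget` frames with the most uncertain predictions."""
    ranked = sorted(frame_probs,
                    key=lambda frame: entropy(frame_probs[frame]),
                    reverse=True)
    return ranked[:budget]


# Hypothetical per-frame class probabilities (car, pedestrian, other).
predictions = {
    "frame_001": [0.97, 0.02, 0.01],   # confident
    "frame_002": [0.40, 0.35, 0.25],   # very uncertain
    "frame_003": [0.70, 0.20, 0.10],   # somewhat uncertain
}
selected = select_for_labeling(predictions, budget=2)
print(selected)
```

Uncertainty alone can miss cases where the model is confidently wrong, so it is usually combined with other signals such as disagreement between sensors or targeted scenario mining.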

Domain shift across geographies

Models trained in one geographic region may underperform when deployed elsewhere due to differences in road infrastructure, traffic patterns, weather, and vehicle types. Lane markings, signs, road density, and local driving behaviors can all introduce domain shifts that reduce model reliability. Mitigation strategies include domain adaptation techniques, incremental dataset collection, and fine-tuning on region-specific data. Platforms like Encord can accelerate this process by enabling scalable, region-specific annotation pipelines that produce high-quality, production-ready datasets.

3D object detection remains a fast-evolving field at the heart of autonomous driving. As sensors diversify and models grow more sophisticated, success increasingly depends on robust data pipelines, scalable annotation, and production-ready ML systems.

If you want to learn more about how Encord supports 3D object segmentation, visit our 3D & LiDAR page.