Encord Blog

Best Data Labeling Platform (2025 Buyer’s Guide)

In the AI development lifecycle, few tasks are as essential—and time-consuming—as data annotation. Whether you’re training a computer vision model, building a large language model, or developing domain-specific AI, the quality of your labeled data directly drives model performance.

With hundreds of tools on the market, choosing the best AI data annotation platform has never been more critical. In this guide, we compare the top platforms, highlight strengths and trade-offs, and help you decide what fits your workflow—whether you’re labeling medical images, autonomous-driving footage, or sensitive enterprise data.

Snapshot: the field in 2025

- Full-stack data ops wins. Platforms that combine labeling + curation + evaluation in one loop are pulling ahead—tight feedback loops beat tool sprawl. See Encord for a unified stack across Annotate and Active.

- Model-in-the-loop is table stakes. Pre-labels, active learning, and automation are now expected; examples include Encord, Labelbox Model-Assisted Labeling, Label Studio ML backends, and AWS Ground Truth automation.

- Governance matters. Expect RBAC, audit trails, encryption; regulated teams often require a HIPAA & SOC 2 posture.

Quick pick:

- Enterprise, multimodal, end-to-end → Encord for labeling, curation, and model evaluation in one platform (Annotate + Active), with HIPAA/SOC 2 security.

What actually makes a platform “best”

1) Model-in-the-loop → measurable throughput gains

Look for pre-labels and active learning you can wire into CI/CD. Test by pre-labeling 1k assets and comparing review time per asset against a manually labeled baseline.
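A pilot like this comes down to simple arithmetic on per-asset timings. The sketch below is one way to compute it; the function name and the sample timings are hypothetical, and it assumes you can export review time in seconds for both a manual batch and a pre-labeled batch.

```python
from statistics import mean


def throughput_gain(manual_seconds, prelabeled_seconds):
    """Return (mean manual time, mean pre-labeled time, fraction of time saved)."""
    if not manual_seconds or not prelabeled_seconds:
        raise ValueError("both timing samples must be non-empty")
    manual_avg = mean(manual_seconds)
    assisted_avg = mean(prelabeled_seconds)
    # Fraction of per-asset time saved; negative means pre-labels slowed reviewers down.
    saved = 1 - assisted_avg / manual_avg
    return manual_avg, assisted_avg, saved


# Hypothetical per-asset timings (seconds) from a small pilot.
manual = [40.0, 50.0, 60.0]
assisted = [20.0, 25.0, 30.0]

manual_avg, assisted_avg, saved = throughput_gain(manual, assisted)
print(f"{saved:.0%} less time per asset")
```

Means are sensitive to a few slow outliers, so on a real pilot it may be worth comparing medians as well.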

2) Quality & governance you can audit

You’ll want consensus/review stages, sampling, and audit trails—and external attestations if you’re handling PHI/PII (e.g., Encord HIPAA/SOC 2).
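To make the consensus stage concrete, here is a minimal sketch of a majority-vote check that flags low-agreement assets for review. It is not any vendor's implementation; the function name and the `min_agreement` threshold are assumptions chosen for illustration.

```python
from collections import Counter


def consensus(labels, min_agreement=0.66):
    """Majority-vote the labels several annotators gave to one asset.

    Returns (winning_label, agreement, needs_review), where agreement is the
    share of annotators who chose the winning label.
    """
    if not labels:
        raise ValueError("labels must be non-empty")
    winner, votes = Counter(labels).most_common(1)[0]
    agreement = votes / len(labels)
    # Assets below the threshold go to a reviewer instead of straight to training.
    return winner, agreement, agreement < min_agreement


label, agreement, needs_review = consensus(["cat", "cat", "dog"])
print(label, round(agreement, 2), needs_review)
```

Raw agreement is the simplest signal. Chance-corrected measures such as Cohen's kappa are stricter, and which one a platform exposes is worth checking during evaluation.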

3) Curation + evaluation in the same loop

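Catching data errors, drift, and blind spots before training saves cycles. Favor platforms that run curation and model evaluation alongside labeling, so issues found in evaluation feed straight back into the labeling queue.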

The comparison matrix for labeling platforms

| Platform | Best For | Modalities | AI Assist / Automation |
| --- | --- | --- | --- |
| Encord | Enterprise, multimodal, regulated data | Images, video, text, audio, DICOM/NIfTI, docs | AI-assisted labeling, active learning, and curation/eval |
| SuperAnnotate | Software + managed workforce | Image, video, text | Automation options; layered QA; services |
| Labelbox | Cloud-integrated CV/NLP pipelines | Image, video, text | Model-Assisted Labeling |
| CVAT | Free/open-source CV | Image, video | Manual + plugins |
| Lightly | Data curation, not labeling | Any files (curation) | Embedding-based selection |
| Label Studio | OSS, developer control | Image, text, audio, video | ML backends |
| V7 (Darwin) | Speedy CV segmentation | Image, video, bio | Auto-Annotate |
| SageMaker Ground Truth | AWS-aligned orgs | Image, text (AWS) | Automated labeling |
| Snorkel Flow | Programmatic labels | Text/LLM/CV | Rules + FM prompts |
| Prodigy | Small expert teams | NLP (+ CV/audio) | Active-learning recipes |
| Dataloop | Pipelines & ops | Multimodal | Pre-label Pipelines |
| Basic.ai | Platform + workforce | LiDAR, image, video | Workforce mgmt, QA |

Overviews

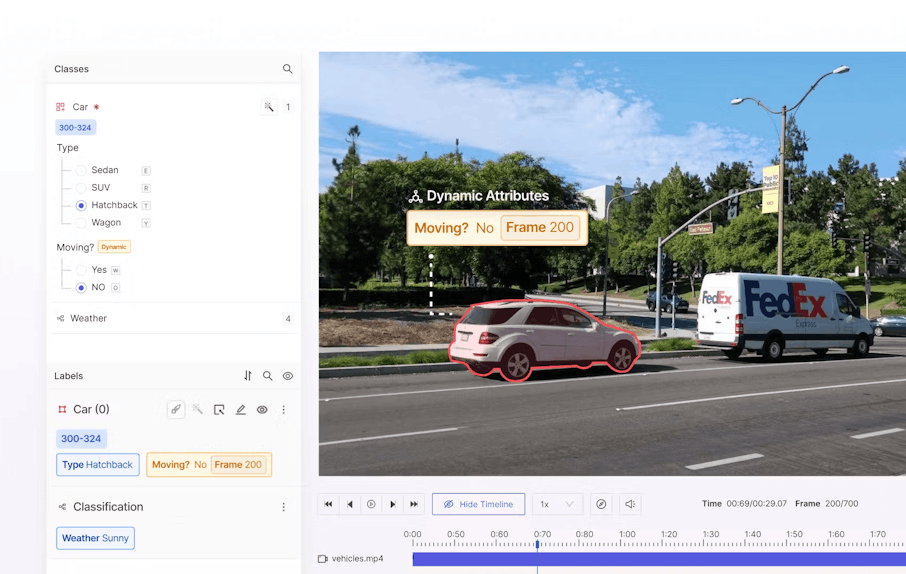

1) Encord — Best enterprise-grade platform for complex AI

What stands out: Annotate covers images, video, text, docs, and medical imaging (DICOM/NIfTI). Annotation is tied directly to Active for data curation, error discovery, and model evaluation—so you can close the loop in one place. Teams use review workflows, consensus checks, and analytics to keep quality high; HIPAA/SOC 2 supports sensitive industries. Index speeds large-scale data discovery, while Data Agents automate repetitive pipeline steps. If you need surge capacity, Accelerate provides vetted labeling services.

Best for: Regulated or multimodal programs that need measurable throughput and governance—healthcare AI, robotics/industrial, retail at scale.

2) SuperAnnotate — Designed for speed and team collaboration

What stands out: Visual project dashboards, layered QA, and the option to combine software with managed services. Strong for image/video and text, with real-time performance metrics.

Best for: Teams that want a single vendor for platform and workforce.

Watch-outs: Align on SLAs, instructions, and escalation paths so quality scales with volume.

3) Labelbox — Good for integrated cloud ML pipelines

What stands out: Mature CV/NLP interfaces, Model-Assisted Labeling, and broad cloud integrations. Advanced data slicing and QA help production teams ship faster.

Best for: Cloud-native teams running large CV workloads.

Watch-outs: Validate medical/DICOM requirements and whether you need a separate curation/eval layer.

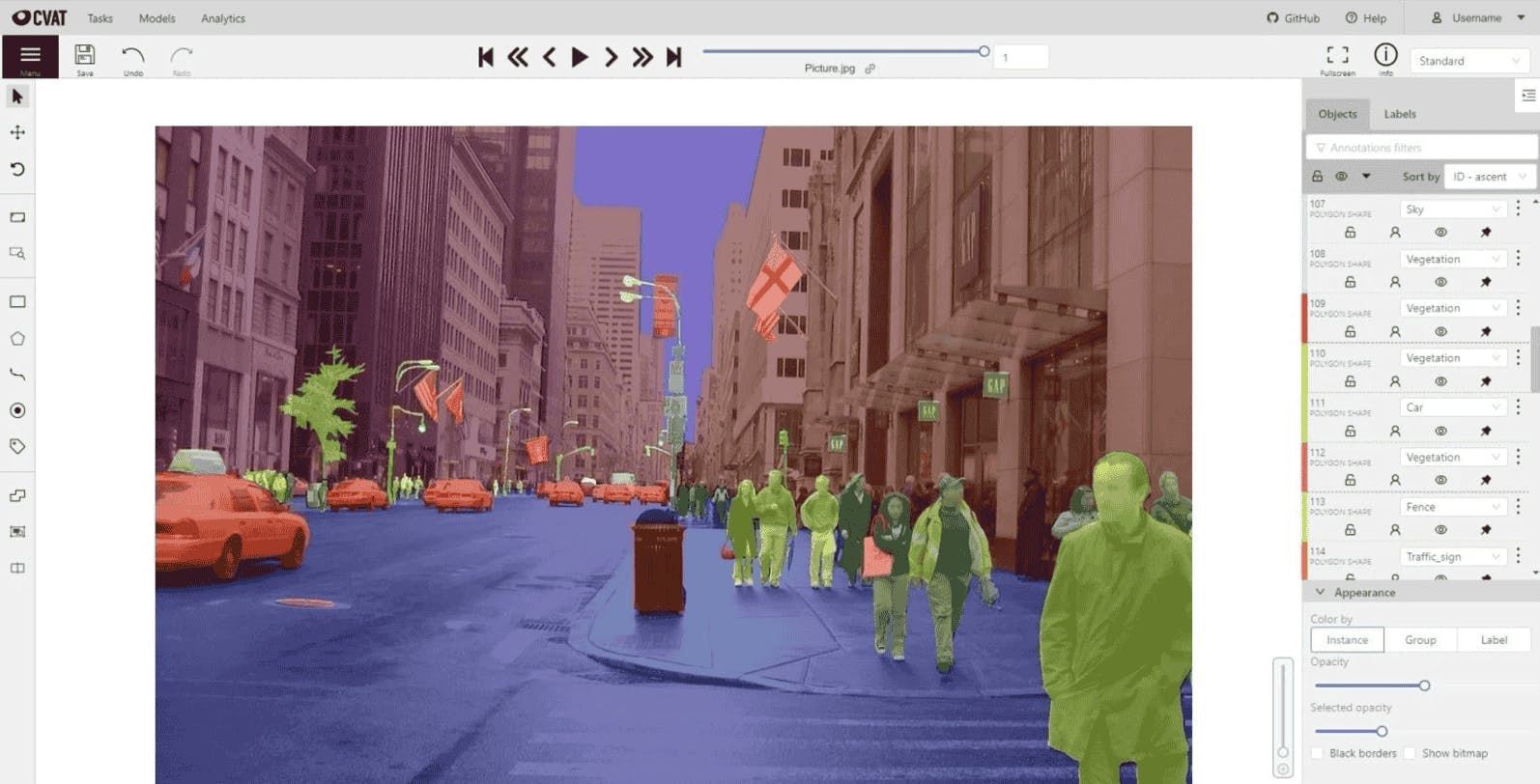

4) CVAT — Best open-source “get it done” annotator

What stands out: Free, battle-tested CV toolkit with a manual-first interface and plugin ecosystem. Easy to stand up for on-prem research or cost-sensitive teams.

Best for: Engineering-heavy teams who prefer self-hosting.

Watch-outs: Limited native QA and multimodal depth; you’ll own ops and governance.

5) Lightly — Curation (not labeling) that cuts waste

What stands out: Embedding-based selection to find the most valuable samples to label—reducing volume while preserving accuracy.

Best for: Teams drowning in redundant data who want to label less and learn more.

Watch-outs: Pair with a labeling platform (e.g., Encord or Labelbox) to complete the loop.
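For intuition on embedding-based selection, the sketch below uses greedy farthest-point (k-center) sampling, a common diversity heuristic. It is an illustration only, not Lightly's algorithm; the function name and the toy 2-D embeddings are hypothetical, and real embeddings would come from a vision or text encoder.

```python
import math


def farthest_point_selection(embeddings, k):
    """Greedily pick k indices so that each new pick is far from those already chosen."""
    if k <= 0 or not embeddings:
        return []
    k = min(k, len(embeddings))
    selected = [0]  # arbitrary starting sample
    # Distance from every point to its nearest selected point.
    nearest = [math.dist(e, embeddings[0]) for e in embeddings]
    nearest[0] = -1.0  # mark as already selected
    while len(selected) < k:
        idx = max(range(len(embeddings)), key=nearest.__getitem__)
        selected.append(idx)
        nearest = [
            min(d, math.dist(e, embeddings[idx]))
            for d, e in zip(nearest, embeddings)
        ]
        nearest[idx] = -1.0
    return selected


# Two tight clusters plus one isolated point.
embeddings = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (0.0, 5.0)]
print(farthest_point_selection(embeddings, 3))
```

With a budget of three, the selection takes one sample from each region instead of two near-duplicates from the same cluster, which is the "label less, learn more" effect in miniature.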

6) Label Studio — Open-source with strong developer support

What stands out: Flexible templates, ML backends, and webhooks let you bring your own model-in-the-loop.

Best for: Self-hosted pipelines, research, and regulated environments preferring OSS control.

Watch-outs: More setup/maintenance than SaaS; consider enterprise add-ons if you need governance.

7) V7 (Darwin) — Computer-vision velocity

What stands out: Auto-Annotate and SAM-style assists speed up box, polygon, and mask annotation; good UX for image/video segmentation.

Best for: Repetitive CV segmentation at volume.

Watch-outs: Validate medical/DICOM specifics and evaluation depth.

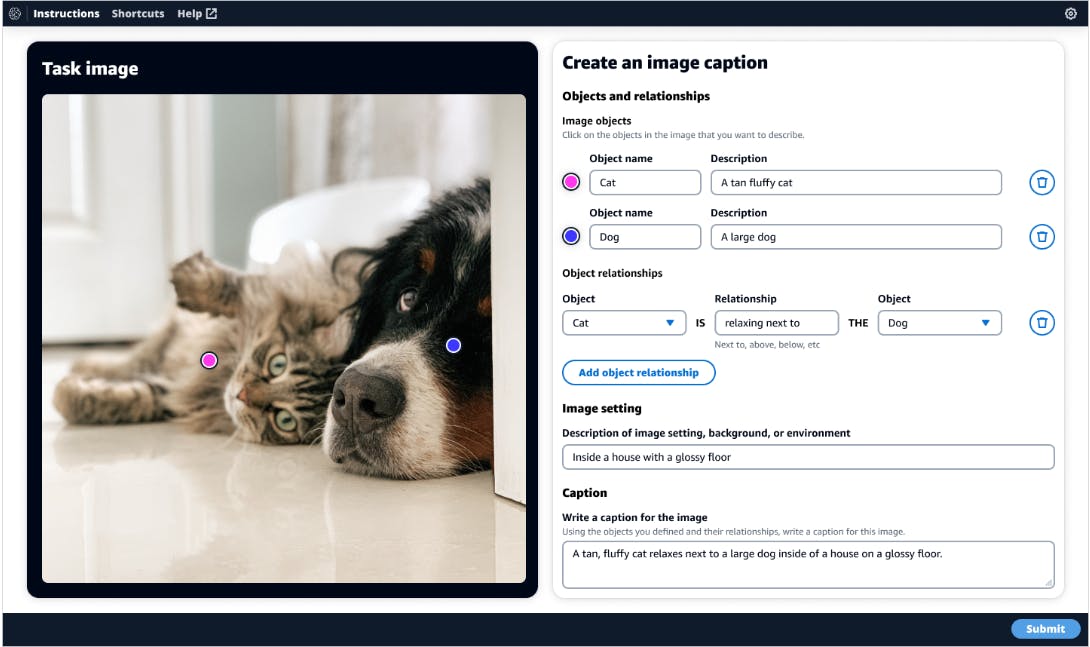

8) SageMaker Ground Truth — AWS all-in

What stands out: Automated labeling and annotation consolidation inside your AWS boundary; easy IAM alignment.

Best for: Teams standardized on AWS.

Watch-outs: UI depth and multimodal flexibility may require complementary tools.

9) Snorkel Flow — Programmatic labels that move the needle

What stands out: Encode SME rules and foundation-model prompts to generate labels, then iterate with guided error analysis.

Best for: LLM/RAG and large text classification tasks.

Watch-outs: Still plan for human review and an evaluation pass to manage bias.
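The general idea behind programmatic labeling can be sketched in a few lines of plain Python: small rule functions vote on each example, and ties or silence fall back to human review. This is not Snorkel Flow's API; the rule names, label names, and simple majority vote are all assumptions for illustration, and production systems typically weight rules by estimated accuracy.

```python
from collections import Counter

ABSTAIN = None  # a rule returns this when it has no opinion


def lf_refund(text):
    return "billing" if "refund" in text.lower() else ABSTAIN


def lf_invoice(text):
    return "billing" if "invoice" in text.lower() else ABSTAIN


def lf_password(text):
    return "account" if "password" in text.lower() else ABSTAIN


LABELING_FUNCTIONS = [lf_refund, lf_invoice, lf_password]


def weak_label(text, lfs=LABELING_FUNCTIONS):
    """Majority-vote the non-abstaining rules; abstain on ties or when no rule fires."""
    votes = [v for v in (lf(text) for lf in lfs) if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    (top, top_n), *rest = Counter(votes).most_common(2)
    if rest and rest[0][1] == top_n:
        return ABSTAIN  # conflicting rules: route to a human reviewer
    return top


for ticket in ["Please refund my invoice", "I forgot my password", "Hello there"]:
    print(ticket, "->", weak_label(ticket))
```

Examples where the rules abstain or conflict are exactly the ones the human review pass should see first.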

10) Prodigy — Fast expert loops

What stands out: Scriptable, developer-friendly labeling with active-learning recipes; great for small, high-skill teams.

Best for: NLP teams who want speed and local control.

Watch-outs: Not a full data-ops stack; pair with curation/eval.
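Active-learning loops of this kind usually rest on uncertainty sampling: send annotators the items the model is least sure about. The sketch below shows that idea for a binary classifier. It does not reproduce Prodigy's recipes; the function name and the scores are hypothetical.

```python
def most_uncertain(scores, n):
    """Return the ids of the n items whose positive-class probability is closest to 0.5."""
    ranked = sorted(scores, key=lambda item_id: abs(scores[item_id] - 0.5))
    return ranked[:n]


# Hypothetical model scores: item id -> predicted probability of the positive class.
scores = {"a": 0.97, "b": 0.52, "c": 0.10, "d": 0.45}
print(most_uncertain(scores, 2))
```

Confident predictions such as "a" and "c" are skipped, so expert time goes to the borderline cases that are most likely to change the model.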

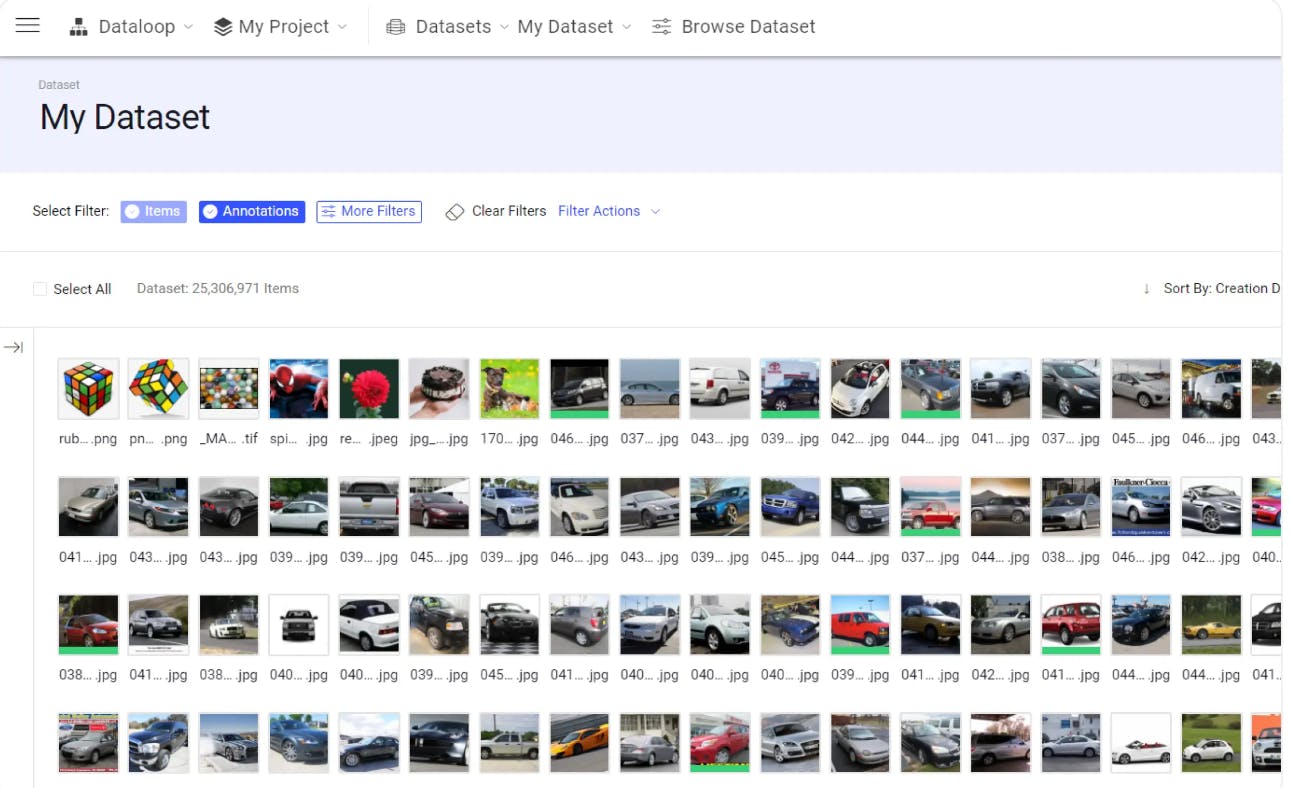

11) Dataloop — Pipelines first

What stands out: Pre-labeling pipelines and automation for always-on flows.

Best for: Continuous ingestion → labeling → training cycles.

Watch-outs: Validate advanced eval and cross-modal needs.

12) Basic.ai — Workforce + platform

What stands out: Combined software and workforce for LiDAR, image, and video; annotator training and performance management.

Best for: Companies that want to offload execution while maintaining tight QA.

Watch-outs: As with any managed workforce, define QA criteria and edge-case handling up front.

About Encord

Encord is a multimodal AI data platform to manage, curate, and annotate images, video, audio, documents, and medical imaging—with AI-assisted labeling, model evaluation & active learning, and enterprise security. Explore Annotate, Multimodal Data Management, Index, and Data Agents.

There is no universal best. Pick Encord, or consider SuperAnnotate, Labelbox, CVAT, or Label Studio, based on modality, compliance, and hosting needs.

Encord is a strong enterprise choice with multimodal workflows and evaluations. Validate against your compliance requirements.

Encord emphasizes human evaluation and model-assisted workflows for GenAI.

Ensuring high-quality data is often a challenge due to the trade-off between the time spent on labeling and the quality of the annotations. Teams may struggle to allocate sufficient time for thorough data review while also needing to focus on the development of features and models. It's crucial to balance these demands to maintain the integrity of the data.

Encord provides a seamless integration for remote labeling services, allowing teams to collaborate efficiently regardless of their location. The platform supports both in-house and third-party annotation workforces, enabling users to plug in their preferred labeling resources as they scale their data collection efforts.

Encord specializes in data labeling and annotation, providing tools for label editing, data management, and curation. The platform focuses on creating representative datasets and identifying edge cases that require labeling, ensuring a comprehensive approach to the data annotation process.

Encord offers a comprehensive platform for data labeling and model training, allowing teams to efficiently collect, label, and improve their datasets. The platform is designed to streamline the process of building and refining machine learning models, enhancing performance and accuracy.

Encord provides a robust data development platform specifically designed to help teams efficiently label data for captions and commands relevant to humanoid applications. This includes leveraging our experience in the physical AI domain to streamline the data preparation process.

Encord's platform can utilize both foundation models and user-generated models for pre-labeling tasks. This capability allows teams to streamline the labeling process by providing initial labels that can be refined by human annotators, especially for complex or challenging cases.

Encord offers a combination of data management solutions and annotation services. Customers can choose to integrate Encord with their internal tools or utilize our platform for streamlined data labeling, depending on their specific needs and workflows.

Encord integrates advanced semi-automation features that leverage foundation models to enhance the data labeling process. This allows teams to increase efficiency and accuracy when preparing training datasets for computer vision applications.