Encord Blog

Mistral Large Explained

Mistral AI made headlines with the release of Mistral 7B, an open-source model that competed with offerings from tech giants like OpenAI and Meta and outperformed larger models such as LLaMA 2 13B on several benchmarks. Now, in collaboration with Microsoft, the French AI startup has introduced Mistral Large, a significant step in both language model development and distribution.

What Is Mistral Large?

Mistral Large is Mistral AI's flagship language model, built for strong reasoning on complex multilingual tasks.

Fluent in English, French, Spanish, German, and Italian, it shows a nuanced grasp of each language. Its 32K-token context window allows precise recall of information from long documents, supporting accurate and contextually relevant text generation.
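To make the context-window limit concrete, here is a rough sketch for checking whether a prompt fits in a 32K-token window and for splitting an oversized document into chunks. It assumes about four characters per token, a common rule of thumb for English text (Mistral's actual tokenizer will give different counts), treats "32K" as 32,000 tokens, and reserves part of the window for the model's reply. All names are hypothetical.

```python
# Rough check of whether a prompt fits in Mistral Large's 32K-token window.
# ASSUMPTIONS (illustrative only): ~4 characters per token, and "32K" taken
# as 32,000 tokens. Mistral's real tokenizer will give different counts.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW = 32_000


def estimate_tokens(text: str) -> int:
    """Approximate token count: one token per CHARS_PER_TOKEN chars, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_output: int = 1_000,
                    window: int = CONTEXT_WINDOW) -> bool:
    """True if the estimated prompt plus tokens reserved for the reply fit the window."""
    return estimate_tokens(prompt) + reserved_output <= window


def split_to_fit(text: str, max_tokens: int) -> list[str]:
    """Cut text into consecutive chunks of at most max_tokens (estimated) each."""
    step = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + step] for i in range(0, len(text), step)]


print(fits_in_context("word " * 10_000))  # True (~12,500 estimated tokens)
print(fits_in_context("x" * 200_000))     # False (~50,000 estimated tokens)
```

Splitting on raw character offsets keeps the sketch short; a real pipeline would more likely split on paragraph or sentence boundaries and count tokens with the model's own tokenizer.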

Mistral Large is designed for retrieval augmented generation (RAG), in which facts retrieved from external knowledge bases are supplied to the model, improving both comprehension and precision. It also excels at instruction following and function calling, which lets developers define tailored moderation policies and build applications on top of it.
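The RAG pattern described above can be sketched in a few lines: score passages in a knowledge base against the question, keep the best matches, and place them in the prompt so the model answers from them. This is a minimal illustration under stated assumptions: the knowledge base is a tiny in-memory list, simple word-overlap scoring stands in for the embedding search a production system would typically use, the prompt template is hypothetical, and no call to Mistral Large is made.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, passage: str) -> int:
    """Number of word occurrences shared by query and passage (multiset overlap)."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum((q & p).values())


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages with the highest overlap score."""
    return sorted(passages, key=lambda p: score(query, p), reverse=True)[:k]


def build_rag_prompt(query: str, passages: list[str], k: int = 2) -> str:
    """Hypothetical template: retrieved context first, then the question."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Illustrative knowledge base built from facts stated in this article.
knowledge_base = [
    "Mistral Large has a 32K-token context window.",
    "Le Chat is Mistral AI's conversational interface.",
    "La Plateforme is hosted on Mistral's infrastructure in Europe.",
]
rag_prompt = build_rag_prompt(
    "How large is the context window of Mistral Large?", knowledge_base, k=1)
print(rag_prompt)  # this string would be sent to the model as the user message
```

Grounding the answer in retrieved text is what lets the model cite facts it was never trained on; swapping `score` for vector similarity changes retrieval quality but not the overall flow.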

Its strong performance on coding, math, and reasoning tasks makes it a notable option for natural language processing applications.

Key Attributes of Mistral Large

- Reasoning Capabilities:

Mistral Large showcases powerful reasoning capabilities, enabling it to excel in complex multilingual reasoning tasks. It stands out for its ability to understand, transform, and generate text with exceptional precision.

- Native Multilingual Proficiency:

With native fluency in English, French, Spanish, German, and Italian, Mistral Large demonstrates a nuanced understanding of grammar and cultural context across multiple languages.

- Enhanced Contextual Understanding:

Featuring a 32K-token context window, Mistral Large offers precise information recall from large documents, facilitating accurate and contextually relevant text generation.

Unlike Mistral 7B, the open-source LLM that gave state-of-the-art (SOTA) large language models stiff competition, Mistral Large is built for retrieval augmented generation (RAG). Facts retrieved from an external knowledge base are supplied to the model, grounding its understanding and improving the accuracy and contextual relevance of the text it generates.

- Instruction-Following

Mistral Large's precise instruction following lets developers design their own moderation policies, including system-level moderation; Mistral AI uses it this way to moderate Le Chat.

- Function Calling Capability

Mistral Large natively supports function calling: it can request calls to functions that developers define, which makes it easier to build applications and modernize tech stacks at scale. Combined with the constrained output mode on La Plateforme, this lets developers add advanced features and make interactions smoother.
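Function calling is easiest to understand from the application side: the model does not execute anything itself, it returns a request naming a function and its arguments, and your code runs the function and sends the result back. The sketch below assumes the JSON-schema tool format that Mistral's chat API shares with other OpenAI-compatible APIs (a `tools` list of `{"type": "function", "function": {...}}` entries, and replies carrying `tool_calls` whose `arguments` field is a JSON string); check the current API reference before relying on exact field names. `get_order_status`, its data, and the assistant reply are hypothetical and hand-written, not real model output.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical business function the model may ask to call."""
    fake_db = {"A100": "shipped", "B200": "processing"}  # illustrative data only
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


# Tool description sent with the chat request so the model knows what it may call.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

# Maps tool names the model may request to local Python callables.
REGISTRY = {"get_order_status": get_order_status}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each requested call and build 'tool' messages to send back."""
    results = []
    for call in assistant_message.get("tool_calls", []):
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "name": name,
            "tool_call_id": call["id"],
            "content": json.dumps(REGISTRY[name](**args)),
        })
    return results


# Simulated assistant reply (hand-written for illustration).
simulated_reply = {
    "role": "assistant",
    "content": "",
    "tool_calls": [{
        "id": "call_1",
        "function": {"name": "get_order_status", "arguments": '{"order_id": "A100"}'},
    }],
}
print(run_tool_calls(simulated_reply))
```

Keeping an explicit registry means the model can only trigger functions you have deliberately exposed, which matters once function calling is used to drive real systems.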

Performance Benchmark

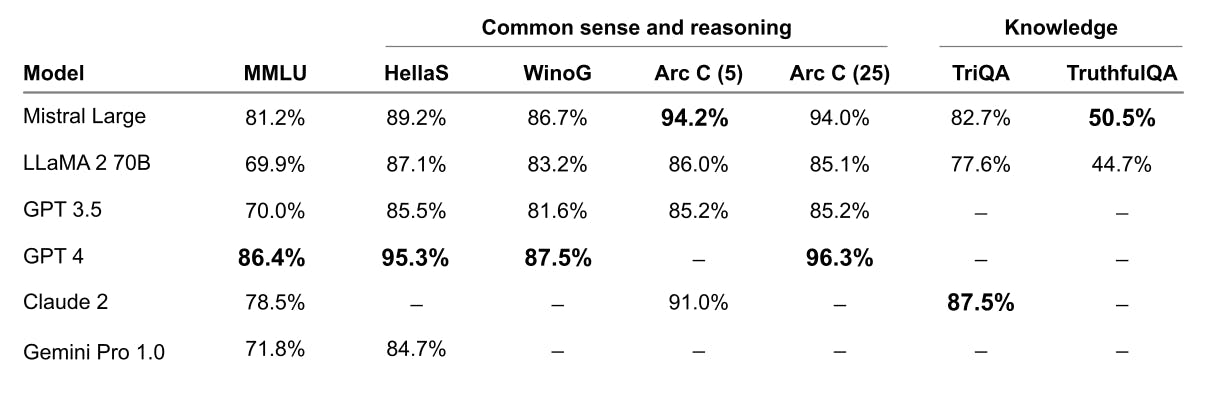

Mistral Large's performance is compared with that of other state-of-the-art LLMs on a range of commonly used benchmarks.

Reasoning and Knowledge

These benchmarks assess language understanding and reasoning. They include MMLU (Massive Multitask Language Understanding), few-shot evaluations in which the model sees a small number of solved examples before the test question (e.g., 5-shot and 10-shot settings), and question answering on datasets such as TriviaQA and TruthfulQA.
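As a rough illustration of what "5-shot" means, the sketch below builds a prompt from k solved examples followed by the unanswered question. The `Question:`/`Answer:` template and the toy arithmetic examples are assumptions for illustration; each benchmark harness uses its own formatting.

```python
def few_shot_prompt(examples: list[tuple[str, str]], question: str, k: int = 5) -> str:
    """Build a k-shot prompt: k solved examples, then the question to answer."""
    if len(examples) < k:
        raise ValueError(f"need at least {k} examples, got {len(examples)}")
    shots = [f"Question: {q}\nAnswer: {a}" for q, a in examples[:k]]
    # The final block leaves "Answer:" open for the model to complete.
    return "\n\n".join(shots + [f"Question: {question}\nAnswer:"])


# Hypothetical solved examples (toy arithmetic, not benchmark data).
examples = [(f"What is {i} + {i}?", str(2 * i)) for i in range(1, 6)]
prompt_5shot = few_shot_prompt(examples, "What is 7 + 7?", k=5)
print(prompt_5shot)
```

A 10-shot evaluation works the same way with `k=10`; more examples show the model the expected answer format more clearly but consume more of the context window.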

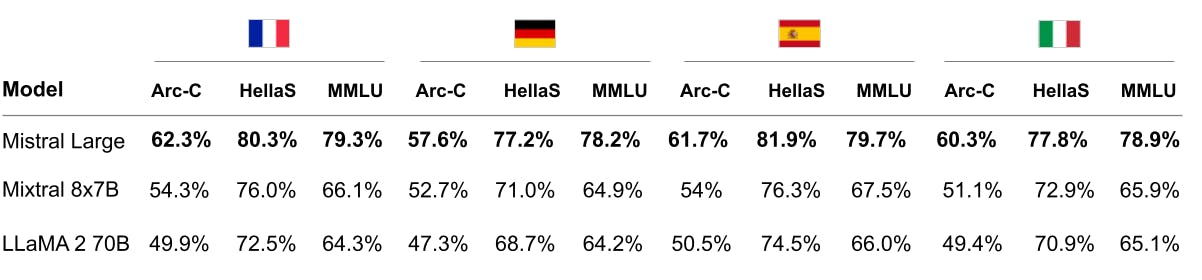

Multilingual Capabilities

Mistral Large's multilingual capability is benchmarked on HellaSwag, ARC Challenge, and MMLU in French, German, Spanish, and Italian, and its performance is compared with Mistral 7B and LLaMA 2. Mistral Large is not compared against the GPT series or Gemini, because those models have not published results for these four languages.

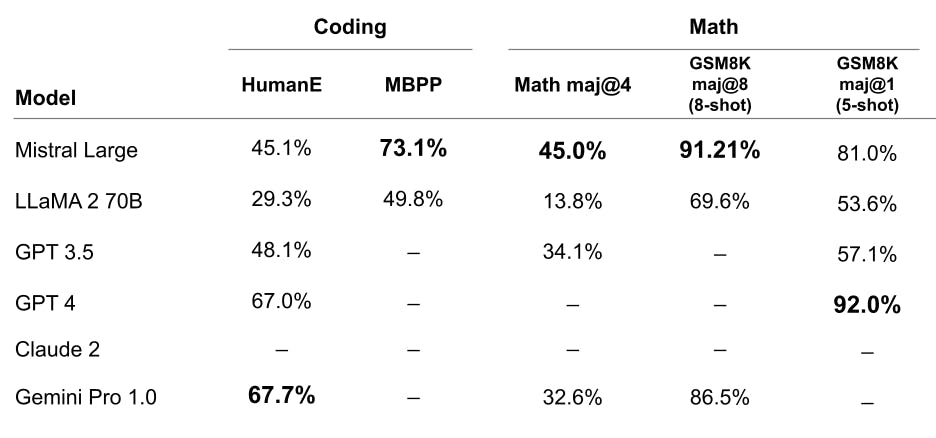

Maths and Coding

Mistral Large performs strongly across coding and math benchmarks. It achieves high pass rates on HumanEval and MBPP, which check whether model-generated Python code passes unit tests. On the Math benchmark it is evaluated with maj@4, that is, majority voting over four sampled answers, and it also scores well on GSM8K in few-shot settings. Together, these results point to solid problem-solving ability across diverse mathematical and coding challenges.
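The two scoring schemes mentioned above can be sketched briefly. For maj@k, the model samples k answers and the most frequent one is graded. For pass@k, the code below uses the unbiased estimator introduced alongside HumanEval, 1 - C(n-c, k) / C(n, k), where n samples are generated per problem and c of them pass the unit tests. Function names and sample values are illustrative; this is not Mistral AI's evaluation code.

```python
from collections import Counter
from math import comb


def majority_vote(answers: list[str]) -> str:
    """maj@k: the most frequent of k sampled final answers (ties go to the first seen)."""
    return Counter(answers).most_common(1)[0][0]


def maj_at_k_correct(samples: list[str], reference: str) -> bool:
    """A problem counts as solved if the majority answer matches the reference."""
    return majority_vote(samples) == reference


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which pass the unit tests."""
    if not 0 < k <= n:
        raise ValueError("require 0 < k <= n")
    # Probability that at least one of k samples drawn without replacement passes.
    return 1.0 - comb(n - c, k) / comb(n, k)


samples = ["42", "41", "42", "40"]    # four hypothetical sampled answers
print(majority_vote(samples))          # 42
print(pass_at_k(n=10, c=3, k=1))       # about 0.3
```

Averaging `maj_at_k_correct` or `pass_at_k` over every problem in a benchmark gives the headline score; with k = 1, pass@k reduces to the fraction of passing samples, c / n.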

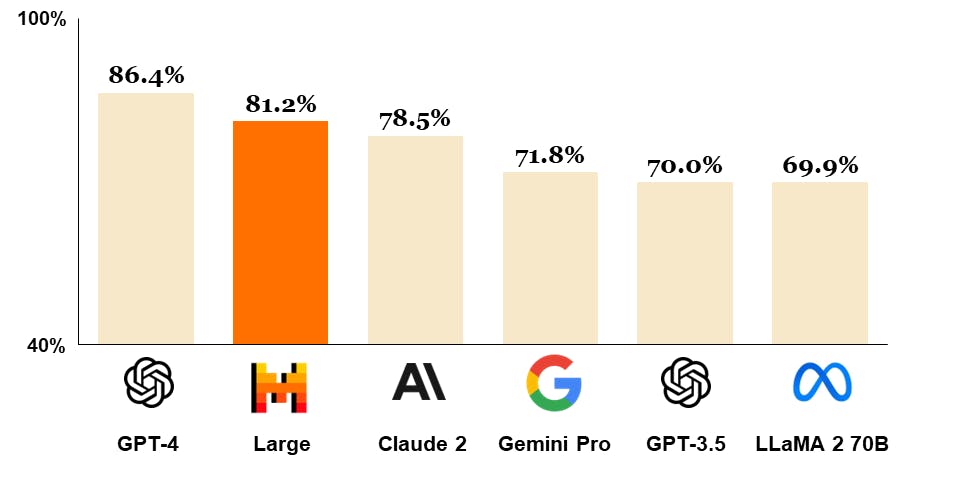

Comparison of Mistral Large with other SOTA Models

Mistral AI reports that Mistral Large performs strongly on widely recognized benchmarks, making it the second-ranked model generally available through an API, behind only GPT-4.

Mistral AI also provides detailed comparisons against other state-of-the-art (SOTA) models, including Claude 2, Gemini Pro 1.0, GPT-3.5, and LLaMA 2 70B, on benchmarks such as MMLU (Massive Multitask Language Understanding), where Mistral Large shows a competitive edge in natural language processing tasks.

Mistral Large: Platform Availability

- La Plateforme

Hosted securely on Mistral's infrastructure in Europe, La Plateforme offers developers access to a comprehensive array of models for developing applications and services. This platform provides a wide range of tools and resources to support different use cases.

- Le Chat

Le Chat is a conversational interface for interacting with Mistral AI's models, giving users a pedagogical and enjoyable way to explore the company's technology. It can use Mistral Large or Mistral Small, as well as a prototype model called Mistral Next, which is designed to give brief and concise answers.

- Microsoft Azure

Mistral AI has announced a partnership with Microsoft and made Mistral Large available in Azure AI Studio, with a user experience similar to that of Mistral's own APIs. Beta customers have already reported notable success using Mistral Large on Azure.

- Self-deployment

For sensitive use cases, Mistral Large can be deployed directly into the user's environment, granting access to model weights for enhanced control and customization.

Mistral Large on Microsoft Azure

Mistral Large is set to benefit significantly from Microsoft's multi-year partnership with Mistral AI, which covers three key areas:

- Supercomputing Infrastructure:

Microsoft Azure will provide Mistral AI with supercomputing infrastructure tailored for AI training and inference workloads, aimed at best-in-class performance and scalability for Mistral AI's flagship models like Mistral Large. This infrastructure will enable Mistral AI to handle complex AI tasks efficiently and effectively.

- Scale to Market:

Through Models as a Service (MaaS) in Azure AI Studio and the Azure Machine Learning model catalog, Mistral AI's premium models, including Mistral Large, will be available to Azure customers. The catalog offers a diverse selection of both open-source and commercial models. Customers can also use their Microsoft Azure Consumption Commitment (MACC) to purchase Mistral AI's models, making them easier to access and budget for.

- AI Research and Development:

Microsoft and Mistral AI will collaborate on AI research and development initiatives, including the exploration of training purpose-specific models for select customers. This collaboration extends to European public sector workloads, highlighting the potential for Mistral Large and other models to address specific customer needs and industry requirements effectively.

Mistral Small

Mistral Small, introduced alongside Mistral Large, is a new optimized model designed for low latency and cost-effectiveness. Mistral AI reports that it outperforms Mixtral 8x7B, its sparse mixture-of-experts network, while offering lower latency, positioning it between Mistral's open-weight offerings and its flagship model.

Mistral Small shares Mistral Large's RAG-enablement and function-calling capabilities.

To streamline its endpoint offering, Mistral AI is organizing its endpoints into two main categories:

- Open-weight Endpoints: These endpoints, named open-mistral-7b and open-mixtral-8x7b, offer competitive pricing and provide access to Mistral's open-weight models, catering to users seeking cost-effective solutions.

- New Optimized Model Endpoints: Mistral is introducing new optimized model endpoints, mistral-small-2402 and mistral-large-2402, for use cases that require optimized performance and cost efficiency. The mistral-medium endpoint remains available but will not be updated for now.
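To show how these endpoint names are used, here is a minimal sketch that builds, but does not send, a chat-completion request for La Plateforme using only Python's standard library. The URL, Bearer-token header, and `model`/`messages` fields follow Mistral's public chat API as commonly documented; the temperature, prompts, and `YOUR_API_KEY` placeholder are illustrative assumptions, and the 2402 model names may since have been renamed or retired, so check the current API reference. The system message is where a custom moderation policy, as described under instruction following, would go.

```python
import json
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"  # La Plateforme chat endpoint


def build_chat_request(model: str, system: str, user: str,
                       api_key: str) -> urllib.request.Request:
    """Assemble a POST request for one chat completion (not sent here)."""
    payload = {
        "model": model,  # e.g. "mistral-small-2402" or "mistral-large-2402"
        "messages": [
            {"role": "system", "content": system},  # policy / behavior instructions
            {"role": "user", "content": user},
        ],
        "temperature": 0.2,  # illustrative value
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = build_chat_request(
    "mistral-small-2402",
    "You are a concise assistant. Politely refuse requests for harmful content.",
    "Summarize retrieval augmented generation in one sentence.",
    api_key="YOUR_API_KEY",  # placeholder, never hard-code real keys
)
# urllib.request.urlopen(req) would send it; that step is intentionally omitted.
print(json.loads(req.data)["model"])
```

Switching between Mistral Small and Mistral Large is then just a change of the `model` string, which makes it easy to trade latency and cost against capability per request.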

Mistral Large: What’s Next?

- Multi-currency Pricing

Moving forward, Mistral AI is introducing multi-currency pricing for organization management, giving users the flexibility to transact in their preferred currency. This change aims to streamline payments and improve accessibility for users worldwide.

- Reduced End-point Latency

Mistral AI states that it is working to reduce the latency of all its endpoints. This should mean faster response times, smoother interactions, and better efficiency for users across various applications.

- La Plateforme Service Tier Updates

To improve its services, Mistral AI has updated the service tiers on La Plateforme. These updates aim to improve performance, reliability, and user satisfaction for those using Mistral AI's platform for their projects and applications.


Frequently Asked Questions

How can I access Mistral Large on Azure, and what does it cost?

To access Mistral Large on Azure, use the mistral-large-latest model endpoint. Pricing is based on token usage: $8 USD (€7.3 EUR) per 1M input tokens and $24 USD (€22 EUR) per 1M output tokens. Users subscribe to the endpoint and pay according to their usage.
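With separate input and output rates, the cost of a workload is each token count multiplied by its per-million price, divided by 1,000,000. For example, 10,000 input tokens and 2,000 output tokens cost 10,000 × $8 / 1M + 2,000 × $24 / 1M = $0.08 + $0.048 = $0.128. The sketch below encodes the prices quoted above; actual prices may change over time, and billing details such as rounding or minimum charges are not modeled.

```python
# Per-1M-token prices for Mistral Large as quoted above (subject to change).
PRICES_PER_MILLION = {
    "USD": {"input": 8.0, "output": 24.0},
    "EUR": {"input": 7.3, "output": 22.0},
}


def request_cost(input_tokens: int, output_tokens: int, currency: str = "USD") -> float:
    """Cost = tokens x (price per 1M tokens) / 1,000,000, summed over input and output."""
    p = PRICES_PER_MILLION[currency]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


print(f"${request_cost(10_000, 2_000):.3f}")         # $0.128
print(f"€{request_cost(10_000, 2_000, 'EUR'):.3f}")  # €0.117
```

Because output tokens cost three times as much as input tokens, capping response length is often the most direct way to control spend.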

Is Mistral Large available in Azure Machine Learning Studio?

Yes, Mistral Large is available in Azure Machine Learning Studio. Beta users can access it through the corresponding model endpoint, mistral-large-latest, and use its language processing capabilities for various tasks within the Azure Machine Learning Studio environment.

Is Mistral Large deployed the same way as Mistral AI's open-source models?

No. Mistral AI's open-source (OSS) models are deployed to GPU-backed VMs through Online Endpoints, whereas Mistral Large is provided as an API, offering a different way for users to access its capabilities.

Can Mistral Large be fine-tuned?

Yes, it is possible to fine-tune Mistral Large. Fine-tuning lets users adapt the model's parameters to specific tasks or datasets, improving its effectiveness for their applications.

Is Mistral Large open source?

No. Mistral AI previously released an open-source LLM, Mistral 7B, but Mistral Large is not openly available: it is offered with pay-as-you-go pricing, including through Mistral AI's partnership with Microsoft, and follows a different pricing model from Mistral 7B.