
Encord Blog

Comparative Analysis of YOLOv9 and YOLOv8 Using Custom Dataset on Encord Active

Even as foundation models gain popularity, advances in dedicated object detection models remain significant, and the YOLO family has long been a popular choice for real-time object detection.

Let’s train the latest iterations of the YOLO series, YOLOv9 and YOLOv8, on a custom dataset and compare their performance.

In this blog, we will train YOLOv9 and YOLOv8 on a small subset of the xView3 dataset. xView3 contains satellite synthetic aperture radar (SAR) imagery annotated for maritime object detection, which makes it a demanding test of the robustness and generalization of object detection models.

If you want to run the same comparison on your own data, you can curate and annotate a dataset with Encord Annotate. Once it is annotated, you can follow the code below to train and evaluate both YOLOv9 and YOLOv8 on your custom dataset.

Prerequisites

We are going to run our experiment on Google Colab. If you are working on your local system instead, bear in mind that the instructions and code were written to run in a Colab notebook.

Make sure you have access to a GPU. In Colab, navigate to Edit → Notebook settings → Hardware accelerator, set it to GPU, and then click Save. You can confirm that the GPU is available by running the command below.

!nvidia-smi

To make it easier to manage datasets, images, and models, we create a HOME constant.

```python
import os

HOME = os.getcwd()
print(HOME)
```

Train YOLOv9 on Encord Dataset

Install YOLOv9

```python
!git clone https://github.com/SkalskiP/yolov9.git
%cd yolov9
!pip install -r requirements.txt -q
!pip install -q roboflow encord av

# This is a convenience class that holds the info about Encord projects and makes everything easier.
# The class supports bounding boxes and polygons across images, image groups, and videos.
!wget 'https://gist.githubusercontent.com/frederik-encord/e3e469d4062a24589fcab4b816b0d6ec/raw/fa0bfb0f1c47db3497d281bd90dd2b8b471230d9/encord_to_roboflow_v1.py' -O encord_to_roboflow_v1.py
```

Imports

```python
from typing import Literal
from pathlib import Path

from IPython.display import Image
import roboflow
from encord import EncordUserClient
from encord_to_roboflow_v1 import ProjectConverter
```

Data Preparation

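Set up access to the Encord platform by creating an SSH key pair. The private key authenticates the Encord client; the public key (read into key_content) must first be added to your Encord account.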

```python
# Create an SSH key pair (only if it does not exist yet)
key_path = Path("../colab_key.pub")
if not key_path.is_file():
    !ssh-keygen -t ed25519 -f ../colab_key -N "" -q

key_content = key_path.read_text()
print(key_content)  # public key to register in your Encord account
```

We will now retrieve the data from Encord, convert it to the format required by YOLO, and store it on disk. Note that for larger projects, this step may run into disk-space limits.
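The YOLO label format stores one text file per image, with one line per object: `class_id x_center y_center width height`, all normalized to [0, 1]. As a minimal sketch of the core of such a conversion, the hypothetical `to_yolo_line` helper below turns a single bounding box into a label line. It assumes the box arrives as a dict with a normalized top-left corner (`x`, `y`) and size (`w`, `h`); that input shape is our assumption, and this is not the converter's actual code.

```python
def to_yolo_line(class_id: int, box: dict) -> str:
    """Hypothetical helper: convert a normalized top-left box {x, y, w, h} to a YOLO label line."""
    # Clip the box corners to the image so annotations spilling over the border stay valid
    x1 = max(box["x"], 0.0)
    y1 = max(box["y"], 0.0)
    x2 = min(box["x"] + box["w"], 1.0)
    y2 = min(box["y"] + box["h"], 1.0)
    width, height = x2 - x1, y2 - y1
    if width <= 0 or height <= 0:
        raise ValueError(f"box lies outside the image: {box}")
    # YOLO stores the box center rather than the top-left corner
    x_center = x1 + width / 2
    y_center = y1 + height / 2
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


print(to_yolo_line(0, {"x": 0.1, "y": 0.2, "w": 0.3, "h": 0.4}))
# → 0 0.250000 0.400000 0.300000 0.400000
```

Clipping the corners before computing the center keeps boxes that slightly overrun the image border inside [0, 1] without distorting their position.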

The converter will automatically split your dataset into training, validation, and test sets according to the fractions you pass in splits.

```python
# Directory for the exported images and labels
data_path = Path("../data")
data_path.mkdir(exist_ok=True)

# Authenticate with the private key created above
client = EncordUserClient.create_with_ssh_private_key(
    Path("../colab_key").resolve().read_text()
)

project_hash = "9ca5fc34-d26f-450f-b657-89ccb4fe2027"  # xView3 tiny; replace with your own project hash
encord_project = client.get_project(project_hash)

# Export the project in YOLO format and split it 50/10/40 into train/val/test
converter = ProjectConverter(
    encord_project,
    data_path,
)
dataset_yaml_file = converter.do_it(batch_size=500, splits={"train": 0.5, "val": 0.1, "test": 0.4})
encord_project_title = converter.title
```
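`converter.do_it` handles the split for you. For intuition, here is a minimal sketch of one common way to split items by fractions such as `{"train": 0.5, "val": 0.1, "test": 0.4}`: shuffle with a fixed seed, then cut the list at cumulative boundaries. This is not `ProjectConverter`'s actual implementation; `split_items` and its `seed` parameter are hypothetical.

```python
import random
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def split_items(items: Sequence[T], splits: Dict[str, float], seed: int = 42) -> Dict[str, List[T]]:
    """Hypothetical helper: shuffle items reproducibly and partition them by fraction."""
    if abs(sum(splits.values()) - 1.0) > 1e-9:
        raise ValueError("split fractions must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split on every run

    result: Dict[str, List[T]] = {}
    names = list(splits)
    start, cumulative = 0, 0.0
    for i, name in enumerate(names):
        cumulative += splits[name]
        # Cut at the rounded cumulative boundary; the last split takes whatever remains
        end = len(shuffled) if i == len(names) - 1 else round(cumulative * len(shuffled))
        result[name] = shuffled[start:end]
        start = end
    return result


parts = split_items(range(10), {"train": 0.5, "val": 0.1, "test": 0.4})
print({name: len(chunk) for name, chunk in parts.items()})  # → {'train': 5, 'val': 1, 'test': 4}
```

Rounding cumulative boundaries (rather than each split size separately) guarantees that every item lands in exactly one split.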

Download Model Weights

We will download the YOLOv9-e and gelan-c weights. Although the YOLOv9 paper also describes the yolov9-s and yolov9-m models, their weights were not yet available in the YOLOv9 repository at the time of writing.

```python
!mkdir -p {HOME}/weights
!wget -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/yolov9-e-converted.pt -O {HOME}/weights/yolov9-e.pt
!wget -P {HOME}/weights -q https://github.com/WongKinYiu/yolov9/releases/download/v0.1/gelan-c.pt
```

You can already run inference and evaluate object detection results with these YOLOv9 weights, which are pre-trained on COCO.

Train Custom YOLOv9 Model for Object Detection

We fine-tune a custom YOLOv9 model starting from the pre-trained gelan-c weights (GELAN-C is one of the architectures introduced in the YOLOv9 paper).

```python
!python train.py \
--batch 8 --epochs 20 --img 640 --device 0 --min-items 0 --close-mosaic 15 \
--data $dataset_yaml_file \
--weights {HOME}/weights/gelan-c.pt \
--cfg models/detect/gelan-c.yaml \
--hyp hyp.scratch-high.yaml
```

You can examine and validate your training results; the code for validation and for inference with the custom model is available in the Colab notebook. Here, we focus on comparing the performance of the two models.
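The `--close-mosaic 15` flag switches off mosaic augmentation for the final epochs of training. Assuming the YOLOv9 training script disables mosaic once the zero-indexed epoch equals `epochs - close_mosaic` (the same convention as the Ultralytics trainer, whose default `close_mosaic` is 10), the hypothetical helper below works out which epochs still use mosaic in the two 20-epoch runs in this post.

```python
def mosaic_epochs(epochs: int, close_mosaic: int) -> range:
    """Zero-indexed epochs that still train with mosaic augmentation.

    Assumption: mosaic is switched off at the epoch where epoch == epochs - close_mosaic.
    """
    switch_off = epochs - close_mosaic
    if not 0 <= switch_off < epochs:
        # The switch-off epoch is never reached (e.g. close_mosaic=0), so mosaic stays on throughout
        return range(epochs)
    return range(switch_off)


print(list(mosaic_epochs(20, 15)))  # YOLOv9 run → [0, 1, 2, 3, 4]
print(len(mosaic_epochs(20, 10)))   # YOLOv8 run, assuming the default close_mosaic=10 → 10
```

Under these assumptions, the YOLOv9 run trains with mosaic for only 5 of its 20 epochs, while the YOLOv8 run keeps it for 10, one of several training differences between the two setups.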

Converting Custom YOLOv9 Model Predictions to Encord Active Format

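```python
# get_latest_exp is a helper defined in the accompanying Colab notebook;
# it points to the most recent detection run, whose labels folder holds the predictions
pth = converter.create_encord_json_predictions(get_latest_exp("detect") / "labels", Path.cwd().parent)
print(f"Predictions exported to {pth}")
```

Download the predictions to your local computer and upload them to Encord Active through the UI to analyze the results.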
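The conversion step calls `get_latest_exp`, which the notebook defines but this post does not show. If you are following along outside the notebook, a hypothetical stand-in could look like the sketch below. It assumes runs are saved as `runs/<task>/<name>`, `<name>2`, `<name>3`, and so on, which is how the YOLOv9 repository (`exp`) and Ultralytics (`predict`) name successive runs by default; the `runs_dir` parameter is our own addition.

```python
from pathlib import Path
from typing import List, Optional, Tuple


def _run_number(name: str, prefix: str) -> Optional[int]:
    """Map 'exp' -> 1, 'exp2' -> 2, ...; return None for unrelated names."""
    if not name.startswith(prefix):
        return None
    suffix = name[len(prefix):]
    if suffix == "":
        return 1
    return int(suffix) if suffix.isdigit() else None


def get_latest_exp(task: str, ext: str = "exp", runs_dir: Path = Path("runs")) -> Path:
    """Hypothetical stand-in: newest run directory under runs_dir/task whose name starts with ext."""
    task_dir = Path(runs_dir) / task
    runs: List[Tuple[int, Path]] = []
    if task_dir.is_dir():
        for path in task_dir.iterdir():
            number = _run_number(path.name, ext)
            if number is not None and path.is_dir():
                runs.append((number, path))
    if not runs:
        raise FileNotFoundError(f"no '{ext}' runs found in {task_dir}")
    # The highest run number is the most recent run, because the suffix only ever increments
    return max(runs)[1]
```

With this in place, `get_latest_exp("detect") / "labels"` resolves to something like `runs/detect/exp3/labels`, and `get_latest_exp("detect", ext="predict")` to the newest `predict*` folder. Parsing the suffix numerically avoids the trap of string sorting, where `exp10` would sort before `exp2`.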

Moving on to training YOLOv8!

Train YOLOv8 on Encord Dataset

Install YOLOv8

```python
!pip install ultralytics==8.0.196

from IPython import display
display.clear_output()

import ultralytics
ultralytics.checks()
```

Dataset Preparation

As we are doing a comparative analysis of two models, we will use the same dataset to train YOLOv8.

Train Custom YOLOv8 Model for Object Detection

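```python
from ultralytics import YOLO

model = YOLO('yolov8n.pt')  # load a pretrained YOLOv8n detection model
model.train(data=dataset_yaml_file.as_posix(), epochs=20)  # train the model

# Run inference on the test images. save_txt/save_conf write YOLO-format label files
# (including confidences), which the conversion step below reads.
# test_images_dir is a placeholder for the path to your test split.
model.predict(source=test_images_dir, save_txt=True, save_conf=True)
```

The full code for running inference on the test dataset is available in the Colab notebook.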

Converting Custom YOLOv8 Model Predictions to Encord Active Format

```python
pth = converter.create_encord_json_predictions(get_latest_exp("detect", ext="predict") / "labels", Path.cwd().parent)
print(f"Predictions exported to {pth}")
```

Download this JSON file and upload it to Encord Active via the UI.
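`create_encord_json_predictions` reads the per-image label files written during inference. Each line holds `class x_center y_center width height` (normalized), followed by a confidence score when predictions are saved with `save_conf=True` in Ultralytics or `--save-conf` in the YOLOv9 repository's `detect.py`. A minimal sketch of the parsing half of such a converter, assuming that six-field layout; `Detection`, `parse_prediction_line`, and `read_prediction_file` are hypothetical names, and the real converter may work differently.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import List


@dataclass
class Detection:
    class_id: int
    x: float  # normalized top-left x
    y: float  # normalized top-left y
    w: float  # normalized width
    h: float  # normalized height
    confidence: float


def parse_prediction_line(line: str) -> Detection:
    """Parse 'class x_center y_center width height confidence' (all coordinates normalized)."""
    fields = line.split()
    if len(fields) != 6:
        raise ValueError(f"expected 6 fields (labels saved with confidences), got {len(fields)}")
    x_center, y_center, w, h, confidence = map(float, fields[1:])
    # Shift from the box center back to its top-left corner
    return Detection(int(fields[0]), x_center - w / 2, y_center - h / 2, w, h, confidence)


def read_prediction_file(path: Path) -> List[Detection]:
    """Read every non-empty line of one per-image label file."""
    return [parse_prediction_line(line) for line in path.read_text().splitlines() if line.strip()]


print(parse_prediction_line("2 0.5 0.5 0.2 0.4 0.87"))
```

Failing loudly on five-field lines is deliberate: labels saved without confidences would otherwise be imported as predictions with no score, which silently breaks precision-recall analysis.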

Comparative Analysis on Encord Active

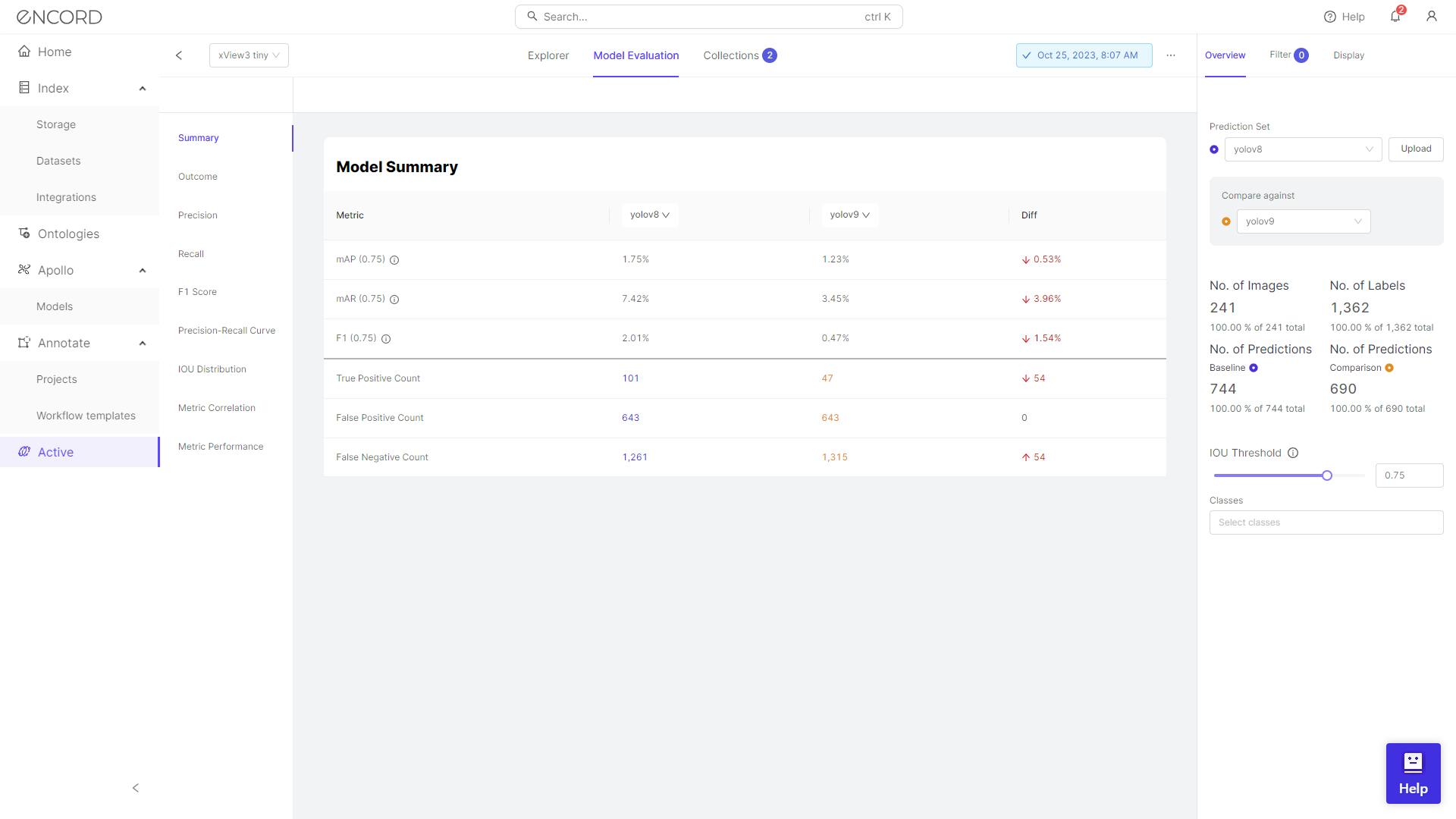

In Encord Active, under the Model Evaluation tab, you can compare the predictions of both models.

You can conveniently navigate to the Model Summary tab to view the Mean Average Precision (mAP), Mean Average Recall (mAR), and F1 score for both models. Additionally, you can compare the differences in predictions between YOLOv8 and YOLOv9.

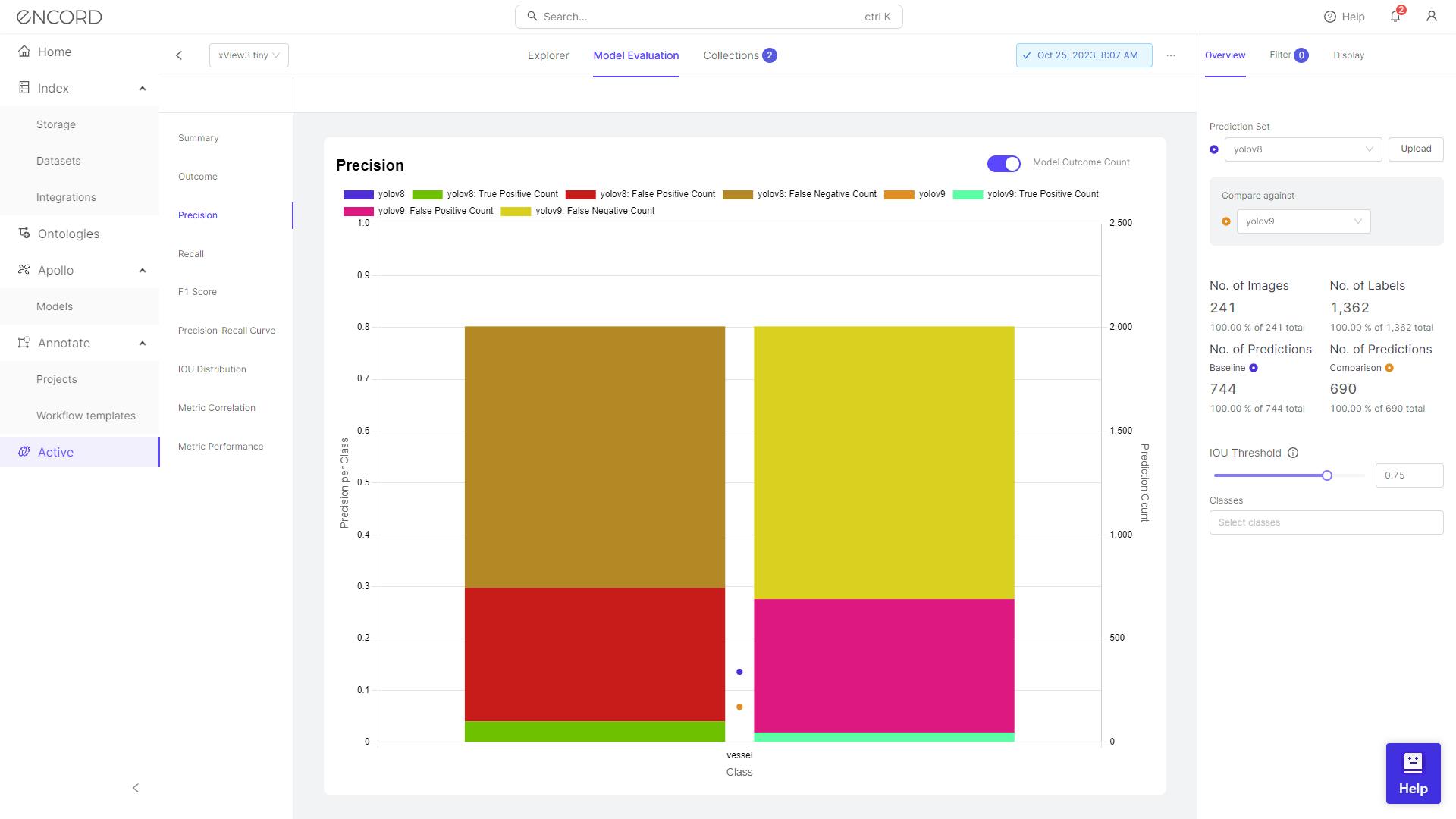

Precision

YOLOv8 may excel in correctly identifying objects (high true positive count) but at the risk of also detecting objects that aren't present (high false positive count). On the other hand, YOLOv9 may be more conservative in its detections (lower false positive count) but could potentially miss some instances of objects (higher false negative count).

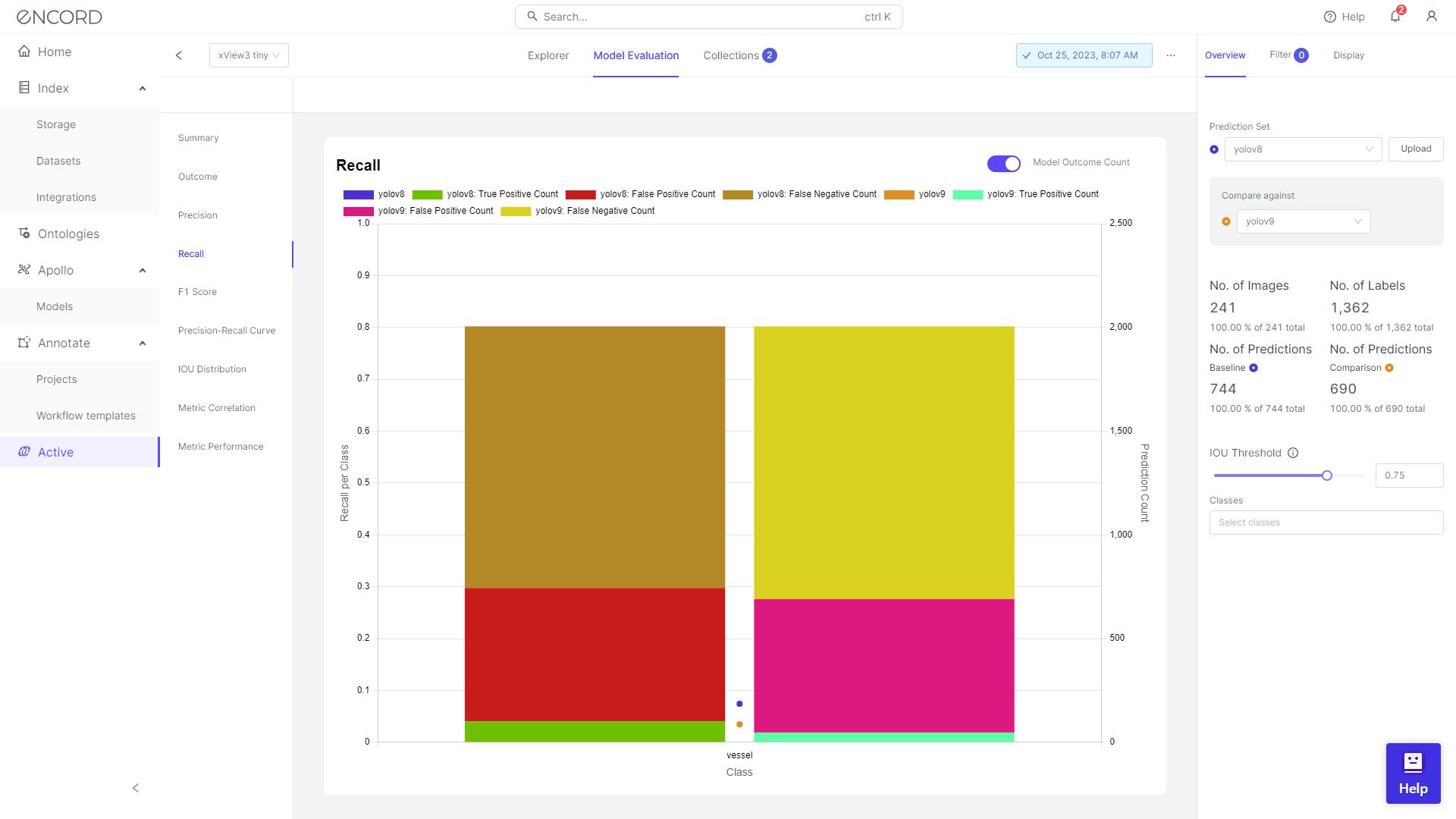

Recall

In terms of recall, YOLOv8 exhibits superior performance with a higher true positive count (101) compared to YOLOv9 (43), indicating its ability to correctly identify more instances of objects present in the dataset.

Both models, however, show an equal count of false positives (643), suggesting similar levels of incorrect identifications of non-existent objects. YOLOv8 demonstrates a lower false negative count (1261) compared to YOLOv9 (1315), implying that YOLOv8 misses fewer instances of actual objects, highlighting its advantage in recall performance.
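Precision, recall, and F1 follow directly from these counts: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. The sketch below plugs in the counts reported above, which reflect whatever IoU and confidence thresholds Encord Active applied for this view.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from true positive, false positive, and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# (TP, FP, FN) counts reported in the Encord Active charts above
counts = {"YOLOv8": (101, 643, 1261), "YOLOv9": (43, 643, 1315)}
for model_name, (tp, fp, fn) in counts.items():
    p, r, f1 = precision_recall_f1(tp, fp, fn)
    print(f"{model_name}: precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
# → YOLOv8: precision=0.136 recall=0.074 F1=0.096
# → YOLOv9: precision=0.063 recall=0.032 F1=0.042
```

With identical false positive counts, the difference in true positives alone gives YOLOv8 roughly twice the precision, recall, and F1 of YOLOv9 at this operating point.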

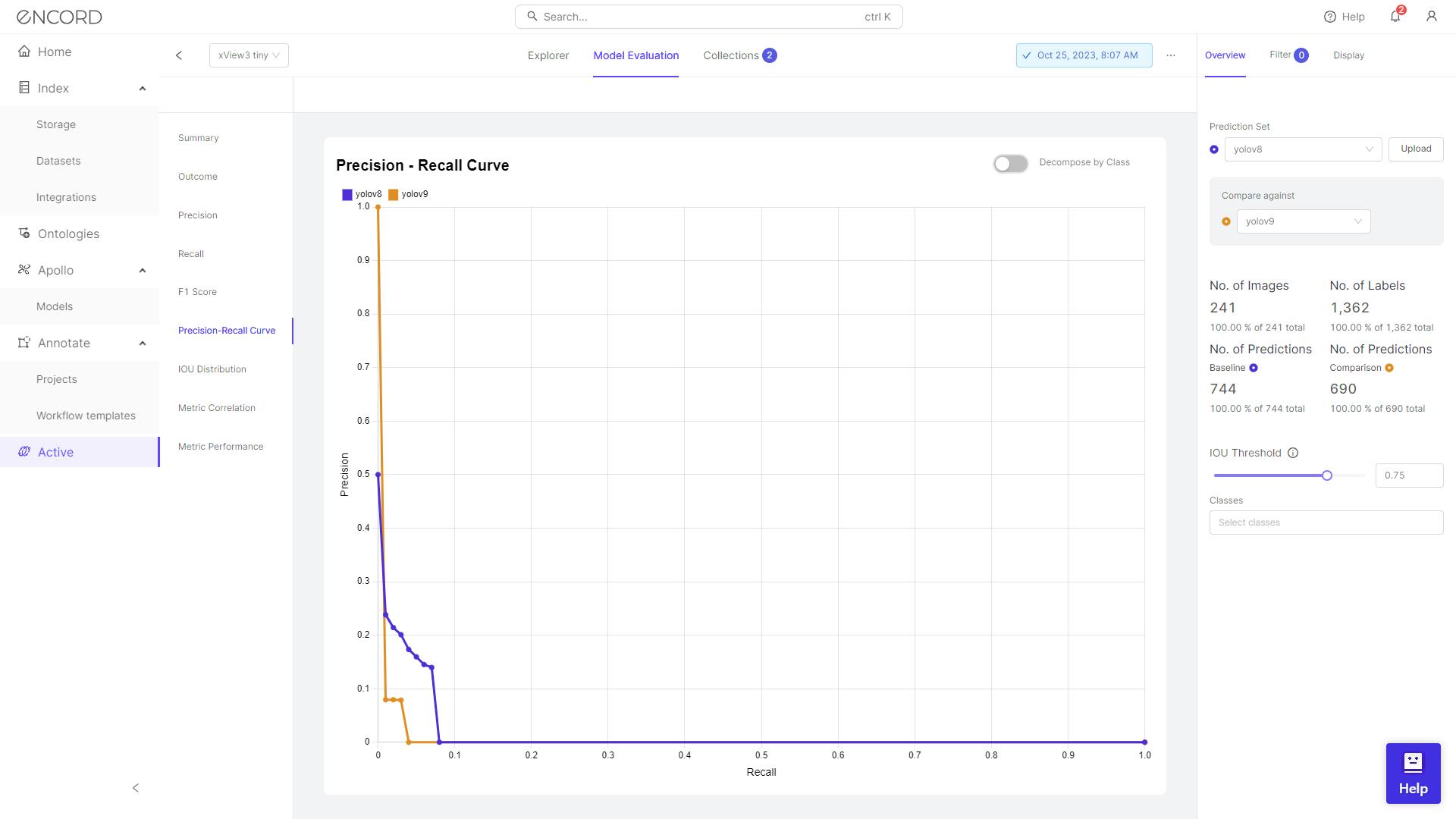

Precision-Recall Curve

Based on the observed precision-recall curves, it appears that YOLOv8 achieves a higher Area Under the Curve (AUC-PR) value compared to YOLOv9. This indicates that YOLOv8 generally performs better in terms of both precision and recall across different threshold values, capturing a higher proportion of true positives while minimizing false positives more effectively than YOLOv9.
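The area under the precision-recall curve is closely related to average precision (AP), the per-class quantity behind mAP. Below is a minimal sketch of one common way to compute it, all-point interpolation as used in PASCAL VOC since 2010 (COCO uses a similar 101-point variant), assuming each detection has already been matched to ground truth at a fixed IoU threshold. Encord Active's exact computation may differ, and the toy inputs are made up.

```python
from typing import List, Sequence


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """Area under the precision-recall curve with all-point interpolation.

    scores: confidence of each detection; is_tp: whether it matched a ground-truth
    object; num_gt: total number of ground-truth objects.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections from most to least confident, i.e. sweep the threshold downwards
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: List[float] = [0.0]
    precisions: List[float] = [1.0]
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Replace each precision with the best precision reachable at equal or higher recall
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum the rectangles between successive recall levels
    return sum((recalls[k] - recalls[k - 1]) * precisions[k] for k in range(1, len(recalls)))


print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))
```

For this toy input the result is 5/6: the first half of recall is reached at precision 1.0, the second half at precision 2/3. Missed objects cap the reachable recall below 1, which lowers the area.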

The precision-recall curve is not the only way to evaluate model performance; other metrics include the F1 score and the IoU distribution.
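IoU (intersection over union) also decides whether a prediction counts as a true positive in the first place: a detection is usually matched to a ground-truth box only when their IoU exceeds a threshold such as 0.5, and the IoU distribution summarizes how tightly the matched boxes fit. A minimal sketch for axis-aligned boxes in corner format (x1, y1, x2, y2):

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Overlapping rectangle (zero width or height if the boxes do not intersect)
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 3))  # → 0.143 (overlap 1, union 7)
```

Small objects are especially sensitive here: a shift of a few pixels barely changes the IoU of a large box but can push a small box below the matching threshold.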

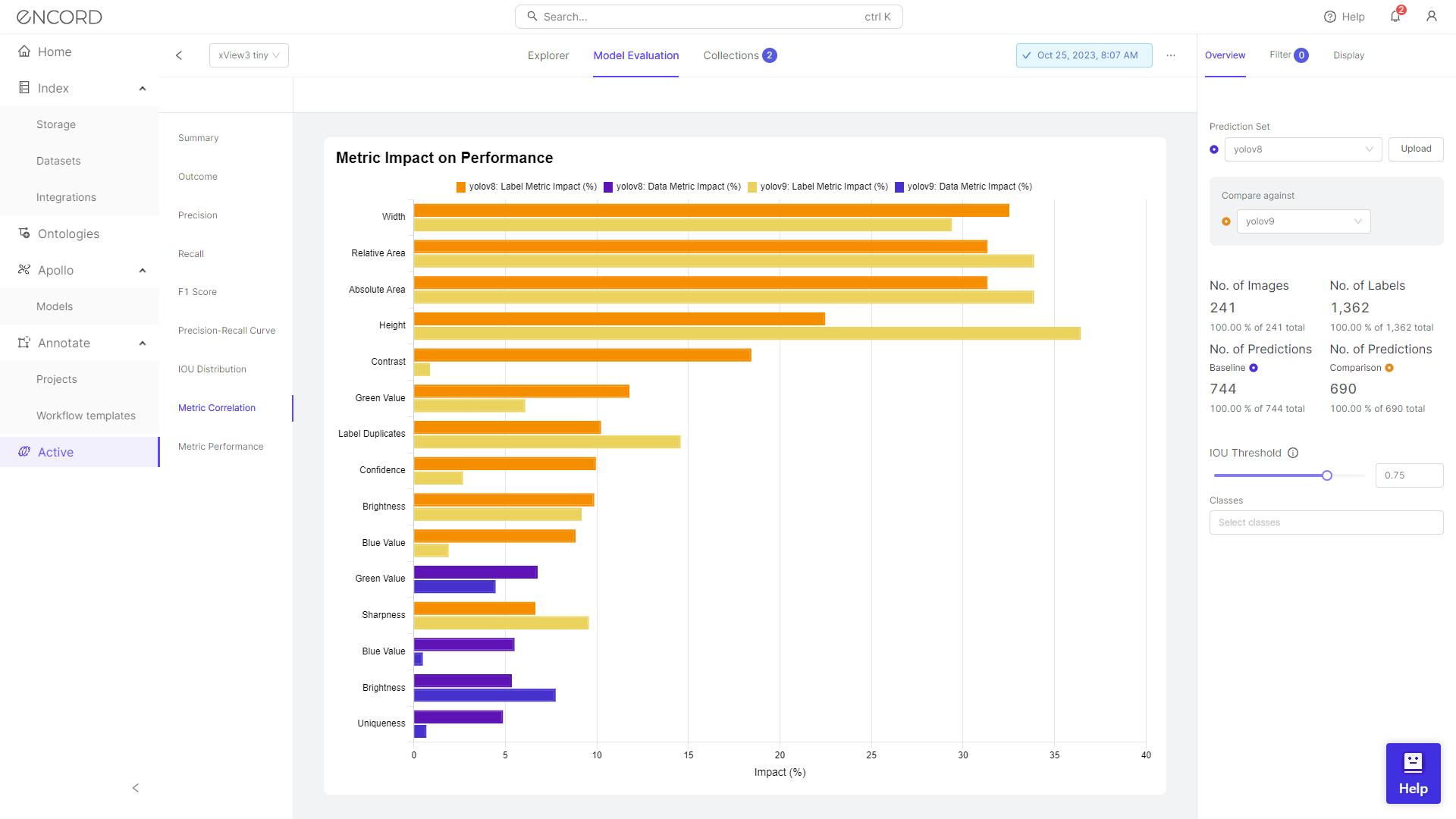

Metric Correlation

Metric impact on performance in Encord Active shows how strongly specific metrics are associated with your model's performance, so you can find out which metrics matter most. A positive correlation indicates that higher values of a metric tend to coincide with better model performance; a negative correlation indicates that they tend to coincide with worse performance.

The dimensions of the labeled objects significantly influence the performance of both models. This underscores the importance of the size of objects in the dataset. It's possible that the YOLOv9 model's performance is adversely affected by the presence of smaller objects in the dataset, leading to its comparatively poorer performance.
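Encord Active computes these correlations for you. To make the idea concrete, the sketch below assumes a Pearson correlation between a per-object metric (object area) and a 0/1 outcome (detected or missed); Encord Active's exact formulation may differ, and the values are made-up toy numbers, not results from this experiment.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Made-up toy data: object area in pixels and whether the model detected it (1) or missed it (0)
areas = [16, 64, 144, 256, 400, 900]
detected = [0, 0, 1, 0, 1, 1]
print(f"area vs. detection: r = {pearson(areas, detected):+.2f}")
```

A positive r here would mean larger objects are detected more often. It describes an association in the evaluated data, not a guarantee that changing object size alone would change performance.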

Metric Performance

The Metric Performance view in Encord Active's model evaluation gives a detailed view of how a specific metric relates to the performance of your model, helping you understand the relationship between that metric and the model's results.
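Conceptually, such a view buckets objects by a metric value and reports performance per bucket. A hypothetical sketch, assuming object area in pixels as the metric and recall (the share of ground-truth objects detected) as the performance measure; Encord Active's buckets and performance definition may differ, and the data below are made up.

```python
import bisect
import math
from typing import List, Optional, Sequence, Tuple


def recall_by_bin(
    values: Sequence[float], detected: Sequence[bool], edges: Sequence[float]
) -> List[Tuple[float, float, Optional[float]]]:
    """Share of ground-truth objects detected within each [edges[i], edges[i+1]) bin."""
    hits = [0] * (len(edges) - 1)
    totals = [0] * (len(edges) - 1)
    for value, found in zip(values, detected):
        idx = bisect.bisect_right(edges, value) - 1
        if 0 <= idx < len(totals):  # ignore values outside the outer edges
            totals[idx] += 1
            hits[idx] += int(found)
    # None marks an empty bin, where recall is undefined
    return [
        (edges[i], edges[i + 1], hits[i] / totals[i] if totals[i] else None)
        for i in range(len(totals))
    ]


# Made-up toy data: object areas in pixels and whether each object was detected
areas = [10, 50, 200, 500, 2000]
found = [False, True, True, True, True]
for low, high, recall in recall_by_bin(areas, found, [0, 100, 1000, math.inf]):
    print(f"[{low}, {high}): recall = {recall}")
```

Reading recall per size bucket is one way to check the hypothesis above that small objects are where a model loses performance.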

In conclusion, the comparison between YOLOv8 and YOLOv9 on Encord Active highlights distinct performance characteristics in terms of precision and recall.

While YOLOv8 excels in correctly identifying objects with a higher true positive count, it also exhibits a higher false positive count, indicating a potential for over-detection. On the other hand, YOLOv9 demonstrates a lower false positive count but may miss some instances of objects due to its higher false negative count.

The precision-recall curve analysis suggests that YOLOv8 generally outperforms YOLOv9, capturing a higher proportion of true positives while minimizing false positives more effectively.

However, it's important to consider other metrics, such as the F1 score and the IoU distribution, for a comprehensive evaluation of model performance. Moreover, understanding the impact of labeled object dimensions and specific metric correlations in Encord Active can provide valuable insights into improving model performance.

Written by

Akruti Acharya

- YOLOv9 and YOLOv8 are versions of the YOLO (You Only Look Once) real-time object detection model. They are iterations of the original YOLO model, each incorporating improvements and advancements in machine learning techniques.

- YOLOv9 typically offers improvements in speed, accuracy, and scalability compared to YOLOv8. These enhancements come from optimizations in the model architecture and training process, notably Programmable Gradient Information (PGI) and the Generalized Efficient Layer Aggregation Network (GELAN) architecture.

- YOLOv9 generally outperforms YOLOv8 in terms of speed, accuracy, and scalability, owing to advances in model architecture, training techniques, and novel features such as PGI and GELAN. However, in this experiment on a small subset of xView3, the fine-tuned YOLOv8n model performed better than the GELAN-C model trained with the YOLOv9 code.

- YOLOv9's performance on embedded platforms can vary depending on factors such as computational resources, hardware specifications, and optimization techniques. While YOLOv9 is designed to be efficient and adaptable, its performance on embedded platforms may require additional optimization and tuning to achieve real-time object detection capabilities.

- Yes, YOLOv9 can be used alongside depth cameras for object detection tasks. The standard YOLOv9 model detects objects in RGB images, but the aligned depth data can complement its detections, for example by estimating the distance to each detected object. This is valuable in scenarios where depth perception is crucial, such as robotics, augmented reality, and autonomous driving.