Customer Stories

Trusted by 300+ leading AI teams

4.8/5

Zeitview Improves Recall by 12% and Doubles Data Throughput Using Encord

Zeitview, a provider of advanced inspection solutions for the energy and infrastructure sectors, significantly improved the performance of their rooftop penetration detection model by focusing on high-quality data delivery. We spoke with Jonathan Lwowski, Head of AI/ML, and Conor Wallace, Machine Learning Engineer, to understand how Encord helped improve data quality, tighten their feedback loop, and accelerate the deployment of machine learning models into production.

Key results

Initial training data for Zeitview's rooftop penetration detection models suffered from quality issues. The first dataset was labeled by 15 external contractors using a third-party tool, resulting in inconsistent annotations and suboptimal model performance.

Zeitview improved data quality using the Encord platform:

- Moved from external contractors to a smaller, specialized 5-person internal labeling team
- Implemented robust QA workflows for systematic quality control
- Relabeled problematic data while simultaneously expanding the dataset

These changes yielded meaningful results:

- 3.67% boost in precision
- 12.33% boost in recall
- 2x increase in dataset size and throughput
- Labeling team reduced to ⅓ of its original size (from 15 contractors to 5 internal labelers)

The recall improvement is particularly significant for Zeitview's inspection use case, representing a meaningful enhancement in detection reliability.

How did Zeitview achieve these results?

Encord's platform enabled several workflow improvements:

- Consolidated labeling and QA operations within a single environment
- Implemented structured QA/QC workflows that integrated MLEs directly into the feedback loop
- Leveraged image similarity search to quickly curate high-quality datasets

As Jonathan notes, human data labeling can be expensive and time-consuming if not done efficiently.

Intelligent data curation

For complex projects with no reference data, like rooftop penetrations, Zeitview now leverages image similarity search and image quality metrics to curate high-quality datasets. This approach allows the team to rapidly identify edge cases and ensure diverse training datasets.

Automated QA

Zeitview moved from spreadsheet-based feedback to a three-round QA workflow built within Encord, integrating ML engineers directly into the review process. As label accuracy improves, they are able to gradually reduce human oversight.

Active learning

Zeitview is preparing to use Encord Active to close the loop between model predictions and data curation. The goal: trigger automated retraining when failed predictions are detected, ensuring continuous model improvement.

Summary

Zeitview integrated Encord’s platform to centralize their data operations and automate key parts of the annotation and feedback workflow, enabling greater efficiency and consistency across teams. With Encord, Zeitview has established a foundation for trustworthy, production-ready datasets and continuous model improvement, delivering faster iteration cycles, higher label quality, and more accurate inspection models.
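To make the idea of image similarity search for curation more concrete, here is a minimal sketch. It assumes every image has already been converted into an embedding vector by some model. The file names, the toy vectors, and the `top_k_similar` helper are all hypothetical; this is neither Encord's API nor Zeitview's implementation.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k_similar(query, embeddings, k=3):
    """Return the names of the k images whose embeddings are closest to the query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in embeddings.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in scored[:k]]

# Hypothetical toy embeddings; real ones would come from an image model.
EMBEDDINGS = {
    "roof_001.jpg": [0.9, 0.1, 0.0],
    "roof_002.jpg": [0.8, 0.2, 0.1],
    "roof_003.jpg": [0.0, 0.1, 0.9],
}

# Find the two images most similar to a query embedding.
neighbours = top_k_similar([1.0, 0.0, 0.0], EMBEDDINGS, k=2)
```

Starting from one known edge case, a query like this surfaces visually similar images that can then be prioritized for labeling or review.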

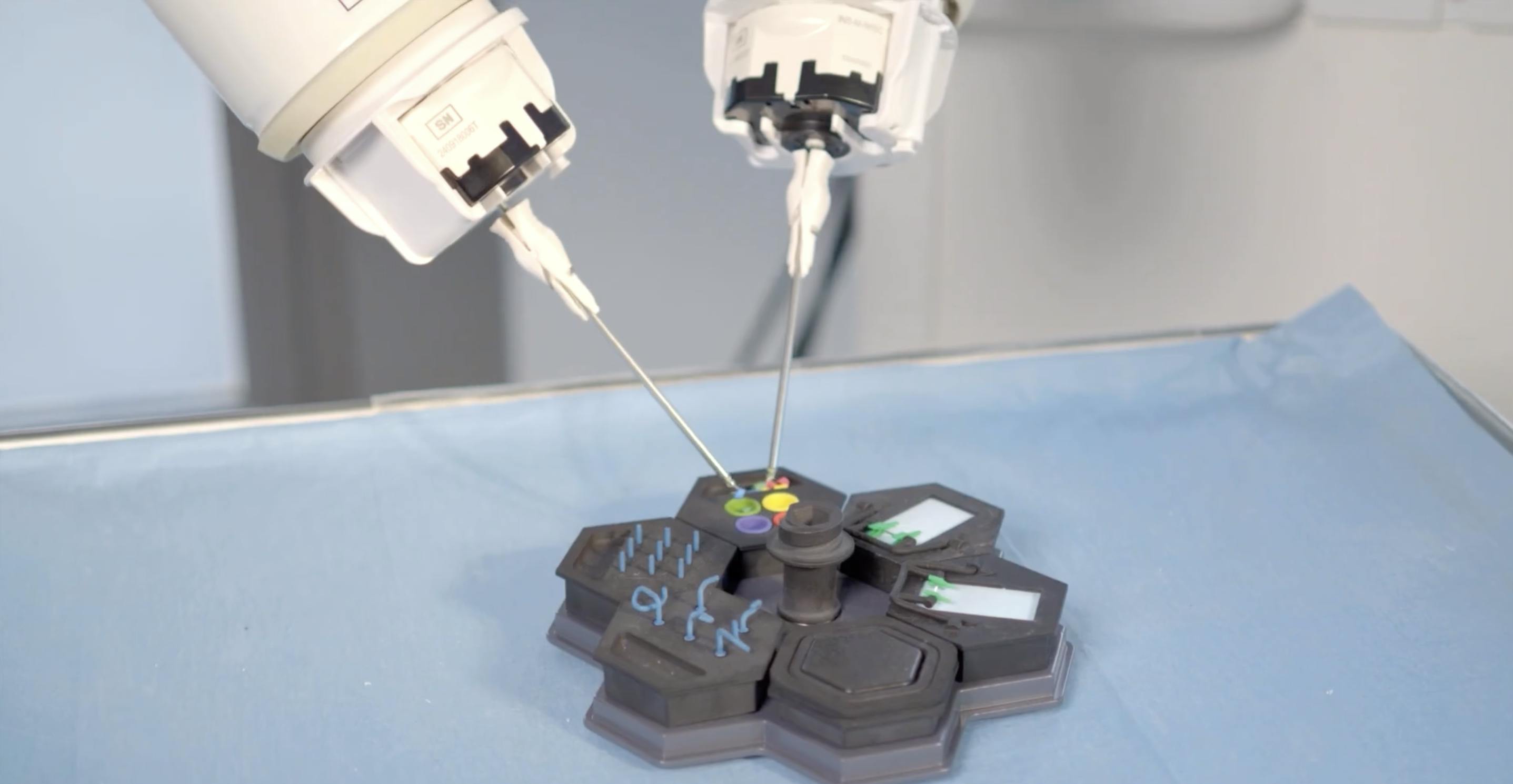

How MMI Accelerates Digital Surgery Innovation with Encord

The Challenge: Scaling Computer Vision for Robotic Microsurgery MMI is building the future of digital and robotic microsurgery. Their work sits at the intersection of robotics, computer vision, and AI, supporting surgeons who operate at an extremely small scale where precision, tremor reduction, and motion control are critical. To power these systems, MMI relies on large volumes of high-quality annotated video data. But microsurgical data presents unique challenges: it comes from multiple sources, requires extreme precision, and must be curated and validated carefully to ensure stable model performance across environments. As their computer vision efforts scaled, manual data handling and annotation became a bottleneck. Engineers needed to focus on model optimisation, testing, and innovation, not spending disproportionate time curating datasets, reviewing annotations, or managing fragmented workflows. MMI needed a solution that could: Scale annotation and review efficiently Support complex video-based computer vision workflows Integrate tightly with their existing AI pipelines Improve dataset consistency and model stability Reduce the time from hypothesis to model validation The Solution: Encord as the Backbone of MMI’s Data & Annotation Workflow MMI adopted Encord as a core part of their computer vision and data operations, using it across annotation, review, data curation, and automation. 
With Encord, the team gained access to: An external annotation workforce, allowing engineers to prioritise model innovation over manual labeling Workflow automation and model-assisted labeling, accelerating annotation and review cycles Systematic review policies and targeted relabeling, improving dataset consistency and edge-case handling Encord Index, enabling efficient browsing, categorisation, and reuse of large-scale surgical video data SDK integrations, allowing seamless export into MMI’s internal training pipelines Engineers across roles, from senior CV engineers to PhD researchers and interns, use Encord daily to review annotations, visualise edge cases, automate parts of the workflow, and move faster from experimentation to validation. The Results: Faster Iteration, Better Data, More Focus on Innovation By integrating Encord into their AI-driven workflows, MMI significantly improved both operational efficiency and model quality. Annotation and review cycles became faster and more consistent, allowing the team to iterate on multiple model versions more quickly. Improved dataset quality translated directly into more stable model performance across different test environments. Most importantly, Encord freed up valuable engineering time. Instead of manually handling data, MMI’s team can focus on what matters most: advancing the state of the art in digital surgery, developing assistive and autonomous capabilities, and delivering better outcomes for surgeons and patients. As MMI continues to expand its digital surgery platforms, including first-in-human deployments, Encord remains a critical partner in enabling scalable, reliable, and high-quality computer vision development.

OnsiteIQ Achieves 5x Faster Data Throughput and Streamlines Their AI Infrastructure

OnsiteIQ, a construction intelligence platform using computer vision for safety and quality inspections, migrated their data workflows to Encord after overhauling their AI infrastructure. This enabled them to become operational 4x faster and achieve 5x data throughput with their existing team. "Encord integrates seamlessly into our entire AI infrastructure," says Evgeny Nuger, Principal Engineer at OnsiteIQ. "By implementing Encord within our redesigned ML infrastructure, we've established an efficient end-to-end workflow from data sampling through to model training." Platform Migration + Key Results OnsiteIQ faced significant limitations with their previous annotation platform, struggling with poor usability and underperforming automation capabilities that hindered their workflow efficiency. After evaluating several vendors, the team chose Encord for its advanced features and intuitive interface, particularly the SAM 2 integration for automated labeling workflows. The results: 5x improvement in data throughput – with corresponding decrease in labeling costs through advanced automation features 75% reduction in time to value – implementation time decreased from 2 months to just 2 weeks Real-time annotation analytics – simplified team management through Encord's monitoring dashboard Superior Automation and Intuitive User Experience The automation capabilities paired with the UX of Encord's platform were decisive factors in OnsiteIQ's selection: Despite offering sophisticated organization with distinct layers for datasets, projects, ontologies, and files, the team found Encord's platform surprisingly intuitive, effectively balancing complexity with usability. Evgeny notes, "The UX on Encord's platform was way more clear as to how everything comes together." Accelerated Time to Value The implementation timeline also improved dramatically. 
The team saw a 4x acceleration in their time to value, cutting implementation time from 2 months to just 2 weeks. Improved Team Management OnsiteIQ now uses Encord's built-in analytics to optimize their operations. The fully integrated system has improved operational efficiency for OnsiteIQ. The company is now actively using the data generated through Encord for deploying models into production.
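The "4x faster" and "75% reduction" figures describe the same change. A quick check, assuming 2 months is roughly 8 weeks (an approximation the story does not state); the helper name is illustrative only:

```python
def speedup_and_reduction(before, after):
    """Return (speedup factor, percentage reduction) for a duration change."""
    speedup = before / after
    reduction_pct = (1 - after / before) * 100
    return speedup, reduction_pct

# Assumption: 2 months is treated as 8 weeks.
speedup, reduction_pct = speedup_and_reduction(before=8, after=2)
print(f"{speedup:.0f}x faster, {reduction_pct:.0f}% less time")
```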

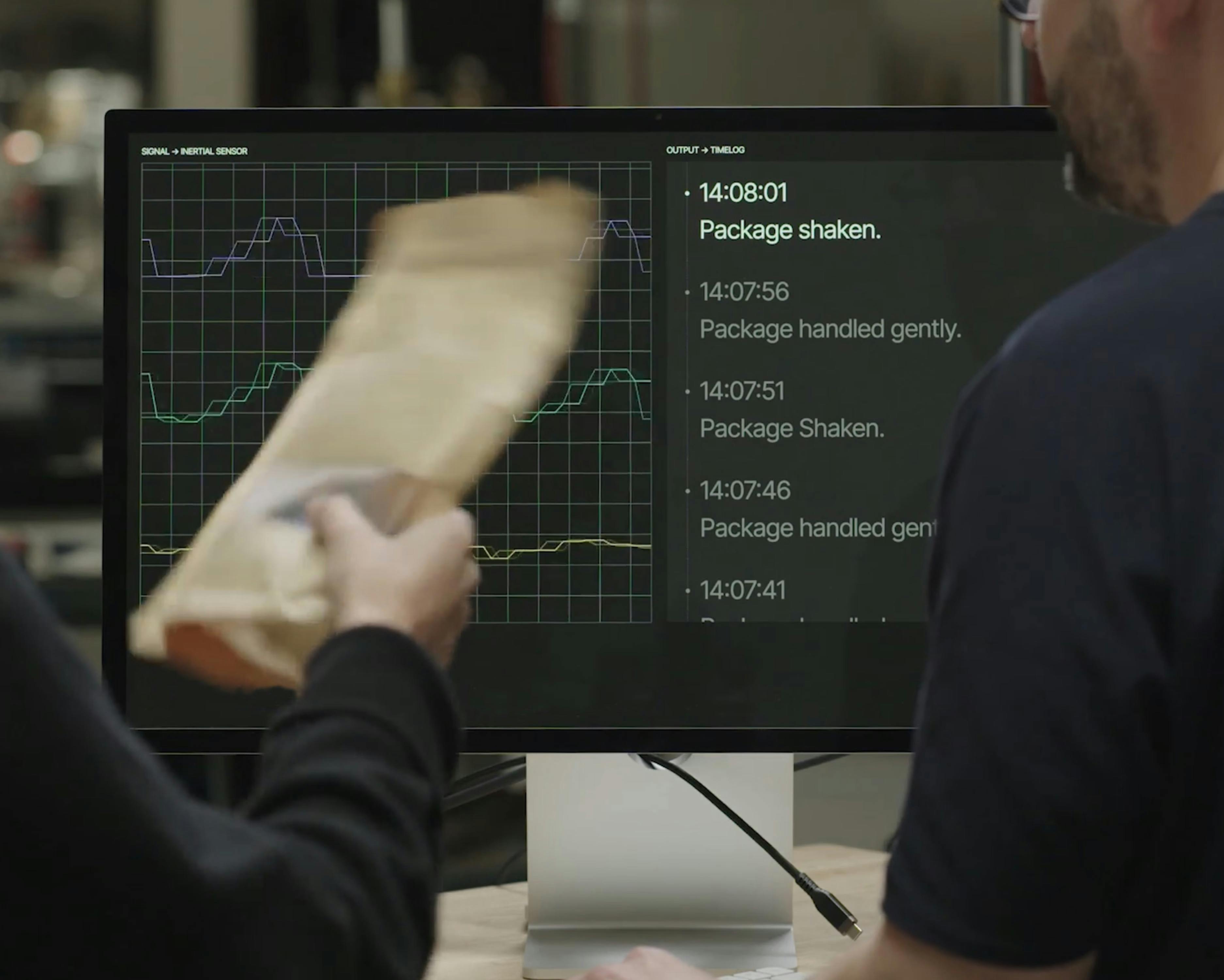

How Pickle Robot Is Using Encord To Build Physical AI For Warehouse Automation

Introducing: Pickle Robot Pickle Robot, a Cambridge, MA-based Physical AI innovator, is revolutionizing the logistics industry with cutting-edge applications of hardware, AI, and data. Focused first on optimizing truck unloading and loading, Pickle Robot streamlines one of the most physically demanding tasks in warehousing. Historically, the process of unloading non-palletized goods from trailers relied heavily on manual labor, resulting in fatigue and inefficiencies and prone to high worker safety risks. To address these challenges, a team of MIT graduates came together to form Pickle Robot. They developed a sophisticated solution that uses advanced AI algorithms trained on highly accurate images and videos to automate the unloading of up to 1,500 packages per hour with its green mobile manipulation robots. The purpose-built solution combines custom AI/ML, highly contextualized data, and off-the-shelf hardware and sensors, significantly reducing the time, effort, and risk in an often overlooked part of the supply chain that warehouses spend over $100 Billion a year to do. Today, they are helping their customers like UPS, Ryobi Tools, and Randa Apparel & Accessories unload millions of pounds of packages monthly. The data challenge To build fast and precise robotic systems, Pickle Robot needed to think about the end-to-end experience while facing hurdles across hardware and AI models. Specifically on the model front, Pickle Robot recognized the need for a robust AI system capable of handling diverse cargo. This led the team to implement a unique combination of sensors and machine learning models to identify different types of packages and manipulate various goods accurately. The integration of these technologies enhanced operational efficiency and minimized errors and downtime. Achieving success required a robust data engine and rich images with precise annotations. Prior to Encord, Pickle Robot used data annotation services from other providers. 
It relied on an outsourced labeling team to conduct the labeling within the software's limitations and the skills of the outsourced labelers. Challenges included: Poor labels—overlapping polygons, or, more often than not, a significant number of packages were submitted with incomplete labels. Excessive auditing cycles—the legacy approach was error-prone. The lean team of AI and ML engineers spent up to 20+ minutes on auditing tasks per cycle, with high rejection rates. Complex semantic segmentation ontologies were infeasible, which inhibited the robot's ability to accurately understand its operating environment. Platform unreliability limited the efficacy of automated workflows and reduced the time available for model development. Accuracy in training data is critical when your business depends on the accuracy and efficiency of the robotics system's performance. Utilising Encord for consolidated data curation & annotation To address these challenges, Pickle Robot made a strategic decision to partner with Encord. With Encord, Pickle Robot gained a platform that does data curation, annotation, and provides robust analytics and model evaluation functionality, with full integration to Pickle Robot's Google Cloud Platform based data engine infrastructure. The Encord platform enables data management and discoverability capabilities, granular annotation features (bounding boxes, polylines, key points, bitmasks, and polygons), nested ontologies, collaborative workflows, AI-assisted labeling with HITL, and comprehensive data curation functionality required to run efficient data pipelines. "For our AI initiatives, rapid iteration is critical. Encord and our ML infrastructure allow us to prototype learning tasks efficiently. The composability of Encord enables us to merge diverse data sources, facilitating extensive experimentation. With a well-integrated SDK, it's a matter of a few lines of code to achieve seamless integration and functionality." 
- Matt Pearce, Applied ML, Pickle Robot

Benefits: Pickle Robot increased precision by 15% and iterated models 60% faster

Since partnering with Encord, Pickle Robot has seen a drastic improvement in its AI and ML engineers’ productivity, improved precision in task execution, and faster time-to-model improvement.

Key benefits:

- Achieving reliable data pipelines for model training and evaluation 60% faster
- 30% improvement in annotation accuracy
- Faster and more comprehensive audit and review cycles
- Increased observability of real-time data distributions, allowing for rapid domain drift corrections
- 15% improvement in robotic grasping accuracy with better training data

Archetype AI Improves Efficiency by 70% With Encord

Introducing Customer: Archetype AI Archetype AI is building the first developer platform for Physical AI — powered by Newton, a foundation model trained on real-world sensor data. The platform enables enterprises to build and deploy custom AI applications for the physical world through simple APIs and no-code tools. With deep multimodal sensor fusion, Newton delivers rich, accurate insights across industries — from predictive maintenance to human behavior understanding. They're working with global leaders like Mercedes-Benz, NTT Data, and SLB, and backed by Venrock, Amazon, and Bezos Expeditions. Before Encord Prior to adopting Encord, Archetype AI managed data curation and annotation through a combination of internally developed tools and third-party platforms. A key limitation was the lack of native video support within their primary annotation tool. Without the ability to annotate directly on video, annotators were forced to label frame by frame — an inefficient and labor-intensive process that significantly hindered throughput. Object tracking capabilities were similarly constrained, leading to inconsistent performance and difficulty in maintaining continuity across frames. As the scale and complexity of the data increased, maintaining label accuracy was difficult due to limited visibility in the annotation interface, and the overall user experience lacked the flexibility required for managing high-volume video datasets. These inefficiencies created friction throughout the pipeline, delaying project timelines and impacting the quality of data used for model development. Recognizing the need for a more robust and purpose-built solution, the team began evaluating platforms capable of supporting scalable, high-performance video and multimodal annotation workflows. The Encord Solution Since adopting Encord, the team has achieved a 70% increase in overall productivity. 
Most significantly, annotation speed has doubled, allowing the team to work far more efficiently than with previous tooling. This improvement is driven by Encord’s fast and intuitive annotation tools, powerful features like trackers and bulk operations, and most importantly, the flexibility of Encord's SDK, which enabled the team to build custom ETL pipelines for seamless data ingestion and annotation retrieval. Tasks that previously required manual upload/download steps and browser-heavy interactions are now fully streamlined. Together, these capabilities have drastically simplified Archetype AI’s end-to-end labeling workflow. Encord’s intuitive interface has also made project setup and management easier: the team can now design workflows, assign and review tasks, and maintain full visibility across active projects. Labeling accuracy has also improved, leading to tangible gains in model performance and highlighting the impact of having the right data labeled correctly from the start. The platform made it easy for the team to experiment with new annotation methodologies and iterate quickly, without being constrained by tooling limitations. This flexibility introduced a new level of agility, enabling the team to adapt and innovate faster across their data pipeline. Collectively, these benefits have resulted in substantial time savings, improved model outcomes, and a more scalable and adaptable approach to handling complex multimodal data.

How Standard AI Powers Retail Intelligence at Scale With Encord

Introducing Customer: Standard AI Retailers today face some of the toughest challenges in physical stores — from tracking shopper behavior to optimizing promotions and in-store media. Standard AI solves these challenges by equipping retailers and CPG brands with AI-powered computer vision technology that unlocks real-time insights on promotions, media impact, new product launches, and more. The VISION platform turns data into actionable insights, helping retailers drive sales, improve store performance, and gain deeper visibility into the shopper journey. Standard AI is equipping brick-and-mortar stores and their suppliers with real-time in-store analytics, all while maintaining a privacy-first approach. Helmed by an executive team that spans firms like Mars, Sara Lee, Oracle, NASA, and Adobe, Standard AI is at the forefront of applying artificial intelligence and computer vision to physical retail spaces. The data challenge: lower quality, longer time to value At its core, Standard AI’s business is based on its ability to extract meaningful insights from millions of hours of video footage from store’s security cameras. For the ML team, access to high-quality and enriched video data was an extremely cumbersome, time-consuming process that relied on multiple internal and agency hand-off points, lengthy quality control review cycles, and fragmented point solutions. In several instances, custom scripts were needed to glue these workflows together. The ML team spent more time managing fragile data pipelines than focusing on model performance. The product and ML teams also wanted to find a way to streamline the data curation and annotation processes and spend more time evaluating and fine-tuning their models to continue improving performance. This wasn’t possible due to limitations of Standard AI’s existing tooling, which offered limited debugging, quality control, and statistical insights. 
From a business perspective, Standard AI understood that its existing way of managing the AI data pipeline wouldn’t scale or support the rapid innovation needed to meet customer demands. Standard AI’s Product and ML team defined strict requirements for their updated approach:

- Native support for large-scale video processing (including fish eye lens) with support for other modalities
- A unified platform for curation, labeling, and evaluation
- Robust API and SDK support
- Ability to automate labeling tasks with HITL
- A simplified user experience for non-technical users

The solution: easy access to pixel-perfect video data to build production-grade AI

- Native video processing: With prior approaches, the Standard AI team spent long cycles manually uploading videos to other platforms and faced significant conversion issues. With Encord’s native video support and API, the Standard AI team is now able to upload and process millions of video files 5x faster.
- Unified data management platform for AI: Standard AI shifted from relying on outsourced labelers, open-source tooling, and niche labeling platforms to Encord, a unified platform that streamlines data curation and annotation at enterprise scale.
- Robust API and SDK support: The API integration for data upload is intuitive, enabling rapid implementation and programmatic data annotation at speed with the Encord SDK.
- Granular ontologies: Standard AI was able to rapidly refine complex hierarchical ontologies in response to evolving project insights, continuously enhancing data labeling precision.
- AI-assisted labeling with HITL: Standard AI benefited from automated labeling functions paired with human-in-the-loop reviews to ensure label accuracy whilst speeding up labeling pipelines.
- Seamlessly scalable across the organization: The ML team has democratized access, enabling all team members to add, annotate, and utilize enriched data for their specific tasks. This distributed approach accelerates company-wide progress by eliminating bottlenecks previously created by specialized tooling gatekeepers.

Benefits: Standard AI built production-ready models faster and at lower cost

Since moving to Encord, Standard AI has seen a drastic improvement in productivity across its product and ML teams, significant cost savings, improved data quality, and faster time-to-model improvement.

Key benefits:

- Saved $600k a year
- Process millions of video files 5x faster with Encord’s native video platform
- 99.4% faster project initiation

How Yutori Built the Best Web Agent in the World with High-Quality Human Data

Key Stats Thousands of weekly trajectory evaluations with 20+ evolving error categories Systematic head-to-head model comparisons across hundreds of trajectory pairs weekly to quantify performance gains Yutori Navigator outperforms Gemini 2.5 and Claude 4.5 by 10-20 percentage points on real-world web tasks Yutori is reimagining how people interact with the web. Founded in 2024 by former Meta AI leaders Devi Parikh, Dhruv Batra and Abhishek Das, the San Francisco startup builds autonomous web agents that reliably execute complex tasks – from booking reservations to researching products and making purchases. In November 2025, Yutori launched Navigator, a web agent that operates a real browser to autonomously complete web tasks. Navigator outperforms Gemini 2.5, Claude 4.0 and 4.5, and OpenAI's Operator by 10-20 percentage points in accuracy while being 2-3x faster. The Challenge: Foundation Models Can't Navigate the Real Web Foundation models struggle with dynamic interfaces, multi-step workflows, ambiguous user intent, and the need to reason about both what to do and how to do it. "Models aren't ready yet to deliver this out of the box," Devi explained. "In sequential decision making problems, errors compound at each step. We need to push on modeling capabilities to complete complex workflows on the web with high reliability. To this end, it is critical for data that models are trained on to be high quality. It's the starting point for where model capabilities will come from." Yutori faced critical data challenges. Supervised fine-tuning requires high quality human-annotated trajectories. Every action an annotator takes on the web while completing a specified task – including any exploration and backtracking – needs to be accurately recorded while keeping the annotation interface simple. Trajectory evaluation can’t be fully automated yet across the entire diversity of tasks. Incorrect actions or subgoals along the way may still lead to successful outcomes. 
That needs to be appropriately accounted for. While quality is most important, throughput of data annotation also needs to be reasonable to make fast progress. How Encord Built a Scalable Data Pipeline for Yutori We partnered with Yutori to develop data infrastructure spanning the agent development lifecycle. High-Quality Training Data We created a tailored pipeline for supervised fine-tuning: Human-annotated trajectories Iterative quality control with strict standards enforced by both teams Large-Scale Evaluation As Yutori's customer base grew, evaluating agent performance at scale became critical. We worked together to build a comprehensive evaluation framework: Custom error taxonomy: We iteratively developed 20+ error types capturing action prediction, model reasoning and infra errors. Error categories evolved weekly as Yutori identified new model behaviors. We trained our team to adapt quickly while maintaining quality. Massive scale: We conducted thousands of trajectory evaluations per week, evolving from task outcome evaluation to detailed error categorization of both the process and outcome of the agentic trace. Model evaluations: Beyond single trajectory and Online Mind2Web evaluations, we conduct systematic head-to-head comparisons for hundreds of trajectory pairs weekly where different models attempt identical prompts. Our framework has captured signals across multiple performance dimensions - identifying improvement areas and quantifying Navigator's gains over Claude 4.5 and Gemini 2.5. Model-in-the-loop: We balanced human expertise with AI efficiency. Over time, human annotators provided the correct action labels only when models made mistakes. 
The Result: Yutori Navigator Sets a New Standard The partnership enabled Yutori to launch Navigator as the highest-performing web agent available: 10-20 percentage points higher in accuracy than Gemini 2.5, Claude 4.0 and 4.5, and OpenAI's Operator 2-3x faster task completion with lower inference costs Uniformly preferred by users in head-to-head evaluations Yutori agents completing autonomous tasks (Videos courtesy of Yutori) You can try Yutori’s product Scouts or build on their state-of-the-art web agentic tech via their API. What This Means for Agentic AI As agentic AI expands into web browsing, coding assistants, and customer service, evaluating performance in production becomes critical. Our work with Yutori demonstrates what frontier AI teams need: Flexible data infrastructure that adapts to key experimental needs as model capabilities evolve Custom workflows for each development phase Quality and quantity of training data Human-model collaboration balancing automation with nuanced understanding Structured evaluation frameworks capturing fine-grained signals If you're building agentic AI that needs to work reliably in the real world, your data pipeline quality will determine whether your agents deliver on their promise.
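As a rough illustration of how head-to-head comparisons and an error taxonomy can be turned into numbers, here is a minimal sketch. The record layout, the model names (`agent_a`, `agent_b`), the toy data, and both helper functions are hypothetical and do not reflect the actual evaluation framework or any real results. Only the three example error categories come from the story above.

```python
from collections import Counter

def win_rate(comparisons, model):
    """Share of decided (non-tied) head-to-head pairs involving `model` that it won."""
    decided = [
        c for c in comparisons
        if model in (c["model_a"], c["model_b"]) and c["winner"] != "tie"
    ]
    if not decided:
        return 0.0
    wins = sum(1 for c in decided if c["winner"] == model)
    return wins / len(decided)

def error_breakdown(evaluations):
    """Count how often each error category was tagged across trajectory evaluations."""
    counts = Counter()
    for evaluation in evaluations:
        counts.update(evaluation["errors"])
    return counts

# Hypothetical annotator judgments: two models attempt the same prompt.
COMPARISONS = [
    {"prompt": "p1", "model_a": "agent_a", "model_b": "agent_b", "winner": "agent_a"},
    {"prompt": "p2", "model_a": "agent_a", "model_b": "agent_b", "winner": "agent_b"},
    {"prompt": "p3", "model_a": "agent_a", "model_b": "agent_b", "winner": "agent_a"},
    {"prompt": "p4", "model_a": "agent_a", "model_b": "agent_b", "winner": "tie"},
]

# Hypothetical per-trajectory error tags drawn from an evolving taxonomy.
EVALUATIONS = [
    {"trajectory": "t1", "errors": ["action_prediction"]},
    {"trajectory": "t2", "errors": []},
    {"trajectory": "t3", "errors": ["model_reasoning", "action_prediction"]},
]

rate = win_rate(COMPARISONS, "agent_a")
breakdown = error_breakdown(EVALUATIONS)
```

Keeping the error tags as free-form strings is one way to let the taxonomy change week to week without changing the tallying code.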

How Harvard Medical School and MGH Cut Down Annotation Time and Model Errors with Encord

Introduction A new paper published in MDPI (Multidisciplinary Digital Publishing Institute) demonstrates how, using the Encord platform, researchers at Harvard Medical School, Massachusetts General Hospital, and Brigham and Women’s Hospital were able to reduce vascular ultrasound annotation time from days to minutes and run automated analyses of their datasets. Using Encord, the team was able to: Create their first segmentation models by labeling only a handful of images Cut annotation time through segmentation models by an order of magnitude Visually explore their dataset and identify problematic areas - in their case, the impact of blur on their dataset Evaluate the performance of their segmentation models in the Encord platform Problem: DUS Image Annotation is Resource-Intensive and Prone to Human Error Medical imaging, particularly Arterial Duplex Ultrasound (DUS), plays a crucial role in diagnosing and managing vascular diseases like Popliteal Artery Aneurysms (PAAs). The traditional method of analyzing DUS images relies heavily on manual annotation by skilled medical professionals. This process is fraught with challenges: Time-consuming—especially with the growing volume of medical imaging data. Prone to human error. Heavily dependent on expertise and experience - furthering how resource-intensive the process becomes The subjective nature of manual annotations can lead to inconsistent measurements and interpretations due to inter- and intra-observer variability during annotation. This raises concerns about the reliability and reproducibility of the results and could impact the accuracy of diagnoses and treatment plans for patients. The primary issue in this research paper lies in precisely annotating the inner and outer lumens of the artery in images - a critical step for accurate measurement and subsequent treatment planning. 
Solution: Encord Annotate to Auto-Label DUS Images and Encord Active to Validate Model Performance The study tested the feasibility of the Encord platform to create an automated model that segments the inner and outer lumen within PAA DUS. Using image segmentation to find the largest diameter and thrombus area within PAAs helped standardize DUS measurements that are important for making decisions about surgery. Using Encord Annotate for Automated Annotation The researchers collected and prepared (deidentification and extraction) a dataset comprising DUS images of PAAs for upload to Encord before annotating a few images to serve as ground truth for the annotation models using Encord Annotate. Using Encord Annotate’s automated labeling feature, they could generate segmentation masks for unlabeled images. This reduced the time and effort required for DUS image analysis while minimizing the potential for human error. Using Encord Active to Select the Best-Performing Model They trained three models and validated them with Encord Active on the annotated images (20, 60, and 80 sets). Encord Active enabled the researchers to understand the performance metrics that helped them select the best model for segmenting the inner and outer lumens of the popliteal artery with high precision. After training models on image subsets, we tested them within the Encord platform. We selected the desired tests in the analysis tab of the project, and after a runtime period, the platform presented calculations of true positives, false negatives, mAP, IoU, and blur. The report referenced Encord’s ability to seamlessly integrate into clinical processes with a user-friendly interface, simple onboarding, and rapid annotation workflows as crucial to the study's success. For healthcare practitioners who use the platform, this improves their diagnostic process without disrupting established procedures. 
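For readers unfamiliar with the metrics named above, the sketch below shows one common way to compute Intersection over Union (IoU) for binary segmentation masks and a true-positive rate derived from it. It is an illustration under stated assumptions, not the platform's or the paper's implementation: masks are plain nested lists of 0/1, each ground-truth object is paired with exactly one prediction, and the 0.5 IoU threshold is a conventional choice rather than a value taken from the study.

```python
def mask_iou(pred, truth):
    """IoU of two same-shaped binary masks given as nested lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Convention chosen here: two empty masks count as a perfect match.
    return intersection / union if union else 1.0

def true_positive_rate(ious, threshold=0.5):
    """TP / (TP + FN), counting a ground-truth object as found when IoU >= threshold."""
    if not ious:
        return 0.0
    true_positives = sum(1 for value in ious if value >= threshold)
    return true_positives / len(ious)

# Toy 2x3 masks: 2 overlapping pixels, 4 pixels in the union.
predicted = [[1, 1, 0],
             [0, 1, 0]]
ground_truth = [[1, 0, 0],
                [0, 1, 1]]

iou = mask_iou(predicted, ground_truth)
tpr = true_positive_rate([0.9, 0.5, 0.2])
```

Mean Average Precision builds on the same matching step by sweeping a confidence threshold over the predictions and averaging precision across recall levels; it is omitted here to keep the sketch short.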
Results Encord Reduced Annotation Time from Days to Minutes Where manual annotation could take several minutes per image, the researchers accomplished the task in a fraction of the time using Encord. Their workflow went from relying on RPVI-certified physicians manually annotating DUS images, a process that took several days, to using Encord to annotate a few images, train models, and auto-label unlabeled images in minutes. This efficiency proves crucial in clinical settings, where timely diagnosis and treatment decisions can significantly impact patient outcomes. Figure 1. AI segmentation classifications on duplex ultrasound images. (A) Outer polygon true-positive classification, where the color green indicates a correct segmentation. (B) Outer polygon false-positive classification, where red indicates an incorrect segmentation. (C) Inner polygon true-positive classification, where the color green indicates a correct segmentation. (D) Inner polygon false-positive classification, where red indicates an incorrect segmentation. Better Evaluation and Observability of Model Performance with Encord Active The researchers quantitatively assessed the performance of the three models with Encord Active providing analytics on the following metrics: mean Average Precision (mAP). Intersection over Union (IoU). True Positive Rate (TPR). Encord Active calculated the outer polygon's mAP to be 0.85 for the 20-image model, 0.06 for the 60-image model, and 0 for the 80-image model. The mAP of the inner polygon was 0.23 for the 20-image, 60-image, and 80-image models. The true-positive rate (TPR) for the inner polygon remained at 0.23. See the full results in the table below: “With regard to the models for outer and inner polygons, the outer polygon model outperformed the inner polygon model on every metric. The outer polygon demonstrated almost equal precision and recall at 0.85. 
The mAP for the outer polygon model was 0.85 with a true-positive rate of 0.86, which is comparable to other clinically used high-performing models for US segmentation.” With Encord Active automatically providing model evaluation analytics, the team instantly discovered the model's strengths and weaknesses. For every model they trained, Active provided breakdowns and graphs on its performance, including the ability to visually explore the regions the model incorrectly segmented vs. the ground truth. Encord Active Uncovered Blurry DUS Images that Could Degrade Annotation Model Performance The researchers used Encord Active to explore the model's performance depending on the blur level, allowing them to visually interact with varying levels of blur in their dataset to understand how this impacted model performance. The paper states, “Intuitively, our analysis found that as the images became blurrier, the model precision declined, and false-negative rates increased... Removing blur from—or augmenting—blur in images can be important for training accurate AI models.” Conclusion In summary, the platform’s intuitive navigation, complemented by tutorials for both model training and analysis, allowed for straightforward operationalization of the model training system among members of the research team. The results were displayed in an understandable format and interpreted within the following discussion. The findings have far-reaching consequences for medical imaging and diagnosis. The researchers greatly improved the accuracy, reliability, and efficiency of DUS image analysis by auto-annotating images with Encord Annotate and validating annotation models with Encord Active. This could result in potentially better patient care, treatment planning, and diagnostic procedures. At Encord, we are committed to continually providing healthcare practitioners and physicians with the data-centric AI platform they need to improve their medical imaging and analysis workflows. 
We’re proud of the work the researchers were able to accomplish and how Encord is paving the way for broader applications of AI in various aspects of medical diagnostics. 📑 Read the full paper on MDPI (Multidisciplinary Digital Publishing Institute).
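For readers who want a concrete picture of the segmentation metrics reported in this story, the following is a minimal sketch of how IoU and a true-positive rate can be computed for binary masks. It is not the calculation Encord Active performs. The function names, the toy masks, and the 0.5 IoU threshold are all assumptions made for illustration.

```python
from typing import List, Sequence

Mask = List[List[int]]  # binary mask: 1 = pixel inside the polygon, 0 = outside


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over Union of two binary masks of the same shape."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly, so treat that case as IoU = 1.
    return intersection / union if union else 1.0


def true_positive_rate(ious: Sequence[float], threshold: float = 0.5) -> float:
    """Share of ground-truth objects whose prediction reaches the IoU threshold.

    Assumes one IoU value per ground-truth object, with 0.0 for objects the
    model missed entirely. The 0.5 threshold is an illustrative choice.
    """
    if not ious:
        return 0.0
    hits = sum(1 for value in ious if value >= threshold)
    return hits / len(ious)


# Toy example (hypothetical 3x3 masks, not study data).
truth_mask = [[0, 1, 1],
              [0, 1, 1],
              [0, 0, 0]]
pred_mask = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]

print(iou(pred_mask, truth_mask))                  # 0.75 (3 shared pixels / 4 in union)
print(true_positive_rate([0.75, 0.4, 0.9, 0.0]))   # 0.5 (2 of 4 objects pass)
```

mAP builds on the same IoU matching but also ranks predictions by confidence, so it is left out of this sketch.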

Reducing Model False Positive Rate from 6% to 1% with Vida ID

Vida, a full-service verified digital identity platform, serves customers throughout Southeast Asia. While facial recognition is a mature technology, most open-source facial recognition datasets aren’t reflective of the region’s populations. Models trained on these datasets perform poorly, so Vida needs to build and manage its own new datasets. Using Encord’s platform, Vida can oversee a large labeling team and annotate tens of thousands of images quickly. Introducing Customer: Vida Vida uses optical character recognition and computer vision technology to provide a full-service verified digital identity platform. Digital verification empowers people to participate in the economy. For instance, financial institutions require identity verification to reduce fraud and ensure that assets arrive in the correct accounts, and ride-hailing services require drivers to verify their identity. Operating mainly in Indonesia, Vida’s services enable banks, fintechs, online trading platforms, ride-hailing companies, and other companies to verify the identity of users online. Indonesia is the biggest archipelago in the world, and traveling long distances can be challenging. With digital verification, customers no longer have to spend time waiting or traveling to get their identities verified. Vida users take a photo of themselves and a photo of their identification document. Vida’s technology then confirms the authenticity of the document, and compares the document to the photo and an authoritative source to verify the user's identity. Throughout Indonesia, a digital signature must be accompanied by identity verification. Being able to sign documents and open bank accounts online is incredibly beneficial, especially for micro-entrepreneurs and SMEs in rural areas. Vida’s platform reduces barriers for these populations when accessing financial products like loans and savings accounts. 
Problem: Large Datasets, Large Labeling Teams Vida trains its computer vision models to predict the liveness of an image. The models learn to determine whether the image contains a physically present person or a fake representation of a person, such as a pre-taken photo or a 2D mask. Although facial recognition is a mature technology, most of the open-source facial verification datasets contain faces from the Western Hemisphere or East Asia. When models train on these datasets, they don’t perform well on Southeast Asian demographics. Indonesia is also a majority Muslim country, so many women wear a hijab, an attribute rarely encountered by models that train on these open-source datasets. To improve model performance, Vida began collecting and annotating new datasets that reflect Southeast Asian populations. The company needed a platform that could help them label and manage the tens of thousands of new images collected. Vida’s team tried some open-source tools, but none of them allowed for managing a labeling team. Furthermore, facial verification data contains sensitive Personally Identifiable Information (PII), and Vida struggled to find a tool that gave them strong access control and the ability to keep customer data on their own servers. Solution: Flexible Platform, Iterative Process With Encord’s platform, Vida could easily set up a system for managing their 20-person labeling team. They have key managers who oversee the other annotators as well as reviewers. When a new annotator comes on board, Vida uses Encord’s tools to evaluate the new annotations and ensure that all labels are high quality. Vida’s work requires managing a lot of images, about 60,000 in a project. At first, Encord’s interface showed all 60,000 at once, which slowed the interface down. However, after Vida gave Encord’s team feedback, Encord quickly changed the UI so that Vida could scale up the number of images in each project. 
“I’ve been very impressed with how Encord iterates on the SDK, listens to feedback, and constantly improves the product,” says Jeffrey Siaw, VP of Data Science at Vida. With Encord, Vida can keep the data in their own Amazon S3 buckets, alleviating data privacy concerns about access and storage. Rather than requiring that data be stored on its own servers, Encord’s platform supports signed URLs, which let it access and retrieve the data from a customer’s preferred storage facility without storing it locally. Results: Increased Labeling Speed, Decreased False Acceptance Rate In the first month of using Encord, Vida’s team labeled 70,000 images at a rate much faster than they expected. When trained on the old datasets, Vida’s previous models had a false acceptance rate of six percent; that is, in six percent of cases they predicted that an image was of a physically present person when it was not. False acceptances can have serious implications for Vida’s customers. For banks, a false verification could result in the opening of a fraudulent account. For ride-hailing companies, it could allow a driver with a criminal record to be onboarded under a false identity, increasing the chance of robbery. With Encord, Vida improved the quality of their datasets, and the new models had a false acceptance rate of only one percent. “Using Encord’s platform, we were able to train our new models on much better datasets with much higher quality labels, reducing our false acceptance rate to only one percent,” says Jeffrey Siaw, VP of Data Science. As Vida continues to grow, the data science team will begin taking a more granular approach in their data management and labeling. They’ll try labeling faces differently and label attributes such as religious headdresses to better track how their models perform across more specific demographic features. Using Encord, they can label these datasets at speed while managing multiple projects with different types of labels and ontologies, all in one platform. 
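To make the false acceptance rate in this story concrete, here is a minimal sketch of one common way to compute it for a liveness model. It is not Vida's evaluation code. The function name, the boolean label encoding, and the choice of denominator (all images that do not show a physically present person) are assumptions for illustration, and the sample counts are made up to reproduce a six percent rate.

```python
from typing import Sequence


def false_acceptance_rate(predicted_live: Sequence[bool],
                          actually_live: Sequence[bool]) -> float:
    """Fraction of spoof images that the model wrongly accepts as live.

    predicted_live[i] is the model's decision for image i;
    actually_live[i] is the ground-truth label for the same image.
    """
    if len(predicted_live) != len(actually_live):
        raise ValueError("prediction and label sequences must have equal length")

    # Keep only the model's decisions on images that are NOT live (spoofs).
    decisions_on_spoofs = [
        pred for pred, truth in zip(predicted_live, actually_live) if not truth
    ]
    if not decisions_on_spoofs:
        raise ValueError("no spoof images in the evaluation set")

    false_accepts = sum(1 for pred in decisions_on_spoofs if pred)
    return false_accepts / len(decisions_on_spoofs)


# Hypothetical evaluation set: 100 spoof images followed by 50 live images.
labels = [False] * 100 + [True] * 50
# The model wrongly accepts 6 of the 100 spoofs and accepts all live images.
predictions = [True] * 6 + [False] * 94 + [True] * 50

print(f"{false_acceptance_rate(predictions, labels):.0%}")  # 6%
```

Live images that the model rejects do not affect this number; they count toward the false rejection rate instead.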

Rapid Annotation & Flexible Ontology for a Sports Tech Startup

A leading sports analytics company needed to label large amounts of video footage to train its models to identify and analyze key moments in matches. Labeling data quickly is key, but when the company’s rapid in-house annotation tool limited its ML team’s ability to iterate and develop new features, they turned to Encord to annotate at speed and build new ontologies quickly. Use Case: Sports Analytics This leading sports analytics company’s computer vision technology allows teams to record, live stream, and analyze professional quality videos of their matches. It detects key events, such as free kicks and goals, and player positions. Players and coaches can interact with the recording, look at stats, and review critical moments from the match to improve their skills. Problem: Scaling In-house Tools When labeling video datasets, the company’s annotation team must click within each frame to mark players and objects. At first, the company built its own in-house tool and interface for data annotation. While the tool worked well for labeling at speed, it had limitations: it took months to build and refine, and the result was a single-purpose tool. Having been built for a specific annotation task, the tool could only label certain objects, which restricted the machine learning team from trying new models, working on new problems, and building out new products and features. Spending months building an in-house tool for each specific annotation task wasn’t a feasible, sustainable, or scalable strategy. Solution: Using API Access and Object Detection The company’s annotation team has a workload that consists of approximately two million clicks, so speed matters. They needed a data labeling tool that allowed them to label objects rapidly as they moved quickly from frame to frame. Before finding Encord, the team tried a couple of high-profile off-the-shelf annotation tools, but these products didn’t have a multi-frame object detection feature. 
Most of them focused on elaborate annotation features rather than on annotating at speed. For this sports analytics company, spending several seconds to click and select an object, rather than one second, significantly reduced the rate at which they could train and develop models. Speed matters most to the company because if the annotation team can label 10 times faster, then they can annotate 10 times as many frames. In other products, it wasn’t possible to quickly track an object across frames with a single click per frame, but Encord built out a feature to do exactly what the company needed. The team could easily add action classifications to the labeled data, providing the ML team with important information about cameras and frame timestamps. Another benefit of working with Encord was that its API allowed the company to take the datasets already annotated with the in-house tool and upload them into the platform. That meant that the company’s previous annotation effort was not wasted because the ML team could continue to use and expand upon the old data using Encord. Because Encord’s user-friendly interface allows for multiple annotators to use the platform at the same time, the company could also continue to use the external annotation team with whom they had worked and built trust for years.
Annotating a video of a soccer match in Encord
Results: Start Collecting Data Immediately While building a new annotation-specific tool in-house takes months, a new ontology can be built in Encord in a matter of minutes. Having this flexible ontology enables this company’s ML teams to try out different ontologies, work with new data types, and explore different ways of annotating to learn what works best. Before using Encord, the ML team had to take the safe route because of the high cost of pursuing new ideas that failed. 
With a multi-purpose, cost-effective annotation tool, they can now iterate on ideas and be more adventurous in developing new products and features. As the company continues to build analysis features for additional sports, using Encord’s flexible, multi-purpose annotation tools will help them move with speed to create new training datasets for identifying key sports events such as jump balls, faceoffs, and touchdowns. By harnessing the power of data-centric AI, this computer vision technology is on the path to giving players around the world the AI-powered tools they need to improve their games.

Improving Efficiency in the Construction Industry with Encord

CONXAI is building an AI platform for the Architecture, Engineering and Construction industries to contextualise different data and transform them into actionable insights. CONXAI, however, encountered challenges with optimizing datasets, reviewing labels, and managing large volumes of data with their in-house data annotation solution. This is where Encord came in - CONXAI was looking for an end-to-end solution for data management and curation, annotation, and evaluation. Introducing Customer: CONXAI CONXAI’s goal is to help AEC teams perform better by organizing and making sense of the vast amount of data generated during different stages of construction projects. They specialize in making data more useful, especially since a lot of project data often goes unused. Their ultimate aim is to help AEC professionals use AI effectively to improve efficiency and tackle challenges in their projects. We sat down with Markus Kittel, AI Product Development Manager at CONXAI, to discuss his work overseeing the product roadmap, and their exciting plans ahead for the business. Problem: Challenges in Data Curation and Management CONXAI's approach involves working with large unstructured datasets, which leads to challenges in effectively managing and curating project data. Their initial reliance on their in-house solution for data annotation proved to be problematic as the volume of data increased. Like many in-house tools, it was prone to frequent malfunctions, obscured the data it processed, and lacked mechanisms for reviewing annotations. Additionally, scalability was a major concern, as the in-house tool struggled to handle the increasing volume and complexity of project data. Without a reliable and scalable data management system in place, they faced difficulties in optimizing datasets and analyzing data effectively. 
As a result, CONXAI recognized the pressing need for a comprehensive solution that could streamline its data curation and management processes, enabling it to unlock the full potential of AI-driven insights within the AEC industry. CONXAI also needed a solution in which data security took precedence, with data remaining on CONXAI servers and being accessed via an API or SDK. Solution: Encord Provides a Unified Platform for Data Curation and Management “With other labeling tools, we needed to integrate another tool for data management and exploration capabilities, but Encord combined the two needs and provided a single comprehensive solution, along with excellent customer care and support,” Markus says. To address these challenges, CONXAI explored various annotation tools. They were searching for a single platform that could handle data curation and management seamlessly. Encord Annotate and Encord Active emerged as the ideal solution, offering a comprehensive platform to streamline CONXAI’s operations. As Markus says, “We connect Encord Active with our large dataset and then use metrics to prioritize building a collection of images. This collection is then sent to Encord Annotate for labeling images in preparation for training. And all this without the data leaving our server.” Result: 60% Increase in Labeling Speed With the adoption of Encord into the data pipeline, CONXAI saw significant improvements in its data management processes. Encord facilitated the transformation of unstructured data into labeled, training-ready datasets. The intuitive interface of Encord Annotate simplified the annotation process for CONXAI's team, while also providing robust label review capabilities. Moreover, Encord Active allowed CONXAI to efficiently curate and evaluate their datasets. 
“The labeling speed of the annotation team improved to almost 60% compared to when using their in-house tool.” - Markus Kittel CONXAI was able to curate over 40k images with Encord Active. They were then able to efficiently evaluate and prioritize these images based on metrics, facilitating streamlined data management and enhanced decision-making processes within their operations. CONXAI were able to contribute to Encord’s product roadmap by identifying that mapping relationships between labels in their ontology would enhance model performance. The Encord team were able to deploy this functionality, resulting in an improved user experience for CONXAI. Overall, using Encord led to enhanced robustness, simplified data pipelines, and a remarkable 60% increase in labeling speed compared to CONXAI's previous in-house tool. This demonstrates how adopting an end-to-end platform with annotation, curation, and evaluation capabilities provides the best solution for computer vision teams.

Boosting Last-mile Model Performance: Increase mAP by 20% while reducing your dataset size by 35% with intelligent visual data curation

Introducing Customer: Automotus Automotus is leading the advancement of parking and traffic management solutions through the use of AI and computer vision technology. The platform serves cities and airports to enhance street safety, sustainability, and efficiency by automating curbside operations, including parking, loading zones, zero-emission areas, bike lanes, and more. Problem: Large volume of data, expensive to label Automotus faced challenges in managing their entire data pipeline for model development, including automating data curation, ensuring efficient AI-assisted labeling, and streamlining model evaluation processes. After first establishing a continuous annotation pipeline, the team faced a critical question: among all the collected data, how could they ensure they were labeling the most relevant data, and how would they identify it? Hundreds of cameras captured large volumes of de-identified images. Labeling all of them would not necessarily improve model performance and would be prohibitively expensive. Solution: Use Encord to manage data pipeline After evaluating other tools, the Automotus team partnered with Encord to manage their entire data pipeline for model development, from automated data curation to AI-assisted labeling to model evaluation. One of the team’s first priorities was converting the vast amount of de-identified images into labeled training data. Results: 35% reduction in the datasets' size for annotation Leveraging Encord, the team was able to visually inspect, query, and sort their datasets to eliminate undesirable low-quality data in just a few clicks. This process resulted in a 35% reduction in the datasets' size for annotation. Consequently, the team achieved over a 33% reduction in their labeling costs. 
The high model accuracy enabled Automotus to better serve and grow with their customers – presenting more accurate data to their clients: “From the modality distribution that happens at the street-level, to more accurate representations of the dwell times [and a few other metrics that we supply to our clients] – these base models are the ones driving these analytics.” How does Encord compare to other tools on the market? The team evaluated several other tools on the market but ultimately decided to partner with Encord for several reasons: Flexible Ontology Structure: Encord facilitated efficient object classification and tracking, allowing for straightforward ontology management and seamless training of both detection and classification models within a single project. Quality Control Capabilities: Encord's human-in-the-loop feedback mechanisms significantly enhanced annotation performance, particularly in identifying small objects within frames. Automated Labeling Features: The ability to label a few frames and use assisted labeling to speed up the process, reducing the need for manual labeling of every frame, was highly advantageous. The Automotus AI team notes: “For example, a shortcoming with other tools was the quality of the labels: we’d occasionally realize bounding boxes would be tighter or too wide around the objects they were identifying, or objects wouldn’t be classified correctly within frames. Now, we can select the sampling rate of frames that we want to move towards a review process, and share real-time context with annotators so that they can also power our model performance. This human-in-the-loop approach means we can use Encord to help our annotators perform annotations better, which in turn speeds up how quickly we can improve our model performance. We are able to localize objects better and increase accuracy.”