Contents

Introduction: The Hidden Edge in the AI Race

Why Data Ops Is Not Just a Cost—It’s a Strategic Asset

Why Competitors Can’t Copy Your Data Flywheel

How Strong Data Infrastructure Reduces Cost and Time-to-Market

Moving from Prototype to Production-Ready AI

Winning with Data—Not Just Models

Build Your Data-Centric Infrastructure with Encord

Conclusion

Encord Blog

Why Your AI Data Infrastructure Is the Real Competitive Advantage


Introduction: The Hidden Edge in the AI Race

This guide to AI data infrastructure breaks down why it’s the real competitive advantage for your AI initiatives, showing how strong pipelines, automation, and feedback loops drive speed, accuracy, and cost efficiency.

Artificial intelligence (AI) and machine learning innovation now rely more on data infrastructure than on building smarter models alone. Leading tech companies like Microsoft, Amazon, Alphabet, and Meta are investing in AI infrastructure. Total capital expansion is projected to grow from $253 billion in 2024 to $1.7 trillion by 2035. That investment signals that the real value lies in scalable, performant, and secure data foundations.

An MIT Sloan Management Review study found that organizations with high-quality data are three times more likely to gain significant benefits from AI. Managing your AI data infrastructure well and deploying AI with AI-ready datasets puts you well ahead of your peers in speed, cost, and the accuracy of AI-driven decisions.

In this article, we will discuss why your AI data infrastructure matters more than model tweaks and how it drives scalability and operational efficiency. We will also cover how feeding enriched data back into training through feedback loops accelerates model improvements, and how infrastructure investments reduce costs and time-to-market.

Why Data Ops Is Not Just a Cost—It’s a Strategic Asset

An effective way to improve data infrastructure is by adopting DataOps. DataOps is a set of agile, DevOps-inspired practices that streamline end-to-end data workflows, from ingestion to insights.

Many leaders view data operations as a sunk cost or a side project to support AI models. This perspective limits their AI strategy. In reality, DataOps is a strategic asset that drives competitive advantage across your AI initiatives. Treating data like code transforms how teams develop AI systems.

When you adopt data-as-code principles, your workflows become:

- Modular: Data pipelines and workflows are broken down into clear, manageable components. This way, you can swap pipelines or models without having to rebuild your system.

- Versioned: Just as software engineers version their code, dataset versioning allows you to track changes and maintain full audit trails. It also helps you compare model performance across iterations. Tools like DVC (Data Version Control) bring version tracking to datasets, just as Git does for code.

- Reusable: Once you codify a pipeline, you can reuse it in AI projects. This reduces duplication of effort and speeds up deployment.
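To make the three properties above concrete, here is a minimal sketch in Python. It is not how DVC or Encord work internally; the function names (`dataset_version`, `run_pipeline`) and the two example steps are hypothetical, chosen only to show small swappable steps, a content-derived version ID, and a pipeline you can reuse across projects.

```python
import hashlib
import json
from typing import Callable, Iterable

Record = dict
Step = Callable[[list[Record]], list[Record]]


def dataset_version(records: Iterable[Record]) -> str:
    """Return a short content hash that changes whenever the data changes."""
    # Canonical JSON (sorted keys) so logically equal records hash the same.
    canonical = json.dumps(list(records), sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def run_pipeline(records: list[Record], steps: list[Step]) -> list[Record]:
    """Apply each step in order; steps can be swapped or reused freely."""
    for step in steps:
        records = step(records)
    return records


# Two small, reusable steps (hypothetical examples).
def drop_empty_text(records: list[Record]) -> list[Record]:
    return [r for r in records if r.get("text", "").strip()]


def lowercase_text(records: list[Record]) -> list[Record]:
    return [{**r, "text": r["text"].lower()} for r in records]


raw = [{"id": 1, "text": "Hello"}, {"id": 2, "text": "  "}]
clean = run_pipeline(raw, [drop_empty_text, lowercase_text])

# Different content yields a different version ID, which you can log
# next to each trained model to compare performance across iterations.
print(dataset_version(raw), "->", dataset_version(clean))
```

Because each step is a plain function over a list of records, replacing one step does not require touching the others, and the same step list can be applied to a new project's data unchanged.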

The Winning Loop Explained

Successful AI initiatives rely on a winning loop that few competitors can replicate. This loop turns data operations from a support function into a strategic growth engine. Here are the loop steps:

Capture Live Signals from Real-World Usage

Real-world usage generates immediate insights like clicks, transactions, chat logs, and sensor readings. Capture these live signals in real time to align models with actual user behavior.

Triage and Enrich Data with Human- and Model-in-the-Loop Workflows

Raw captured data is rarely perfect. Your data pipeline can use the automation features of tools like Encord, whose active filtering functionality helps filter, categorize, and pre-process extensive datasets.

In addition, human-in-the-loop (HITL) and model-in-the-loop (MITL) workflows further refine this data for quality. Humans resolve edge cases, ambiguities, and context-specific labels, while AI tools streamline scalable enrichment and annotation tasks. This combined approach optimizes data quality while controlling costs.
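One common way to combine the two workflows is confidence-based routing: the model pre-labels everything, high-confidence labels are accepted automatically, and the rest go to human reviewers. The sketch below assumes a hypothetical `Prediction` record and an illustrative threshold of 0.9; a real threshold would need to be tuned against your own quality targets.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's score in [0, 1]


def triage(predictions, auto_accept_at=0.9):
    """Split pre-labels into auto-accepted ones and a human review queue."""
    accepted, review_queue = [], []
    for p in predictions:
        if p.confidence >= auto_accept_at:
            accepted.append(p)
        else:
            review_queue.append(p)
    # Least confident first, so reviewers see the hardest cases early.
    review_queue.sort(key=lambda p: p.confidence)
    return accepted, review_queue


preds = [
    Prediction("img-1", "car", 0.97),
    Prediction("img-2", "truck", 0.55),
    Prediction("img-3", "car", 0.72),
]
accepted, review_queue = triage(preds)
print("auto-accepted:", [p.item_id for p in accepted])
print("needs review:", [p.item_id for p in review_queue])
```

Raising the threshold sends more items to humans and costs more; lowering it saves effort but lets more model errors through, which is the cost and quality trade-off described above.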

Feed Data Back into Training, Fine-Tuning, and Evaluation

Once data is cleaned and enriched, it flows into versioned pipelines for training, fine-tuning, and automated evaluation. DataOps applies Continuous Integration and Continuous Delivery (CI/CD) practices to data: automated tests, schema validation, and real-time Quality Assurance (QA) all run before the data enters production.
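As an illustration of a schema validation gate, the following sketch checks each record in a batch against an expected set of fields and types and reports every failure. The schema, field names, and helper functions are assumptions made for this example; production teams often use a dedicated validation library instead.

```python
EXPECTED_SCHEMA = {"id": int, "text": str, "label": str}  # hypothetical schema


def validate_record(record, schema=EXPECTED_SCHEMA):
    """Return a list of readable problems; an empty list means the record passes."""
    problems = []
    for field, expected_type in schema.items():
        if field not in record:
            problems.append(f"missing field '{field}'")
        elif not isinstance(record[field], expected_type):
            problems.append(
                f"field '{field}' should be {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    return problems


def validate_batch(records, schema=EXPECTED_SCHEMA):
    """Map each failing record's index to its list of problems."""
    failures = {}
    for index, record in enumerate(records):
        problems = validate_record(record, schema)
        if problems:
            failures[index] = problems
    return failures


batch = [
    {"id": 1, "text": "ok", "label": "positive"},
    {"id": "2", "text": "bad id type", "label": "negative"},
    {"id": 3, "text": "no label"},
]
failures = validate_batch(batch)
if failures:
    # In a CI/CD job, a non-empty result would fail the run here.
    print("Batch rejected:", failures)
```

Running a check like this on every incoming batch keeps malformed records out of training and evaluation sets, in the same way unit tests keep broken code out of a release.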

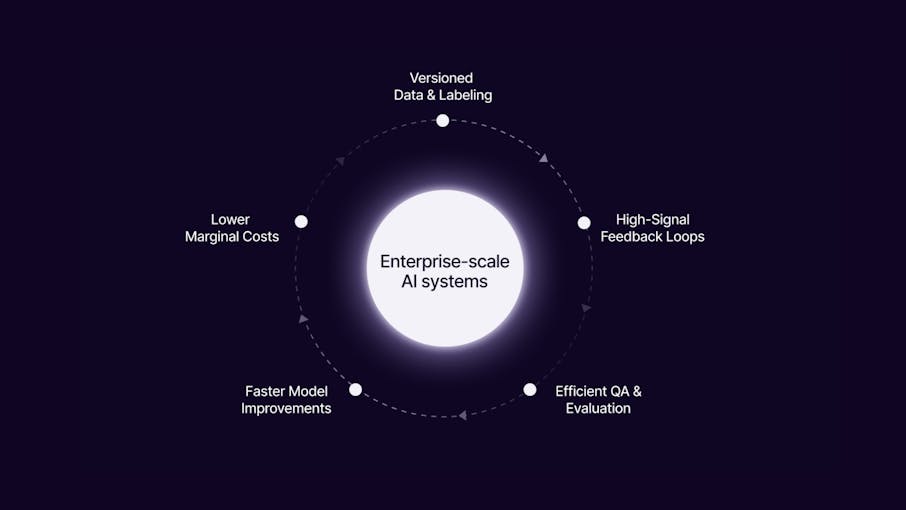

Fig 1. Continuous Feedback Loop Drives Competitive Advantage

Pro Insight:

Teams running this loop continuously build compounding value. They don’t just rely on new algorithms to drive results. Instead, their AI systems evolve daily with fresh, relevant data. Meanwhile, competitors who focus only on tweaking models face diminishing returns without a strong data infrastructure to support ongoing learning.

Why Competitors Can’t Copy Your Data Flywheel

Having a strong data infrastructure built on an efficient DataOps workflow gives your team a lasting edge. It enables faster iteration, better quality control, and domain-specific learning that compounds over time.

While competitors may replicate your models, algorithms, AI tools, or frameworks, they cannot recreate your data flywheel. This system captures real-time signals, efficiently enriches the data, and feeds it back into the model pipeline.

Fig 2. Data Flywheel and How it Works

Datasets as Intellectual Property

Treating datasets like intellectual property assets helps you secure a competitive advantage. Companies with high data valuation often have market-to-book ratios 2–3 times higher, reflecting a premium on data quality, ownership, and governance. Internal tools and processes for data versioning, annotation, and lineage are proprietary and provide your organization with a hidden edge.

Edge Cases Are Unique to Your Domain

Every AI system encounters edge cases, those rare, unexpected scenarios that models struggle with. Only your team understands the full context behind edge-case exceptions, the frequency, and the business impact. Over time, your feedback loops and triage processes effectively capture and resolve these edge cases, empowering you to leverage AI capabilities for deeper domain adaptation.

This leads to fine-tuned models deeply aligned with your operations, workflows, and customer interactions. To replicate your data, competitors would also need your organizational knowledge and historical context.

Real-World Example: A lead data scientist we spoke to described the workflow complexity surrounding edge cases and feedback. Handling it goes beyond basic data operations and requires knowledge that is reflected in the company’s datasets, tools, and processes.

How Strong Data Infrastructure Reduces Cost and Time-to-Market

A well-built AI data infrastructure delivers measurable savings and accelerates deployment. It replaces fragmented, manual efforts with repeatable systems that enable scalable efficiency across teams and projects.

Faster Project Onboarding

According to a study, organizations using data pipeline automation saw an 80% reduction in the time needed to create new pipelines, moving quickly from concept to live data. When data pipelines, workflows, and versioning are standardized, new AI initiatives get up and running quickly. Teams don’t waste weeks rebuilding ingestion scripts or annotation processes. Instead, they use existing modular frameworks to start experimentation and model training sooner, aligning with business needs efficiently.

Smarter Annotation at Scale

Manual data labeling becomes a bottleneck as datasets grow. Having a tool like Encord AI data management in your data stack automates data triage and integrates human-in-the-loop (HITL) annotation seamlessly.

Additionally, it deploys model-in-the-loop pre-labeling to reduce manual effort. This optimized annotation process improves data quality and speeds up training cycles. It ensures your AI applications remain accurate and reliable as workloads increase.

Fig 3. Human-in-the-Loop Automation

Reuse Models and Datasets Across Products

An AI data infrastructure that is versioned and reusable enables teams to use existing datasets and pretrained models for new use cases. This reduces duplication of work, lowers operational costs, and shortens time-to-market for AI-powered features across products.

High Marginal Costs Without Infrastructure

Without a scalable data infrastructure, every new AI project faces high additional costs. Labeling costs increase linearly with customer growth unless automation is used. QA and feedback pipelines struggle to scale, which causes deployment delays and increased operational risk. This is not sustainable for companies looking to grow AI capabilities throughout their ecosystem.
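A toy cost model shows how this plays out. Every number below is hypothetical and chosen only for illustration: 10,000 items per customer, 10 cents per manual label, 2 cents to review a model-generated pre-label, and 80% of items pre-labeled once automation is in place.

```python
def labeling_cost_cents(items, manual_cents, review_cents=0, auto_share_pct=0):
    """Total labeling cost in cents for a given number of items.

    auto_share_pct is the percentage of items pre-labeled by a model and
    only reviewed by a human; the rest are labeled manually from scratch.
    """
    auto_items = items * auto_share_pct // 100
    manual_items = items - auto_items
    return manual_items * manual_cents + auto_items * review_cents


ITEMS_PER_CUSTOMER = 10_000  # hypothetical volume

for customers in (1, 10, 100):
    items = customers * ITEMS_PER_CUSTOMER
    manual_only = labeling_cost_cents(items, manual_cents=10)
    with_prelabels = labeling_cost_cents(
        items, manual_cents=10, review_cents=2, auto_share_pct=80
    )
    print(
        f"{customers:>3} customers: "
        f"manual ${manual_only / 100:,.0f} vs pre-labeled ${with_prelabels / 100:,.0f}"
    )
```

Under these assumptions, each additional customer costs $1,000 to label by hand and $360 with pre-labeling. Both lines still grow with customer count, but automation lowers the slope, which is the marginal cost that matters as you scale.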

CEO Insight:

A CEO of a firm in the computer vision space confirmed these needs, explaining that scaling past the competition carries a high marginal cost and that building a data labeling infrastructure is a competitive advantage. Without it, the cost to support each customer remains high, hurting cost efficiency.

Moving from Prototype to Production-Ready AI

Experimentation is easy, but scaling AI to production brings a lot of challenges. Many teams build impressive proof-of-concept models. These models perform well in controlled environments but fail when exposed to real-world requirements such as auditability, consistency, and compliance.

Without a strong, end-to-end data infrastructure, moving from prototype to production becomes a bottleneck. To overcome these challenges, teams must adopt repeatable, automated pipelines as the foundation of their infrastructure.

Here’s why repeatable pipelines matter:

- Consistent Quality: Repeatable data pipelines ensure that the same standards are applied across training, validation, and deployment. This consistency is crucial for maintaining model performance when working with diverse and evolving datasets.

- Regulated Industry Compliance: Industries such as healthcare and finance require traceable and auditable AI systems. Without automated and versioned data workflows, proving compliance becomes manual, slow, and prone to errors.

- Faster Iteration Cycles: Automated pipelines reduce time spent on repetitive tasks. Engineers can quickly update models, test them with fresh data, and deploy improvements. This agility gives companies a strong competitive edge.

Researcher Testimony:

This is exactly what was described by a researcher at a firm developing agentic AI. While his team had working models, they did not have repeatable data infrastructure. This put them at a competitive disadvantage.

Winning with Data—Not Just Models

AI innovation is often framed as a race to build smarter generative AI models. Yet, the true differentiator is how your team uses AI data infrastructure to keep models learning, accurate, and ready for production.

A data-focused approach, supported by automated pipelines and strong data management, turns raw datasets into a strategic asset. This boosts AI adoption across industries such as healthcare, finance, and supply chain.

Teams that invest in strong AI data infrastructure build a data flywheel. This flywheel becomes an engine of continuous improvement, embedding domain expertise into every cycle.

Key advantages of data-centric infrastructure include:

- Faster Iteration: Automated data pipelines reduce turnaround time for model updates. Teams move from weeks to days in deploying improvements, staying ahead in competitive markets.

- Higher Model Accuracy: Fresh, high-quality, on-distribution data improves predictive performance. Models stay aligned with evolving user behavior and edge cases unique to your ecosystem.

- Lower Deployment Costs: Reusable pipelines, versioned datasets, and streamlined labeling reduce infrastructure costs. Teams avoid rebuilding from scratch for each use case, maximizing operational efficiency.

- Fewer Failures in Production: Repeatable, audited workflows ensure that models work as expected when exposed to real-world demands, regulatory requirements, and customer interactions.

Build Your Data-Centric Infrastructure with Encord

Encord offers a unified platform to manage, curate, and annotate multimodal datasets. It serves as a direct enabler of robust AI data infrastructure in production settings.

Unified Data Management & Curation (Index)

Encord handles images, video, audio, and documents within a single environment for in-depth data management, visualization, search, and granular curation. It connects directly to cloud storage, including AWS S3, GCP, and Azure, and indexes nested data structures.

Fig 4. Manage & Curate Your Data

AI-Assisted and Human-in-the-Loop Annotation

Automated labeling offloads repetitive tasks, then humans review and correct edge-case outputs. This HITL workflow boosts annotation accuracy by up to 30% and speeds delivery by 60%, ensuring data quality at scale. Encord Active monitors model performance, surfaces data gaps, finds failure modes, and helps improve your data quality for model retraining loops.

Data‑Centric Curation & Dataset Optimization

Encord applies intelligent filters to detect corrupt, redundant, or low-value datapoints. For Automotus, Encord Index led to a 20% increase in model accuracy and a 35% reduction in dataset size.
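To give a feel for what the simplest form of such filtering looks like, the sketch below flags empty files and exact byte-for-byte duplicates using content hashes. This is not Encord's implementation, and the `find_redundant` helper is hypothetical; real curation tools also catch near-duplicates and low-value samples, which exact hashing cannot do.

```python
import hashlib


def find_redundant(items):
    """Given {name: bytes}, return (empty_names, duplicate_names)."""
    first_seen = {}  # content hash -> first file name with that content
    empty, duplicates = [], []
    for name, payload in items.items():
        if not payload:
            # Zero-byte files are treated as corrupt in this sketch.
            empty.append(name)
            continue
        digest = hashlib.sha256(payload).hexdigest()
        if digest in first_seen:
            duplicates.append(name)
        else:
            first_seen[digest] = name
    return empty, duplicates


files = {
    "a.png": b"\x89PNG-frame-1",
    "b.png": b"\x89PNG-frame-1",  # same bytes as a.png
    "c.png": b"",                 # empty file
    "d.png": b"\x89PNG-frame-2",
}
empty, duplicates = find_redundant(files)
print("empty:", empty)
print("duplicates:", duplicates)
```

Removing flagged items before annotation means you pay to label and train on each distinct datapoint only once.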

Business Impact & Results

Encord users report tangible outcomes, including $600,000 saved annually by Standard AI and a 30% improvement in annotation accuracy at Pickle Robot. Use of Index alone cut dataset sizes by 35%, saving on compute and annotation costs.

Conclusion

AI success at scale comes from data infrastructure. Tech companies are investing to scale their infrastructure because data pipelines, governance, and real-time systems deliver growth, productivity, and long-term profitability.

When you treat data as a strategic asset, applying versioning, modular pipelines, and automated workflows, you gain:

- Faster iteration and deployment, outpacing competitors

- Higher accuracy and robustness, driven by quality datasets

- Lower costs and failure rates, thanks to reusable, scalable systems

- Defensible differentiation, through proprietary feedback loops and domain edge cases

If you want a lasting competitive edge, focus on your AI data infrastructure. Scalable pipelines, automated workflows, and strong governance are the foundation your AI strategy needs.


Encord provides comprehensive support for data integration and pipeline design, ensuring that customers can seamlessly onboard and utilize their data within the platform. Our solutions team assists in creating custom models and designing efficient data workflows to optimize the user experience throughout their lifecycle with Encord.

Encord's data marketplace is designed to seamlessly integrate with external data sources, allowing users to access a diverse array of datasets from multiple agencies. This integration supports the creation of a centralized hub where customers can find and utilize data for various applications, ensuring a one-stop-shop experience.

Encord is designed to support teams at various stages of their AI journey, including those with limited data infrastructure. Our platform offers solutions that help teams manage and utilize their existing data effectively while also providing pathways to scale their data capabilities as needed.

The end-to-end data ops capabilities of Encord allow teams to manage their AI processes efficiently as they evolve. This means that as new projects or product lines emerge, teams can quickly adapt and implement model improvements, significantly speeding up the commercialization process.

Encord enables teams to seamlessly integrate their cloud storage solutions, facilitating easy data registration within the platform. This feature ensures that teams can bring their datasets into Encord without unnecessary complications, streamlining the annotation process.

Encord emphasizes data governance by offering features that allow users to manage data sensitivity and sharing protocols. This ensures that organizations can collaborate with partners while maintaining compliance and security across different regions.

Encord can interact seamlessly with existing data platforms, including BigQuery. This integration facilitates better data management and enables users to run ETL processes efficiently to create data for training or analytics purposes.

Encord provides robust features for data distribution, allowing seamless transfer of data from storage into workflows for external workforces. This ensures that teams can access and annotate data efficiently, regardless of their location.

Encord supports cloud integrations, enabling users to utilize their own cloud infrastructure for data storage. This ensures that data is not transferred to Encord's servers, maintaining privacy and control over sensitive information.

Encord is designed as a collaborative data creation platform, enabling teams to work together effectively. It streamlines communication and data sharing, which is crucial for teams handling large volumes of multimodal data.