How to Scale Audio Annotation: Diarization, Transcription, and Automation with Encord

Technical Writer at Encord

In our latest webinar, we explored one of the most underestimated challenges in Audio AI development, building production-ready audio systems.

Hosted by Diarmuid, Lead Customer Support Engineer, and Merricc, Senior Software Engineer at Encord, the session unpacked what makes audio uniquely difficult and how thoughtful workflow design can dramatically reduce labeling time while improving model performance.

If you are working on speech models, call center AI, voice agents, or environmental audio systems, here are the key takeaways.

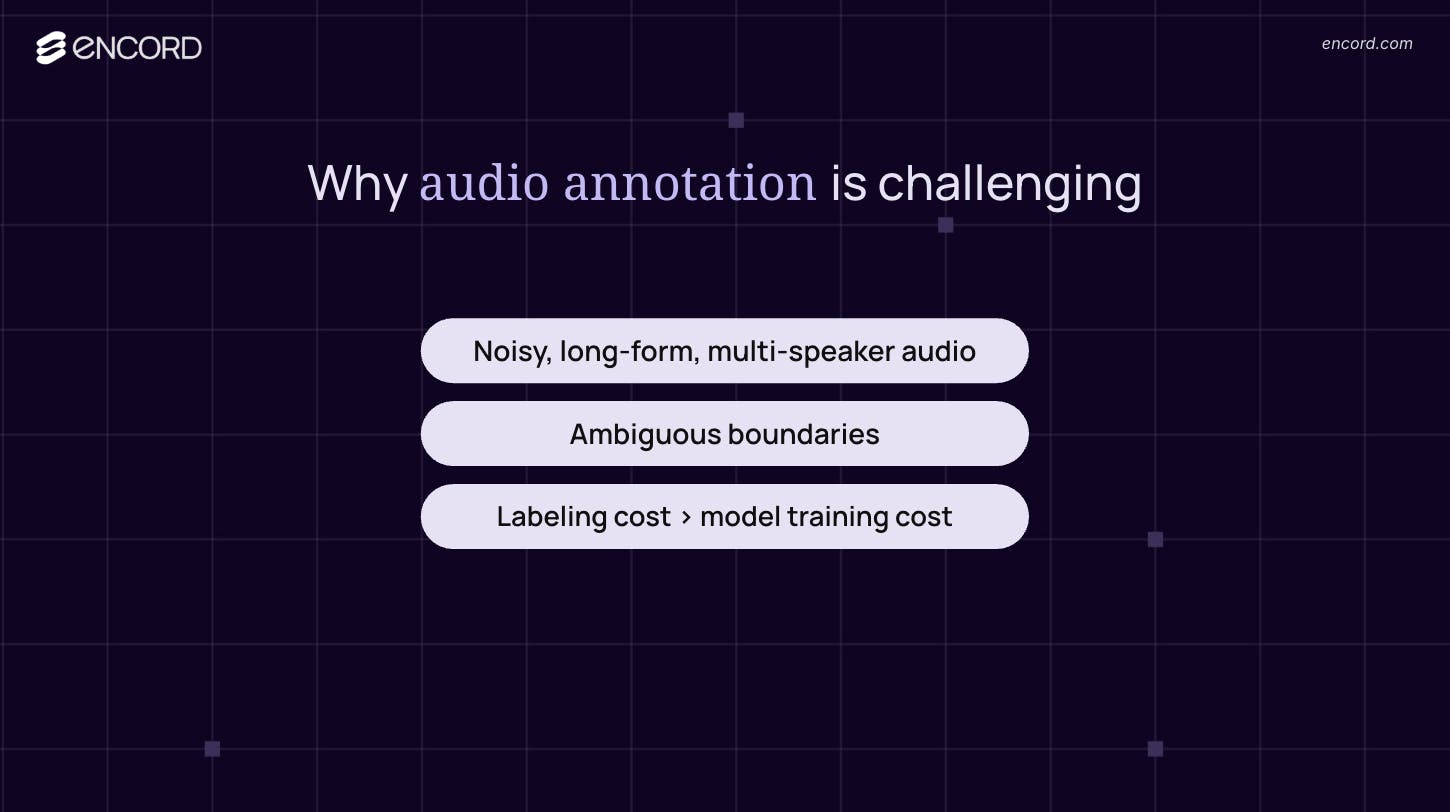

Why Audio Is So Hard, and So Expensive

Audio looks simple on the surface, but it is inherently complex. Unlike images or short text snippets, audio is long-form, noisy, and multi-speaker by default.

A five-minute conversation is rarely just five minutes of clean speech. It includes overlapping voices, background noise, interruptions, silence, filler words, and environmental artifacts. All of this must be interpreted correctly before a model can learn from it.

The real constraint is not access to data. Most teams already have thousands or even millions of recordings. The bottleneck is labeling. In audio AI, labeling costs frequently outscale data access, which makes efficiency the defining factor in whether a system can scale.

Audio is also temporal. Teams must capture not just what was said, but exactly when it was said. Who is speaking? When do they start? When do they stop? Are two people speaking at the same time? Small boundary errors compound into downstream performance issues, especially in production systems.

The 10x Reality of Audio Annotation

During the webinar, Diarmuid highlighted a common rule of thumb. A five-minute audio file often takes fifty minutes or more to fully transcribe and diarize manually. That is the 10x rule.

In more complex recordings, for example those with strong accents, heavy cross-talk, or poor audio quality, the effort can increase to fifteen or even twenty times the original duration.

At scale, this approach becomes unsustainable. Hiring more annotators helps temporarily, but it does not solve the structural inefficiency.

Automation as a Force Multiplier

Instead of relying purely on manual workflows, Encord enables teams to use automation as a starting point.

In the demo, Diarmuid showed how task agents can automatically perform speaker diarization and transcription using models such as Whisper and Pyannote. The output is then routed through a confidence-based workflow.

High-confidence predictions go straight to review, where annotators validate and make small adjustments. Low-confidence predictions are flagged for deeper annotation work. This ensures that human effort is focused where it adds the most value.

Annotators are no longer starting from a blank waveform. Instead, they refine and correct machine-generated labels, which significantly reduces total effort while maintaining quality.

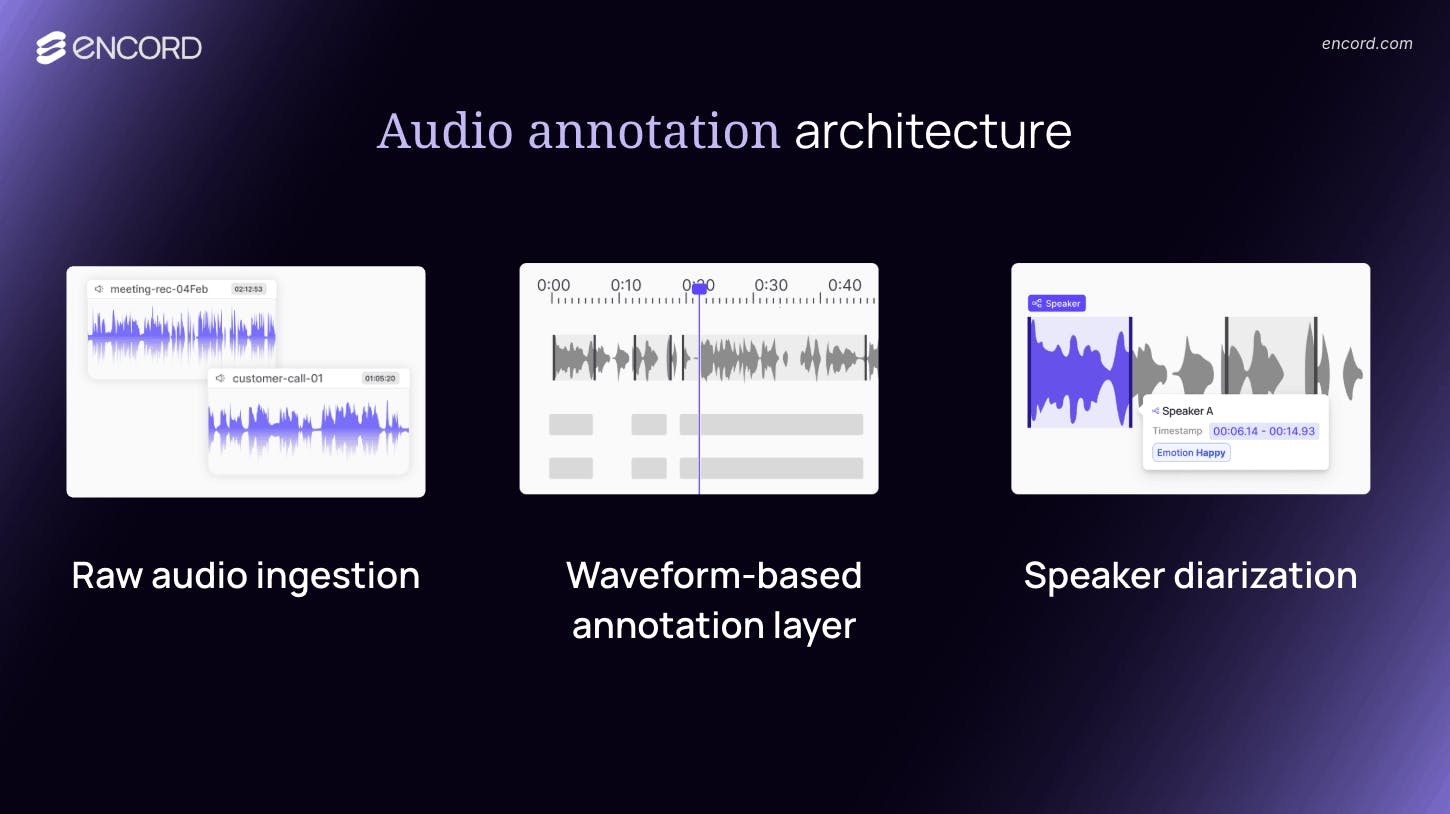

Why Waveform-Based Labeling Matters

A key design choice in Encord’s audio tooling is labeling directly on the waveform, rather than working only from text.

Because audio is temporal, visualizing the waveform allows annotators to zoom into millisecond-level detail. They can loop tiny snippets repeatedly, drag precise speaker boundaries, and account for overlapping speech. This is especially important in conversational audio, such as customer support calls, where interruptions and cross-talk are common.

For teams building word-level models, including those that need to capture filler words like “um” or subtle stutters, the ability to listen on repeat while adjusting timestamps is critical. Precision at this level often determines whether a model feels production-ready or unreliable.

From Curation to Active Learning

The webinar also covered how production audio systems evolve over time.

Encord supports three key stages. First is curation, where teams index and tag their audio to ensure diversity across accents, speakers, and recording conditions. Second is annotation, where transcription, speaker labels, emotion tags, and other attributes are applied. Third is active learning, where model predictions are brought back into the platform, compared against ground truth, and analyzed for edge cases.

This is where performance gains accelerate. Corrections made during review feed back into the training pipeline. Rather than relabeling the same failure cases repeatedly, teams identify patterns, fix them, and improve future predictions.

As Merric put it during the session, pipelines improve when corrections change future behavior. Audio systems scale when annotation systems learn.

Workflow Changes Drive the Biggest Gains

One of the clearest insights from the discussion was that the biggest improvements in audio AI rarely come from swapping in a new model.

They come from workflow design.

Teams that implement confidence-based routing, close the feedback loop, and prevent repeated labeling of the same errors see significantly better outcomes. In many cases, operational changes yield larger gains than upgrading the underlying model architecture.

Production audio AI is less about chasing novelty and more about building disciplined systems that learn over time.

What Is Next: The Agents Catalog

Merric also previewed an upcoming release, the Agents Catalog.

The goal is to make automation even more accessible. Instead of manually wiring up infrastructure and integrating models, users will be able to browse a searchable library of agents inside Encord, select the automation they need, and trigger it directly from the UI. The model follows a bring-your-own-keys approach, where Encord provides the tooling and orchestration.

This lowers the barrier to entry for teams who may not have deep machine learning infrastructure expertise, while still enabling powerful, customizable workflows.

Closing the Loop

Building production-grade audio AI is not just about transcription accuracy or speaker detection benchmarks. It is about designing systems where corrections inform future behavior.

When annotation workflows are structured intelligently, automation reduces repetitive work, review catches critical edge cases, and feedback loops continuously improve model outputs.

Audio may be noisy and complex, but with the right workflow architecture, it becomes scalable.

If you missed the live session, you can watch the replay at the top of this article, and join us for upcoming online events as we continue exploring production AI systems across modalities.