Announcing our Series C with $110M in total funding. Read more →.

Contents

What is Image Annotation?

What is the Goal of Image Annotation?

Image Annotation in Machine Learning

What is the Difference Between Classification and Annotation in Computer Vision?

What Should an Image Annotation Tool Provide?

What are the Most Common Types of Image Annotation?

Challenges in the Image Annotation Process

Best Practices for Image Annotation for Computer Vision

Annotate with a powerful user-friendly data labeling tool

Encord Blog

The Complete Guide to Image Annotation for Computer Vision

Image annotation is a crucial part of training AI-based computer vision models. Almost every computer vision model needs structured data created by human annotators.

Images are annotated to create training data for computer vision models. Training data is fed into a computer vision model that has a specific task to accomplish – for example, identifying black Ford cars of a specific age and design across a dataset. Integrating active learning with the computer vision model can improve the model’s ability to learn and adapt, which can ultimately help to make it more effective and suitable for use in production applications.

In this post, we will cover 5 things:

- Goals of image annotation

- Difference between classification and image annotation

- Common types of image annotation

- Challenges in the image annotation process

- Best practices to improve image annotation for your computer vision projects

What is Image Annotation?

Inputs make a huge difference to project outputs. In machine learning, the data-centric AI approach recognizes the importance of the data a model is trained on, even more so than the model or sets of models that are used.

So, if you’re an annotator working on an image or video annotation project, creating the most accurately labeled inputs can mean the difference between success and failure. Annotating images and objects within images correctly will save you a lot of time and effort later on.

Computer vision models and tools aren’t yet smart enough to correct human errors at the project's manual annotation and validation stage. Training datasets are more valuable when the data they contain has been correctly labeled.

As every annotator team manager knows, image annotation is more nuanced and challenging than many realize. It takes time, skill, a reasonable budget, and the right tools to make these projects run smoothly and produce the outputs data operations and ML teams and leaders need.

Image annotation is crucial to the success of computer vision models. Image annotation is the process of manually labeling and annotating images in a dataset to train artificial intelligence and machine learning computer vision models.

What is the Goal of Image Annotation?

Image annotation aims to accurately label and annotate images that are used to train a computer vision model. It involves

Labeled images create a training dataset. The model learns from the training dataset. At the start of a project, once the first group of annotated images or videos are fed into it, the model might be 70% accurate. ML or data ops teams then ask for more data to train it, to make it more accurate.

Image annotation can either be done completely manually or with help from automation to speed up the labeling process.

Manual annotation is a time-consuming process because it requires a human annotator to go through each data point and label it with the appropriate annotation. Depending on the complexity of the task and the size of the dataset, this process can take a significant amount of time, especially when dealing with a large dataset.

Using automation and machine learning techniques, such as active learning, can significantly reduce the time and effort required for annotation, while also improving the accuracy of the labeled data. By selecting the most informative data points to label, active learning allows us to train machine learning models more efficiently and effectively, without sacrificing accuracy. However, it is important to note that while automation can be a powerful tool, it is not always a substitute for human expertise, particularly in cases where the task requires domain-specific knowledge or subjective judgment.

Image Annotation in Machine Learning

Image annotation in machine learning is the process of labeling or tagging an image dataset with annotations or metadata, usually to train a machine learning model to recognize certain objects, features, or patterns in images.

Image annotation is an important task in computer vision and machine learning applications, as it enables machines to learn from the data provided to them. It is used in various applications such as object detection, image segmentation, and image classification. We will discuss these applications briefly and use the following image on these applications to understand better.

Object detection

Object detection is a computer vision technique that involves detecting and localizing objects within an image or video. The goal of object detection is to identify the presence of objects within an image or video and to determine their spatial location and extent within the image. Annotations play a crucial role in object detection as they provide the labeled data for training the object detection models. Accurate image annotations help to ensure the quality and accuracy of the model, enabling it to identify and localize objects accurately. Object detection has various applications such as autonomous driving, security surveillance, and medical imaging.

Image classification

Image classification is the process of categorizing an image into one or more predefined classes or categories. Image annotation is crucial in image classification as it involves labeling images with metadata such as class labels, providing the necessary labeled data for training computer vision models. Accurate image annotations help the model learn the features and patterns that distinguish between different classes and improve the accuracy of the classification results. Image classification has numerous applications such as medical diagnosis, content-based image retrieval, and autonomous driving, where accurate classification is crucial for making correct decisions.

Image segmentation

Image segmentation is the process of dividing an image into multiple segments or regions, each of which represents a different object or background in the image. The main goal of image segmentation is to simplify and/or change the representation of an image into something more meaningful and easier to analyze. There are three types of image segmentation techniques:

Instance segmentation

It is a technique that involves identifying and delineating individual objects within an image, such that each object is represented by a separate segment. In instance segmentation, every instance of an object is uniquely identified, and each pixel in the image is assigned to a specific instance. It is commonly used in applications such as object tracking, where the goal is to track individual objects over time.

Semantic segmentation

It involves labeling each pixel in an image with a specific class or category, such as “person”, “cat”, or “unicorn”. Unlike instance segmentation, semantic segmentation does not distinguish between different instances of the same class. The goal of semantic segmentation is to understand the content of an image at a high level, by separating different objects and their backgrounds based on their semantic meaning.

Panoptic segmentation

It is a hybrid of instance and semantic segmentation, where the goal is to assign every pixel in an image to a specific instance or semantic category. In panoptic segmentation, each object is identified and labeled with a unique instance ID, while the background and other non-object regions are labeled with semantic categories. The main goal is to provide a comprehensive understanding of the content of an image, by combining the advantages of both instance and semantic segmentation.

💡 To learn more about image segmentation, read Guide to Image Segmentation in Computer Vision: Best Practices

💡 To learn more about image segmentation, read Guide to Image Segmentation in Computer Vision: Best Practices What is the Difference Between Classification and Annotation in Computer Vision?

Although classification and annotation are both used to organize and label images to create high-quality image data, the processes and applications involved are somewhat different.

Image classification is usually an automatic task performed by image labeling tools.

Image classification comes in two flavors: “supervised” and “unsupervised”. When this task is unsupervised, algorithms examine large numbers of unknown pixels and attempt to classify them based on natural groupings represented in the images being classified.

Supervised image classification involves an analyst trained in datasets and image classification to support, monitor, and provide input to the program working on the images.

On the other hand, and as we’ve covered in this article, annotation in computer vision models always involves human annotators. At least at the annotation and training stage of any image-based computer vision model. Even when automation tools support a human annotator or analyst, creating bounding boxes or polygons and labeling objects within images requires human input, insight, and expertise.

What Should an Image Annotation Tool Provide?

Before we get into the features annotation tools need, annotators and project leaders need to remember that the outcomes of computer vision models are only as good as the human inputs. Depending on the level of skill required, this means making the right investment in human resources before investing in image annotation tools.

When it comes to picking image editors and annotation tools, you need one that can:

- Create labels for any image annotation use case

- Create frame-level and object classifications

- And comes with a wide range of powerful automation features.

While there are some fantastic open-source image annotation tools out there (like CVAT), they don’t have this breadth of features, which can cause problems for your image labeling workflows further down the line. Now, let’s take a closer look at what this means in practice.

Labels For Any Image Annotation Use Case

An easy-to-use annotation interface, with the tools and labels for any image annotation type, is crucial to ensure annotation teams are productive and accurate. It's best to avoid any image annotation tool that comes with limitations on the types of annotations you can apply to images.

Ideally, annotators and project leaders need a tool that can give them the freedom to use the four most common types of annotations, including bounding boxes, polygons, polylines, and keypoints (more about these below). Annotators also need the ability to add detailed and descriptive labels and metadata.

During the setup phase, detailed and accurate annotations and labels produce more accurate and faster results when computer vision AI models process the data and images.

Classification, Object Detection, Segmentation

Classification is a way of applying nested and higher-order classes and classifications to individuals and an entire series of images. It’s a useful feature for self-driving cars, traffic surveillance images, and visual content moderation.

Object detection is a tool for recognizing and localizing objects in images with vector labeling features. Once an object is labeled a few times during the data training stage, automated tools should label the same object over and over again when processing a large volume of images. It’s an especially useful feature in gastroenterology and other medical fields, in the retail sector, and in analyzing drone surveillance images.

Segmentation is a way of assigning a class to each pixel (or group of pixels) within images using segmentation masks. Segmentation is especially useful in numerous medical fields, such as stroke detection, pathology in microscopy, and the retail sector (e.g. virtual fitting rooms).

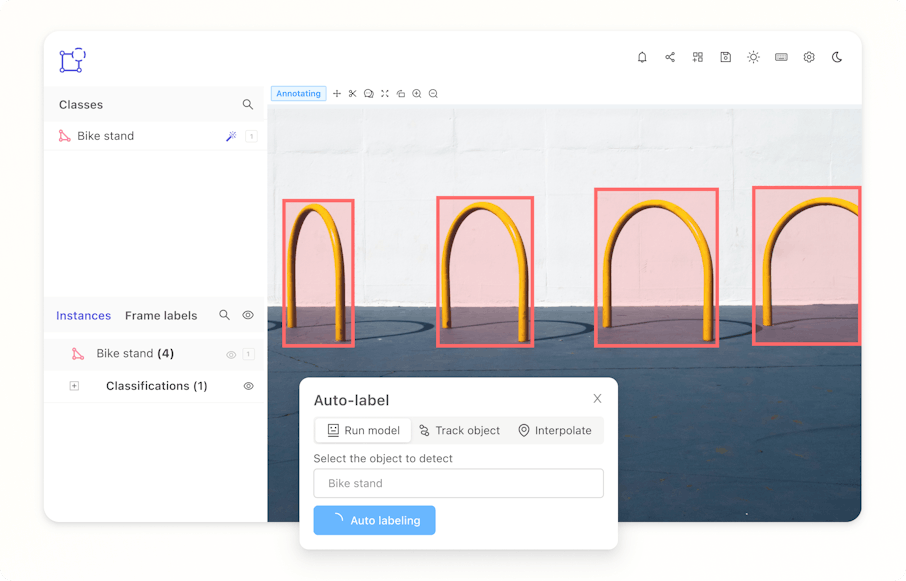

Automation features to increase outputs

When using a powerful image annotation tool, annotators can make massive gains from automation features. With the right tool, you can import model predictions programmatically.

Manually labeled and annotated image datasets can be used to train machine learning models that can then be used for automated pre-annotation of images. By leveraging these pre-annotations, human annotators can quickly and efficiently correct any errors or inaccuracies, rather than having to label each image from scratch. This approach can significantly reduce the cost and time required for annotation, while also improving the accuracy and consistency of the labeled data. Additionally, by incorporating automation features, such as pre-annotation, into the annotation process, project implementation can be accelerated, leading to more efficient and successful outcomes.

What are the Most Common Types of Image Annotation?

There are four most commonly used types of image annotations — bounding boxes, polygons, polylines, key points— and we cover each of them in more detail here:

Bounding Box

Drawing a bounding box around an object in an image — such as an apple or tennis ball — is one of several ways to annotate and label objects. With bounding boxes, you can draw rectangular boxes around any object, and then apply a label to that object. The purpose of a bounding box is to define the spatial extent of the object and to provide a visual reference for machine learning models that are trained to recognize and detect objects in images. Bounding boxes are commonly used in applications such as object detection, where the goal is to identify the presence and location of specific objects within an image.

Polygon

A polygon is another annotation type that can be drawn freehand. On images, these annotation lines can be used to outline static objects, such as a tumor in medical image files.

Polyline

A polyline is a way of annotating and labeling something static that continues throughout a series of images, such as a road or railway line. Often, a polyline is applied in the form of two static and parallel lines. Once this training data is uploaded to a computer vision model, the AI-based labeling will continue where the lines and pixels correspond from one image to another.

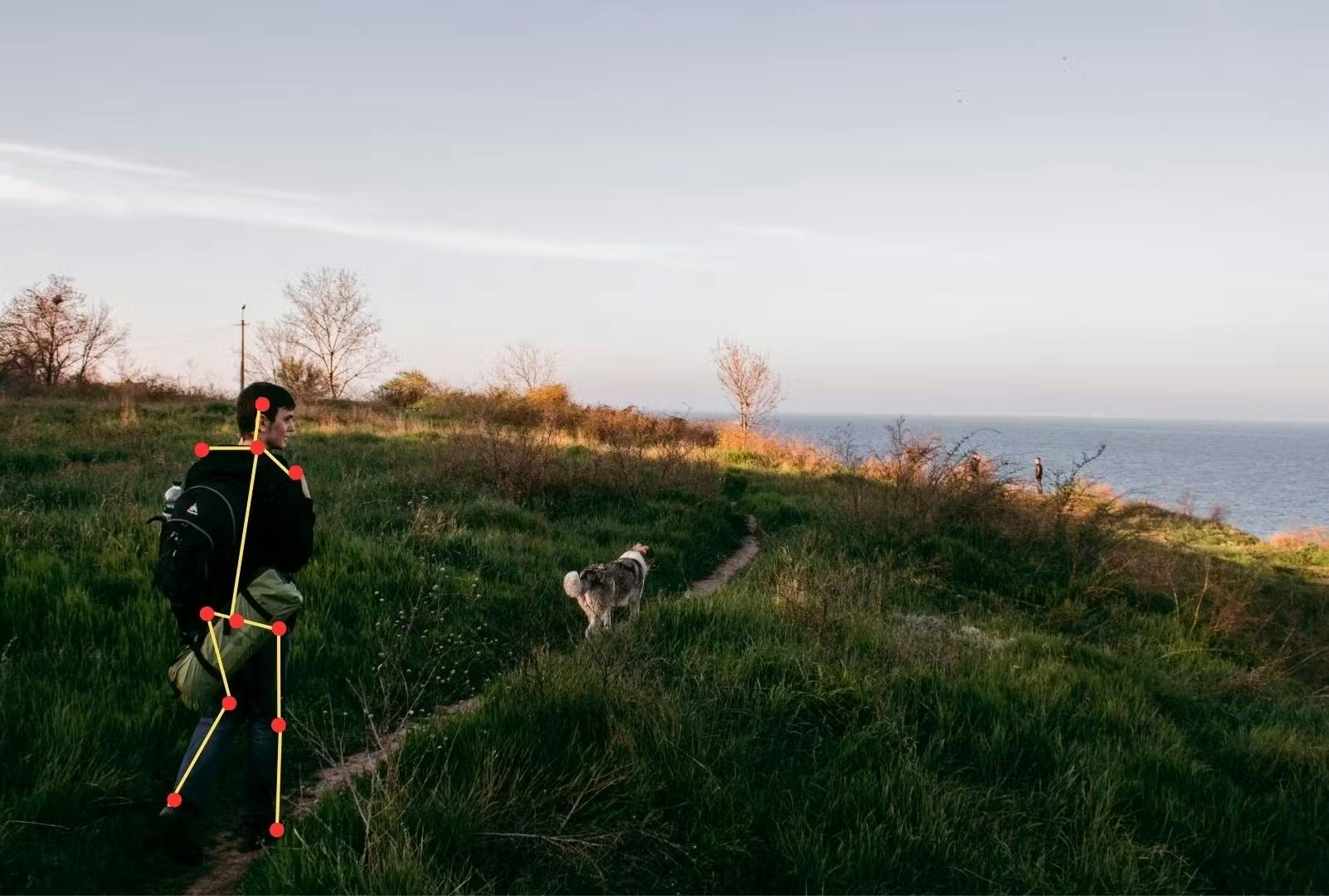

Keypoints

Keypoint annotation involves identifying and labeling specific points on an object within an image. These points, known as keypoints, are typically important features or landmarks, such as the corners of a building or the joints of a human body. Keypoint annotation is commonly used in applications such as pose estimation, action recognition, and object tracking, where the labeled keypoints are used to train machine learning models to recognize and track objects in images or videos. The accuracy of keypoint annotation is critical for these applications' success, as labeling errors can lead to incorrect or unreliable results.

Now let’s take a look at some best practices annotators can use for image annotation to create training datasets for computer vision models.

Challenges in the Image Annotation Process

While image annotation is crucial for many applications, such as object recognition, machine learning, and computer vision, it can be challenging and time-consuming. Here are some of the main challenges in the image annotation process:

Guaranteeing consistent data

Machine learning models need a good quality of consistent data to make accurate predictions. But complexity and ambiguity in the images may cause inconsistency in the annotation process.

Ambiguous images like images that contain multiple objects or scenes, make it difficult to annotate all the relevant information. For example, an image of a bird sitting on a dog could be labeled as “dog” and “bird”, or both.

Complex images may contain multiple objects or scenes, making it difficult to annotate all the relevant information. For example, an image of a crowded street scene may contain hundreds of people, cars, and buildings, each of which needs to be annotated.

Ontologies can help in maintaining consistent data in image annotation. An ontology is a formal representation of knowledge that specifies a set of concepts and the relationships between them. In the context of image annotation, an ontology can define a set of labels, classes, and properties that describe the contents of an image. By using an ontology, annotators can ensure that they use consistent labels and classifications across different images. This helps to reduce the subjectivity and ambiguity of the annotation process, as all annotators can refer to the same ontology and use the same terminology.

Inter-annotator variability

Image annotation is often subjective, as different data annotators may have different opinions or interpretations of the same image. For example, one person may label an object as a “chair”, while another person may label it as a stool. Dealing with inter-annotator variability is important because it can impact the quality and reliability of the annotated data, which can in turn affect the performance of downstream applications such as object recognition and machine learning.

Providing training and detailed annotation guidelines to annotations can help to reduce variability by ensuring that all annotators have a common understanding of all the annotation tasks and use the same criteria for labeling and classification. For example, on AI day, 2021, Tesla demonstrated how they follow a 80-page annotation guide. This document provides guidelines for human annotators who label images and data for Tesla’s driving car project. The purpose of the annotation guide is to ensure consistency and accuracy in the labeling process, which is critical for training machine learning models that can reliably detect and respond to different driving scenarios. By providing clear and comprehensive guidelines for annotation, Tesla can ensure that its self-driving car technology is as safe and reliable as possible.

Balancing costs with accuracy levels

Balancing cost with accuracy levels in image annotation means finding a balance between the level of detail and accuracy required for the annotations and the cost and effort required to produce them.

In many cases, achieving a high level of accuracy in image annotation requires significant resources, including time, effort, and expertise. This can include hiring trained annotators, using specialized annotation tools, and implementing quality control measures to ensure accuracy.

However, the cost of achieving high levels of accuracy may not always be justified, especially if the annotations are for tasks that do not require high precision or detail. For example, if the annotations are being used to train a machine learning model for a task that does not require high precision, such as image classification, then a lower level of accuracy may be sufficient. This could reduce the cost and labor associated with the annotation.

Therefore, balancing cost with accuracy levels in image annotation involves finding the optimal balance between the level of accuracy required for the specific task and the resources available for annotation. This can involve prioritizing the annotation of critical data, using a combination of automated and manual annotation, outsourcing to specialized providers, and evaluating and refining the annotation process.

Choosing a suitable annotation tool

Choosing a suitable annotation tool for image annotation can be challenging due to the variety of tasks, complexity of the tools, cost, compatibility, scalability, and quality control requirements.

Image annotation involves a wide range of tasks such as object detection, image segmentation, and image classification, which may require different annotation tools with different features and capabilities. Many annotation tools can be complex and difficult to use, especially for users who are not familiar with image annotation tasks.

The cost of annotation tools can vary widely, with some tools being free and others costing thousands of dollars per year. The tool should be compatible with the data format and software used for the image processing task.

The annotation tool should be able to handle large datasets and have features for quality control, such as inter-annotator agreement metrics and the ability to review and correct annotations.

If you are looking for image annotation tools, here is a curated list of the best image annotation tools for computer vision.

Overall, selecting a suitable annotation tool for image annotation requires careful consideration of the specific requirements of the task, the available budget and resources, and the capabilities and limitations of the available annotation tools.

Best Practices for Image Annotation for Computer Vision

Ensure raw data (images) are ready to annotate

At the start of any image-based computer vision project, you need to ensure the raw data (images) are ready to annotate. Data cleansing is an important part of any project. Low-quality and duplicate images are usually removed before annotation work can start.

Understand and apply the right label types

Next, annotators need to understand and apply the right types of labels, depending on what an algorithmic model is being trained to achieve. If an AI-assisted model is being trained to classify images, class labels need to be applied. However, if the model is being trained to apply image segmentation or detect objects, then the coordinates for boundary boxes, polylines, or other semantic annotation tools are crucial.

Create a class for every object being labeled

AI/ML or deep learning algorithms usually need data that comes with a fixed number of classes. Hence the importance of using custom label structures and inputting the correct labels and metadata, to avoid objects being classified incorrectly after the manual annotation work is complete.

Annotate with a powerful user-friendly data labeling tool

Once the manual labeling is complete, annotators need a powerful user-friendly tool to implement accurate annotations that will be used to train the AI-powered computer vision model. With the right tool, this process becomes much simpler, cost, and time-effective.

Annotators can get more done in less time, make fewer mistakes, and have to manually annotate far fewer images before feeding this data into computer vision models.

And there we go, the features and best practices annotators and project leaders need for a robust image annotation process in computer vision projects!

Explore the platform

Data infrastructure for multimodal AI

Explore product

Frequently asked questions

Encord provides solutions to tackle the complexities associated with annotating and curating low-quality images, like those often found in property listings. The platform enables users to create high-quality training labels, making it easier to define what constitutes an appealing room, thus enhancing the overall data quality.

Encord offers a range of annotation services specifically tailored for satellite imagery, including curation, prioritization, and detailed annotation tasks. These services are designed to enhance the overall efficiency and effectiveness of data labeling for geospatial projects.

Encord includes features that streamline the annotation process, helping users work more efficiently. These features are designed to minimize disruptions and enhance productivity, allowing users to focus on their tasks without the frustrations common in other annotation tools.

Encord employs advanced techniques to identify out-of-distribution images that can have the most impact on model performance. This targeted approach ensures that the annotation process focuses on data that will enhance the overall effectiveness of machine learning models.

Encord supports geotiff formats that contain multiple spectral bands, allowing users to annotate images that have been purchased or sourced from archives. The platform is designed to handle high-resolution imagery effectively, making it suitable for detailed analysis.

Encord supports various annotation types for satellite data, including bounding boxes for objects like ships and annotations for specific features such as clouds in images. This flexibility enables precise data preparation for machine learning tasks.

Encord includes features that facilitate the curation of images, ensuring that your annotation team can efficiently manage and organize tasks. This helps in reducing the manual effort involved in selecting and preparing images for annotation.

Yes, Encord allows users to select and annotate specific subsets of images based on heuristics or particular issues, such as camera calibration. This targeted approach helps in addressing unique challenges within the training process.

Users can add custom metadata to images in Encord, such as the date the image was captured or the location of the vending machine. This metadata assists in better filtering and organizing datasets for training.

Encord prefers using uncompressed image formats such as TIFF and PNG for high-quality annotation. These formats maintain the integrity of the data, which is crucial for precise pixel-level analysis.