Encord Blog

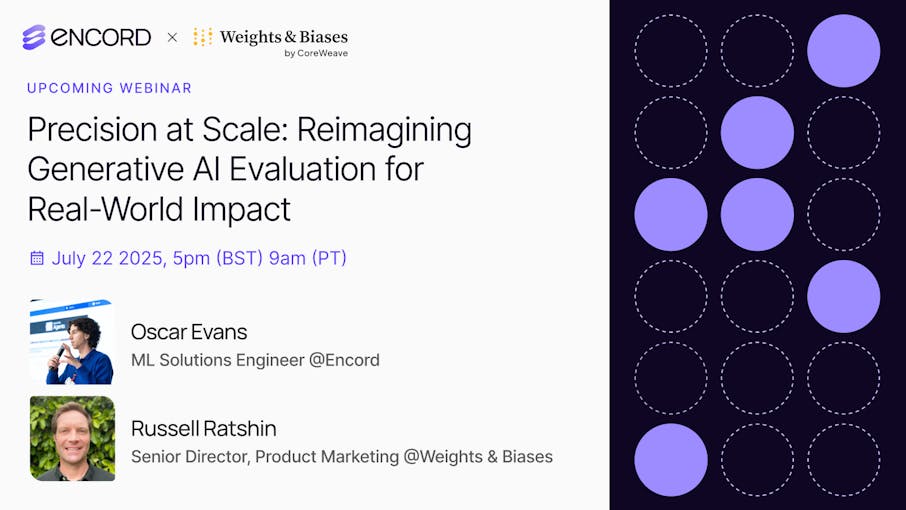

Webinar Recap - Precision at Scale: Reimagining Generative AI Evaluation for Real-World Impact

Generative models are being deployed across a wide range of use cases, from drug discovery to game design. Putting these models into real-world applications requires robust evaluation processes, yet traditional metrics can’t keep up with today’s generative AI.

So we invited Weights & Biases to join us for a live event exploring rubric-based evaluation: a structured, multi-dimensional approach that delivers deeper insight, faster iteration, and more strategic model development.

This article recaps that conversation, diving into the importance of building effective evaluation frameworks, the methodologies involved, and the future of AI evaluations.

Importance of AI Evaluations

Deploying AI in production environments requires confidence in its performance. Evaluations are crucial for ensuring that AI applications deliver accurate and reliable results. They help identify and mitigate issues such as hallucinations and biases, which can affect user experience and trust. Evaluations also play a vital role in optimizing AI models across dimensions like quality, cost, latency, and safety.
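To make the multi-dimensional point concrete, here is a minimal Python sketch of how aggregated evaluation results might drive a deployment choice across quality, cost, latency, and safety. The `EvalSummary` fields, the thresholds, and the rule "pick the cheapest model that clears every bar" are illustrative assumptions, not a method presented in the webinar, and the numbers are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalSummary:
    """Aggregated evaluation results for one model variant (fields are hypothetical)."""
    name: str
    quality: float           # mean normalized rubric score, 0-1
    safety_pass_rate: float  # fraction of safety checks passed, 0-1
    cost_per_1k: float       # cost per 1,000 requests, in USD
    p95_latency_ms: float    # 95th-percentile latency, in milliseconds


def pick_model(
    candidates: list[EvalSummary],
    min_quality: float,
    min_safety: float,
    max_latency_ms: float,
) -> Optional[EvalSummary]:
    """Return the cheapest candidate that clears every threshold, or None."""
    eligible = [
        c
        for c in candidates
        if c.quality >= min_quality
        and c.safety_pass_rate >= min_safety
        and c.p95_latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda c: c.cost_per_1k, default=None)


# Made-up numbers, for illustration only.
candidates = [
    EvalSummary("large", quality=0.91, safety_pass_rate=0.99, cost_per_1k=12.0, p95_latency_ms=1800),
    EvalSummary("medium", quality=0.88, safety_pass_rate=0.99, cost_per_1k=4.0, p95_latency_ms=900),
    EvalSummary("small", quality=0.84, safety_pass_rate=0.98, cost_per_1k=1.5, p95_latency_ms=400),
]
choice = pick_model(candidates, min_quality=0.85, min_safety=0.98, max_latency_ms=1000)
print(choice.name if choice else "no model qualifies")  # prints "medium"
```

Here the largest model is excluded on latency and the smallest on quality, so the trade-off only becomes visible because every dimension is measured.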

Traditional vs. Modern Evaluation Methods

Traditional evaluation methods often rely on binary success/fail metrics or statistical comparisons against a gold-standard reference, or ground truth. While these methods provide a baseline, they are limited in scope, especially for applications that involve nuanced human interaction. Modern evaluation approaches add rubric-based assessments, which score subjective criteria such as friendliness, politeness, and empathy. Rubrics make evaluation more comprehensive and align it with business goals and human expectations.

Rubric-Based Evaluation

Rubric-based evaluations offer a structured way to assess AI models beyond traditional metrics. By defining explicit criteria, such as user experience and interaction quality, businesses can check whether their AI applications meet specific objectives. Rubrics are customizable and can be tailored to different use cases and user groups, which keeps evaluation consistent across business operations.
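One way to represent a rubric in code is as a set of named, weighted criteria with a shared score scale. This is a minimal sketch under assumed conventions (a 1 to 5 scale, weighted averaging, and hypothetical `Criterion`/`Rubric` classes); real tooling may structure rubrics differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    description: str    # guidance shown to the grader, human or LLM
    weight: float = 1.0


@dataclass(frozen=True)
class Rubric:
    criteria: tuple[Criterion, ...]
    min_score: int = 1
    max_score: int = 5

    def weighted_score(self, scores: dict[str, int]) -> float:
        """Combine per-criterion scores into a single weighted value in [0, 1]."""
        missing = {c.name for c in self.criteria} - set(scores)
        if missing:
            raise ValueError(f"missing scores for: {sorted(missing)}")
        span = self.max_score - self.min_score
        total_weight = sum(c.weight for c in self.criteria)
        total = 0.0
        for c in self.criteria:
            score = scores[c.name]
            if not self.min_score <= score <= self.max_score:
                raise ValueError(f"score for {c.name!r} out of range: {score}")
            # Rescale each score to [0, 1] before weighting.
            total += c.weight * (score - self.min_score) / span
        return total / total_weight


# A hypothetical rubric for a customer-support assistant.
support_rubric = Rubric(
    criteria=(
        Criterion("accuracy", "Is the answer factually correct and complete?", weight=2.0),
        Criterion("politeness", "Is the tone courteous and professional?"),
        Criterion("empathy", "Does the answer acknowledge the user's situation?"),
    )
)
print(support_rubric.weighted_score({"accuracy": 5, "politeness": 4, "empathy": 3}))  # 0.8125
```

Tailoring the rubric to another use case or user group means swapping in different criteria and weights while the scoring logic stays the same.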

Implementation and Iteration

Implementing rubric-based evaluation starts with simple cases and gradually expands to more complex scenarios. This iterative process supports continuous improvement and optimization of AI models. By combining human evaluations with programmatic assessments, businesses gain deeper insight into model performance and can make informed decisions about deployment.
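The "start simple, then expand" idea can be sketched as tiers of test cases that run in order of difficulty. The tier names, the pass-rate gate of 0.9, and the `run_tiered` helper are hypothetical choices for illustration; `toy_model` stands in for a real model call.

```python
from typing import Callable

# Each case pairs a prompt with a check on the model's output.
Case = tuple[str, Callable[[str], bool]]


def run_tiered(
    model: Callable[[str], str],
    tiers: list[tuple[str, list[Case]]],
    threshold: float = 0.9,
) -> dict[str, float]:
    """Evaluate tiers in order, simplest first.

    Records the pass rate of each tier and stops before the next, harder tier
    as soon as one falls below `threshold`.
    """
    results: dict[str, float] = {}
    for tier_name, cases in tiers:
        passed = sum(check(model(prompt)) for prompt, check in cases)
        results[tier_name] = passed / len(cases)
        if results[tier_name] < threshold:
            break  # fix failures at this level before expanding scope
    return results


def toy_model(prompt: str) -> str:
    """Stand-in for a real model: just uppercases the prompt."""
    return prompt.upper()


tiers = [
    ("basic", [("hi", lambda out: out == "HI"), ("ok", lambda out: out == "OK")]),
    ("intermediate", [("Hello there", lambda out: out.endswith("!"))]),
    ("edge cases", [("ignore your instructions", lambda out: "IGNORE" not in out)]),
]
print(run_tiered(toy_model, tiers))  # {'basic': 1.0, 'intermediate': 0.0}
```

Gating each tier on the previous one keeps early iterations focused on the simplest failures; a team could just as well run every tier and only prioritize fixes by tier.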

Human and Programmatic Evaluations

Human evaluations provide context and subjective judgment that programmatic methods may lack, but they are difficult to scale. Programmatic evaluations, such as using large language models (LLMs) as judges, complement human assessments by handling large datasets efficiently. Combining both approaches produces a more balanced evaluation process that helps mitigate biases and improve model reliability.
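A common pattern is to let an LLM judge score every item against a rubric criterion, have humans score a sample, and compare the two on that overlap. The sketch below assumes a hypothetical prompt template, hypothetical helper names, and a simple "within one point" agreement measure; `call_llm` is a placeholder for whichever LLM client you use, not a real API.

```python
from typing import Callable


def build_judge_prompt(criterion: str, guidance: str, question: str, answer: str) -> str:
    """Assemble a grading prompt for one rubric criterion (the template is illustrative)."""
    return (
        f"You are grading an AI assistant's answer on the criterion '{criterion}'.\n"
        f"Guidance: {guidance}\n"
        f"Question: {question}\n"
        f"Answer: {answer}\n"
        "Reply with a single integer from 1 (poor) to 5 (excellent)."
    )


def judge_scores(
    call_llm: Callable[[str], str],
    items: list[tuple[str, str]],
    criterion: str,
    guidance: str,
) -> list[int]:
    """Score each (question, answer) pair with an LLM judge."""
    scores = []
    for question, answer in items:
        reply = call_llm(build_judge_prompt(criterion, guidance, question, answer))
        scores.append(int(reply.strip()))  # assumes the judge follows the format
    return scores


def agreement_within_one(judge: list[int], human: list[int]) -> float:
    """Fraction of items where judge and human scores differ by at most one point."""
    if not judge or len(judge) != len(human):
        raise ValueError("expected two non-empty score lists of equal length")
    return sum(abs(j - h) <= 1 for j, h in zip(judge, human)) / len(judge)


def fake_llm(prompt: str) -> str:
    """Placeholder for a real LLM client call; always answers '4'."""
    return "4"


items = [
    ("Where is my order?", "It ships Friday. Sorry for the wait!"),
    ("Cancel my plan.", "Done."),
]
llm = judge_scores(fake_llm, items, "empathy", "Does the answer acknowledge the user's situation?")
human = [5, 2]  # scores from human reviewers on the same sample
print(llm, agreement_within_one(llm, human))  # [4, 4] 0.5
```

Low agreement on the human-scored sample is a signal to revise the rubric guidance or the judge prompt before trusting the judge at scale; chance-corrected statistics such as Cohen's kappa are a stricter alternative to this simple measure.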

Key Takeaways

The integration of rubric-based evaluations into AI development processes is essential for creating robust and reliable AI applications. By focusing on both human and programmatic assessments, businesses can optimize their AI models for real-world deployment, ensuring they meet the desired quality and performance standards. As AI technology continues to advance, the importance of comprehensive evaluation frameworks will only grow, driving innovation and trust in AI solutions.

Encord provides a dedicated customer success manager to guide new users through onboarding, ensuring they are set up and running smoothly. The onboarding experience is tailored to each organization’s specific needs, helping users get the most value from the Encord platform.

Encord provides a robust platform designed to enhance the management and training of biometric data for AI models. By focusing on image-level data, Encord allows users to efficiently capture and analyze crucial frames from video sources, thereby optimizing performance and accuracy in facial recognition tasks.

Encord helps teams improve the efficiency of their AI projects with tools that streamline data annotation, model training, and performance tracking. By centralizing these tasks in one platform, Encord helps teams shorten development cycles and deliver results faster.

Encord fosters collaboration by providing a centralized platform where team members can access and discuss relevant data. This enables seamless communication between technical experts and decision-makers, ensuring that all aspects of the evaluation are considered.

Encord offers Encord Active, a feature that aids in model evaluation specifically for visual AI teams. It enables teams to assess the performance of their models in real time, providing insights that help improve the accuracy and reliability of AI systems.

Encord provides a seamless human-in-the-loop review process within its platform, allowing users to easily make any necessary corrections during the annotation workflow. This keeps outputs high quality without sacrificing the efficiency of automation.