Encord Blog

7 Best Data Curation Tools for Computer Vision of 2024

Finding and implementing a high-quality data curation tool in your computer vision MLOps pipeline can be a hard and tedious process, especially since most tools require a lot of manual integration work to fit your specific MLOps stack.

With so many platforms, tools, and solutions on the market, it can be hard to get a clear understanding of what each tool offers and which one to choose.

In this post, we will be covering the top data curation tools for computer vision as of 2024. We will compare them based on criteria such as annotation support, features, customization, data privacy, data management, data visualization, integration with the machine learning pipeline, and customer support.

Here’s what we’ll cover:

- Encord Index

- Sama

- Superb AI

- FiftyOne (Voxel51)

- Lightly.ai

- Scale Nucleus

- Clarifai

Best Data Curation Tools for Computer Vision

Take a look at the best data curation tools for computer vision in the sections below.

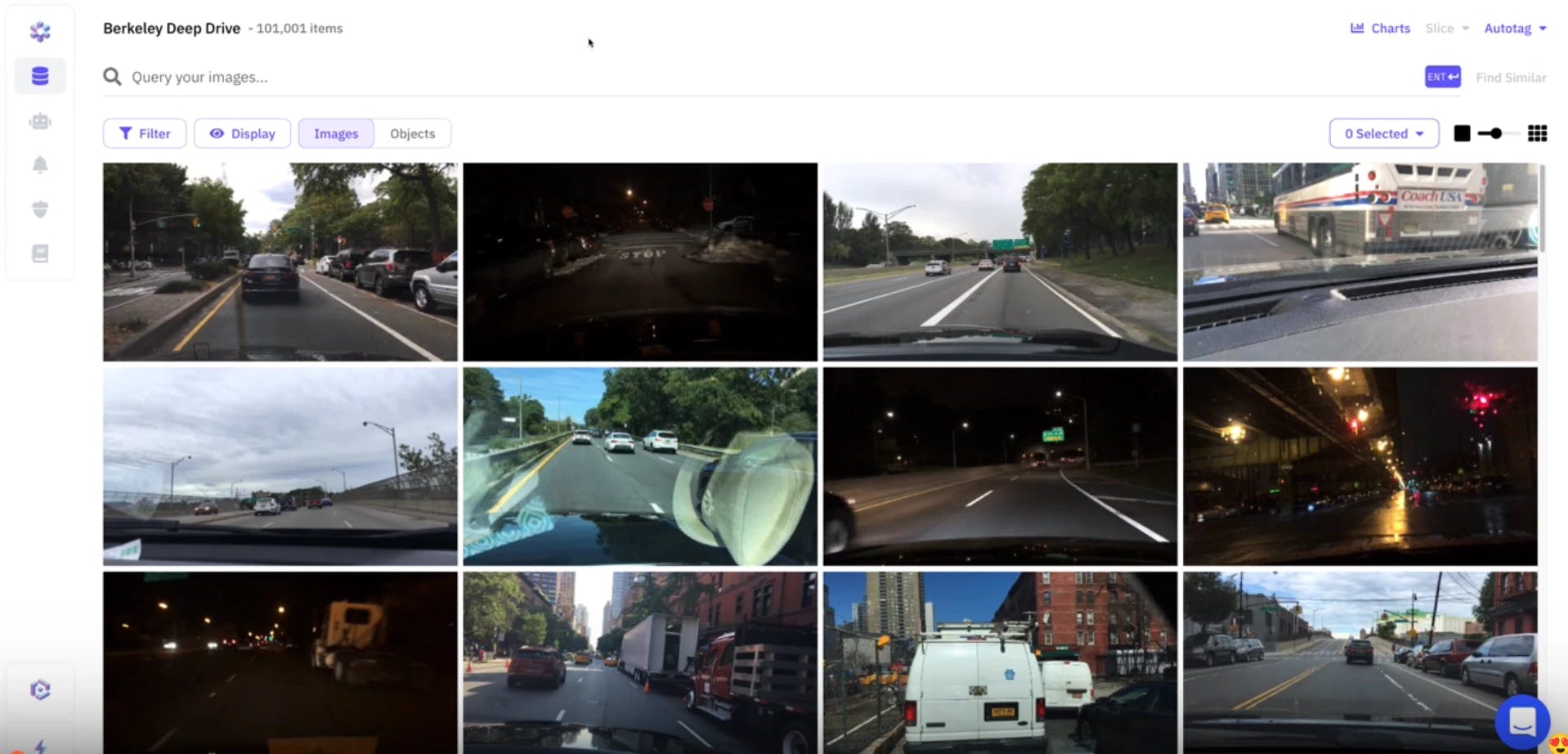

Encord Index

Encord Index is an end-to-end data management and curation tool designed to empower AI teams to manage their data more effectively. With Encord Index, teams can visualize, search, sort, and control their datasets, streamlining the curation process and significantly improving model quality and deployment speed. This platform is built to address the common challenges of data curation, making it simpler and more efficient to find and utilize the most valuable data for AI model training.

Benefits & Key features:

- User experience: It has a user-friendly interface (UI) that is easy to navigate.

- Scale: Explore and curate tens of millions of images and videos in the same folder.

- Integration: Use Encord’s SDK, API, and pre-built integrations to customize your data pipelines.

- Multimodal Search: Encord supports natural language search, external image search, and similarity search to find desired visual assets quickly.

- Metadata management: Manage all your metadata in one place with simple metadata import and schemas.

- Custom & Pre-computed Embeddings: Explore your data with Encord’s or your own embeddings using various embeddings-based features.

- Integration for Labeling: Direct integration with datasets for seamless data labeling.

- Error Surfacing: Automated detection and surfacing of data errors.

- Permissioning: Manage organization and team-level permissions.

- Security: Encord is SOC 2 and GDPR compliant.
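To make the similarity search idea from the list above a bit more concrete, here is a minimal sketch in plain Python. It is not Encord's implementation or SDK: the function names, file names, and three-dimensional embeddings are all hypothetical, and a real system would use embeddings with hundreds of dimensions and an approximate nearest-neighbor index rather than a full scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)

def most_similar(query, embeddings, k=3):
    """Return the k (item_id, score) pairs closest to the query embedding."""
    scored = [(item_id, cosine_similarity(query, vec))
              for item_id, vec in embeddings.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical toy embeddings, made up for illustration only.
embeddings = {
    "img_001.jpg": [0.9, 0.1, 0.0],
    "img_002.jpg": [0.8, 0.2, 0.1],
    "img_003.jpg": [0.0, 0.1, 0.9],
}
query = [1.0, 0.0, 0.0]  # embedding of the image we want "more like"
top = most_similar(query, embeddings, k=2)
print([item_id for item_id, _ in top])  # ['img_001.jpg', 'img_002.jpg']
```

The same ranking logic applies whether the embeddings come from a vendor or from your own model, which is why support for custom embeddings matters when comparing tools.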

Best for:

Companies looking to power their data curation process. Encord Index is not only the preferred solution for mature computer vision companies but also the best for companies just starting out and looking for a free and open source toolkit to add to their MLOps or training data pipelines.

Open source license:

Simple volume-based pricing for teams and enterprises.

Sama

Sama Curate employs models that interactively suggest which assets need to be labeled, even on pre-filtered and completely unlabeled artificial intelligence datasets.

This smart analysis and curation optimize your model accuracy while maximizing your ROI. Sama can help you identify the best data from your “big data” database to label so that your data science team can quickly optimize the accuracy of your deep learning model.

Benefits & Key features:

- Interactive embeddings and analytics

- Machine learning model monitoring

- On-prem deployment

- Provides a streamlined process for corporates

Best for:

ML engineering teams looking for a tool that comes with a labeling workforce.

Open source license:

Sama does not currently have an open source solution.

Superb AI DataOps

Superb AI DataOps ensures you always curate, label, and consume the best machine learning datasets. Use Superb AI's curation tools to curate better datasets and create AI that delivers value for end-users and your business.

Make data quality a near-foregone conclusion: DataOps takes the labor, complexity, and guesswork out of data exploration, curation, and quality assurance so you can focus solely on building and deploying the best models. It is good for streamlining the process of building training datasets for simple image data types.

Benefits & Key features:

- Similarity search

- Interactive embeddings

- Model-assisted data and label debugging

- Good for object detection as it supports bounding boxes, segmentation, and polygons

Best for:

The patient machine learning engineer looking for a new tool.

Open source license:

Superb AI does not currently have an open source solution.

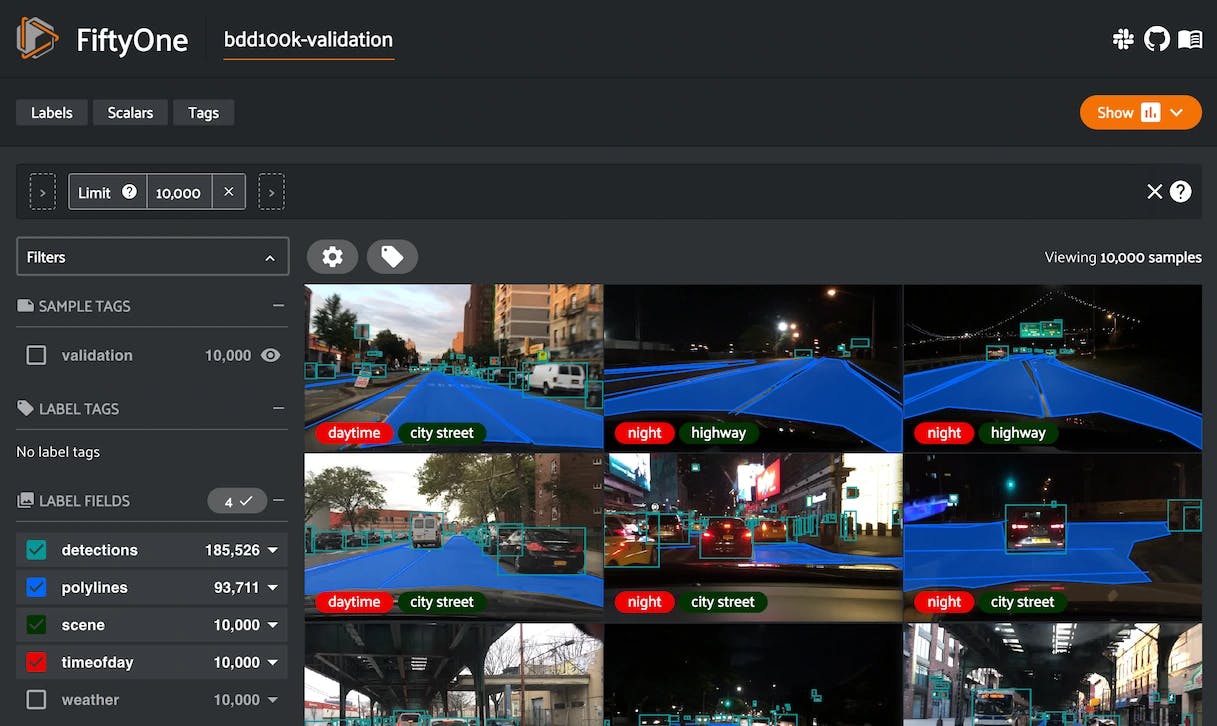

FiftyOne

Originally developed by Voxel51, FiftyOne is an open-source tool to visualize and interpret computer vision datasets.

The tool is made up of three components: the Python library, the web app (GUI), and the Brain. The Library and GUI are open-source whereas the Brain is closed-source.

FiftyOne does not contain any auto-tagging capabilities, and therefore works best with datasets that have previously been annotated. Furthermore, the tool supports image and video data but does not work for multimodal sensor datasets at this time.

FiftyOne lacks interesting visuals and graphs and does not have the best support for Microsoft Windows machines.

Benefits & Key features:

- FiftyOne has a large “zoo” of open source datasets and open source models.

- Advanced data analytics with FiftyOne Brain, a separate closed-source Python package.

- Good integrations with popular annotation tools such as CVAT.

Best for:

Individuals, students, and machine learning researchers with projects not requiring complex collaboration or hosting.

Open source license:

FiftyOne is licensed under Apache-2.0 and is available from Voxel51's GitHub repository. FiftyOne Brain is closed-source software.

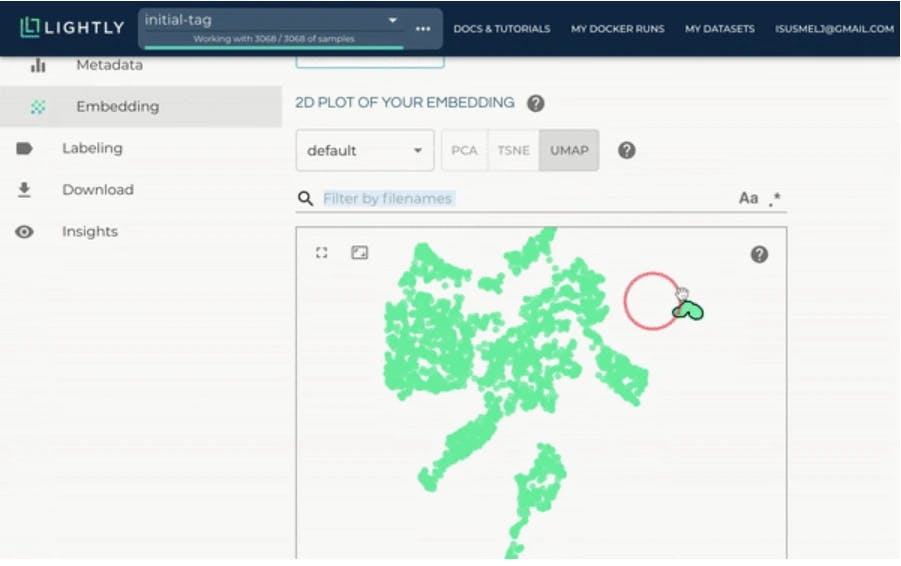

Lightly.AI

Lightly is a data curation tool specialized in computer vision. It uses self-supervised learning to find clusters of similar data within a dataset, and its neural networks help you select the best data to label next (a technique known as active learning).
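For readers new to active learning, the sketch below shows one common generic strategy, entropy-based uncertainty sampling: label the samples the current model is least sure about. This is an illustration only and not Lightly's algorithm or API; the function names and the predicted probabilities are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy of a class-probability vector (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_labeling(predictions, budget):
    """Pick the `budget` samples whose predictions have the highest entropy."""
    ranked = sorted(predictions,
                    key=lambda item_id: entropy(predictions[item_id]),
                    reverse=True)
    return ranked[:budget]

# Hypothetical softmax outputs from a 3-class model on unlabeled frames.
predictions = {
    "frame_a": [0.98, 0.01, 0.01],  # confident prediction, low labeling value
    "frame_b": [0.40, 0.35, 0.25],  # near-uniform, most uncertain
    "frame_c": [0.70, 0.20, 0.10],
}
to_label = select_for_labeling(predictions, budget=2)
print(to_label)  # ['frame_b', 'frame_c']
```

In practice, uncertainty is often combined with a diversity criterion (for example, spreading picks across embedding clusters) so the labeling budget is not spent on many near-identical hard samples.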

Benefits & Key features:

- Supports data selection through active learning algorithms and AI models

- On-prem version available

- Interactive embeddings based on metadata.

- Open source Python library

Best for:

ML Engineers looking for an on-prem deployment.

Open source license:

Lightly.ai’s main tool is closed-source, but they have an extensive Python library for self-supervised learning licensed under MIT, available on GitHub.

Scale Nucleus

Created in late 2020 by Scale AI, Nucleus is a data curation tool for the entire machine learning model lifecycle. Although Scale AI is best known as a provider of a data annotation workforce, the Nucleus platform allows users to search through their visual data for model failures (such as false positives) and find similar images for data collection campaigns. As of now, Nucleus supports image data, 3D sensor fusion, and video.

Sadly, Nucleus does not support smart data processing or any complex or custom metrics. Nucleus is part of the Scale AI ecosystem of various interconnected tools that streamline the process of building real-world AI models.

Benefits & Key features:

- Integrated data annotation and data analytics

- Similarity search

- Model-assisted label debugging

- Supports bounding boxes, polygons, and image segmentation

- Natural language processing support

Best for:

ML teams & teams looking for a simple data curation tool with access to an annotation workforce.

Open source license:

Scale Nucleus does not currently have an open source solution.

Clarifai

Clarifai is a computer vision platform that specializes in labeling, searching, and modeling unstructured data, such as images, videos, and text. As one of the earliest AI startups, they offer a range of features including custom model building, auto-tagging, visual search, and annotations. However, it's more of a modeling platform than a developer tool, and it's best suited for teams who are new to ML use cases. They have wide expertise in robotics and autonomous driving, so if you’re looking for ML consulting services in these areas we would recommend them.

Benefits & Key features:

- Integrated data annotation

- Support for most data types

- Broad model zoo similar to Voxel51

- End-to-end platform/ecosystem

- Supports semantic segmentation, object detection, and polygons.

Best for:

New ML teams & teams looking for consulting services.

Open source license:

Clarifai does not currently have an open source solution.

What is Data Curation in Computer Vision?

Data curation is a relatively new focus area for machine learning teams. Essentially, it covers the management and handling of data across your MLOps pipeline. More specifically, it refers to the process of 1) collecting, 2) cleaning, 3) organizing, 4) evaluating, and 5) maintaining data to ensure its quality, relevance, and suitability for your specific computer vision task.
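As a small illustration of the cleaning step, the sketch below removes exact duplicate files by hashing their bytes. It is a minimal example under simplifying assumptions: the file names and payloads are hypothetical stand-ins for real image bytes, and it only catches byte-identical copies, not resized or re-encoded near-duplicates (those usually need perceptual hashes or embeddings).

```python
import hashlib

def deduplicate(items):
    """Keep the first occurrence of each distinct byte payload.

    `items` is an iterable of (name, payload_bytes) pairs.
    """
    seen = set()
    unique = []
    for name, payload in items:
        digest = hashlib.sha256(payload).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(name)
    return unique

# Hypothetical payloads standing in for image file contents.
items = [
    ("a.jpg", b"\x00\x01"),
    ("b.jpg", b"\x00\x02"),
    ("a_copy.jpg", b"\x00\x01"),  # byte-identical to a.jpg
]
kept = deduplicate(items)
print(kept)  # ['a.jpg', 'b.jpg']
```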

In recent times it has also come to refer to finding model edge cases and surfacing relevant data to improve your model performance for these cases.

Before the entry of the data curation paradigm, data scientists and data operations teams simply fed their labeling team raw visual data, which was labeled and sent for model training. As training data pipelines have matured, this strategy is no longer practical or cost-effective.

This is where good data curation enters the picture.

Without good data curation practices, your computer vision models may suffer from poor performance, accuracy, and bias, leading to suboptimal results and even failure in some cases.

Furthermore, once you’re ready to scale your computer vision efforts and bring multiple models into production, the task of funneling important production data into your training data pipeline and prioritizing what to annotate next becomes increasingly challenging. In the base case, you’d want a structured approach, and in the best case a highly automated data-centric approach.

Lastly, as you discover edge cases for your computer vision models in a production environment, you would need to have a clear and structured process for identifying what data to send for labeling to improve your training data and cover the edge case.

Therefore, having the right data curation tools is crucial for any computer vision project.

What to Consider in a Data Curation Tool in Computer Vision?

Having worked with hundreds of ML and data science teams deploying thousands of models into production every year, we have gathered a list of best practices for selecting a tool. The list is not exhaustive, so if you have anything you would like to add, we would love to hear from you.

Data Prioritization

Selecting the right data is crucial for training and evaluating computer vision models. A good data curation tool should have the ability to filter, sort, and select the appropriate data for a given task. This includes being able to handle large datasets, as well as the ability to select data based on certain attributes or labels. If the tool supports reliable automation features for data prioritization that is a big plus.
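To show what filtering, sorting, and selecting by attributes can look like in code, here is a minimal sketch. The `ImageRecord` fields, the `brightness` metric, and the `prioritize` function are hypothetical names chosen for illustration; they do not correspond to any particular tool's schema.

```python
from dataclasses import dataclass

@dataclass
class ImageRecord:
    path: str
    label: str
    brightness: float        # hypothetical quality metric in [0, 1]
    model_confidence: float  # hypothetical model score in [0, 1]

def prioritize(records, label, max_brightness, limit):
    """Filter by label and brightness, then put low-confidence items first."""
    selected = [r for r in records
                if r.label == label and r.brightness <= max_brightness]
    selected.sort(key=lambda r: r.model_confidence)
    return selected[:limit]

records = [
    ImageRecord("night_01.jpg", "pedestrian", 0.15, 0.42),
    ImageRecord("day_01.jpg", "pedestrian", 0.80, 0.95),
    ImageRecord("night_02.jpg", "pedestrian", 0.20, 0.31),
    ImageRecord("night_03.jpg", "car", 0.10, 0.25),
]

# Build a labeling queue of dark pedestrian images the model struggles with.
queue = prioritize(records, label="pedestrian", max_brightness=0.3, limit=10)
print([r.path for r in queue])  # ['night_02.jpg', 'night_01.jpg']
```

A curation tool effectively runs queries like this at scale, over millions of records and with many more attributes, which is why query speed and automation are worth checking during evaluation.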

Visualizations

Customizable visualization of data is important for understanding and analyzing large datasets. A good tool should be able to display data in various forms such as tables, plots, and images, and allow for customization of these visualizations to meet the specific needs of the user.

Model-Assisted Insights

Model-assisted debugging is another important feature of a data curation tool. This allows for the visualization and analysis of model performance and helps to identify issues that may be present in the data or the model itself. This can be achieved through features such as confusion matrices, class activation maps, or saliency maps.
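As an example of the kind of insight a confusion matrix gives, the sketch below counts (true label, predicted label) pairs and surfaces the most frequent mistake. The labels and function names are made up for illustration; most teams would use an existing library for this, but the underlying bookkeeping is this simple.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred):
    """Count occurrences of each (true_label, predicted_label) pair."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return Counter(zip(y_true, y_pred))

def most_confused(matrix, k=1):
    """Return the k most frequent off-diagonal (true, predicted) pairs."""
    errors = [(pair, count) for pair, count in matrix.items()
              if pair[0] != pair[1]]
    errors.sort(key=lambda entry: entry[1], reverse=True)
    return errors[:k]

# Hypothetical ground truth and model predictions.
y_true = ["cat", "cat", "dog", "dog", "dog", "bird"]
y_pred = ["cat", "dog", "dog", "cat", "cat", "bird"]

matrix = confusion_matrix(y_true, y_pred)
worst = most_confused(matrix)
print(worst)  # [(('dog', 'cat'), 2)]
```

Here the model most often mistakes dogs for cats, which suggests collecting or relabeling more dog images that resemble cats.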

Modality Support

Support for different modalities is also important for computer vision. A good data curation tool should be able to handle multiple types of data, such as images, videos, DICOM, and GeoTIFF, while extending support to annotation types such as bounding boxes, segmentation, polylines, and keypoints.

Data Management

The data curation tool should efficiently handle large image datasets, offer seamless import/export functionalities, and provide effective organization features like hierarchical folders, tagging, and metadata management. It should support annotation versioning and quality control to ensure data integrity and consistency.

Simple & Configurable User Interface (UI)

A data curation tool is often used by multiple technical and non-technical stakeholders. Thus a good tool should be easy to navigate and understand, even for those with little experience in computer vision. Setting up recurring automated workflows should be supported while programmatic support for webhooks, API calls, and SDK should also be available.

Annotation Integration

Recurring annotation and labeling are a crucial part of data curation for computer vision. A good tool should have the ability to easily support annotation workflows and allow for the creation, editing, and management of labels and annotations.

Collaboration

Collaboration is also important for data curation. A good tool should have the ability to support multiple users and allow for easy sharing and collaboration on datasets and annotations. This can be achieved through features such as shared annotation projects and real-time collaboration.

Why Is Data Curation Important in Computer Vision?

Data curation is critical in computer vision because it directly affects the performance and accuracy of models. Computer vision models rely on large amounts of data to learn and make predictions, and the quality and relevance of that data determine the model's ability to generalize and adapt to new situations.

Conclusion

Data curation is a crucial aspect of any computer vision project. Without good data curation practices, your models may suffer from poor performance, accuracy, and bias. To ensure the best results, it is essential to have the right data curation tools.

In this article, we have covered the top 7 data curation tools for computer vision of 2024, comparing them based on criteria such as annotation support, features, customization, data privacy, data management, data visualization, integration with the machine learning pipeline, and customer support.

We hope that this article has provided valuable information and insights to help you make an informed decision on which data curation tool is best for your specific use case and budget. In any case, it is important to keep in mind that tool selection should be based on your specific needs, budget, and team size.
