Contents

What Is Data Annotation in the Context of Generative AI?

Why High-Quality Annotation Determines Gen AI Success

Annotation Challenges in Generative AI

Key Features to Look For in an AI Annotation Tool

Tools Overview

Best Data Annotation Tools for Generative AI

Which is the Best Data Annotation Tool for Generative AI?

Final Thoughts


Best Data Annotation Tools for Generative AI 2026


This guide to data annotation tools for Generative AI breaks down how teams can improve model accuracy and align LLMs with human values. It also explains how to scale AI projects with the right platforms and workflows.

Today, around 72% of companies use Gen-AI in at least one business function. This number is almost triple the share just three years ago. However, over half of artificial intelligence (AI) initiatives never reach production. Many Gen-AI pilots fail due to incomplete, biased, or poorly labeled data.

AI teams need structured feedback loops like Reinforcement Learning from Human Feedback (RLHF) to train safe, high-performing models. They also require specialized data-annotation platforms like Encord, which can manage multimodal data, annotation at scale, and automated quality checks.

This article explains what data annotation means and outlines the six must-have features of a modern annotation platform. We will also compare the best data annotation tools for Generative AI.

What Is Data Annotation in the Context of Generative AI?

Data annotation means adding human-readable labels to raw text, images, audio, video, or documents so a model can learn from them. In generative AI, the model's quality, safety, and ethics depend on how people label “what’s in the data” and “which output is better.”

Unlike traditional supervised learning, which uses labels to assign one correct category, Gen-AI annotation reflects more complex human judgment. It encodes human preferences, safety rules, and multimodal context to teach models how to think, not just what to see.

Why High-Quality Annotation Determines Gen AI Success

Quality human data annotation drives the success of generative AI projects. Accurate, diverse datasets help AI models deliver reliable, safe outputs. Without precise data labeling, models can produce hallucinated, biased, or irrelevant results that undermine their effectiveness. Accurate annotation offers the following benefits:

- Alignment & RLHF: Human preference labels guide LLMs and multimodal AI systems toward helpfulness and safety. These labels help AI experts to fine-tune model performance, ensuring their outputs match human values in diverse use cases. They also let teams develop and ship reliable AI models faster.

- Bias control: High-quality labeled datasets help prevent harmful or skewed outputs. Consistent annotation processes that cover data categories evenly reduce the risk of bias and keep the labeling process fair and traceable for teams.

- Model generalization: Without quality-labeled training datasets, hallucination rates increase, and models may struggle to generalize. The problem is most visible when LLMs face rare prompts or when multimodal models need fine-grained object detection and pixel-level semantic segmentation.

Annotation Challenges in Generative AI

Generative AI projects require robust data annotation, but several challenges complicate the process. Addressing them can help build high-quality datasets for AI models.

- Scale & Velocity: LLMs and multimodal AI models consume terabyte-class datasets. Manual data labeling cannot keep pace, causing pipelines to stall and model updates to lag. Teams need automation and batch workflows that stream high-volume, real-time input through a single data annotation platform.

- Multimodal Complexity: Modern use cases mix text, images, video, audio, LiDAR, and PDFs. Each data type requires different annotation types. Managing different editors or file formats encourages version drift and slow project management.

- Quality Assurance: Annotation errors are hard to catch at scale. Without rigorous quality control, labeled datasets degrade and model performance suffers. Human-in-the-loop workflows and active learning help maintain accuracy by flagging issues in real time.

- Security & Compliance: Annotated medical scans, chat logs, and financial docs often contain Personally Identifiable Information (PII) and Protected Health Information (PHI). Regulations such as GDPR and HIPAA, along with audit frameworks such as SOC 2, call for controls like encrypted storage and audit trails, and many teams also need on-premise deployment options.

- Cost Pressure: RLHF, red-teaming, and human-in-the-loop review can incur significant costs. Without AI-assisted labeling and usage-based pricing, annotation costs can quickly escalate, draining resources before AI applications reach production.
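As a rough illustration of the quality-assurance point above, one common way to quantify how consistently two annotators label the same items is Cohen's kappa, which corrects raw agreement for agreement expected by chance. The sketch below is a minimal, generic version; the function name and the sample "safe"/"unsafe" labels are illustrative assumptions, not taken from any particular platform.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical class throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical safety labels from two annotators on six model responses.
annotator_1 = ["safe", "safe", "unsafe", "safe", "unsafe", "safe"]
annotator_2 = ["safe", "unsafe", "unsafe", "safe", "unsafe", "safe"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))  # 0.667
```

A low score on a batch is usually a signal to revisit the labeling guidelines or send the batch to an expert reviewer, rather than a verdict on any single annotator.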

Key Features to Look For in an AI Annotation Tool

Given the challenges in data annotation, we must be cautious when selecting a platform. The best annotation tools streamline workflows, improve scalability, and ensure model performance in diverse AI applications. Below are some features to prioritize when choosing an annotation tool.

- RLHF Support: Look for platforms that support RLHF. It enables annotators to rank outputs, score safety, and generate reward signals for fine-tuning LLMs more efficiently.

- Multimodal Editors: Modern AI systems combine different data formats. A strong platform handles the annotation types each format needs, from bounding boxes and polygons in image annotation to pixel-level semantic segmentation. It also supports text annotation for natural language processing (NLP) and 3-D point-cloud labels for autonomous driving.

- AI-assisted Labeling & Active Learning: Look for a tool that uses AI-powered annotation to predict labels, auto-draw boxes, or suggest classes, so human annotators can focus on edge cases. This automation cuts costs on large datasets while boosting scalability.

- Collaboration & Quality Control: High-quality data requires reviewer consensus and real-time metrics dashboards. Look for task routing, comment threads, and role-based permissions that help data scientists, domain experts, and QA stay aligned.

- Secure Infrastructure: Data security is non-negotiable. Platforms must meet SOC 2 and GDPR standards and provide on-premise or cloud-based options to protect sensitive AI data, especially in regulated fields like healthcare.

- SDK / API & Cloud Integrations: Scalable tools provide APIs and SDKs for seamless integration with model pipelines. This helps in automation, supports Python-based workflows, and streamlines data management for end-to-end model training.
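To make the RLHF feature above more concrete, here is a minimal sketch of how an annotator's best-to-worst ranking of model responses might be expanded into pairwise "chosen vs. rejected" records, a format often used when training reward models. The function name, the record keys, and the sample prompt are assumptions for illustration and do not reflect any specific platform's export schema.

```python
from itertools import combinations


def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-to-worst ranking into pairwise preference records."""
    pairs = []
    # combinations() keeps input order, so the first item of every pair
    # is always the response the annotator ranked higher.
    for better, worse in combinations(ranked_responses, 2):
        pairs.append({"prompt": prompt, "chosen": better, "rejected": worse})
    return pairs


# Hypothetical ranking of three responses, best first.
pairs = ranking_to_pairs(
    "Explain what data annotation is.",
    ["response_A", "response_B", "response_C"],
)

for pair in pairs:
    print(pair["chosen"], ">", pair["rejected"])
```

A ranking of n responses yields n(n-1)/2 pairs, which is one reason ranking interfaces tend to be more label-efficient than asking annotators to compare two responses at a time.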

Tools Overview

{{table(tools)}}

Best Data Annotation Tools for Generative AI

Many annotation platforms now bundle multimodal editors, RLHF workflows, and active-learning automation so you can push large datasets through a single, secure pipeline. Below, we cover the best annotation tools that address the unique demands of data for generative AI.

Encord – Multimodal Data Platform Built for RLHF

Encord is a multimodal labeling tool that unifies text, image, video, audio, and native DICOM within one data annotation platform. This lets AI teams label all their data in a shared, user-friendly interface.

Analyze and annotate multimodal data in one view

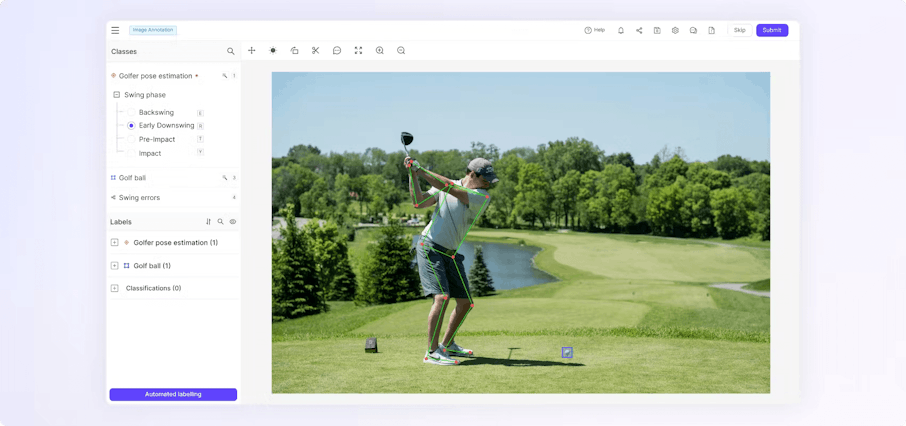

Encord Image Annotation

Encord’s image toolkit lets you draw bounding boxes, polygons, keypoints, or pixel-level semantic-segmentation masks in the same editor. It uses model-in-the-loop suggestions from Meta AI’s SAM 2 to automate labeling.

Auto-labeling reduces the annotation time by roughly 70% on large datasets while maintaining 99% accuracy. Every label is saved, so active-learning loops in Encord Active can flag drift or low-quality labels before they get used in training data.

Encord Video Annotation

Encord streams footage at native frame rates for video pipelines. It then applies smart interpolation to propagate labels forward and backward between labeled frames. As a result, you do not need to label each frame by hand, which yields six times faster labeling throughput.
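The general idea behind label interpolation can be sketched with simple linear interpolation of a bounding box between two hand-labeled keyframes. This is a generic illustration only: Encord's actual interpolation method is not described here, and the function name and the `(x, y, width, height)` box format are assumptions.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate (x, y, width, height) boxes between two keyframes."""
    if end_frame <= start_frame:
        raise ValueError("end_frame must be greater than start_frame")
    span = end_frame - start_frame
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        # t runs from 0.0 at the first keyframe to 1.0 at the second.
        t = (frame - start_frame) / span
        boxes[frame] = tuple(s + t * (e - s) for s, e in zip(start_box, end_box))
    return boxes


# An annotator labels frames 0 and 4; frames 1 to 3 are filled in automatically.
boxes = interpolate_boxes(0, (10.0, 20.0, 50.0, 40.0), 4, (30.0, 20.0, 50.0, 60.0))
print(boxes[2])  # (20.0, 20.0, 50.0, 50.0)
```

Linear interpolation works well for smooth motion over short gaps. Fast or erratic motion usually needs more keyframes or a tracking model, with a human correcting the frames where the propagated box drifts.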

Built-in advanced features include multi-object tracking, scene-level metadata, and automated pre-labeling to maintain high quality for gen AI training data. Meanwhile, background pre-computations allow annotators to scrub long clips without latency spikes.

Encord Text Annotation

On the NLP side, Encord supports annotations such as entity, intent, sentiment, and free-form span tagging. More importantly, it adds preference-ranking templates for RLHF so teams can vote on which LLM response is safer or more helpful.

Encord text annotation integrates SOTA models such as GPT-4o and Gemini 1.5 Pro into annotation workflows. This integration speeds up document annotation and improves the accuracy of text training data for LLMs.

Encord Audio Annotation

Encord’s audio module lets you slice, label, and classify waveforms for speech recognition, speaker diarization, and sound-event detection. Its AI-assisted labeling uses models like OpenAI Whisper to pre-label audio data, pauses, and speaker identities, reducing manual effort.

Paired with foundation models such as Google’s AudioLM, it accelerates audio curation. This allows a faster feed of high-quality clips into generative pipelines.

Scale AI – Generative-AI Data Engine

Scale AI offers a comprehensive Generative-AI Data Engine that supports end-to-end workflows for building and refining large language models (LLMs) and other generative AI systems. The platform includes tools for RLHF, synthetic data generation, and red teaming, essential for aligning models with human values and ensuring safety.

Its synthetic-data module generates millions of language or vision examples on demand. This helps improve coverage of rare classes in tasks such as object detection or multilingual NLP.

Scale AI’s expertise in combining AI-based techniques with human-in-the-loop annotation allows for high-quality, scalable data labeling. This approach meets the demands of complex generative AI projects.

Kili Technology – Hybrid Human-Plus-AI Labeling

Kili Technology combines human expertise with AI pre-labeling to achieve a balance of speed and accuracy that suits Gen AI’s demanding annotation tasks. It supports various data types, including text, images, video, and PDFs, and provides customizable annotation tasks optimized for quality.

A key feature is the use of foundation models like ChatGPT and SAM for AI-assisted pre-labeling, which accelerates the annotation process. Kili Technology also emphasizes collaboration with machine learning experts.

It provides tools for quality control, ensuring that the annotated data meets the high standards required for generative AI. Its flexible on-premise deployment options cater to industries like finance and defense, where data security is critical.

Appen

Appen is a leading provider of data annotation services, offering high-quality datasets for training generative AI models. It relies on a large, vetted workforce that delivers richly annotated data across text, image, audio, and video modalities.

Appen's workforce ensures multilingual support, reducing cultural bias in NLP outputs. It also offers differential privacy options to protect personal data.

Additionally, Appen provides pre-labeled datasets and custom data collection services, tailored to specific use cases in generative AI, such as sentiment analysis and content moderation.

Multimodal data annotation in Appen

Dataloop – RLHF Studio & Feedback Loops

Dataloop provides an enterprise-grade AI development platform with robust data annotation tools for generative AI. Dataloop’s RLHF studio supports prompt engineering and lets annotators give feedback on model-generated responses to prompts. It supports various data types, including images, video, audio, text, and LiDAR, and offers drag-and-drop data pipelines for efficient data management.

Dataloop integrates with multiple cloud services and offers a marketplace for models and datasets. This makes it a comprehensive solution for generative AI projects. Its Python SDK allows for programmatic control of annotation workflows, enhancing automation and scalability.

Amazon SageMaker Ground Truth Plus

Amazon SageMaker Ground Truth Plus is a data labeling service that supports the creation of high-quality training datasets for generative AI applications. It offers customizable templates for LLM safety reviews, dialogue ranking, and multimodal scoring.

Tight identity and access management (IAM) and VPC peering keep your data secure within your cloud environment. Once labeled, assets are written to S3 automatically, which can start SageMaker processes for retraining models or checking for bias. The system uses active learning to reassess low-confidence labels, and metrics dashboards display accuracy and recall rates.
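The idea of reassessing low-confidence labels can be illustrated with a small, generic uncertainty-sampling sketch: items whose predicted class probabilities have the highest entropy are routed to human reviewers first. This does not use the SageMaker API; the function names, the `budget` parameter, and the sample predictions are all hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability distribution (higher means less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_review(predictions, budget):
    """Return ids of the `budget` items the model is least certain about."""
    ranked = sorted(
        predictions, key=lambda item: entropy(item["probs"]), reverse=True
    )
    return [item["id"] for item in ranked[:budget]]


# Hypothetical model pre-labels with class probabilities per item.
predictions = [
    {"id": "img_001", "probs": [0.98, 0.01, 0.01]},
    {"id": "img_002", "probs": [0.40, 0.35, 0.25]},
    {"id": "img_003", "probs": [0.70, 0.20, 0.10]},
]

review_queue = select_for_review(predictions, budget=2)
print(review_queue)  # ['img_002', 'img_003']
```

Confident pre-labels such as `img_001` can be accepted or spot-checked, which concentrates the human review budget on the items most likely to be wrong.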

Amazon SageMaker Ground Truth image annotation

Which is the Best Data Annotation Tool for Generative AI?

Among the platforms we covered above, Encord stands out for turning complex, multi-step Gen-AI annotation workflows into a single, secure workspace. Its support for multimodal data annotation within a single platform makes it a better choice for teams working on generative AI projects. It also eliminates the need for multiple tools and reduces workflow complexity.

Encord's integration of RLHF workflows enables teams to compare and rank outputs from generative AI models and align them with ethical and practical standards. Whether it’s improving model behavior or meeting compliance needs, RLHF makes Encord a standout choice.

Encord supports seamless cloud integration with major cloud storage providers such as AWS S3, Azure Blob Storage, and Google Cloud Storage. This allows teams to efficiently manage and annotate large datasets directly from their preferred cloud environments.

Encord's developer-friendly API and SDK enable programmatic access to projects, datasets, and labels. This facilitates seamless integration into machine learning model pipelines and enhances automation.

Security is another area where Encord stands out. It is SOC 2, HIPAA, and GDPR compliant, offering robust security and encryption standards to protect sensitive and confidential data.

Final Thoughts

Data annotation tools are vital for building a generative AI application. They help create high-quality datasets that power models capable of producing human-like text, images, and more. These tools must manage large datasets and diverse data types to ensure AI outputs are reliable and aligned with human expectations.

Below are key points to remember when selecting and using data annotation tools for generative AI projects.

- Best Use Cases for Data Annotation Tools: The best data annotation tools excel at preference ranking, training models with human feedback, red-teaming models with challenge inputs, and enhancing model transparency. These functions are essential for developing safe, effective, and interpretable generative AI systems.

- Challenges in Data Annotation: Generative AI annotation comes with difficulties such as rapidly managing large-scale datasets, processing multimodal data, maintaining consistent data quality over time, ensuring security and regulatory compliance, and controlling costs. Addressing these challenges is essential for successful AI model deployment.

- Encord for Generative AI: Encord features a multimodal editor, RLHF support, and secure AI-assisted workflows. Other tools such as Scale AI, Labelbox, Kili, Appen, Dataloop, and SageMaker also provide strong capabilities. The best choice depends on your data types, project scale, and workflow needs.


Data annotation tools label and structure raw data (text, images, video, audio) to create high-quality datasets for training AI models. This is particularly important for generative AI tasks like RLHF and preference ranking.

Data annotation tools ensure accurate, unbiased training data, which is critical for aligning generative AI models with human values. This process helps reduce hallucinations in NLP applications and improves computer vision model performance.

Data automation uses AI and algorithms to streamline annotation tasks, such as pre-labeling or quality checks. This process reduces manual effort and accelerates the creation of training datasets for machine learning models.

Data labeling involves tagging or categorizing data, such as images and text, with annotations like bounding boxes. This process prepares high-quality training data for AI and generative AI models.

The top data annotation tool is Encord, which offers multimodal support, RLHF, and AI-assisted labeling for generative AI projects.

Encord provides robust data annotation tools that facilitate the labeling of images, videos, and other media, essential for training generative AI models. This capability allows users to create high-quality training datasets efficiently, ensuring that the models perform optimally based on well-annotated data.

Encord's approach to annotation for generative AI is primarily unsupervised, aiming to minimize user interaction while maximizing output quality. The platform is designed to streamline the user experience, allowing clients to quickly select from multiple generated outputs without extensive manual input.

Encord integrates advanced AI capabilities within its annotation workflows, enabling users to automate repetitive tasks and enhance the efficiency of data labeling. This includes tools for generating annotations based on pre-trained models, which can significantly speed up the annotation process.

Encord enhances collaboration by providing a centralized platform where annotation teams can interact directly with algorithm developers. This facilitates real-time feedback, improves data quality, and ensures that the annotated data meets the specific criteria needed for model training.

Flexibility is crucial in annotation platforms for generative AI projects because the requirements for labeling can evolve rapidly. Encord's flexibility enables teams to adapt their annotation strategies in response to new challenges and opportunities in the AI landscape.

Yes, Encord's annotation and curation tools play a critical role in improving the quality of responses generated by AI models. By ensuring that the training data is well-organized and accurately labeled, Encord helps enhance the performance of generative AI solutions.

Encord streamlines the annotation process by offering tools that allow users to efficiently manage and collaborate on data annotation tasks. The platform's user-friendly interface helps teams annotate data accurately, which is crucial for training effective AI models.

Encord leverages AI to assist in the labeling process, providing automated suggestions and accelerating the annotation workflow. This capability is particularly beneficial as data volumes increase, helping to maintain label quality while saving time.

Encord includes AI-assisted tooling designed to make the annotation process smooth and efficient. These tools help ensure high-quality annotations across various data modalities, supporting teams in managing their projects effectively.

Encord supports a wide array of data types for annotation, including images, videos, text, and audio. This versatility makes it suitable for various AI applications across different industries.