Encord Blog

Mistral 7B: Mistral AI's Open Source Model

Mistral AI has taken a significant stride forward with the release of Mistral 7B. This 7.3 billion parameter language model is making waves in the artificial intelligence community, boasting remarkable performance and efficiency.

Mistral 7B

Mistral 7B is not just another large language model (LLM); it is a compact generative AI model designed to deliver strong results at a small size. Mistral AI introduced Mistral-7B-v0.1 as its first model.

Mistral 7B Architecture

Behind Mistral 7B's performance lies its innovative attention mechanisms:

Sliding Window Attention

One of the key innovations that make Mistral 7B stand out is its use of Sliding Window Attention (SWA) (Child et al., Beltagy et al.). In each layer, every token attends only to the previous 4,096 hidden states instead of the entire preceding sequence. As a result, the compute cost of attention grows linearly with sequence length (proportional to window size × sequence length) rather than quadratically, which yields significant speed improvements on long inputs.
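To make the windowing concrete, here is a minimal NumPy sketch of a causal sliding-window mask applied to single-head attention. It is an illustration under stated assumptions, not Mistral AI's implementation: the function names are hypothetical, the window is taken to include the current token, and the dense score matrix is still computed in full, so the sketch shows the attention pattern rather than the speed-up.

```python
import numpy as np

def sliding_window_mask(seq_len: int, window: int) -> np.ndarray:
    """Boolean mask where entry [i, j] is True if query i may attend to key j.

    Assumption for this sketch: the window counts the current token,
    so query i sees keys i - window + 1 through i.
    """
    i = np.arange(seq_len)[:, None]  # query positions as a column
    j = np.arange(seq_len)[None, :]  # key positions as a row
    return (j <= i) & (j > i - window)  # causal AND inside the window

def sliding_window_attention(q, k, v, window):
    """Single-head attention restricted to a causal sliding window.

    q, k, v are arrays of shape (seq_len, head_dim).
    """
    seq_len, head_dim = q.shape
    scores = q @ k.T / np.sqrt(head_dim)
    mask = sliding_window_mask(seq_len, window)
    scores = np.where(mask, scores, -np.inf)  # block keys outside the window
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v
```

The number of allowed query-key pairs, `sliding_window_mask(n, w).sum()`, never exceeds `n * w`, which is where the linear scaling comes from. An optimized kernel would skip the masked entries instead of computing and discarding them.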

SWA also lets Mistral 7B handle long sequences. Because transformer layers are stacked, a token at one layer attends to a window of tokens in the layer below, and each of those tokens has already attended to its own window one layer further down. Higher layers therefore have access to information further in the past than a single window covers. This approach improves efficiency while still allowing the model to tackle tasks that involve lengthy texts.
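The way stacked layers extend the reach of a fixed window can be checked with a small simulation. The sketch below is illustrative only: `receptive_span` is a hypothetical helper, and it assumes the window includes the current token, so each layer adds `window - 1` positions of reach.

```python
import numpy as np

def receptive_span(seq_len: int, window: int, num_layers: int) -> int:
    """How many positions back the last token can draw information from
    after num_layers stacked sliding-window attention layers."""
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    mask = (j <= i) & (j > i - window)   # attention pattern of one layer
    reach = np.eye(seq_len, dtype=bool)  # before any layer: each token sees itself
    for _ in range(num_layers):
        # Token i reaches j if it attends to some token that already reaches j.
        reach = (mask.astype(int) @ reach.astype(int)) > 0
    last = seq_len - 1
    earliest = int(np.argmax(reach[last]))  # index of the first reachable position
    return last - earliest

# Example: a window of 4 tokens stacked over 3 layers.
print(receptive_span(seq_len=64, window=4, num_layers=3))
```

Under this definition the span grows by `window - 1` per layer until it hits the start of the sequence. Applying the same reasoning to a 4,096-token window and 32 layers (the layer count reported for Mistral 7B, which this article does not state) gives a theoretical span on the order of 131K tokens.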

Grouped-query Attention (GQA)

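Grouped-query attention lets several query heads share a single pair of key and value heads instead of giving every query head its own. Fewer key and value heads mean a smaller key-value cache and less memory traffic at each decoding step, which enables faster inference and makes Mistral 7B an efficient choice for real-time applications.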
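The head sharing behind GQA can be sketched in a few lines of NumPy. This is a simplified illustration, not the model's actual code: the function names and the `kv_cache_bytes` helper are hypothetical, and the sketch assumes plain causal attention with consecutive query heads assigned to the same key-value head.

```python
import numpy as np

def grouped_query_attention(q, k, v):
    """Causal attention where groups of query heads share key/value heads.

    q has shape (n_q_heads, seq_len, head_dim).
    k and v have shape (n_kv_heads, seq_len, head_dim).
    """
    n_q_heads, seq_len, head_dim = q.shape
    n_kv_heads = k.shape[0]
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group = n_q_heads // n_kv_heads
    # Each key/value head serves `group` consecutive query heads.
    k = np.repeat(k, group, axis=0)
    v = np.repeat(v, group, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    causal = np.tril(np.ones((seq_len, seq_len), dtype=bool))
    scores = np.where(causal, scores, -np.inf)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Cache size for keys plus values (hence the factor of 2)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value
```

Only the unrepeated `k` and `v` need to be cached, so the cache shrinks by the ratio of query heads to key-value heads. With 32 query heads and 8 key-value heads (the values reported in Mistral 7B's published configuration, not in this article), `kv_cache_bytes` returns a quarter of what full multi-head attention would need.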

Local Attention

Efficiency is a core principle behind Mistral 7B's design. To optimize memory usage during inference, the model uses a fixed attention span. Because no token ever attends further back than the window, the cache can be limited to sliding_window tokens and managed as a rotating buffer in which new entries overwrite the oldest ones. According to Mistral AI, this saves half of the cache memory for inference on sequences of 8,192 tokens without affecting model quality.
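A rotating buffer of this kind can be sketched as follows. The class below is a hypothetical, single-head illustration rather than Mistral AI's implementation: it stores at most `window` key and value vectors and writes each new token into slot `position % window`.

```python
import numpy as np

class RollingKVCache:
    """Fixed-size key/value cache that overwrites its oldest entry."""

    def __init__(self, window: int, head_dim: int):
        self.window = window
        self.keys = np.zeros((window, head_dim))
        self.values = np.zeros((window, head_dim))
        self.count = 0  # total number of tokens appended so far

    def append(self, key, value):
        slot = self.count % self.window  # wraps around once the buffer is full
        self.keys[slot] = key
        self.values[slot] = value
        self.count += 1

    def contents(self):
        """Return cached keys and values in chronological order."""
        if self.count <= self.window:
            order = np.arange(self.count)
        else:
            start = self.count % self.window  # slot holding the oldest token
            order = (start + np.arange(self.window)) % self.window
        return self.keys[order], self.values[order]

# Example: a window of 4 after 10 tokens keeps only the last 4.
cache = RollingKVCache(window=4, head_dim=1)
for t in range(10):
    cache.append(key=[float(t)], value=[float(t)])
keys, _ = cache.contents()
print(keys.ravel())
```

The buffer's memory footprint stays at `window` entries no matter how many tokens are generated. For a sequence of 8,192 tokens and a window of 4,096, that is half of what an unbounded cache would hold, which matches the saving described above.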

Mistral 7B Performance

Outperforming the Competition

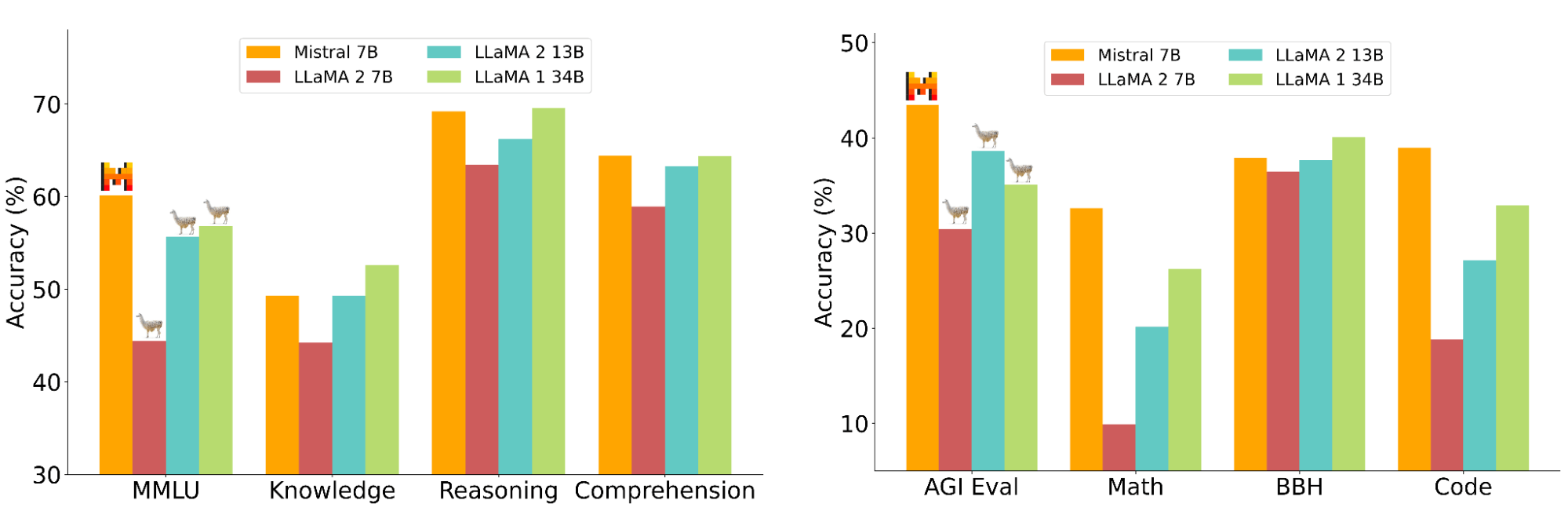

One of the standout features of Mistral 7B is its performance relative to its size. According to Mistral AI's reported results, it outperforms Llama 2 13B on all evaluated benchmarks and outperforms Llama 1 34B on many of them. In other words, Mistral 7B performs well across a wide range of tasks, making it a versatile choice for various applications.

Bridging the Gap in Code and Language

Mistral 7B achieves an impressive feat by approaching CodeLlama 7B's performance on code-related tasks while maintaining its prowess in English language tasks. This dual capability opens up exciting possibilities for AI developers and researchers, making it an ideal choice for projects that require a balance between code and natural language processing.

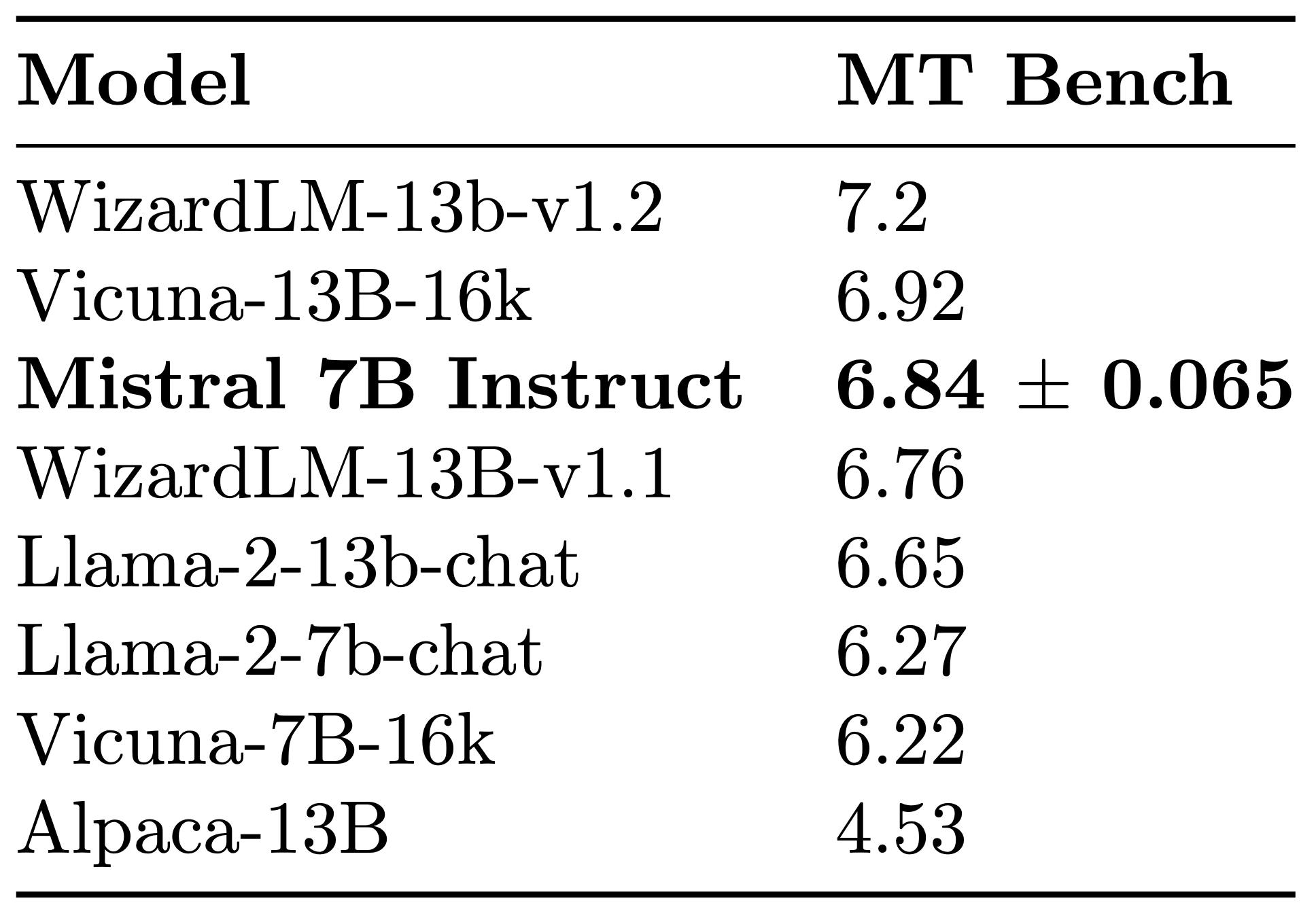

Fine-Tuning for Chat

Mistral 7B's versatility shines when it comes to fine-tuning for specific tasks. As a demonstration, the Mistral AI team fine-tuned it on instruction datasets publicly available on Hugging Face. The result is Mistral 7B Instruct, a model that outperforms all 7B models on MT-Bench and is comparable to 13B chat models. This showcases the model's adaptability and potential for various conversational AI applications.

Mistral 7B emerges as a formidable contender in the world of AI chatbots. Notably, OpenAI has just unveiled GPT-4 with image understanding, a remarkable advancement that integrates ChatGPT with capabilities spanning sight, sound, and speech, effectively pushing the boundaries of what AI-driven chatbots can achieve. As Mistral 7B now enters the open-source arena, it becomes an intriguing subject for comparison against these highly capable chatbots. The stage is set for an exciting showdown to determine how Mistral 7B holds its own against these top-tier conversational AI systems.

Open Source and Accessible

One of the most welcoming aspects of Mistral 7B is its licensing. Released under the Apache 2.0 license, it can be used, modified, and redistributed, including for commercial purposes, subject only to the license's standard attribution and notice terms. This open-source model encourages collaboration and innovation within the AI community.

The raw model weights of Mistral-7B-v0.1 are distributed via BitTorrent and are also available on Hugging Face. In addition, Mistral AI provides a deployment bundle for quickly setting up a completion API. The bundle is designed to work with major cloud providers, particularly those offering NVIDIA GPUs.
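For readers who want to try the Hugging Face weights, the following is a minimal sketch using the `transformers` library. It assumes that `transformers` and PyTorch are installed, that the repository ID is `mistralai/Mistral-7B-v0.1`, and that the machine has enough memory for a 7B-parameter model. The `generate` helper is a hypothetical name introduced for illustration, and calling it downloads the weights on first use.

```python
MODEL_ID = "mistralai/Mistral-7B-v0.1"  # assumed Hugging Face repository ID

def generate(prompt: str, max_new_tokens: int = 50) -> str:
    """Load the model and return a completion for `prompt`."""
    # Imported here so that defining the function does not require the library.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A call such as `generate("Sliding window attention is")` would return the prompt followed by the model's continuation. For repeated use, loading the tokenizer and model once and reusing them would be the better design; the sketch keeps everything in one function for brevity.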

Mistral AI is a startup based in Paris, France, with a mission to develop top-tier generative AI models. Despite competing in a field dominated by industry giants such as Google's DeepMind, Meta AI, Apple, and Microsoft, the company is making impressive strides.
