
on demand


The 2026 Annotation Analytics Masterclass: How to Measure Efficiency & Quality

Thu, Jan 15, 05:00 PM - 05:45 PM UTC


How do you really know whether your annotation pipeline is performing at the level your models need? Whether your annotators are working efficiently?

Whether your reviewers are catching the right issues, or creating new ones?

Most teams can’t answer these questions, and it slows down model development more than they realise.

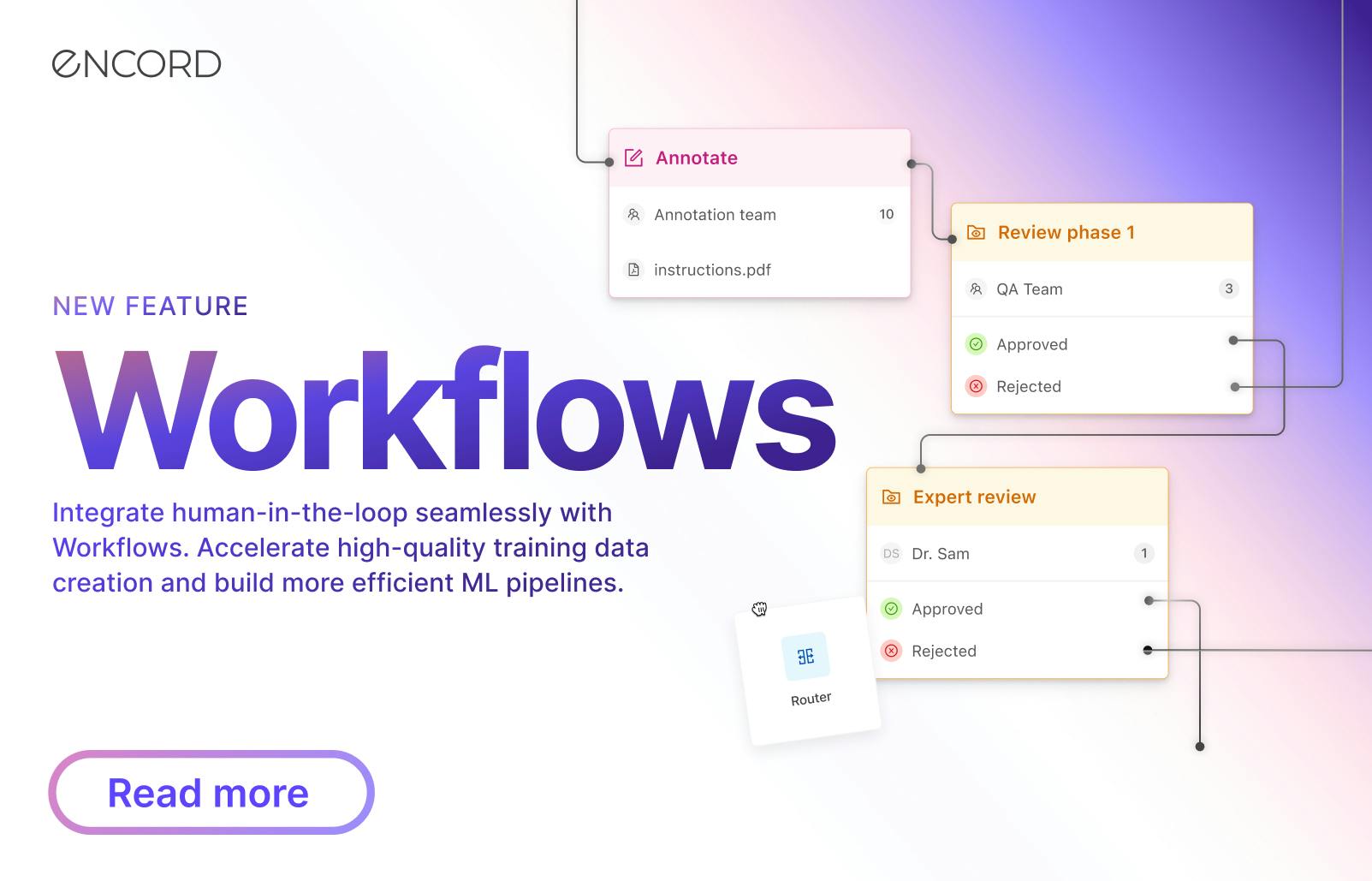

In this technical deep-dive, we’ll show how Encord’s analytics reveal whether your annotation team, workflow, and data quality are actually performing well, and how ML leaders can use these insights to iterate faster, reduce label noise, and run a genuinely high-performing pipeline.

In this session, you’ll learn how to:

- Measure team productivity using throughput, time per task, and stage efficiency metrics, such as the ratio of time spent annotating vs. reviewing.

- Identify quality gaps by examining deviation and issue tags, and by filtering tasks by issue resolution.

- Evaluate annotator consensus by analysing reviewer rejections and per-class accuracy across your ontology.

- Audit workflows using editor logs, event sources, and label-level statistics to trace inefficiencies and systematic errors across different modalities.
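The session does not define these metrics precisely, so the sketch below is only one plausible reading, computed from a hypothetical stage-level event log. The `StageEvent` record, its fields and the `pipeline_metrics` function are illustrative names, not part of Encord's SDK or export format. Under these assumptions, throughput is distinct tasks reaching review per hour, time per task is total stage time divided by the number of tasks, stage efficiency is annotating seconds over reviewing seconds, and the rejection rate per ontology class is rejections divided by reviews.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class StageEvent:
    """Hypothetical log record: one stage (annotate or review) of one task."""
    task_id: str
    stage: str              # "annotate" or "review"
    seconds: float          # time spent in this stage
    label_class: str        # ontology class of the task's label
    rejected: bool = False  # review stage only: did the reviewer reject it?


def pipeline_metrics(events: list[StageEvent], window_hours: float) -> dict:
    """Summarise throughput, time per task, stage ratio and per-class rejection rate."""
    seconds_by_stage = defaultdict(float)
    all_tasks, reviewed_tasks = set(), set()
    reviews = defaultdict(int)     # ontology class -> number of reviews
    rejections = defaultdict(int)  # ontology class -> number of rejections

    for e in events:
        seconds_by_stage[e.stage] += e.seconds
        all_tasks.add(e.task_id)
        if e.stage == "review":
            reviewed_tasks.add(e.task_id)
            reviews[e.label_class] += 1
            rejections[e.label_class] += int(e.rejected)

    total_seconds = sum(seconds_by_stage.values())
    review_seconds = seconds_by_stage["review"]
    return {
        # Distinct tasks that reached review, per hour of the observation window.
        "throughput_per_hour": len(reviewed_tasks) / window_hours,
        # Mean time spent on a task, summed over all of its stages (including rework).
        "seconds_per_task": total_seconds / len(all_tasks) if all_tasks else 0.0,
        # Stage efficiency: time annotating relative to time reviewing.
        "annotate_to_review_ratio": (
            seconds_by_stage["annotate"] / review_seconds if review_seconds else float("inf")
        ),
        # Share of reviews that ended in rejection, per ontology class.
        "rejection_rate_by_class": {cls: rejections[cls] / reviews[cls] for cls in reviews},
    }


# Usage with a tiny made-up log: task t2 is rejected once, reworked, then re-reviewed.
events = [
    StageEvent("t1", "annotate", 120, "car"),
    StageEvent("t1", "review", 30, "car"),
    StageEvent("t2", "annotate", 90, "pedestrian"),
    StageEvent("t2", "review", 45, "pedestrian", rejected=True),
    StageEvent("t2", "annotate", 60, "pedestrian"),
    StageEvent("t2", "review", 15, "pedestrian"),
]
m = pipeline_metrics(events, window_hours=1.0)
print(m)
```

Keeping raw per-stage events rather than pre-aggregated totals is a deliberate choice here: the same metrics can later be sliced by annotator, ontology class, or time window without re-collecting data.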

Who it’s for:

ML and AI engineers, data operations teams, and technical practitioners who manage or rely on large-scale annotation pipelines. If you’re responsible for diagnosing quality issues, monitoring annotator performance, reducing label noise, or ensuring your dataset is stable enough for reliable model training, this session is designed for you.

Register to join the session live or to receive the recording.

Speakers

Jim Broadbent