Software To Help You Turn Your Data Into AI

Forget fragmented workflows, annotation tools, and Notebooks for building AI applications. Encord Data Engine accelerates every step of taking your model into production.

Annotation and labeling of raw data — images and videos — for machine learning (ML) models is the most time-consuming and laborious, albeit essential, phase of any computer vision project.

Quality outputs and the accuracy of an annotation team’s work have a direct impact on the performance of any machine learning model, regardless of whether an AI (artificial intelligence) or deep learning algorithm is applied to the imaging datasets.

Organizations across dozens of sectors — healthcare, manufacturing, sports, military, smart city planners, automation, and renewable energy — use machine learning and computer vision models to solve problems, identify patterns, and interpret trends from image and video-based datasets.

Every computer vision project starts with annotation teams labeling and annotating the raw data: vast quantities of images and videos. Successful annotation outcomes ensure an ML model can ‘learn’ from this training data, solving the problems organizations and ML team leaders set out to solve.

Once the problem and project objectives and goals have been defined, organizations have a not-so-simple choice for the annotation phase: Do we outsource or keep imaging and video dataset annotation in-house?

In this guide, we seek to answer that question, covering the pros and cons of outsourced video and image data labeling vs. in-house labeling, 7 best practice tips, and what organizations should look for in annotation and machine learning data labeling providers.

Let’s dive in . . .

In-house data labeling and annotation, as the name suggests, involves recruiting and managing an internal team of dataset annotators and big data specialists. Depending on your sector, this team could be image and video specialists, or professionals in other data annotation fields.

Before you decide, “Yes, this is what we need!”, it’s worth considering the pros and cons of in-house data labeling compared to outsourcing this function.

Even for experienced project leaders, this isn’t an easy call to make. In many cases, 6 or 7-figure budgets are allocated for machine learning and computer vision projects. Outcomes and outputs depend on the quality and accurate labeling of image and video annotation training datasets, and these can have a huge impact on a company, its customers, and stakeholders.

Hence the need to consider the other option: Should we consider outsourcing data annotation projects to a dedicated, experienced, proven data labeling service provider?

What is Outsourced Data Labeling?

Instead of recruiting an in-house team, many organizations generate a more effective return on investment (ROI) by partnering with third-party, professional, data annotation service providers.

Taking this approach isn’t without risk, of course. Outsourcing never is, regardless of what services the company outsources, and no matter how successful, award-winning, or large a vendor is. There’s always a danger something will go wrong. Not everything will turn out as you hoped.

However, in many cases, organizations in need of video and image annotation and data labeling services find the upsides outweigh the risks and costs of doing this in-house. Let’s take a closer look at the pros and cons of outsourced annotation and labeling.

Now let’s review what you should look for in an annotation provider, and what you need to be careful of before choosing who to work with.

Outsourcing data annotation is a reliable and cost-effective way to ensure training datasets are produced on time and within budget. Once an ML team has training data to work with, they can start testing a computer vision model. The quality, accuracy, and volume of annotated and labeled images and videos play a crucial role in computer vision project outcomes.

Consequently, you need a reliable, trustworthy, skilled, and outcome-focused data labeling service provider. Project leaders need to look for a partner who can deliver:

At the same time, ML and computer vision project leaders — those managing the outsourced relationship and budget — need to watch out for potential pitfalls.

Common pitfalls include:

Now let’s dive into the 7 best practice tips you need to know when working with an outsourced annotation provider.

Start Small: Commission a Proof of Concept Project (POC)

Data annotation outsourcing should always start with a small-scale proof of concept (POC) project to test a new provider's abilities, skills, tools, and team. Ideally, POC accuracy should be in the 70-80% range. Over time, feedback loops from ML and data ops teams can improve accuracy and outcomes, and reduce dataset bias.

Benchmarking is equally important, and we cover that and the importance of leveraging internal annotation teams shortly.

Carefully Monitor Progress

Annotation projects often operate on tight timescales, dealing with large volumes of data being processed every day. Monitoring progress is crucial to ensuring annotated datasets are delivered on time, at the right level of accuracy, and at the highest quality possible.

As a project leader, you need to carefully monitor progress against internal and external provider milestones. Otherwise, you risk data being delivered months after it was originally needed to feed into a computer vision model. Once you’ve got an initial batch of training data, it’s easier to assess a provider for accuracy.

Monitor and Benchmark Accuracy

When the first set of images or videos is fed into an ML/AI-based or computer vision model, the accuracy might be 70%. A model is learning from the datasets it receives. Improving accuracy is crucial. Computer vision models need larger annotated datasets with a higher level of accuracy to improve the project outcomes, and this starts with improving the quality of training data.

One way to do this is to monitor and benchmark accuracy against open source datasets and against imaging data your company has already used in machine learning models. Public benchmark datasets, such as COCO and numerous others, are equally useful for this purpose.
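As a rough illustration of benchmarking a provider's labels against a trusted reference set, the sketch below scores bounding boxes with intersection over union (IoU). It assumes a simplified setup that is not described in this article: one box per image, boxes stored as (x_min, y_min, x_max, y_max), and a hypothetical IoU threshold of 0.5. The function names are illustrative, not part of any particular tool.

```python
from typing import Dict, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def benchmark_accuracy(
    gold: Dict[str, Box],
    provider: Dict[str, Box],
    threshold: float = 0.5,  # hypothetical cut-off; agree on one with your team
) -> float:
    """Share of reference boxes the provider matched at or above the threshold."""
    if not gold:
        return 0.0
    hits = sum(
        1
        for image_id, gold_box in gold.items()
        if image_id in provider and iou(gold_box, provider[image_id]) >= threshold
    )
    return hits / len(gold)


# Illustrative data only: one exact match, one poor match.
gold = {"img_1": (0, 0, 10, 10), "img_2": (0, 0, 10, 10)}
provider = {"img_1": (0, 0, 10, 10), "img_2": (5, 5, 15, 15)}
print(benchmark_accuracy(gold, provider))  # 0.5
```

Real projects usually have several objects per image and need a matching step between boxes, so treat this as a starting point rather than a complete evaluation.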

Keep Mistakes & Errors to a Minimum

Mistakes and errors cost time and money. Outsourced data labeling providers need a responsive process to correct them quickly, re-annotating datasets as needed.

With the right tools, processes, and proactive data ops teams internally, you can construct customized label review workflows to ensure the highest label quality standards possible. Using an annotation tool such as Encord can help you visualize the breakdown of your labels in high granularity to accurately estimate label quality, annotation efficiency, and model performance.

The more time and effort you put into reducing errors, bias, and unnecessary mistakes, the higher the level of annotation quality you can achieve when working closely with a dataset labeling provider.

Keep Control of Costs

Costs need to be monitored closely, especially when re-annotation is required. As a project leader, you need to ensure costs are in line with project estimates, with an acceptable margin for error. Every annotation project budget needs contingencies for overruns.

However, you don’t want this getting out of control, especially when any time and cost overruns are the fault of an external annotation provider. Agree on all of this before signing any contract, and ask to see key performance indicator (KPI) benchmarks and service level agreements (SLAs).

Measure performance against agreed timescales, quality assurance (QA) controls, KPIs, and SLAs to avoid annotation project cost overruns.
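One simple way to keep an eye on spend is to compare actual costs with the estimate plus an agreed contingency. The sketch below is a minimal example of that arithmetic; the 10% contingency and the function name are assumptions for illustration, not figures from this guide.

```python
from typing import Dict, Union


def budget_status(
    estimate: float,
    spent: float,
    contingency: float = 0.10,  # hypothetical 10% overrun allowance
) -> Dict[str, Union[float, bool]]:
    """Compare spend so far with the estimate plus a contingency margin."""
    if estimate <= 0:
        raise ValueError("estimate must be positive")
    limit = estimate * (1 + contingency)
    return {
        "limit": limit,
        # Positive means over the original estimate, negative means under it.
        "overrun_ratio": spent / estimate - 1,
        "within_contingency": spent <= limit,
    }


# Illustrative figures only.
report = budget_status(estimate=100_000, spent=105_000)
print(report["within_contingency"])  # True
```

Running a check like this per milestone, rather than at the end of the project, makes it easier to raise overruns with a provider while there is still time to correct course.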

Leverage In-house Annotation Skills to Assess Quality

Internally, the team receiving datasets from an external annotation provider needs to have the skills to assess image and video labels and metadata for quality and accuracy. Before a project starts, set up the quality assurance workflows and processes to manage the pipeline of data coming in. Only once complete datasets have been assessed (and any errors corrected) can they be used as training data for machine learning models.

Use Performance Tracking Tools

Performance tracking tools are a vital part of the annotation process. We cover this in more detail next. With the right performance tracking tools and a dashboard, you can create label workflow tools to guarantee quality annotation outputs.

Clearly defined label structures reduce annotator ambiguity and uncertainty. You can more effectively guarantee higher-quality results when annotation teams use the right tools to automate image and video data labeling.

Data operations team leaders need a real-time overview of annotation project progress and outputs. With the right tool, you can gain the insight and granularity you need to assess how an external annotation team is progressing.

Are they working fast enough? Are the outputs accurate enough? Questions that project managers need to ask continuously can be answered quickly with a performance dashboard, even when the annotators are working several time zones away.

Dashboards can show you a whole load of insights: a performance overview of every annotator on the project, annotation rejection and approval ratings, time spent, the volume of completed images/videos per day/team member, the types of annotations completed, and a lot more.
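To make the idea of per-annotator metrics concrete, here is a small sketch that aggregates review records into approval rate and average time per label. The record fields (annotator, approved, seconds_spent) are hypothetical and do not reflect the export format of any specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class ReviewRecord:
    """One reviewed label; field names are assumptions for this sketch."""
    annotator: str
    approved: bool
    seconds_spent: float


def summarize(records: Iterable[ReviewRecord]) -> Dict[str, Dict[str, float]]:
    """Aggregate label count, approval rate, and average time per annotator."""
    totals = defaultdict(lambda: {"labels": 0, "approved": 0, "seconds": 0.0})
    for record in records:
        entry = totals[record.annotator]
        entry["labels"] += 1
        entry["approved"] += int(record.approved)
        entry["seconds"] += record.seconds_spent
    return {
        name: {
            "labels": entry["labels"],
            "approval_rate": entry["approved"] / entry["labels"],
            "avg_seconds_per_label": entry["seconds"] / entry["labels"],
        }
        for name, entry in totals.items()
    }


# Illustrative records only.
records = [
    ReviewRecord("ana", True, 10.0),
    ReviewRecord("ana", False, 30.0),
    ReviewRecord("ben", True, 20.0),
]
print(summarize(records)["ana"]["approval_rate"])  # 0.5
```

A dashboard typically adds time ranges and annotation types on top of this, but the underlying figures are the same kind of simple ratios.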

Example of the performance dashboard in Encord

Annotation projects require consensus benchmarks to ensure accuracy. Applying annotations, labels, metadata, bounding boxes, classifications, keypoints, object tracking, and dozens of other annotation types to thousands of images and videos takes time. Mistakes are made. Errors happen.

Your aim is to reduce those errors, mistakes, and misclassifications as much as possible. To ensure the highest level of accuracy in datasets that are fed into computer vision models, benchmark datasets and other quality assurance tools can help you achieve this.
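A consensus benchmark can be as simple as having several annotators label the same items and checking how often they agree. The sketch below uses a plain majority vote over classification labels; the data layout and function name are assumptions, and other agreement measures may suit your project better.

```python
from collections import Counter
from typing import Dict, List, Tuple


def consensus(labels_by_item: Dict[str, List[str]]) -> Dict[str, Tuple[str, float]]:
    """Return the majority label and the share of annotators who chose it."""
    result = {}
    for item_id, labels in labels_by_item.items():
        if not labels:
            continue  # nothing to vote on for this item
        winner, votes = Counter(labels).most_common(1)[0]
        result[item_id] = (winner, votes / len(labels))
    return result


# Illustrative labels only: three annotators per image.
votes = {
    "img_1": ["car", "car", "truck"],
    "img_2": ["dog", "dog", "dog"],
}
for item_id, (label, agreement) in consensus(votes).items():
    print(item_id, label, round(agreement, 2))
```

Items with low agreement are good candidates for review, clearer labeling instructions, or extra annotator training.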

When working with a new provider, annotation training and onboarding for tools they’re not familiar with is time well spent. It’s worth investing in annotation training as required, especially if you’re asking an annotation team to do something they’ve not done before.

For example, you might have picked a provider with excellent experience, but they’ve never done human pose estimation (HPE) before. Ensure training is provided at this stage to avoid mistakes and cost overruns later on.

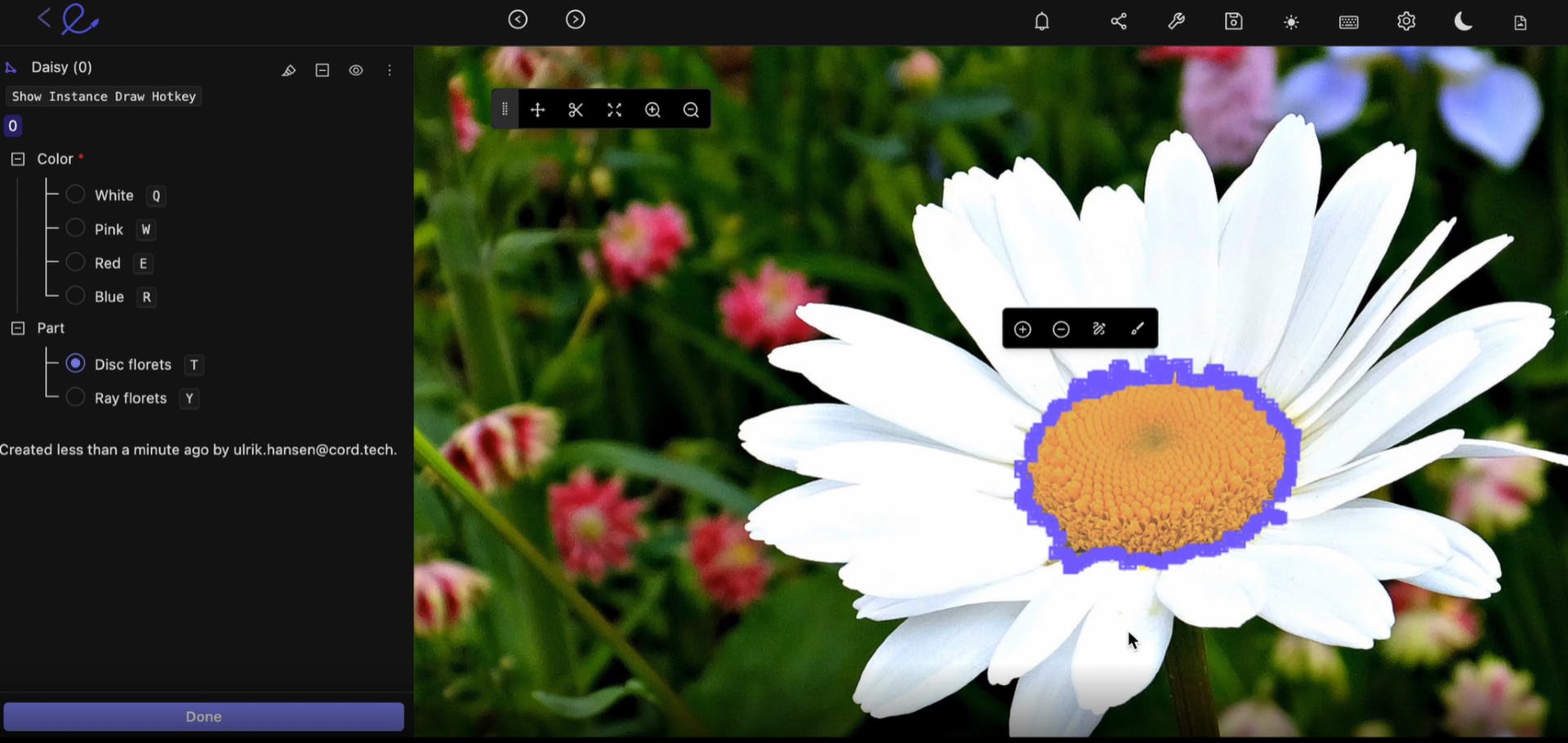

Annotation projects take time. Thankfully, there are now dozens of ways to speed up this process. With powerful and user-friendly tools, such as Encord, annotation teams can benefit from an intuitive editor suite and automated features.

Automation drastically reduces the workloads of manual annotation teams, ensuring you see results more quickly. Instead of drawing thousands of new labels, annotators can spend time reviewing many more automated labels. For annotation providers, Encord’s annotate, review, and automate features can accelerate the time it takes to deliver viable training datasets.

Automatic image segmentation in Encord

Flexible tools, automated labeling, and configurable ontologies are useful assets for external annotation providers to have in their toolkits. Depending on your working relationship and terms, you could provide an annotation team with access to software such as Encord, to integrate annotation pipelines into quality assurance processes and training models.

Outsource or keep image and video dataset annotation in-house? This is a question every data operations team leader struggles with at some point. There are pros and cons to both options.

In most cases, the cost and time efficiency savings outweigh the expense and headaches that come with recruiting and managing an in-house team of visual data annotators. Provided you find the right partner, you can establish a valuable long-term relationship. Finding the right provider is not easy.

It might take some trial and error and failed attempts along the way. The rewards will be worth the effort you put in at the selection stage when you do source a reliable, trusted, expert annotation vendor. Encord can help you with this process.

Our AI-powered tools can also help your data ops teams maintain efficient processes when working with an external provider, to ensure image and video dataset annotations and labels are of the highest quality and accuracy.

How Do You Know You’ve Found a Good Outsourced Annotation Provider?

Finding a reliable, high-quality outsourced annotation provider isn’t easy. It’s a competitive and commoditized market. Providers compete for clients on price, using press coverage, awards, and case studies to prove their expertise.

It might take time to find the right provider. In most cases, especially if this is your organization’s first time working with an outsourced data annotation company, you might need to try and test several POC projects before picking one.

At the end of the day, the quality, accuracy, responsiveness, and benchmarking of datasets against the target outcomes is the only way to truly judge whether you’ve found the right partner.

How to Find an Outsourced Annotation Partner?

When looking for an outsourced annotation and dataset labeling provider, apply the same principles used when outsourcing any mission-critical service.

Firstly, start with your network: ask people you trust — see who others recommend — and refer back to any providers your organization has worked with in the past.

Compare and contrast providers. Read reviews and case studies. Assess which providers have the right experience and sector-specific expertise, and which appear to be reliable and trustworthy. Price needs to come into your consideration, but don’t always go with the cheapest. You might be disappointed and find you’ve wasted time on a provider who can’t deliver.

It’s often an advantage to test several at the same time with a proof of concept (POC) dataset. Benchmark and assess the quality and accuracy of the datasets each provider annotates. In-house data annotation and machine learning teams can use the results of a POC to determine the most reliable provider you should work with for long-term and high-volume imaging dataset annotation projects.

What Are The Long-term Implications of Outsourced vs. In-house Annotation and Data Labeling?

In the long term, there are solid arguments for recruiting and managing an in-house team. You have more control and will have the talent and expertise internally to deliver annotation projects.

However, computer vision project leaders have to weigh that against an external provider being more cost and time-effective. As long as you find a reliable and trustworthy, quality-focused provider with the expertise and experience your company needs, then this is a partnership that can continue from one project to the next.

At Encord, our active learning platform for computer vision is used by a wide range of sectors, including healthcare, manufacturing, utilities, and smart cities, to annotate thousands of image and video datasets and accelerate their computer vision model development.

Ready to automate and improve the quality of your data annotations?

Sign up for an Encord Free Trial: The Active Learning Platform for Computer Vision, used by the world’s leading computer vision teams.

AI-assisted labeling, model training & diagnostics, find & fix dataset errors and biases, all in one collaborative active learning platform, to get to production AI faster. Try Encord for Free Today.

Want to stay updated?

Follow us on Twitter and LinkedIn for more content on computer vision, training data, and active learning.

Join the Encord Developers community to discuss the latest in computer vision, machine learning, and data-centric AI.
