How to Use Semantic Search to Curate Images of Products with Encord Active

Finding contextually relevant images from large datasets remains a substantial challenge in computer vision. Traditional search techniques often struggle to grasp the semantic nuances of user queries because they rely on image metadata. This usually leads to inefficient searches and inaccurate results.

If you are working with visual datasets, traditional search methods cause significant workflow inefficiencies and potential data oversight, particularly in critical fields such as healthcare and autonomous vehicle development.

How do we move beyond searching by metadata? Enter semantic search! It uses a deep understanding of your search intent and contextual meaning to deliver accurate and semantically relevant results. So, how do you implement semantic search?

Encord Active is a platform for running semantic searches on your dataset. It uses OpenAI’s CLIP (Contrastive Language–Image Pre-Training) under the hood to understand and match the user's intent with contextually relevant images. This approach allows for a more nuanced, intent-driven search process.

This guide will teach you how to implement semantic search with Encord Active to curate datasets for upstream or downstream tasks. More specifically, you will curate datasets for annotators to label products for an online fashion retailer.

At the end of this guide, you should be able to:

- Perform semantic search on your images within Encord.

- Curate datasets to send over for annotation or downstream cleaning.

- See an increase in search efficiency, reduced manual effort, and more intuitive interaction with your data.

What are Semantic Image Search and Embeddings?

When building AI applications, semantic search and embeddings are pivotal. Semantic search uses a deep understanding of user intent and the contextual meanings of queries to deliver search results that are accurate and semantically relevant to the user's needs.

Semantic search bridges the gap between the complex, nuanced language of the queries and the language of the images.

It interprets queries not as mere strings of keywords but as expressions of concepts and intentions. This approach allows for a more natural and intuitive interaction with image databases.

This way, you can find images that closely match your search intent, even when the explicit keywords are not in the image metadata.

Image Embeddings to Improve Model Performance

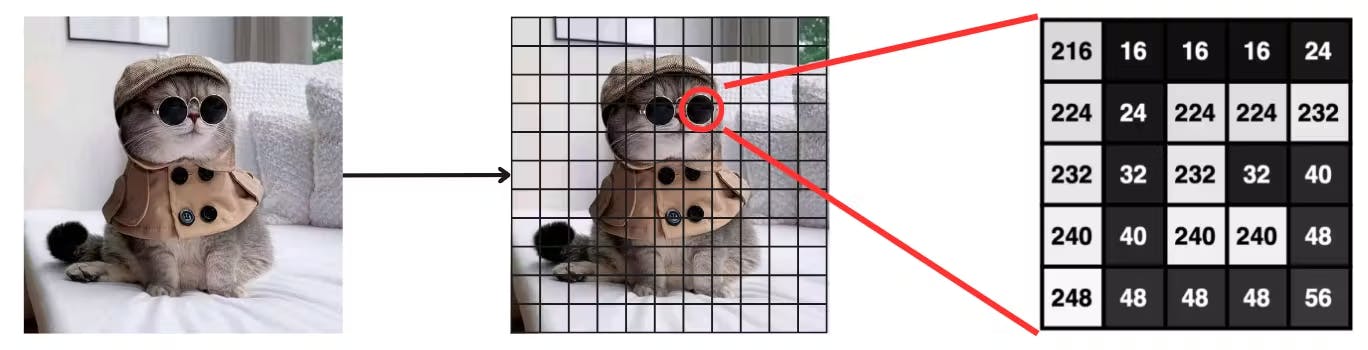

Embeddings transform data—whether text, images, or sounds—into a high-dimensional numerical vector, capturing the essence of the data's meaning. For instance, text embeddings convert sentences into vectors that reflect their semantic content, enabling machines to process and understand them.
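To make this concrete, here is a minimal sketch of how similarity between embeddings is commonly measured, using cosine similarity. The four-dimensional vectors and their names are made up for illustration; real embeddings from a model such as CLIP have hundreds of dimensions, and this is not necessarily how Encord computes similarity internally.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Made-up toy vectors standing in for real embeddings (assumption).
white_sneaker = [0.9, 0.1, 0.8, 0.0]
cream_trainer = [0.8, 0.2, 0.7, 0.1]
red_dress = [0.1, 0.9, 0.0, 0.7]

sim_close = cosine_similarity(white_sneaker, cream_trainer)
sim_far = cosine_similarity(white_sneaker, red_dress)

print(f"sneaker vs trainer: {sim_close:.3f}")
print(f"sneaker vs dress:   {sim_far:.3f}")
```

Items with similar meaning end up with vectors that point in similar directions, so the first score comes out much higher than the second.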

OpenAI's Contrastive Language–Image Pre-Training (CLIP) is good at understanding and interpreting images in the context of natural language descriptions, and you can use it in a few lines of code. CLIP is a powerful engine for semantic search, but the teams we speak to that run large-scale image search systems tell us how resource-intensive it is to run CLIP on their own platform or server.

Encord Active runs CLIP under the hood to help you perform semantic search at scale.

Semantic Search with Encord Index

Encord Index uses CLIP and proprietary embedding models to index your images when integrating them with Annotate.

This indexing process involves analyzing the images and textual data to create a searchable representation that aligns images with potential textual queries.

You get this and an in-depth analysis of your data quality on an end-to-end data-centric AI platform—Encord.

You can perform semantic search with Encord Index in two ways:

- Searching your images with natural language (text-based queries).

- Searching your images using a reference or anchor image.
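Both search modes can be thought of as the same operation: rank the indexed images by how close their embeddings are to a query vector. The sketch below illustrates that idea under stated assumptions. The `image_index` contents, the file names, and the query vector are all hypothetical, and a real system would get these vectors from an embedding model rather than hand-written lists.

```python
import math


def normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def top_k(query_vector, index, k=2, exclude=None):
    """Return the k (name, score) pairs most similar to the query vector."""
    q = normalize(query_vector)
    scored = []
    for name, vec in index.items():
        if name == exclude:
            continue  # skip the anchor image itself in image-to-image search
        score = sum(a * b for a, b in zip(q, normalize(vec)))
        scored.append((name, score))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical index of image embeddings (toy 4-dimensional vectors).
image_index = {
    "img_001.jpg": [0.9, 0.1, 0.8, 0.0],
    "img_002.jpg": [0.8, 0.2, 0.7, 0.1],
    "img_003.jpg": [0.1, 0.9, 0.0, 0.7],
    "img_004.jpg": [0.2, 0.8, 0.1, 0.9],
}

# 1) Text-based query: a made-up vector standing in for the embedding
#    a text encoder might produce for "white sneakers".
text_query_vector = [0.85, 0.15, 0.75, 0.05]
text_hits = top_k(text_query_vector, image_index, k=2)

# 2) Anchor-image query: reuse an indexed image's own embedding as the query.
anchor = "img_003.jpg"
similar_hits = top_k(image_index[anchor], image_index, k=1, exclude=anchor)

print("text search:", [name for name, _ in text_hits])
print("similar to anchor:", [name for name, _ in similar_hits])
```

The only difference between the two modes is where the query vector comes from: a text encoder in the first case, an existing image embedding in the second.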

Import your Project

A good place to start using Active is by importing an Annotate Project. From Annotate, you can configure the Dataset, Ontology, and workflow for your Project. Once you complete that, move to Active.

Explore your Dataset

Before using semantic search in Encord Index, you must thoroughly understand and explore your dataset. Start by taking an inventory of your dataset.

Know the volume, variety, and visual content of your images. Understanding the scope and diversity of your dataset is fundamental to effectively using semantic search.

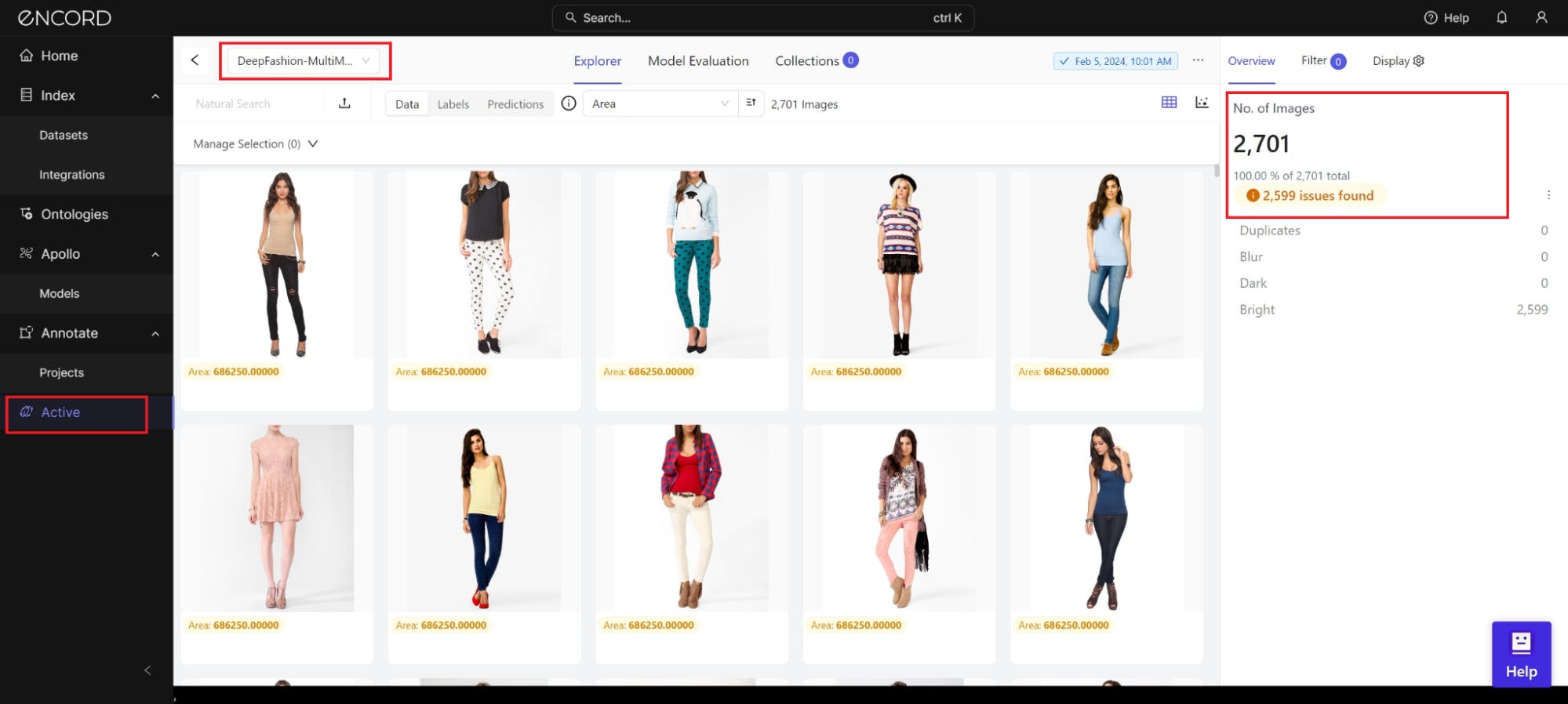

One of the most exciting features of Encord Index is that it intuitively shows you all the stats you need to explore the data.

Within the Explorer, you can already see the total number of images, probable issues within your dataset, and metrics (for example, “Area”) to explore the images:

Play with Explorer features to inspect the dataset and understand the visual quality.

You can also examine any available metadata associated with your images.

Metadata can provide valuable insights into the content, source, and characteristics of your images, which can be instrumental in refining your search.

You can also explore your image embeddings by switching from Grid View to Embeddings View in the Explorer tab:

It’s time to search smarter 🔎🧠.

Search your Images with Natural Language

You’ve explored the dataset; you are now ready to curate your fashion products for the listings with semantic searches using natural language queries.

For example, if you want images for a marketing campaign themed around "innovation and technology," enter this phrase into the search bar.

Encord Index then processes this query, considering the conceptual and contextual meanings behind the terms, and returns relevant images.

Within the Explorer, locate the “Natural Search” bar.

Say our online platform does not have enough product listings for white sneakers. What can we do? In this case, enter “white sneakers” in the search bar:

Awesome! Most of the returned images show models in white sneakers, with a few that don’t. Our set has only 2,701 images, but imagine if the product listings were an order of magnitude larger. The time saved over combing through them manually would be substantial.

Before curating the relevant images, let’s see how you can search by similar images to fine-tune your search even further.

Search your Images with Similar Images

Select one of the images and click the expansion button. Click the “Find similar” button to use the anchor image for the semantic similarity search:

Sweet! You just found another way to tailor your search. Next, click the “x” (or cancel) icon to return to the natural language search explorer. It’s time to curate the product images.

Curate Dataset in Collections

Within the natural language search explorer, select all the visible images of models with white sneakers.

One way to quickly comb through the data and ensure you don’t include false positives in your collections is to use the Select Visible option.

This option only selects images you can see, and then you can deselect images of models in non-white sneakers.

In this case, we will quickly select a few accurate options we can see:

In the top right-hand corner, click Add to a Collection, and if you are not there already, navigate to New Collection. Name the Collection and add an appropriate and descriptive comment in the Collection Description box. Click Submit when you are done:

Once you finish curating the images, you can take several actions:

- Create a new dataset slice.

- Send the collection to Encord Annotate for labeling.

- Bulk classify the images for pre-labeling.

- Export the dataset for downstream tasks.

Here, we will send the collection to Annotate to label the product listings. We named it “White Sneakers” and included comments to describe the collection.

Send Dataset to Encord Annotate

Navigate to the Collections tab and find the new collection (in our case, “White Sneakers”). Hover over the new collection and click the menu on the right to show the list of next action options. Click Send to Annotate and leave a descriptive comment for your annotator:

Export Dataset for Downstream Tasks

Say you are building computer vision applications for an online retailer or want to develop an image search engine. You can export the dataset metadata for those tasks if you need to use the data downstream for preprocessing or training.

Back in your new collection, click the menu and find the Generate CSV option to export the dataset metadata in your collection:

Nice! Now, you have a CSV with your dataset metadata and direct links to the images on Encord so that you can access them securely for downstream tasks.
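If you want to pick up the exported file in a script, a minimal sketch with Python's standard `csv` module could look like the following. The column names (`file_name`, `image_url`, `collection`) and the sample rows are assumptions made for illustration; check the header of your own export and adjust them accordingly.

```python
import csv
import io

# Hypothetical stand-in for an exported file. The column names and rows
# are assumptions, not the actual export schema.
sample_csv = io.StringIO(
    "file_name,image_url,collection\n"
    "img_001.jpg,https://example.com/img_001.jpg,White Sneakers\n"
    "img_002.jpg,https://example.com/img_002.jpg,White Sneakers\n"
)


def load_image_records(csv_file, url_column="image_url"):
    """Read rows from an open CSV file, keeping those with a non-empty URL."""
    reader = csv.DictReader(csv_file)
    if reader.fieldnames is None or url_column not in reader.fieldnames:
        raise KeyError(f"column {url_column!r} not found in CSV header")
    return [row for row in reader if row[url_column]]


records = load_image_records(sample_csv)
for record in records:
    print(record["file_name"], record["image_url"])
```

With a real export, you would replace `sample_csv` with a file opened via `open(path, newline="")` and pass the name of whichever column holds the image links.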

Next Steps: Labeling Your Products on Annotate

After successfully using Encord Active to curate your image datasets through semantic search, the next critical step involves accurately labeling the data. Encord Annotate is a robust and intuitive platform for annotating images with high precision.

This section explores how transitioning from dataset curation to dataset labeling within the Encord ecosystem can significantly improve the value and utility of the “White Sneakers” product listing.

Encord Annotate supports various annotation types, including bounding boxes, polygons, segmentation masks, and key points for detailed representation of objects and features within images.

Features of Encord Annotate

- Integrated Workflow: Encord Annotate integrates natively with Encord Active. This means a simple transition between searching, curating, and labeling.

- Collaborative Annotation: The platform supports collaborative annotation efforts that enable teams to work together efficiently, assign tasks, and manage project progress.

- Customizable Labeling Tools: You can customize the annotation features and labels to fit the specific needs of the projects to ensure that the data is labeled in the most relevant and informative way.

- Quality Assurance Mechanisms: Built-in quality assurance features, such as annotation review and validation processes, ensure the accuracy and consistency of the labeled data.

- AI-Assisted Labeling: Encord Annotate uses AI models like the Segment Anything Model (SAM) and LLaVA to suggest annotations, significantly speeding up the labeling process while maintaining high levels of precision.

Getting Started with Encord Annotate

To begin labeling the curated dataset with Encord Annotate, ensure it is properly organized and appears in Annotate.

From there, accessing Encord Annotate is straightforward:

- Navigate to the Encord Annotate section within the platform.

- Select the dataset you wish to annotate.

- Choose or customize the annotation tools and labels to your project requirements.

- Start the annotation process individually or by assigning tasks to team members.

Key Takeaways: Semantic Search with Encord Active

Phew! Glad you were able to join me and stick through the entire guide. Let’s recap what we have learned in this article:

- Encord Active uses CLIP for Image Retrieval: Encord Active runs CLIP under the hood to enable semantic search. This approach allows for more intuitive, accurate, and contextually relevant image retrieval than traditional keyword- or metadata-based searches.

- Natural Language Improves Image Exploration Experience: Searching by natural language within Encord Active makes searching for images as simple as describing what you're looking for in your own words. This feature significantly improves the search experience, making it accessible and efficient across various domains.

- Native Transition from Curation to Annotation: Encord Active and Encord Annotate provide an integrated workflow for curating and labeling datasets. Use this integration to curate data for upstream annotation tasks or downstream tasks (data cleaning or training computer vision models).

Frequently asked questions

Encord employs various advanced techniques to optimize the image annotation process, including the ability to search through extensive datasets and identify images that are out-of-distribution. This targeted approach helps data science teams focus on the most impactful images, enhancing model performance and reducing the time spent on data wrangling.

Encord provides a robust platform for annotating images, allowing users to tag every image with relevant metadata. This metadata can be invaluable for various activities, including decoding processes and algorithm tuning, essential for machine vision applications.

Encord is designed to handle large media datasets efficiently, allowing for seamless ingestion and indexing of data. This ensures that users can easily search and access their media assets, which is essential for training machine learning models.

Yes, Encord allows for the creation of hierarchical attributes and multiple-choice options for metadata. Users can define classifications and nested layers within the ontology, providing flexibility in how attributes are organized and searched.

Encord's annotation capabilities enable users to extract various types of metadata from images, including details relevant to decoding activities. This can enhance internal processes and improve the performance of machine vision applications.

Encord offers comprehensive tools for data indexing and management, allowing users to quickly search, filter, and query video datasets. This functionality streamlines the process of finding relevant videos and specific frames for projects.

Encord is built to handle large datasets, making it ideal for projects involving tens of thousands of images. The platform allows users to categorize and cluster images effectively, enabling quicker corrections and streamlined workflows.

Encord supports the ability to upload custom metadata, allowing users to include attributes such as orientation, warehouse location, and date/time fields for images and videos. This metadata can be filtered natively within the platform.

Encord provides tools designed to streamline the process of identifying and selecting relevant images from large datasets. This capability is essential for efficiently managing and analyzing extensive collections of unlabeled images.

Yes, Encord supports embedding-based similarity searches, allowing users to find similar images based on features like fogginess or brightness before formal annotation. This capability enhances data exploration and organization.