
Contents

GPT-4 Vision Capabilities: Visual Inputs

GPT-4 Vision Capabilities: Outperforms SOTA LLMs

GPT-4 Vision Capabilities: Enhanced Steerability

GPT-4 Vision: Limitation

GPT-4 Vision: Risk and Mitigation

GPT-4 Vision: Access

GPT-4 Vision: Key Takeaways


Exploring GPT-4 Vision: First Impressions


OpenAI continues to demonstrate its commitment to innovation with the introduction of GPT-4 Vision.

This exciting development expands the horizons of artificial intelligence, seamlessly integrating visual capabilities into the already impressive ChatGPT.

These strides reflect OpenAI’s substantial investments in machine learning research and development, underpinned by extensive training data.

In this blog, we'll break down the GPT-4 Vision system card, exploring these new capabilities and their significance for users.

GPT-4 Vision Capabilities: Visual Inputs

After the exciting introduction of GPT-4 in March, there was growing anticipation for an iteration of ChatGPT that would incorporate image integration capabilities. GPT-4 has recently become accessible to the public through a subscription-based API, albeit with limited usage initially.

Recently OpenAI released GPT-4V(ision) and has equipped ChatGPT with image understanding.

ChatGPT's image understanding is powered by a combination of multimodal GPT-3.5 and GPT-4 models. Leveraging their adept language reasoning skills, these models proficiently analyze a diverse range of visuals, spanning photographs, screenshots, and documents containing both text and images.

In a recent demonstration video featuring OpenAI's co-founder Greg Brockman, the capabilities of GPT-4's vision-related functions took center stage. Over the course of this year, GPT-4V has undergone rigorous testing across a multitude of applications, consistently delivering remarkable results.

In the following section, we share key findings from our team's comprehensive evaluations of GPT-4V in diverse computer vision tasks:

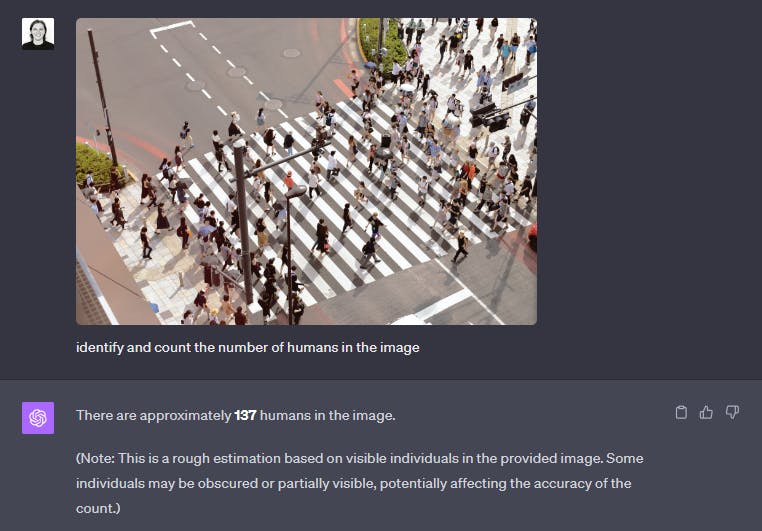

Object Detection

GPT-4 Vision can provide accurate information about objects and perform tasks such as object counting, showing that it can analyze and understand an image as a whole. For example, identifying the humans in the image below is not easy, yet the model performs well and also flags the difficulty in the detection.

Image from Unsplash as prompt in GPT4-Vision

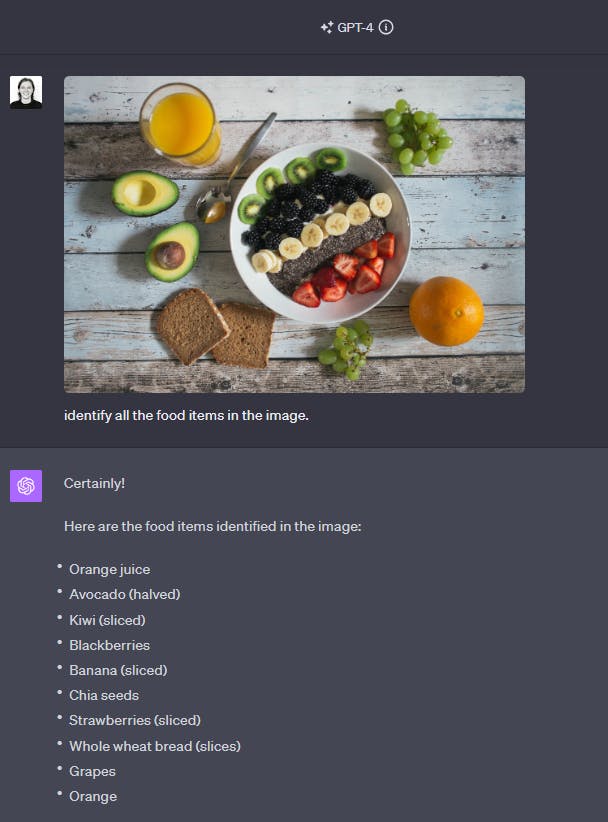

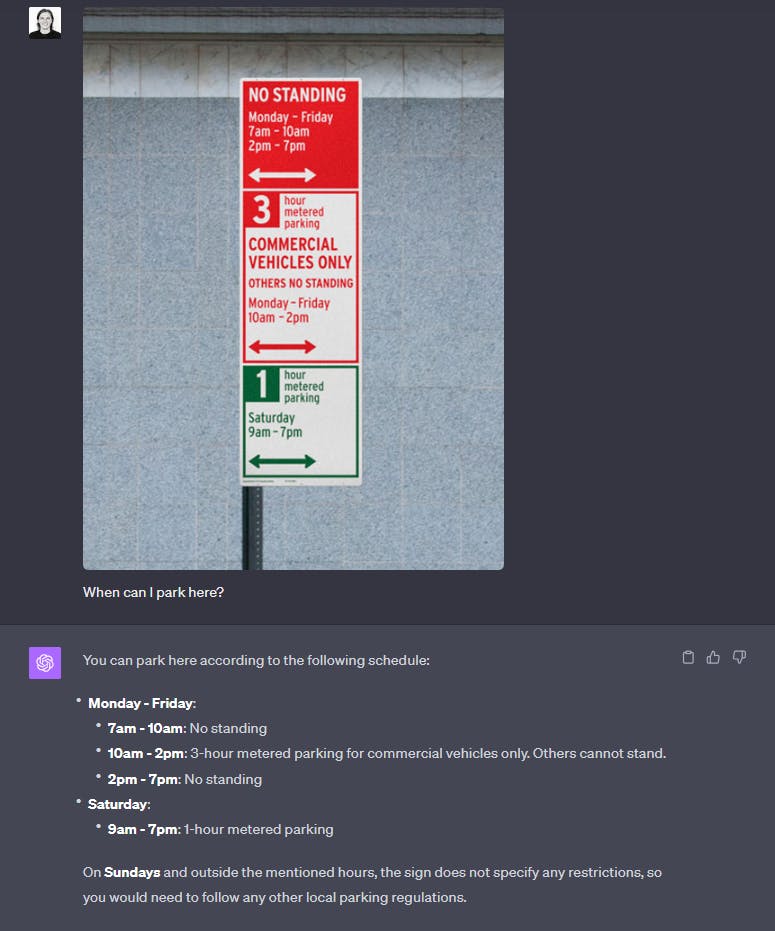

Visual Question Answering

GPT4-Vision performs well in handling follow-up questions on the image prompt. For example, when presented with a meal photograph, it adeptly identifies all the ingredients and can provide insightful suggestions or information. This underscores its capacity to elevate user experiences and deliver valuable insights.

Image from Unsplash as prompt in GPT4-Vision

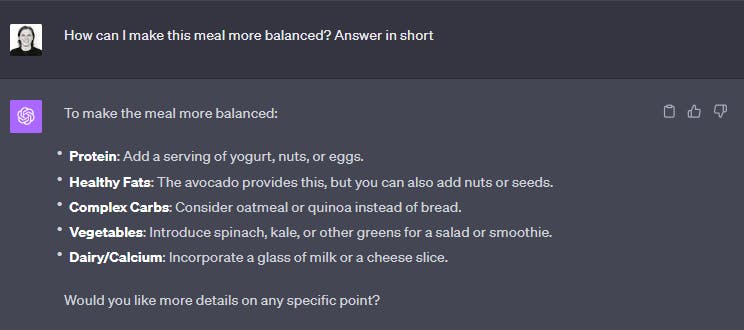
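
For readers who want to try the same kind of question-and-follow-up exchange programmatically, the sketch below builds the conversation payload only; it makes no network call. It assumes the message layout that OpenAI's Chat Completions API documents for image inputs (a `content` list mixing `text` and `image_url` parts), which this article did not test. The helper names `build_vision_messages` and `add_follow_up` are hypothetical, and the image bytes are a placeholder rather than a real photo.

```python
import base64
import json


def build_vision_messages(image_bytes, question, mime_type="image/jpeg"):
    """Pair one question with one image, embedded as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


def add_follow_up(messages, assistant_reply, follow_up):
    """Return a new list with the model's reply and a text-only follow-up.

    The earlier image message stays in the history, so the follow-up
    question can refer to it without re-sending the image.
    """
    return messages + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": follow_up},
    ]


# Placeholder bytes stand in for a real meal photograph (assumption).
placeholder_image = b"not a real photo"
messages = build_vision_messages(placeholder_image, "What ingredients are in this meal?")
messages = add_follow_up(messages, "(model reply goes here)", "Suggest a recipe that uses them.")

# Show the roles in order; the full payload can be inspected with json.dumps.
print([m["role"] for m in messages])
print(len(json.dumps(messages)) > 0)
```

Keeping the payload construction separate from any client call makes it easy to inspect what would be sent before spending any of a capped usage quota.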

Multiple Condition Processing

It also possesses the capability to read and interpret multiple instructions simultaneously. For instance, when presented with an image containing several instructions, it can provide a coherent and informative response, showcasing its versatility in handling complex queries.

Figuring out multiple parking sign rules using GPT4-Vision

Data Analysis

GPT-4 excels in data analysis. When confronted with a graph and tasked with providing an explanation, it goes beyond mere interpretation by offering insightful observations that significantly enhance data comprehension and analysis.

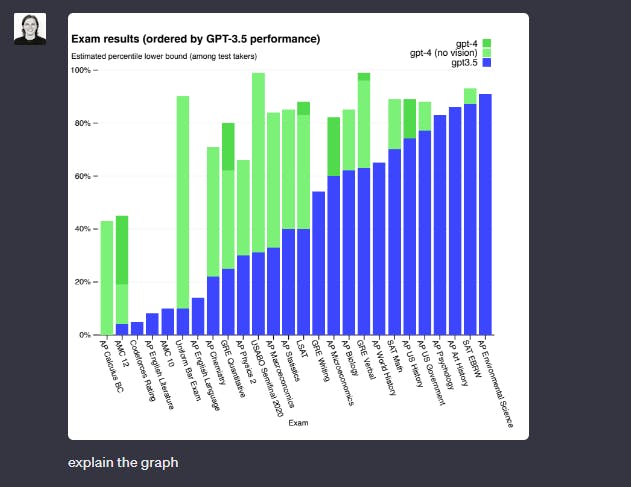

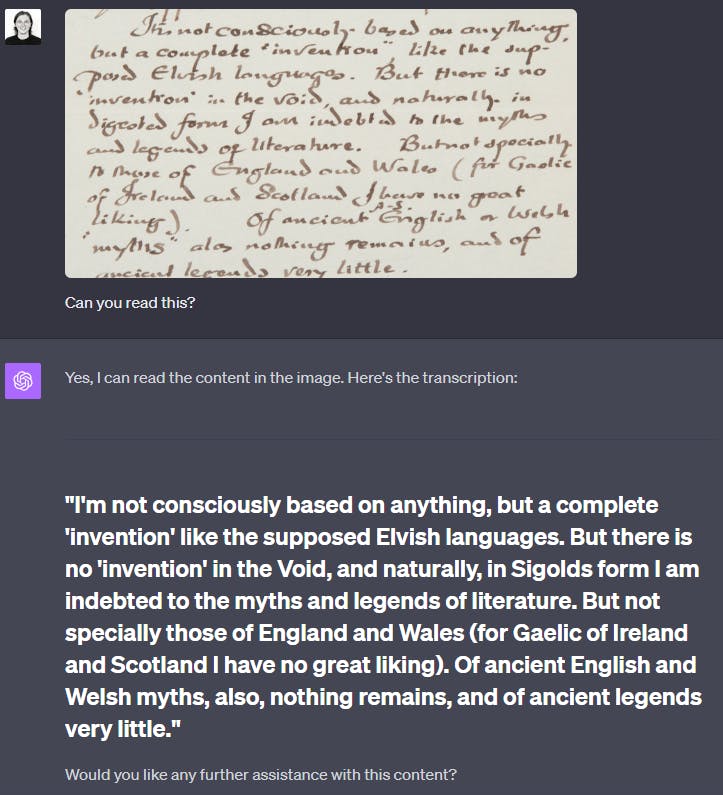

Graph from GPT-4 Technical Report

Deciphering Text

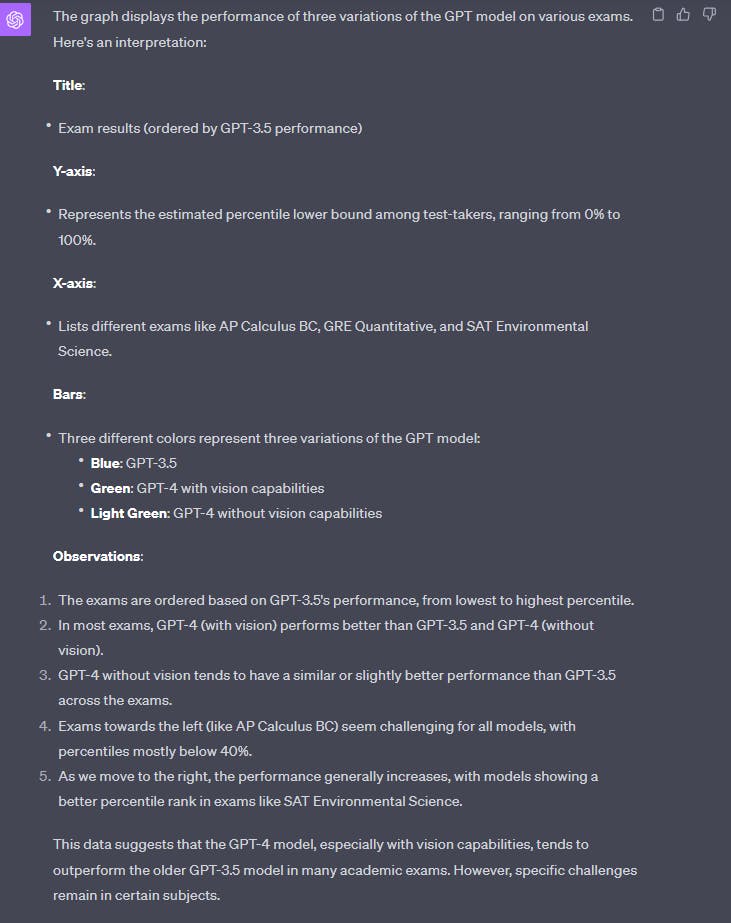

GPT-4 is adept at deciphering handwritten notes, even ones that are hard for humans to read. In this challenging example, it maintains a high level of accuracy, making just two minor errors.

Using GPT4-Vision to decipher JRR Tolkien’s letter

GPT-4 Vision Capabilities: Outperforms SOTA LLMs

In casual conversations, differentiating between GPT-3.5 and GPT-4 may appear subtle, but the significant contrast becomes evident when handling more intricate instructions.

GPT-4 distinguishes itself as a superior choice, delivering heightened reliability and creativity, particularly when confronted with instructions of greater complexity.

To understand this difference, extensive benchmark testing was conducted, including simulations of exams originally intended for human test-takers. These benchmarks included tests like the Olympiads and AP exams, using publicly available 2022–2023 editions, and the model received no specific training for the exams.

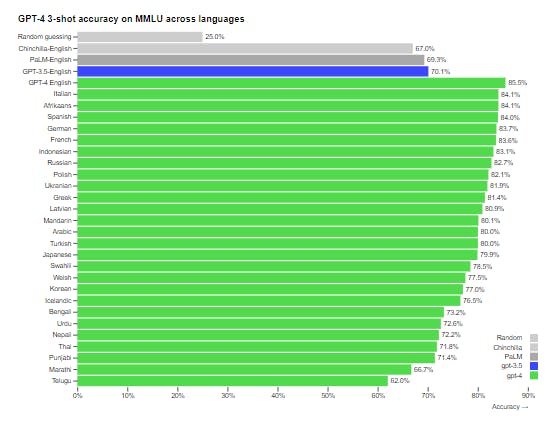

The results further reveal that GPT-4 outperforms GPT-3.5, showcasing notable excellence across a spectrum of languages, including low-resource ones such as Latvian, Welsh, and Swahili.

OpenAI has leveraged GPT-4 to make a significant impact across multiple functions, from support and sales to content moderation and programming. Additionally, it plays a crucial role in aiding human evaluators in assessing AI outputs, marking the initiation of the second phase in OpenAI's alignment strategy.

GPT-4 Vision Capabilities: Enhanced Steerability

OpenAI has been dedicated to enhancing different facets of their AI, with a particular focus on steerability.

In contrast to the fixed personality traits, verbosity, and style traditionally linked to ChatGPT, developers and soon-to-be ChatGPT users now have the ability to customize the AI's style and tasks to their preferences. This customization is achieved through 'system' messages, which let API users significantly personalize their AI's responses within predefined bounds.
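
As a rough illustration of how a 'system' message steers a conversation, the sketch below builds two message lists that differ only in their system prompt. It assumes the role-based message format used by OpenAI's chat API (`system`, `user`); the helper `build_steered_conversation` and both prompts are hypothetical examples, and nothing is sent to a model here.

```python
def build_steered_conversation(system_prompt, user_prompt):
    """Place the steering instructions first, followed by the user's request."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


question = "Explain what a neural network is."

# Same question, two different styles set by the system message (illustrative).
terse = build_steered_conversation(
    "You are a concise assistant. Answer in one sentence.", question
)
tutor = build_steered_conversation(
    "You are a patient tutor. Ask a guiding question instead of giving the answer.",
    question,
)

for name, conversation in (("terse", terse), ("tutor", tutor)):
    print(name, "->", conversation[0]["content"])
```

Because the user message is identical in both lists, any difference in the model's behavior can be attributed to the system message alone, which is a convenient way to explore how far the steering goes.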

OpenAI acknowledges the continuous need for improvement, particularly in addressing the occasional challenges posed by system messages. They actively encourage users to explore and provide valuable feedback on this innovative functionality.

GPT-4 Vision: Limitation

While GPT-4 demonstrates significant advancements in various aspects, it's important to recognize the limitations of its vision capabilities.

In the field of computer vision, GPT-4, much like its predecessors, encounters several challenges:

Reliability Issues

GPT-4 is not immune to errors when interpreting visual content. It can occasionally "hallucinate" or produce inaccurate information based on the images it analyzes. This limitation highlights the importance of exercising caution, especially in contexts where precision and accuracy are of utmost importance.

Overreliance

On occasion, GPT-4 may generate inaccurate information, adhere to erroneous facts, or experience lapses in task performance.

What is particularly concerning is its capacity to do so convincingly, which could potentially lead to overreliance, with users placing undue trust in its responses and risking undetected errors.

To mitigate this, OpenAI recommends a multifaceted approach, including comprehensive documentation, responsible developer communication, and promoting user scrutiny.

While GPT-4 has made strides in steerability and refined refusal behavior, it may at times provide hedged responses, inadvertently fostering a sense of overreliance.

Complex Reasoning

Complex reasoning involving visual elements can still be challenging for GPT-4.

It may face difficulties with nuanced, multifaceted visual tasks that demand a profound level of understanding.

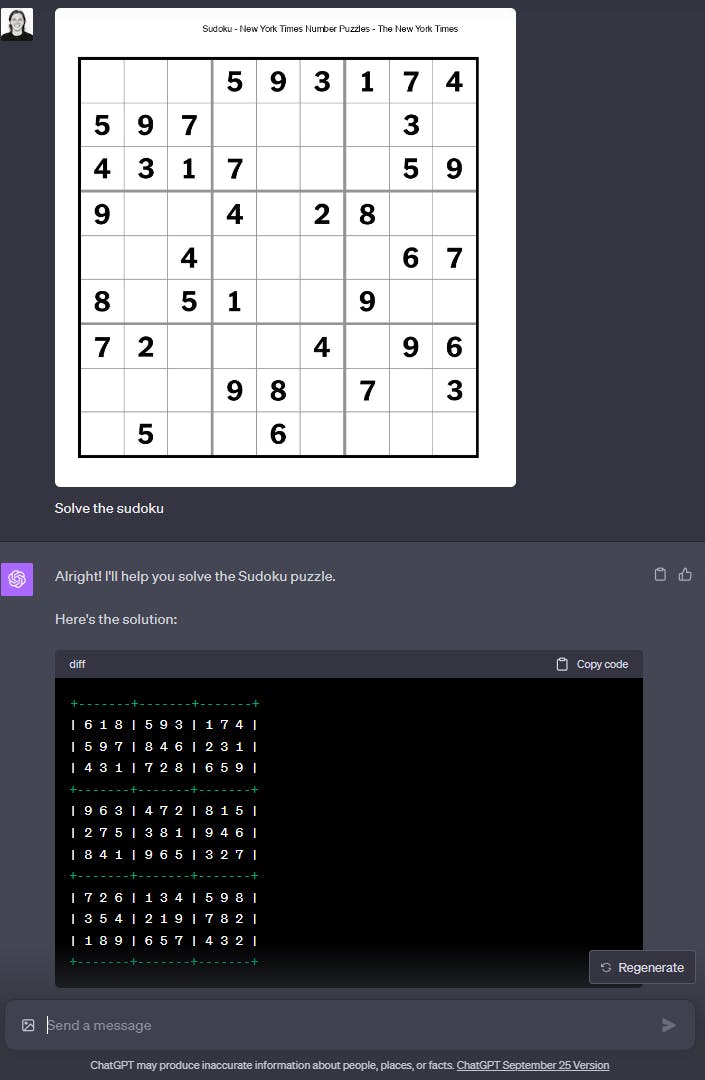

For example, when tasked with solving an easy-level New York Times Sudoku puzzle, it misinterprets the puzzle question and consequently provides incorrect results.

Solving NY Times puzzle-easy on GPT4-Vision

Notice Row 5, Column 3 and Row 6, Column 3: the correct values are 4 and 5, but the model reads them as 5 and 1. Can you find more mistakes?
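
Hunting for misread cells by eye is tedious, so a small comparison helper can do it instead. The sketch below is a minimal example that compares the puzzle's actual givens against the model's transcription and lists every cell that differs. The function name `find_misreads` is hypothetical, and the data contains only the two cells called out above; the rest of the grid is omitted rather than guessed.

```python
def find_misreads(expected, read):
    """Return (row, column, expected_value, read_value) for every differing cell.

    Both arguments map (row, column) to a digit. A cell missing from one
    side is reported with None for that side.
    """
    mismatches = []
    for row, col in sorted(set(expected) | set(read)):
        want = expected.get((row, col))
        got = read.get((row, col))
        if want != got:
            mismatches.append((row, col, want, got))
    return mismatches


# Only the two cells mentioned in the text; other cells are not reproduced here.
expected_cells = {(5, 3): 4, (6, 3): 5}
read_cells = {(5, 3): 5, (6, 3): 1}

for row, col, want, got in find_misreads(expected_cells, read_cells):
    print(f"Row {row}, Column {col}: expected {want}, model read {got}")
# Row 5, Column 3: expected 4, model read 5
# Row 6, Column 3: expected 5, model read 1
```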

GPT-4 Vision: Risk and Mitigation

GPT-4, similar to its predecessors, carries inherent risks within its vision capabilities, including the potential for generating inaccurate or misleading visual information. These risks are amplified by the model's expanded capabilities.

In an effort to assess and address these potential concerns, OpenAI collaborated with over 50 experts from diverse fields to conduct rigorous testing, putting the model through its paces in high-risk areas that demand specialized knowledge.

To mitigate these risks, GPT-4 employs an additional safety reward signal during Reinforcement Learning from Human Feedback (RLHF) training. This signal serves to reduce harmful outputs by teaching the model to refuse requests for unsafe or inappropriate content. The reward signal is provided by a classifier designed to judge safety boundaries and completion style based on safety-related prompts.

While these measures have substantially enhanced GPT-4's safety features compared to its predecessor, challenges persist, including the possibility of "jailbreaks" that could potentially breach usage guidelines.

GPT-4 Vision: Access

OpenAI Evals

In its initial GPT-4 release, OpenAI emphasized its commitment to involving developers in the development process. To further this engagement, OpenAI has now open-sourced OpenAI Evals, a powerful software framework tailored for the creation and execution of benchmarks to assess models like GPT-4 at a granular level.

Evals serves as a valuable tool for model development, allowing the identification of weaknesses and the prevention of performance regressions. Furthermore, it empowers users to closely monitor the evolution of various model iterations and facilitates the integration of AI capabilities into a wide array of applications.

A standout feature of Evals is its adaptability, as it supports the implementation of custom evaluation logic. OpenAI has also provided predefined templates for common benchmark types, streamlining the process of creating new evaluations.
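
To make the idea of a benchmark with evaluation logic concrete, here is a minimal exact-match scorer written in plain Python. It does not use the Evals framework or its API; it only sketches the kind of check such a benchmark performs. `exact_match_accuracy`, `toy_model`, and the sample questions are all hypothetical stand-ins.

```python
def exact_match_accuracy(model, samples):
    """Fraction of (prompt, ideal) pairs where the model output equals the ideal.

    `model` is any callable that takes a prompt string and returns a string.
    Leading and trailing whitespace is ignored when comparing.
    """
    samples = list(samples)
    if not samples:
        raise ValueError("at least one sample is required")
    correct = sum(
        1 for prompt, ideal in samples if model(prompt).strip() == ideal.strip()
    )
    return correct / len(samples)


def toy_model(prompt):
    """Stand-in for a real model: answers from a fixed lookup table."""
    answers = {"2 + 2 =": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "unknown")


samples = [
    ("2 + 2 =", "4"),
    ("Capital of France?", "Paris"),
    ("Largest planet?", "Jupiter"),  # the toy model has no answer for this one
]

accuracy = exact_match_accuracy(toy_model, samples)
print(f"{accuracy:.2f}")  # 0.67
```

Running the same samples against successive model versions and comparing the scores is the basic mechanism behind catching performance regressions.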

The ultimate goal is to encourage the sharing and collective development of a wide range of benchmarks, covering diverse challenges and performance aspects.

ChatGPT Plus

ChatGPT Plus subscribers now have access to GPT-4 on chat.openai.com, albeit with a usage cap.

OpenAI plans to adjust this cap based on demand and system performance. As traffic patterns evolve, there's the possibility of introducing a higher-volume subscription tier for GPT-4. OpenAI may also provide some level of free GPT-4 queries, enabling non-subscribers to explore and engage with this advanced AI model.

API

To gain API access, you are required to join the waitlist. However, for researchers focused on studying the societal impact of AI, there is an opportunity to apply for subsidized access through OpenAI's Researcher Access Program.

GPT-4 Vision: Key Takeaways

- ChatGPT now has visual capabilities, making it more versatile.

- GPT-4 Vision can be used for various computer vision tasks like deciphering written texts, OCR, data analysis, object detection, etc.

- It still has limitations, such as hallucination, similar to GPT-3.5. However, overreliance is reduced compared to GPT-3.5 because of enhanced steerability.

- It’s available now to ChatGPT Plus users!

