

Encord Blog

4 Reasons Why Computer Vision Models Fail in Production

Here’s a scenario you’ve likely encountered: You spent months building your model, increased your F1 score above 90%, convinced all stakeholders to launch it, and... poof! As soon as your model sees real-world data, its performance drops below what you expected.

This is a common production machine learning (ML) problem for many teams—not just yours. It can also be a very frustrating experience for computer vision (CV) engineers, ML teams, and data scientists.

There are many potential factors behind this. Problems could stem from the quality of the production data, the design of the production pipelines, the model itself, or operational hurdles the system faces in production.

In this article, you will learn the four reasons computer vision models fail in production and examine the ML lifecycle stages where they occur. These are among the most common production CV and data science problems, and knowing their causes can help you prevent, mitigate, or fix them.

You’ll also see the various strategies for addressing these problems at each step. Let’s jump right into it!

Why do Models Fail in Production?

The ML lifecycle governs how ML models are developed and shipped; it involves sourcing data, data exploration and preparation (data cleaning and EDA), model training, and model deployment, where users can consume the model predictions.

These processes are interdependent: an error in one stage can propagate to later stages, resulting in a model that performs poorly, or fails completely, in production.

Organizations develop machine learning (ML) and artificial intelligence (AI) models to add value to their businesses. When errors occur at any ML development stage, they can lead to production models failing, costing businesses capital, human resources, and opportunities to satisfy customer expectations.

Consider the implications of poorly labeled data for a CV model after data collection, or of a model with an inherent bias: either can affect results in a production environment.

Problems can also start before any data is collected: when businesses lack precise reasons or objectives for developing and deploying machine learning models, the process can be crippled before it begins.

Assuming the organization has passed all stages and deployed its model, the errors we often see that lead to models failing in production include:

- Mislabeling data, which can train models on incorrect information.

- ML engineers and CV teams treating data quality as a late-stage concern rather than a foundational practice.

- Ignoring the drift in data distribution over time can make models outdated or irrelevant.

- Implementing minimal or no validation (quality assurance) steps risks unnoticed errors progressing to production.

- Viewing model deployment as the final goal, neglecting necessary ongoing monitoring and adjustments.

Let’s look deeper at these errors and why they are the top reasons we see production models fail.

Reason #1: Data Labeling Errors

Data labeling is the foundation for training machine learning models, particularly supervised learning, where models learn patterns directly from labeled data. This involves humans or AI systems assigning informative labels to raw data—whether it be images, videos, or DICOM—to provide context that enables models to learn.

Despite its importance, data labeling is prone to errors, primarily because it often relies on human annotators. These errors can compromise a model's accuracy by teaching it incorrect patterns.

Consider a computer vision project that identifies objects in images. Even a small percentage of mislabeled images can lead the model to associate incorrect features with an object, which can cause wrong predictions in production.

Potential Solution: Automated Labeling Error Detection

A potential solution is adopting tools and frameworks that automatically detect labeling errors. These tools analyze labeling patterns to identify outliers or inconsistent labels, helping annotators revise and refine the data. An example is Encord Active.
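How Encord Active detects label errors internally is not described here. As a hypothetical illustration of the general idea, the sketch below flags samples whose given label disagrees with a model's confident, out-of-sample predictions, loosely following the "confident learning" approach. The function names, the per-class mean-probability threshold, and the input format (a list of class-probability lists plus integer labels) are assumptions.

```python
from statistics import mean


def class_thresholds(probs, labels, num_classes):
    """Per-class threshold: mean predicted probability of class k over samples labeled k."""
    thresholds = []
    for k in range(num_classes):
        scores = [p[k] for p, y in zip(probs, labels) if y == k]
        # A class with no labeled samples gets a threshold nothing can reach.
        thresholds.append(mean(scores) if scores else float("inf"))
    return thresholds


def flag_label_issues(probs, labels):
    """Hypothetical helper: indices whose given label disagrees with confident predictions.

    probs:  per-sample class probabilities from a model that did not see that
            sample during training (for example, from cross-validation folds).
    labels: the given integer class labels.
    """
    num_classes = len(probs[0])
    thresholds = class_thresholds(probs, labels, num_classes)
    flagged = []
    for i, (p, y) in enumerate(zip(probs, labels)):
        # Classes whose predicted probability clears that class's threshold.
        confident = [k for k in range(num_classes) if p[k] >= thresholds[k]]
        # Suspicious: the model is confident about some other class but not the given one.
        if confident and y not in confident:
            flagged.append(i)
    return flagged


probs = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.95, 0.05]]
labels = [0, 0, 1, 1]
print(flag_label_issues(probs, labels))  # → [3]
```

The last sample is labeled class 1, but the model is confidently predicting class 0, so it is the one sent back to annotators for review. Using out-of-sample probabilities matters: a model that trained on a mislabeled image may simply have memorized the wrong label.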

A common data labeling issue is border closeness: annotations that lie at or very near the edge of the image. Training data with many border-proximate annotations can lead to poor model generalization.

If a model is frequently exposed to partially visible objects during training, it might not perform well when presented with fully visible objects in a deployment scenario. This can affect the model's accuracy and reliability in production.
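To make "border-proximate" concrete, here is a minimal sketch that measures how close each bounding box is to the image edge. The normalized-gap definition, the `Box` type, and the 1% threshold are assumptions for illustration; Encord Active's Border Closeness metric may be computed differently.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def border_distance(box: Box, width: int, height: int) -> float:
    """Smallest gap between the box and an image edge, as a fraction of that dimension."""
    gaps = [
        box.x_min / width,              # left edge
        box.y_min / height,             # top edge
        (width - box.x_max) / width,    # right edge
        (height - box.y_max) / height,  # bottom edge
    ]
    return max(0.0, min(gaps))


def border_proximate(boxes, width, height, threshold=0.01):
    """Indices of boxes within `threshold` of an edge (likely truncated objects)."""
    return [i for i, b in enumerate(boxes) if border_distance(b, width, height) <= threshold]


boxes = [Box(0, 100, 120, 300), Box(200, 150, 400, 350), Box(500, 50, 636, 200)]
print(border_proximate(boxes, width=640, height=480))  # → [0, 2]
```

The first box touches the left edge and the third sits 4 pixels from the right edge, so both are flagged; the centered box is not.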

Let’s see how Encord Active can help you, for instance, identify border-proximate annotations.

Step 1: Select your Project.

Step 2: Under the “Explorer” dashboard, find the “Labels” tab.

Step 3: On the right pane, click one of the issues Encord Active (EA) found to filter your data and labels by it; in this case, “Border Closeness”.

Step 4: Select one of the images to inspect and validate the issue.

You will notice that EA also shows you the model’s predictions alongside the annotations, so you can visually inspect the annotation issue and resulting prediction.

Step 5: Visually inspect the top images EA flags and use the Collections feature to curate them.

There are a few approaches you could take after creating the Collections:

- Exclude the images that are border-proximate from the training data if the complete structure of the object is crucial for your application. This prevents the model from learning from incomplete data, which could lead to inaccuracies in object detection.

- Send the Collection to annotators for review.

Reason #2: Poor Data Quality

The foundation of any ML model's success lies in the quality of the data it's trained on. High-quality data is characterized by its accuracy, completeness, timeliness, and relevance to the business problem ("fit for purpose").

Several common issues can compromise data quality:

- Duplicate Images: They can artificially increase the frequency of particular features or patterns in the training data. This gives the model a false impression of these features' importance, causing overfitting.

- Noise in Images: Blur, distortion, poor lighting, or irrelevant background objects can mask important image features, hindering the model's ability to learn and recognize relevant patterns.

- Unrepresentative Data: When the training dataset doesn't accurately reflect the diversity of real-world scenarios, the model can develop biases. For example, a facial recognition system trained mainly on images of people with lighter skin tones may perform poorly on individuals with darker skin tones.

- Limited Data Variation: A model trained on insufficiently diverse data (including duplicates and near-duplicates) will struggle to adapt to new or slightly different images in production. For example, if a self-driving car system is trained on images taken in sunny weather, it might fail in rainy or snowy conditions.
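Some of these issues can be screened for automatically. The sketch below flags dark images (low mean intensity) and blurry images (low variance of a Laplacian filter response, a common sharpness heuristic). It assumes grayscale images stored as lists of 0-255 integer rows; the function names and both thresholds are hypothetical and would need tuning on your own data.

```python
from statistics import mean, pvariance


def brightness(gray):
    """Mean pixel intensity (0-255) of a grayscale image given as rows of ints."""
    return mean(v for row in gray for v in row)


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian response; low values suggest blur.

    Assumes the image is at least 3x3 pixels.
    """
    h, w = len(gray), len(gray[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return pvariance(responses)


def quality_flags(gray, dark_below=40, blur_below=100.0):
    """Return 'dark' and/or 'blurry' flags; both thresholds are hypothetical."""
    flags = []
    if brightness(gray) < dark_below:
        flags.append("dark")
    if laplacian_variance(gray) < blur_below:
        flags.append("blurry")
    return flags


flat_dark = [[10] * 5 for _ in range(5)]
checkerboard = [[255 * ((x + y) % 2) for x in range(5)] for y in range(5)]
print(quality_flags(flat_dark))     # → ['dark', 'blurry']
print(quality_flags(checkerboard))  # → []
```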

Potential Solution: Data Curation

One way to tackle poor data quality, especially after collection, is to curate high-quality data. Here is how to use Encord Active to automatically detect and classify duplicates in your dataset.

Curate Duplicate Images

Your testing and validation sets might contain duplicate training images that inflate the performance metrics. This makes the model appear better than it is, which could lead to false confidence about its real-world capabilities.
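A simple way to check for this kind of leakage outside any tool is perceptual hashing. The sketch below uses an average hash (one bit per pixel, set when the pixel is brighter than the image mean) and flags test images within a small Hamming distance of any training image. This is a swapped-in technique, not how Encord Active computes its uniqueness scores; it assumes images are already downscaled to 8x8 grayscale, and the names and the distance cutoff are hypothetical.

```python
def average_hash(gray):
    """Average hash of a small grayscale image (assumed already downscaled, e.g. to 8x8)."""
    pixels = [v for row in gray for v in row]
    avg = sum(pixels) / len(pixels)
    bits = 0
    for v in pixels:
        bits = (bits << 1) | (1 if v > avg else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def leaked_test_images(train, test, max_distance=5):
    """Names of test images that are near-duplicates of some training image."""
    train_hashes = [average_hash(img) for img in train.values()]
    return [
        name for name, img in test.items()
        if any(hamming(average_hash(img), h) <= max_distance for h in train_hashes)
    ]


train = {"street_001": [[x * 30 for x in range(8)] for _ in range(8)]}
test = {
    "street_001_copy": [[x * 30 + 5 for x in range(8)] for _ in range(8)],  # brightened copy
    "street_042": [[y * 30] * 8 for y in range(8)],                         # different content
}
print(leaked_test_images(train, test))  # → ['street_001_copy']
```

Because the hash compares each pixel to the image's own mean, a uniformly brightened copy hashes identically, which is exactly the kind of near-duplicate that inflates test metrics.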

Step 1: Navigate to the Explorer dashboard → Data tab

Step 2: Under the issues found, click on Duplicates to see the images EA flags as duplicates and near-duplicates with uniqueness scores of 0.0 to 0.00001.

There are two approaches you could take to solve this issue:

- Carefully remove duplicates, especially when dealing with imbalanced datasets, to avoid skewing the class distribution further.

- If duplicates cannot be fully removed (e.g., to maintain the original distribution of rare cases), use data augmentation techniques to introduce variations within the set of duplicates themselves. This can help mitigate some of the overfitting effects.
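The second option can be sketched as follows: keep one original from each duplicate group and replace the remaining copies with randomly augmented variants, so the class count is preserved but the images differ. The helper names and the choice of augmentations (horizontal flip plus a brightness shift) are assumptions; flips are only safe when orientation does not change the label, and for detection tasks the boxes would need flipping too, which this image-level sketch does not handle.

```python
import random


def hflip(gray):
    """Mirror a grayscale image (rows of ints) left to right."""
    return [list(reversed(row)) for row in gray]


def adjust_brightness(gray, delta):
    """Shift every pixel by `delta`, clamped to the 0-255 range."""
    return [[min(255, max(0, v + delta)) for v in row] for row in gray]


def diversify_duplicates(group, seed=0):
    """Hypothetical helper: keep the first image, augment the remaining duplicates.

    The group size, and therefore the class count, stays the same.
    """
    rng = random.Random(seed)
    out = [group[0]]
    for img in group[1:]:
        aug = hflip(img) if rng.random() < 0.5 else img
        aug = adjust_brightness(aug, rng.randint(-30, 30))
        out.append(aug)
    return out


img = [[10, 120, 240], [30, 60, 90]]
augmented = diversify_duplicates([img, img, img], seed=42)
print(len(augmented))  # → 3
```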

Step 3: Under the Data tab, select the duplicates you want to remove or augment. Click Add to a Collection → name the Collection ‘Duplicates’ and add a description.


Once the duplicates are in the Collection, you can use the tag to filter them out of your training or validation data. If relevant, you can also create a new dataset to apply the data augmentation techniques.

Other solutions could include:

- Implement Robust Data Validation Checks: Use automated tools that continuously validate data accuracy, consistency, and completeness at the entry point (ingestion) and throughout the data pipeline.

- Adopt a Centralized Data Management Platform: A unified view of data across sources (e.g., data lakes) can help identify discrepancies early and simplify access for CV engineers (or DataOps teams) to maintain data integrity.
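As an illustration of an ingestion-time validation check, the sketch below inspects a batch of bounding-box annotations for invalid image sizes, labels missing from the ontology, and boxes that are empty or extend outside the image. The `Record` schema and the messages are hypothetical; a real pipeline would map these checks onto its own data format.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Record:
    """One bounding-box annotation as it arrives at ingestion (hypothetical schema)."""
    image_id: str
    width: int
    height: int
    label: str
    bbox: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def validate(records: List[Record], allowed_labels: Set[str]) -> List[str]:
    """Return human-readable problems found in a batch of annotation records."""
    problems = []
    for r in records:
        if r.width <= 0 or r.height <= 0:
            problems.append(f"{r.image_id}: invalid image size")
            continue  # box checks are meaningless without a valid size
        if r.label not in allowed_labels:
            problems.append(f"{r.image_id}: unknown label {r.label!r}")
        x0, y0, x1, y1 = r.bbox
        if not (0 <= x0 < x1 <= r.width and 0 <= y0 < y1 <= r.height):
            problems.append(f"{r.image_id}: bbox empty or outside image")
    return problems


batch = [
    Record("img1", 640, 480, "person", (10, 10, 100, 200)),
    Record("img2", 640, 480, "cat", (0, 0, 50, 50)),
    Record("img3", 640, 480, "person", (600, 400, 700, 480)),
]
for problem in validate(batch, allowed_labels={"person", "car"}):
    print(problem)
```

Running checks like these on every batch, rather than once before training, is what catches issues before they reach the training set.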

Reason #3: Data Drift

Data drift occurs when the statistical properties of the real-world images a model encounters in production change over time, diverging from the samples it was trained on. Drift can happen due to various factors, including:

- Concept Drift: The underlying relationships between features and the target variable change. For example, imagine a model trained to detect spam emails. The features that characterize spam (certain keywords, sender domains) can evolve over time.

- Covariate Shift: The input feature distribution changes while the relationship to the target variable remains unchanged. For instance, a self-driving car vision system trained in summer might see a different distribution of images (snowy roads, different leaf colors) in winter.

- Prior Probability Shift: The overall frequency of different classes changes. For example, a medical image classification model trained for a certain rare disease may encounter it more frequently as its prevalence changes in the population.
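Prior probability shift is the easiest of the three to quantify. The sketch below compares class frequencies in the training labels against production using total variation distance (half the L1 distance between the two distributions). Production ground truth often arrives late or not at all, so this example assumes model predictions are used as a proxy; the function names and data are hypothetical.

```python
from collections import Counter


def class_frequencies(labels):
    """Relative frequency of each class in a list of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()}


def total_variation(p, q):
    """Half the L1 distance between two class distributions (0 = identical, 1 = disjoint)."""
    classes = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in classes)


train_labels = ["healthy"] * 95 + ["disease"] * 5
production_preds = ["healthy"] * 80 + ["disease"] * 20  # model predictions as a proxy
shift = total_variation(class_frequencies(train_labels), class_frequencies(production_preds))
print(round(shift, 2))  # → 0.15
```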

Potential Solution: Monitoring Data Drift

There are two steps you could take to address data drift:

- Use tools that monitor the model's performance and the input data distribution. Look for shifts in metrics and statistical properties over time.

- Collect new data representing current conditions and retrain the model at appropriate intervals. This can be done regularly or triggered by alerts when significant drift is detected.
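One common way to monitor an input distribution is the Population Stability Index (PSI) on a scalar per-image feature such as mean brightness. The sketch below computes PSI over fixed bins and raises a retraining flag when it exceeds a threshold. PSI is a swapped-in, general-purpose drift statistic, not an Encord feature; the bin edges, the `eps` smoothing for empty bins, and the 0.2 threshold are assumptions to tune.

```python
import bisect
import math


def histogram(values, edges):
    """Count values per bin; the last bin includes its right edge, out-of-range values are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        if v == edges[-1]:
            i = len(counts) - 1
        if 0 <= i < len(counts):
            counts[i] += 1
    return counts


def psi(reference, current, edges, eps=1e-6):
    """Population Stability Index of a scalar feature between two samples."""
    ref = histogram(reference, edges)
    cur = histogram(current, edges)
    ref_total, cur_total = sum(ref), sum(cur)
    score = 0.0
    for r, c in zip(ref, cur):
        p = max(r / ref_total, eps)  # eps avoids log(0) for empty bins
        q = max(c / cur_total, eps)
        score += (q - p) * math.log(q / p)
    return score


def should_retrain(reference, current, edges, threshold=0.2):
    """Hypothetical trigger: flag retraining when PSI exceeds `threshold`."""
    return psi(reference, current, edges) > threshold


edges = [0, 64, 128, 192, 256]                  # brightness bins (0-255 grayscale)
training_brightness = [30, 90, 150, 210] * 25
production_brightness = [20, 30, 40, 100] * 25  # mostly dark images
print(should_retrain(training_brightness, production_brightness, edges))  # → True
```

The same check can run on a schedule, with the boolean feeding an alert or a retraining job.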

You can achieve both within Encord:

Step 1: Create the Dataset on Annotate to log your input data for training or production. If your data is on a cloud platform, check out one of the data integrations to see if it works with your stack.

Step 2: Create an Ontology to define the structure of the dataset.

Step 3: Create an Annotate Project based on your dataset and the ontology. Ensure the project also includes Workflows because some features in Encord Active only support projects that include workflows.

Step 4: Import your Annotate Project to Active. This imports the data, ground truth, and any custom metrics you use to evaluate data quality. The video tutorial in the documentation shows how it’s done.

Step 5: Select the Project → Import your Model Predictions.

There are two ways to inspect issues with the input data:

- Use the analytics view to get a statistical summary of the data.

- Use the issues found by Encord Active to manually inspect where your model is struggling.

Step 6: On the Explorer dashboard → Data tab → Analytics View.

Step 7: Under the Metric Distribution chart, select a quality metric to assess the distribution of your input data. In this example, the “Diversity” metric ranks images from easy to hard to annotate: easy samples have lower scores, while hard samples have higher scores.

Step 8: On the right-hand pane, click on Dark. Navigate back to Grid View → Click on one of the images to inspect the ground truth (if available) vs. model predictions.

Observe that the poor lighting could have caused the model to misidentify the toy bear as a person. (Of course, other factors, such as class imbalance, could also cause the model to misclassify the object.)

You can inspect the class balance on the Analytics View → Class Distribution chart.
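The same class-balance check is easy to run on any label export. The sketch below lists per-class counts and an imbalance ratio (majority count divided by minority count). The function names and the example labels are hypothetical, and classes with zero examples do not appear in the counts at all, so compare against your ontology to catch missing classes.

```python
from collections import Counter


def class_distribution(labels):
    """(class, count) pairs, most frequent first."""
    return Counter(labels).most_common()


def imbalance_ratio(labels):
    """Majority-class count divided by minority-class count (1.0 means balanced)."""
    counts = Counter(labels).values()
    return max(counts) / min(counts)


labels = ["person"] * 120 + ["car"] * 40 + ["teddy bear"] * 8
print(class_distribution(labels))  # → [('person', 120), ('car', 40), ('teddy bear', 8)]
print(imbalance_ratio(labels))     # → 15.0
```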

Nice!

There are other ways to manage data drift, including the following approaches:

- Adaptive Learning: Consider online learning techniques where the model continuously updates itself based on new data without full retraining. Note that this is still an active area of research with challenges in computer vision.

- Domain Adaptation: If collecting substantial amounts of labeled data from the new environment is not feasible, use domain adaptation techniques to bridge the gap between the old and new domains.
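To show what "updating without full retraining" means mechanically, here is a minimal online-learning sketch: a binary logistic-regression head trained with one SGD step per incoming labeled example. It assumes inputs are fixed-length feature vectors (for example, embeddings from a frozen backbone); the class name, learning rate, and toy stream are hypothetical, and this is far simpler than online adaptation of a full CV model.

```python
import math


class OnlineLogisticHead:
    """Binary logistic-regression head updated one example at a time with SGD.

    Assumes inputs are fixed-length feature vectors, e.g. embeddings from a
    frozen backbone; the backbone itself is not updated here.
    """

    def __init__(self, dim, lr=0.1):
        self.w = [0.0] * dim
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x):
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        # Numerically stable sigmoid.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, x, y):
        """One SGD step on the log loss for a single labeled example (y is 0 or 1)."""
        err = self.predict_proba(x) - y
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


head = OnlineLogisticHead(dim=2, lr=0.5)
for _ in range(200):  # a stream of newly labeled production examples
    head.update([1.0, 0.0], 1)
    head.update([0.0, 1.0], 0)
print(head.predict_proba([1.0, 0.0]) > 0.9)  # → True
```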

Reason #4: Thinking Deployment is the Final Step (No Observability)

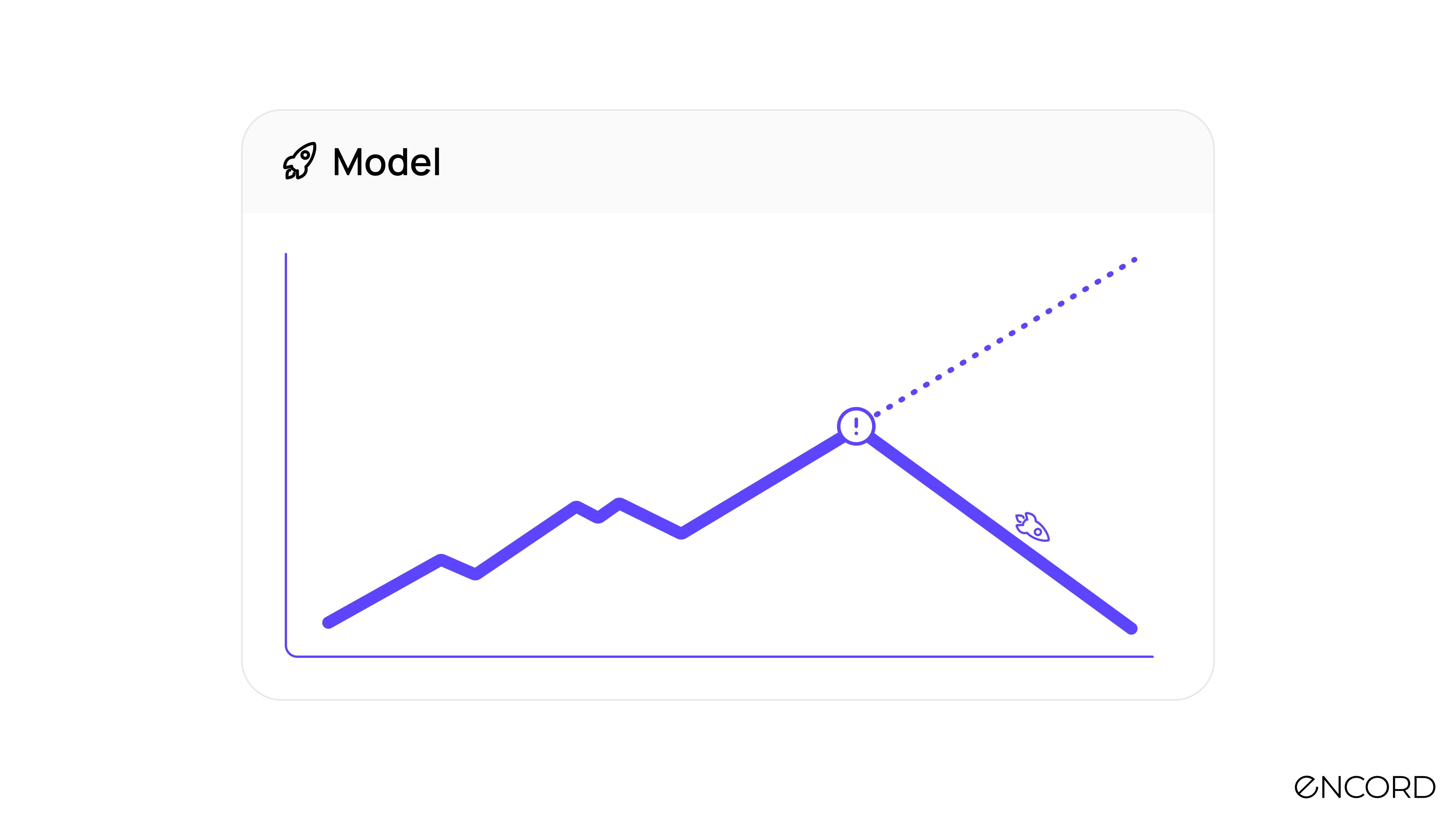

Many teams mistakenly treat deployment as the finish line, which is one reason machine learning projects fail in production. In reality, deployment is one stage in a continuous cycle. Models in production often degrade over time due to factors such as data drift (changes in input data distribution) or concept drift (changes in the underlying relationships the model was trained on).

Neglecting post-deployment maintenance invites model staleness and eventual failure. This is where MLOps (Machine Learning Operations) becomes essential. MLOps provides practices and technologies to monitor, maintain, and govern ML systems in production.

Potential Solution: Machine Learning Operations (MLOps)

The core principle of MLOps is ensuring your model provides continuous business value while in production. How teams operationalize ML varies, but some key practices include:

- Model Monitoring: Implement monitoring tools to track performance metrics (accuracy, precision, etc.) and automatically alert you to degradation. Consider a feedback loop to trigger retraining processes where necessary, either for real-time or batch deployment.

- Logging: Even if full MLOps tools aren't initially feasible, start by logging model predictions and comparing them against ground truth, like we showed above with Encord. This offers early detection of potential issues.

- Management and Governance: Establish reproducible ML pipelines for continuous training (CT) and automate model deployment. From the start, consider regulatory compliance issues in your industry.
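The monitoring and logging practices above can start very small. The sketch below logs each prediction against its ground-truth label once that label is available, tracks accuracy over a rolling window, and flags degradation relative to the pre-deployment baseline. The class name, the window size, and the tolerance are hypothetical; in practice the `degraded()` result would feed an alert or a retraining trigger.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Logs predictions against ground truth and flags a drop below the validation baseline."""

    def __init__(self, baseline, window=500, tolerance=0.05):
        self.baseline = baseline    # accuracy measured before deployment
        self.tolerance = tolerance  # allowed drop before alerting (hypothetical default)
        self.results = deque(maxlen=window)  # only the most recent outcomes are kept

    def log(self, prediction, ground_truth):
        """Record one prediction once its ground-truth label becomes available."""
        self.results.append(prediction == ground_truth)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def degraded(self):
        acc = self.accuracy()
        return acc is not None and acc < self.baseline - self.tolerance


monitor = RollingAccuracyMonitor(baseline=0.90, window=10)
for _ in range(10):
    monitor.log("person", "person")
for _ in range(5):
    monitor.log("person", "teddy bear")
print(monitor.accuracy(), monitor.degraded())  # → 0.5 True
```

The bounded `deque` means old outcomes fall out of the window automatically, so the metric reflects recent production behavior rather than an all-time average.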

Key Takeaways: 4 Reasons Computer Vision Models Fail in Production

Remember that model deployment is not the last step. Don’t invest months in a model only to have it fail a few days, weeks, or months later. ML systems differ across teams and organizations, but most failure modes are shared. If you study your ML system, you’ll likely find that some of the reasons your model fails in production match those listed in this article:

1. Data labeling errors

2. Poor data quality

3. Data drift in production

4. Thinking deployment is the final step

The goal is for you to understand these failures and learn the best practices to solve or avoid them. You’ll also notice that while most failure modes are data-centric, others are technology-related or involve team practices, culture, and available resources.


Frequently asked questions

ML projects often fail due to poor data, unclear goals, wrong model complexity, unrealistic expectations, or lack of team collaboration.

Models degrade in production because real-world data changes over time (data drift), the underlying patterns they were trained on shift (concept drift), or model outputs create feedback loops that alter future data.

This usually indicates overfitting, meaning the model has memorized the training data too closely and doesn't generalize to new examples.

An AI model's performance depends on the quality and quantity of its training data, its architecture, how it's trained, the features it uses, and potential biases.

Encord encounters challenges in achieving accurate AI model training for complex scenarios, such as identifying gaps in car doors or detecting quality issues in products. The variability in image capture and the need for precise data annotation can limit the effectiveness of the AI models in certain use cases.

Yes, Encord is designed to help users identify misclassifications and manage new product images effectively. It allows for the integration of additional classes to ensure that all images are appropriately classified, facilitating a smoother annotation process and improving overall model accuracy.

Encord addresses scalability by providing a robust infrastructure that supports the rapid evolution of computer vision projects. As teams look to grow and implement new use cases and modalities, Encord helps eliminate inefficiencies associated with disparate solutions, enabling smoother scaling.

Encord provides tools that assist teams in identifying edge cases that may cause their models to underperform. By analyzing the data and deployment contexts, Encord can pinpoint specific areas where the model struggles, allowing for targeted improvements and better overall performance.

Encord is designed to assist teams that primarily use image-only data by providing tools for efficient annotation and data management. This enables teams to streamline their workflows and enhance the accuracy of their motion modeling models without the need for additional data types.

Encord enhances model deployment through features like model encapsulation and optimization for various outputs. This ensures that models operate efficiently under production conditions, with reduced latency and improved performance for real-world applications.

By leveraging Encord's capabilities, teams can enhance model performance, which directly influences business metrics such as yield forecasting and harvesting timing. Improved models lead to better decision-making and operational efficiency.

Yes, Encord can help diagnose model failures by tracing them back to specific data gaps. This diagnostic capability allows teams to understand the root causes of inaccuracies and take corrective actions to improve model performance.

Yes, Encord's vision language models can provide realistic descriptions and recognition for non-commercial products, such as handmade items, by leveraging the power of advanced AI models to interpret images.