Contents

What is Image Segmentation?

Factors to Validate Github Repository’s Health

Top GitHub Repositories for Image Segmentation

#1. Awesome Referring Image Segmentation

#2. Transformer-based Visual Segmentation

#3. Segment Anything

#4. Awesome Segment Anything

#5. Image Segmentation Keras

#6. Image Segmentation

#7. Portrait Segmentation

#8. BCDU-Net

#9.MedSegDiff

#10. U-Net

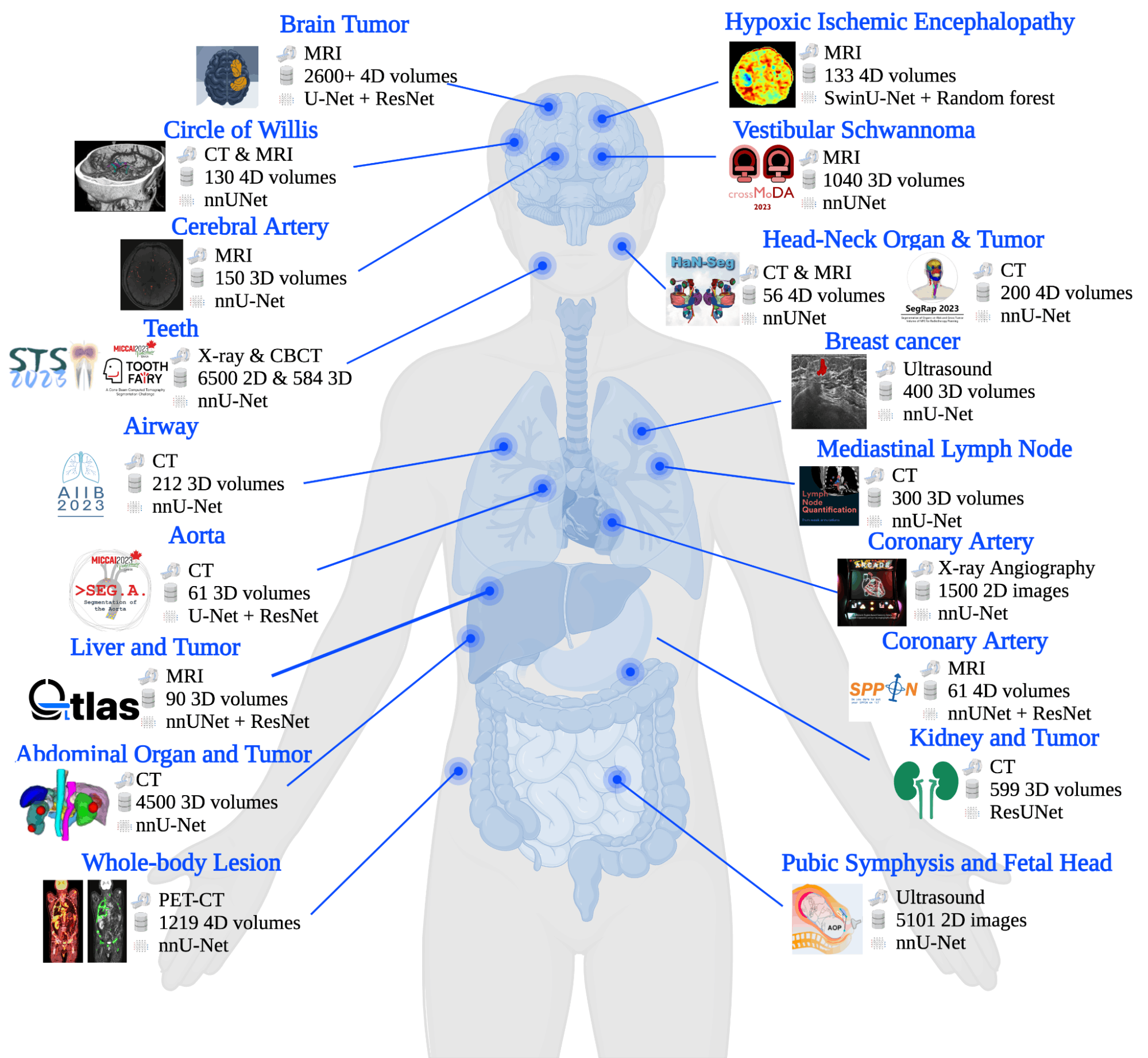

#11. SOTA-MedSeg

#12. UniverSeg

#13. Medical SAM Adapter

#14. TotalSegmentator

#15. Medical Zoo Pytorch

GitHub Repositories for Image Segmentation: Key Takeaways

Encord Blog

15 Interesting GitHub Repositories for Image Segmentation

A survey of image segmentation GitHub repositories shows how rapidly the field is advancing as computing power increases and diverse benchmark datasets emerge to evaluate model performance across industrial domains.

Additionally, with the advent of Transformer-based architecture and few-shot learning methods, the artificial intelligence (AI) community uses Vision Transformers (ViT) to enhance segmentation accuracy. The techniques involve state-of-the-art (SOTA) algorithms that only need a few labeled data samples for model training.

With around 100 million developers contributing to GitHub globally, the platform is popular for exploring some of the most modern segmentation models currently available.

This article explores the exciting world of segmentation by delving into the top 15 GitHub repositories, which showcase different approaches to segmenting complex images.

But first, let’s understand a few things about image segmentation.

What is Image Segmentation?

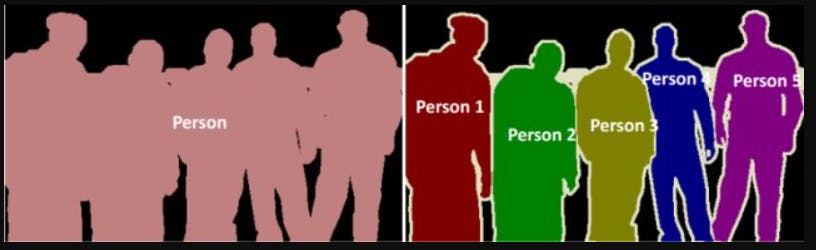

Image segmentation is a computer vision (CV) task that involves classifying each pixel in an image. The technique works by clustering similar pixels and assigning them a relevant label. The method can be categorized into:

- Semantic segmentation: assigns every pixel a class label (e.g., “person” or “road”) without separating individual objects of the same class.

- Instance segmentation: distinguishes different instances of the same object category.

For example, instance segmentation will recognize multiple individuals in an image as separate entities, labeling each person as “person 1”, “person 2”, “person 3”, etc.

Semantic Segmentation (Left) and Instance Segmentation (Right)
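To make the distinction concrete, here is a minimal sketch in plain Python. It starts from a toy semantic mask (every “person” pixel has the value 1) and derives instance labels by grouping connected pixels. The mask values and the `label_instances` helper are illustrative assumptions. Real instance segmentation models do not rely on connected components, since touching objects would merge into one region.

```python
from collections import deque

# Toy semantic mask: 1 = "person", 0 = background (values are illustrative).
semantic_mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 1, 0, 0, 0],
]


def label_instances(mask, target_class=1):
    """Give each 4-connected region of `target_class` its own instance id."""
    rows, cols = len(mask), len(mask[0])
    instances = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != target_class or instances[r][c]:
                continue  # background, or pixel already assigned
            next_id += 1
            instances[r][c] = next_id
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == target_class
                            and not instances[ny][nx]):
                        instances[ny][nx] = next_id
                        queue.append((ny, nx))
    return instances, next_id


instance_mask, num_instances = label_instances(semantic_mask)
```

The semantic mask only says which pixels belong to a person, while `instance_mask` tells “person 1”, “person 2”, and “person 3” apart.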

The primary applications of image segmentation include autonomous driving and medical imaging. In autonomous driving, segmentation allows the model to classify objects on the road. In medical imaging, segmentation enables healthcare professionals to detect anomalies in X-rays, MRIs, and CT scans.

Factors to Validate a GitHub Repository’s Health

Before we list the top repositories for image segmentation, it is essential to understand how to determine a GitHub repository's health. The list below highlights a few factors you should consider to assess a repository’s reliability and sustainability:

- Level of Activity: Assess the frequency of updates by checking the number of commits, issues resolved, and pull requests.

- Contribution: Check the number of developers contributing to the repository. A large number of contributors signifies diverse community support.

- Documentation: Determine documentation quality by checking the availability of detailed readme files, support documents, tutorials, and links to relevant external research papers.

- New Releases: Examine the frequency of new releases. A higher frequency indicates continuous development.

- Responsiveness: Review how often the repository authors respond to issues raised by users. High responsiveness implies that the authors actively monitor the repository to identify and fix problems.

- Stars Received: Stars on GitHub indicate a repository's popularity and credibility within the developer community. Actively maintained repositories tend to attract more stars, which reflects their value to users.
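Some of these factors can be checked programmatically. The sketch below is one possible way to turn repository metadata into rough indicators. It assumes a dictionary that uses field names from the GitHub REST API repository object (`stargazers_count`, `open_issues_count`, `pushed_at`). The `summarize_repo_health` helper, its thresholds, and the example values are hypothetical and chosen only for illustration.

```python
from datetime import datetime, timezone


def summarize_repo_health(repo, now=None):
    """Turn repository metadata into a few rough health indicators.

    The 90-day and 1,000-star thresholds are arbitrary assumptions.
    """
    now = now or datetime.now(timezone.utc)
    pushed_at = datetime.strptime(
        repo["pushed_at"], "%Y-%m-%dT%H:%M:%SZ"
    ).replace(tzinfo=timezone.utc)
    days_since_push = (now - pushed_at).days
    stars = repo["stargazers_count"]
    return {
        "days_since_last_push": days_since_push,
        "recently_active": days_since_push <= 90,
        "popular": stars >= 1000,
        # In the GitHub API, open_issues_count also includes open pull requests.
        "open_issues_per_100_stars": round(
            100 * repo["open_issues_count"] / max(stars, 1), 1
        ),
    }


# Made-up metadata for illustration; not taken from any real repository.
example_repo = {
    "stargazers_count": 4200,
    "open_issues_count": 63,
    "pushed_at": "2024-01-10T12:00:00Z",
}
report = summarize_repo_health(
    example_repo, now=datetime(2024, 2, 9, 12, 0, tzinfo=timezone.utc)
)
```

Numbers like these are only a starting point. Documentation quality and maintainer responsiveness still need a manual look.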

Top GitHub Repositories for Image Segmentation

Due to image segmentation’s ability to perform advanced detection tasks, the AI community offers multiple open-source GitHub repositories comprising the latest algorithms, research papers, and implementation details.

The following sections give an overview of the fifteen most interesting public repositories, describing their resource format and content, topics covered, key learnings, and difficulty level.

#1. Awesome Referring Image Segmentation

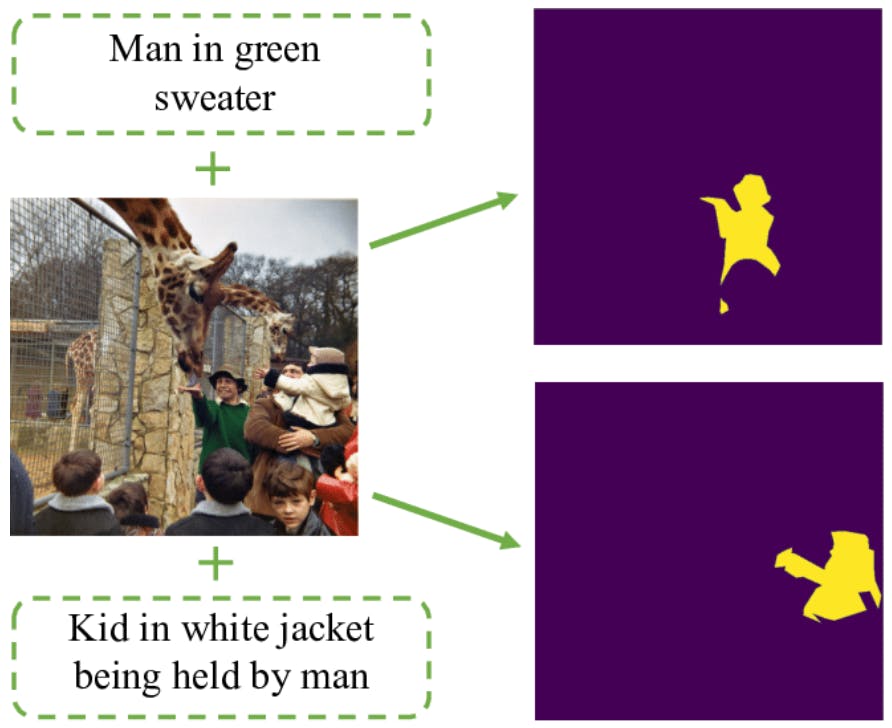

Referring image segmentation involves segmenting objects based on a natural language query. For example, the user can provide a phrase such as “a brown bag” to segment the relevant object within an image containing multiple objects.

Resource Format

The repository is a collection of benchmark datasets, research papers, and their respective code implementations.

Resource Contents

The repo comprises ten datasets, including ReferIt, Google-Ref, UNC, and UNC+, and 72 SOTA models for different referring image segmentation tasks.

Topics Covered

- Traditional Referring Image Segmentation: In the repo, you will find frameworks for traditional referring image segmentation, such as LISA, which performs segmentation through large language models (LLMs).

- Interactive Referring Image Segmentation: Includes the interactive PhraseClick referring image segmentation model.

- Referring Video Object Segmentation: Consists of 18 models to segment objects within videos.

- Referring 3D Instance Segmentation: There are two models for referring 3D instance segmentation tasks for segmenting point-cloud data.

Key Learnings

- Different Types of Referring Image Segmentation: Exploring this repo will allow you to understand how interactive, 3D instance, and video referring segmentation differ from traditional referring image segmentation tasks.

- Code Implementations: The code demonstrations will help you apply different frameworks to real-world scenarios.

Proficiency Level

The repo is for expert-level users with a robust understanding of image segmentation concepts.

#2. Transformer-based Visual Segmentation

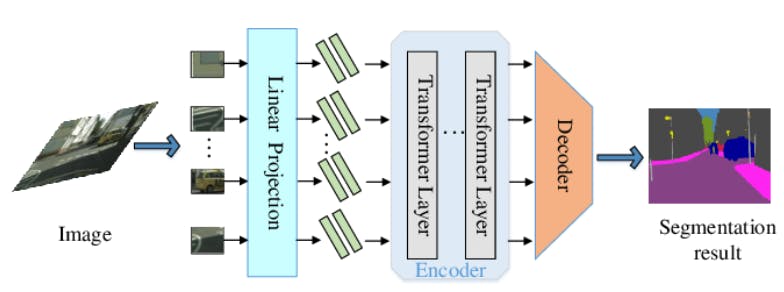

Transformer-based visual segmentation uses the transformer architecture with the self-attention mechanism to segment objects.

Transformer-based Visual Segmentation
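As a rough illustration of the self-attention mechanism, the following plain-Python sketch computes scaled dot-product attention over three toy patch embeddings. It uses the raw embeddings as queries, keys, and values. Real transformer models learn separate projection matrices for each, so treat the identity projections and the example vectors as simplifying assumptions.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of all token vectors.
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, tokens)) for i in range(dim)]
        )
    return outputs, weights


# Three toy "patch embeddings"; the first two are similar, the third is not.
patch_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
attended, attention_weights = self_attention(patch_embeddings)
```

Each row of `attention_weights` sums to 1, and the first patch attends more strongly to the similar second patch than to the dissimilar third one. This is how attention lets every image region draw context from related regions.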

Resource Format

The repo contains research papers and code implementations.

Resource Contents

It has several segmentation frameworks based on convolutional neural networks (CNNs), multi-head and cross-attention architectures, and query-based models.

Topics Covered

- Detection Transformer (DETR): The repository includes models built on the DETR architecture that Meta introduced.

- Attention Mechanism: Multiple models use the attention mechanism for segmenting objects.

- Pre-trained Foundation Model Tuning: Covers techniques for tuning pre-trained models.

Key Learnings

- Applications of Transformers in Segmentation: The repo will allow you to explore the latest research on using transformers to segment images in multiple ways.

- Self-supervised Learning: You will learn how to apply self-supervised learning methods to transformer-based visual segmentation.

Proficiency Level

This is an expert-level repository requiring an understanding of the transformer architecture.

#3. Segment Anything

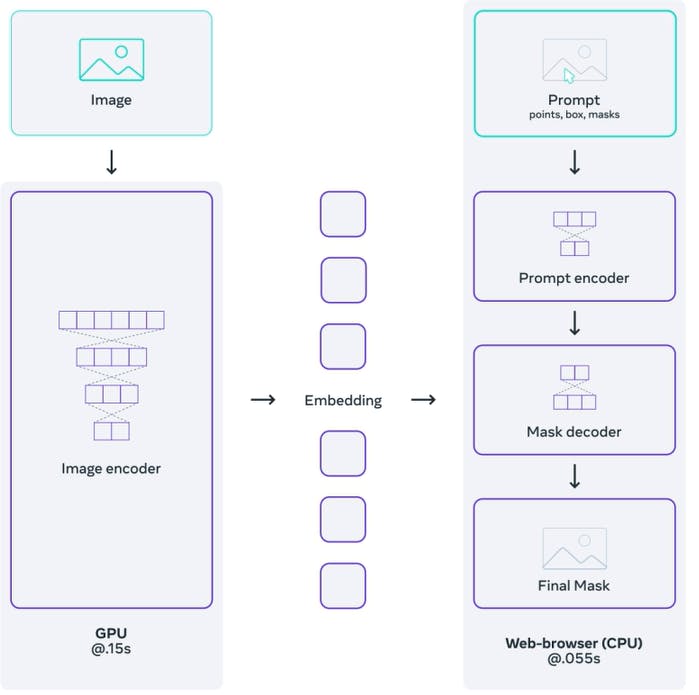

The Segment Anything Model (SAM) is a robust segmentation framework by Meta AI that generates object masks through user prompts.

Resource Format

The repo contains the research paper and an implementation guide.

Resource Contents

It consists of Jupyter notebooks and scripts with sample code for implementing SAM and has three model checkpoints, each with a different backbone size. It also provides Meta’s own SA-1B dataset for training object segmentation models.

Topics Covered

- How SAM Works: The paper explains how Meta developed the SAM framework.

- Getting Started Tutorial: The Getting Started guide helps you generate object masks using SAM.

Key Learnings

- How to Use SAM: The repo teaches you how to create segmentation masks with different model checkpoints.
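A prompt-based call might look like the sketch below, which follows the usage pattern shown in the repo's README (`sam_model_registry` and `SamPredictor`). The `segment_with_point` wrapper, its arguments, and the checkpoint path are hypothetical. The function assumes the `segment_anything` and `numpy` packages are installed and that a checkpoint has been downloaded locally.

```python
def segment_with_point(image_rgb, point_xy, checkpoint_path, model_type="vit_b"):
    """Return candidate masks and their scores for one foreground click.

    image_rgb: HxWx3 uint8 NumPy array in RGB order.
    point_xy: (x, y) pixel coordinates of the click.
    checkpoint_path: local path to a downloaded SAM checkpoint (placeholder).
    model_type: "vit_b", "vit_l", or "vit_h", matching the checkpoint.
    """
    # Imported lazily so the sketch can be read without the packages installed.
    import numpy as np
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint_path)
    predictor = SamPredictor(sam)
    predictor.set_image(image_rgb)  # computes the image embedding once
    masks, scores, _ = predictor.predict(
        point_coords=np.array([point_xy]),
        point_labels=np.array([1]),  # 1 = foreground click, 0 = background
        multimask_output=True,  # return several candidate masks
    )
    return masks, scores
```

The `model_type` key has to match the backbone of the checkpoint file. Larger backbones generally trade speed for mask quality.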

Proficiency Level

This is a beginner-level repo that teaches you about SAM from scratch.

#4. Awesome Segment Anything

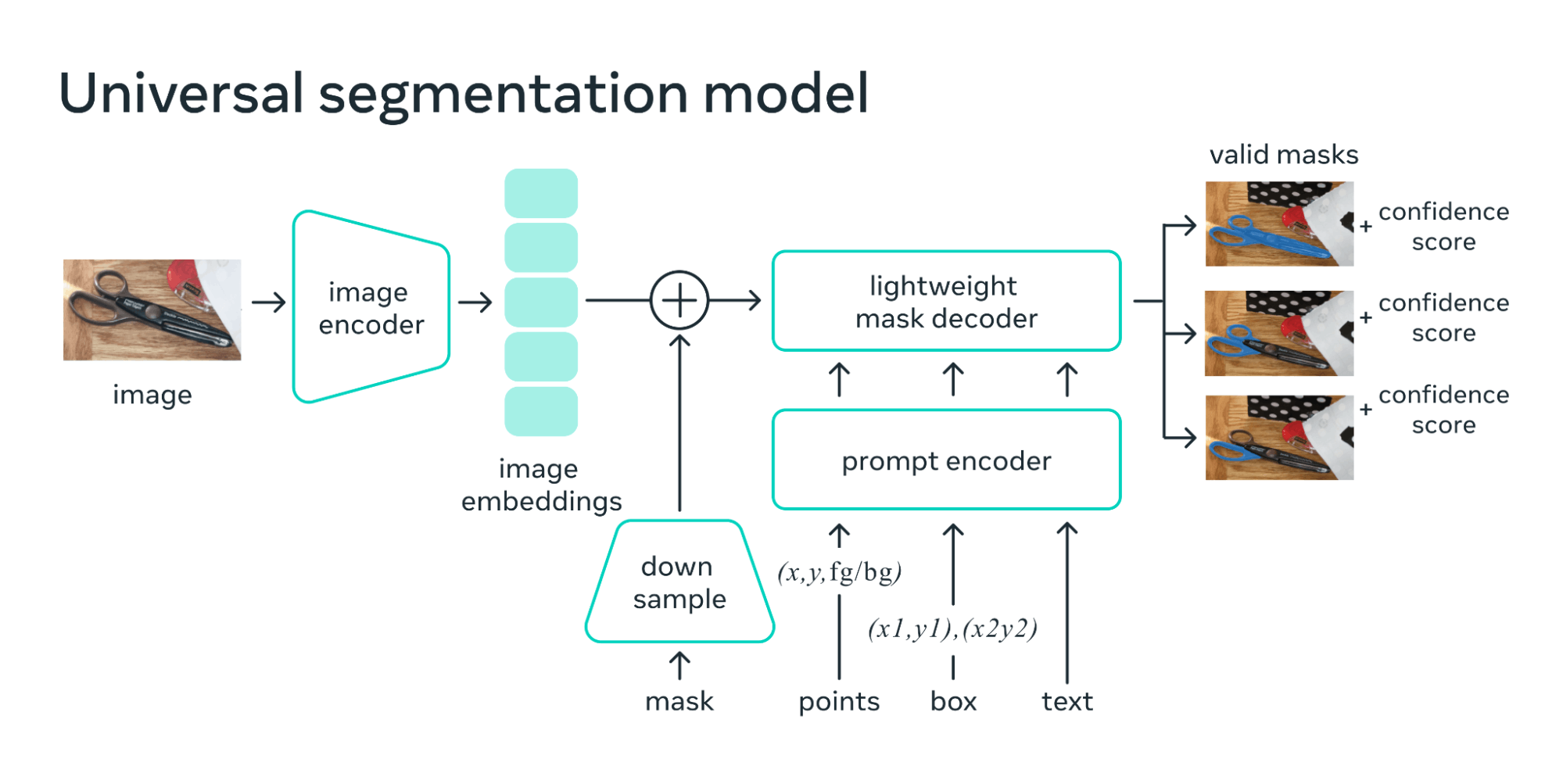

The Awesome Segment Anything repository is a comprehensive survey of models using SAM as the foundation to segment anything.

SAM mapping image features and prompt embeddings set for a segmentation mask

Resource Format

The repo is a list of papers and code.

Resource Contents

It consists of SAM’s applications, historical development, and research trends.

Topics Covered

- SAM-based Models: The repo explores the research on SAM-based frameworks.

- Open-source Projects: It also covers open-source models on platforms like HuggingFace and Colab.

Key Learnings

- SAM Applications: Studying the repo will help you learn about use cases where SAM is relevant.

- Contemporary Segmentation Methods: It introduces the latest segmentation methods based on SAM.

Proficiency Level

This is an expert-level repo containing advanced research papers on SAM.

#5. Image Segmentation Keras

The repository is a Keras implementation of multiple deep learning image segmentation models.


Resource Format

Code implementations of segmentation models.

Resource Contents

The repo consists of implementations for SegNet, FCN, U-Net, ResNet, PSPNet, and VGG-based segmentation models.

Topics Covered

- Colab Examples: The repo demonstrates implementations through a Python interface.

- Installation: There is an installation guide to run the relevant modules.

Key Learnings

- How to Use Keras: The repo will help you learn how to implement segmentation models in Keras.

- Fine-tuning and Knowledge Distillation: The repo contains sections that explain how to fine-tune pre-trained models and use knowledge distillation to develop simpler models.
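For intuition about knowledge distillation, here is a generic soft-target distillation loss in plain Python, applied to the class logits of a single pixel. It is a sketch of the common temperature-based formulation, not the repo's own implementation. The function names, the temperature of 2.0, and the example logits are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened probabilities, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    return kl * temperature ** 2


# Per-pixel class logits for three classes (illustrative values).
teacher_pixel = [2.0, 0.5, -1.0]
untrained_student_pixel = [0.0, 0.0, 0.0]

loss_before_training = distillation_loss(untrained_student_pixel, teacher_pixel)
loss_when_matched = distillation_loss(teacher_pixel, teacher_pixel)
```

The loss shrinks toward zero as the smaller student model reproduces the teacher's per-pixel class distribution. In practice, this term is usually combined with the ordinary loss on ground-truth masks.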

Proficiency Level

The repo is an intermediate-level resource for those familiar with Python.

#6. Image Segmentation

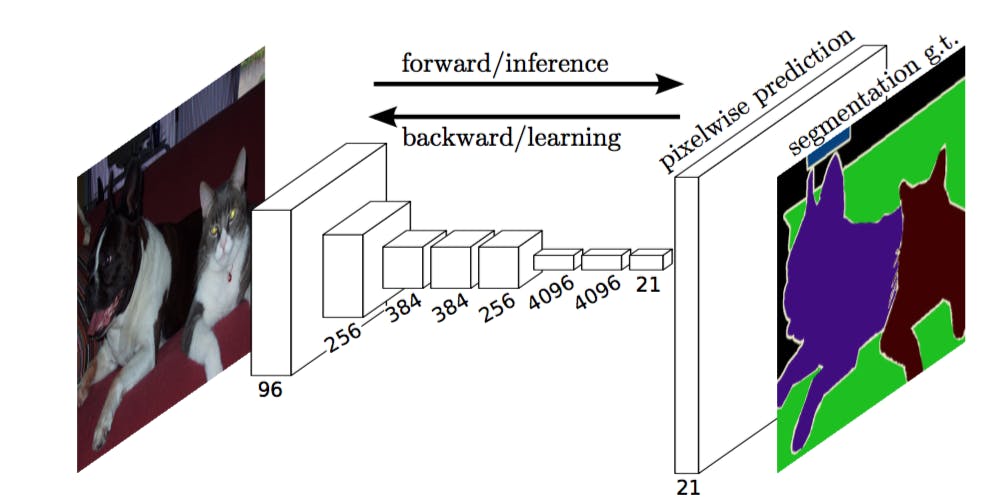

The repository is a PyTorch implementation of multiple segmentation models.

Resource Format

It consists of code and research papers.

Resource Contents

The models covered include U-Net, R2U-Net, Attention U-Net, and Attention R2U-Net.

Topics Covered

- Architectures: The repo explains the models’ architectures and how they work.

- Evaluation Strategies: It tests the performance of all models using various evaluation metrics.
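Two metrics that commonly appear in segmentation evaluation are the Dice coefficient and intersection over union (IoU). The sketch below computes both for binary masks in plain Python. The helper names and toy masks are illustrative, and the repo may report additional metrics.

```python
def confusion_counts(pred, target):
    """Count true positives, false positives, and false negatives."""
    tp = fp = fn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    return tp, fp, fn


def dice_score(pred, target):
    """Dice = 2*TP / (2*TP + FP + FN); defined as 1.0 when both masks are empty."""
    tp, fp, fn = confusion_counts(pred, target)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def iou_score(pred, target):
    """IoU = TP / (TP + FP + FN); defined as 1.0 when both masks are empty."""
    tp, fp, fn = confusion_counts(pred, target)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom


predicted = [[1, 1, 0], [0, 1, 0]]
ground_truth = [[1, 0, 0], [0, 1, 1]]

dice = dice_score(predicted, ground_truth)  # 2*2 / (2*2 + 1 + 1)
iou = iou_score(predicted, ground_truth)    # 2 / (2 + 1 + 1)
```

Dice is never lower than IoU for the same prediction, so the two numbers are not interchangeable when comparing results across papers.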

Key Learnings

- PyTorch: The repo will help you learn about the PyTorch library.

- U-Net: It will familiarize you with the U-Net model, a popular framework for medical image segmentation.

Proficiency Level

This is an intermediate-level repo for those familiar with deep neural networks and evaluation methods in machine learning.

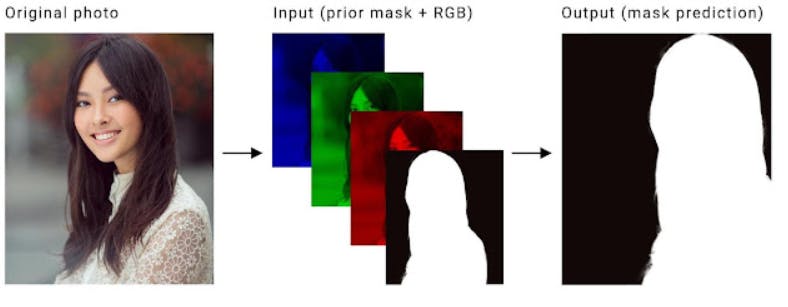

#7. Portrait Segmentation

The repository contains implementations of portrait segmentation models for mobile devices.

Resource Format

The repo contains code and a detailed tutorial.

Resource Contents

It consists of checkpoints, datasets, dependencies, and demo files.

Topics Covered

- Model Architecture: The repo explains the architecture for Mobile-Unet, Deeplab V3+, Prisma-net, Portrait-net, Slim-net, and SINet.

- Evaluation: It reports the performance results of all the models.

Key Learnings

- Portrait Segmentation Techniques: The repo will teach you about portrait segmentation frameworks.

- Model Development Workflow: It gives tips and tricks for training and validating models.

Proficiency Level

This is an expert-level repo. It requires knowledge of TensorFlow, Keras, and OpenCV.

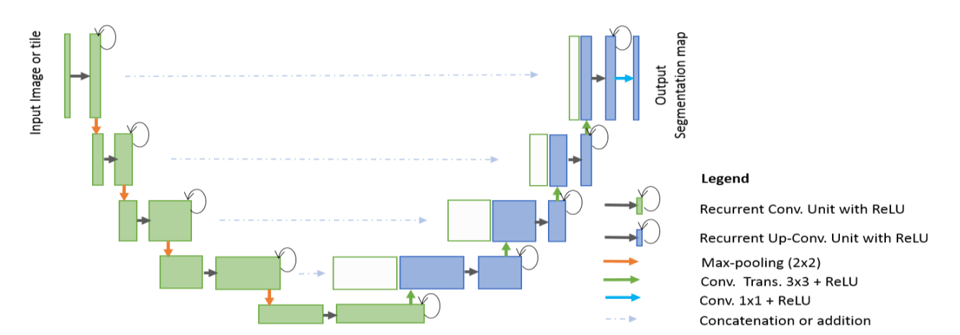

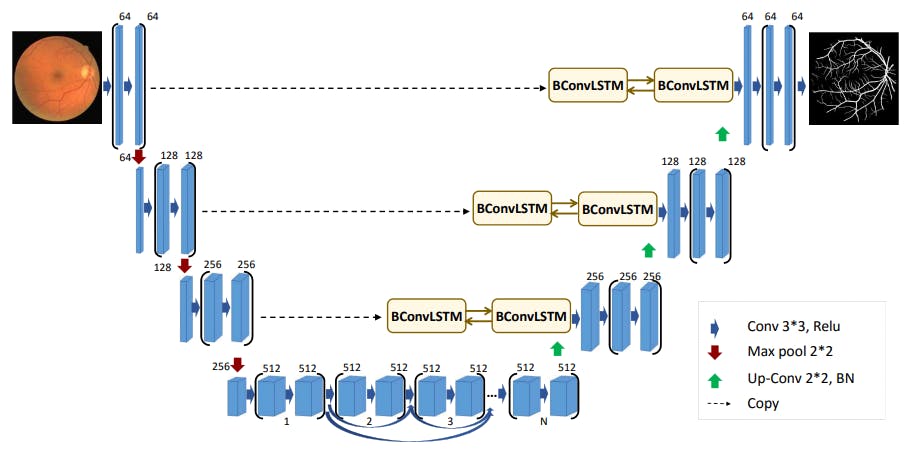

#8. BCDU-Net

The repository implements the Bi-Directional Convolutional LSTM with U-Net (BCDU-Net) for medical segmentation tasks, including lung, skin lesion, and retinal blood vessel segmentation.

Resource Format

The repo contains code and an overview of the model.

Resource Contents

It contains links to the research paper, updates, and a list of medical datasets for training. It also provides pre-trained weights for lung, skin lesion, and blood vessel segmentation models.

Topics Covered

- BCDU-Net Architecture: The repo explains the model architecture in detail.

- Performance Results: It reports the model's performance statistics against other SOTA frameworks.

Key Learnings

- Medical Image Analysis: Exploring the repo will familiarize you with medical image formats and how to detect anomalies using deep learning models.

- BCDU-Net Development Principles: It explains how the BCDU-Net model builds on the U-Net architecture. You will also learn about the bi-directional LSTM component fused with convolutional layers.

Proficiency Level

This is an intermediate-level repo. It requires knowledge of LSTMs and CNNs.

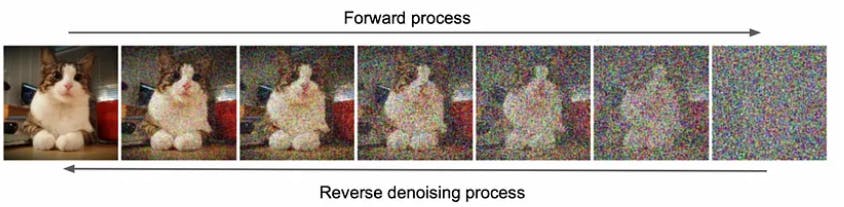

#9. MedSegDiff

The repository demonstrates the use of diffusion techniques for medical image segmentation.

Resource Format

It contains code implementations and a research paper.

Resource Contents

It gives an overview of the model architecture and contains the brain tumor segmentation dataset.

Topics Covered

- Model Structure: The repo explains the application of the diffusion method to segmentation problems.

- Examples: It contains examples for training the model on tumor and melanoma datasets.

Key Learnings

- The Diffusion Mechanism: You will learn how the diffusion technique works.

- Hyperparameter Tuning: The repo demonstrates a few hyperparameters you can adjust to fine-tune the model.
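To give a feel for the diffusion mechanism, the sketch below implements the generic forward (noising) step used by denoising diffusion models, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, in plain Python. It assumes the segmentation mask is the variable being noised and uses a linear noise schedule from 1e-4 to 0.02 over 1,000 steps. These choices and all function names are illustrative assumptions, not taken from the repo's code.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(mask, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [[signal * x + noise * rng.gauss(0.0, 1.0) for x in row] for row in mask]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)  # fixed seed so runs are repeatable

# Toy mask scaled to [-1, 1]: +1 = lesion, -1 = background.
clean_mask = [[1.0, -1.0], [-1.0, 1.0]]
slightly_noisy = add_noise(clean_mask, 10, alpha_bars, rng)
almost_pure_noise = add_noise(clean_mask, 999, alpha_bars, rng)
```

A diffusion-based segmenter is trained to reverse this process, recovering a clean mask from noise while conditioning on the input image.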

Proficiency Level

This is an intermediate-level repo requiring knowledge of diffusion methods.

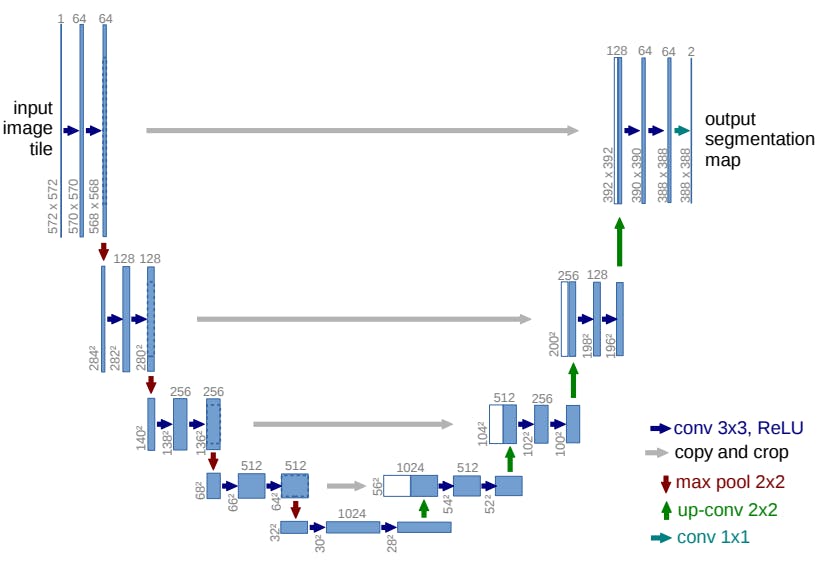

#10. U-Net

The repository is a Keras-based implementation of the U-Net architecture.

Resource Format

It contains the original training dataset, code, and a brief tutorial.

Resource Contents

The repo provides the link to the U-Net paper and contains a section that lists the dependencies and results.

Topics Covered

- U-Net Architecture: The research paper in the repo explains how the U-Net model works.

- Keras: The topic page has a section that gives an overview of the Keras library.

Key Learnings

- Data Augmentation: A key part of the U-Net training strategy is its heavy use of data augmentation, which lets the model learn from relatively few annotated images. The repo will help you learn how the framework augments medical data for enhanced training.
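The essential rule when augmenting segmentation data is that the image and its mask must receive exactly the same geometric transform. The plain-Python sketch below shows this with random flips as a simple stand-in. The original U-Net work is known for elastic deformations, and the `augment_pair` helper and toy data here are illustrative assumptions rather than the repo's code.

```python
import random


def hflip(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]


def vflip(grid):
    """Mirror a 2D grid top to bottom."""
    return [row[:] for row in grid[::-1]]


def augment_pair(image, mask, rng):
    """Apply the same random flips to an image and its mask."""
    if rng.random() < 0.5:
        image, mask = hflip(image), hflip(mask)
    if rng.random() < 0.5:
        image, mask = vflip(image), vflip(mask)
    return image, mask


# Toy grayscale image; the mask marks pixels brighter than 100.
image = [[10, 200], [30, 220]]
mask = [[0, 1], [0, 1]]

rng = random.Random(42)  # fixed seed so runs are repeatable
aug_image, aug_mask = augment_pair(image, mask, rng)
```

Because both arrays are flipped together, every mask pixel still describes the image pixel at the same position after augmentation.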

Proficiency Level

This is a beginner-level repo requiring basic knowledge of Python.

#11. SOTA-MedSeg

The repository is a detailed record of medical image segmentation challenges and winning models.

Medical Imaging Segmentation Methods

Resource Format

The repo comprises research papers, code, and segmentation challenges based on different anatomical structures.

Resource Contents

It mentions the winning models for each year from 2018 to 2023 and provides their performance results on multiple segmentation tasks.

Topics Covered

- Medical Image Segmentation: The repo explores models for segmenting brain, head, kidney, and neck tumors.

- Past Challenges: It lists older medical segmentation challenges.

Key Learnings

- Latest Trends in Medical Image Processing: The repo will help you learn about the latest AI models for segmenting anomalies in multiple anatomical regions.

Proficiency Level

This is an expert-level repo requiring in-depth medical knowledge.

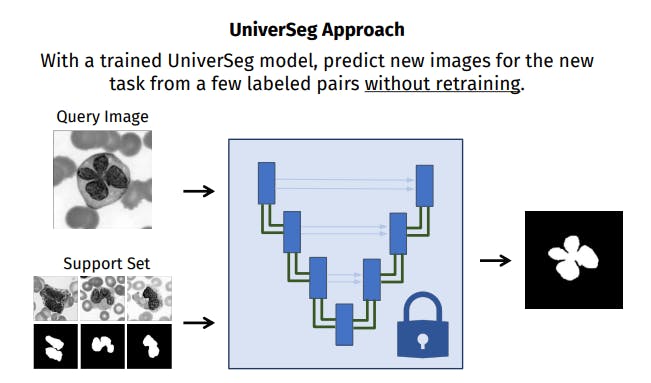

#12. UniverSeg

The repository introduces the Universal Medical Image Segmentation (UniverSeg) model that requires no fine-tuning for novel segmentation tasks (e.g. new biomedical domain, new image type, new region of interest, etc).

Resource Format

It contains the research paper and code for implementing the model.

Resource Contents

The research paper details the model architecture, and the repo provides Python code with an example dataset.

Topics Covered

- UniverSeg Development: The repo illustrates the inner workings of the UniverSeg model.

- Implementation Guidelines: A ‘Getting Started’ section will guide you through the implementation process.

Key Learnings

- Few-shot Learning: The model employs few-shot learning methods for quick adaptation to new tasks.
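The idea of adapting to a new task from a handful of labeled examples, without any fine-tuning, can be illustrated with a much simpler stand-in. The sketch below segments a query image by comparing each pixel with foreground and background intensity prototypes computed from a small support set. This nearest-prototype rule is not UniverSeg's architecture; the helper names and toy data are assumptions meant only to show the support-set interface.

```python
def mean(values):
    return sum(values) / len(values)


def build_prototypes(support_set):
    """Average intensity of foreground and background pixels in the support set."""
    foreground, background = [], []
    for image, mask in support_set:
        for image_row, mask_row in zip(image, mask):
            for pixel, label in zip(image_row, mask_row):
                (foreground if label else background).append(pixel)
    return mean(foreground), mean(background)


def segment_query(query, support_set):
    """Label each query pixel by its nearest prototype (1 = foreground)."""
    fg_proto, bg_proto = build_prototypes(support_set)
    return [
        [1 if abs(pixel - fg_proto) < abs(pixel - bg_proto) else 0 for pixel in row]
        for row in query
    ]


# Two labeled examples of a "new task": bright structures on a dark background.
support_set = [
    ([[0.9, 0.1], [0.8, 0.2]], [[1, 0], [1, 0]]),
    ([[0.2, 0.7], [0.1, 0.9]], [[0, 1], [0, 1]]),
]
query_image = [[0.15, 0.85], [0.95, 0.05]]
predicted_mask = segment_query(query_image, support_set)
```

Swapping in a different support set changes the task without retraining anything, which is the property that makes few-shot approaches attractive for new biomedical domains.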

Proficiency Level

This is a beginner-level repo requiring basic knowledge of few-shot learning.

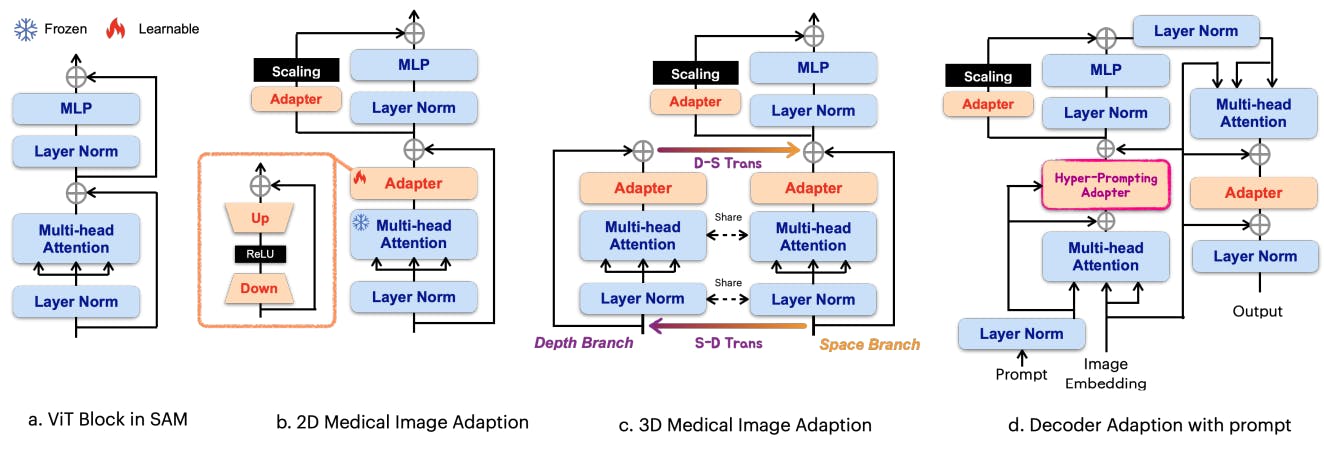

#13. Medical SAM Adapter

The repository introduces the Medical SAM Adapter (Med-SA), which fine-tunes the SAM architecture for medical-specific domains.

Resource Format

The repo contains a research paper, example datasets, and code for implementing Med-SA.

Resource Contents

The paper explains the architecture in detail, and the datasets relate to melanoma, abdominal, and optic-disc segmentation.

Topics Covered

- Model Architecture: The research paper in the repo covers a detailed explanation of how the model works.

- News: It shares a list of updates related to the model.

Key Learnings

- Vision Transformers (ViT): The model uses the ViT framework for image adaptation.

- Interactive Segmentation: You will learn how the model incorporates click prompts for model training.

Proficiency Level

The repo is an expert-level resource requiring an understanding of transformers.

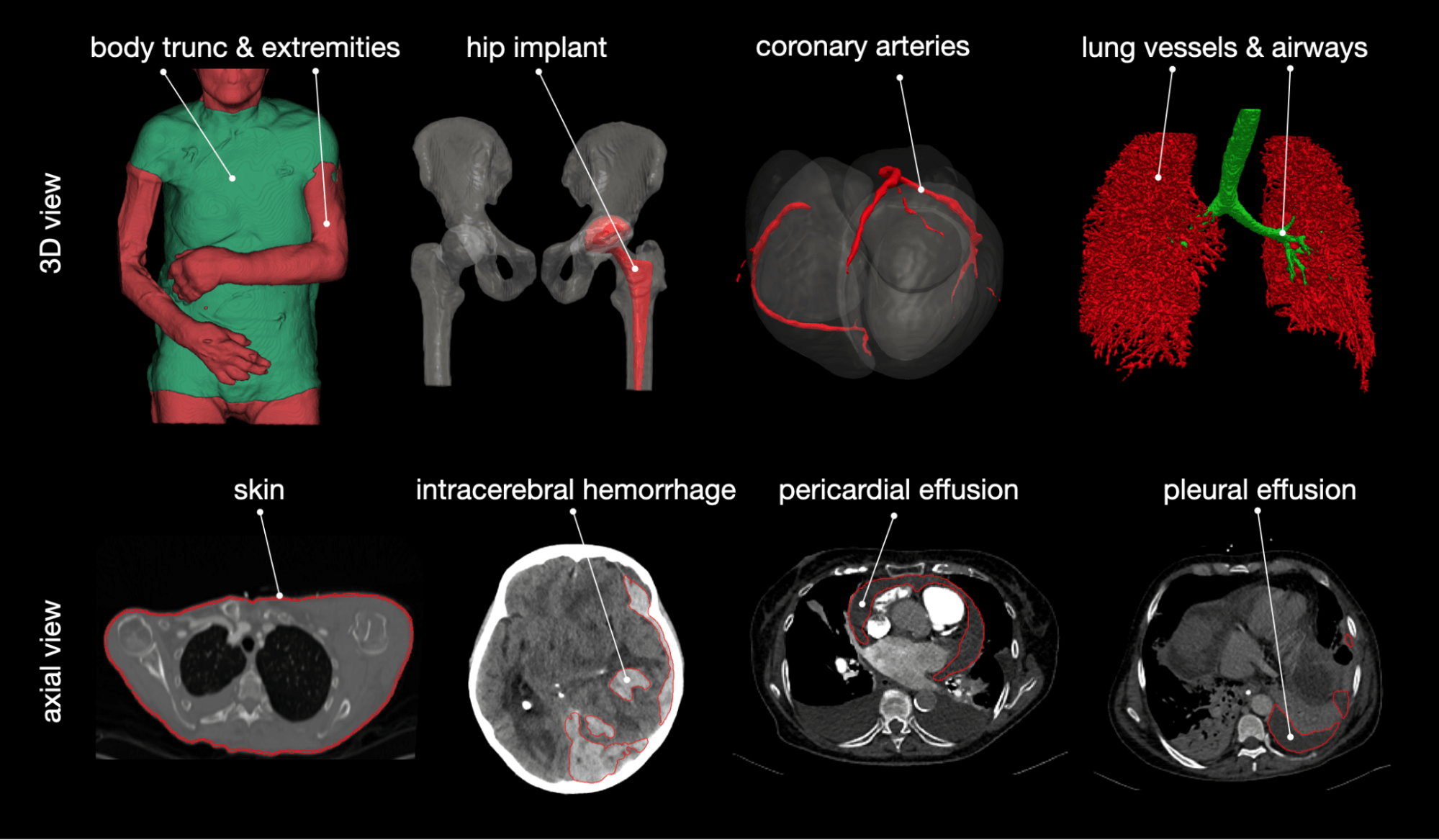

#14. TotalSegmentator

The repository introduces TotalSegmentator, a domain-specific medical segmentation model for segmenting CT images.

Resource Format

The repo provides a short installation guide, code files, and links to the research paper.

Resource Contents

The topic page lists suitable use cases, advanced settings, training validation details, a Python API, and a table with all the class names.

Topics Covered

- Total Segmentation Development: The paper discusses how the model works.

- Usage: It explains the sub-tasks the model can perform.

Key Learnings

- Implementation Using Custom Datasets: The repo teaches you how to apply the model to unique medical datasets.

- nnU-Net: The model uses nnU-Net, a semantic segmentation framework that automatically configures its preprocessing, network architecture, and training settings for a given dataset.

Proficiency Level

The repo is an intermediate-level resource requiring an understanding of the U-Net architecture.

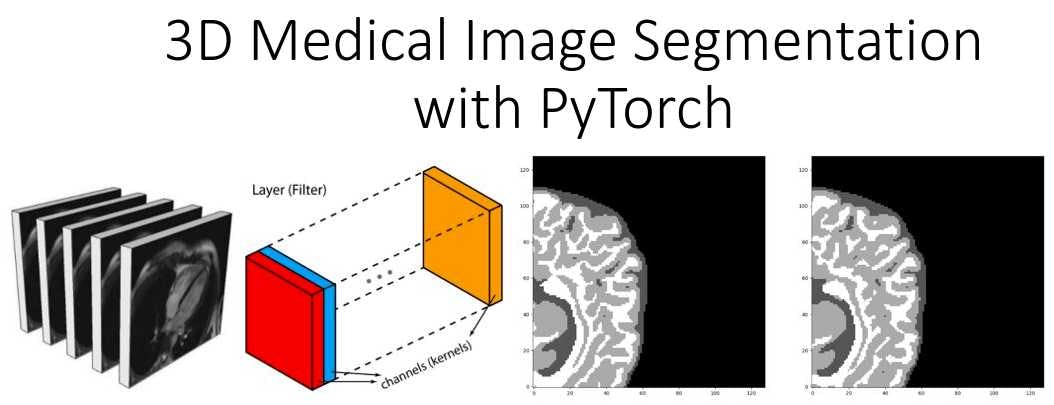

#15. Medical Zoo Pytorch

The repository implements a PyTorch-based library for 3D multi-modal medical image segmentation.

Implementing Image Segmentation in PyTorch

Resource Format

It contains the implementation code and research papers for the models featured in the library.

Resource Contents

The repo lists the implemented architectures and has a Quick Start guide with a demo in Colab.

Topics Covered

- 3D Segmentation Models: The library contains multiple models, including U-Net3D, V-net, U-Net, and MED3D.

- Image Data-loaders: It consists of data-loaders for fetching standard medical datasets.

Key Learnings

- Brain Segmentation Performance: The research paper compares the performance of implemented architectures on brain sub-region segmentation. This will help you identify the best model for brain segmentation.

- COVID-19 Segmentation: The library has a custom model for detecting COVID-19 cases. The implementation will help you classify COVID-19 patients from chest radiography images.

Proficiency Level

This is an expert-level repo requiring knowledge of several 3D segmentation models.

GitHub Repositories for Image Segmentation: Key Takeaways

While object detection and image classification models dominate the CV space, the recent rise in segmentation frameworks signals a new era for AI in various applications.

Below are a few points to remember regarding image segmentation:

- Medical Segmentation: Medical imaging is the most significant use case. Most segmentation models discussed above aim to segment complex medical images to detect anomalies.

- Few-shot Learning: Few-shot learning methods make it easier for experts to develop models for segmenting novel images.

- Transformer-based Architectures: The transformer architecture is becoming a popular framework for segmentation tasks due to its simplicity and higher processing speeds than traditional methods.

Image segmentation is a computer vision task that classifies each pixel in an image.

Autonomous driving, medical diagnosis, and virtual and augmented reality (VR and AR) are popular use cases for image segmentation.

The primary challenges include variability in image resolutions, occlusion in video frames, and shapes with unclear boundaries that are difficult to identify.

COCO, Cityscapes, PASCAL VOC, and ADE20K are popular benchmark datasets for image segmentation tasks.

Semantic segmentation identifies all pixels related to a specific object, while instance segmentation distinguishes between different instances of the same object.

Meta’s Segment Anything and the U-Net repositories are popular recommendations for image segmentation.

The accuracy and efficiency of segmentation models vary depending on the use case, training dataset, and model architecture. U-Net has high accuracy on biomedical datasets, while SAM performs better in zero-shot learning tasks.

Users can report issues, log pull requests, and ask questions about the content while seeking help from the wider developer community.

Encord supports a variety of image analysis and segmentation tools, which can be integrated into research workflows. Users can utilize commercial software like Amaris, along with open-source solutions, to conduct advanced segmentation analysis and visualization. This flexibility allows researchers to adopt the best tools that suit their specific needs.