Contents

Introduction to Multimodal AI

Overview of Multimodal Large Language Models (MLLMs)

Understanding MM1 Models

MM1 Model Experiments: Key Research Findings

Apple MM1 Model’s Features

Key Components of MM1

Ablation Study for MLLMs

Performance Evaluation of MM1 Models

Apple’s Ethical Guidelines for MM1

MM1 MLLM: Key Takeaways

Encord Blog

MM1: Apple’s Multimodal Large Language Models (MLLMs)


Written by

Akruti Acharya

What is MM1? MM1 is a family of large multimodal language models from Apple that combines text and image understanding. The family scales up to 30 billion parameters, achieves state-of-the-art pre-training metrics, and remains competitive after supervised fine-tuning. MM1 takes images and text as input and generates text, which makes it useful for multimodal tasks such as captioning and visual question answering. The family includes mixture-of-experts (MoE) variants alongside the dense models.

Introduction to Multimodal AI

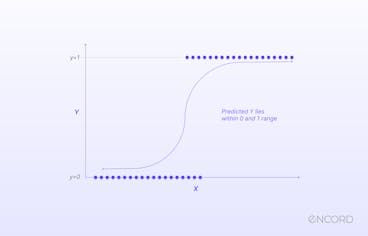

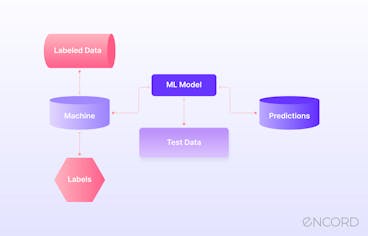

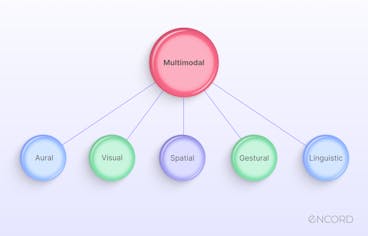

Multimodal AI models are a type of artificial intelligence model that can process and generate multiple types of data, such as text, images, and audio. These models are designed to understand the world in a way that is closer to how humans do, by integrating information from different modalities.

Multimodal AI models typically combine several components, each designed to process a specific type of data. For example, a multimodal AI model might use a convolutional neural network (CNN) to process visual data, a recurrent neural network (RNN) to process text data, and a transformer model to integrate the information from the CNN and the RNN.

The outputs of these networks are then combined, often using techniques such as concatenation or attention mechanisms, to produce a final output. This output can be used for a variety of tasks, such as classification, generation, or prediction.
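As a rough illustration of the concatenation approach described above, the following minimal sketch fuses two per-modality feature vectors and passes them through a single linear layer. The feature sizes, the random weights, and the function name `fuse_by_concatenation` are assumptions made for this example; they do not describe any particular model.

```python
import numpy as np


def fuse_by_concatenation(image_features, text_features, weights, bias):
    """Late fusion: join per-modality features, then apply one linear layer."""
    fused = np.concatenate([image_features, text_features], axis=-1)
    return fused @ weights + bias


rng = np.random.default_rng(0)
# Hypothetical sizes: a batch of 2 items, 8-dim image and 6-dim text features.
image_features = rng.normal(size=(2, 8))
text_features = rng.normal(size=(2, 6))
weights = rng.normal(size=(8 + 6, 3))  # maps the fused vector to 3 output classes
bias = np.zeros(3)

logits = fuse_by_concatenation(image_features, text_features, weights, bias)
print(logits.shape)  # (2, 3)
```

Attention-based fusion replaces the fixed concatenation with learned weights over the tokens of each modality, at the cost of more parameters and compute.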

Overview of Multimodal Large Language Models (MLLMs)

Multimodal Large Language Models (MLLMs) are generative AI systems that combine different types of information, such as text, images, video, audio, and sensor data, to understand and generate human-like language. They extend natural language processing (NLP) beyond text-only models by incorporating a wide range of modalities.

Here's an overview of key aspects of Multimodal Large Language Models:

Architecture

MLLMs typically extend architectures like Transformers, which have proven highly effective in processing sequential data such as text. Transformers consist of attention mechanisms that enable the model to focus on relevant parts of the input data. In MLLMs, additional layers and mechanisms are added to process and incorporate information from other modalities.

Integration of Modalities

MLLMs are designed to handle inputs from multiple modalities simultaneously. For instance, they can analyze both the text and the accompanying image in a captioning task or generate a response based on both text and audio inputs. This integration allows MLLMs to understand and generate content that is richer and more contextually grounded.

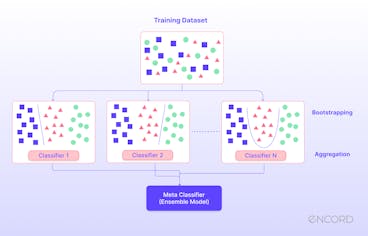

Pre-Training

Like their unimodal counterparts, MLLMs are often pre-trained on large datasets using self-supervised learning objectives. Pre-training involves exposing the model to vast amounts of multimodal data, allowing it to learn representations that capture the relationships between different modalities. Pre-training is typically followed by fine-tuning on specific downstream tasks.

State-of-the-Art Models

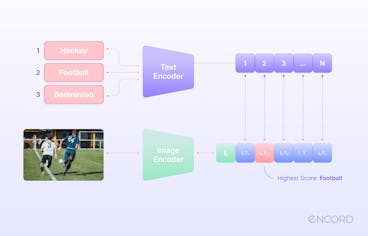

- CLIP (Contrastive Language-Image Pre-training): Developed by OpenAI, CLIP learns joint representations of images and text by contrasting semantically similar and dissimilar image-text pairs.

- GPT-4: Developed by OpenAI, GPT-4 showcases remarkable capabilities in complex reasoning and advanced coding, and performs well on multiple academic exams.

- Kosmos-1: Created by Microsoft, this MLLM is trained from scratch on web-scale multimodal corpora, including arbitrarily interleaved text and images, image-caption pairs, and text data.

- PaLM-E: Developed by Google, PaLM-E integrates different modalities to enhance language understanding.
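To make the contrastive idea behind CLIP more concrete, here is a minimal NumPy sketch of a symmetric image-text contrastive loss. It assumes that row i of each embedding matrix belongs to the same image-text pair, and the temperature value is an illustrative choice. It is a simplified sketch of the general technique, not OpenAI's training code.

```python
import numpy as np


def log_softmax(x, axis):
    """Numerically stable log-softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def clip_style_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss; row i of each matrix is a matching pair."""
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = image_emb @ text_emb.T / temperature  # pairwise cosine similarities
    idx = np.arange(logits.shape[0])
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_image_to_text + loss_text_to_image)


# Toy check: aligned pairs should score a lower loss than misaligned pairs.
embeddings = np.eye(4)
loss_matched = clip_style_loss(embeddings, embeddings)
loss_shuffled = clip_style_loss(embeddings, np.roll(embeddings, 1, axis=0))
print(loss_matched < loss_shuffled)  # True
```

The loss pulls matching image-text pairs together and pushes all other pairs in the batch apart, which is why larger batches give the model more negatives to contrast against.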

Understanding MM1 Models

MM1 represents a significant advancement in the domain of Multimodal Large Language Models (MLLMs), demonstrating state-of-the-art performance in pre-training metrics and competitive results in various multimodal benchmarks. The development of MM1 stems from a meticulous exploration of architecture components and data choices, aiming to distill essential design principles for building effective MLLMs.

MM1 Model Experiments: Key Research Findings

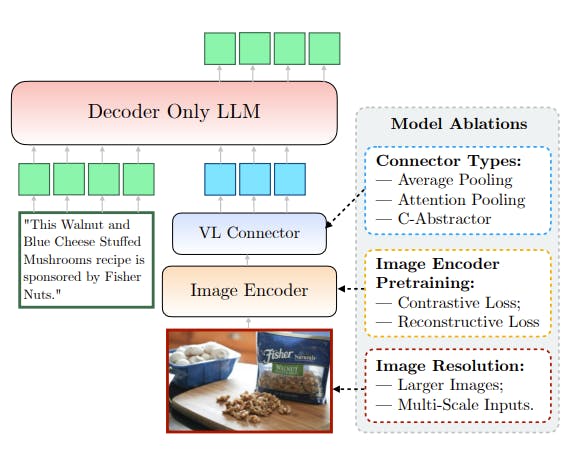

Architecture Components

- Image Encoder: The image encoder's design, along with factors such as image resolution and token count, significantly impacts MM1's performance. Through careful ablations, it was observed that optimizing the image encoder contributes substantially to MM1's capabilities.

- Vision-Language Connector: The connector passes visual features from the image encoder to the language model. In the ablations, its design mattered comparatively little next to the image encoder, image resolution, and image token count.

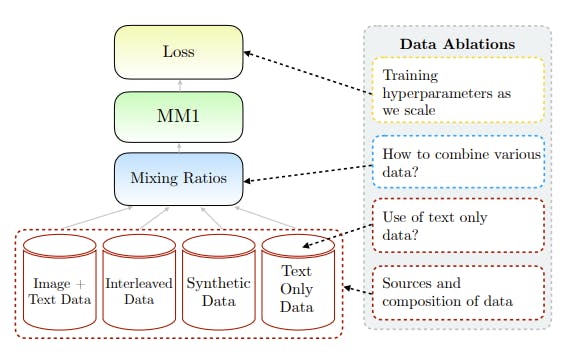

Data Choices

- Pre-training Data: MM1 leverages a diverse mix of image-caption, interleaved image-text, and text-only data for pre-training. This combination proved pivotal in achieving state-of-the-art few-shot results across multiple benchmarks. The study highlights the importance of different types of pre-training data for various tasks, with caption data being particularly impactful for zero-shot performance.

- Supervised Fine-Tuning (SFT): The effectiveness of pre-training data choices was validated through SFT, where capabilities and modeling decisions acquired during pre-training were retained, leading to competitive performance across evaluations and benchmarks.

Performance

- In-Context Learning Abilities: The MM1 model exhibits exceptional in-context learning abilities, particularly in its largest 30 billion parameter configuration. This version of the model can perform multi-step reasoning over multiple images using few-shot “chain-of-thought” prompting.

- Model Scale: MM1's scalability is demonstrated through the exploration of larger LLMs, ranging from 3B to 30B parameters, and the investigation of mixture-of-experts (MoE) models. This scalability contributes to MM1's adaptability to diverse tasks and datasets, further enhancing its performance and applicability.

- Performance: The MM1 models, which include both dense models and mixture-of-experts (MoE) variants, achieve competitive performance after supervised fine-tuning on a range of established multimodal benchmarks.

Apple MM1 Model’s Features

In-Context Predictions

The Apple MM1 model excels at making predictions within the context of a given input. By considering the surrounding information, it can generate more accurate and contextually relevant responses. For instance, when presented with a partial sentence or incomplete query, the MM1 model can intelligently infer the missing parts and provide meaningful answers.

Multi-Image Reasoning

The MM1 model demonstrates impressive capabilities in reasoning across multiple images. It can analyze and synthesize information from various visual inputs, allowing it to make informed decisions based on a broader context. For example, when evaluating a series of related images (such as frames from a video), the MM1 model can track objects, detect changes, and understand temporal relationships.

Chain-of-Thought Reasoning

One of the standout features of the MM1 model is chain-of-thought reasoning: it works through a problem as a sequence of intermediate steps before giving a final answer. With few-shot chain-of-thought prompting, the largest model can carry out multi-step reasoning over several images, connecting what it observes in each one into a consistent conclusion.

Few-Shot Learning with Instruction Tuning

The MM1 model leverages few-shot learning techniques, enabling it to learn from a small amount of labeled data. Additionally, it fine-tunes its performance based on specific instructions, adapting to different tasks efficiently. For instance, if provided with only a handful of examples for a new task, the MM1 model can generalize and perform well without extensive training data.
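A few-shot multimodal prompt can be pictured as an interleaved sequence of images and text: a handful of solved examples followed by the unsolved query. The sketch below only builds such a sequence. The `ImageRef` type, the file names, and the "Question/Answer" template are hypothetical and are not part of any MM1 interface.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class ImageRef:
    """Hypothetical placeholder for an image that a model would encode."""
    path: str


Segment = Union[str, ImageRef]


def build_few_shot_prompt(
    examples: List[Tuple[str, str, str]], query_image: str, question: str
) -> List[Segment]:
    """Interleave (image, question, answer) examples, then append the open query."""
    segments: List[Segment] = []
    for image_path, example_question, example_answer in examples:
        segments.append(ImageRef(image_path))
        segments.append(f"Question: {example_question} Answer: {example_answer}")
    segments.append(ImageRef(query_image))
    segments.append(f"Question: {question} Answer:")
    return segments


# Two solved examples (2-shot) followed by the query the model should complete.
prompt = build_few_shot_prompt(
    examples=[
        ("car.jpg", "What color is the car?", "Red"),
        ("dog.jpg", "What animal is shown?", "A dog"),
    ],
    query_image="bike.jpg",
    question="What vehicle is shown?",
)
print(len(prompt))  # 6
```

With zero examples the same function produces a zero-shot prompt, which is how zero-shot and few-shot settings differ at inference time.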

Visual Question Answering (VQA)

The MM1 model can answer questions related to visual content through Visual Question Answering (VQA). Given an image and a question, it generates accurate and context-aware answers, demonstrating its robust understanding of visual information. For example, when asked, “What is the color of the car in the picture?” the MM1 model can analyze the image and provide an appropriate response.

Captioning

When presented with an image, the MM1 model can generate descriptive captions. Its ability to capture relevant details and convey them in natural language makes it valuable for image captioning tasks. For instance, if shown a picture of a serene mountain landscape, the MM1 model might generate a caption like, “Snow-capped peaks against a clear blue sky.”

For more information, read the paper published on arXiv by Apple researchers: MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training.

Key Components of MM1

Transformer Architecture

The transformer architecture serves as the backbone of MM1.

- Self-Attention Mechanism: Transformers use self-attention to process sequences of data. This mechanism allows them to weigh the importance of different elements within a sequence, capturing context and relationships effectively.

- Layer Stacking: Multiple layers of self-attention are stacked to create a deep neural network. Each layer refines the representation of input data.

- Positional Encoding: Transformers incorporate positional information, ensuring they understand the order of elements in a sequence.
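The three ideas above can be sketched in a few lines of NumPy. The example uses single-head scaled dot-product attention and the sinusoidal positional encoding from the original Transformer paper. Both are generic textbook forms chosen for illustration; the article does not state which positional scheme MM1 uses, and all sizes and weights here are arbitrary.

```python
import numpy as np


def sinusoidal_positions(seq_len, d_model):
    """Sinusoidal positional encoding (d_model is assumed to be even)."""
    positions = np.arange(seq_len)[:, None]
    dims = np.arange(0, d_model, 2)[None, :]
    angles = positions / np.power(10000.0, dims / d_model)
    encoding = np.zeros((seq_len, d_model))
    encoding[:, 0::2] = np.sin(angles)
    encoding[:, 1::2] = np.cos(angles)
    return encoding


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model = 5, 8
tokens = rng.normal(size=(seq_len, d_model)) + sinusoidal_positions(seq_len, d_model)

# Layer stacking: feed the output of one attention layer into the next.
hidden = tokens
for _ in range(2):
    w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(3))
    hidden, attention_weights = self_attention(hidden, w_q, w_k, w_v)

print(hidden.shape)  # (5, 8)
```

Real transformer layers add multiple heads, residual connections, normalization, and feed-forward blocks around this core; a decoder-only language model also applies a causal mask so each token attends only to earlier positions.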

Multimodal Pre-Training Data

MM1 benefits from a diverse training dataset:

- Image-Text Pairs: These pairs directly connect visual content (images) with corresponding textual descriptions. The model learns to associate the two modalities.

- Interleaved Documents: Combining images and text coherently allows MM1 to handle multimodal inputs seamlessly.

- Text-Only Data: Ensuring robust language understanding, even when dealing with text alone.

Image Encoder

The image encoder is pivotal for MM1’s performance:

- Feature Extraction: The image encoder processes visual input (images) and extracts relevant features. These features serve as the bridge between the visual and textual modalities.

- Resolution and Token Count: Design choices related to image resolution and token count significantly impact MM1’s ability to handle visual information.
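The link between resolution and token count is simple arithmetic for a ViT-style encoder: the image is cut into square patches, and each patch becomes one token. The helper below is a small sketch of that relationship; the function name is an assumption for this example.

```python
def patch_token_count(image_size: int, patch_size: int) -> int:
    """Number of patch tokens a ViT-style encoder emits for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side * patches_per_side


print(patch_token_count(224, 14))  # 256
print(patch_token_count(336, 14))  # 576
```

Because the count grows with the square of the resolution, higher-resolution inputs quickly increase the sequence length the language model has to process.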

Vision-Language Connector

The vision-language connector facilitates communication between textual and visual representations:

- Cross-Modal Interaction: It enables MM1 to align information from both modalities effectively.

- Joint Embeddings: The connector generates joint embeddings that capture shared semantics.
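One simple way to picture a connector is as a pooling step that reduces the number of image tokens, followed by a projection into the language model's embedding space. The sketch below uses plain average pooling as a stand-in; it is not the C-Abstractor connector that the article mentions, and the grid size, feature widths, and random projection are illustrative assumptions.

```python
import numpy as np


def average_pool_connector(patch_tokens, grid_size, pool, projection):
    """Pool a grid of patch tokens, then project them to the LLM embedding width."""
    num_tokens, dim = patch_tokens.shape
    if num_tokens != grid_size * grid_size or grid_size % pool != 0:
        raise ValueError("tokens must form a square grid divisible by pool")
    out = grid_size // pool
    # View the token sequence as an (out, pool, out, pool, dim) grid of windows.
    grid = patch_tokens.reshape(out, pool, out, pool, dim)
    pooled = grid.mean(axis=(1, 3)).reshape(out * out, dim)
    return pooled @ projection


rng = np.random.default_rng(0)
patch_tokens = rng.normal(size=(24 * 24, 16))  # 576 tokens, hypothetical 16-dim features
projection = rng.normal(size=(16, 32))         # hypothetical LLM embedding width of 32

image_tokens = average_pool_connector(patch_tokens, grid_size=24, pool=2, projection=projection)
print(image_tokens.shape)  # (144, 32)
```

Pooling 2×2 windows turns a 24×24 grid of 576 patch tokens into 144 image tokens, which shows how a connector can control the token budget independently of the encoder.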

Ablation Study for MLLMs

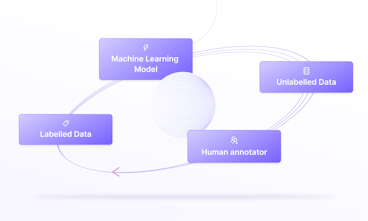

Building performant Multimodal Large Language Models (MLLMs) is an empirical process that involves carefully exploring various design decisions related to architecture, data, and training procedures. Here, the authors present a detailed ablation study conducted to identify optimal configurations for constructing a high-performing model, referred to as MM1.

The ablations are performed along three major axes:

MM1 Model Ablations

- Different pre-trained image encoders are investigated, along with various methods of connecting Large Language Models (LLMs) with these encoders.

- The architecture exploration encompasses the examination of the image encoder pre-training objective, image resolution, and the design of the vision-language connector.

MM1 Data Ablations

- Various types of data and their relative mixture weights are considered, including captioned images, interleaved image-text documents, and text-only data.

- The impact of different data sources on zero-shot and few-shot performance across multiple captioning and Visual Question Answering (VQA) tasks is evaluated.
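Mixture weights can be thought of as sampling probabilities over data sources. The following sketch draws training examples from three sources according to such weights. The weights shown are placeholders chosen for illustration, not values reported in this article, and the source names are hypothetical labels.

```python
import random
from collections import Counter


def sample_sources(mixture, num_samples, seed=0):
    """Draw a data source name for each training example according to the weights."""
    rng = random.Random(seed)
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=num_samples)


# Placeholder weights for illustration only.
mixture = {
    "captioned_images": 0.4,
    "interleaved_documents": 0.4,
    "text_only": 0.2,
}

draws = sample_sources(mixture, num_samples=10_000)
counts = Counter(draws)
for name in mixture:
    print(name, counts[name] / len(draws))
```

A data ablation then amounts to changing these weights, retraining, and comparing zero-shot and few-shot scores across the resulting models.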

Training Procedure Ablations

- The training procedure is explored, including hyperparameters and which parts of the model to train at different stages.

- Two types of losses are considered: contrastive losses (e.g., CLIP-style models) and reconstructive losses (e.g., AIM), with their effects on downstream performance examined.

Empirical Setup

- A smaller base configuration of the MM1 model is used for ablations, allowing for efficient assessment of model performance.

- The base configuration includes an Image Encoder (ViT-L/14 model trained with CLIP loss on DFN-5B and VeCap-300M datasets), Vision-Language Connector (C-Abstractor with 144 image tokens), Pre-training Data (mix of captioned images, interleaved image-text documents, and text-only data), and a 1.2B transformer decoder-only Language Model.

- Zero-shot and few-shot (4- and 8-shot) performance on various captioning and VQA tasks are used as evaluation metrics.

MM1 Ablation Study: Key Findings

- Image resolution, model size, and training data composition are identified as crucial factors affecting model performance.

- The number of visual tokens and image resolution significantly impact the performance of the Vision-Language Connector, while the type of connector has a minimal effect.

- Interleaved data is crucial for few-shot and text-only performance, while captioning data enhances zero-shot performance.

- Text-only data helps improve few-shot and text-only performance, contributing to better language understanding capabilities.

- Careful mixture of image and text data leads to optimal multimodal performance while retaining strong text performance.

- Synthetic caption data (VeCap) provides a notable boost in few-shot learning performance.

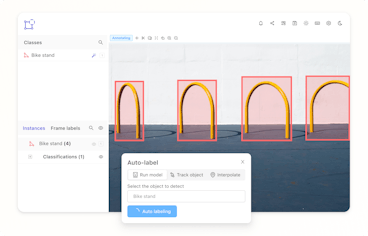

Performance Evaluation of MM1 Models

The performance evaluation of MM1 models encompasses several key aspects, including scaling via Mixture-of-Experts (MoE), supervised fine-tuning (SFT) experiments, impact of image resolution, pre-training effects, and qualitative analysis.

Scaling via Mixture-of-Experts (MoE)

- MM1 explores scaling the dense model by incorporating more experts in the Feed-Forward Network (FFN) layers of the language model.

- Two MoE models are designed: 3B-MoE with 64 experts and 7B-MoE with 32 experts, utilizing top-2 gating and router z-loss terms for training stability.

- The MoE models demonstrate improved performance over their dense counterparts across various benchmarks, indicating the potential of MoE for further scaling.
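Top-2 gating and the router z-loss can be sketched as follows. Each token is sent to its two highest-scoring experts, their outputs are mixed by renormalized router probabilities, and the z-loss penalizes large router logits to keep training stable. The single-layer `tanh` experts, the tiny sizes, and the function names are simplifying assumptions for this example and do not reproduce MM1's implementation.

```python
import numpy as np


def top2_route(router_logits):
    """Pick the two highest-probability experts per token and renormalize their gates."""
    shifted = router_logits - router_logits.max(axis=-1, keepdims=True)
    probs = np.exp(shifted)
    probs = probs / probs.sum(axis=-1, keepdims=True)
    top2 = np.argsort(-probs, axis=-1)[:, :2]
    top2_probs = np.take_along_axis(probs, top2, axis=-1)
    gates = top2_probs / top2_probs.sum(axis=-1, keepdims=True)
    return top2, gates


def router_z_loss(router_logits):
    """Mean squared log-sum-exp of the router logits."""
    peak = router_logits.max(axis=-1, keepdims=True)
    log_z = peak.squeeze(-1) + np.log(np.exp(router_logits - peak).sum(axis=-1))
    return float(np.mean(log_z ** 2))


def moe_layer(x, router_weights, expert_weights):
    """Route each token to two experts (toy single-layer experts) and mix the outputs."""
    logits = x @ router_weights  # shape: (tokens, experts)
    experts, gates = top2_route(logits)
    out = np.zeros_like(x)
    for token in range(x.shape[0]):
        for slot in range(2):
            expert = experts[token, slot]
            out[token] += gates[token, slot] * np.tanh(x[token] @ expert_weights[expert])
    return out, router_z_loss(logits)


rng = np.random.default_rng(0)
num_tokens, d_model, num_experts = 6, 8, 4  # tiny illustrative sizes
x = rng.normal(size=(num_tokens, d_model))
router_weights = rng.normal(size=(d_model, num_experts))
expert_weights = rng.normal(size=(num_experts, d_model, d_model)) * 0.1

output, z_loss = moe_layer(x, router_weights, expert_weights)
print(output.shape)  # (6, 8)
```

Because only two experts run per token, the total parameter count can grow with the number of experts while the compute per token stays close to that of a dense layer.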

Supervised Fine-Tuning Experiments

- Supervised Fine-Tuning (SFT) is performed on top of the pre-trained MM1 models using a diverse set of datasets, including instruction-response pairs, academic task-oriented vision-language datasets, and text-only data.

- MM1 models exhibit competitive performance across 12 benchmarks, showing particularly strong results on tasks such as VQAv2, TextVQA, ScienceQA, and newer benchmarks like MMMU and MathVista.

- The models maintain multi-image reasoning capabilities even during SFT, enabling few-shot chain-of-thought reasoning.

Impact of Image Resolution

- Higher image resolution leads to improved performance, supported by methods such as positional embedding interpolation and sub-image decomposition.

- MM1 achieves a relative performance increase of 15% by supporting an image resolution of 1344×1344 pixels, compared to a baseline model with an image resolution of 336×336 pixels.
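Sub-image decomposition can be illustrated with a generic tiling routine that splits a high-resolution image into encoder-sized crops. This is a sketch of the general idea only: the article does not describe the exact scheme MM1 uses or how it is combined with positional embedding interpolation, and the 336-pixel tile size is an assumption based on the baseline resolution mentioned above.

```python
import numpy as np


def decompose_into_sub_images(image, tile_size=336):
    """Split an (H, W, C) image into non-overlapping square tiles, row by row."""
    height, width = image.shape[:2]
    if height % tile_size or width % tile_size:
        raise ValueError("image sides must be multiples of tile_size")
    tiles = []
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            tiles.append(image[top:top + tile_size, left:left + tile_size])
    return tiles


image = np.zeros((1344, 1344, 3), dtype=np.uint8)  # blank stand-in for a real photo
tiles = decompose_into_sub_images(image)
print(len(tiles))       # 16
print(tiles[0].shape)   # (336, 336, 3)
```

Each tile can then be encoded separately at the encoder's native resolution, at the cost of proportionally more image tokens for the language model.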

Pre-Training Effects

- Large-scale multimodal pre-training significantly contributes to the model's performance improvement over time, showcasing the importance of pre-training data quantity.

- MM1 demonstrates strong in-context few-shot learning and multi-image reasoning capabilities, indicating the effectiveness of large-scale pre-training for enhancing model capabilities.

Qualitative Analysis

- Qualitative examples provided in the evaluation offer further insights into MM1's capabilities, including single-image and multi-image reasoning, as well as few-shot prompting scenarios.

- These examples highlight the model's ability to understand and generate contextually relevant responses across various tasks and input modalities.

Apple’s Ethical Guidelines for MM1

- Privacy and Data Security: Apple places utmost importance on user privacy. MM1 models are designed to respect user data and adhere to strict privacy policies. Any data used for training is anonymized and aggregated.

- Bias Mitigation: Apple actively works to reduce biases in MM1 models. Rigorous testing and monitoring are conducted to identify and rectify any biases related to gender, race, or other sensitive attributes.

- Transparency: Apple aims to be transparent about the capabilities and limitations of MM1. Users should have a clear understanding of how the model works and what it can and cannot do.

- Fairness: MM1 is trained on diverse data, but Apple continues to improve fairness by addressing underrepresented groups and ensuring equitable outcomes.

- Safety and Harm Avoidance: MM1 is designed to avoid harmful or unsafe behavior. It refrains from generating content that could cause harm, promote violence, or violate ethical norms.

- Human Oversight: Apple maintains a strong human-in-the-loop approach. MM1 models are continuously monitored, and any problematic outputs are flagged for review.

MM1 MLLM: Key Takeaways

- Multimodal Integration: MM1 combines textual and visual information, achieving impressive performance.

- Ablation Study Insights: Image encoder matters, connector less so. Data mix is crucial.

- Scaling Up MM1: Up to 30 billion parameters, strong pre-training metrics, competitive fine-tuning.

- Ethical Guidelines: Privacy, fairness, safety, and human oversight are priorities.


- The MM1 research used a diverse set of data sources: a mix of image-caption pairs, interleaved image-text documents, and text-only data. This careful combination was crucial for achieving state-of-the-art few-shot results across multiple benchmarks compared to other published pre-training results.

- To build performant Multimodal Large Language Models (MLLMs) like MM1, it’s essential to judiciously combine different types of data. In MM1’s case, the mixture of image-caption, interleaved image-text, and text-only data played a pivotal role in achieving impressive results.

- Yes, the lessons learned during pre-training do indeed transfer to supervised fine-tuning (SFT). After large-scale pre-training, MM1 maintains competitive performance on a range of established multimodal benchmarks.

- The research paper does not explicitly delve into environmental considerations for MM1. However, it’s essential to recognize that large-scale models like MM1 consume significant computational resources during training and inference. Researchers and practitioners need to be mindful of the environmental impact when deploying such models.

- MM1, with its up-to-30B-parameter family of multimodal models (including dense models and mixture-of-experts variants), achieves state-of-the-art pre-training metrics. After supervised fine-tuning, it remains competitive across various multimodal benchmarks.

- The research paper does not explicitly address data privacy and security aspects related to MM1. However, like any large-scale language model, MM1’s deployment should adhere to best practices regarding data privacy, user consent, and secure handling of sensitive information.

Related blogs

Meta’s Llama 3.1 Explained

Meta has released Llama 3.1, an open-source AI model that rivals the best closed-source models like OpenAI’s GPT-4o, Anthropic’s Claude 3, and Google Gemini in flexibility, control, and capabilities. This release marks a pivotal moment in democratizing AI development, offering advanced features like expanded context length and multilingual support. All versions of Llama 3.1 8B, 70B, and 405B are powerful models. With its state-of-the-art capabilities, it can unlock new possibilities in synthetic data generation, model distillation, and beyond. In this blog post, we'll explore the technical advancements, practical applications, and broader implications of Llama 3.1. Overview of Llama 3.1 Llama 3.1 405B is a frontier-level model designed to push the boundaries of what's possible with generative AI. It offers a context length of up to 128K tokens and supports eight languages, making it incredibly versatile. The model's capabilities in general knowledge, math, tool use, and multilingual translation are state-of-the-art, rivaling the best closed-source models available today. Llama 3.1 also introduces significant improvements in synthetic data generation and model distillation, paving the way for more efficient AI development and deployment. The Llama 3.1 collection also includes upgraded variants of the 8B and 70B models, which boast enhanced reasoning capabilities and support for advanced use cases such as long-form text summarization, multilingual conversational agents, and coding assistants. Meta's focus on openness and innovation ensures that these models are available for download and development on various platforms, providing a robust ecosystem for AI advancement. Overview of Previous Llama Models Llama 1 Released in early 2023, Llama 1 was Meta AI’s initial foray into large language models with up to 70 billion parameters. It laid the groundwork for accessible and customizable LLM models, emphasizing transparency and broad usability. 
Llama 2 Launched later in 2023, Llama 2 improved upon its predecessor with enhanced capabilities and larger models, reaching up to 70 billion parameters. It introduced better performance in natural language understanding and generation, making it a versatile tool for developers and researchers. Read more about it in our Llama 2 explainer blog. Importance of Openness in AI Meta’s latest release, Llama 3.1 405B, underscores the company’s unwavering commitment to open-source AI. In a letter, Mark Zuckerberg highlighted the numerous benefits of open-source AI, emphasizing how it democratizes access to advanced technology and ensures that power is not concentrated in the hands of a few. Advantages of Open-Source Models Unlike closed models, open-source model weights are fully accessible for download, allowing developers to tailor the model to their specific needs. This flexibility extends to training on new datasets, conducting, additional fine-tuning, and developing models invarious environments - whether in the cloud, on-premise, or even locally on laptop- without the need to share the data with the providers. This level of customization allows developers to fully harness the power of generative AI, making it more versatile and impactful. While some argue that closed models are more cost-effective, Llama 3.1 models offer some of the lowest cost per token in the industry, according to testing by Artificial Analysis. Read more about Meta’s commitment to open-source AI in Mark Zuckerberg’s letter Open Source AI is the Path Forward. . Technical Highlights of Llama 3.1 Model Specifications Meta Llama 3.1 is the most advanced open-source AI model to date. With a staggering 405 billion parameters, it is designed to handle complex tasks with remarkable efficiency. The model leverages a standard decoder-only transformer architecture with minor adaptations to maximize training stability and scalability. 
Trained on over 15 trillion tokens using 16,000 H100 GPUs, Llama 3.1 405B achieves superior performance and versatility. Performance and Capabilities Llama 3.1 405B sets a new benchmark in AI performance. Evaluated on over 150 datasets, it excels in various tasks, including general knowledge, steerability, math, tool use, and multilingual translation. Extensive human evaluations reveal that Llama 3.1 is competitive with leading models like GPT-4, GPT-4o, and Claude 3.5 Sonnet, demonstrating its state-of-the-art capabilities across a range of real-world scenarios. Source Source Multilingual and Extended Context Length One of the standout features of Llama 3.1 is its support for an expanded context length of up to 128K tokens. This significant increase enables the model to handle long-form content, making it ideal for applications such as comprehensive text summarization and in-depth conversations. Llama 3.1 also supports eight languages, enhancing its utility for multilingual applications and making it a powerful tool for global use. Model Architecture and Training Llama 3.1 uses a standard decoder-only transformer model architecture, optimized for large-scale training. The iterative post-training procedure, involving supervised fine-tuning and direct preference optimization, ensures high-quality synthetic data generation and improved performance across capabilities. By enhancing both the quantity and quality of pre- and post-training data, Llama 3.1 achieves superior results, adhering to scaling laws that predict better performance with increased model size. Source To support large-scale production inference, Llama 3.1 models are quantized from 16-bit (BF16) to 8-bit (FP8) numerics, reducing compute requirements and enabling efficient deployment within a single server node. Instruction and Chat Fine-Tuning Llama 3.1 405B excels in detailed instruction-following and chat interactions, thanks to multiple rounds of alignment on top of the pre-trained model. 
This involves Supervised Fine-Tuning (SFT), Rejection Sampling (RS), and Direct Preference Optimization (DPO), with synthetic data generation playing a key role. The model undergoes rigorous data processing to filter and balance the fine-tuning data, ensuring high-quality responses across all capabilities, even with the extended 128K context window. Read the paper: The Llama 3 Herd of Models. Real-World Applications of Llama 3.1 Llama 3.1’s advanced capabilities make it suitable for a wide range of applications, from real-time and batch inference to supervised fine-tuning and continual pre-training. It supports advanced workflows such as Retrieval-Augmented Generation (RAG) and function calling, offering developers robust tools to create innovative solutions. Some of the possible applications include: Healthcare: Llama 3.1’s multilingual support and extended context length are particularly beneficial in the medical field. AI models built on Llama 3.1 can assist in clinical decision-making by providing detailed analysis and recommendations based on extensive medical literature and patient data. For instance, a healthcare non-profit in Brazil has utilized Llama to streamline patient information management, improving communication and care coordination. Education: In education, Llama 3.1 can serve as an intelligent tutor, offering personalized learning experiences to students. Its ability to understand and generate long-form content makes it perfect for creating comprehensive study guides and providing detailed explanations on complex topics. An AI study buddy built with Llama and integrated into platforms like WhatsApp and Messenger showcases how it can support students in their learning journeys. Customer Service: The model’s enhanced reasoning capabilities and multilingual support can greatly improve customer service interactions. 
Llama 3.1 can be deployed as a conversational agent that understands and responds to customer inquiries in multiple languages, providing accurate and contextually appropriate responses, thereby enhancing user satisfaction and efficiency. Synthetic Data Generation: One of the standout features of Llama 3.1 is its ability to generate high-quality synthetic data. This can be used to train smaller models, perform simulations, and create datasets for various research purposes. Model Distillation: Llama 3.1 supports advanced model distillation techniques, allowing developers to create smaller, more efficient models without sacrificing performance. This capability is particularly useful for deploying AI on devices with limited computational resources, making high-performance AI accessible in more scenarios. Multilingual Conversational Agents: With support for eight languages and an extended context window, Llama 3.1 is ideal for building multilingual conversational agents. These chatbots can handle complex interactions, maintain context over long conversations, and provide accurate translations, making them valuable tools for global businesses and communication platforms. Building with Llama 3.1 Getting Started For developers looking to implement Llama 3.1 right away, Meta provides a comprehensive ecosystem that supports various development workflows. Whether you are looking to implement real-time inference, perform supervised fine-tuning, or generate synthetic data, Llama 3.1 offers the tools and resources needed to get started quickly. Accessibility Llama 3.1 models are available for download on Meta’s platform and Hugging Face, ensuring easy access for developers. Additionally, the models can be run in any environment—cloud, on-premises, or local—without the need to share data with Meta, providing full control over data privacy and security. Read the official documentation for Llama 3.1. You can also find the new Llama in Github and HuggingFace. 
Partner Ecosystem Meta’s robust partner ecosystem includes AWS, NVIDIA, Databricks, Groq, Dell, Azure, Google Cloud, and Snowflake. These partners offer services and optimizations that help developers leverage the full potential of Llama 3.1, from low-latency inference to turnkey solutions for model distillation and Retrieval-Augmented Generation (RAG). Source Advanced Workflows and Tools Meta’s Llama ecosystem is designed to support advanced AI development workflows, making it easier for developers to create and deploy applications. Synthetic Data Generation: With built-in support for easy-to-use synthetic data generation, developers can quickly produce high-quality data for training and fine-tuning smaller models. This capability accelerates the development process and enhances model performance. Model Distillation: Meta provides clear guidelines and tools for model distillation, enabling developers to create smaller, efficient models from the 405B parameter model. This process helps optimize performance while reducing computational requirements. Retrieval-Augmented Generation (RAG): Llama 3.1 supports RAG workflows, allowing developers to build applications that combine retrieval-based approaches with generative models. This results in more accurate and contextually relevant outputs, enhancing the overall user experience. Function Calling and Real-Time Inference: The model’s capabilities extend to real-time and batch inference, supporting various use cases from interactive applications to large-scale data processing tasks. This flexibility ensures that developers can build applications that meet their specific needs. Community and Support Developers can access resources, tutorials, and community forums to share knowledge and best practices. Community Projects: Meta collaborates with key community projects like vLLM, TensorRT, and PyTorch to ensure that Llama 3.1 is optimized for production deployment. 
These collaborations help developers get the most out of the model, regardless of their deployment environment.

Safety and Security: To promote responsible AI use, Meta has introduced new security and safety tools, including Llama Guard 3 and Prompt Guard. These tools help developers build applications that adhere to best practices in AI safety and ethical considerations.

Key Highlights of Llama 3.1

Massive Scale and Advanced Performance: The 405B version boasts 405 billion parameters and was trained on over 15 trillion tokens, delivering top-tier performance across various tasks.

Extended Context and Multilingual Capabilities: Supports up to 128K tokens for comprehensive content generation and handles eight languages, enhancing global application versatility.

Innovative Features: Enables synthetic data generation and model distillation, allowing for the creation of efficient models and robust training datasets.

Comprehensive Ecosystem Support: Available for download on Meta’s platform and Hugging Face, with deployment options across cloud, on-premises, and local environments, supported by key industry partners.

Enhanced Safety and Community Collaboration: Includes new safety tools like Llama Guard 3 and Prompt Guard, with active support from community projects for optimized development and deployment.

Jul 25 2024

5 M

Top 10 Multimodal Models

The current era is witnessing a significant revolution as artificial intelligence (AI) capabilities expand beyond straightforward predictions on tabular data. With greater computing power and state-of-the-art (SOTA) deep learning algorithms, AI is approaching a new era where large multimodal models dominate the AI landscape. Reports suggest the multimodal AI market will grow by 35% annually to USD 4.5 billion by 2028 as the demand for analyzing extensive unstructured data increases. These models can comprehend multiple data modalities simultaneously and generate more accurate predictions than their traditional counterparts. In this article, we will discuss what multimodal models are, how they work, the top models in 2024, current challenges, and future trends.

What are Multimodal Models?

Multimodal models are AI deep-learning models that simultaneously process different modalities, such as text, video, audio, and image, to generate outputs. Multimodal frameworks contain mechanisms to integrate multimodal data collected from multiple sources for more context-specific and comprehensive understanding. In contrast, unimodal models process a single data modality at a time. For instance, You Only Look Once (YOLO) is a popular object detection model that only understands visual data.

Figure: Unimodal vs. Multimodal Framework

While unimodal models are less complex than multimodal algorithms, multimodal systems can offer greater accuracy and an enhanced user experience. Due to these benefits, multimodal frameworks are helpful in multiple industrial domains. For instance, manufacturers use autonomous mobile robots that process data from multiple sensors to localize objects. Moreover, healthcare professionals use multimodal models to diagnose diseases using medical images and patient history reports.

How Do Multimodal Models Work?

Although multimodal models have varied architectures, most frameworks have a few standard components.
A typical architecture includes an encoder, a fusion mechanism, and a decoder.

Figure: Architecture

Encoders

Encoders transform raw multimodal data into machine-readable feature vectors or embeddings that models use as input to understand the data’s content.

Figure: Embeddings

Multimodal models often have a separate encoder for each data type, such as image, text, and audio.

Image Encoders: Convolutional neural networks (CNNs) are a popular choice for an image encoder. CNNs can convert image pixels into feature vectors to help the model understand critical image properties.

Text Encoders: Text encoders transform text descriptions into embeddings that models can use for further processing. They often use transformer models like those in Generative Pre-Trained Transformer (GPT) frameworks.

Audio Encoders: Audio encoders convert raw audio files into usable feature vectors that capture critical audio patterns, including rhythm, tone, and context. Wav2Vec2 is a popular choice for learning audio representations.

Fusion Mechanism Strategies

Once the encoders transform multiple modalities into embeddings, the next step is to combine them so the model can understand the broader context reflected in all data types. Developers can use various fusion strategies according to the use case. The list below mentions key fusion strategies.

Early Fusion: Combines all modalities before passing them to the model for processing.

Intermediate Fusion: Projects each modality onto a latent space and fuses the latent representations for further processing.

Late Fusion: Processes each modality separately with its own model and fuses the resulting outputs.

Hybrid Fusion: Combines early, intermediate, and late fusion strategies at different model processing phases.

Fusion Mechanism Methods

While the list above mentions the high-level fusion strategies, developers can use multiple methods within each strategy to fuse the relevant modalities.
Attention-based Methods

Attention-based methods use the transformer architecture to convert embeddings from multiple modalities into a query-key-value structure. The technique emerged from a seminal paper, "Attention Is All You Need," published in 2017. Researchers initially employed the method for improving language models, as attention networks allowed these models to have longer context windows. However, developers now use attention-based methods in other domains, including computer vision (CV) and generative AI. Attention networks allow models to understand relationships between embeddings for context-aware processing. Cross-modal attention frameworks fuse different modalities in a multimodal context according to the inter-relationships between each data type. For instance, an attention layer will allow the model to understand which parts of a text prompt relate to an image’s visual embeddings, leading to a more efficient fusion output.

Concatenation

Concatenation is a straightforward fusion technique that merges multiple embeddings into a single feature representation. For instance, the method will concatenate a textual embedding with a visual feature vector to generate a consolidated multimodal feature. The method helps in intermediate fusion strategies by combining the latent representations for each modality.

Dot-Product

The dot-product method multiplies feature vectors from different modalities element-wise and sums the products into a single similarity score. Keeping the element-wise products without summing them gives a fused feature vector instead (the Hadamard product). Both variants help capture the interactions and correlations between modalities, assisting models to understand the commonalities among different data types. However, the method only helps in cases where the feature vectors do not suffer from high dimensionality. Taking dot-products of high-dimensional vectors may require extensive computational power and result in features that only capture common patterns between modalities, disregarding critical nuances.
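To make the three fusion methods above concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions rather than any particular model's implementation: the function names (`concatenate`, `hadamard`, `dot`, `cross_attention`) and the toy two-dimensional embeddings are made up for this example, and real systems would use learned query, key, and value projections on much larger tensors.

```python
import math

def concatenate(text_vec, image_vec):
    """Concatenation fusion: join the two embeddings end to end."""
    return list(text_vec) + list(image_vec)

def hadamard(a, b):
    """Element-wise product; both vectors must have the same dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return [x * y for x, y in zip(a, b)]

def dot(a, b):
    """Dot product: the sum of the element-wise products (a single score)."""
    return sum(hadamard(a, b))

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention without learned projections.

    Each query (e.g. a text token embedding) is scored against every key
    (e.g. an image patch embedding); the output for that query is the
    attention-weighted average of the values.
    """
    scale = math.sqrt(len(keys[0]))
    fused = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        fused.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return fused

# Toy embeddings (hypothetical values, two dimensions for readability).
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

fused = cross_attention(text_tokens, image_patches, image_patches)
print(concatenate(text_tokens[0], image_patches[2]))
print(fused)
```

Note the shape difference: concatenation grows the feature dimension, the dot product collapses two vectors into one number, and cross-attention returns one fused vector per query.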
Decoders

The last component is a decoder network that processes the feature vectors from different modalities to produce the required output. Decoders can contain cross-modal attention networks to focus on different parts of input data and produce relevant outputs. For instance, translation models often use cross-attention techniques to understand the meanings of sentences in different languages simultaneously. Recurrent neural networks (RNNs), convolutional neural networks (CNNs), and generative adversarial networks (GANs) are popular choices for constructing decoders to perform tasks involving sequential, visual, or generative processes.

Learn how multimodal models work in our detailed guide on multimodal learning.

Multimodal Models - Use Cases

With recent advancements in multimodal models, AI systems can perform complex tasks involving the simultaneous integration and interpretation of multiple modalities. These capabilities allow users to implement AI in large-scale environments with extensive and diverse data sources requiring robust processing pipelines. The list below mentions a few of the tasks that multimodal models perform efficiently.

Visual Question-Answering (VQA): VQA involves a model answering user queries regarding visual content. For instance, a healthcare professional may ask a multimodal model about the content of an X-ray scan. By combining visual and textual prompts, multimodal models provide relevant and accurate responses to help users perform VQA.

Image-to-Text and Text-to-Image Search: Multimodal models help users build powerful search engines that accept natural language queries to search for particular images. They can also build systems that retrieve relevant documents in response to image-based queries. For instance, a user may give an image as input to prompt the system to search for relevant blogs and articles containing the image.
Generative AI: Generative AI models help users with text and image generation tasks that require multimodal capabilities. For instance, multimodal models can help users with image captioning, where they ask the model to generate relevant labels for a particular image. They can also use these models for natural language processing (NLP) use cases that involve generating textual descriptions based on video, image, or audio data.

Image Segmentation: Image segmentation involves dividing an image into regions to distinguish between different elements within an image.

Figure: Segmentation

Multimodal models can help users perform segmentation more quickly by segmenting areas automatically based on textual prompts. For instance, users can ask the model to segment and label items in the image’s background.

Top Multimodal Models

Multimodal models are an active research area where experts build state-of-the-art frameworks to address complex issues using AI. The following sections will briefly discuss the latest models to help you understand how multimodal AI is evolving to solve real-world problems in multiple domains.

CLIP

Contrastive Language-Image Pre-training (CLIP) is a multimodal vision-language model by OpenAI that performs image classification tasks. It pairs descriptions from textual datasets with corresponding images to generate relevant image labels.

CLIP Key Features

Contrastive Framework: CLIP uses a contrastive loss function to optimize its learning objective. The approach pulls the embeddings of matching text-image pairs together and pushes mismatched pairs apart, helping the model understand which text best describes an image’s content.

Text and Image Encoders: The architecture uses a transformer-based text encoder and a Vision Transformer (ViT) as an image encoder.

Zero-shot Capability: Once CLIP learns to associate text with images, it can quickly generalize to new data and assign relevant labels to new, unseen images without task-specific fine-tuning.
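The zero-shot capability described above can be sketched as a similarity lookup: embed the image, embed one text prompt per candidate label, and pick the label whose text embedding is closest. The snippet below is a minimal illustration in plain Python under stated assumptions. The three-dimensional embeddings are hand-made toy values rather than real CLIP outputs, `zero_shot_classify` is a hypothetical helper name, and the temperature of 0.07 is an illustrative default.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def cosine_similarity(a, b):
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))

def zero_shot_classify(image_embedding, label_embeddings, temperature=0.07):
    """Return a probability per label from temperature-scaled cosine similarities."""
    labels = list(label_embeddings)
    logits = [
        cosine_similarity(image_embedding, label_embeddings[label]) / temperature
        for label in labels
    ]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}

# Toy embeddings (hypothetical values standing in for encoder outputs).
label_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.2, 0.1]

probs = zero_shot_classify(image_embedding, label_embeddings)
best_label = max(probs, key=probs.get)
print(best_label, probs)
```

Because the labels enter only as text prompts, adding a new class means adding one more prompt to the dictionary, with no retraining.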
Use Case

Due to its versatility, CLIP can help users perform multiple tasks, such as image annotation for creating training data, image retrieval for AI-based search systems, and matching textual descriptions to image prompts.

Want to learn how to evaluate the CLIP model? Read our blog on evaluating CLIP with Encord Active.

DALL-E

DALL-E is a generative model by OpenAI that creates images based on text prompts using a framework similar to GPT-3. It can combine unrelated concepts to produce unique images involving objects, animals, and text.

DALL-E Key Features

CLIP-based architecture: DALL-E 2 uses the CLIP model as a prior for associating textual descriptions with visual semantics. The method helps DALL-E encode the text prompt into a relevant visual representation in the latent space.

A Diffusion Decoder: The decoder module in DALL-E 2 uses the diffusion mechanism to generate images conditioned on textual descriptions.

Larger Context Window: The original DALL-E is a 12-billion parameter model that can process text and image data streams containing up to 1280 tokens. The capability allows the model to generate images from scratch and manipulate existing images.

Use Case

DALL-E can help generate abstract images and transform existing images. The functionality can allow businesses to visualize new product ideas and help students understand complex visual concepts.

LLaVA

Large Language and Vision Assistant (LLaVA) is an open-source large multimodal model that combines Vicuna and CLIP to answer queries containing images and text. The model achieves SOTA performance in chat-related tasks with a 92.53% accuracy on the Science QA dataset.

LLaVA Key Features

Multimodal Instruction-following Data: LLaVA is trained on instruction-following textual data generated with ChatGPT/GPT-4. The data contains questions regarding visual content and responses in the form of conversations, descriptions, and complex reasoning.
Language Decoder: LLaVA connects Vicuna as the language decoder with CLIP for model fine-tuning on the instruction-following dataset.

Trainable Projection Matrix: The model implements a trainable projection matrix to map the visual representations onto the language embedding space.

Use Case

LLaVA is a robust visual assistant that can help users create advanced chatbots for multiple domains. For instance, LLaVA can help create a chatbot for an e-commerce site where users can provide an item’s image and ask the bot to search for similar items across the website.

CogVLM

Cognitive Visual Language Model (CogVLM) is an open-source visual language foundation model that uses deep fusion techniques to achieve superior vision and language understanding. The model achieves SOTA performance on seventeen cross-modal benchmarks, including image captioning and VQA datasets.

CogVLM Key Features

Attention-based Fusion: The model uses a visual expert module that includes attention layers to fuse text and image embeddings. The technique helps retain the performance of the LLM by keeping its layers frozen.

ViT Encoder: It uses EVA2-CLIP-E as the visual encoder and a multi-layer perceptron (MLP) adapter to map visual features onto the same space as text features.

Pre-trained Large Language Model (LLM): CogVLM 17B uses Vicuna 1.5-7B as the LLM for transforming textual features into word embeddings.

Use Case

Like LLaVA, CogVLM can help users perform VQA tasks and generate detailed textual descriptions based on visual cues. It can also supplement visual grounding tasks that involve identifying the most relevant objects within an image based on a natural language query.

Gen2

Gen2 is a powerful text-to-video and image-to-video model that can generate realistic videos based on textual and visual prompts. It uses diffusion-based models to create context-aware videos using image and text samples as guides.
Gen2 Key Features

Encoder: Gen2 uses an autoencoder to map input video frames onto a latent space and diffuse them into low-dimensional vectors.

Structure and Content: It uses MiDaS, an ML model that estimates the depth of input video frames. It also uses CLIP for image representations by encoding video frames to understand content.

Cross-Attention: The model uses a cross-modal attention mechanism to merge the diffused vector with the content and structure representations derived from MiDaS and CLIP. It then performs the reverse diffusion process conditioned on content and structure to generate videos.

Use Case

Gen2 can help content creators generate video clips using text and image prompts. They can generate stylized videos that map a particular image’s style on an existing video.

ImageBind

ImageBind is a multimodal model by Meta AI that can combine data from six modalities (images and video, text, audio, depth, thermal, and inertial measurement unit (IMU) data) into a single embedding space. It can then use any modality as input to retrieve or generate output in any of the mentioned modalities.

ImageBind Key Features

Output: ImageBind supports audio-to-image and image-to-audio retrieval, text-to-image-and-audio retrieval, retrieval from combined audio and image queries, and the use of audio prompts to generate corresponding images.

Image Binding: The model pairs image data with other modalities to train the network. For instance, it finds relevant textual descriptions related to specific images and pairs videos from the web with similar images.

Optimization Loss: It uses the InfoNCE loss, where NCE stands for noise-contrastive estimation. The loss function uses contrastive approaches to align non-image modalities with specific images.

Use Cases

ImageBind’s extensive multimodal capabilities make the model applicable in multiple domains. For instance, users can generate relevant promotional videos with the desired audio by providing a straightforward textual prompt.
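For readers who want the InfoNCE loss named in the ImageBind key features spelled out, a commonly used form is given below. The notation is an assumption for this sketch and is not taken from this article: $q_i$ is the normalized embedding of image $i$, $k_i$ is the normalized embedding of its paired sample from another modality $M$ (for example, audio), and $\tau$ is a temperature that controls how sharply the softmax separates pairs.

```latex
L_{I,M} = -\log
  \frac{\exp\left(q_i^{\top} k_i / \tau\right)}
       {\exp\left(q_i^{\top} k_i / \tau\right)
        + \sum_{j \neq i} \exp\left(q_i^{\top} k_j / \tau\right)}
```

The numerator rewards a high similarity for the matching pair, while the sum in the denominator treats every other sample $k_j$ in the batch as a negative. In practice the loss is usually applied symmetrically, as $L_{I,M} + L_{M,I}$, so that both modalities are pulled toward each other.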
Read more about it in the blog ImageBind MultiJoint Embedding Model from Meta Explained.

Flamingo

Flamingo is a vision-language model by DeepMind that can take videos, images, and text as input and generate textual responses regarding the image or video. The model allows for few-shot learning, where users provide a few samples to prompt the model to create relevant responses.

Flamingo Key Features

Encoders: The model consists of a frozen pre-trained Normalizer-Free ResNet as the vision encoder trained on the contrastive objective. The encoder transforms image and video pixels into 1-dimensional feature vectors.

Perceiver Resampler: The perceiver resampler generates a small number of visual tokens for every image and video. This method helps reduce computational complexity in cases of images and videos with an extensive feature set.

Cross-Attention Layers: Flamingo incorporates cross-attention layers between the layers of the frozen LLM to fuse visual and textual features.

Use Case

Flamingo can help in image captioning, classification, and VQA. The user must frame these tasks as text prediction problems conditioned on visual cues.

GPT-4o

GPT-4 Omni (GPT-4o) is a large multimodal model that can take audio, video, text, and image as input and generate text, audio, and image outputs in real time. The model offers a more interactive user experience as it can respond to prompts at a speed comparable to human conversation.

GPT-4o Key Features

Response Time: The model can respond within 320 milliseconds on average, achieving human-level response time.

Multilingual: GPT-4o can understand over fifty languages, including Hindi, Arabic, Urdu, French, and Chinese.

Performance: The model achieves GPT-4 Turbo-level performance on multiple benchmarks, including text, reasoning, and coding expertise.

Use Case

GPT-4o can generate text, audio, and images with nuances such as tone, rhythm, and emotion provided in the user prompt.
The capability can help users create more engaging and relevant content for marketing purposes.

Gemini

Google Gemini is a set of multimodal models that can process audio, video, text, and image data. Google offers Gemini in three variants: Ultra for complex tasks, Pro for large-scale deployment, and Nano for on-device implementation.

Gemini Key Features

Larger Context Window: The latest Gemini versions, 1.5 Pro and 1.5 Flash, have long context windows, making them capable of processing long-form videos, text, and code. For instance, Gemini 1.5 Pro supports up to two million tokens, and 1.5 Flash supports up to one million tokens.

Transformer-based Architecture: Google trained the model on interleaved text, image, video, and audio sequences using a transformer. Using the multimodal input, the model generates images and text as output.

Post-training: The model uses supervised fine-tuning and reinforcement learning with human feedback (RLHF) to improve response quality and safety.

Use Case

The three Gemini model versions allow users to implement Gemini in multiple domains. For instance, Gemini Ultra can help developers generate complex code, Pro can help teachers check students’ hand-written answers, and Nano can help businesses build on-device virtual assistants.

Claude 3

Claude 3 is a vision-language model by Anthropic that includes three variants in increasing order of performance: Haiku, Sonnet, and Opus. Opus exhibits SOTA performance across multiple benchmarks, including undergraduate and graduate-level reasoning.

Figure: Claude Intelligence vs. Cost by Variant

Key Features

Long Recall: Claude 3 models launched with a 200K-token context window and can accept inputs of more than 1 million tokens for select customers, with powerful recall.

Visual Capabilities: The model can understand photos, charts, graphs, and diagrams, and Haiku can read a dense research paper with charts and graphs in less than three seconds.

Better Safety: Claude 3 recognizes and responds to harmful prompts with more subtlety, respecting safety protocols while maintaining higher accuracy.
Use Case

Claude 3 can be a significant educational tool as it comprehends dense data and technical language, including complex diagrams and figures.

Challenges and Future Trends

While multimodal models offer significant benefits through superior AI capabilities, building and deploying these models is challenging. The list below mentions a few of these challenges, along with possible solutions to help developers overcome them.

Challenges

Data Availability: Although data for each modality exists, aligning these datasets is complex and results in noise during multimodal learning. Helpful mitigation strategies include using pre-trained foundation models, data augmentation techniques, and few-shot learning techniques to train multimodal models.

Data Annotation: Annotating multimodal data requires extensive expertise and resources to ensure consistent and accurate labeling across different data types. Developers can address this issue using third-party annotation tools to streamline the annotation process.

Model Complexity: The complex architectural design makes training a multimodal model computationally expensive and prone to overfitting. Strategies such as knowledge distillation, quantization, and regularization can help mitigate these problems and boost generalization performance.

Future Trends

Despite the challenges, research in multimodal systems is ongoing, leading to productive developments concerning data collection and annotation tools, training methods, and explainable AI.

Data Collection and Annotation Tools: Users can invest in end-to-end AI platforms that offer multiple tools to collect, curate, and annotate complex datasets. For instance, Encord is an end-to-end AI solution that offers Encord Index to collect, curate, and organize image and video datasets, and Encord Annotate to label data items using micro-models and automated labeling algorithms.
Training Methods: Advancements in training strategies allow users to develop complex models using small data samples. For instance, few-shot, one-shot, and zero-shot learning techniques can help developers train models on small datasets while ensuring high generalization ability to unseen data.

Explainable AI (XAI): XAI helps developers understand a model’s decision-making process in more detail. For instance, attention-based networks allow users to visualize which parts of data the model focuses on during inference. Development in XAI methods will enable experts to delve deeper into the causes of potential biases and inconsistencies in model outputs.

Multimodal Models: Key Takeaways

Multimodal models are revolutionizing human-AI interaction by allowing users and businesses to implement AI in complex environments requiring an advanced understanding of real-world data. Below are a few critical points regarding multimodal models:

Multimodal Model Architecture: Multimodal models include an encoder to map raw data from different modalities into feature vectors, a fusion strategy to consolidate data modalities, and a decoder to process the merged embeddings to generate relevant output.

Fusion Mechanism: Attention-based methods, concatenation, and dot-product techniques are popular choices for fusing multimodal data.

Multimodal Use Cases: Multimodal models help in visual question-answering (VQA), image-to-text and text-to-image search, generative AI, and image segmentation tasks.

Top Multimodal Models: CLIP, DALL-E, and LLaVA are popular multimodal models that can process video, image, and textual data.

Multimodal Challenges: Building multimodal models involves challenges such as data availability, annotation, and model complexity. However, experts can overcome these problems through modern learning techniques, automated labeling tools, and regularization methods.

Jul 16 2024

5 M

Introducing TTI-Eval: An Open-Source Library for Evaluating Text-to-Image Embedding Models

In the past few years, computer vision and multimodal AI have come a long way, especially when it comes to text-to-image embedding models. Models such as CLIP from OpenAI can jointly embed images and text for powerful applications like natural language and image similarity search. However, evaluating the performance of these models (and even custom embedding models) on custom datasets can be challenging. That's where TTI-Eval comes in. We open-sourced TTI-Eval to help researchers and developers test their text-to-image embedding models on Hugging Face datasets or their own. With a straightforward and interactive evaluation process, TTI-Eval helps estimate how well different embedding models capture the semantic information within the dataset. This article will help you understand TTI-Eval and get started evaluating your text-to-image (TTI) embedding models against custom datasets.

Why TTI-Eval?

Imagine you have a data lake full of company data and need to do sampling to get relevant data for a given task. One common sampling approach is to use image similarity search and natural language search to identify the data from the data lake. You are likely looking for data with samples that look similar to the data you have in the production environment and those that hold the relevant semantic content. To do this type of sampling, you would typically embed all the data within the data lake with, e.g., CLIP and perform image similarity and natural language searches on such embeddings. A common question before investing all the required computing to embed all the data is, “Which model should I use?” It could be CLIP, an in-house vision model, or a domain-specific model like BioMedCLIP. TTI-Eval helps answer that question, particularly for the data you are dealing with.

What is TTI-Eval?

TTI-Eval's primary goal is to help you evaluate text-to-image embedding models (e.g., CLIP) against your datasets or those available on Hugging Face.
By doing so, you can estimate how well a model will perform on a specific classification dataset. One of our key motivations behind TTI-Eval is to improve the accuracy of natural language and image similarity search features, which are critical for Encord Index customers and users. We used TTI-Eval internally at Encord to select the most suitable model for our similarity search feature. Since we have seen it work well, we decided to open-source it. We have also seen TTI-Eval prove invaluable for customers training vision-foundation models (VFMs) or computing embeddings on their datasets. It allows them to assess the effectiveness of their custom embeddings for similarity searches. Instead of relying on off-the-shelf embedding models that may not be optimized for your specific use case, you can use TTI-Eval to evaluate the embeddings and determine their effectiveness for similarity searches.

How TTI-Eval Works

TTI-Eval follows a straightforward evaluation workflow:

Link data from Hugging Face text-to-image datasets or Encord's classification ontologies to TTI-Eval.

Connect your CLIP-style models from Hugging Face or custom fine-tuned models to TTI-Eval.

TTI-Eval computes embeddings for each image in the provided dataset using the specified model.

It calculates the benchmark based on the model’s classifications to assess the similarity among image embeddings and the text descriptions of each class.

It also generates the accuracy metrics for text-to-image and image-to-image search scenarios.

Key Features of TTI-Eval

A few main features make TTI-Eval a useful tool for developers and researchers:

Generating custom embeddings from model-dataset pairs.

Evaluating the performance of embedding models on custom datasets.

Generating embedding animations to visualize performance.

Embeddings Generation

You can choose which models and datasets to use together to create embeddings, which gives you more control over the evaluation process.
Here’s how you can generate embeddings with known model and dataset pairs (clip/Alzheimer-MRI and bioclip/Alzheimer-MRI) from your command line with `tti-eval build`:

    tti-eval build --model-dataset clip/Alzheimer-MRI --model-dataset bioclip/Alzheimer-MRI

Recommended: Top 8 Alternatives to the Open AI CLIP Model.

Model Evaluation

TTI-Eval lets you choose which models and datasets to evaluate interactively to fully understand how well the embedding models work on the dataset. Here’s how you can evaluate embeddings with known model and dataset pairs (clip/Alzheimer-MRI and bioclip/Alzheimer-MRI) from your command line with `tti-eval evaluate`:

    tti-eval evaluate --model-dataset clip/Alzheimer-MRI --model-dataset bioclip/Alzheimer-MRI

See Also: Fine-Tuning VLM: Enhancing Geo-Spatial Embeddings.

Embeddings Animation

The library provides a visualization feature that lets users view the 2D reduction of embeddings from two models on the same dataset, which is useful for a comparative analysis. To create 2D animations of the embeddings, use the CLI command `tti-eval animate`. You can select two models and a dataset for visualization interactively. Alternatively, you can specify the models and dataset as arguments. For example:

    tti-eval animate clip bioclip Alzheimer-MRI

The animations will be saved at the location specified by the environment variable `TTI_EVAL_OUTPUT_PATH`. By default, this path corresponds to the `output` folder in the repository directory. Use the `--interactive` flag to explore the animation interactively in a temporary session. See the difference between CLIP and a fine-tuned CLIP variant on a dataset in an embedding space:

Figure: Visualizing CLIP vs. Fine-Tuned CLIP in embedding space.

Benefits of TTI-Eval in Data Curation

Through internal tests and early user adoption, we have seen how TTI-Eval helps teams curate datasets. By selecting the best embeddings, they know they work with the most relevant and high-quality data for their specific tasks.
Within Encord Active, TTI-Eval contributes to accurate model validation and label quality assurance by providing reliable estimates of class accuracy based on the selected embeddings.

See Also: How to Use Semantic Search to Curate Images of Products with Encord Active.

Example Results and Custom Models

One example of where `tti-eval` is useful is when testing different open-source models against different open-source datasets within a specific domain. Below, we focused on the medical domain. We evaluated nine models (three of which are domain-specific) against four different medical datasets (skin-cancer, chest-xray-classification, Alzheimer-MRI, LungCancer4Types). Here’s the result:

Figure: The result of using TTI-Eval to evaluate different CLIP embedding models against four medical datasets.

The plot indicates that for multiple datasets [1, 3, 4], you can use any of the CLIP-based medical models. However, there's no reason for the second dataset (`chest-xray-classification`) to use a larger and more expensive medical model since the results from smaller and cheaper models are comparable. This helps you determine which model is ideal for your dataset. You can explore these example results and even use your custom models and datasets from Hugging Face or Encord to conduct personalized evaluations.
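To give a feel for what an image-to-image retrieval score like the ones above measures, here is a minimal sketch in plain Python. It is not TTI-Eval's implementation: `i2i_retrieval_accuracy` is a hypothetical helper, the two-dimensional embeddings and the class labels are toy values, and the metric shown (the fraction of images whose nearest neighbor shares their class) is just one simple way to score retrieval.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def i2i_retrieval_accuracy(embeddings, labels):
    """Fraction of images whose most similar other image has the same label."""
    hits = 0
    for i, query in enumerate(embeddings):
        # Search every other image for the closest embedding.
        nearest = max(
            (j for j in range(len(embeddings)) if j != i),
            key=lambda j: cosine_similarity(query, embeddings[j]),
        )
        if labels[nearest] == labels[i]:
            hits += 1
    return hits / len(embeddings)

# Toy data: hypothetical 2D embeddings with made-up class labels.
embeddings = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.9], [0.8, 0.6]]
labels = ["healthy", "healthy", "impaired", "impaired", "impaired"]

accuracy = i2i_retrieval_accuracy(embeddings, labels)
print(f"image-to-image retrieval accuracy: {accuracy:.2f}")
```

An embedding model that clusters same-class images tightly scores close to 1.0 on this kind of metric, which is why comparing scores across models on your own data is a useful proxy for similarity search quality.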
Getting Started with TTI-Eval

To get started with TTI-Eval in your Python notebook, follow these steps:

Step 1: Install the TTI-Eval library

Clone the repository:

    git clone https://github.com/encord-team/text-to-image-eval.git

Navigate to the project directory:

    cd text-to-image-eval

Install the required dependencies:

    poetry shell
    poetry install

Add environment variables:

    export TTI_EVAL_CACHE_PATH=$PWD/.cache
    export TTI_EVAL_OUTPUT_PATH=$PWD/output
    export ENCORD_SSH_KEY_PATH=<path_to_the_encord_ssh_key_file>

Step 2: Define and instantiate the embeddings by specifying the model and dataset

Say we are using CLIP as the embedding model and the `Falah/Alzheimer_MRI` dataset:

    from tti_eval.common import EmbeddingDefinition, Split

    # Pair an embedding model with a dataset
    def1 = EmbeddingDefinition(model="clip", dataset="Alzheimer-MRI")

Step 3: Compute the embeddings of the dataset using the specified model

    from tti_eval.compute import compute_embeddings_from_definition

    embeddings = compute_embeddings_from_definition(def1, Split.TRAIN)

Step 4: Evaluate the model's performance against the dataset

    from tti_eval.evaluation import I2IRetrievalEvaluator, LinearProbeClassifier, WeightedKNNClassifier, ZeroShotClassifier
    from tti_eval.evaluation.evaluator import run_evaluation

    evaluators = [ZeroShotClassifier, LinearProbeClassifier, WeightedKNNClassifier, I2IRetrievalEvaluator]
    # Add more EmbeddingDefinition objects to the list to compare models
    performances = run_evaluation(evaluators, [def1])

Here’s what a sample result looks like when you render it in a notebook:

Here’s the quickstart notebook to get started with TTI-Eval using Python. We also prepared a CLI quickstart notebook guide that covers the basic usage of the CLI commands and their options for a quick way to test `tti-eval` without installing anything locally.

Conclusion

Our goal is for TTI-Eval to contribute significantly to the computer vision and multimodal AI community. We are actively working on developing tutorials to help you get the most out of TTI-Eval for evaluation purposes.
In the meantime, check out the TTI-Eval GitHub repository for more information, documentation, and notebooks to guide you.

Jun 26 2024

5 M

AI as a Service: The Ultimate AIaaS Guide for Business in 2024